Impact of Physician Facecards

The patient‐physician relationship is fundamental to safe and effective care. Hospital settings present unique challenges to this partnership, including the lack of a prior relationship for hospital‐based physicians, rapid pace of clinical care, and dynamic nature of inpatient medical teams. Prior studies document that a majority of hospitalized patients are unable to correctly identify their physicians or nurses, and patients in teaching hospitals have difficulty understanding their physicians' level of training.[1, 2, 3, 4] Acknowledging these deficits, professional societies and the Accreditation Council for Graduate Medical Education (ACGME) have issued policies stating that patients and caregivers need to know who is responsible at every point during patient care.[5, 6] These policies do not, however, make recommendations on methods to achieve better understanding.

Simple interventions improve patients' ability to correctly identify the names and roles of their hospital physicians. Maniaci and colleagues found that patients were better able to identify attending physicians when their names were written on the dry‐erase board in the room.[7] Arora and colleagues asked hospital physicians to give facecards, which included their picture and a description of their role, to patients.[8] Patients were more likely to correctly identify ≥1 physician but, surprisingly, less likely to understand physicians' roles. In a similar study, Francis and colleagues placed photographs with names of the attending and resident physicians on the wall in patient rooms.[9] Patients who had photographs of their physicians on the wall were more likely to correctly identify physicians on their team compared with patients who had no photographs. Additionally, patients who were able to identify more physicians rated satisfaction with physicians higher in 2 of 6 survey questions used. However, the study was limited by the use of a nonvalidated instrument to assess patient satisfaction and the use of an intermediate outcome (ie, ability to identify physicians) as the independent variable rather than the intervention itself (ie, physician photographs).

Beyond satisfaction, lack of familiarity may negatively impact patients' trust and agreement with hospital physicians. Trust and agreement are important predictors of adherence to recommended treatment in outpatient settings[10, 11, 12, 13, 14, 15, 16, 17, 18] but have not been adequately evaluated in hospital settings. Therefore, we sought to pilot the use of physician facecards and assess their potential impact on patients' knowledge of physicians' names and roles as well as patient satisfaction, trust, and agreement with physicians.

METHODS

Setting and Study Design

We performed a cluster randomized controlled trial at Northwestern Memorial Hospital (NMH), an 897‐bed tertiary‐care teaching hospital in Chicago, Illinois. One of 2 similar hospitalist service units and 1 of 2 similar teaching‐service units were randomly selected to implement the use of physician facecards. General medical patients were admitted to the study units by NMH bed‐assignment personnel subject to unit bed availability. No other criteria (eg, diagnosis, severity of illness, or source of patient admission) were used in patient assignment. Each unit consisted of 30 beds, with the exception of 1 hospitalist unit, which had 23. As a result of a prior intervention, physicians were localized to care for patients on specific units.[19] Hospitalist units were each staffed by hospitalists who worked in 7‐day rotations without the assistance of residents or midlevel providers. Teaching units were staffed by physician teams consisting of 1 attending, 1 senior resident, 1 intern, and 1 or 2 third‐year medical students. No fourth‐year students (ie, acting interns) rotated on these services during the study period. Housestaff worked in 4‐week rotations, and attending physicians on the teaching service worked in 2‐week rotations.
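The unit-level allocation described above amounts to stratified cluster randomization: within each service type, 1 of the 2 similar units is drawn to receive facecards and the other serves as control. The article does not say how the random draw was performed, so the following Python sketch is illustrative only; the unit labels, the `assign_units` function, and the seed are hypothetical.

```python
import random

# Hypothetical labels: the article identifies the units only by service type.
STRATA = {
    "hospitalist": ["hospitalist_unit_A", "hospitalist_unit_B"],
    "teaching": ["teaching_unit_A", "teaching_unit_B"],
}


def assign_units(strata, seed=None):
    """Pick 1 intervention unit at random within each stratum.

    Every other unit in the stratum becomes a control unit. With 2 units
    per stratum, each arm ends up with exactly 1 unit of each service type.
    """
    rng = random.Random(seed)
    assignment = {}
    for units in strata.values():
        chosen = rng.choice(units)
        for unit in units:
            assignment[unit] = "intervention" if unit == chosen else "control"
    return assignment


if __name__ == "__main__":
    for unit, arm in sorted(assign_units(STRATA, seed=2012).items()):
        print(f"{unit}: {arm}")
```

Randomizing within service type guarantees that each arm contains 1 hospitalist and 1 teaching unit, which a single unrestricted draw of 2 of the 4 units would not.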

Patient rooms included a whiteboard facing the patient with a template prompting insertion of physician name(s). Nurses had the primary responsibility for completing information on the whiteboards.

Physician Facecard

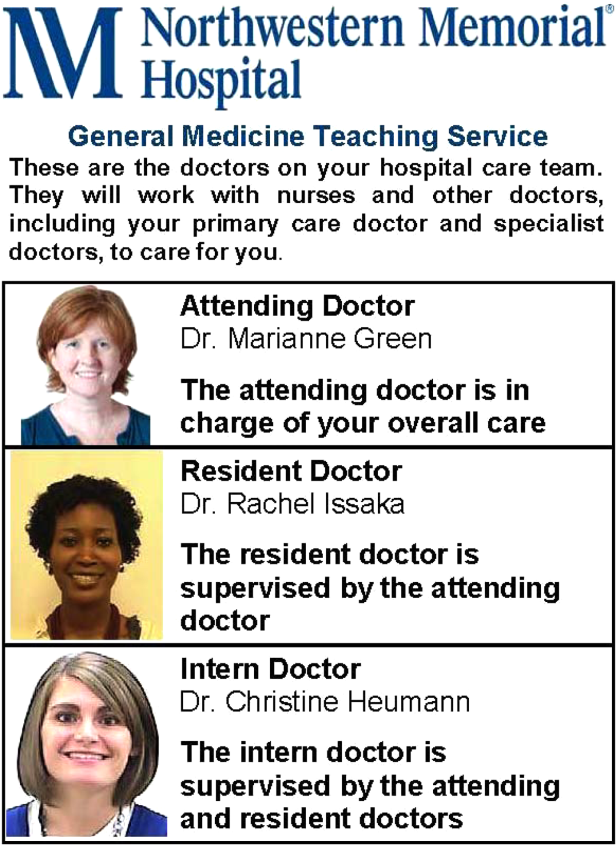

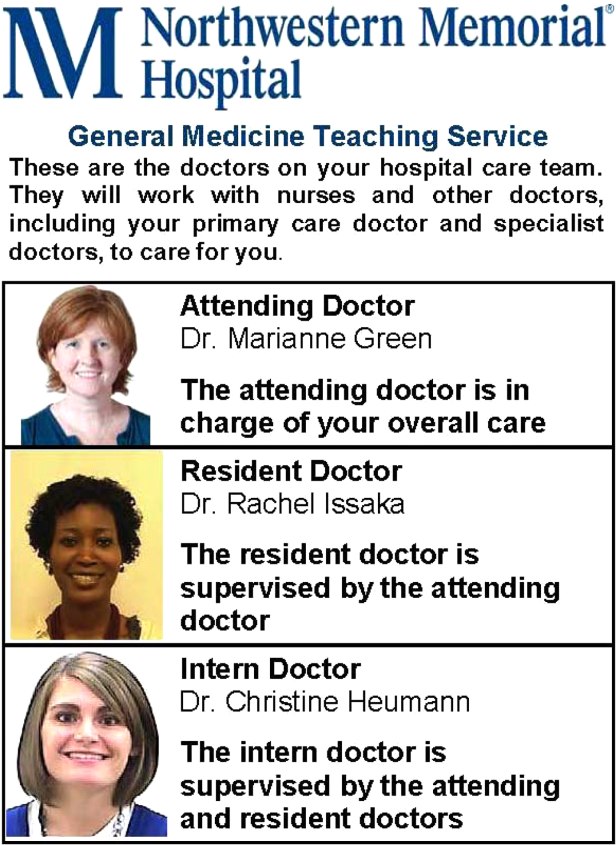

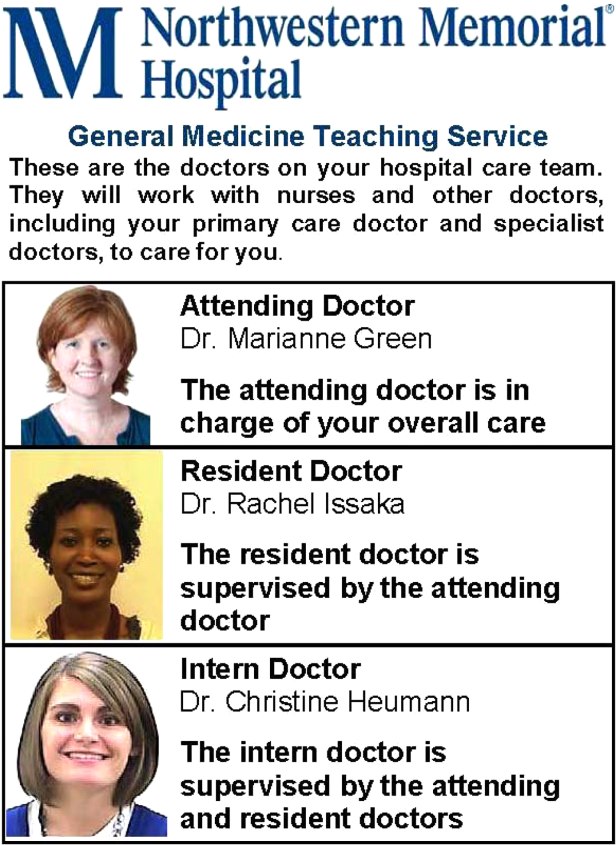

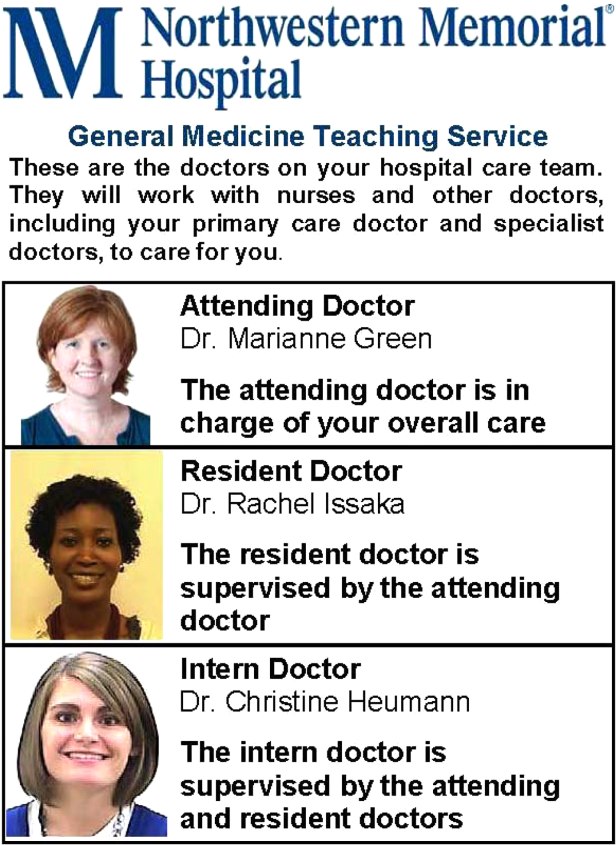

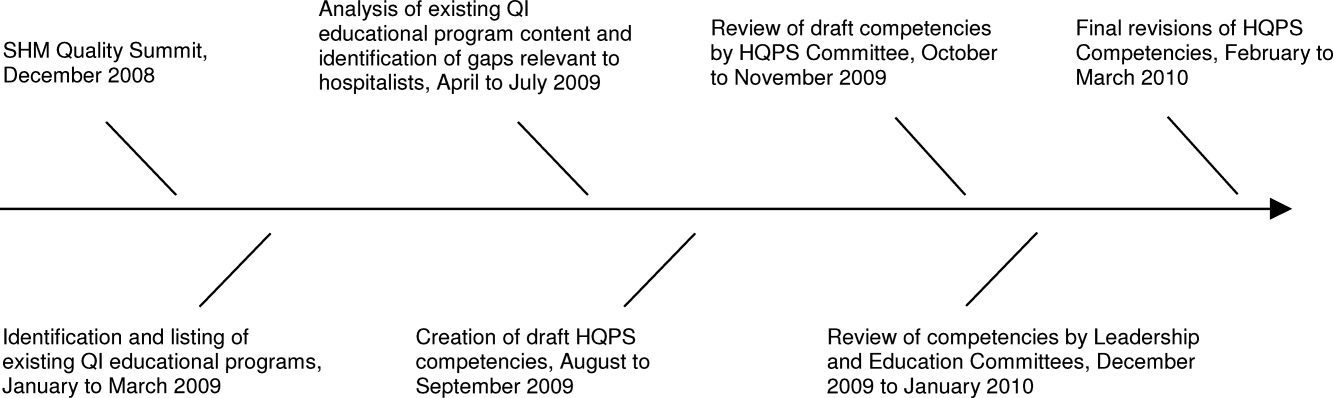

We created draft physician facecards featuring pictures of physicians and descriptions of their roles. We used Lexile analysis, a widely used measure of reading difficulty, to improve readability in an iterative fashion.[20, 21] We then sought feedback at hospitalist and resident meetings. Specifically, we asked for suggested revisions to content and recommendations on reliable methods to deliver facecards to patients. Teaching physicians felt strongly that each team member should be listed and shown on 1 card, which would fit easily into a lab‐coat pocket. We similarly engaged the NMH Patient and Family Advisory Council to seek recommended revisions to content and delivery of the facecards. The Council consists of 18 patient and caregiver members who meet regularly to provide input on hospital programs and proposals. Council members felt strongly that physicians should deliver the cards themselves during their initial introduction, rather than having patients receive cards by other means (eg, as part of unit orientation materials delivered by nonphysician staff members). We incorporated feedback from these stakeholder groups into a final version of the physician facecard and method for delivery (Figure 1).

We implemented the use of facecards from May to June 2012. Physicians on intervention units were informed of the study via email, and one of the co‐investigators (T.C.) distributed a supply of facecards to these physicians at the start of each rotation. This distribution was performed in person, and physicians were instructed to provide a facecard to each new patient during their first encounter. We also placed facecards in easily visible cardholders at the nurses' station on intervention units. Reminder emails were sent once each week to reinforce physician delivery of facecards.

Data Collection and Measures

Each weekday during the study period, we randomly selected patients for structured interviews in the afternoon of their second or third hospital day. We did not conduct interviews on the first day of physicians' rotations and excluded patients whose preferred language was not English and those disoriented to person, place, or time.
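A minimal sketch of the daily selection step follows, assuming a simple electronic census. The `Patient` record, the function names, and the per-day sample size `n` are hypothetical; the article does not state how many patients were selected each day. Because the Results count disoriented and non‐English‐speaking patients among those approached, the sketch applies the timing criteria before sampling and the language and orientation exclusions to patients once approached.

```python
import random
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Patient:
    # Hypothetical record layout; the article does not describe its data systems.
    patient_id: str
    hospital_day: int        # 1 = day of admission
    preferred_language: str
    oriented_x3: bool        # oriented to person, place, and time


def select_candidates(census: List[Patient], n: int,
                      rotation_start_today: bool,
                      seed: Optional[int] = None) -> List[Patient]:
    """Randomly pick up to n patients on hospital day 2 or 3 to approach.

    Simplification: the first day of physicians' rotations is modeled as a
    single flag for the day; no interviews are scheduled when it is set.
    """
    if rotation_start_today:
        return []
    rng = random.Random(seed)
    pool = [p for p in census if p.hospital_day in (2, 3)]
    return rng.sample(pool, min(n, len(pool)))


def exclusion_reason(p: Patient) -> Optional[str]:
    """Return why an approached patient is excluded, or None if eligible.

    Checks follow the order in which the Results report exclusions
    (an assumption; a patient meeting both criteria is counted once).
    """
    if not p.oriented_x3:
        return "disoriented"
    if p.preferred_language != "English":
        return "preferred language not English"
    return None
```

Patients who decline to participate would be recorded separately; the sketch does not model consent.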

Patients were asked to name the physician(s) primarily responsible for their hospital care and to state the role of each physician they identified. We documented facecard receipt by noting whether a facecard was seen during the interview and by asking patients whether they had received one. We also documented whether ≥1 correct physician name was written on the whiteboard in the patient's room. We used questions from the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey to assess satisfaction with physician communication and overall hospital care. HCAHPS is a validated patient‐satisfaction survey developed by the Agency for Healthcare Research and Quality (AHRQ) to assess hospitalized patients' experiences with care. Physician‐communication questions used ordinal response options of never, sometimes, usually, and always. Overall hospital rating was assessed using a 0–10 scale, with 0 = worst hospital possible and 10 = best hospital possible. Trust in physicians was assessed using the Wake Forest University Trust Scale.[22] Prior research using this instrument has shown an association between trust and self‐management behaviors.[23] This 10‐item scale uses a 5‐point Likert scale and generates scores ranging from 10 to 50. Agreement with physicians was assessed using 3 questions used in a prior study by Staiger and colleagues showing an association between levels of agreement and health outcomes among outpatients treated for back pain.[17] Specifically, we asked patients to rate their agreement with hospital physicians' (1) explanation for the cause of primary symptoms, (2) plan for diagnostic tests, and (3) suggested plan for treatment using a 5‐point Likert scale. The agreement scale generated scores ranging from 3 to 15.
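The scoring rules above, together with the top‐box definitions given under Statistical Analysis, can be written as small functions. This is a sketch under stated assumptions: Likert responses are coded 1 to 5 with higher values meaning more trust or agreement, and any reverse‐worded trust items are assumed to have been recoded beforehand, since the article does not describe item‐level coding. All function names are illustrative.

```python
COMMUNICATION_OPTIONS = ("never", "sometimes", "usually", "always")


def likert_total(responses, n_items):
    """Sum 5-point Likert items (coded 1-5) into a scale score."""
    if len(responses) != n_items:
        raise ValueError(f"expected {n_items} responses, got {len(responses)}")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("responses must be integers 1-5")
    return sum(responses)


def trust_score(responses):
    # 10 items -> possible range 10-50. Assumes reverse-worded items, if any,
    # were already recoded so that 5 always means more trust.
    return likert_total(responses, 10)


def agreement_score(responses):
    # 3 items (cause of symptoms, diagnostic plan, treatment plan) -> range 3-15.
    return likert_total(responses, 3)


def communication_top_box(answers):
    """True only if all 3 physician-communication items were answered 'always'."""
    if len(answers) != 3 or any(a not in COMMUNICATION_OPTIONS for a in answers):
        raise ValueError("expected 3 answers from the HCAHPS response options")
    return all(a == "always" for a in answers)


def hospital_rating_top_box(rating):
    """True for an overall hospital rating of 9 or 10 on the 0-10 scale."""
    if not 0 <= rating <= 10:
        raise ValueError("rating must be on the 0-10 scale")
    return rating >= 9
```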

Approval for the study was obtained from the institutional review board of Northwestern University.

Statistical Analysis

Patient demographic data were obtained from the electronic health record and supplemented data from interviews. We used χ² and t tests to compare patient characteristics. We used χ² tests to compare the percentage of patients able to correctly identify ≥1 of their physicians and ≥1 of their physicians' roles. We used χ² tests to compare the percentage of patients giving top‐box ratings (ie, always) to all 3 physician‐communication satisfaction questions and giving an overall hospital rating of 9 or 10. We used top‐box comparisons, rather than comparison of mean or median scores, because patient‐satisfaction data are typically highly skewed toward favorable responses. This approach is consistent with prior HCAHPS research.[24, 25] We used Mann‐Whitney U tests to compare ratings of trust and agreement. Because delivery of facecards was imperfect, we performed analyses both by intention to treat (ie, intervention vs control units) and based on treatment received (ie, received a facecard vs did not receive a facecard). All analyses were conducted using Stata version 11.2 (StataCorp, College Station, TX).
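For the 2×2 comparisons, the Pearson χ² statistic can be computed directly from counts. The sketch below assumes the uncorrected form (no Yates continuity correction); the article does not say which variant was used, but with counts reported in Table 2 and in the Results it reproduces P=0.17 (naming ≥1 physician, intention to treat) and P=0.18 (facecard receipt on the hospitalist vs teaching intervention units). The function name is hypothetical, and only the Python standard library is used.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test for a 2x2 table, without continuity correction.

    Layout (rows = comparison groups, columns = outcome present / absent):
        [[a, b],
         [c, d]]
    Returns (chi-square statistic, P value with 1 degree of freedom).
    """
    observed = [[a, b], [c, d]]
    row_totals = [a + b, c + d]
    col_totals = [a + c, b + d]
    n = a + b + c + d
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("every row and column total must be positive")
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # Upper-tail probability of a chi-square variable with 1 df:
    # P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


if __name__ == "__main__":
    # Table 2, intention to treat: named >=1 physician (yes, no) by arm.
    stat, p = chi_square_2x2(42, 72 - 42, 46, 66 - 46)
    print(f"chi2 = {stat:.2f}, P = {p:.2f}")
    # Results: facecard receipt, hospitalist (23 of 30) vs teaching (22 of 36).
    stat, p = chi_square_2x2(23, 7, 22, 14)
    print(f"chi2 = {stat:.2f}, P = {p:.2f}")
```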

RESULTS

Study Subjects and Facecard Receipt

Overall, 217 patients were approached for interview. Thirty‐six were excluded because of disorientation, 12 were excluded because their preferred language was not English, and 31 declined to participate in the study. Patient characteristics for the 138 study patients are shown in Table 1. There were no significant differences in patient age, sex, or race. There was no significant difference in the percentage of patients with ≥1 correct physician name listed on the whiteboard in the room. Delivery of facecards was incomplete, with only 68% of intervention‐unit patients confirmed as having received them. A higher percentage of patients on the hospitalist intervention unit received facecards (23 of 30; 76.7%) than on the teaching intervention unit (22 of 36; 61.1%), but the difference was not statistically significant (P=0.18). There were no significant differences in age, sex, or race between patients who received a facecard compared with those who did not.

Table 1. Patient Characteristics

| Characteristic | Control Group, N=72 | Intervention Group, N=66 | P Value |
|---|---|---|---|
| Mean age, years (SD) | 56.8 (18.0) | 55.2 (18.2) | 0.62 |
| Women, n (%) | 35 (48.6) | 28 (42.4) | 0.47 |
| Nonwhite race, n (%) | 35 (50.7) | 36 (57.1) | 0.46 |
| Teaching unit, n (%) | 34 (47.2) | 36 (54.6) | 0.39 |
| Correct physician name on whiteboard, n (%)ᵃ | 46 (76.7) | 37 (72.6) | 0.62 |
| Received a facecard, n (%) | 1 (1) | 45 (68.2) | <0.01 |
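Table 1 also accounts for the group sizes in the treatment‐received analyses (92 patients without and 46 with a facecard): 1 control patient and 45 intervention patients received one. The sketch below regroups placeholder patient records, built only from those counts, under each analysis approach; the record fields and function names are hypothetical.

```python
from collections import Counter


def group_label(patient, analysis):
    """Return the comparison group a patient belongs to under an analysis."""
    if analysis == "itt":
        # Intention to treat: keep patients in the arm of their randomized unit.
        return patient["arm"]
    if analysis == "as_treated":
        # Treatment received: group by actual facecard receipt, ignoring arm.
        return "received" if patient["received_facecard"] else "did_not_receive"
    raise ValueError("analysis must be 'itt' or 'as_treated'")


def group_sizes(patients, analysis):
    """Count patients in each comparison group."""
    return Counter(group_label(p, analysis) for p in patients)


def make_patients(arm, received, not_received):
    """Build placeholder records with the given facecard-receipt counts."""
    return ([{"arm": arm, "received_facecard": True} for _ in range(received)]
            + [{"arm": arm, "received_facecard": False} for _ in range(not_received)])


# Receipt counts by arm from Table 1: control 1 of 72, intervention 45 of 66.
cohort = make_patients("control", 1, 71) + make_patients("intervention", 45, 21)

if __name__ == "__main__":
    print(group_sizes(cohort, "itt"))
    print(group_sizes(cohort, "as_treated"))
```

The same 138 patients appear in both analyses; only the grouping rule changes, which is why the treatment‐received comparison is no longer protected by randomization.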

Patients' Knowledge of Physicians

As shown in Table 2, more patients in the intervention group than in the control group were able to correctly identify ≥1 of their treating physicians, but the difference was not statistically significant (69.7% vs 58.3%; P=0.17). A significantly larger percentage of patients in the intervention group were able to identify the role of their hospital physicians (51.5% vs 16.7%; P<0.01). When comparing patients who received a facecard with those who did not, patients given a facecard were more likely to correctly identify ≥1 hospital physician (89.1% vs 51.1%; P<0.01). Similarly, patients who had received a facecard were more likely to correctly identify the role of their hospital physician than patients who had not (67.4% vs 16.3%; P<0.01).

Table 2. Patients' Knowledge of Their Hospital Physicians

| Outcome | Control Group, N=72, n (%) | Intervention Group, N=66, n (%) | P Value |
|---|---|---|---|
| Patient correctly named ≥1 hospital physician | 42 (58.3) | 46 (69.7) | 0.17 |
| Patient correctly named role of hospital physician | 12 (16.7) | 34 (51.5) | <0.01 |
| | **Did Not Receive Facecard, N=92, n (%)** | **Received Facecard, N=46, n (%)** | **P Value** |
| Patient correctly named ≥1 hospital physician | 47 (51.1) | 41 (89.1) | <0.01 |
| Patient correctly named role of hospital physician | 15 (16.3) | 31 (67.4) | <0.01 |

Levels of Satisfaction, Trust, and Agreement

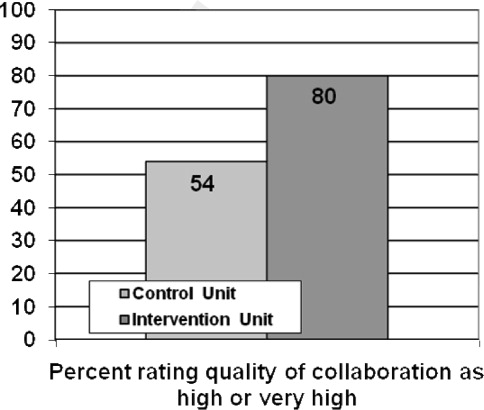

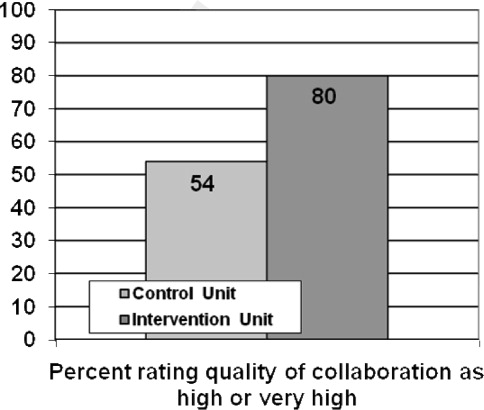

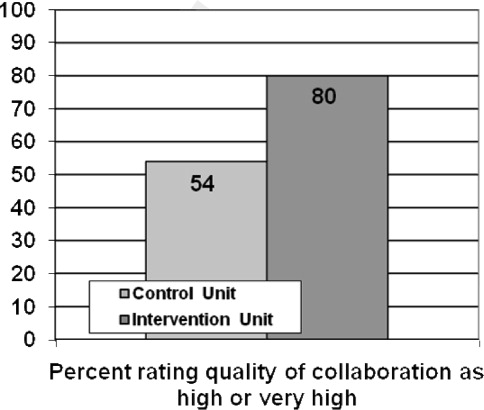

Overall, patients had high levels of satisfaction, trust, and agreement with hospital physicians. The overall satisfaction with physician communication was 75.6% (mean of top‐box scores across all 3 items), and 81 of 138 (58.7%) patients gave top‐box ratings to all 3 physician‐communication satisfaction items. Ninety‐seven of 137 (70.8%) patients rated overall hospital care as 9 or 10. The mean trust score for all patients was 40.7 ± 7.8, and the median was 41.5 (interquartile range [IQR], 37–47). The mean agreement score for all patients was 12.4 ± 2.4, and the median was 12 (IQR, 11–15). As shown in Table 3, satisfaction, trust, and agreement were similar for patients in the intervention group compared with the control group. Patients who received a facecard rated satisfaction, trust, and agreement slightly higher compared with those who had not received a facecard, but the results were not statistically significant.

Table 3. Satisfaction, Trust, and Agreement With Hospital Physicians

| Ratings | Control Group, N=72 | Intervention Group, N=66 | P Value |
|---|---|---|---|
| Satisfaction with physicians, n (%)ᵃ | 39 (54.2) | 42 (63.6) | 0.26 |
| Overall hospital satisfaction, n (%)ᵇ | 51 (70.8) | 46 (70.8) | 0.99 |
| Median trust (IQR)ᶜ | 42 (37–47) | 41 (37–46) | 0.81 |
| Median agreement (IQR)ᶜ | 12 (11–15) | 12 (12–15) | 0.72 |
| | **Did Not Receive Facecard, N=92** | **Received Facecard, N=46** | **P Value** |
| Satisfaction with physicians, n (%)ᵃ | 51 (55.4) | 30 (65.2) | 0.27 |
| Overall hospital satisfaction, n (%)ᵇ | 64 (69.6) | 33 (73.3) | 0.65 |
| Median trust (IQR)ᶜ | 41 (35–47) | 42 (38–47) | 0.32 |
| Median agreement (IQR)ᶜ | 12 (11–14.5) | 12.5 (12–15) | 0.37 |
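Trust and agreement scores were compared with Mann‐Whitney U tests. Because raw scores are not reported, the following standard‐library sketch of the test is exercised only on small hypothetical data. It assumes average ranks for ties, a tie‐corrected variance, and a normal approximation without continuity correction; statistical packages may differ in such details (eg, exact P values for small samples), and the function name is hypothetical.

```python
import math


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test using the normal approximation.

    Tied values get average ranks and the variance is tie-corrected;
    no continuity correction is applied. Returns (U for sample x, P value).
    """
    n1, n2 = len(x), len(y)
    if n1 == 0 or n2 == 0:
        raise ValueError("both samples must be non-empty")
    n = n1 + n2
    # Pool both samples, remembering which group each value came from.
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * n
    tie_sum = 0  # sum of (t^3 - t) over each run of t tied values
    i = 0
    while i < n:
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = average_rank
        t = j - i + 1
        tie_sum += t ** 3 - t
        i = j + 1
    rank_sum_x = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_sum / (n * (n - 1)))
    if var_u == 0:  # every value tied: no evidence of a difference
        return u_x, 1.0
    z = (u_x - mean_u) / math.sqrt(var_u)
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail
    return u_x, p_value


if __name__ == "__main__":
    # Hypothetical trust scores (10-50 scale), not study data.
    no_card = [35, 38, 41, 41, 44, 47, 50]
    card = [38, 42, 44, 46, 47, 49]
    print(mann_whitney_u(no_card, card))
```

A rank‐based test suits these scales because, like the satisfaction items, trust and agreement scores cluster near the top of their ranges.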

DISCUSSION

We found that receipt of physician facecards significantly improved patients' knowledge of the names and roles of hospital physicians but had little to no impact on satisfaction, trust, or agreement with physicians. Our finding of improved knowledge of the names and roles of physician providers is consistent with prior studies using similar interventions.[7, 8, 9] Facecards may have prompted more effective introductions on the part of physicians and may have served as memory aids for patients to better retain information about their newly introduced hospital physicians.

Patient receipt of the facecard on intervention units was incomplete in our study. Despite engagement of physicians in designing cards that could easily fit into lab coats and a robust strategy to inform and motivate physician delivery of facecards, only 68% of intended patients received them. Although not explicitly reported, prior studies appear to have similarly struggled to deliver interventions consistently. Arora and colleagues reported that facecards were visible in only 59% of patients' rooms among those able to correctly identify ≥1 of their physicians.[8] A post hoc survey of physicians involved in our study revealed the biggest impediment to delivering facecards was simply forgetting to do so (data not shown). Technologic innovations may help by automating the identification of providers. For example, the University of Pittsburgh Medical Center has piloted smart rooms that use sensor technology to announce the name and role of providers as they enter patients' rooms.[26]

We hypothesized that facecards might improve other important aspects of the patient‐physician relationship. Although levels of patient satisfaction were slightly higher in patients who had received facecards, the results were not statistically significant. Levels of trust and agreement were minimally higher in patients who received facecards, and the results were not statistically significant. Notably, baseline levels of trust and agreement were higher than we had expected. In fact, levels of trust were nearly identical to those seen in a prior study of outpatients who had been with the same physician for a median of 4 years.[22] Patients in our study may have had high levels of trust in the hospital and transferred this trust to their assigned physicians as representatives of the organization. The high level of agreement may relate to patients' tendency to prefer a more passive role as they encounter serious illness.[27, 28] Paradoxically, these findings may impede optimal patient care. The high levels of trust and agreement in the current study suggest that patients may not question their physicians to clarify plans and the rationale behind them. Prior research has shown that deficits in patients' comprehension of the care plan are often not apparent to patients or their physicians.[4, 29, 30]

Our study has several limitations. First, we assessed an intervention involving 4 units in a single hospital. Generalizability may be limited, as physician‐staffing models, hospitals, and the patients they serve vary. Second, as previously mentioned, patients in the intervention group did not receive physician facecards as consistently as intended. We conducted analyses based on treatment received in an effort to evaluate the impact of facecards if optimally delivered. Third, questions assessing satisfaction, trust, and agreement did not specifically ask patients to reflect on care provided by the primary physician team. It is possible that interactions with other physicians (eg, consultants) may have influenced these results. Fourth, we were underpowered to detect statistically significant improvements in satisfaction, trust, or agreement resulting from our intervention. Assuming the intervention might truly improve satisfaction with physicians from 54.2% to 63.6%, we would have needed 900 patients (ie, 450 each for the intervention and control groups) to have 80% power to detect a statistically significant difference. However, our results show that patients have high levels of trust and agreement with hospital physicians despite the relative lack of familiarity. Therefore, any existing deficits in hospitalized patients' comprehension of the care plan do not appear to be exacerbated by a lack of trust and/or agreement with treating physicians.
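The power statement above can be checked with the standard normal‐approximation sample‐size formula for comparing 2 proportions. The article does not report the formula, α, or software used; assuming a 2‐sided α of 0.05, 80% power, equal group sizes, and the Fleiss continuity correction, the sketch gives 450 patients per group, in line with the reported figure. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group(p1, p2, alpha=0.05, power=0.80, continuity=True):
    """Patients needed per arm to compare 2 proportions (equal group sizes).

    Normal-approximation formula, optionally with the Fleiss continuity
    correction; rounds up to a whole patient.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # 2-sided test
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    delta = abs(p2 - p1)
    n = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2 / delta ** 2
    if continuity:
        n = n / 4 * (1 + math.sqrt(1 + 4 / (n * delta))) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    # Satisfaction with physicians: 54.2% (control) vs 63.6% (intervention).
    per_group = n_per_group(0.542, 0.636)
    print(per_group, "per group;", 2 * per_group, "total")
```

Without the continuity correction the same inputs give 429 per group, so the reported total of 900 is consistent with a continuity‐corrected calculation.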

CONCLUSION

In summary, we found that physician facecards significantly improved patients' knowledge of the names and roles of hospital physicians but had little to no impact on satisfaction, trust, or agreement with physicians. Baseline levels of satisfaction, trust, and agreement were high, suggesting lack of familiarity with hospital physicians does not impede these important aspects of the patient‐physician relationship. Larger studies are needed to definitively assess the impact of facecards on satisfaction, trust, and agreement with physicians.

Acknowledgments

The authors express their gratitude to members of the NMH Patient and Family Advisory Council for providing input on the design of the physician facecard.

Disclosures: This study was supported by a grant from the Globe Foundation. The authors report no conflicts of interest.

References

1. Ability of hospitalized patients to identify their in‐hospital physicians. Arch Intern Med. 2009;169(2):199–201.

2. Does your patient know your name? An approach to enhancing patients' awareness of their caretaker's name. J Healthc Qual. 2005;27(4):53–56.

3. Hospitalized patients' understanding of their plan of care. Mayo Clin Proc. 2010;85(1):47–52.

4. Communication discrepancies between physicians and hospitalized patients. Arch Intern Med. 2010;170(15):1302–1307.

5. Accreditation Council for Graduate Medical Education. Common program requirements. Available at: http://www.acgme.org/acgmeweb/Portals/0/PFAssets/ProgramRequirements/CPRs2013.pdf. Revised July 1, 2013.

6. Transitions of Care Consensus policy statement: American College of Physicians, Society of General Internal Medicine, Society of Hospital Medicine, American Geriatrics Society, American College of Emergency Physicians, and Society for Academic Emergency Medicine. J Hosp Med. 2009;4(6):364–370.

7. Increasing a patient's ability to identify his or her attending physician using a patient room display. Arch Intern Med. 2010;170(12):1084–1085.

8. Improving inpatients' identification of their doctors: use of FACE cards. Jt Comm J Qual Patient Saf. 2009;35(12):613–619.

9. Patient satisfaction associated with correct identification of physician's photographs. Mayo Clin Proc. 2001;76(6):604–608.

10. Physician‐patient relationship and medication compliance: a primary care investigation. Ann Fam Med. 2004;2(5):455–461.

11. Trust in the health care system and the use of preventive health services by older black and white adults. Am J Public Health. 2009;99(7):1293–1299.

12. The role of patient‐physician trust in moderating medication nonadherence due to cost pressures. Arch Intern Med. 2005;165(15):1749–1755.

13. Trust and the acceptance of and adherence to antiretroviral therapy. J Acquir Immune Defic Syndr. 2001;28(1):47–58.

14. Linking primary care performance to outcomes of care. J Fam Pract. 1998;47(3):213–220.

15. The Stanford Trust Study Physicians. Further validation and reliability testing of the Trust in Physician Scale. Med Care. 1999;37(5):510–517.

16. The physician's actions and the outcome of illness in family practice. J Fam Pract. 1986;23(1):43–47.

17. Brief Report: Patient‐physician agreement as a predictor of outcomes in patients with back pain. J Gen Intern Med. 2005;20(10):935–937.

18. The influence of patient‐practitioner agreement on outcome of care. Am J Public Health. 1981;71(2):127–131.

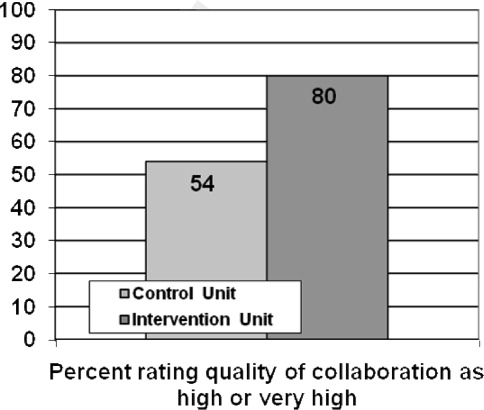

19. Impact of localizing physicians to hospital units on nurse‐physician communication and agreement on the plan of care. J Gen Intern Med. 2009;24(11):1223–1227.

20. The Lexile Framework. Durham, NC: Metametrics, Inc.; 1998.

21. National Center for Education Statistics. Assessing the Lexile Framework: results of a panel meeting. NCES Working Paper Series, No. 2001‐08. Washington, DC: US Department of Education, Office of Educational Research and Improvement; 2001.

22. Measuring patients' trust in their primary care providers. Med Care Res Rev. 2002;59(3):293–318.

23. The association of patient trust and self‐care among patients with diabetes mellitus. BMC Fam Pract. 2004;5:26.

24. Development, implementation, and public reporting of the HCAHPS survey. Med Care Res Rev. 2010;67(1):27–37.

25. Measuring hospital care from the patients' perspective: an overview of the CAHPS Hospital Survey development process. Health Serv Res. 2005;40(6 pt 2):1977–1995.

26. Smart rooms, smart care delivery: UPMC clinician leaders leverage technology for greater effectiveness in patient care. Healthc Inform. 2011;28(9):36, 38–39, 42.

27. Decision making during serious illness: what role do patients really want to play? J Clin Epidemiol. 1992;45(9):941–950.

28. The dynamics of change: cancer patients' preferences for information, involvement and support. Ann Oncol. 1997;8(9):857–863.

29. Patient‐physician communication at hospital discharge and patients' understanding of the postdischarge treatment plan. Arch Intern Med. 1997;157(9):1026–1030.

30. Patient comprehension of emergency department care and instructions: are patients aware of when they do not understand? Ann Emerg Med. 2009;53(4):454.e15–461.e15.

The patient‐physician relationship is fundamental to safe and effective care. Hospital settings present unique challenges to this partnership, including the lack of a prior relationship for hospital‐based physicians, rapid pace of clinical care, and dynamic nature of inpatient medical teams. Prior studies document that a majority of hospitalized patients are unable to correctly identify their physicians or nurses, and patients in teaching hospitals have difficulty understanding their physicians' level of training.[1, 2, 3, 4] Acknowledging these deficits, professional societies and the Accreditation Council for Graduate Medical Education (ACMGE) have issued policies stating that patients and caregivers need to know who is responsible at every point during patient care.[5, 6] These policies do not, however, make recommendations on methods to achieve better understanding.

Simple interventions improve patients' ability to correctly identify the names and roles of their hospital physicians. Maniaci and colleagues found that patients were better able to identify attending physicians when their names were written on the dry‐erase board in the room.[7] Arora and colleagues asked hospital physicians to give facecards, which included their picture and a description of their role, to patients.[8] Patients were more likely to correctly identify 1 physicians, but, surprisingly, less likely to understand physicians' roles. In a similar study, Francis and colleagues placed photographs with names of the attending and resident physicians on the wall in patient rooms.[9] Patients who had photographs of their physicians on the wall were more likely to correctly identify physicians on their team compared with patients who had no photographs. Additionally, patients who were able to identify more physicians rated satisfaction with physicians higher in 2 of 6 survey questions used. However, the study was limited by the use of a nonvalidated instrument to assess patient satisfaction and the use of an intermediate outcome (ie, ability to identify physicians) as the independent variable rather than the intervention itself (ie, physician photographs).

Beyond satisfaction, lack of familiarity may negatively impact patients' trust and agreement with hospital physicians. Trust and agreement are important predictors of adherence to recommended treatment in outpatient settings[10, 11, 12, 13, 14, 15, 16, 17, 18] but have not been adequately evaluated in hospital settings. Therefore, we sought to pilot the use of physician facecards and assess their potential impact on patients' knowledge of physicians' names and roles as well as patient satisfaction, trust, and agreement with physicians.

METHODS

Setting and Study Design

We performed a cluster randomized controlled trial at Northwestern Memorial Hospital (NMH), an 897‐bed tertiary‐care teaching hospital in Chicago, Illinois. One of 2 similar hospitalist service units and 1 of 2 similar teaching‐service units were randomly selected to implement the use of physician facecards. General medical patients were admitted to the study units by NMH bed‐assignment personnel subject to unit bed availability. No other criteria (eg, diagnosis, severity of illness, or source of patient admission) were used in patient assignment. Each unit consisted of 30 beds, with the exception of 1 hospitalist unit, which had 23. As a result of a prior intervention, physicians were localized to care for patients on specific units.[19] Hospitalist units were each staffed by hospitalists who worked in 7‐day rotations without the assistance of residents or midlevel providers. Teaching units were staffed by physician teams consisting of 1 attending, 1 senior resident, 1 intern, and 1 or 2 third‐year medical students. No fourth‐year students (ie, acting interns) rotated on these services during the study period. Housestaff worked in 4‐week rotations, and attending physicians on the teaching service worked in 2‐week rotations.

Patient rooms included a whiteboard facing the patient with a template prompting insertion of physician name(s). Nurses had the primary responsibility for completing information on the whiteboards.

Physician Facecard

We created draft physician facecards featuring pictures of physicians and descriptions of their roles. We used Lexile analysis, a widely used measure of reading difficulty, to improve readability in an iterative fashion.[20, 21] We then sought feedback at hospitalist and resident meetings. Specifically, we asked for suggested revisions to content and recommendations on reliable methods to deliver facecards to patients. Teaching physicians felt strongly that each team member should be listed and shown on 1 card, which would fit easily into a lab‐coat pocket. We similarly engaged the NMH Patient and Family Advisory Council to seek recommended revisions to content and delivery of the facecards. The Council consists of 18 patient and caregiver members who meet regularly to provide input on hospital programs and proposals. Council members felt strongly that physicians should deliver the cards themselves during their initial introduction, rather than having patients receive cards by other means (eg, as part of unit orientation materials delivered by nonphysician staff members). We incorporated feedback from these stakeholder groups into a final version of the physician facecard and method for delivery (Figure 1).

We implemented the use of facecards from May to June 2012. Physicians on intervention units were informed of the study via email, and one of the co‐investigators (T.C.) distributed a supply of facecards to these physicians at the start of each rotation. This distribution was performed in person, and physicians were instructed to provide a facecard to each new patient during their first encounter. We also placed facecards in easily visible cardholders at the nurses' station on intervention units. Reminder emails were sent once each week to reinforce physician delivery of facecards.

Data Collection and Measures

Each weekday during the study period, we randomly selected patients for structured interviews in the afternoon of their second or third hospital day. We did not conduct interviews on the first day of physicians' rotations and excluded patients whose preferred language was not English and those disoreinted to person, place, or time.

Patients were asked to name the physician(s) primarily responsible for their hospital care and to state the role of each physician they identified. We documented receipt of facecards if one was viewed during the interview and by asking patients if they had received one. We also documented whether 1 correct physician names were written on the whiteboard in the patients' rooms. We used questions from the Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey to assess satisfaction with physician communication and overall hospital care. HCAHPS is a validated patient‐satisfaction survey developed by the Agency for Healthcare Research and Quality (AHRQ) to assess hospitalized patients' experiences with care. Physician‐communication questions used ordinal response options of never, sometimes, usually, and always. Overall hospital rating was assessed using a 010 scale with 0=worst hospital possible and 10=best hospital possible. Trust with physicians was assessed using the Wake Forest University Trust Scale.[22] Prior research using this instrument has shown an association between trust and self‐management behaviors.[23] This 10‐item scale uses a 5‐point Likert scale and generates scores ranging from 10 to 50. Agreement with physicians was assessed using 3 questions used in a prior study by Staiger and colleagues showing an association between levels of agreement and health outcomes among outpatients treated for back pain.[17] Specifically, we asked patients to rate their agreement with hospital physicians' (1) explanation for the cause of primary symptoms, (2) plan for diagnostic tests, and (3) suggested plan for treatment using a 5‐point Likert scale. The agreement scale generated scores ranging from 3 to 15.

Approval for the study was obtained from the institutional review board of Northwestern University.

Statistical Analysis

Patient demographic data were obtained from the electronic health record and supplemented the interview data. We used χ² and t tests to compare patient characteristics. We used χ² tests to compare the percentage of patients able to correctly identify at least 1 of their physicians and at least 1 of their physicians' roles. We also used χ² tests to compare the percentage of patients giving top‐box ratings (ie, always) to all 3 physician‐communication satisfaction questions and the percentage giving an overall hospital rating of 9 or 10. We used top‐box comparisons, rather than comparisons of mean or median scores, because patient‐satisfaction data are typically highly skewed toward favorable responses; this approach is consistent with prior HCAHPS research.[24, 25] We used Mann‐Whitney U tests to compare trust and agreement scores. Because delivery of facecards was imperfect, we performed analyses both by intention to treat (ie, intervention vs control units) and by treatment received (ie, received a facecard vs did not receive a facecard). All analyses were conducted using Stata version 11.2 (StataCorp, College Station, TX).
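The χ² comparisons of proportions above can be reproduced from the published counts with a 2 × 2 Pearson χ² test. This is a minimal sketch, not the authors' Stata code: it assumes the uncorrected Pearson statistic (no Yates continuity correction), which with the counts reported in the Results gives the same rounded P values. The function name is hypothetical.

```python
import math


def pearson_chi2_2x2(a: int, b: int, c: int, d: int) -> tuple[float, float]:
    """Pearson chi-square for a 2x2 table, without continuity correction.

    Rows are groups, columns are outcome yes/no:
        group 1: a (yes), b (no)
        group 2: c (yes), d (no)
    Returns (statistic, two-sided P value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("a row or column total is zero")
    chi2 = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 df, P(X > chi2) = P(|Z| > sqrt(chi2)) = erfc(sqrt(chi2 / 2)).
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p


if __name__ == "__main__":
    # Table 2: correctly named >=1 physician, control 42/72 vs intervention 46/66
    stat, p = pearson_chi2_2x2(42, 72 - 42, 46, 66 - 46)
    print(f"chi2 = {stat:.2f}, P = {p:.2f}")  # chi2 = 1.92, P = 0.17
```

The same function applied to satisfaction with physicians (39 of 72 vs 42 of 66) gives P ≈ 0.26, and applied to facecard receipt on the hospitalist vs teaching intervention units (23 of 30 vs 22 of 36) gives P ≈ 0.18, matching the reported values.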

RESULTS

Study Subjects and Facecard Receipt

Overall, 217 patients were approached for interview. Thirty‐six were excluded because of disorientation, 12 were excluded because their preferred language was not English, and 31 declined to participate in the study. Patient characteristics for the 138 study patients are shown in Table 1. There were no significant differences in patient age, sex, or race. There was no significant difference in the percentage of patients whose whiteboard listed the correct name of at least 1 physician. Delivery of facecards was incomplete, with only 68% of intervention‐unit patients confirmed as having received them. A higher percentage of patients on the hospitalist intervention unit received facecards (23 of 30; 76.7%) than on the teaching intervention unit (22 of 36; 61.1%), but the difference was not statistically significant (P=0.18). There were no significant differences in age, sex, or race between patients who received a facecard and those who did not.

Table 1. Patient Characteristics

| Characteristic | Control Group, N=72 | Intervention Group, N=66 | P Value |
|---|---|---|---|
| Mean age, years (SD) | 56.8 (18.0) | 55.2 (18.2) | 0.62 |
| Women, n (%) | 35 (48.6) | 28 (42.4) | 0.47 |
| Nonwhite race, n (%) | 35 (50.7) | 36 (57.1) | 0.46 |
| Teaching unit, n (%) | 34 (47.2) | 36 (54.6) | 0.39 |
| Correct physician name on whiteboard, n (%)ᵃ | 46 (76.7) | 37 (72.6) | 0.62 |
| Received a facecard, n (%) | 1 (1) | 45 (68.2) | <0.01 |

Patients' Knowledge of Physicians

As shown in Table 2, more patients in the intervention group than in the control group were able to correctly identify at least 1 of their treating physicians, but the difference was not statistically significant (69.7% vs 58.3%; P=0.17). A significantly larger percentage of patients in the intervention group were able to identify the role of their hospital physicians (51.5% vs 16.7%; P<0.01). In the analysis by treatment received, patients who were given a facecard were more likely than those who were not to correctly identify at least 1 hospital physician (89.1% vs 51.1%; P<0.01). Similarly, patients who had received a facecard were more likely to correctly identify the role of their hospital physician than patients who had not (67.4% vs 16.3%; P<0.01).

Table 2. Patients' Knowledge of Physicians' Names and Roles

| Impact | Control Group, N=72, n (%) | Intervention Group, N=66, n (%) | P Value |
|---|---|---|---|
| Patient correctly named ≥1 hospital physician | 42 (58.3) | 46 (69.7) | 0.17 |
| Patient correctly named role of hospital physician | 12 (16.7) | 34 (51.5) | <0.01 |
| | **Did Not Receive Facecard, N=92, n (%)** | **Received Facecard, N=46, n (%)** | **P Value** |
| Patient correctly named ≥1 hospital physician | 47 (51.1) | 41 (89.1) | <0.01 |
| Patient correctly named role of hospital physician | 15 (16.3) | 31 (67.4) | <0.01 |

Levels of Satisfaction, Trust, and Agreement

Overall, patients reported high levels of satisfaction, trust, and agreement with hospital physicians. Overall satisfaction with physician communication was 75.6% (the mean of the top‐box scores for the 3 items), and 81 of 138 (58.7%) patients gave top‐box ratings to all 3 physician‐communication satisfaction items. Ninety‐seven of 137 (70.8%) patients rated overall hospital care as 9 or 10. The mean trust score for all patients was 40.7 ± 7.8, and the median was 41.5 (interquartile range [IQR], 37–47). The mean agreement score for all patients was 12.4 ± 2.4, and the median was 12 (IQR, 11–15). As shown in Table 3, satisfaction, trust, and agreement were similar in the intervention and control groups. Patients who received a facecard rated satisfaction, trust, and agreement slightly higher than those who had not, but these differences were not statistically significant.

Table 3. Satisfaction, Trust, and Agreement With Hospital Physicians

| Ratings | Control Group, N=72 | Intervention Group, N=66 | P Value |
|---|---|---|---|
| Satisfaction with physicians, n (%)ᵃ | 39 (54.2) | 42 (63.6) | 0.26 |
| Overall hospital satisfaction, n (%)ᵇ | 51 (70.8) | 46 (70.8) | 0.99 |
| Median trust (IQR)ᶜ | 42 (37–47) | 41 (37–46) | 0.81 |
| Median agreement (IQR)ᶜ | 12 (11–15) | 12 (12–15) | 0.72 |
| | **Did Not Receive Facecard, N=92** | **Received Facecard, N=46** | **P Value** |
| Satisfaction with physicians, n (%)ᵃ | 51 (55.4) | 30 (65.2) | 0.27 |
| Overall hospital satisfaction, n (%)ᵇ | 64 (69.6) | 33 (73.3) | 0.65 |
| Median trust (IQR)ᶜ | 41 (35–47) | 42 (38–47) | 0.32 |
| Median agreement (IQR)ᶜ | 12 (11–14.5) | 12.5 (12–15) | 0.37 |

DISCUSSION

We found that receipt of physician facecards significantly improved patients' knowledge of the names and roles of hospital physicians but had little to no impact on satisfaction, trust, or agreement with physicians. Our finding of improved knowledge of the names and roles of physician providers is consistent with prior studies using similar interventions.[7, 8, 9] Facecards may have prompted more effective introductions on the part of physicians and may have served as memory aids for patients to better retain information about their newly introduced hospital physicians.

Patient receipt of the facecard on intervention units was incomplete in our study. Despite engaging physicians in designing cards that could easily fit into lab coats and a robust strategy to inform and motivate physician delivery of facecards, only 68% of intended patients received them. Although this was not explicitly reported, prior studies appear to have similarly struggled to deliver interventions consistently. Arora and colleagues reported that facecards were visible in only 59% of patients' rooms among those able to correctly identify at least 1 of their physicians.[8] A post hoc survey of physicians involved in our study revealed that the biggest impediment to delivering facecards was simply forgetting to do so (data not shown). Technologic innovations may help by automating the identification of providers. For example, the University of Pittsburgh Medical Center has piloted smart rooms that use sensor technology to announce the name and role of providers as they enter patients' rooms.[26]

We hypothesized that facecards might improve other important aspects of the patient‐physician relationship. Although satisfaction was slightly higher among patients who had received facecards, the difference was not statistically significant. Trust and agreement were only minimally higher among patients who received facecards, and these differences were also not statistically significant. Notably, baseline levels of trust and agreement were higher than we had expected. In fact, levels of trust were nearly identical to those seen in a prior study of outpatients who had been with the same physician for a median of 4 years.[22] Patients in our study may have had high levels of trust in the hospital and transferred this trust to their assigned physicians as representatives of the organization. The high level of agreement may relate to patients' tendency to prefer a more passive role as they encounter serious illness.[27, 28] Paradoxically, such high levels of trust and agreement may impede optimal patient care, because they suggest that patients may not question their physicians to clarify plans and the rationale behind them. Prior research has shown that deficits in patients' comprehension of the care plan are often not apparent to patients or their physicians.[4, 29, 30]

Our study has several limitations. First, we assessed an intervention involving 4 units in a single hospital. Generalizability may be limited because hospitals, their physician‐staffing models, and the patients they serve vary. Second, as previously mentioned, patients in the intervention group did not receive physician facecards as consistently as intended. We conducted analyses based on treatment received in an effort to evaluate the impact of facecards if optimally delivered. Third, questions assessing satisfaction, trust, and agreement did not specifically ask patients to reflect on care provided by the primary physician team, so interactions with other physicians (eg, consultants) may have influenced these results. Fourth, we were underpowered to detect statistically significant improvements in satisfaction, trust, or agreement resulting from our intervention. Assuming the intervention truly improved satisfaction with physicians from 54.2% to 63.6% (the proportions observed in the control and intervention groups), we would have needed 900 patients (ie, 450 each in the intervention and control groups) to have 80% power to detect a statistically significant difference. Nevertheless, our results show that patients have high levels of trust and agreement with hospital physicians despite their relative lack of familiarity. Therefore, any existing deficits in hospitalized patients' comprehension of the care plan do not appear to be exacerbated by a lack of trust and/or agreement with treating physicians.
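The sample‐size figure in the limitations can be checked with the standard normal‐approximation formula for comparing two independent proportions. This is a sketch under stated assumptions, not the authors' calculation: it assumes a two‐sided α of 0.05 and applies the Fleiss continuity correction, since the text does not say which formula or software command was used. The function name is hypothetical.

```python
import math
from statistics import NormalDist


def n_per_group_two_proportions(p1: float, p2: float, alpha: float = 0.05,
                                power: float = 0.80,
                                continuity: bool = True) -> int:
    """Per-group sample size to compare two independent proportions.

    Two-sided test at level alpha, equal group sizes, normal approximation,
    optionally with the Fleiss continuity correction (an assumption here).
    """
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    delta = abs(p1 - p2)
    n = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
         + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2 / delta ** 2
    if continuity:
        # Fleiss continuity correction for equal group sizes
        n = n / 4 * (1 + math.sqrt(1 + 4 / (n * delta))) ** 2
    return math.ceil(n)


if __name__ == "__main__":
    n = n_per_group_two_proportions(0.542, 0.636)
    print(n, 2 * n)  # 450 900
```

With the correction, the sketch gives 450 per group (900 total), consistent with the figure in the text; setting `continuity=False` gives 429 per group, so the reported number appears to include a continuity correction.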

CONCLUSION

In summary, we found that physician facecards significantly improved patients' knowledge of the names and roles of hospital physicians but had little to no impact on satisfaction, trust, or agreement with physicians. Baseline levels of satisfaction, trust, and agreement were high, suggesting that lack of familiarity with hospital physicians does not impede these important aspects of the patient‐physician relationship. Larger studies are needed to definitively assess the impact of facecards on satisfaction, trust, and agreement with physicians.

Acknowledgments

The authors express their gratitude to members of the NMH Patient and Family Advisory Council for providing input on the design of the physician facecard.

Disclosures: This study was supported by a grant from the Globe Foundation. The authors report no conflicts of interest.

- Ability of hospitalized patients to identify their in‐hospital physicians. Arch Intern Med. 2009;169(2):199–201.
- Does your patient know your name? An approach to enhancing patients' awareness of their caretaker's name. J Healthc Qual. 2005;27(4):53–56.
- Hospitalized patients' understanding of their plan of care. Mayo Clin Proc. 2010;85(1):47–52.
- Communication discrepancies between physicians and hospitalized patients. Arch Intern Med. 2010;170(15):1302–1307.
- Accreditation Council for Graduate Medical Education. Common program requirements. Available at: http://www.acgme.org/acgmeweb/Portals/0/PFAssets/ProgramRequirements/CPRs2013.pdf. Revised July 1, 2013.
- Transitions of Care Consensus policy statement: American College of Physicians, Society of General Internal Medicine, Society of Hospital Medicine, American Geriatrics Society, American College Of Emergency Physicians, and Society for Academic Emergency Medicine. J Hosp Med. 2009;4(6):364–370.
- Increasing a patient's ability to identify his or her attending physician using a patient room display. Arch Intern Med. 2010;170(12):1084–1085.
- Improving inpatients' identification of their doctors: use of FACE cards. Jt Comm J Qual Patient Saf. 2009;35(12):613–619.
- Patient satisfaction associated with correct identification of physician's photographs. Mayo Clin Proc. 2001;76(6):604–608.
- Physician‐patient relationship and medication compliance: a primary care investigation. Ann Fam Med. 2004;2(5):455–461.
- Trust in the health care system and the use of preventive health services by older black and white adults. Am J Public Health. 2009;99(7):1293–1299.
- The role of patient‐physician trust in moderating medication nonadherence due to cost pressures. Arch Intern Med. 2005;165(15):1749–1755.
- Trust and the acceptance of and adherence to antiretroviral therapy. J Acquir Immune Defic Syndr. 2001;28(1):47–58.
- Linking primary care performance to outcomes of care. J Fam Pract. 1998;47(3):213–220.
- The Stanford Trust Study Physicians. Further validation and reliability testing of the Trust in Physician Scale. Med Care. 1999;37(5):510–517.
- The physician's actions and the outcome of illness in family practice. J Fam Pract. 1986;23(1):43–47.
- Brief Report: Patient‐physician agreement as a predictor of outcomes in patients with back pain. J Gen Intern Med. 2005;20(10):935–937.
- The influence of patient‐practitioner agreement on outcome of care. Am J Public Health. 1981;71(2):127–131.
- Impact of localizing physicians to hospital units on nurse‐physician communication and agreement on the plan of care. J Gen Intern Med. 2009;24(11):1223–1227.
- The Lexile Framework. Durham, NC: Metametrics, Inc.; 1998.
- National Center for Education Statistics. Assessing the Lexile Framework: results of a panel meeting. NCES Working Paper Series, No. 2001‐08. Washington, DC: US Department of Education, Office of Educational Research and Improvement; 2001.
- Measuring patients' trust in their primary care providers. Med Care Res Rev. 2002;59(3):293–318.
- The association of patient trust and self‐care among patients with diabetes mellitus. BMC Fam Pract. 2004;5:26.
- Development, implementation, and public reporting of the HCAHPS survey. Med Care Res Rev. 2010;67(1):27–37.
- Measuring hospital care from the patients' perspective: an overview of the CAHPS Hospital Survey development process. Health Serv Res. 2005;40(6 pt 2):1977–1995.
- Smart rooms, smart care delivery: UPMC clinician leaders leverage technology for greater effectiveness in patient care. Healthc Inform. 2011;28(9):36, 38–39, 42.
- Decision making during serious illness: what role do patients really want to play? J Clin Epidemiol. 1992;45(9):941–950.
- The dynamics of change: cancer patients' preferences for information, involvement and support. Ann Oncol. 1997;8(9):857–863.

- , , , et al. Patient‐physician communication at hospital discharge and patients' understanding of the postdischarge treatment plan. Arch Intern Med. 1997;157(9):1026–1030.

- , , , , , . Patient comprehension of emergency department care and instructions: are patients aware of when they do not understand? Ann Emerg Med. 2009;53(4):454.e15–461.e15.

© 2013 Society of Hospital Medicine

Evidence Needing a Lift

In this issue of the Journal of Hospital Medicine, Hansen and colleagues provide a first, early look at the effectiveness of the BOOST intervention to reduce 30‐day readmissions among hospitalized patients.[1] BOOST[2] is 1 of a number of care transition improvement methodologies that have been applied to the problem of readmissions, each of which has evidence to support its effectiveness in its initial settings[3, 4] but has proven to be difficult to translate to other sites.[5, 6, 7]

BOOST stands in contrast with other, largely research protocol‐derived, programs in that it allows sites to tailor adoption of recommendations to local contexts and is therefore potentially more feasible to implement. Feasibility and practicality have led to BOOST's adoption in large national settings, even though there has been little evidence to date to support its effectiveness.

Given the nonstandardized and ad hoc nature of most multicenter collaboratives generally, and the flexibility of the BOOST model specifically, the BOOST authors are to be commended for undertaking any evaluation at all. Perhaps not surprisingly, they encountered many of the problems associated with a multicenter study: dropout of sites, problematic data, and limited evidence for adoption of the intervention at participating hospitals. Although these represent real‐world experiences of a quality‐improvement program, as a group they pose a number of problems that limit the study's robustness and generate important caveats that readers should use to temper their interpretation of the authors' findings.

The first caveat relates to the substantial number of sites that either dropped out of BOOST or failed to submit data after enlisting in the collaborative. Although this may be common in quality improvement collaboratives, similar problems would not be permissible in a trial of a new drug or device. Dropout and selective data submission suggest that not every site may be able to fully adopt BOOST. They also raise the possibility of bias, because the least successful sites may have had less interest in remaining engaged and submitting data.

The second caveat relates to how readmission rates were assessed. Because sites reported unit‐level readmission rates rather than the actual counts of admissions and readmissions, the authors were unable to conduct the statistical analyses typically performed for these interventions, such as time series or difference‐in‐differences analyses. More importantly, one cannot discern whether their results are driven by a small absolute but large relative change in the number of readmissions at small sites. That is, large percentage changes of low statistical significance could have disproportionately influenced the overall results. Conversely, one cannot identify large sites where a similar relative reduction could be statistically significant and more broadly interpreted as representing the real effectiveness of BOOST efforts.
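The following sketch shows why counts matter. It uses entirely hypothetical numbers, not BOOST data. Two sites each cut their readmission rate from 20% to 15%, a 25% relative reduction. One site discharges 100 patients per period and the other discharges 2,000. The helper `two_proportion_z_test` is a hypothetical name for a standard pooled two‐proportion z‐test. It was chosen only as a simple illustration and is not the analysis the authors used or should have used. It ignores clustering, secular trends, and case mix, so treat it as a teaching sketch rather than a recommended evaluation method. Note that it cannot be computed from rates alone: it needs the underlying counts.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(x1: int, n1: int, x2: int, n2: int) -> tuple[float, float]:
    """Hypothetical helper: pooled two-proportion z-test.

    x1/n1 = readmissions/discharges before the intervention,
    x2/n2 = readmissions/discharges after it.
    Returns (z statistic, two-sided p-value).
    """
    p1, p2 = x1 / n1, x2 / n2
    pooled = (x1 + x2) / (n1 + n2)  # common rate under the null hypothesis
    se = sqrt(pooled * (1 - pooled) * (1 / n1 + 1 / n2))
    z = (p1 - p2) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical sites: both cut readmissions from 20% to 15% (25% relative drop).
sites = {
    "small site": (20, 100, 15, 100),      # 20/100 -> 15/100
    "large site": (400, 2000, 300, 2000),  # 400/2000 -> 300/2000
}

for name, (x1, n1, x2, n2) in sites.items():
    z, p = two_proportion_z_test(x1, n1, x2, n2)
    relative = (x1 / n1 - x2 / n2) / (x1 / n1)
    print(f"{name}: relative reduction {relative:.0%}, z = {z:.2f}, p = {p:.4f}")
```

At the small site, the reduction is far from significant (z of about 0.93, p of roughly 0.35). The identical relative reduction at the large site yields a p-value far below 0.001. A summary built only from percentage changes would treat the two sites as equally informative.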

The third caveat concerns the data describing the sites' performance. The effectiveness of BOOST in this analysis varied greatly among sites: only 1 site showed a strong reduction in readmission rate, and nearly all others showed no statistically significant improvement. In fact, the overall results appear to have been driven almost entirely by the improvements at that 1 site.
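The arithmetic behind this caveat can be sketched with hypothetical site‐level changes, invented for illustration and not taken from the study. Nine sites' readmission rates barely move, and one site, labeled J, shows a large drop. An unweighted mean across sites then reports an overall improvement that comes almost entirely from J. The median, a summary that resists a single outlier, stays near zero.

```python
from statistics import mean, median

# Hypothetical change in 30-day readmission rate (percentage points,
# post minus pre) at 10 sites; NOT the BOOST data. Site "J" is the
# single strong responder.
changes = {
    "A": 0.4, "B": -0.3, "C": 0.1, "D": -0.2, "E": 0.0,
    "F": 0.3, "G": -0.1, "H": 0.2, "I": -0.4, "J": -6.0,
}

# Unweighted mean across all sites, then with the outlier removed.
all_sites = mean(changes.values())
without_j = mean(v for k, v in changes.items() if k != "J")

print(f"mean change, all sites:   {all_sites:+.2f} points")
print(f"mean change, excluding J: {without_j:+.2f} points")
print(f"median change, all sites: {median(changes.values()):+.2f} points")
```

In this example, the all‐site mean is about -0.6 percentage points. Excluding site J brings the mean to zero, and the median is -0.05. This pattern becomes visible only when site‐level results are reported alongside any pooled figure.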

Variable effectiveness of an intervention can be related to variable adoption or contextual factors (such as availability of personnel to implement the program). Although these authors have data on BOOST programmatic adoption, they do not have qualitative data on local barriers and facilitators to BOOST implementation, which at this stage of evaluation would be particularly valuable in understanding the results. Analyzing site‐level effectiveness is of growing relevance to multicenter quality improvement collaboratives,[8, 9] but this evaluation provides little insight into reasons for variable success across institutions.

Finally, the study design leaves a number of key questions unanswered. How many patients were involved in the intervention? How many received all BOOST‐recommended interventions? Which of these interventions seemed most effective, and in which patients? To what degree did patients' severity of illness, cognitive status, social supports, or access to primary care influence readmission risk? Such information would help guide cost‐effective deployment of BOOST or related tools.

In the end, it seems unlikely that this iteration of the BOOST program produced broad reductions in readmission rates. Having said this, the authors provide the necessary start down the road toward a fuller understanding of real‐world efforts to reduce readmissions. Put another way, the nuances and flaws of this study provide ample fodder for others working in the field. BOOST is in good company with other care transition models that have not translated well from their initial research environment to real‐world practice. The question now is: Do any of these interventions actually work in clinical practice settings, and will we ever know? Even more fundamentally, how important and meaningful are these hospital‐based care transition interventions? Where is the engagement with primary care? Where are the primary care outcomes? Does BOOST truly affect outcomes other than readmission?[10]

Doing high‐quality research in the context of a rapidly evolving quality improvement program is hard. Doing it at more than 1 site is harder. BOOST's flexibility is both a great source of strength and a clear challenge to rigorous evaluation. However, when the costs of care transition programs are so high, and the potential consequences of high readmission rates are so great for patients and for hospitals, the need to address these issues with real data and better evidence is paramount. We look forward to the next phase of BOOST and to the growth and refinement of the evidence base for how to improve care coordination and transitions effectively.

- Project BOOST: effectiveness of a multihospital effort to reduce rehospitalization. J Hosp Med. 2013;8:421–427.

- BOOSTing the hospital discharge. J Hosp Med. 2009;4:209–210.

- A reengineered hospital discharge program to decrease rehospitalization: a randomized trial. Ann Intern Med. 2009;150:178–187.

- Comprehensive discharge planning and home follow‐up of hospitalized elders: a randomized clinical trial. JAMA. 1999;281:613–620.

- Effectiveness and cost of a transitional care program for heart failure: a prospective study with concurrent controls. Arch Intern Med. 2011;171:1238–1243.

- Hospitals question Medicare rules on readmissions. New York Times. March 29, 2013. Available at: http://www.nytimes.com/2013/03/30/business/hospitals-question-fairness-of-new-medicare-rules.html?pagewanted=all
