Mixed leukemias can benefit from allo-HCT

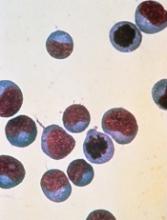

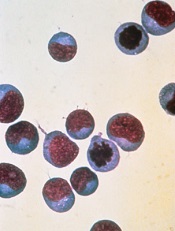

ORLANDO – Allogeneic hematopoietic stem cell transplantation using a matched donor is a valid treatment option, and potential cure, for mixed-phenotype acute leukemias (leukemias with markers of both myeloid and lymphoid lineages), according to findings from the Acute Leukemia Working Party of the European Group for Blood and Marrow Transplantation (ALWP-EBMT) database.

Among 519 patients in the database who received an allogeneic transplant (allo-HCT) for mixed-phenotype acute leukemia (MPAL) in first complete remission (CR1) between 2000 and 2014, 3-year outcomes included overall survival of 56.3%, leukemia-free survival of 46.5%, relapse incidence of 31.4%, nonrelapse mortality of 22.1%, and chronic graft-versus-host disease (GVHD) incidence of 37.5%, Reinhold Munker, MD, reported at the combined annual meetings of the Center for International Blood & Marrow Transplant Research and the American Society for Blood and Marrow Transplantation.

“The outcome in this large adult study is pretty favorable based upon 519 patients; 45%-65% can expect overall survival at 5 years,” he said.

The median age of the study subjects was 38.1 years (range, 18-75). Transplants were from a matched sibling donor in 54.5% of cases and from a matched unrelated donor in 45.5%. Myeloablative conditioning was used in 400 patients: chemotherapy alone in 140 and chemotherapy plus total-body irradiation in 260. The remaining 119 patients received nonmyeloablative conditioning, said Dr. Munker of Tulane University, New Orleans.

The stem cell source was bone marrow in 26% of patients and peripheral blood in 73%. Grade II-IV acute GVHD developed in 32.5% of patients. Median follow-up was 32 months, he noted.

In univariate analysis, age at transplant was strongly associated with leukemia-free survival, nonrelapse mortality, relapse incidence, and overall survival; outcomes were best among patients aged 18-35 years. Compared with transplants done in 2000-2004, those done in 2005-2014 had lower nonrelapse mortality (20% vs. 33.2%) and higher overall survival (58.3% vs. 44.7%). Outcomes did not differ between related and unrelated donors, but chronic GVHD was more common with female donors and male recipients, without in vivo T-cell depletion, and with peripheral blood stem cells, findings that are not unexpected, Dr. Munker noted.

Myeloablative conditioning with total-body irradiation correlated with a lower relapse incidence and better leukemia-free survival than either chemotherapy-only myeloablative conditioning or reduced-intensity conditioning, he said.

In multivariate analysis, younger age and a more recent year of transplant were associated with better leukemia-free survival and overall survival, and myeloablative conditioning with total-body irradiation was associated with better leukemia-free survival and a trend toward higher overall survival.

MPALs are rare, accounting for only 2%-5% of all acute leukemias, Dr. Munker said, noting that prognosis is considered to be intermediate in children and unfavorable in adults.

The World Health Organization (WHO) revised the diagnostic criteria for MPAL in 2008, and most centers have accepted them, but until recently data were lacking to support the recommended treatment strategy of induction with regimens similar to those used for acute lymphoblastic leukemia, followed by consolidation with allogeneic transplant, he explained.

However, the Center for International Blood and Marrow Transplant Research last year published a series of 95 cases showing encouraging long-term survival with allo-HCT in MPAL patients with a median age of 20 years.

The current findings confirm and extend those prior findings, Dr. Munker said.

Dr. Munker reported having no disclosures.

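Key clinical point: Allogeneic HCT from a matched donor is a valid treatment option, and potential cure, for adults with mixed-phenotype acute leukemia.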

Major finding: Treatment outcomes at 3 years included overall survival of 56.3%, leukemia-free survival of 46.5%, relapse incidence of 31.4%, nonrelapse mortality of 22.1%, and incidence of chronic graft-versus-host disease of 37.5%.

Data source: A review of 519 cases from the ALWP-EBMT database.

Disclosures: Dr. Munker reported having no disclosures.

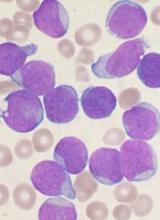

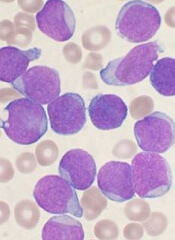

Pre- and post-HCT MRD levels predict ALL survival

ORLANDO – Minimal residual disease (MRD) measured before and after allogeneic hematopoietic stem cell transplantation (HCT) is a powerful predictor of survival in children with acute lymphoblastic leukemia (ALL), according to a review of 747 cases from centers in Europe, North America, and Australia.

The findings could inform the use of MRD measurements to guide posttransplant interventions, Michael A. Pulsipher, MD, reported at the combined annual meetings of the Center for International Blood & Marrow Transplant Research and the American Society for Blood and Marrow Transplantation.

“MRD pretransplant was a very powerful predictor of outcomes. MRD posttransplant highlights individual patients at risk,” Dr. Pulsipher said. The results comparing real-time quantitative polymerase chain reaction with flow cytometry still require validation by direct comparison in the same patient cohort, he noted, but “the new risk scores ... very nicely predict outcomes both pre- and post-transplant and can guide study planning and patient counseling.”

A total of 2,960 bone marrow MRD measurements were performed in the 747 patients included in the study. MRD was assessed before HCT and on or near days 30, 60, 100, 180, and 365 (and later) after HCT.

Patients were grouped for analysis according to MRD level: group 1 had no detectable MRD, group 2 had low detectable MRD (less than 10^-4, i.e., below 0.01% by flow cytometry), and group 3 had high detectable MRD (10^-4 or higher). A second analysis compared findings in patients tested by flow cytometry with those in patients tested by real-time quantitative PCR (RQ-PCR), said Dr. Pulsipher of Children’s Hospital Los Angeles.
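The three-group scheme is a simple threshold rule. The sketch below is a minimal illustration, not the study's code: it assumes MRD is expressed as a fraction of cells (10^-4 = 0.01%) and that an undetectable result is recorded as `None` or `0`. The name `mrd_group` is hypothetical, and real assays also have method-specific detection limits that this ignores.

```python
from typing import Optional

# Cutoff between "low" and "high" detectable MRD: 10^-4, i.e. one leukemic
# cell per 10,000 cells (0.01%). Written as 1e-4 because in Python 10e-4
# means 10 x 10^-4 = 0.001, ten times too large.
HIGH_MRD_CUTOFF = 1e-4


def mrd_group(mrd_fraction: Optional[float]) -> int:
    """Map one MRD result to study group 1, 2, or 3 (hypothetical helper).

    Assumption for illustration: None or 0.0 encodes "no detectable MRD".
    """
    if mrd_fraction is None or mrd_fraction == 0:
        return 1  # group 1: no detectable MRD
    if mrd_fraction < 0:
        raise ValueError("MRD fraction cannot be negative")
    if mrd_fraction < HIGH_MRD_CUTOFF:
        return 2  # group 2: detectable but below 10^-4
    return 3  # group 3: 10^-4 or higher


if __name__ == "__main__":
    for value in (None, 5e-5, 1e-4, 2e-3):
        print(value, "-> group", mrd_group(value))
```

The "10E-4" style of notation is easy to misread: as a floating-point literal it denotes 10^-3, so spelling the cutoff as `1e-4` avoids shifting the group boundary by a factor of 10.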

In 648 patients with pre-HCT MRD measurements available, the 4-year probability of event-free survival was 62%, 67%, and 37% for groups 1, 2, and 3, respectively. Group 3, the high-MRD group, had a 2.47-fold higher hazard of relapse and a 1.67-fold higher risk of treatment-related mortality, Dr. Pulsipher said. Pre-HCT MRD and remission status both significantly influenced survival, he added, whereas age, sex, relapse site, cytogenetics, donor type, and stem cell source did not.

Post-HCT MRD values were analyzed as time-dependent covariates.
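Treating MRD as a time-dependent covariate means each patient's follow-up is split at every new measurement, so the risk at any moment is tied to the most recent MRD result rather than to a single baseline value. The sketch below assumes a generic counting-process (start, stop] layout of the kind used for Cox models with time-varying covariates; the patient data, the `Interval` type, and the `to_counting_process` helper are all hypothetical, and this is not the study's analysis code.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Interval:
    start: int       # day after HCT at which this interval opens
    stop: int        # day it closes (next measurement, relapse, or censoring)
    mrd_group: int   # most recent MRD group, carried forward until the next test
    event: bool      # True only for the interval that ends in relapse


def to_counting_process(
    measurements: List[Tuple[int, int]], end_day: int, relapsed: bool
) -> List[Interval]:
    """Split one patient's follow-up at each MRD measurement (hypothetical helper).

    measurements: (day, mrd_group) pairs, at most one per day; day 0 can hold
    the pre-HCT value.
    end_day: day of relapse (if relapsed) or of last follow-up (if censored).
    """
    ordered = sorted(measurements)
    rows: List[Interval] = []
    for i, (day, group) in enumerate(ordered):
        if day >= end_day:
            break  # measurements on or after the end of follow-up are ignored
        next_day = ordered[i + 1][0] if i + 1 < len(ordered) else end_day
        rows.append(Interval(day, min(next_day, end_day), group, False))
    if rows and relapsed:
        rows[-1].event = True  # relapse closes the final interval
    return rows


if __name__ == "__main__":
    # Hypothetical patient: MRD-negative before HCT, low-positive at day 30,
    # high-positive at day 60, relapse on day 150.
    for row in to_counting_process([(0, 1), (30, 2), (60, 3)], 150, True):
        print(row)
```

Rows in this shape can be passed to survival software that accepts (start, stop, event) data, such as R's `survival` package via `Surv(start, stop, event)`, so that the estimated hazard reflects whichever MRD group was current at each point in follow-up.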

“As time went by more and more, any detectable level of MRD led to a very poor prognosis, whereas patients arriving at day 365 with no detectable MRD had exceptional prognosis with survival approaching 90%,” he said.

Specifically, the 4-year probability of event-free survival for groups 1, 2, and 3, respectively, was 59%, 65%, and 43% at day 30; 64%, 47%, and 36% at day 60; 65%, 69%, and 44% at day 90; 79%, 40%, and 12% at day 180; and 87%, 36%, and 25% at day 365.

Of note, a highly significant interaction was seen between acute graft-versus-host disease (GVHD) and MRD, Dr. Pulsipher said: MRD-positive patients who developed GVHD had a significantly lower cumulative incidence of relapse than MRD-positive patients who did not.

“This translated into improved event-free survival with patients post transplant, who were MRD-positive [and] developing GVHD, still having a reasonable chance of survival, whereas patients post transplant who had MRD measured who did not develop GVHD had a very poor chance of survival,” he added.

Additionally, based on a detailed multivariate analysis that included a number of clinical factors, risk scores were developed to predict event-free survival and cumulative incidence of relapse at 18 months. Several scores were developed for each outcome. Among the factors with an important effect, patients with a very early pretransplant relapse (those who achieved remission but relapsed within 18 months) or who were beyond a second relapse had a high risk of poor event-free survival. Recipients of grafts from mismatched donors and recipients of unrelated cord blood transplants were also at high risk, he said, noting that “of course, MRD had a significant effect” and was the most important factor before transplant.

Patients with a risk score of 4 points or greater made up the poorest-risk group, with survival of less than 50%, compared with more than 90% in the better-risk groups, he said.

“A score of greater than 5 could identify 80% of patients who were going to relapse after transplant, and of course, event-free and overall survival in those patients were very poor,” he added.

Over time, the early risk associated with GVHD diminished somewhat, as did the risk associated with mismatched donors.

“Most of the risk was associated with any MRD detection,” he said.

Flow cytometry and RQ-PCR levels of at least 10^-4 were highly predictive of relapse at all pre- and post-HCT time points. However, where adequate numbers were available for comparison, RQ-PCR values between 10^-4 and 10^-3 predicted relapse better than flow cytometry results did.

For example, the hazard ratios with flow cytometry vs. RQ-PCR were 1.26 vs. 2.41 before HCT, 1.33 vs. 2.53 at day 30, and 3.54 vs. 31.84 at day 365, Dr. Pulsipher reported.

The findings provide important information for improving outcomes in children with high-risk ALL undergoing HCT, he said.

“Older prognostic models for relapsed and refractory high-risk ALL have focused on timing and location of relapse, as well as disease phenotype. But it is clear that, in order to treat children with very high risk ALL with transplantation, MRD has become the most important thing to look at in the pretreatment setting. The challenges that we face in assessing MRD, however, have been hampered by the fact that we have differing MRD measurements,” he said, noting that RQ-PCR is often used in Europe, whereas flow cytometry is more often used in the United States. As a result, direct comparisons of the two methods are lacking, as are data on T-cell ALL and on the posttransplant setting.

The current study represents a “tremendous effort” by international collaborators to address these shortcomings, he said.

“This is a great opportunity, as our goal, of course, is to avoid futility in transplantation, but, more importantly, to find opportunities to identify groups for which we can improve our outcomes,” he added.

Patients in the study were treated in Europe, North America, and Australia and underwent transplantation between September 1999 and May 2016. Most were in first or second remission, and most (586) had pre-B ALL. A notable 145 had T-cell ALL (“more than ever has been analyzed previously”), and 16 had B-lineage or biphenotypic ALL. About half were younger than 10 years, 62% were boys, and stem cell sources were typical, although 20% received a cord blood transplant.

Dr. Pulsipher reported serving as an adviser and/or consultant for Chimerix, Novartis, and Jazz Pharmaceuticals and receiving housing support from Medac Pharma for an educational meeting.

ORLANDO – Minimal residual disease (MRD) measured before and after allogeneic hematopoietic stem cell transplantation (HCT) is a powerful predictor of survival in children with acute lymphoblastic leukemia (ALL), according to a review of hundreds of cases from around the world.

The findings could have implications for using minimal residual disease measures to guide posttransplant interventions, Michael A. Pulsipher, MD, reported at the combined annual meetings of the Center for International Blood & Marrow Transplant Research and the American Society for Blood and Marrow Transplantation.

“MRD pretransplant was a very powerful predictor of outcomes. MRD posttransplant highlights individual patients at risk,” Dr. Pulsipher said. Results comparing reverse transcriptase–polymerase chain reaction with flow cytometry require validation by direct comparison in the same patient cohort, but “the new risk scores ... very nicely predict outcomes both pre- and post-transplant and can guide study planning and patient counseling.”

A total of 2,960 bone marrow MRD measurements were performed in the 747 patients included in the study. MRD was assessed prior to HCT and on or near days 30, 60, 100, 180, and 365 and beyond after HCT.

Patients were grouped for analysis according to MRD level: Group 1 had no detectable MRD, group 2 had low detectable MRD levels (less than 10E-4, or 0.01% by flow cytometry), and group 3 had high detectable MRD levels (10E-4 or higher). A second analysis compared findings in those tested by flow cytometry and those tested by real-time quantitative PCR (RQ-PCR), said Dr. Pulsipher of Children’s Hospital Los Angeles.

In 648 patients with pre-HCT MRD measurements available, the 4-year probability of event-free survival was 62%, 67%, and 37% for groups 1, 2, and 3, respectively. Group 3 – the high MRD level group – had 2.47 times the increased hazard ratio for relapse and 1.67 times the increased risk of treatment-related mortality, Dr. Pulsipher said, adding that pre-HCT MRD and remission status both significantly influenced survival, while age, sex, relapse site, cytogenetics, donor type, and stem cell source did not influence outcome.

Post-HCT MRD values were analyzed as time-dependent covariates.

“As time went by more and more, any detectable level of MRD led to a very poor prognosis, whereas patients arriving at day 365 with no detectable MRD had exceptional prognosis with survival approaching 90%,” he said.

Specifically, the 4-year probability of event-free survival for groups 1, 2, and 3, respectively, were 59%, 65%, and 43% at day 30; 64%, 47%, and 36% at day 60; 65%, 69%, and 44% at day 90; 79%, 40%, and 12% at day 180; and 87%, 36%, and 25% at day 365.

Of note, a very significant interaction was seen between acute graft-versus-host disease (GVHD) and MRD, Dr. Pulsipher said, explaining that patients who were MRD positive and had developed GVHD had a significant decrease in the cumulative incidence of relapse, compared with those with no GVHD.

“This translated into improved event-free survival with patients post transplant, who were MRD-positive [and] developing GVHD, still having a reasonable chance of survival, whereas patients post transplant who had MRD measured who did not develop GVHD had a very poor chance of survival,” he added.

Additionally, based on detailed multivariate analysis including a number of clinical factors, risk predictive scores were developed for event-free survival risk at 18 months or cumulative incidence of relapse at 18 months. Multiple scores were developed for each, but, as an example of factors that had an important effect on outcomes, patients with very early pretransplant relapse (those who went into remission but relapsed within 18 months) or with greater than 2nd relapse had a high risk for poor event-free survival. Mismatched donors and unrelated cord-blood stem cell transplant recipients also had high risk, he said, noting that, “of course, MRD had a significant effect” and was the most important factor prior to transplant.

These patients, who had a 4-point or greater risk score, were the poorest risk group, with survival that was less than 50%, as opposed to better risk groups that exceeded 90%, he said.

“A score of greater than 5 could identify 80% of patients who were going to relapse after transplant, and of course, event-free and overall survival in those patients were very poor,” he added.

As time went by, the early risk of GVHD diminished somewhat, as did the risk of mismatched donors.

“Most of the risk was associated with any MRD detection,” he said.

Flow cytometry and RQ-PCR levels of at least 10-4 were highly predictive of relapse at all pre- and post-HCT time points; however, RQ-PCR values between 10-4 and 10-3, in cases where adequate numbers were available for comparison, better predicted relapse as compared with flow cytometry results.

For example, before HCT, hazard ratios were 1.26 and 2.41 with flow cytometry vs. RQ-PCR. At day 30, the hazard ratios were 1.33 and 2.53, and at day 365, they were 3.54 and 31.84, Dr. Pulsipher reported.

The findings provide important information for improving outcomes in children with high-risk ALL undergoing HCT, he said.

“Older prognostic models for relapsed and refractory high-risk ALL have focused on timing and location of relapse, as well as disease phenotype. But it is clear that, in order to treat children with very high risk ALL with transplantation, MRD has become the most important thing to look at in the pretreatment setting. The challenges that we face in assessing MRD, however, have been hampered by the fact that we have differing MRD measurements,” he said, noting that RQ-PCR is often used in Europe, while flow cytometry is more often used in the United States. As such, direct comparisons are lacking, as are T-cell and posttransplant data.

The current study represents a “tremendous effort” by international collaborators to address these shortcoming, he said.

“This is a great opportunity, as our goal, of course, is to avoid futility in transplantation, but, more importantly, to find opportunities to identify groups for which we can improve our outcomes,” he added.

Patients included in the study were treated in Europe, North America, and Australia and were transplanted during Sept. 1999-May 2016. Most were in first or second remission, and most (586) had pre-B ALL. A notable 145 had T-cell ALL – “more than ever has been analyzed previously” – and 16 had B-lineage or biphenotypic ALL. About half were under age 10 years, 62% were boys, and stem cell sources were typical, although 20% received a cord blood transplant.

Dr. Pulsipher reported serving as an advisor and/or consultant for Chimerix, Novartis, Jazz Pharmaceutical, and receiving housing support from Medac Pharma for an educational meeting.

ORLANDO – Minimal residual disease (MRD) measured before and after allogeneic hematopoietic stem cell transplantation (HCT) is a powerful predictor of survival in children with acute lymphoblastic leukemia (ALL), according to a review of hundreds of cases from around the world.

The findings could have implications for using minimal residual disease measures to guide posttransplant interventions, Michael A. Pulsipher, MD, reported at the combined annual meetings of the Center for International Blood & Marrow Transplant Research and the American Society for Blood and Marrow Transplantation.

“MRD pretransplant was a very powerful predictor of outcomes. MRD posttransplant highlights individual patients at risk,” Dr. Pulsipher said. Results comparing reverse transcriptase–polymerase chain reaction with flow cytometry require validation by direct comparison in the same patient cohort, but “the new risk scores ... very nicely predict outcomes both pre- and post-transplant and can guide study planning and patient counseling.”

A total of 2,960 bone marrow MRD measurements were performed in the 747 patients included in the study. MRD was assessed prior to HCT and on or near days 30, 60, 100, 180, and 365 and beyond after HCT.

Patients were grouped for analysis according to MRD level: Group 1 had no detectable MRD, group 2 had low detectable MRD levels (less than 10E-4, or 0.01% by flow cytometry), and group 3 had high detectable MRD levels (10E-4 or higher). A second analysis compared findings in those tested by flow cytometry and those tested by real-time quantitative PCR (RQ-PCR), said Dr. Pulsipher of Children’s Hospital Los Angeles.

In 648 patients with pre-HCT MRD measurements available, the 4-year probability of event-free survival was 62%, 67%, and 37% for groups 1, 2, and 3, respectively. Group 3 – the high MRD level group – had 2.47 times the increased hazard ratio for relapse and 1.67 times the increased risk of treatment-related mortality, Dr. Pulsipher said, adding that pre-HCT MRD and remission status both significantly influenced survival, while age, sex, relapse site, cytogenetics, donor type, and stem cell source did not influence outcome.

Post-HCT MRD values were analyzed as time-dependent covariates.

“As time went by more and more, any detectable level of MRD led to a very poor prognosis, whereas patients arriving at day 365 with no detectable MRD had exceptional prognosis with survival approaching 90%,” he said.

Specifically, the 4-year probability of event-free survival for groups 1, 2, and 3, respectively, were 59%, 65%, and 43% at day 30; 64%, 47%, and 36% at day 60; 65%, 69%, and 44% at day 90; 79%, 40%, and 12% at day 180; and 87%, 36%, and 25% at day 365.

Of note, a very significant interaction was seen between acute graft-versus-host disease (GVHD) and MRD, Dr. Pulsipher said, explaining that patients who were MRD positive and had developed GVHD had a significant decrease in the cumulative incidence of relapse, compared with those with no GVHD.

“This translated into improved event-free survival with patients post transplant, who were MRD-positive [and] developing GVHD, still having a reasonable chance of survival, whereas patients post transplant who had MRD measured who did not develop GVHD had a very poor chance of survival,” he added.

Additionally, based on detailed multivariate analysis including a number of clinical factors, risk predictive scores were developed for event-free survival risk at 18 months or cumulative incidence of relapse at 18 months. Multiple scores were developed for each, but, as an example of factors that had an important effect on outcomes, patients with very early pretransplant relapse (those who went into remission but relapsed within 18 months) or with greater than 2nd relapse had a high risk for poor event-free survival. Mismatched donors and unrelated cord-blood stem cell transplant recipients also had high risk, he said, noting that, “of course, MRD had a significant effect” and was the most important factor prior to transplant.

These patients, who had a 4-point or greater risk score, were the poorest risk group, with survival that was less than 50%, as opposed to better risk groups that exceeded 90%, he said.

“A score of greater than 5 could identify 80% of patients who were going to relapse after transplant, and of course, event-free and overall survival in those patients were very poor,” he added.

As time went by, the early risk of GVHD diminished somewhat, as did the risk of mismatched donors.

“Most of the risk was associated with any MRD detection,” he said.

Flow cytometry and RQ-PCR levels of at least 10-4 were highly predictive of relapse at all pre- and post-HCT time points; however, RQ-PCR values between 10-4 and 10-3, in cases where adequate numbers were available for comparison, better predicted relapse as compared with flow cytometry results.

For example, before HCT, hazard ratios were 1.26 and 2.41 with flow cytometry vs. RQ-PCR. At day 30, the hazard ratios were 1.33 and 2.53, and at day 365, they were 3.54 and 31.84, Dr. Pulsipher reported.

The findings provide important information for improving outcomes in children with high-risk ALL undergoing HCT, he said.

“Older prognostic models for relapsed and refractory high-risk ALL have focused on timing and location of relapse, as well as disease phenotype. But it is clear that, in order to treat children with very high risk ALL with transplantation, MRD has become the most important thing to look at in the pretreatment setting. The challenges that we face in assessing MRD, however, have been hampered by the fact that we have differing MRD measurements,” he said, noting that RQ-PCR is often used in Europe, while flow cytometry is more often used in the United States. As such, direct comparisons are lacking, as are T-cell and posttransplant data.

The current study represents a “tremendous effort” by international collaborators to address these shortcoming, he said.

“This is a great opportunity, as our goal, of course, is to avoid futility in transplantation, but, more importantly, to find opportunities to identify groups for which we can improve our outcomes,” he added.

Patients included in the study were treated in Europe, North America, and Australia and underwent transplantation between September 1999 and May 2016. Most were in first or second remission, and most (586) had pre-B ALL. A notable 145 had T-cell ALL – “more than ever has been analyzed previously” – and 16 had B-lineage or biphenotypic ALL. About half were younger than 10 years, 62% were boys, and stem cell sources were typical, although 20% received a cord blood transplant.

Dr. Pulsipher reported serving as an adviser and/or consultant for Chimerix, Novartis, and Jazz Pharmaceuticals and receiving housing support from Medac Pharma for an educational meeting.

Key clinical point:

Major finding: Patients with high pretransplant MRD levels had a 2.47-fold increased hazard of relapse and a 1.67-fold increased risk of treatment-related mortality.

Data source: A review of data from 747 pediatric high-risk ALL cases.

Disclosures: Dr. Pulsipher reported serving as an adviser and/or consultant for Chimerix, Novartis, and Jazz Pharmaceuticals and receiving housing support from Medac Pharma for an educational meeting.

CAR T-cell trial in adult ALL shut down

After 2 clinical holds in 2016 and 5 patient deaths, the Seattle biotech Juno Therapeutics is shutting down the phase 2 ROCKET trial of JCAR015.

The chimeric antigen receptor (CAR) T-cell therapy JCAR015 was being tested in adults with relapsed or refractory B-cell acute lymphoblastic leukemia (ALL).

“We have decided not to move forward . . . at this time,” CEO Hans Bishop said in a statement, “even though it generated important learnings for us and the immunotherapy field.”

He said the company remains “committed to developing better treatment for patients battling ALL.”

The first clinical hold of the ROCKET trial occurred in July after 2 patients died. The company attributed the deaths primarily to the addition of fludarabine to the regimen.

Juno removed fludarabine from the treatment protocol, the clinical hold was lifted, and the trial resumed.

Then, in November, 2 more patients died from cerebral edema, and the trial was put on hold once again.

One patient had died earlier in 2016, bringing the total to 5 deaths from cerebral edema, although the company stated that the earliest death was not necessarily related to treatment.

Juno attributed the deaths to multiple factors, including the patients’ treatment history and treatment received at the beginning of the trial.

Juno plans to start a new adult ALL trial in 2018 with a therapy that, according to the company, is more similar to JCAR017, which is being tested in pediatric patients.

ROCKET is not the first trial of JCAR015 to be placed on hold.

In 2014, after 2 patients died of cytokine release syndrome, the phase 1 trial was placed on clinical hold.

Juno made changes to the enrollment criteria and dosing, and the hold was lifted. Results from this trial were presented at ASCO 2015 and ASCO 2016.

Blinatumomab superior to chemotherapy for refractory ALL

Blinatumomab proved superior to standard chemotherapy for relapsed or refractory acute lymphoblastic leukemia (ALL), based on results of an international phase III trial reported online March 2 in the New England Journal of Medicine.

The trial was halted early when an interim analysis revealed a clear benefit with blinatumomab, Hagop Kantarjian, MD, chair of the department of leukemia, University of Texas MD Anderson Cancer Center, Houston, and his associates wrote.

The manufacturer-sponsored open-label study included 376 adults with Ph-negative B-cell precursor ALL that was refractory to primary induction therapy or to salvage with intensive combination chemotherapy, in first relapse after a first remission lasting less than 12 months, in second or greater relapse, or in relapse at any time after allogeneic stem cell transplantation.

Study participants were randomly assigned to receive either blinatumomab (267 patients) or the investigator’s choice of one of four protocol-defined regimens of standard chemotherapy (109 patients) and were followed at 101 medical centers in 21 countries for a median of 11 months.

For each 6-week cycle of blinatumomab therapy, patients received 4 weeks of continuous infusion (9 mcg/day during week 1 of induction cycle 1 and 28 mcg/day thereafter), followed by a 2-week treatment-free interval.

Maintenance treatment with blinatumomab was given as a 4-week continuous infusion every 12 weeks.
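As a rough arithmetic check of the induction schedule described above, the sketch below totals the dose delivered during one cycle's 4 infusion weeks. It assumes 7-day weeks, an uninterrupted continuous infusion at the stated daily rate, and that the 28 mcg/day rate applies to every infusion day after week 1 of cycle 1. The maintenance dose is not stated in the article and is left out; the function name is hypothetical.

```python
DAYS_PER_WEEK = 7  # assumption: each schedule "week" is 7 infusion days


def cycle_dose_mcg(first_induction_cycle: bool) -> int:
    """Total dose (mcg) over the 4 infusion weeks of one 6-week induction cycle.

    Hypothetical helper. Assumes continuous daily infusion at the stated rates;
    the 2-week treatment-free interval contributes no dose.
    """
    infusion_days = 4 * DAYS_PER_WEEK
    if first_induction_cycle:
        # 9 mcg/day in week 1, then 28 mcg/day for the remaining 3 weeks
        return 9 * DAYS_PER_WEEK + 28 * (infusion_days - DAYS_PER_WEEK)
    # later induction cycles: 28 mcg/day for all 4 infusion weeks
    return 28 * infusion_days


print(cycle_dose_mcg(True))   # 651
print(cycle_dose_mcg(False))  # 784
```

The only difference between the first and later cycles is the lower-rate first week, which accounts for the 133 mcg gap (7 days × 19 mcg/day).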

At the interim analysis – when 75% of the total number of planned deaths for the final analysis had occurred – the monitoring committee recommended that the trial be stopped early because of the benefit observed with blinatumomab therapy. Median overall survival was significantly longer with blinatumomab (7.7 months) than with chemotherapy (4 months), with a hazard ratio for death of 0.71. The estimated survival at 6 months was 54% with blinatumomab and 39% with chemotherapy.

Remission rates also favored blinatumomab: Rates of complete remission with full hematologic recovery were 34% vs. 16% and rates of complete remission with full, partial, or incomplete hematologic recovery were 44% vs. 25%.

In addition, the median duration of remission was 7.3 months with blinatumomab and 4.6 months with chemotherapy, and 6-month estimates of event-free survival were 31% vs. 12%. These survival and remission benefits were consistent across all subgroups of patients and persisted in several sensitivity analyses, the investigators said (N Engl J Med. 2017 Mar 2. doi: 10.1056/NEJMoa1609783).

In each group, 24% of patients underwent allogeneic stem cell transplantation, with comparable outcomes and death rates.

Serious adverse events occurred in 62% of patients receiving blinatumomab and in 45% of those receiving chemotherapy, including fatal adverse events in 19% and 17%, respectively. The fatal events were considered to be related to treatment in 3% of the blinatumomab group and in 7% of the chemotherapy group. Rates of treatment discontinuation from an adverse event were 12% and 8%, respectively.

Patient-reported health status and quality of life improved with blinatumomab but worsened with chemotherapy.

“Given the previous exposure of these patients to myelosuppressive and immunosuppressive treatments, the activity of an immune-based therapy such as blinatumomab, which depends on functioning T cells for its activity, provides encouragement that responses may be further enhanced and made durable with additional immune activation strategies,” Dr. Kantarjian and his associates noted.

Dr. Kantarjian reported receiving research support from Amgen, Pfizer, Bristol-Myers Squibb, Novartis, and ARIAD; his associates reported ties to numerous industry sources.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Key clinical point: Blinatumomab proved superior to standard chemotherapy for relapsed or refractory ALL in a phase III trial.

Major finding: Median overall survival with blinatumomab (7.7 months) was significantly longer than with chemotherapy (4.0 months), and rates of complete remission with full hematologic recovery were 34% vs 16%, respectively.

Data source: A manufacturer-sponsored, international, randomized, open-label phase 3 trial involving 376 adults followed for a median of 11 months.

Disclosures: This trial was funded by Amgen, which also participated in the study design, data analysis, and report writing. Dr. Kantarjian reported receiving research support from Amgen, Pfizer, Bristol-Myers Squibb, Novartis, and ARIAD; his associates reported ties to numerous industry sources.

Acquired mutations may compromise assay

A newly discovered issue with the Ba/F3 transformation assay could “jeopardize attempts to characterize the signaling mechanisms and drug sensitivities of leukemic oncogenes,” researchers reported in Oncotarget.

The researchers said the assay “remains an invaluable tool” for validating activating mutations in primary leukemias.

However, the assay is prone to a previously unreported flaw: Ba/F3 cells can acquire additional mutations.

This issue was discovered by Kevin Watanabe-Smith, PhD, of Oregon Health & Science University in Portland.

He identified the problem while studying a growth-activating mutation in a patient with T-cell leukemia. (The results of that research were published in Leukemia in April 2016.)

“When I was sequencing the patient’s DNA to make sure the original, known mutation is there, I was finding additional, unexpected mutations in the gene that I didn’t put there,” Dr Watanabe-Smith said. “And I was getting different mutations every time.”

“After we saw this in several cases, we knew it was worth further study,” added Cristina Tognon, PhD, of Oregon Health & Science University.

So the researchers decided to study Ba/F3 cell lines with and without 4 mutations (found in 3 cytokine receptors) that have “known transformative capacity,” including:

- CSF2RB R461C, a germline mutation found in a patient with T-cell acute lymphoblastic leukemia (ALL)

- CSF3R T618I, a mutation found in chronic neutrophilic leukemia (CNL) and atypical chronic myelogenous leukemia (aCML)

- CSF3R W791X, also found in CNL and aCML

- IL7R 243InsPPCL, which was found in a patient with B-cell ALL.

The researchers said they observed acquired mutations in 1 of the 3 CSF2RB wild-type cell lines that transformed and in all 4 CSF3R wild-type cell lines, all of which transformed.

Furthermore, most CSF2RB R461C lines (4/5) and CSF3R W791X lines (3/4) had additional acquired mutations. However, the CSF3R T618I lines and the IL7R 243InsPPCL lines did not contain acquired mutations.

The researchers said acquired mutations were observed only with weakly transforming oncogenes, defined as those with a lower transformation frequency (fewer than 1 in every 200 cells) or a slower time to outgrowth (5 days or longer to reach a 5-fold increase over the initial cell number).
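The two-part definition of a weakly transforming oncogene can be written as a simple predicate. In this sketch the function and parameter names are hypothetical, transformation frequency is assumed to be expressed as a fraction of plated cells (1 in 200 = 0.005), and either criterion alone is treated as sufficient, following the "or" in the definition above.

```python
def is_weakly_transforming(transformation_rate: float, days_to_fivefold: float) -> bool:
    """Apply the stated definition of a weakly transforming oncogene.

    Hypothetical helper.
    transformation_rate: fraction of plated cells that transformed.
    days_to_fivefold: days for the culture to reach 5x its initial cell number.
    """
    low_frequency = transformation_rate < 1 / 200  # fewer than 1 in 200 cells
    slow_outgrowth = days_to_fivefold >= 5         # 5 days or longer to 5-fold
    return low_frequency or slow_outgrowth


# Example: a fast, efficient transformer vs. two weak ones
print(is_weakly_transforming(0.01, 3))   # False
print(is_weakly_transforming(0.001, 3))  # True (low frequency)
print(is_weakly_transforming(0.01, 6))   # True (slow outgrowth)
```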

The team also said their findings indicate that the majority of the acquired mutations likely exist before IL-3 withdrawal but only expand to levels detectable by Sanger sequencing in the absence of IL-3.

“The potential impact [of these acquired mutations] is that a non-functional mutation could appear functional, and a researcher could publish results that would not be reproducible,” Dr Watanabe-Smith said.

To overcome this problem, Dr Watanabe-Smith and his colleagues recommended an additional step when using the Ba/F3 assay: sequencing the outgrown cell lines. They also said additional research is needed to devise methods that reduce the incidence of acquired mutations in such assays.

Docs create guideline to aid workup of acute leukemia

Two physician groups have published an evidence-based guideline that addresses the initial workup of acute leukemia.

The guideline includes 27 recommendations intended to aid treating physicians and pathologists involved in the diagnostic and prognostic evaluation of acute leukemia samples, including those from patients with acute lymphoblastic leukemia, acute myeloid leukemia, and acute leukemias of ambiguous lineage.

The guideline, which was developed through a collaboration between the College of American Pathologists (CAP) and the American Society of Hematology (ASH), has been published in the Archives of Pathology and Laboratory Medicine.

The recommendations in the guideline will also be available in a pocket guide and via the ASH Pocket Guides app in March.

“With its multidisciplinary perspective, this guideline reflects contemporary, integrated cancer care, and, therefore, it will also help providers realize efficiencies in test management,” said ASH guideline co-chair James W. Vardiman, MD, of the University of Chicago in Illinois.

To create this guideline, Dr Vardiman and his colleagues sought and reviewed relevant published evidence.

The group set out to answer 6 questions for the initial diagnosis of acute leukemias:

1) What clinical and laboratory information should be available?

2) What samples and specimen types should be evaluated?

3) What tests are required for all patients during the initial evaluation?

4) What tests are required for only a subset of patients?

5) Where should laboratory testing be performed?

6) How should the results be reported?

The authors say the guideline’s 27 recommendations answer these questions, providing a framework for the steps involved in the evaluation of acute leukemia samples.

In particular, the guideline includes steps to coordinate and communicate across clinical teams, specifying the information that must be shared, particularly between treating physicians and pathologists, to optimize patient outcomes and avoid duplicative testing.

“The laboratory testing to diagnose acute leukemia and inform treatment is increasingly complex, making this guideline essential,” said CAP guideline co-chair Daniel A. Arber, MD, of the University of Chicago.

“New gene mutations and protein expressions have been described over the last decade in all types of acute leukemia, and many of them impact diagnosis or inform prognosis.” ![]()

FDA grants priority review to ALL drug

The US Food and Drug Administration (FDA) has granted priority review for inotuzumab ozogamicin as a treatment for adults with relapsed or refractory B-cell precursor acute lymphoblastic leukemia (ALL).

The FDA grants priority review to applications for products that may provide significant improvements in the treatment, diagnosis, or prevention of serious conditions.

The agency’s goal is to take action on a priority review application within 6 months of receiving it, rather than the standard 10-month period.

The Prescription Drug User Fee Act goal date for inotuzumab ozogamicin is August 2017.

About inotuzumab ozogamicin

Inotuzumab ozogamicin is an antibody-drug conjugate that consists of a monoclonal antibody targeting CD22 and a cytotoxic agent known as calicheamicin.

The product originates from a collaboration between Pfizer and Celltech (now UCB), but Pfizer has sole responsibility for all manufacturing and clinical development activities.

The application for inotuzumab ozogamicin is supported by results from a phase 3 trial, which were published in NEJM in June 2016.

The trial enrolled 326 adult patients with relapsed or refractory B-cell ALL and compared inotuzumab ozogamicin with standard-of-care chemotherapy.

The rate of complete remission or complete remission with incomplete hematologic recovery was 80.7% in the inotuzumab arm and 29.4% in the chemotherapy arm (P<0.001). The median duration of remission was 4.6 months and 3.1 months, respectively (P=0.03).

Forty-one percent of patients treated with inotuzumab and 11% of those who received chemotherapy proceeded to stem cell transplant directly after treatment (P<0.001).

The median progression-free survival was 5.0 months in the inotuzumab arm and 1.8 months in the chemotherapy arm (P<0.001).

The median overall survival was 7.7 months and 6.7 months, respectively (P=0.04). This difference did not meet the prespecified boundary for significance (P=0.0208).

Liver-related adverse events were more common in the inotuzumab arm than the chemotherapy arm. The most frequent of these were increased aspartate aminotransferase level (20% vs 10%), hyperbilirubinemia (15% vs 10%), and increased alanine aminotransferase level (14% vs 11%).

Veno-occlusive liver disease occurred in 11% of patients in the inotuzumab arm and 1% in the chemotherapy arm.

There were 17 deaths during treatment in the inotuzumab arm and 11 in the chemotherapy arm. Four deaths were considered related to inotuzumab, and 2 were thought to be related to chemotherapy.

Site of care may impact survival for AYAs with ALL, AML

Receiving treatment at specialized cancer centers may improve survival for adolescents and young adults (AYAs) with acute leukemia, according to a study published in Cancer Epidemiology, Biomarkers & Prevention.

The study showed that, when patients were treated at specialized cancer centers, survival rates were similar for younger AYAs and children with acute lymphoblastic leukemia (ALL) or acute myeloid leukemia (AML).

However, older AYAs did not appear to reap the same survival benefit from receiving treatment at a specialized cancer center.

And AYAs of all ages had significantly worse survival than children if they were not treated at specialized cancer centers.

AYAs (patients ages 15 to 39) with ALL and AML have significantly worse survival outcomes than children ages 14 and under, according to study author Julie Wolfson, MD, of the University of Alabama at Birmingham.

“A much smaller percentage of AYAs with cancer are treated at specialized cancer centers than children with cancer,” she added. “We wanted to understand whether this difference in the site of cancer care is associated with the difference in survival outcomes.”

Dr Wolfson and her colleagues used data from the Los Angeles County Cancer Surveillance Program to identify patients diagnosed with ALL or AML at ages 1 to 39.

Patients were classified as having been treated at a specialized cancer center if, at any age, they were cared for at any of the National Cancer Institute-designated Comprehensive Cancer Centers (CCC) in Los Angeles County or if, at the age of 21 or younger, they were cared for at any of the Children’s Oncology Group (COG) sites not designated a Comprehensive Cancer Center.

Included in the analysis were 978 patients diagnosed with ALL as a child (ages 1 to 14), 402 patients diagnosed with ALL as an AYA (ages 15 to 39), 131 patients diagnosed with AML as a child, and 359 patients diagnosed with AML as an AYA.

Seventy percent of the children with ALL and 30% of the AYAs with ALL were treated at CCC/COG sites. Seventy-four percent of the children with AML and 22% of the AYAs with AML were treated at CCC/COG sites.

Results in ALL

AYAs diagnosed with ALL at ages 15 to 21 and 22 to 29 who were treated at CCC/COG sites had survival comparable to that of children diagnosed with ALL at ages 10 to 14 and treated at CCC/COG sites. The hazard ratios (HRs) were 1.3 for the 15-21 age group (P=0.3) and 1.2 for the 22-29 age group (P=0.8).

The reference group for this analysis was 10- to 14-year-olds treated at CCC/COG sites because the researchers excluded data from children with ALL ages 1 to 9. These patients were excluded because they have significantly better survival than the other groups, potentially as a result of different disease biology.

The researchers also found that treatment at CCC/COG sites did not bring survival for 30- to 39-year-olds with ALL in line with that of the reference group. The HR for this age group was 3.4 (P<0.001).