Glucose Management and Inpatient Mortality

Patients with diabetes currently comprise over 8% of the US population (over 25 million people) and more than 20% of hospitalized patients.[1, 2] Hospitalizations of patients with diabetes account for 23% of total hospital costs in the United States,[2] and patients with diabetes have worse outcomes after hospitalization for a variety of common medical conditions,[3, 4, 5, 6] as well as in intensive care unit (ICU) settings.[7, 8] Individuals with diabetes have historically experienced higher inpatient mortality than individuals without diabetes.[9] However, we recently reported that patients with diabetes at our large academic medical center have experienced a disproportionate reduction in in‐hospital mortality relative to patients without diabetes over the past decade.[10] This surprising trend begs further inquiry.

Improvement in in‐hospital mortality among patients with diabetes may stem from improved inpatient glycemic management. The landmark 2001 study by van den Berghe et al. demonstrating that intensive insulin therapy reduced postsurgical mortality among ICU patients ushered in an era of intensive inpatient glucose control.[11] However, follow‐up multicenter studies have not been able to replicate these results.[12, 13, 14, 15] In non‐ICU and nonsurgical settings, intensive glucose control has not yet been shown to have any mortality benefit, although it may impact other morbidities, such as postoperative infections.[16] Consequently, less stringent glycemic targets are now recommended.[17] Nonetheless, hospitals are being held accountable for certain aspects of inpatient glucose control. For example, the Centers for Medicare & Medicaid Services (CMS) began asking hospitals to report inpatient glucose control in cardiac surgery patients in 2004.[18] This measure is now publicly reported, and as of 2013 is included in the CMS Value‐Based Purchasing Program, which financially penalizes hospitals that do not meet targets.

Outpatient diabetes standards have also evolved in the past decade. The Diabetes Control and Complications Trial in 1993 and the United Kingdom Prospective Diabetes Study in 1998 demonstrated that better glycemic control in type 1 and newly diagnosed type 2 diabetes patients, respectively, improved clinical outcomes, and prompted guidelines for pharmacologic treatment of diabetic patients.[19, 20] However, subsequent randomized clinical trials have failed to establish a clear beneficial effect of intensive glucose control on primary cardiovascular endpoints among higher‐risk patients with longstanding type 2 diabetes,[21, 22, 23] and clinical practice recommendations now accept a more individualized approach to glycemic control.[24] Nonetheless, clinicians are also being held accountable for outpatient glucose control.[25]

To better understand the disproportionate reduction in mortality among hospitalized patients with diabetes that we observed, we first examined whether it was limited to surgical patients or patients in the ICU, the populations that have been demonstrated to benefit from intensive inpatient glucose control. Furthermore, given recent improvements in inpatient and outpatient glycemic control,[26, 27] we examined whether inpatient or outpatient glucose control explained the mortality trends. Results from this study contribute empirical evidence on real‐world effects of efforts to improve inpatient and outpatient glycemic control.

METHODS

Setting

During the study period, Yale‐New Haven Hospital (YNHH) was an urban academic medical center in New Haven, Connecticut, with over 950 beds and an average of approximately 32,000 annual adult nonobstetric admissions. YNHH conducted a variety of inpatient glucose control initiatives during the study period. The surgical ICU began an informal medical team‐directed insulin infusion protocol in 2000 to 2001. In 2002, the medical ICU instituted a formal insulin infusion protocol with a target of 100 to 140 mg/dL, which spread to remaining hospital ICUs by the end of 2003. In 2005, YNHH launched a consultative inpatient diabetes management team to assist clinicians in controlling glucose in non‐ICU patients with diabetes. This team covered approximately 10 to 15 patients at a time and consisted of an advanced‐practice nurse practitioner, a supervising endocrinologist and endocrinology fellow, and a nurse educator to provide diabetic teaching. Additionally, in 2005, basal‐bolus‐correction insulin order sets became available. The surgical ICU implemented a stringent insulin infusion protocol with target glucose of 80 to 110 mg/dL in 2006, but relaxed it (goal 80 to 150 mg/dL) in 2007. Similarly, in 2006, YNHH made ICU insulin infusion recommendations more stringent in remaining ICUs (goal 90 to 130 mg/dL), but relaxed them in 2010 (goal 120 to 160 mg/dL), based on emerging data from clinical trials and prevailing national guidelines.

Participants and Data Sources

We included all adult, nonobstetric discharges from YNHH between January 1, 2000 and December 31, 2010. Repeat visits by the same patient were linked by medical record number. We obtained data from YNHH administrative billing, laboratory, and point‐of‐care capillary blood glucose databases. The Yale Human Investigation Committee approved our study design and granted a Health Insurance Portability and Accountability Act waiver and a waiver of patient consent.

Variables

Our primary endpoint was in‐hospital mortality. The primary exposure of interest was whether a patient had diabetes mellitus, defined as the presence of International Classification of Diseases, Ninth Revision codes 249.x, 250.x, V4585, V5391, or V6546 in any of the primary or secondary diagnosis codes in the index admission, or in any hospital encounter in the year prior to the index admission.
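The diabetes definition above can be expressed as a simple code filter. The following is a minimal sketch, not the study's actual implementation: the function names are hypothetical, and it assumes that diagnosis codes may arrive with or without the decimal point and that the caller has already pooled codes from the index admission and from encounters in the prior year.

```python
import re

# ICD-9 codes named in the definition: 249.x, 250.x, V4585, V5391, V6546.
# Assumption: after stripping the decimal point, 249/250 codes carry
# zero to two trailing digits (e.g. "250", "2500", "25000").
_DIABETES_PATTERN = re.compile(r"^(?:(?:249|250)\d{0,2}|V4585|V5391|V6546)$")


def normalize_code(code: str) -> str:
    """Uppercase a code and drop whitespace and the decimal point."""
    return code.strip().upper().replace(".", "")


def has_diabetes(diagnosis_codes) -> bool:
    """Return True if any primary or secondary code matches the definition."""
    return any(
        _DIABETES_PATTERN.match(normalize_code(code)) is not None
        for code in diagnosis_codes
    )


# Hypothetical example: heart failure plus type 2 diabetes.
example_flag = has_diabetes(["428.0", "250.00"])
```

Matching on the normalized string keeps the filter indifferent to how the billing system stores the decimal point, which varies between sources.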

We assessed 2 effect‐modifying variables: ICU status (as measured by a charge for at least 1 night in the ICU) and service assignment to surgery (including neurosurgery and orthopedics), compared to medicine (including neurology). Independent explanatory variables included time between the start of the study and patient admission (measured as days/365), diabetes status, inpatient glucose control, and long‐term glucose control (as measured by hemoglobin A1c at any time in the 180 days prior to hospital admission in order to have adequate sample size). We assessed inpatient blood glucose control through point‐of‐care blood glucose meters (OneTouch SureStep; LifeScan, Inc., Milpitas, CA) at YNHH. We used 4 validated measures of inpatient glucose control: the proportion of days in each hospitalization in which there was any hypoglycemic episode (blood glucose value <70 mg/dL), the proportion of days in which there was any severely hyperglycemic episode (blood glucose value >299 mg/dL), the proportion of days in which mean blood glucose was considered to be within adequate control (all blood glucose values between 70 and 179 mg/dL), and the standard deviation of mean glucose during hospitalization as a measure of glycemic variability.[28]
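To make the 4 inpatient glucose control measures concrete, the sketch below computes them for a single hospitalization from a list of point‐of‐care readings. It is illustrative only: the function name and input layout are hypothetical, the example readings are invented, and the variability measure is computed here as the sample standard deviation of all readings, which is an assumption about how "standard deviation of mean glucose" was operationalized.

```python
from collections import defaultdict
from statistics import stdev


def glucometrics(readings):
    """Summarize one hospitalization.

    readings: iterable of (hospital_day, glucose_mg_dl) pairs.
    Returns patient-day proportions and a variability measure.
    """
    by_day = defaultdict(list)
    for day, value in readings:
        by_day[day].append(value)
    if not by_day:
        raise ValueError("no glucose readings supplied")

    n_days = len(by_day)
    all_values = [v for values in by_day.values() for v in values]

    # A day counts as hypoglycemic or severely hyperglycemic if ANY reading
    # crosses the threshold; it counts as controlled only if ALL readings
    # fall between 70 and 179 mg/dL.
    hypo_days = sum(any(v < 70 for v in values) for values in by_day.values())
    severe_days = sum(any(v > 299 for v in values) for values in by_day.values())
    controlled_days = sum(
        all(70 <= v <= 179 for v in values) for values in by_day.values()
    )

    return {
        "prop_hypoglycemic_days": hypo_days / n_days,
        "prop_severe_hyperglycemic_days": severe_days / n_days,
        "prop_controlled_days": controlled_days / n_days,
        # Assumption: SD over all readings; None when fewer than 2 readings.
        "glucose_sd": stdev(all_values) if len(all_values) >= 2 else None,
    }


# Invented readings for a 4-day stay.
example = glucometrics(
    [(1, 65), (1, 150), (2, 120), (2, 170), (3, 310), (3, 200), (4, 100)]
)
```

In this invented stay, day 1 is hypoglycemic, day 3 is severely hyperglycemic, and days 2 and 4 are controlled, so the three proportions are 0.25, 0.25, and 0.5.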

Covariates included gender, age at time of admission, length of stay in days, race (defined by hospital registration), payer, Elixhauser comorbidity dummy variables (revised to exclude diabetes and to use only secondary diagnosis codes),[29] and primary discharge diagnosis grouped using Clinical Classifications Software,[30] based on established associations with in‐hospital mortality.

Statistical Analysis

We summarized demographic characteristics numerically and graphically for patients with and without diabetes and compared them using χ² and t tests. We summarized changes in inpatient and outpatient measures of glucose control over time numerically and graphically, and compared across years using the Wilcoxon rank sum test adjusted for multiple hypothesis testing.

We stratified all analyses first by ICU status and then by service assignment (medicine vs surgery). Statistical analyses within each stratum paralleled our previous approach to the full study cohort.[10] Taking each stratum separately (ie, only ICU patients or only medicine patients), we used a difference‐in‐differences approach comparing changes over time in in‐hospital mortality among patients with diabetes compared to those without diabetes. This approach enabled us to determine whether patients with diabetes had a different time trend in risk of in‐hospital mortality than those without diabetes. That is, for each stratum, we constructed multivariate logistic regression models including time in years, diabetes status, and the interaction between time and diabetes status as well as the aforementioned covariates. We calculated odds of death and confidence intervals for each additional year for patients with diabetes by exponentiating the sum of parameter estimates for time and the diabetes‐time interaction term. We evaluated all 2‐way interactions between year or diabetes status and the covariates in a multiple degree of freedom likelihood ratio test. We investigated nonlinearity of the relation between mortality and time by evaluating first and second‐order polynomials.
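The step of exponentiating the sum of the time and diabetes‐time interaction estimates can be sketched as follows. This is an illustration under stated assumptions rather than the study code: the function names are hypothetical, the point estimates are the rounded ICU odds ratios published in Table 2 (model 1), the standard errors are backed out of the published confidence intervals, and the covariance between the two estimates is not reported, so it is set to 0 as a placeholder. The resulting interval therefore will not match the published one.

```python
import math


def se_from_ci(lower, upper, z=1.96):
    """Back out the standard error of a log odds ratio from its 95% CI."""
    return (math.log(upper) - math.log(lower)) / (2.0 * z)


def yearly_or_with_diabetes(beta_time, beta_interaction,
                            var_time, var_interaction, cov, z=1.96):
    """Odds ratio per additional year for patients with diabetes.

    The log odds ratio is the sum of the time and interaction coefficients;
    its variance is var_time + var_interaction + 2 * cov.
    """
    log_or = beta_time + beta_interaction
    se = math.sqrt(var_time + var_interaction + 2.0 * cov)
    return (
        math.exp(log_or),
        math.exp(log_or - z * se),
        math.exp(log_or + z * se),
    )


# Rounded ICU estimates from Table 2, model 1.
beta_time = math.log(0.974)          # year effect, patients without diabetes
beta_interaction = math.log(0.947)   # diabetes*year interaction
var_time = se_from_ci(0.963, 0.985) ** 2
var_interaction = se_from_ci(0.927, 0.967) ** 2
cov = 0.0  # placeholder: the covariance is NOT reported in the article

or_dm, ci_low, ci_high = yearly_or_with_diabetes(
    beta_time, beta_interaction, var_time, var_interaction, cov
)
print(round(or_dm, 3))
```

With these rounded inputs the point estimate is about 0.922, close to the reported 0.923; the small gap is consistent with rounding of the published coefficients.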

Because we found a significant decline in mortality risk for patients with versus without diabetes among ICU patients but not among non‐ICU patients, and because service assignment was not found to be an effect modifier, we then limited our sample to ICU patients with diabetes to better understand the role of inpatient and outpatient glucose control in accounting for observed mortality trends. First, we determined the relation between the measures of inpatient glucose control and changes in mortality over time using logistic regression. Then, we repeated this analysis in the subsets of patients who had inpatient glucose data and both inpatient and outpatient glycemic control data, adding inpatient and outpatient measures sequentially. Given the high level of missing outpatient glycemic control data, we compared demographic characteristics for diabetic ICU patients with and without such data using χ² and t tests, and found that patients with data were younger and less likely to be white and had longer mean length of stay, slightly worse performance on several measures of inpatient glucose control, and lower mortality (see Supporting Table 1 in the online version of this article).

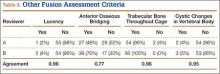

| Characteristic | Overall, N=322,939 | Any ICU Stay, N=54,646 | No ICU Stay, N=268,293 | Medical Service, N=196,325 | Surgical Service, N=126,614 |
|---|---|---|---|---|---|
| Died during admission, n (%) | 7,587 (2.3) | 5,439 (10.0) | 2,147 (0.8) | 5,705 (2.9) | 1,883 (1.5) |
| Diabetes, n (%) | 76,758 (23.8) | 14,364 (26.3) | 62,394 (23.2) | 55,453 (28.2) | 21,305 (16.8) |
| Age, y, mean (SD) | 55.5 (20.0) | 61.0 (17.0) | 54.4 (21.7) | 60.3 (18.9) | 48.0 (23.8) |
| Age, y, full range (interquartile range) | 0‐118 (42‐73) | 18‐112 (49‐75) | 0‐118 (40‐72) | 0‐118 (47‐76) | 0‐111 (32‐66) |
| Female, n (%) | 159,227 (49.3) | 23,208 (42.5) | 134,296 (50.1) | 99,805 (50.8) | 59,422 (46.9) |
| White race, n (%) | 226,586 (70.2) | 41,982 (76.8) | 184,604 (68.8) | 132,749 (67.6) | 93,838 (74.1) |
| Insurance, n (%) | | | | | |
| Medicaid | 54,590 (16.9) | 7,222 (13.2) | 47,378 (17.7) | 35,229 (17.9) | 19,361 (15.3) |
| Medicare | 141,638 (43.9) | 27,458 (50.2) | 114,180 (42.6) | 100,615 (51.2) | 41,023 (32.4) |
| Commercial | 113,013 (35.0) | 18,248 (33.4) | 94,765 (35.3) | 53,510 (27.2) | 59,503 (47.0) |
| Uninsured | 13,521 (4.2) | 1,688 (3.1) | 11,833 (4.4) | 6,878 (3.5) | 6,643 (5.2) |
| Length of stay, d, mean (SD) | 5.4 (9.5) | 11.8 (17.8) | 4.2 (6.2) | 5.46 (10.52) | 5.42 (9.75) |
| Service, n (%) | | | | | |
| Medicine | 184,495 (57.1) | 27,190 (49.8) | 157,305 (58.6) | 184,496 (94.0) | |
| Surgery | 126,614 (39.2) | 25,602 (46.9) | 101,012 (37.7) | | 126,614 (100) |
| Neurology | 11,829 (3.7) | 1,853 (3.4) | 9,976 (3.7) | 11,829 (6.0) | |

To explore the effects of dependence among observations from patients with multiple encounters, we compared parameter estimates derived from a model with all patient encounters (including repeated admissions for the same patient) with those from a model with a randomly sampled single visit per patient, and observed that there was no difference in parameter estimates between the 2 classes of models. For all analyses, we used a type I error of 5% (2 sided) to test for statistical significance using SAS version 9.3 (SAS Institute, Cary, NC) or R software (

RESULTS

We included 322,938 patient admissions. Of this sample, 54,645 (16.9%) had spent at least 1 night in the ICU. Overall, 76,758 patients (23.8%) had diabetes, representing 26.3% of ICU patients, 23.2% of non‐ICU patients, 28.2% of medical patients, and 16.8% of surgical patients (see Table 1 for demographic characteristics).

Mortality Trends Within Strata

Among ICU patients, the overall mortality rate was 9.9%: 10.5% of patients with diabetes and 9.8% of patients without diabetes. Among non‐ICU patients, the overall mortality rate was 0.8%: 0.9% of patients with diabetes and 0.7% of patients without diabetes.

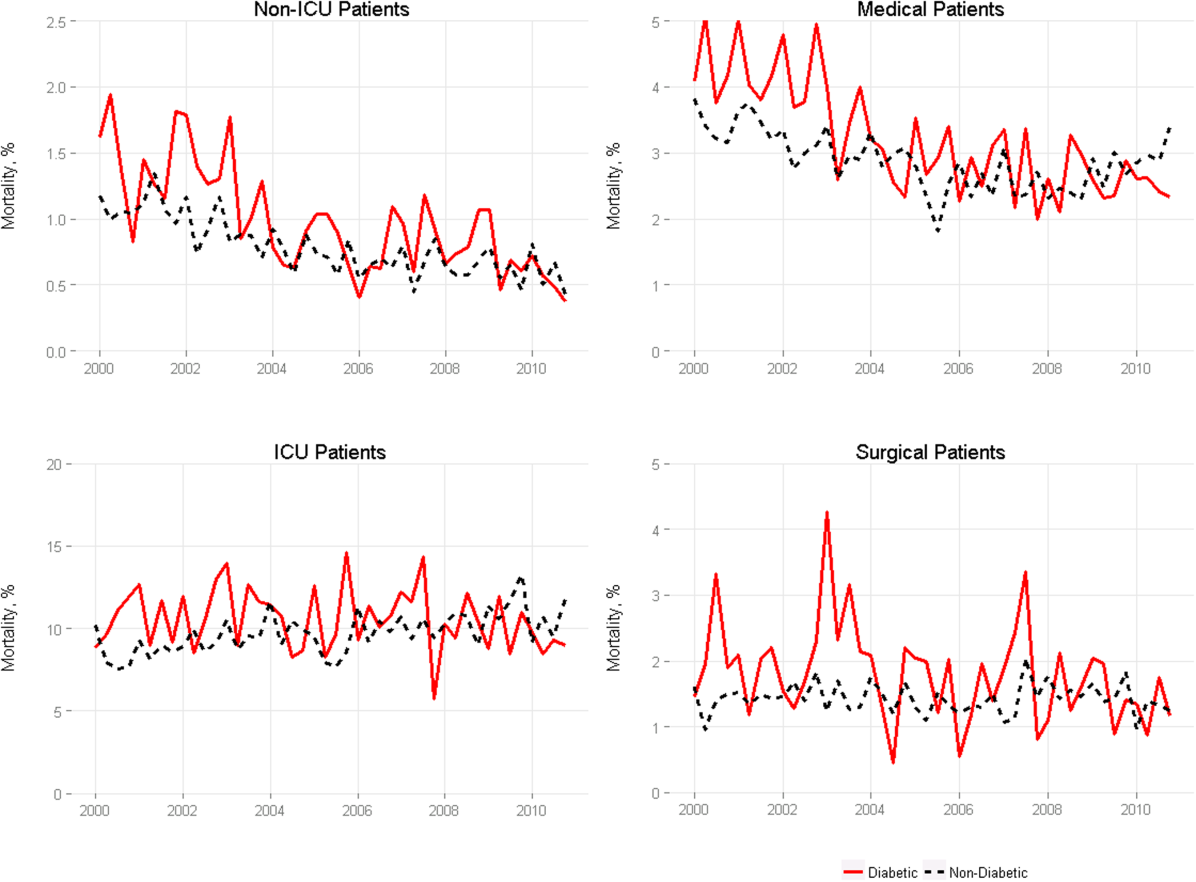

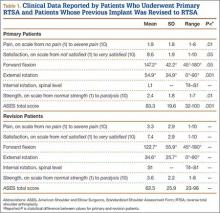

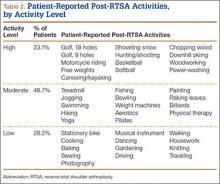

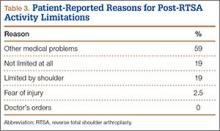

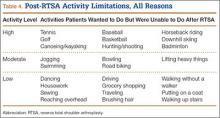

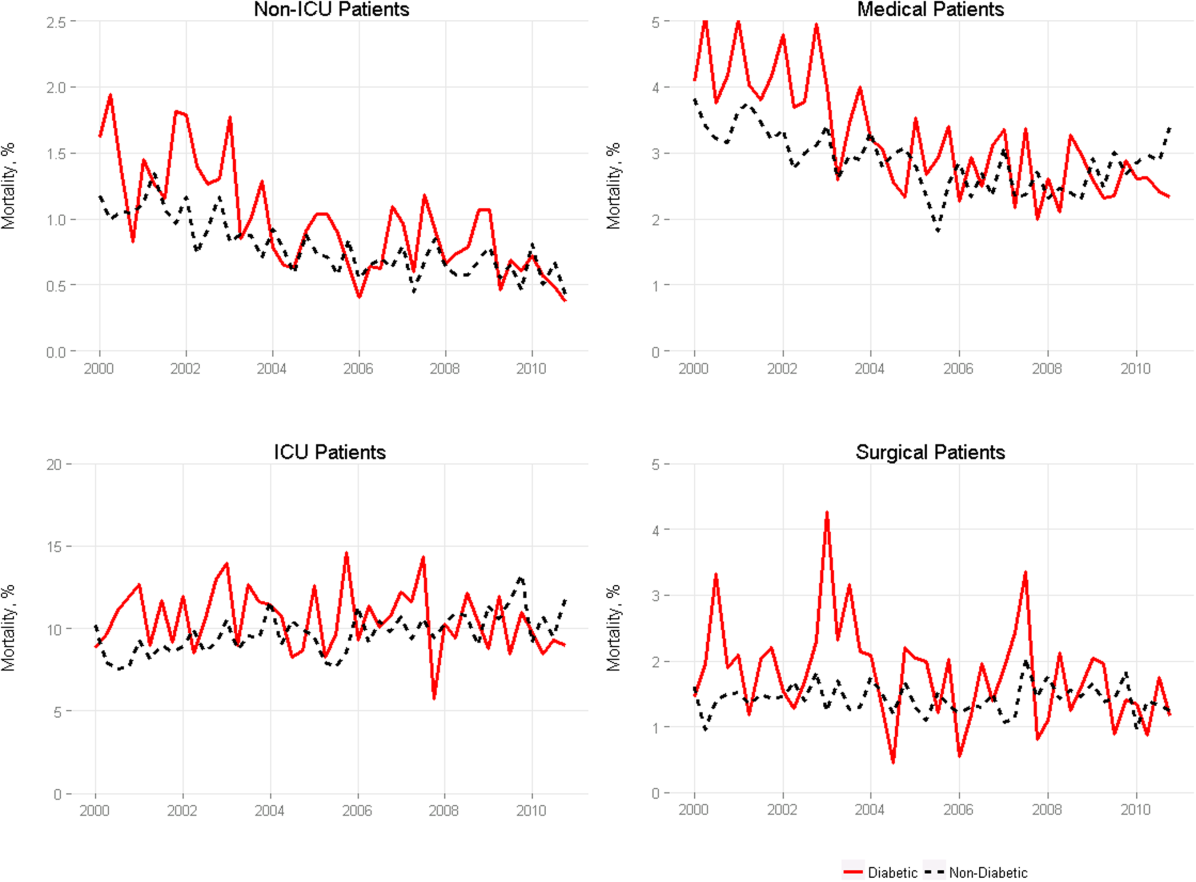

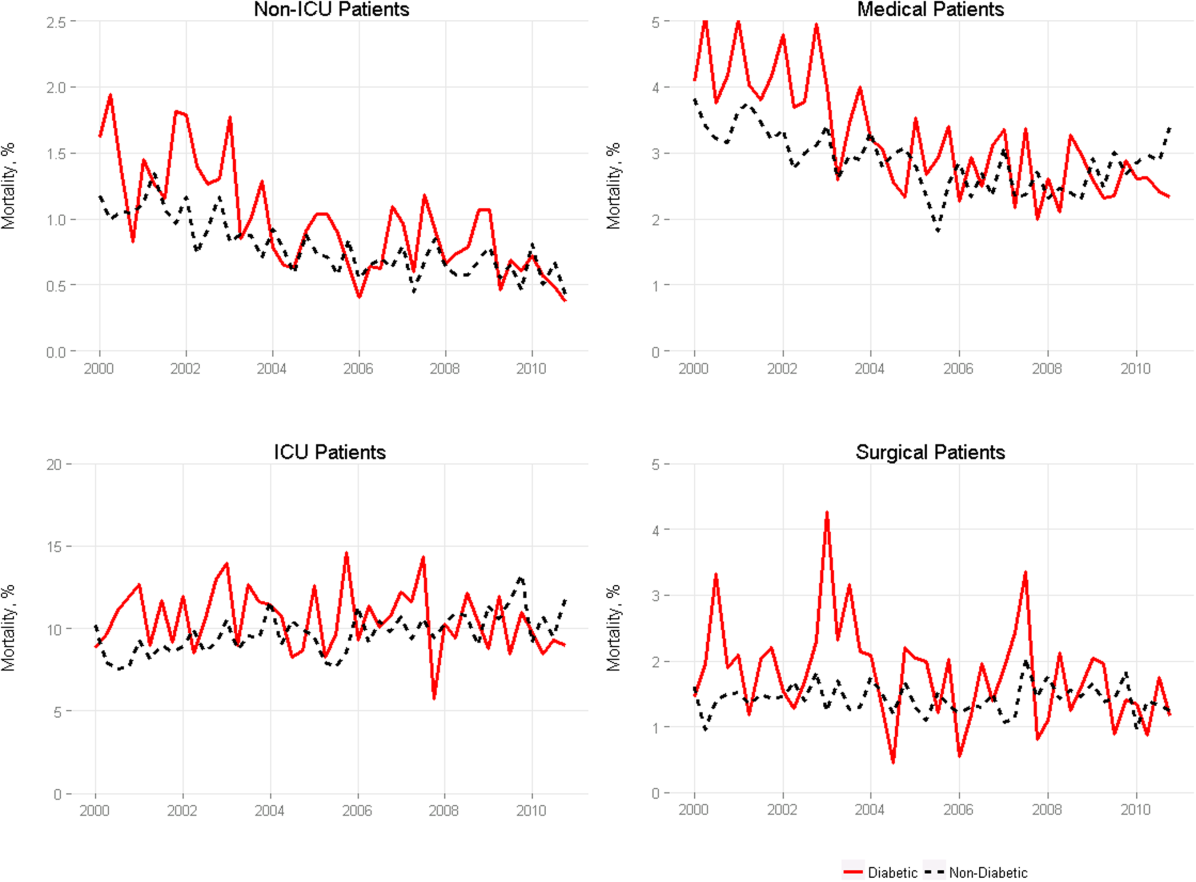

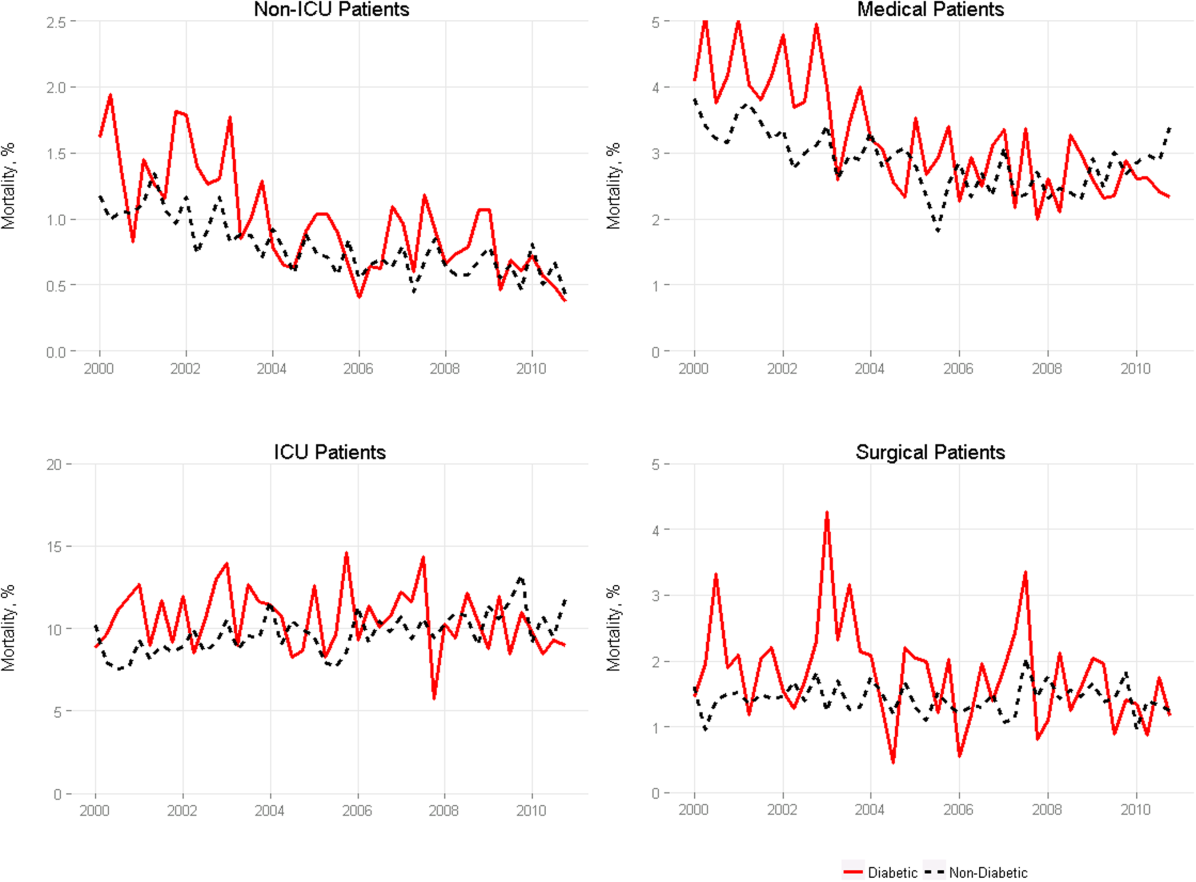

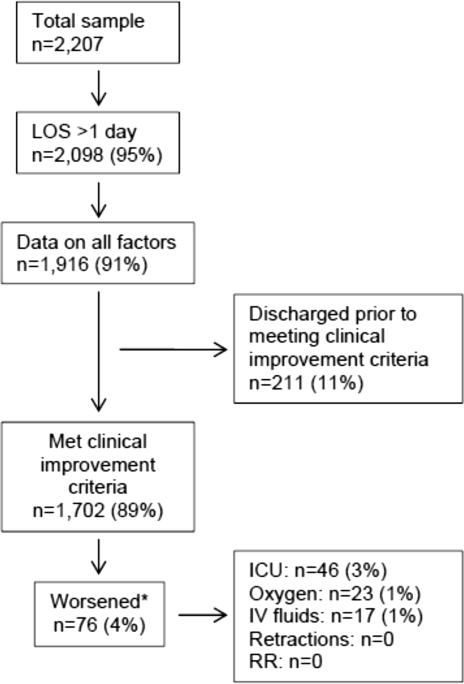

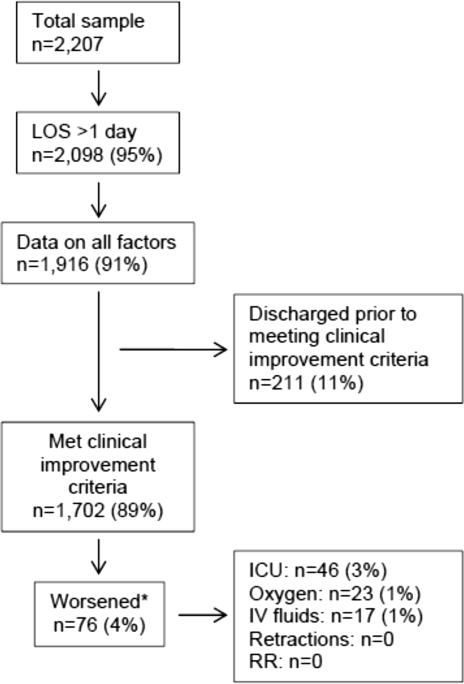

Among medical patients, the overall mortality rate was 2.9%: 3.1% of patients with diabetes and 2.8% of patients without diabetes. Among surgical patients, the overall mortality rate was 1.4%: 1.8% of patients with diabetes and 1.4% of patients without diabetes. Figure 1 shows quarterly in‐hospital mortality for patients with and without diabetes from 2000 to 2010 stratified by ICU status and by service assignment.

Table 2 describes the difference‐in‐differences regression analyses, stratified by ICU status and service assignment. Among ICU patients (Table 2, model 1), each successive year was associated with a 2.6% relative reduction in the adjusted odds of mortality (odds ratio [OR]: 0.974, 95% confidence interval [CI]: 0.963‐0.985) for patients without diabetes compared to a 7.8% relative reduction for those with diabetes (OR: 0.923, 95% CI: 0.906‐0.940). In other words, patients with diabetes compared to patients without diabetes had a significantly greater decline in odds of adjusted mortality of 5.3% per year (OR: 0.947, 95% CI: 0.927‐0.967). As a result, the adjusted odds of mortality among patients with versus without diabetes decreased from 1.352 in 2000 to 0.772 in 2010.

| Independent Variables | ICU Patients, N=54,646, OR (95% CI) | Non‐ICU Patients, N=268,293, OR (95% CI) | Medical Patients, N=196,325, OR (95% CI) | Surgical Patients, N=126,614, OR (95% CI) |
|---|---|---|---|---|
| | Model 1 | Model 2 | Model 3 | Model 4 |
| Year | 0.974 (0.963‐0.985) | 0.925 (0.909‐0.940) | 0.943 (0.933‐0.954) | 0.995 (0.977‐1.103) |
| Diabetes | 1.352 (1.171‐1.562) | 0.958 (0.783‐1.173) | 1.186 (1.037‐1.356) | 1.213 (0.942‐1.563) |
| Diabetes*year | 0.947 (0.927‐0.967) | 0.977 (0.946‐1.008) | 0.961 (0.942‐0.980) | 0.955 (0.918‐0.994) |
| C statistic | 0.812 | 0.907 | 0.880 | 0.919 |

Among non‐ICU patients (Table 2, model 2), each successive year was associated with a 7.5% relative reduction in the adjusted odds of mortality (OR: 0.925, 95% CI: 0.909‐0.940) for patients without diabetes compared to a 9.6% relative reduction for those with diabetes (OR: 0.904, 95% CI: 0.879‐0.929); this greater decline in odds of adjusted mortality of 2.3% per year (OR: 0.977, 95% CI: 0.946‐1.008; P=0.148) was not statistically significant.

We found greater decline in odds of mortality among patients with diabetes than among patients without diabetes over time in both medical patients (3.9% greater decline per year; OR: 0.961, 95% CI: 0.942‐0.980) and surgical patients (4.5% greater decline per year; OR: 0.955, 95% CI: 0.918‐0.994), without a difference between the 2. Detailed results are shown in Table 2, models 3 and 4.

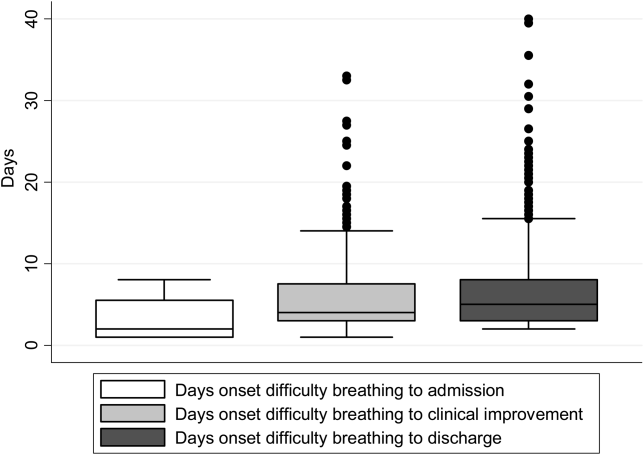

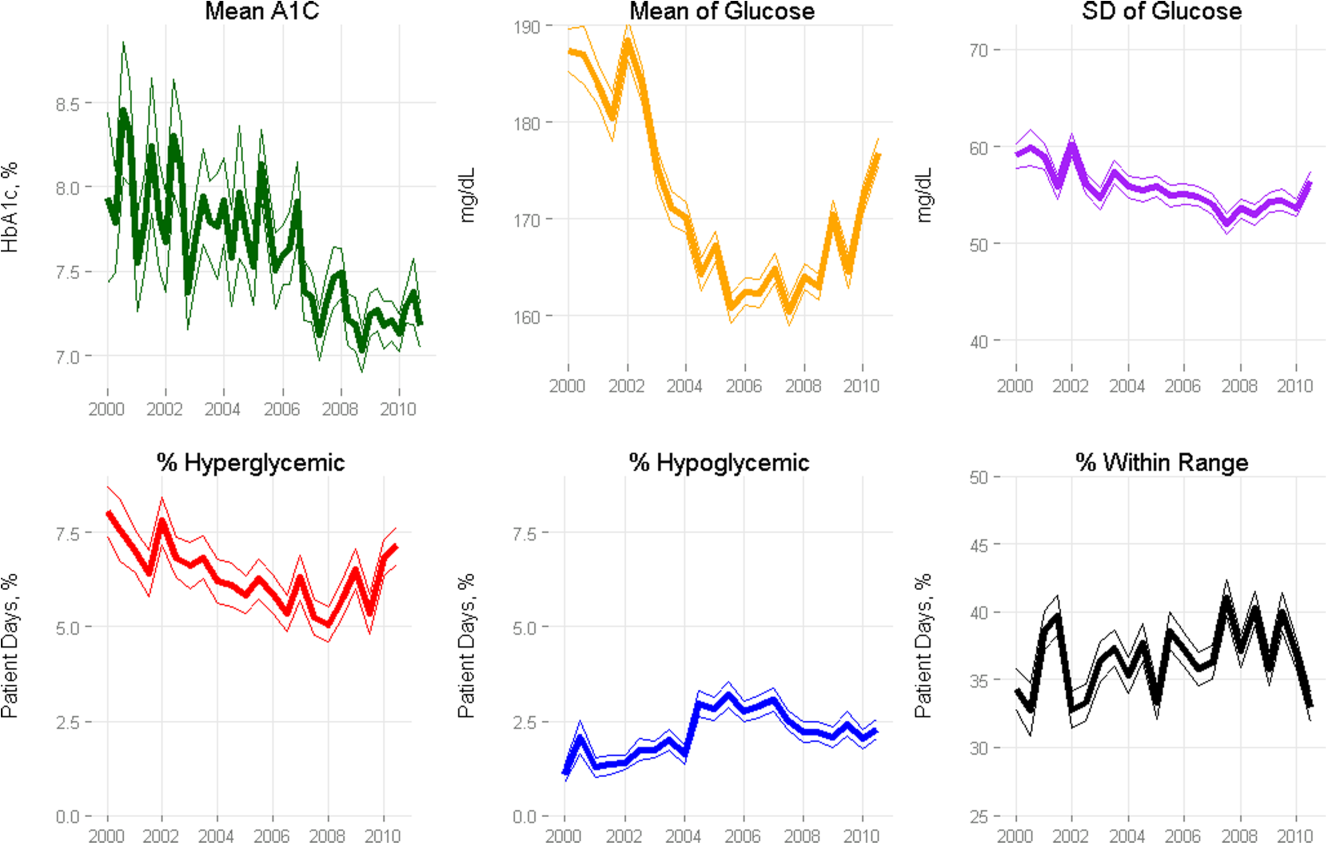

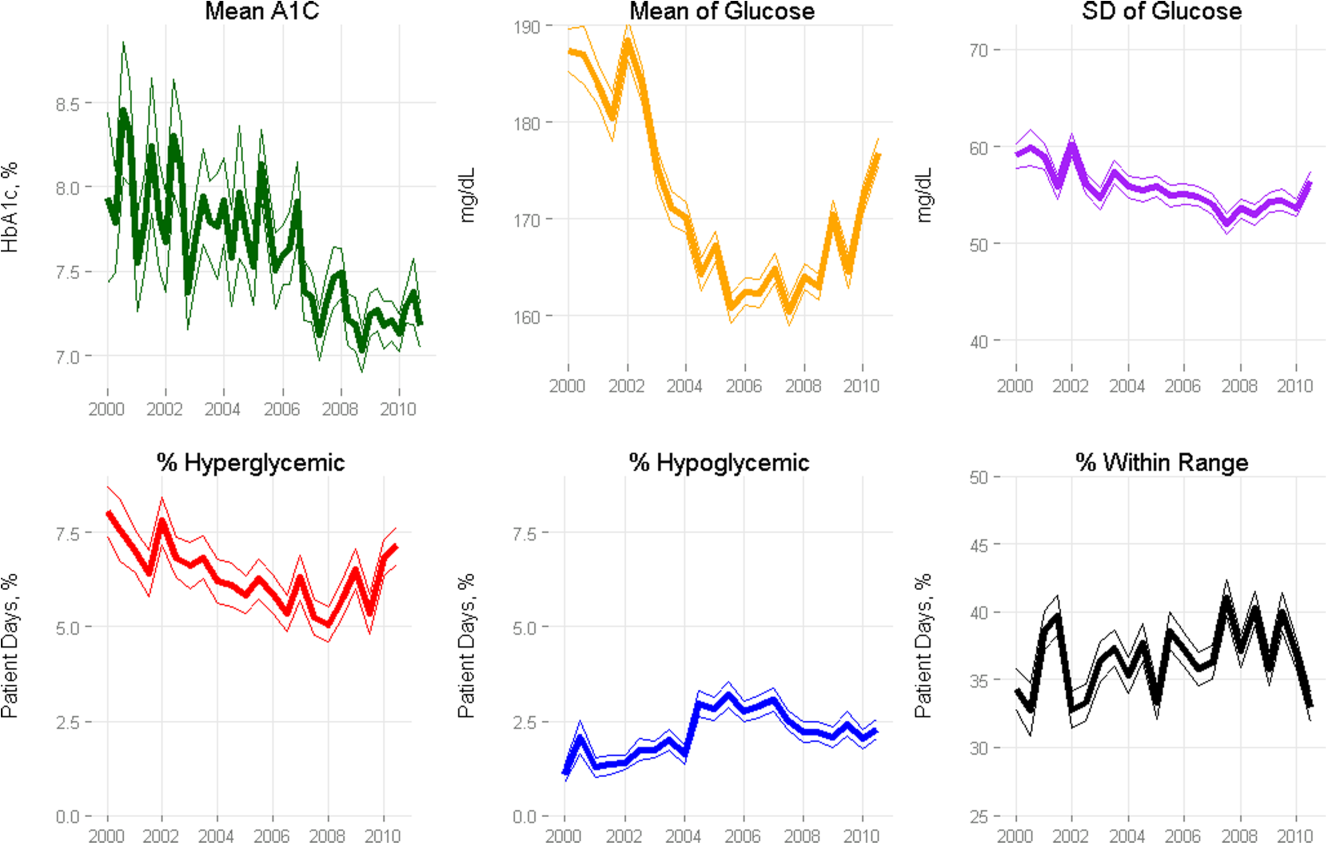

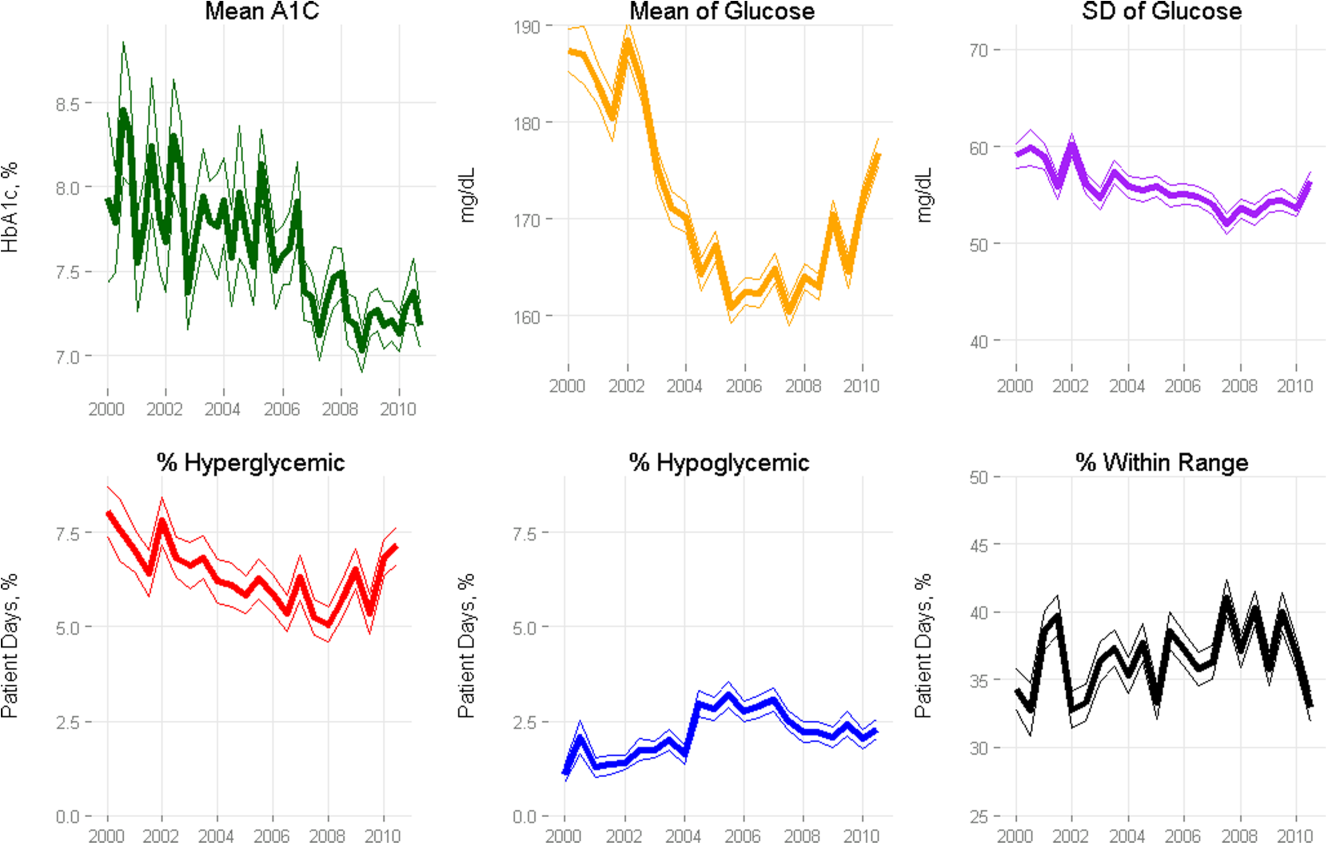

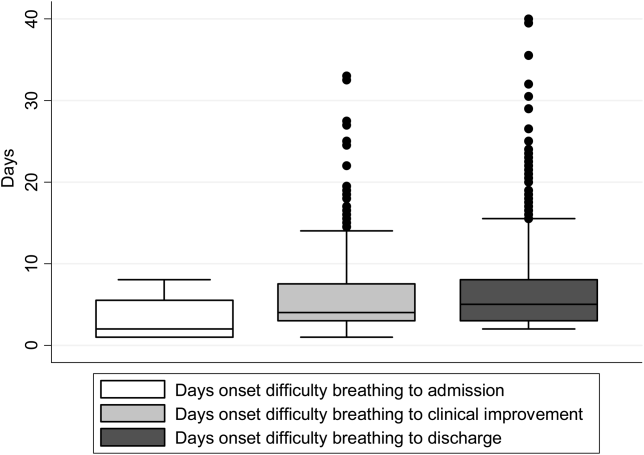

Glycemic Control

Among ICU patients with diabetes (N=14,364), at least 2 inpatient point‐of‐care glucose readings were available for 13,136 (91.5%), with a mean of 4.67 readings per day, whereas hemoglobin A1c data were available for only 5321 patients (37.0%). Both inpatient glucose data and hemoglobin A1c were available for 4989 patients (34.7%). Figure 2 shows trends in inpatient and outpatient glycemic control measures among ICU patients with diabetes over the study period. Mean hemoglobin A1c decreased from 7.7 in 2000 to 7.3 in 2010. Mean hospitalization glucose began at 187.2, reached a nadir of 162.4 in the third quarter (Q3) of 2007, and rose subsequently to 174.4 with loosened glucose control targets. Standard deviation of mean glucose and percentage of patient‐days with a severe hyperglycemic episode followed a similar pattern, though with nadirs in Q4 2007 and Q2 2008, respectively, whereas percentage of patient‐days with a hypoglycemic episode rose from 1.46% in 2000, peaked at 3.00% in Q3 2005, and returned to 2.15% in 2010. All changes in glucose control are significant with P<0.001.

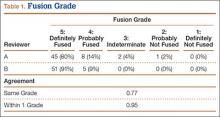

Mortality Trends and Glycemic Control

To determine whether glucose control explained the excess decline in odds of mortality among patients with diabetes in the ICU, we restricted our sample to ICU patients with diabetes and examined the association of diabetes with mortality after including measures of glucose control.

We first verified that the overall adjusted mortality trend among ICU patients with diabetes for whom we had measures of inpatient glucose control was similar to that of the full sample of ICU patients with diabetes. Similar to the full sample, we found that the adjusted excess odds of death significantly declined by a relative 7.3% each successive year (OR: 0.927, 95% CI: 0.907‐0.947; Table 3, model 1). We then included measures of inpatient glucose control in the model and found, as expected, that a higher percentage of days with severe hyperglycemia and with hypoglycemia was associated with an increased odds of death (P<0.001 for both; Table 3, model 2). Nonetheless, after including measures of inpatient glucose control, we found that the rate of change of excess odds of death for patients with diabetes was unchanged (OR: 0.926, 95% CI: 0.905‐0.947).

| Independent Variables | Model 1, OR (95% CI) | Model 2, OR (95% CI) | Model 3, OR (95% CI) | Model 4, OR (95% CI) | Model 5, OR (95% CI) |
|---|---|---|---|---|---|
| Sample | Patients With Inpatient Glucose Control Measures, n=13,136 | Patients With Inpatient Glucose Control Measures, n=13,136 | Patients With Inpatient and Outpatient Glucose Control Measures, n=4,989 | Patients With Inpatient and Outpatient Glucose Control Measures, n=4,989 | Patients With Inpatient and Outpatient Glucose Control Measures, n=4,989 |
| Year | 0.927 (0.907‐0.947) | 0.926 (0.905‐0.947) | 0.958 (0.919‐0.998) | 0.956 (0.916‐0.997) | 0.953 (0.914‐0.994) |
| % Severe hyperglycemic days | | 1.016 (1.010‐1.021) | | 1.009 (0.998‐1.020) | 1.010 (0.999‐1.021) |
| % Hypoglycemic days | | 1.047 (1.040‐1.055) | | 1.051 (1.037‐1.065) | 1.049 (1.036‐1.063) |
| % Normoglycemic days | | 0.997 (0.994‐1.000) | | 0.994 (0.989‐0.999) | 0.993 (0.988‐0.998) |
| SD of mean glucose | | 0.996 (0.992‐1.000) | | 0.993 (0.986‐1.000) | 0.994 (0.987‐1.002) |
| Mean HbA1c | | | | | 0.892 (0.828‐0.961) |
| C statistic | 0.806 | 0.825 | 0.825 | 0.838 | 0.841 |

We then restricted our sample to patients with diabetes with both inpatient and outpatient glycemic control data and found that, in this subpopulation, the adjusted excess odds of death among patients with diabetes relative to those without significantly declined by a relative 4.2% each progressive year (OR: 0.958, 95% CI: 0.918‐0.998; Table 3, model 3). Including measures of inpatient glucose control in the model did not significantly change the rate of change of excess odds of death (OR: 0.956, 95% CI: 0.916‐0.997; Table 3, model 4), nor did including both measures of inpatient and outpatient glycemic control (OR: 0.953, 95% CI: 0.914‐0.994; Table 3, model 5).

DISCUSSION

We conducted a difference‐in‐differences analysis of in‐hospital mortality rates among adult patients with diabetes compared to patients without diabetes over 10 years, stratifying by ICU status and service assignment. For patients with any ICU stay, we found that the reduction in odds of mortality for patients with diabetes has been 3 times larger than the reduction in odds of mortality for patients without diabetes. For those without an ICU stay, we found no significant difference between patients with and without diabetes in the rate at which in‐hospital mortality declined. We did not find stratification by assignment to a medical or surgical service to be an effect modifier. Finally, despite the fact that our institution achieved better aggregate inpatient glucose control, less severe hyperglycemia, and better long‐term glucose control over the course of the decade, we did not find that either inpatient or outpatient glucose control explained the trend in mortality for patients with diabetes in the ICU. Our study is unique in its inclusion of all hospitalized patients and its ability to simultaneously assess whether both inpatient and outpatient glucose control are explanatory factors in the observed mortality trends.

The fact that improved inpatient glucose control did not explain the trend in mortality for patients with diabetes in the ICU is consistent with the majority of the literature on intensive inpatient glucose control. In randomized trials, intensive glucose control appears to be of greater benefit for patients without diabetes than for patients with diabetes.[31] In fact, in 1 study, patients with diabetes were the only group that did not benefit from intensive glucose control.[32] In our study, it is possible that the rise in hypoglycemia nullified some of the benefits of glucose control. Nationally, hospital admissions for hypoglycemia among Medicare beneficiaries now outnumber admissions for hyperglycemia.[27]

We also do not find that the decline in hemoglobin A1c attenuated the reduction in mortality in the minority of patients for whom these data were available. This is concordant with evidence from 3 randomized clinical trials that have failed to establish a clear beneficial effect of intensive outpatient glucose control on primary cardiovascular endpoints among older, high‐risk patients with type 2 diabetes using glucose‐lowering agents.[21, 22, 23] It is notable, however, that the population for whom we had available hemoglobin A1c results was not representative of the overall population of ICU patients with diabetes. Consequently, there may be an association of outpatient glucose control with inpatient mortality in the overall population of ICU patients with diabetes that we were not able to detect.

The decline in mortality among ICU patients with diabetes in our study may stem from factors other than glycemic control. It is possible that patients were diagnosed earlier in their course of disease in later years of the study period, making the population of patients with diabetes younger or healthier. Of note, however, our risk adjustment models were very robust, with C statistics from 0.82 to 0.92, suggesting that we were able to account for much of the mortality risk attributable to patient clinical and demographic factors. More intensive glucose management may have nonglycemic benefits, such as closer patient observation, which may themselves affect mortality. Alternatively, improved cardiovascular management for patients with diabetes may have decreased the incidence of cardiovascular events. During the study period, evidence from large clinical trials demonstrated the importance of tight blood pressure and lipid management in improving outcomes for patients with diabetes,[33, 34, 35, 36] guidelines for lipid management for patients with diabetes changed,[37] and fewer patients developed cardiovascular complications.[38] Finally, it is possible that our findings can be explained by an improvement in treatment of complications for which patients with diabetes previously have had disproportionately worse outcomes, such as percutaneous coronary intervention.[39]

Our findings may have important implications for both clinicians and policymakers. Changes in inpatient glucose management have required substantial additional resources on the part of hospitals. Our evidence regarding the questionable impact of inpatient glucose control on in‐hospital mortality trends for patients with diabetes is disappointing and highlights the need for multifaceted evaluation of the impact of such quality initiatives. There may, for instance, be benefits from tighter blood glucose control in the hospital beyond mortality, such as reduced infections, costs, or length of stay. On the outpatient side, our more limited data are consistent with recent studies that have not been able to show a mortality benefit in older diabetic patients from more stringent glycemic control. A reassessment of prevailing diabetes‐related quality measures, as recently called for by some,[40, 41] seems reasonable.

Our study must be interpreted in light of its limitations. It is possible that the improvements in glucose management were too small to result in a mortality benefit. The overall reduction of 25 mg/dL achieved at our institution is less than the 33 to 50 mg/dL difference between intensive and conventional groups in those randomized clinical trials that have found reductions in mortality.[11, 42] In addition, an increase in mean glucose during the last 1 to 2 years of the observation period (in response to prevailing guidelines) could potentially have attenuated any benefit on mortality. The study does not include other important clinical endpoints, such as infections, complications, length of stay, and hospital costs. Additionally, we did not examine postdischarge mortality, which might have shown a different pattern. The small proportion of patients with hemoglobin A1c results may have hampered our ability to detect an effect of outpatient glucose control. Consequently, our findings regarding outpatient glucose control are only suggestive. Finally, our findings represent the experience of a single, large academic medical center and may not be generalizable to all settings.

Overall, we found that patients with diabetes in the ICU have experienced a disproportionate reduction in in‐hospital mortality over time that does not appear to be explained by improvements in either inpatient or outpatient glucose control. Although improved glycemic control may have other benefits, it does not appear to impact in‐hospital mortality. Our real‐world empirical results contribute to the discourse among clinicians and policymakers with regard to refocusing the approach to managing glucose in‐hospital and readjudication of diabetes‐related quality measures.

Acknowledgments

The authors would like to acknowledge the Yale‐New Haven Hospital diabetes management team: Gael Ulisse, APRN, Helen Psarakis, APRN, Anne Kaisen, APRN, and the Yale Endocrine Fellows.

Disclosures: Design and conduct of the study: N. B., J. D., S. I., T. B., L. H. Collection, management, analysis, and interpretation of the data: N. B., B. J., J. D., J. R., J. B., S. I., L. H. Preparation, review, or approval of the manuscript: N. B., B. J., J. D., J. R., S. I., T. B., L. H. Leora Horwitz, MD, had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. Dr. Horwitz is supported by the National Institute on Aging (K08 AG038336) and by the American Federation for Aging Research through the Paul B. Beeson Career Development Award Program. This publication was also made possible by CTSA grant number UL1 RR024139 from the National Center for Research Resources and the National Center for Advancing Translational Science, components of the National Institutes of Health (NIH), and NIH roadmap for Medical Research. Its contents are solely the responsibility of the authors and do not necessarily represent the official view of the NIH. No funding source had any role in design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; or decision to submit the manuscript for publication. Silvio E. Inzucchi, MD, serves on a Data Safety Monitoring Board for Novo Nordisk, a manufacturer of insulin products used in the hospital setting. The remaining authors declare no conflicts of interest.

References

1. National Diabetes Information Clearinghouse. National Diabetes Statistics; 2011. Available at: http://diabetes.niddk.nih.gov/dm/pubs/america/index.aspx. Accessed November 12, 2013.

2. Healthcare Cost and Utilization Project. Statistical brief #93; 2010. Available at: http://www.hcup-us.ahrq.gov/reports/statbriefs/sb93.pdf. Accessed November 12, 2013.

3. Association between diabetes mellitus and post‐discharge outcomes in patients hospitalized with heart failure: findings from the EVEREST trial. Eur J Heart Fail. 2013;15(2):194–202.

4. Influence of diabetes mellitus on clinical outcome in the thrombolytic era of acute myocardial infarction. GUSTO‐I Investigators. Global Utilization of Streptokinase and Tissue Plasminogen Activator for Occluded Coronary Arteries. J Am Coll Cardiol. 1997;30(1):171–179.

5. Type 2 diabetes and pneumonia outcomes: a population‐based cohort study. Diabetes Care. 2007;30(9):2251–2257.

6. Prevalence and outcomes of diabetes, hypertension and cardiovascular disease in COPD. Eur Respir J. 2008;32(4):962–969.

7. The role of body mass index and diabetes in the development of acute organ failure and subsequent mortality in an observational cohort. Crit Care. 2006;10(5):R137.

8. Type 2 diabetes and 1‐year mortality in intensive care unit patients. Eur J Clin Invest. 2013;43(3):238–247.

9. Excess mortality during hospital stays among patients with recorded diabetes compared with those without diabetes. Diabet Med. 2013;30(12):1393–1402.

10. Decade‐long trends in mortality among patients with and without diabetes mellitus at a major academic medical center. JAMA Intern Med. 2014;174(7):1187–1188.

11. Intensive insulin therapy in critically ill patients. N Engl J Med. 2001;345(19):1359–1367.

12. Intensive versus conventional glucose control in critically ill patients. N Engl J Med. 2009;360(13):1283–1297.

13. A prospective randomised multi‐centre controlled trial on tight glucose control by intensive insulin therapy in adult intensive care units: the Glucontrol study. Intensive Care Med. 2009;35(10):1738–1748.

14. Intensive versus conventional insulin therapy: a randomized controlled trial in medical and surgical critically ill patients. Crit Care Med. 2008;36(12):3190–3197.

15. Intensive insulin therapy in the medical ICU. N Engl J Med. 2006;354(5):449–461.

16. Glycemic control in non‐critically ill hospitalized patients: a systematic review and meta‐analysis. J Clin Endocrinol Metab. 2012;97(1):49–58.

17. American Association of Clinical Endocrinologists and American Diabetes Association consensus statement on inpatient glycemic control. Diabetes Care. 2009;32(6):1119–1131.

18. Agency for Healthcare Research and Quality National Quality Measures Clearinghouse. Percent of cardiac surgery patients with controlled 6 A.M. postoperative blood glucose; 2012. Available at: http://www.qualitymeasures.ahrq.gov/content.aspx?id=35532. Accessed November 12, 2013.

19. The effect of intensive treatment of diabetes on the development and progression of long‐term complications in insulin‐dependent diabetes mellitus. The Diabetes Control and Complications Trial Research Group. N Engl J Med. 1993;329(14):977–986.

20. Intensive blood‐glucose control with sulphonylureas or insulin compared with conventional treatment and risk of complications in patients with type 2 diabetes (UKPDS 33). Lancet. 1998;352(9131):837–853.

21. Effects of intensive glucose lowering in type 2 diabetes. N Engl J Med. 2008;358(24):2545–2559.

22. Glucose control and vascular complications in veterans with type 2 diabetes. N Engl J Med. 2009;360(2):129–139.

23. Intensive blood glucose control and vascular outcomes in patients with type 2 diabetes. N Engl J Med. 2008;358(24):2560–2572.

24. Standards of medical care in diabetes—2014. Diabetes Care. 2014;37(suppl 1):S14–S80.

25. National Committee for Quality Assurance. HEDIS 2013. Available at: http://www.ncqa.org/HEDISQualityMeasurement.aspx. Accessed November 12, 2013.

26. Is glycemic control improving in US adults? Diabetes Care. 2008;31(1):81–86.

27. National trends in US hospital admissions for hyperglycemia and hypoglycemia among medicare beneficiaries, 1999 to 2011. JAMA Intern Med. 2014;174(7):1116–1124.

28. "Glucometrics"—assessing the quality of inpatient glucose management. Diabetes Technol Ther. 2006;8(5):560–569.

29. A modification of the Elixhauser comorbidity measures into a point system for hospital death using administrative data. Med Care. 2009;47(6):626–633.

30. Healthcare Cost and Utilization Project. Clinical Classifications Software (CCS) for ICD‐9‐CM; 2013. Available at: http://www.hcup-us.ahrq.gov/toolssoftware/ccs/ccs.jsp. Accessed November 12, 2013.

31. The impact of premorbid diabetic status on the relationship between the three domains of glycemic control and mortality in critically ill patients. Curr Opin Clin Nutr Metab Care. 2012;15(2):151–160.

32. Intensive insulin therapy in mixed medical/surgical intensive care units: benefit versus harm. Diabetes. 2006;55(11):3151–3159.

33. Tight blood pressure control and risk of macrovascular and microvascular complications in type 2 diabetes: UKPDS 38. UK Prospective Diabetes Study Group. BMJ. 1998;317(7160):703–713.

- , , , et al. Effects of a fixed combination of perindopril and indapamide on macrovascular and microvascular outcomes in patients with type 2 diabetes mellitus (the ADVANCE trial): a randomised controlled trial. Lancet. 2007;370(9590):829–840.

- , , , , . MRC/BHF heart protection study of cholesterol‐lowering with simvastatin in 5963 people with diabetes: a randomised placebo‐controlled trial. Lancet. 2003;361(9374):2005–2016.

- , , , et al. Primary prevention of cardiovascular disease with atorvastatin in type 2 diabetes in the Collaborative Atorvastatin Diabetes Study (CARDS): multicentre randomised placebo‐controlled trial. Lancet. 2004;364(9435):685–696.

- , , , . Expert panel on detection, evaluation and treatment of high blood cholesterol in adults. Executive summary of the third report of the national cholesterol education program (NCEP) adult treatment panel (atp III). JAMA. 2001;285(19):2486–2497.

- , , , et al. Changes in diabetes‐related complications in the United States, 1990–2010. N Engl J Med. 2014;370(16):1514–1523.

- , , . Coronary heart disease in patients with diabetes: part II: recent advances in coronary revascularization. J Am Coll Cardiol. 2007;49(6):643–656.

- , , , et al. Management of hyperglycemia in type 2 diabetes: a patient‐centered approach position statement of the American Diabetes Association (ADA) and the European Association for the Study of Diabetes (EASD). Diabetes Care. 2012;35(6):1364–1379.

- , , , , . Assessing potential glycemic overtreatment in persons at hypoglycemic risk. JAMA Intern Med. 2013;174(2):259–268.

- , , , . Glycometabolic state at admission: important risk marker of mortality in conventionally treated patients with diabetes mellitus and acute myocardial infarction: long‐term results from the Diabetes and Insulin‐Glucose Infusion in Acute Myocardial Infarction (DIGAMI) study. Circulation. 1999;99(20):2626–2632.

Patients with diabetes currently comprise over 8% of the US population (over 25 million people) and more than 20% of hospitalized patients.[1, 2] Hospitalizations of patients with diabetes account for 23% of total hospital costs in the United States,[2] and patients with diabetes have worse outcomes after hospitalization for a variety of common medical conditions,[3, 4, 5, 6] as well as in intensive care unit (ICU) settings.[7, 8] Individuals with diabetes have historically experienced higher inpatient mortality than individuals without diabetes.[9] However, we recently reported that patients with diabetes at our large academic medical center have experienced a disproportionate reduction in in‐hospital mortality relative to patients without diabetes over the past decade.[10] This surprising trend begs further inquiry.

Improvement in in‐hospital mortality among patients with diabetes may stem from improved inpatient glycemic management. The landmark 2001 study by van den Berghe et al. demonstrating that intensive insulin therapy reduced postsurgical mortality among ICU patients ushered in an era of intensive inpatient glucose control.[11] However, follow‐up multicenter studies have not been able to replicate these results.[12, 13, 14, 15] In non‐ICU and nonsurgical settings, intensive glucose control has not yet been shown to have any mortality benefit, although it may impact other morbidities, such as postoperative infections.[16] Consequently, less stringent glycemic targets are now recommended.[17] Nonetheless, hospitals are being held accountable for certain aspects of inpatient glucose control. For example, the Centers for Medicare & Medicaid Services (CMS) began asking hospitals to report inpatient glucose control in cardiac surgery patients in 2004.[18] This measure is now publicly reported, and as of 2013 is included in the CMS Value‐Based Purchasing Program, which financially penalizes hospitals that do not meet targets.

Outpatient diabetes standards have also evolved in the past decade. The Diabetes Control and Complications Trial in 1993 and the United Kingdom Prospective Diabetes Study in 1998 demonstrated that better glycemic control in type 1 and newly diagnosed type 2 diabetes patients, respectively, improved clinical outcomes, and prompted guidelines for pharmacologic treatment of diabetic patients.[19, 20] However, subsequent randomized clinical trials have failed to establish a clear beneficial effect of intensive glucose control on primary cardiovascular endpoints among higher‐risk patients with longstanding type 2 diabetes,[21, 22, 23] and clinical practice recommendations now accept a more individualized approach to glycemic control.[24] Nonetheless, clinicians are also being held accountable for outpatient glucose control.[25]

To better understand the disproportionate reduction in mortality among hospitalized patients with diabetes that we observed, we first examined whether it was limited to surgical patients or patients in the ICU, the populations that have been demonstrated to benefit from intensive inpatient glucose control. Furthermore, given recent improvements in inpatient and outpatient glycemic control,[26, 27] we examined whether inpatient or outpatient glucose control explained the mortality trends. Results from this study contribute empirical evidence on real‐world effects of efforts to improve inpatient and outpatient glycemic control.

METHODS

Setting

During the study period, Yale–New Haven Hospital (YNHH) was an urban academic medical center in New Haven, Connecticut, with over 950 beds and an average of approximately 32,000 annual adult nonobstetric admissions. YNHH conducted a variety of inpatient glucose control initiatives during the study period. The surgical ICU began an informal medical team‐directed insulin infusion protocol in 2000 to 2001. In 2002, the medical ICU instituted a formal insulin infusion protocol with a target of 100 to 140 mg/dL, which spread to remaining hospital ICUs by the end of 2003. In 2005, YNHH launched a consultative inpatient diabetes management team to assist clinicians in controlling glucose in non‐ICU patients with diabetes. This team covered approximately 10 to 15 patients at a time and consisted of an advanced‐practice nurse practitioner, a supervising endocrinologist and endocrinology fellow, and a nurse educator to provide diabetic teaching. Additionally, in 2005, basal‐bolus‐correction insulin order sets became available. The surgical ICU implemented a stringent insulin infusion protocol with target glucose of 80 to 110 mg/dL in 2006, but relaxed it (goal 80–150 mg/dL) in 2007. Similarly, in 2006, YNHH made ICU insulin infusion recommendations more stringent in remaining ICUs (goal 90–130 mg/dL), but relaxed them in 2010 (goal 120–160 mg/dL), based on emerging data from clinical trials and prevailing national guidelines.

Participants and Data Sources

We included all adult, nonobstetric discharges from YNHH between January 1, 2000 and December 31, 2010. Repeat visits by the same patient were linked by medical record number. We obtained data from YNHH administrative billing, laboratory, and point‐of‐care capillary blood glucose databases. The Yale Human Investigation Committee approved our study design and granted a Health Insurance Portability and Accountability Act waiver and a waiver of patient consent.

Variables

Our primary endpoint was in‐hospital mortality. The primary exposure of interest was whether a patient had diabetes mellitus, defined as the presence of International Classification of Diseases, Ninth Revision codes 249.x, 250.x, V45.85, V53.91, or V65.46 in any of the primary or secondary diagnosis codes in the index admission, or in any hospital encounter in the year prior to the index admission.

We assessed 2 effect‐modifying variables: ICU status (as measured by a charge for at least 1 night in the ICU) and service assignment to surgery (including neurosurgery and orthopedics), compared to medicine (including neurology). Independent explanatory variables included time between the start of the study and patient admission (measured as days/365), diabetes status, inpatient glucose control, and long‐term glucose control (as measured by hemoglobin A1c at any time in the 180 days prior to hospital admission in order to have adequate sample size). We assessed inpatient blood glucose control through point‐of‐care blood glucose meters (OneTouch SureStep; LifeScan, Inc., Milpitas, CA) at YNHH. We used 4 validated measures of inpatient glucose control: the proportion of days in each hospitalization in which there was any hypoglycemic episode (blood glucose value <70 mg/dL), the proportion of days in which there was any severely hyperglycemic episode (blood glucose value >299 mg/dL), the proportion of days in which mean blood glucose was considered to be within adequate control (all blood glucose values between 70 and 179 mg/dL), and the standard deviation of mean glucose during hospitalization as a measure of glycemic variability.[28]
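As an illustration only, the 4 per‐hospitalization measures defined above could be derived from point‐of‐care readings along the following lines. This is a minimal sketch, not the study's code: the function name, the input layout of (hospital day, glucose value) pairs, and the choice to take the standard deviation across all readings in the stay are assumptions made for this example.

```python
from collections import defaultdict
from statistics import stdev


def glucometrics(readings):
    """Summarize one hospitalization.

    readings: iterable of (hospital_day, glucose_mg_dl) pairs (hypothetical layout).
    Returns proportions of days plus a variability measure.
    """
    by_day = defaultdict(list)
    for day, value in readings:
        by_day[day].append(value)
    if not by_day:
        raise ValueError("no glucose readings for this hospitalization")

    n_days = len(by_day)
    # A day counts as hypoglycemic if ANY reading is <70 mg/dL.
    hypo_days = sum(any(v < 70 for v in vals) for vals in by_day.values())
    # A day counts as severely hyperglycemic if ANY reading is >299 mg/dL.
    severe_days = sum(any(v > 299 for v in vals) for vals in by_day.values())
    # A day counts as adequately controlled if ALL readings are 70 to 179 mg/dL.
    controlled_days = sum(
        all(70 <= v <= 179 for v in vals) for vals in by_day.values()
    )
    # Assumption: variability is the SD across all readings in the stay.
    all_values = [v for vals in by_day.values() for v in vals]
    variability = stdev(all_values) if len(all_values) > 1 else 0.0

    return {
        "prop_hypoglycemic_days": hypo_days / n_days,
        "prop_severe_hyperglycemic_days": severe_days / n_days,
        "prop_controlled_days": controlled_days / n_days,
        "glucose_sd": variability,
    }
```

For example, a 4‐day stay with one low reading on day 1 and one reading above 299 mg/dL on day 3 would yield 0.25 for each of the first two proportions.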

Covariates included gender, age at time of admission, length of stay in days, race (defined by hospital registration), payer, Elixhauser comorbidity dummy variables (revised to exclude diabetes and to use only secondary diagnosis codes),[29] and primary discharge diagnosis grouped using Clinical Classifications Software,[30] based on established associations with in‐hospital mortality.

Statistical Analysis

We summarized demographic characteristics numerically and graphically for patients with and without diabetes and compared them using χ² and t tests. We summarized changes in inpatient and outpatient measures of glucose control over time numerically and graphically, and compared across years using the Wilcoxon rank sum test adjusted for multiple hypothesis testing.

We stratified all analyses first by ICU status and then by service assignment (medicine vs surgery). Statistical analyses within each stratum paralleled our previous approach to the full study cohort.[10] Taking each stratum separately (ie, only ICU patients or only medicine patients), we used a difference‐in‐differences approach comparing changes over time in in‐hospital mortality among patients with diabetes compared to those without diabetes. This approach enabled us to determine whether patients with diabetes had a different time trend in risk of in‐hospital mortality than those without diabetes. That is, for each stratum, we constructed multivariate logistic regression models including time in years, diabetes status, and the interaction between time and diabetes status as well as the aforementioned covariates. We calculated odds of death and confidence intervals for each additional year for patients with diabetes by exponentiating the sum of parameter estimates for time and the diabetes‐time interaction term. We evaluated all 2‐way interactions between year or diabetes status and the covariates in a multiple degree of freedom likelihood ratio test. We investigated nonlinearity of the relation between mortality and time by evaluating first and second‐order polynomials.
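The model described above can be written out as follows. The notation is introduced here for illustration and does not come from the original analysis: $t$ is time in years, $D$ is diabetes status (1 or 0), $X$ is the covariate vector, and the interval shown is a standard Wald interval, which is an assumption because the text does not state how the confidence intervals were computed.

$$
\operatorname{logit}\,\Pr(\text{death}) = \beta_0 + \beta_1 t + \beta_2 D + \beta_3 (t \times D) + \gamma^{\top} X
$$

$$
\text{OR}_{\text{per year, no diabetes}} = e^{\beta_1}, \qquad
\text{OR}_{\text{per year, diabetes}} = e^{\beta_1 + \beta_3}, \qquad
\frac{\text{OR}_{\text{per year, diabetes}}}{\text{OR}_{\text{per year, no diabetes}}} = e^{\beta_3}
$$

$$
95\%\ \text{CI for } e^{\beta_1 + \beta_3}:\quad
\exp\!\left[(\hat\beta_1 + \hat\beta_3) \pm 1.96\sqrt{\widehat{\operatorname{Var}}(\hat\beta_1) + \widehat{\operatorname{Var}}(\hat\beta_3) + 2\,\widehat{\operatorname{Cov}}(\hat\beta_1, \hat\beta_3)}\right]
$$

Under this formulation, the interaction term $e^{\beta_3}$ is the difference‐in‐differences quantity: a value below 1 indicates that the odds of death declined faster per year among patients with diabetes than among those without.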

Because we found a significant decline in mortality risk for patients with versus without diabetes among ICU patients but not among non‐ICU patients, and because service assignment was not found to be an effect modifier, we then limited our sample to ICU patients with diabetes to better understand the role of inpatient and outpatient glucose control in accounting for observed mortality trends. First, we determined the relation between the measures of inpatient glucose control and changes in mortality over time using logistic regression. Then, we repeated this analysis in the subsets of patients who had inpatient glucose data and both inpatient and outpatient glycemic control data, adding inpatient and outpatient measures sequentially. Given the high level of missing outpatient glycemic control data, we compared demographic characteristics for diabetic ICU patients with and without such data using χ² and t tests, and found that patients with data were younger and less likely to be white and had longer mean length of stay, slightly worse performance on several measures of inpatient glucose control, and lower mortality (see Supporting Table 1 in the online version of this article).

| Characteristic | Overall, N=322,939 | Any ICU Stay, N=54,646 | No ICU Stay, N=268,293 | Medical Service, N=196,325 | Surgical Service, N=126,614 |
|---|---|---|---|---|---|
| Died during admission, n (%) | 7,587 (2.3) | 5,439 (10.0) | 2,147 (0.8) | 5,705 (2.9) | 1,883 (1.5) |
| Diabetes, n (%) | 76,758 (23.8) | 14,364 (26.3) | 62,394 (23.2) | 55,453 (28.2) | 21,305 (16.8) |
| Age, y, mean (SD) | 55.5 (20.0) | 61.0 (17.0) | 54.4 (21.7) | 60.3 (18.9) | 48.0 (23.8) |
| Age, y, full range (interquartile range) | 0–118 (42–73) | 18–112 (49–75) | 0–118 (40–72) | 0–118 (47–76) | 0–111 (32–66) |
| Female, n (%) | 159,227 (49.3) | 23,208 (42.5) | 134,296 (50.1) | 99,805 (50.8) | 59,422 (46.9) |
| White race, n (%) | 226,586 (70.2) | 41,982 (76.8) | 184,604 (68.8) | 132,749 (67.6) | 93,838 (74.1) |
| Insurance, n (%) | | | | | |
| Medicaid | 54,590 (16.9) | 7,222 (13.2) | 47,378 (17.7) | 35,229 (17.9) | 19,361 (15.3) |
| Medicare | 141,638 (43.9) | 27,458 (50.2) | 114,180 (42.6) | 100,615 (51.2) | 41,023 (32.4) |
| Commercial | 113,013 (35.0) | 18,248 (33.4) | 94,765 (35.3) | 53,510 (27.2) | 59,503 (47.0) |
| Uninsured | 13,521 (4.2) | 1,688 (3.1) | 11,833 (4.4) | 6,878 (3.5) | 6,643 (5.2) |
| Length of stay, d, mean (SD) | 5.4 (9.5) | 11.8 (17.8) | 4.2 (6.2) | 5.46 (10.52) | 5.42 (9.75) |
| Service, n (%) | | | | | |
| Medicine | 184,495 (57.1) | 27,190 (49.8) | 157,305 (58.6) | 184,496 (94.0) | |
| Surgery | 126,614 (39.2) | 25,602 (46.9) | 101,012 (37.7) | | 126,614 (100) |
| Neurology | 11,829 (3.7) | 1,853 (3.4) | 9,976 (3.7) | 11,829 (6.0) | |

To explore the effects of dependence among observations from patients with multiple encounters, we compared parameter estimates derived from a model with all patient encounters (including repeated admissions for the same patient) with those from a model with a randomly sampled single visit per patient, and observed that there was no difference in parameter estimates between the 2 classes of models. For all analyses, we used a type I error of 5% (2 sided) to test for statistical significance using SAS version 9.3 (SAS Institute, Cary, NC) or R software (
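The single‐visit sensitivity analysis described above depends on drawing one encounter at random for each patient. A minimal sketch of that sampling step follows; the record layout, the `mrn` key, and the function name are hypothetical and chosen only for illustration.

```python
import random
from collections import defaultdict


def sample_one_visit_per_patient(encounters, seed=0):
    """Return one randomly chosen encounter per patient.

    encounters: list of dicts, each with a hypothetical 'mrn' key
    (medical record number) identifying the patient.
    """
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    by_patient = defaultdict(list)
    for encounter in encounters:
        by_patient[encounter["mrn"]].append(encounter)
    # One uniform random pick from each patient's list of encounters.
    return [rng.choice(visits) for visits in by_patient.values()]
```

The same regression model would then be fit once on all encounters and once on the sampled subset, and the parameter estimates compared.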

RESULTS

We included 322,938 patient admissions. Of this sample, 54,645 (16.9%) had spent at least 1 night in the ICU. Overall, 76,758 patients (23.8%) had diabetes, representing 26.3% of ICU patients, 23.2% of non‐ICU patients, 28.2% of medical patients, and 16.8% of surgical patients (see Table 1 for demographic characteristics).

Mortality Trends Within Strata

Among ICU patients, the overall mortality rate was 9.9%: 10.5% of patients with diabetes and 9.8% of patients without diabetes. Among non‐ICU patients, the overall mortality rate was 0.8%: 0.9% of patients with diabetes and 0.7% of patients without diabetes.

Among medical patients, the overall mortality rate was 2.9%: 3.1% of patients with diabetes and 2.8% of patients without diabetes. Among surgical patients, the overall mortality rate was 1.4%: 1.8% of patients with diabetes and 1.4% of patients without diabetes. Figure 1 shows quarterly in‐hospital mortality for patients with and without diabetes from 2000 to 2010 stratified by ICU status and by service assignment.

Table 2 describes the difference‐in‐differences regression analyses, stratified by ICU status and service assignment. Among ICU patients (Table 2, model 1), each successive year was associated with a 2.6% relative reduction in the adjusted odds of mortality (odds ratio [OR]: 0.974, 95% confidence interval [CI]: 0.963‐0.985) for patients without diabetes compared to a 7.8% relative reduction for those with diabetes (OR: 0.923, 95% CI: 0.906‐0.940). In other words, patients with diabetes compared to patients without diabetes had a significantly greater decline in odds of adjusted mortality of 5.3% per year (OR: 0.947, 95% CI: 0.927‐0.967). As a result, the adjusted odds of mortality among patients with versus without diabetes decreased from 1.352 in 2000 to 0.772 in 2010.

| Independent Variables | ICU Patients, N=54,646, OR (95% CI) | Non‐ICU Patients, N=268,293, OR (95% CI) | Medical Patients, N=196,325, OR (95% CI) | Surgical Patients, N=126,614, OR (95% CI) |
|---|---|---|---|---|
| | Model 1 | Model 2 | Model 3 | Model 4 |
| Year | 0.974 (0.963‐0.985) | 0.925 (0.909‐0.940) | 0.943 (0.933‐0.954) | 0.995 (0.977‐1.103) |
| Diabetes | 1.352 (1.171‐1.562) | 0.958 (0.783‐1.173) | 1.186 (1.037‐1.356) | 1.213 (0.942‐1.563) |
| Diabetes*year | 0.947 (0.927‐0.967) | 0.977 (0.946‐1.008) | 0.961 (0.942‐0.980) | 0.955 (0.918‐0.994) |
| C statistic | 0.812 | 0.907 | 0.880 | 0.919 |

Among non‐ICU patients (Table 2, model 2), each successive year was associated with a 7.5% relative reduction in the adjusted odds of mortality (OR: 0.925, 95% CI: 0.909‐0.940) for patients without diabetes compared to a 9.6% relative reduction for those with diabetes (OR: 0.904, 95% CI: 0.879‐0.929); this greater decline in odds of adjusted mortality of 2.3% per year (OR: 0.977, 95% CI: 0.946‐1.008; P=0.148) was not statistically significant.

We found greater decline in odds of mortality among patients with diabetes than among patients without diabetes over time in both medical patients (3.9% greater decline per year; OR: 0.961, 95% CI: 0.942‐0.980) and surgical patients (4.5% greater decline per year; OR: 0.955, 95% CI: 0.918‐0.994), without a difference between the 2. Detailed results are shown in Table 2, models 3 and 4.
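The per‐year odds ratios for patients with diabetes reported above can be checked, approximately, from the rounded Table 2 estimates: summing the log odds ratios for year and for the diabetes‐by‐year interaction is the same as multiplying the two odds ratios. The sketch below uses hypothetical function names and only the rounded published values, so small differences from the reported figures are expected.

```python
import math


def per_year_or_with_diabetes(or_year, or_interaction):
    """Combine the year OR and the diabetes-by-year interaction OR."""
    # exp(log a + log b) == a * b; written this way to mirror the method text.
    return math.exp(math.log(or_year) + math.log(or_interaction))


def relative_reduction_pct(odds_ratio):
    """Relative reduction in odds per year, as a percentage."""
    return 100.0 * (1.0 - odds_ratio)


# Rounded estimates from Table 2 (model 1: ICU; model 2: non-ICU).
icu = per_year_or_with_diabetes(0.974, 0.947)
non_icu = per_year_or_with_diabetes(0.925, 0.977)

print(f"ICU: OR {icu:.3f}, reduction {relative_reduction_pct(icu):.1f}% per year")
print(f"Non-ICU: OR {non_icu:.3f}, reduction {relative_reduction_pct(non_icu):.1f}% per year")
```

With these rounded inputs, the ICU result is about 0.922 (a 7.8% reduction per year) and the non‐ICU result is about 0.904 (a 9.6% reduction per year), close to the reported values of 0.923 and 0.904; the small ICU difference presumably reflects rounding of the published coefficients.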

Glycemic Control

Among ICU patients with diabetes (N=14,364), at least 2 inpatient point‐of‐care glucose readings were available for 13,136 (91.5%), with a mean of 4.67 readings per day, whereas hemoglobin A1c data were available for only 5321 patients (37.0%). Both inpatient glucose data and hemoglobin A1c were available for 4989 patients (34.7%). Figure 2 shows trends in inpatient and outpatient glycemic control measures among ICU patients with diabetes over the study period. Mean hemoglobin A1c decreased from 7.7% in 2000 to 7.3% in 2010. Mean hospitalization glucose began at 187.2 mg/dL, reached a nadir of 162.4 mg/dL in the third quarter (Q3) of 2007, and rose subsequently to 174.4 mg/dL with loosened glucose control targets. Standard deviation of mean glucose and percentage of patient‐days with a severe hyperglycemic episode followed a similar pattern, though with nadirs in Q4 2007 and Q2 2008, respectively, whereas percentage of patient‐days with a hypoglycemic episode rose from 1.46% in 2000, peaked at 3.00% in Q3 2005, and returned to 2.15% in 2010. All changes in glucose control were significant with P<0.001.

Mortality Trends and Glycemic Control

To determine whether glucose control explained the excess decline in odds of mortality among patients with diabetes in the ICU, we restricted our sample to ICU patients with diabetes and examined the association of diabetes with mortality after including measures of glucose control.

We first verified that the overall adjusted mortality trend among ICU patients with diabetes for whom we had measures of inpatient glucose control was similar to that of the full sample of ICU patients with diabetes. Similar to the full sample, we found that the adjusted excess odds of death significantly declined by a relative 7.3% each successive year (OR: 0.927, 95% CI: 0.907‐0.947; Table 3, model 1). We then included measures of inpatient glucose control in the model and found, as expected, that a higher percentage of days with severe hyperglycemia and with hypoglycemia was associated with an increased odds of death (P<0.001 for both; Table 3, model 2). Nonetheless, after including measures of inpatient glucose control, we found that the rate of change of excess odds of death for patients with diabetes was unchanged (OR: 0.926, 95% CI: 0.905‐0.947).

Models 1 and 2: Patients With Inpatient Glucose Control Measures, n=13,136. Models 3 to 5: Patients With Inpatient and Outpatient Glucose Control Measures, n=4,989.

| Independent Variables | Model 1, OR (95% CI) | Model 2, OR (95% CI) | Model 3, OR (95% CI) | Model 4, OR (95% CI) | Model 5, OR (95% CI) |
|---|---|---|---|---|---|
| Year | 0.927 (0.907‐0.947) | 0.926 (0.905‐0.947) | 0.958 (0.919‐0.998) | 0.956 (0.916‐0.997) | 0.953 (0.914‐0.994) |
| % Severe hyperglycemic days | | 1.016 (1.010‐1.021) | | 1.009 (0.998‐1.020) | 1.010 (0.999‐1.021) |
| % Hypoglycemic days | | 1.047 (1.040‐1.055) | | 1.051 (1.037‐1.065) | 1.049 (1.036‐1.063) |
| % Normoglycemic days | | 0.997 (0.994‐1.000) | | 0.994 (0.989‐0.999) | 0.993 (0.988‐0.998) |
| SD of mean glucose | | 0.996 (0.992‐1.000) | | 0.993 (0.986‐1.000) | 0.994 (0.987‐1.002) |
| Mean HbA1c | | | | | 0.892 (0.828‐0.961) |
| C statistic | 0.806 | 0.825 | 0.825 | 0.838 | 0.841 |

We then restricted our sample to patients with diabetes with both inpatient and outpatient glycemic control data and found that, in this subpopulation, the adjusted excess odds of death among patients with diabetes relative to those without significantly declined by a relative 4.2% each successive year (OR: 0.958, 95% CI: 0.918‐0.998; Table 3, model 3). Including measures of inpatient glucose control in the model did not significantly change the rate of change of excess odds of death (OR: 0.956, 95% CI: 0.916‐0.997; Table 3, model 4), nor did including measures of both inpatient and outpatient glycemic control (OR: 0.953, 95% CI: 0.914‐0.994; Table 3, model 5).

DISCUSSION

We conducted a difference‐in‐differences analysis of in‐hospital mortality rates among adult patients with diabetes compared to patients without diabetes over 10 years, stratifying by ICU status and service assignment. For patients with any ICU stay, we found that the reduction in odds of mortality for patients with diabetes has been 3 times larger than the reduction in odds of mortality for patients without diabetes. For those without an ICU stay, we found no significant difference between patients with and without diabetes in the rate at which in‐hospital mortality declined. We did not find assignment to a medical or surgical service to be an effect modifier. Finally, despite the fact that our institution achieved better aggregate inpatient glucose control, less severe hyperglycemia, and better long‐term glucose control over the course of the decade, we did not find that either inpatient or outpatient glucose control explained the trend in mortality for patients with diabetes in the ICU. Our study is unique in its inclusion of all hospitalized patients and its ability to simultaneously assess whether both inpatient and outpatient glucose control are explanatory factors in the observed mortality trends.

The fact that improved inpatient glucose control did not explain the trend in mortality for patients with diabetes in the ICU is consistent with the majority of the literature on intensive inpatient glucose control. In randomized trials, intensive glucose control appears to be of greater benefit for patients without diabetes than for patients with diabetes.[31] In fact, in 1 study, patients with diabetes were the only group that did not benefit from intensive glucose control.[32] In our study, it is possible that the rise in hypoglycemia nullified some of the benefits of glucose control. Nationally, hospital admissions for hypoglycemia among Medicare beneficiaries now outnumber admissions for hyperglycemia.[27]

We also did not find that the decline in hemoglobin A1c attenuated the reduction in mortality in the minority of patients for whom these data were available. This is concordant with evidence from 3 randomized clinical trials that have failed to establish a clear beneficial effect of intensive outpatient glucose control on primary cardiovascular endpoints among older, high‐risk patients with type 2 diabetes using glucose‐lowering agents.[21, 22, 23] It is notable, however, that the population for whom we had available hemoglobin A1c results was not representative of the overall population of ICU patients with diabetes. Consequently, there may be an association of outpatient glucose control with inpatient mortality in the overall population of ICU patients with diabetes that we were not able to detect.

The decline in mortality among ICU patients with diabetes in our study may stem from factors other than glycemic control. It is possible that patients were diagnosed earlier in their course of disease in later years of the study period, making the population of patients with diabetes younger or healthier. Of note, however, our risk adjustment models were very robust, with C statistics from 0.82 to 0.92, suggesting that we were able to account for much of the mortality risk attributable to patient clinical and demographic factors. More intensive glucose management may have nonglycemic benefits, such as closer patient observation, which may themselves affect mortality. Alternatively, improved cardiovascular management for patients with diabetes may have decreased the incidence of cardiovascular events. During the study period, evidence from large clinical trials demonstrated the importance of tight blood pressure and lipid management in improving outcomes for patients with diabetes,[33, 34, 35, 36] guidelines for lipid management for patients with diabetes changed,[37] and fewer patients developed cardiovascular complications.[38] Finally, it is possible that our findings can be explained by an improvement in treatment of complications for which patients with diabetes previously have had disproportionately worse outcomes, such as percutaneous coronary intervention.[39]

Our findings may have important implications for both clinicians and policymakers. Changes in inpatient glucose management have required substantial additional resources on the part of hospitals. Our evidence regarding the questionable impact of inpatient glucose control on in‐hospital mortality trends for patients with diabetes is disappointing and highlights the need for multifaceted evaluation of the impact of such quality initiatives. There may, for instance, be benefits from tighter blood glucose control in the hospital beyond mortality, such as reduced infections, costs, or length of stay. On the outpatient side, our more limited data are consistent with recent studies that have not been able to show a mortality benefit in older diabetic patients from more stringent glycemic control. A reassessment of prevailing diabetes‐related quality measures, as recently called for by some,[40, 41] seems reasonable.

Our study must be interpreted in light of its limitations. It is possible that the improvements in glucose management were too small to result in a mortality benefit. The overall reduction of 25 mg/dL achieved at our institution is less than the 33 to 50 mg/dL difference between intensive and conventional groups in those randomized clinical trials that have found reductions in mortality.[11, 42] In addition, an increase in mean glucose during the last 1 to 2 years of the observation period (in response to prevailing guidelines) could potentially have attenuated any benefit on mortality. The study does not include other important clinical endpoints, such as infections, complications, length of stay, and hospital costs. Additionally, we did not examine postdischarge mortality, which might have shown a different pattern. The small proportion of patients with hemoglobin A1c results may have hampered our ability to detect an effect of outpatient glucose control. Consequently, our findings regarding outpatient glucose control are only suggestive. Finally, our findings represent the experience of a single, large academic medical center and may not be generalizable to all settings.

Overall, we found that patients with diabetes in the ICU have experienced a disproportionate reduction in in‐hospital mortality over time that does not appear to be explained by improvements in either inpatient or outpatient glucose control. Although improved glycemic control may have other benefits, it does not appear to impact in‐hospital mortality. Our real‐world empirical results contribute to the discourse among clinicians and policymakers with regard to refocusing the approach to managing glucose in the hospital and readjudication of diabetes‐related quality measures.

Acknowledgments

The authors would like to acknowledge the Yale–New Haven Hospital diabetes management team: Gael Ulisse, APRN, Helen Psarakis, APRN, Anne Kaisen, APRN, and the Yale Endocrine Fellows.

Disclosures: Design and conduct of the study: N. B., J. D., S. I., T. B., L. H. Collection, management, analysis, and interpretation of the data: N. B., B. J., J. D., J. R., J. B., S. I., L. H. Preparation, review, or approval of the manuscript: N. B., B. J., J. D., J. R., S. I., T. B., L. H. Leora Horwitz, MD, had full access to all the data in the study and takes responsibility for the integrity of the data and the accuracy of the data analysis. Dr. Horwitz is supported by the National Institute on Aging (K08 AG038336) and by the American Federation for Aging Research through the Paul B. Beeson Career Development Award Program. This publication was also made possible by CTSA grant number UL1 RR024139 from the National Center for Research Resources and the National Center for Advancing Translational Science, components of the National Institutes of Health (NIH), and NIH roadmap for Medical Research. Its contents are solely the responsibility of the authors and do not necessarily represent the official view of the NIH. No funding source had any role in design and conduct of the study; collection, management, analysis, and interpretation of the data; preparation, review, or approval of the manuscript; or decision to submit the manuscript for publication. Silvio E. Inzucchi, MD, serves on a Data Safety Monitoring Board for Novo Nordisk, a manufacturer of insulin products used in the hospital setting. The remaining authors declare no conflicts of interest.

Patients with diabetes currently comprise over 8% of the US population (over 25 million people) and more than 20% of hospitalized patients.[1, 2] Hospitalizations of patients with diabetes account for 23% of total hospital costs in the United States,[2] and patients with diabetes have worse outcomes after hospitalization for a variety of common medical conditions,[3, 4, 5, 6] as well as in intensive care unit (ICU) settings.[7, 8] Individuals with diabetes have historically experienced higher inpatient mortality than individuals without diabetes.[9] However, we recently reported that patients with diabetes at our large academic medical center have experienced a disproportionate reduction in in‐hospital mortality relative to patients without diabetes over the past decade.[10] This surprising trend begs further inquiry.

Improvement in in‐hospital mortality among patients with diabetes may stem from improved inpatient glycemic management. The landmark 2001 study by van den Berghe et al. demonstrating that intensive insulin therapy reduced postsurgical mortality among ICU patients ushered in an era of intensive inpatient glucose control.[11] However, follow‐up multicenter studies have not been able to replicate these results.[12, 13, 14, 15] In non‐ICU and nonsurgical settings, intensive glucose control has not yet been shown to have any mortality benefit, although it may impact other morbidities, such as postoperative infections.[16] Consequently, less stringent glycemic targets are now recommended.[17] Nonetheless, hospitals are being held accountable for certain aspects of inpatient glucose control. For example, the Centers for Medicare & Medicaid Services (CMS) began asking hospitals to report inpatient glucose control in cardiac surgery patients in 2004.[18] This measure is now publicly reported, and as of 2013 is included in the CMS Value‐Based Purchasing Program, which financially penalizes hospitals that do not meet targets.

Outpatient diabetes standards have also evolved in the past decade. The Diabetes Control and Complications Trial in 1993 and the United Kingdom Prospective Diabetes Study in 1997 demonstrated that better glycemic control in type 1 and newly diagnosed type 2 diabetes patients, respectively, improved clinical outcomes, and prompted guidelines for pharmacologic treatment of diabetic patients.[19, 20] However, subsequent randomized clinical trials have failed to establish a clear beneficial effect of intensive glucose control on primary cardiovascular endpoints among higher‐risk patients with longstanding type 2 diabetes,[21, 22, 23] and clinical practice recommendations now accept a more individualized approach to glycemic control.[24] Nonetheless, clinicians are also being held accountable for outpatient glucose control.[25]

To better understand the disproportionate reduction in mortality among hospitalized patients with diabetes that we observed, we first examined whether it was limited to surgical patients or patients in the ICU, the populations that have been demonstrated to benefit from intensive inpatient glucose control. Furthermore, given recent improvements in inpatient and outpatient glycemic control,[26, 27] we examined whether inpatient or outpatient glucose control explained the mortality trends. Results from this study contribute empirical evidence on real‐world effects of efforts to improve inpatient and outpatient glycemic control.

METHODS

Setting

During the study period, Yale–New Haven Hospital (YNHH) was an urban academic medical center in New Haven, Connecticut, with over 950 beds and an average of approximately 32,000 annual adult nonobstetric admissions. YNHH conducted a variety of inpatient glucose control initiatives during the study period. The surgical ICU began an informal medical team–directed insulin infusion protocol in 2000 to 2001. In 2002, the medical ICU instituted a formal insulin infusion protocol with a target of 100 to 140 mg/dL, which spread to remaining hospital ICUs by the end of 2003. In 2005, YNHH launched a consultative inpatient diabetes management team to assist clinicians in controlling glucose in non‐ICU patients with diabetes. This team covered approximately 10 to 15 patients at a time and consisted of an advanced‐practice nurse practitioner, a supervising endocrinologist and endocrinology fellow, and a nurse educator to provide diabetic teaching. Additionally, in 2005, basal‐bolus‐correction insulin order sets became available. The surgical ICU implemented a stringent insulin infusion protocol with target glucose of 80 to 110 mg/dL in 2006, but relaxed it (goal 80–150 mg/dL) in 2007. Similarly, in 2006, YNHH made ICU insulin infusion recommendations more stringent in remaining ICUs (goal 90–130 mg/dL), but relaxed them in 2010 (goal 120–160 mg/dL), based on emerging data from clinical trials and prevailing national guidelines.

Participants and Data Sources

We included all adult, nonobstetric discharges from YNHH between January 1, 2000 and December 31, 2010. Repeat visits by the same patient were linked by medical record number. We obtained data from YNHH administrative billing, laboratory, and point‐of‐care capillary blood glucose databases. The Yale Human Investigation Committee approved our study design and granted a Health Insurance Portability and Accountability Act waiver and a waiver of patient consent.

Variables

Our primary endpoint was in‐hospital mortality. The primary exposure of interest was whether a patient had diabetes mellitus, defined as the presence of International Classification of Diseases, Ninth Revision codes 249.x, 250.x, V4585, V5391, or V6546 in any of the primary or secondary diagnosis codes in the index admission, or in any hospital encounter in the year prior to the index admission.

We assessed 2 effect‐modifying variables: ICU status (as measured by a charge for at least 1 night in the ICU) and service assignment to surgery (including neurosurgery and orthopedics), compared to medicine (including neurology). Independent explanatory variables included time between the start of the study and patient admission (measured as days/365), diabetes status, inpatient glucose control, and long‐term glucose control (as measured by hemoglobin A1c at any time in the 180 days prior to hospital admission in order to have adequate sample size). We assessed inpatient blood glucose control through point‐of‐care blood glucose meters (OneTouch SureStep; LifeScan, Inc., Milpitas, CA) at YNHH. We used 4 validated measures of inpatient glucose control: the proportion of days in each hospitalization in which there was any hypoglycemic episode (blood glucose value <70 mg/dL), the proportion of days in which there was any severely hyperglycemic episode (blood glucose value >299 mg/dL), the proportion of days in which mean blood glucose was considered to be within adequate control (all blood glucose values between 70 and 179 mg/dL), and the standard deviation of mean glucose during hospitalization as a measure of glycemic variability.[28]
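As an illustration of how the 4 inpatient glucose control measures described above could be derived from point‐of‐care readings, the following is a minimal sketch rather than the study's actual code. The function name, the input layout (a list of day and glucose value pairs for one hospitalization), and the dictionary keys are hypothetical. It also assumes that glycemic variability is the sample standard deviation of daily mean glucose, which is one possible reading of the definition.

```python
from collections import defaultdict
from statistics import mean, stdev


def glucose_control_measures(readings):
    """Summarize one hospitalization.

    readings: list of (hospital_day, glucose_mg_dl) tuples (hypothetical layout).
    Returns the 4 measures as a dict; proportions are fractions of days
    that have at least one reading.
    """
    by_day = defaultdict(list)
    for day, value in readings:
        by_day[day].append(value)

    n_days = len(by_day)
    if n_days == 0:
        raise ValueError("no glucose readings supplied")

    # Day counts using the thresholds stated in the text.
    hypo_days = sum(1 for vals in by_day.values() if any(v < 70 for v in vals))
    hyper_days = sum(1 for vals in by_day.values() if any(v > 299 for v in vals))
    controlled_days = sum(
        1 for vals in by_day.values() if all(70 <= v <= 179 for v in vals)
    )

    # Assumption: variability = SD of the daily mean glucose values.
    daily_means = [mean(vals) for vals in by_day.values()]
    variability = stdev(daily_means) if len(daily_means) > 1 else 0.0

    return {
        "hypoglycemic_day_fraction": hypo_days / n_days,
        "severe_hyperglycemic_day_fraction": hyper_days / n_days,
        "controlled_day_fraction": controlled_days / n_days,
        "glucose_sd": variability,
    }


example = glucose_control_measures(
    [(1, 65), (1, 120), (2, 150), (2, 170), (3, 310), (3, 200), (4, 100)]
)
print(example)
```

In this made‐up example, day 1 counts as hypoglycemic, day 3 as severely hyperglycemic, and days 2 and 4 as adequately controlled.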

Covariates included gender, age at time of admission, length of stay in days, race (defined by hospital registration), payer, Elixhauser comorbidity dummy variables (revised to exclude diabetes and to use only secondary diagnosis codes),[29] and primary discharge diagnosis grouped using Clinical Classifications Software,[30] based on established associations with in‐hospital mortality.

Statistical Analysis

We summarized demographic characteristics numerically and graphically for patients with and without diabetes and compared them using χ² and t tests. We summarized changes in inpatient and outpatient measures of glucose control over time numerically and graphically, and compared across years using the Wilcoxon rank sum test adjusted for multiple hypothesis testing.

We stratified all analyses first by ICU status and then by service assignment (medicine vs surgery). Statistical analyses within each stratum paralleled our previous approach to the full study cohort.[10] Taking each stratum separately (ie, only ICU patients or only medicine patients), we used a difference‐in‐differences approach comparing changes over time in in‐hospital mortality among patients with diabetes compared to those without diabetes. This approach enabled us to determine whether patients with diabetes had a different time trend in risk of in‐hospital mortality than those without diabetes. That is, for each stratum, we constructed multivariate logistic regression models including time in years, diabetes status, and the interaction between time and diabetes status as well as the aforementioned covariates. We calculated odds of death and confidence intervals for each additional year for patients with diabetes by exponentiating the sum of parameter estimates for time and the diabetes‐time interaction term. We evaluated all 2‐way interactions between year or diabetes status and the covariates in a multiple degree of freedom likelihood ratio test. We investigated nonlinearity of the relation between mortality and time by evaluating first and second‐order polynomials.
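The difference‐in‐differences model described above can be written out as follows. This is a sketch of the general form implied by the text, not a transcription of the fitted model; the symbols ($t_i$ for time in years, $D_i$ for diabetes status, $X_i$ for the covariate vector, and the coefficient names) are notation introduced here for illustration.

```latex
\begin{align*}
\operatorname{logit}\,\Pr(\text{death}_i)
  &= \beta_0 + \beta_1 t_i + \beta_2 D_i + \beta_3\,(t_i \times D_i)
     + \boldsymbol{\gamma}^{\top} X_i \\[4pt]
\text{OR per year, no diabetes} &= e^{\beta_1} \\
\text{OR per year, diabetes}    &= e^{\beta_1 + \beta_3} \\
\text{Ratio of the two yearly ORs} &= e^{\beta_3}
\end{align*}
```

Under this form, the interaction coefficient $\beta_3$ carries the difference in time trends: a value of $e^{\beta_3}$ below 1 means the odds of in‐hospital death fell faster per year among patients with diabetes than among those without.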

Because we found a significant decline in mortality risk for patients with versus without diabetes among ICU patients but not among non‐ICU patients, and because service assignment was not found to be an effect modifier, we then limited our sample to ICU patients with diabetes to better understand the role of inpatient and outpatient glucose control in accounting for observed mortality trends. First, we determined the relation between the measures of inpatient glucose control and changes in mortality over time using logistic regression. Then, we repeated this analysis in the subsets of patients who had inpatient glucose data and both inpatient and outpatient glycemic control data, adding inpatient and outpatient measures sequentially. Given the high level of missing outpatient glycemic control data, we compared demographic characteristics for diabetic ICU patients with and without such data using χ² and t tests, and found that patients with data were younger and less likely to be white and had longer mean length of stay, slightly worse performance on several measures of inpatient glucose control, and lower mortality (see Supporting Table 1 in the online version of this article).

| Characteristic | Overall, N=322,939 | Any ICU Stay, N=54,646 | No ICU Stay, N=268,293 | Medical Service, N=196,325 | Surgical Service, N=126,614 |
|---|---|---|---|---|---|
| Died during admission, n (%) | 7,587 (2.3) | 5,439 (10.0) | 2,147 (0.8) | 5,705 (2.9) | 1,883 (1.5) |
| Diabetes, n (%) | 76,758 (23.8) | 14,364 (26.3) | 62,394 (23.2) | 55,453 (28.2) | 21,305 (16.8) |
| Age, y, mean (SD) | 55.5 (20.0) | 61.0 (17.0) | 54.4 (21.7) | 60.3 (18.9) | 48.0 (23.8) |
| Age, y, full range (interquartile range) | 0–118 (42–73) | 18–112 (49–75) | 0–118 (40–72) | 0–118 (47–76) | 0–111 (32–66) |
| Female, n (%) | 159,227 (49.3) | 23,208 (42.5) | 134,296 (50.1) | 99,805 (50.8) | 59,422 (46.9) |
| White race, n (%) | 226,586 (70.2) | 41,982 (76.8) | 184,604 (68.8) | 132,749 (67.6) | 93,838 (74.1) |
| Insurance, n (%) | | | | | |
| Medicaid | 54,590 (16.9) | 7,222 (13.2) | 47,378 (17.7) | 35,229 (17.9) | 19,361 (15.3) |
| Medicare | 141,638 (43.9) | 27,458 (50.2) | 114,180 (42.6) | 100,615 (51.2) | 41,023 (32.4) |
| Commercial | 113,013 (35.0) | 18,248 (33.4) | 94,765 (35.3) | 53,510 (27.2) | 59,503 (47.0) |
| Uninsured | 13,521 (4.2) | 1,688 (3.1) | 11,833 (4.4) | 6,878 (3.5) | 6,643 (5.2) |
| Length of stay, d, mean (SD) | 5.4 (9.5) | 11.8 (17.8) | 4.2 (6.2) | 5.46 (10.52) | 5.42 (9.75) |
| Service, n (%) | | | | | |
| Medicine | 184,495 (57.1) | 27,190 (49.8) | 157,305 (58.6) | 184,496 (94.0) | |
| Surgery | 126,614 (39.2) | 25,602 (46.9) | 101,012 (37.7) | | 126,614 (100) |
| Neurology | 11,829 (3.7) | 1,853 (3.4) | 9,976 (3.7) | 11,829 (6.0) | |

To explore the effects of dependence among observations from patients with multiple encounters, we compared parameter estimates derived from a model with all patient encounters (including repeated admissions for the same patient) with those from a model with a randomly sampled single visit per patient, and observed that there was no difference in parameter estimates between the 2 classes of models. For all analyses, we used a type I error of 5% (2 sided) to test for statistical significance using SAS version 9.3 (SAS Institute, Cary, NC) or R software (

RESULTS

We included 322,938 patient admissions. Of this sample, 54,645 (16.9%) had spent at least 1 night in the ICU. Overall, 76,758 patients (23.8%) had diabetes, representing 26.3% of ICU patients, 23.2% of non‐ICU patients, 28.2% of medical patients, and 16.8% of surgical patients (see Table 1 for demographic characteristics).

Mortality Trends Within Strata

Among ICU patients, the overall mortality rate was 9.9%: 10.5% of patients with diabetes and 9.8% of patients without diabetes. Among non‐ICU patients, the overall mortality rate was 0.8%: 0.9% of patients with diabetes and 0.7% of patients without diabetes.

Among medical patients, the overall mortality rate was 2.9%: 3.1% of patients with diabetes and 2.8% of patients without diabetes. Among surgical patients, the overall mortality rate was 1.4%: 1.8% of patients with diabetes and 1.4% of patients without diabetes. Figure 1 shows quarterly in‐hospital mortality for patients with and without diabetes from 2000 to 2010 stratified by ICU status and by service assignment.

Table 2 describes the difference‐in‐differences regression analyses, stratified by ICU status and service assignment. Among ICU patients (Table 2, model 1), each successive year was associated with a 2.6% relative reduction in the adjusted odds of mortality (odds ratio [OR]: 0.974, 95% confidence interval [CI]: 0.963‐0.985) for patients without diabetes compared to a 7.8% relative reduction for those with diabetes (OR: 0.923, 95% CI: 0.906‐0.940). In other words, patients with diabetes compared to patients without diabetes had a significantly greater decline in odds of adjusted mortality of 5.3% per year (OR: 0.947, 95% CI: 0.927‐0.967). As a result, the adjusted odds of mortality among patients with versus without diabetes decreased from 1.352 in 2000 to 0.772 in 2010.
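The arithmetic behind these figures can be checked from the rounded odds ratios reported for ICU patients. The sketch below is illustrative only: the function names are hypothetical, and it assumes that 2010 corresponds to 10 years after the 2000 baseline. Because it starts from rounded estimates, it reproduces the reported values only approximately (the yearly odds ratio for patients with diabetes comes out near 0.922 rather than 0.923, and the 2010 relative odds near 0.78 rather than 0.772).

```python
import math


def combine_odds_ratios(or_time, or_interaction):
    """Exponentiate the sum of the two log odds ratios (parameter estimates)."""
    return math.exp(math.log(or_time) + math.log(or_interaction))


def relative_odds_after(baseline_or, or_interaction, years):
    """Diabetes-vs-no-diabetes odds ratio after `years` of the interaction trend."""
    return baseline_or * or_interaction ** years


# Rounded ICU estimates quoted in the text (model 1).
OR_YEAR = 0.974           # per-year OR, patients without diabetes
OR_DIABETES_2000 = 1.352  # diabetes vs no diabetes at baseline
OR_INTERACTION = 0.947    # diabetes*year

# Per-year OR for patients with diabetes.
or_year_diabetes = combine_odds_ratios(OR_YEAR, OR_INTERACTION)

# Assumption: 2010 is treated as 10 years after baseline.
or_diabetes_2010 = relative_odds_after(OR_DIABETES_2000, OR_INTERACTION, 10)

print(f"Yearly OR with diabetes: {or_year_diabetes:.3f}")
print(f"Relative reduction per year: {(1 - or_year_diabetes) * 100:.1f}%")
print(f"Diabetes vs no diabetes OR in 2010: {or_diabetes_2010:.3f}")
```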

| Independent Variables | Model 1: ICU Patients, N=54,646, OR (95% CI) | Model 2: Non‐ICU Patients, N=268,293, OR (95% CI) | Model 3: Medical Patients, N=196,325, OR (95% CI) | Model 4: Surgical Patients, N=126,614, OR (95% CI) |
|---|---|---|---|---|
| Year | 0.974 (0.963‐0.985) | 0.925 (0.909‐0.940) | 0.943 (0.933‐0.954) | 0.995 (0.977‐1.103) |
| Diabetes | 1.352 (1.171‐1.562) | 0.958 (0.783‐1.173) | 1.186 (1.037‐1.356) | 1.213 (0.942‐1.563) |
| Diabetes*year | 0.947 (0.927‐0.967) | 0.977 (0.946‐1.008) | 0.961 (0.942‐0.980) | 0.955 (0.918‐0.994) |
| C statistic | 0.812 | 0.907 | 0.880 | 0.919 |