
Interhospital Transfer Handoff Practices

Transitions of care are major sources of preventable medical errors. Incomplete or inaccurate communication during handoffs is the root cause of many adverse events.[1] In a prospective study, adverse events were found to occur during interhospital transfer up to 30% of the time.[2] Furthermore, patients subject to interhospital transfer have longer length of stay and higher inpatient mortality, even after adjusting for mortality risk predictors.[3] Standardizing intrahospital handoff structures and communication practices has been shown to reduce medical errors.[4, 5, 6] Interhospital transfer is an understudied area among the transitions of care literature. Little is known about institutional variations in the process of information transfer and its association with patient outcomes. Although it is challenging to ascertain the total burden of transferred patients, it has been estimated that 1.6 million inpatients originated at another facility.[7] Additionally, approximately 5.9% of admissions to a representative sample of US intensive care units (ICU) originated from other hospitals.[8] Patients are transferred between hospitals for multiple reasons beyond medical necessity, for example, to adjust for patient preferences, bed availability, and hospital staffing patterns. This creates a setting in which complex and often critically ill patients are subject to variable and sometimes ambiguous handoff processes.[9]

This survey of 32 tertiary care centers in the United States was undertaken to identify common practices in communication and documentation during interhospital patient transfers. Additional goals were to understand the structure of the handoff process, the role of the transfer center, and how electronic medical records (EMR) and interhospital communication play a role in this care transition. Subsequently, common challenges in coordinating interhospital transfers were identified to provide a conceptual framework for process improvement.

METHODS

Survey Process

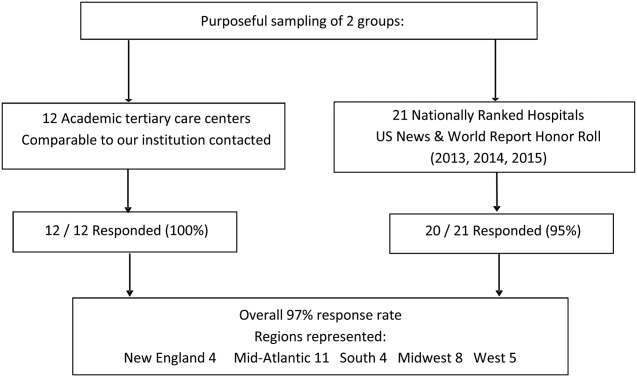

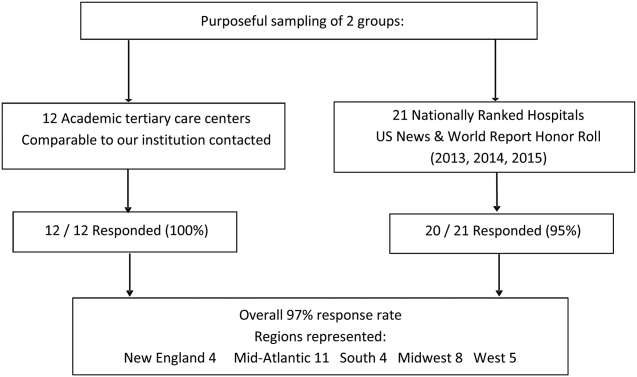

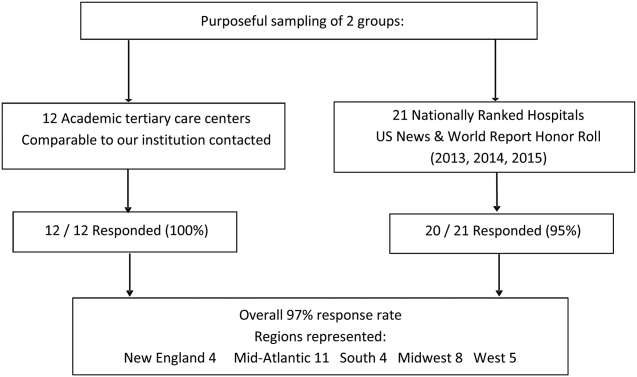

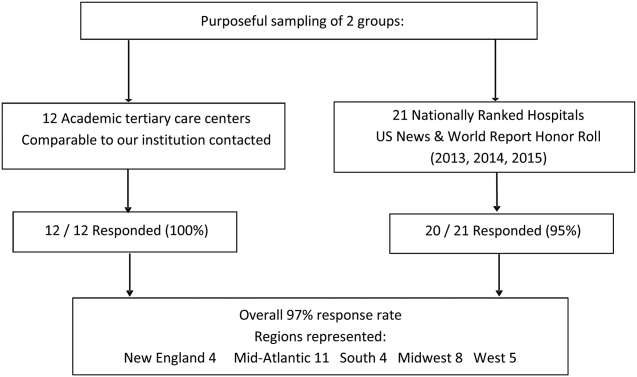

The survey was initiated in September 2013 and concluded in September 2015, and was designed to quantify patient volume and identify common as well as unique practices to improve communication across the transfer process. The respondents were transfer center directors or managers, typically with a nursing background. Mass e‐mail generated a very poor response rate and did not allow for discussion and clarification of responses. The strategy was then modified to contact individual institutions directly. The survey was performed via phone whenever possible. Figure 1 represents purposeful sampling conducted on 2 different groups of hospitals. These hospitals represent a convenience sample of institutions from a nationally ranked list of hospitals as well as others comparable to our own institutions. Hospitals were selected based on status as academic tertiary care centers with roughly similar bed sizes (600). Several were selected based on similar EMR capabilities. Geographic diversity was also taken into account. Thirty‐two academic tertiary care centers were ultimately included in the survey. Data were entered into a survey form and deidentified. The Rutgers–Robert Wood Johnson Medical School Institutional Review Board approved this study.

Survey Content

Qualitative and quantitative data were collected by the study team. Data included number and origin of transfers (including those from inpatient facilities and emergency departments), staff characteristics, transfer process, documentation received prior to transfer, EMR access and type, outcomes, and clinical status tracking (see Supporting Figure 1 in the online version of this article for the complete survey tool).

Measurement and Data Analysis

Descriptive statistics are presented in unweighted fashion as a number and percentage for dichotomous variables, or a numeric range for ordinal variables. When a range was given by survey participants, the lower end of the range was used to calculate the population median. Several institutions surveyed were unable to provide specific numeric values, but instead cited how many requests for transfer they received either daily or monthly; these were omitted from the demographics analysis.
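The lower-bound rule described above can be illustrated with a minimal sketch. The function names and the example responses are hypothetical and are not the study data; the only rule taken from the text is that a reported range contributes its lower end and that responses without a usable monthly figure are omitted.

```python
from statistics import median

def monthly_volume(response):
    """Convert one survey response into a monthly transfer volume.

    A point estimate is used as given. A (low, high) range contributes
    its lower end. None marks a response with no usable monthly figure.
    """
    if response is None:
        return None
    if isinstance(response, tuple):
        low, _high = response
        return low
    return response

def median_monthly_volume(responses):
    """Median over usable responses, omitting those without a figure."""
    values = [v for v in map(monthly_volume, responses) if v is not None]
    return median(values)

# Hypothetical responses for illustration only
example_responses = [700, (250, 400), (1000, 1500), None, 2500]
example_median = median_monthly_volume(example_responses)
```

Because the lower end of each range is used, a median computed this way is a conservative estimate of transfer volume.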

Respondents also provided a description of their overall triage and acceptance process for qualitative analysis. Unique strategies were identified by the study personnel at the time of each interview and amassed at the end of the interview period. These strategies were then discussed by the study team, and separated into categories that addressed the main challenges associated with interhospital transfers. Five general tenets of the transfer process were identified: acceptance and transport, need for clinical updates, provider handoffs and coordination of care, information availability, and feedback.

RESULTS

Based on a survey question asking respondents to estimate the total number of interhospital transfers received per month, the annual burden of patients transferred into these 32 hospitals represented approximately 247,000 patients yearly. The median number of patients transferred per month, based on a point estimate if given or the lower end of the range if a range was provided, was 700 (range, 250–2500). On average, 28% (range, 10%–50%) were transferred directly to an ICU, representing approximately 69,000 critically ill patients. A majority of hospitals polled (65%) received patients from more than 100 referring institutions, and a minority (23%) identified EMR interoperability for more than a quarter of the sending facilities. The overall acceptance rate ranged from 50% to 95%.
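The ICU estimate can be checked with simple arithmetic. The sketch below assumes, as a simplification, that the unweighted 28% average applies to the pooled annual volume; the variable names are illustrative, and only the two input figures come from the text.

```python
annual_transfers = 247_000   # approximate yearly transfers reported in the text
icu_fraction = 0.28          # average share transferred directly to an ICU

# Assumption: the unweighted average share is applied to the pooled total
icu_transfers = annual_transfers * icu_fraction

# Round to the nearest thousand, matching the precision used in the text
icu_transfers_rounded = round(icu_transfers, -3)
```

The product is about 69,160, which rounds to the approximately 69,000 patients cited.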

Table 1 represents common transition elements of participating institutions. Thirty‐eight percent of hospitals utilize a critical care–trained registered nurse as the initial triage point of contact. The process and quality controls for coordinating transfers from outside hospitals were highly variable. Although clinical updates from acceptance to arrival were required in a majority of hospitals (81%), the acceptable time interval was inconsistent, varying from 2 to 4 hours (13%) to 24 hours (38%). A mandatory 3‐way recorded discussion (between transfer center staff, and referring and accepting physician) was nearly uniform. Objective clinical information to assist the handoff (ie, current labs, radiology images, history and physical, progress notes, or discharge summary) was available in only 29% of hospitals. Only 23% of hospitals also recorded a 3‐way nursing handoff (bedside‐to‐bedside nursing report). A minority of hospitals utilized their principal EMR to document the transfer process and share incoming clinical information among providers (32%).

| Survey Question | Survey Response | N (%) |
|---|---|---|
| What is the training background of the staff member who takes the initial call and triages patients in your transfer center? | Critical care experienced RN | 12/32 (38%) |
| | Other clinical background (EMT, RN) | 13/32 (41%) |
| | Nonclinical personnel | 7/32 (22%) |
| Prior to the patient's arrival, do you require any documentation to be transmitted from the transferring institution? | Objective clinical data required | 9/32 (28%) |
| | Objective clinical data not required | 23/32 (72%) |
| Is a 3‐way recorded conversation facilitated by the transfer center required? | Initial physician‐to‐physician acceptance discussion | 27/32 (84%) |
| | RN‐to‐RN report | 6/26 (23%) |
| Are clinical status updates required? | Updates required every 24 hours | 12/32 (38%) |
| | Updates required every 8–12 hours | 7/32 (22%) |
| | Updates required every 2–4 hours | 4/32 (13%) |
| | Updates required but timing not specified | 3/32 (9%) |
| | Clinical status updates not required | 6/32 (19%) |
| Is any clinical information obtained by the transfer center available to the patient's providers in real time on your EMR system? | Yes | 10/31 (32%) |
| | No | 21/31 (68%) |
| Do you track the outcomes of patients you accept from outside hospitals? | Yes | 14/24 (58%) |
| | No | 10/24 (42%) |

Descriptions of the transfer process were conceptually evaluated by the study team, then divided into 5 common themes: acceptance and transport, clinical updates, coordination of care, information availability, and quality improvement (Table 2). Institutions devised novel approaches including providing high bed priority to expedite transit, a dedicated quarterback physician to coordinate safe transfer and uninterrupted communication, electronic transfer notes to share communication with all providers, and a standardized system of feedback to referring hospitals. Several institutions relied on an expect note, which could be a free‐text document or a form document in the EMR. This preserves verbal handoff information that may otherwise be lost if the accepting physician at the time of transfer is not the physician receiving the handoff.

| Challenges | Innovative Practices |
|---|---|
| Expedited acceptance and transport | Automatic acceptance for certain diagnoses (ie, neurosurgical indication for transfer) |
| | Transferred patients prioritized for hospital beds over all patients except codes |
| | Hospital controls transportation units, allowing for immediate dispatch and patient retrieval |
| | Outsourcing of transfer center and interfacility transfer to third party |
| Timeliness of clinical updates | Transfer center communicates with bedside RN for clinical updates at the time of transfer |
| | Clinical status updates every 24 hours for critical patients |
| | Daily reevaluation of clinical status |
| | Accepting physician alerted of changes in clinical status |
| Handoff and coordination of care | Physician accept tool in EMR |
| | Quarterback physician who triages and accepts all patients during a given time period |
| | Critical patients are accepted into a critical care resuscitation unit, an all‐purpose intensive care unit staffed by an intensivist who shares decision making with the referring provider and is involved in all communications regarding the transferred patient |
| Availability of protected clinical information | Scribed physician handoff imported into EMR |
| | Expect note in EMR: summary of clinical information documented by accepting physician |
| | PACS radiology cloud networks for hospital systems or statewide |
| | EMR interoperability: Care Everywhere module in Epic EMR |
| | Health and information management department responsible for obtaining and scanning outside records into EMR |
| Feedback and quality improvement | Automatic review if patient upgraded to ICU within 4 hours of arrival |
| | Departmental chair review of physician verbal handoff if poor outcome or difficulty with transfer |
| | Outcomes and quality of handoff reported back to referring hospital |
| | Discharge summary sent to referring hospital |
| | Referring hospital able to view patient's chart for 1 year |

Quality improvement occurred via both internal and external feedback at several institutions. There were two notable mechanisms of internal feedback. Review of recorded physician verbal handoff by department chair occurred if an adverse event involved a transferred patient. An automatic internal review was triggered if a patient was upgraded to a higher level of care within 4 hours of arrival. These advanced mechanisms require vigilance and dedication on the part of the transfer center and physicians involved in the transfer process. External feedback was provided to referring hospitals through both active and passive mechanisms. One advanced health system allowed referring providers to access the patient's inpatient medical record for 1 year and sent a discharge summary to all referring hospitals. Another hospital maintained a sophisticated scorecard, with key measures shared with internal stakeholders and referring hospitals. Some of the metrics tracked included: denials due to insufficient bed capacity, change in bed status within 12 hours of transfer, and duration of stay in the postanesthesia care unit or emergency department awaiting an inpatient bed. This organization also performed site visits to referring hospitals, addressing handoff quality improvement.
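The automatic internal review described above amounts to a simple time-window rule. The following is a minimal sketch under stated assumptions: the function and constant names are hypothetical, the 4-hour threshold comes from the text, and treating the boundary as inclusive is an assumption, since the survey responses do not specify it.

```python
from datetime import datetime, timedelta

# Threshold reported in the text; the inclusive boundary is an assumption
REVIEW_WINDOW = timedelta(hours=4)

def needs_automatic_review(arrival, upgrade):
    """Return True if the patient was upgraded to a higher level of care
    within REVIEW_WINDOW after arrival.

    `upgrade` is None when no upgrade occurred.
    """
    if upgrade is None:
        return False
    elapsed = upgrade - arrival
    return timedelta(0) <= elapsed <= REVIEW_WINDOW

# Hypothetical timestamps for illustration only
arrival_time = datetime(2015, 1, 1, 8, 0)
early_upgrade = needs_automatic_review(arrival_time, arrival_time + timedelta(hours=3))
late_upgrade = needs_automatic_review(arrival_time, arrival_time + timedelta(hours=5))
```

A rule of this kind could run against admission and transfer timestamps already held in the EMR, though how each institution implemented its trigger was not reported.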

DISCUSSION

Standardizing intrahospital handoffs has been shown to decrease preventable medical errors and reduce possible near‐miss events.[6, 10] Interhospital care transitions are inherently more complex due to increased acuity and decreased continuity; yet, there is no universal standardization of these handovers. We found that practices vary widely among tertiary care centers, and the level of transfer center involvement in the verbal and written handoff is inconsistent.

Evidence‐based frameworks to improve healthcare delivery, such as TeamSTEPPS (Team Strategies and Tools to Enhance Performance and Patient Safety), first require an organizational assessment to identify barriers to effective communication.[11] Interhospital transfers offer multiple unique barriers to continuity: physical distance, uncertainty in timing, incongruent treatment goals, disparate information sources, and distractions. This study provides the first step in conceptualizing the unique aspects of interhospital transfers, as well as highlights strategies to improve care coordination (Table 2).

A tailored intervention needs not only to overcome the typical barriers to handoffs such as time constraints, information sharing, and ambiguity in provider roles, but also to overcome multiple systems barriers. Bed management systems add another time‐related variable due to fixed and frequently overburdened bed capacity. Prioritization of transfers depends upon an accurate clinical depiction of patient acuity as well as organizational strategies. For example, neurologic diagnoses are commonly a top priority and are triaged as such, sometimes instead of higher‐acuity patients with other principal diagnoses. The complexity of this process may lead to delays in high‐acuity transfers, and is contingent upon accurate and updated clinical information. Coordinating handovers amidst complex provider schedules is another systems barrier. The commonly adopted 7 on, 7 off model for hospitalists, and shift work for intensivists, may increase the possibility that a transfer occurs across multiple provider changes. Patient follow‐up and closed‐loop feedback are important components of intrahospital handovers, but are much more challenging to implement for interhospital handovers with incongruent information systems and providers.

Programs to improve intrahospital handovers (eg, IPASS) emphasize creating an accurate clinical depiction of a patient using both verbal and written handoffs.[12] This is arguably more difficult over the phone without a concurrent written handoff. Recording of 3‐way physician and nurse handoffs is common, but reviews of recorded conversations are often unavailable or cumbersome in real time. EMR documentation of verbal information exchanged during the handoff is a possible solution. However, there may be legal implications for a transcribed verbal handoff. Furthermore, transfer centers often work with a software program separate from the principal EMR, and documentation in real time is challenging. EMR integration could help reinforce a patient‐centered shared mental model by allowing visualization of lab trends, radiology, vitals, and other documentation during and after the verbal handoff.

Physician‐driven checklist accept tools are another solution. Usually the responsibility of the accepting attending or fellow, this type of document is most useful as a modifiable document in the EMR. Accept tools, such as the one created by Malpass et al., have demonstrated successful shared decision making, and have resulted in fewer emergent procedures and fewer antibiotic changes on arrival.[13] One of the challenges with this approach is the frequency of utilization. In the aforementioned study, the adoption rate of the accept tool was about 70% in a closed university medical ICU, where these types of interventions may be viewed by providers as favorable rather than burdensome.[13]

The most consistent finding of this survey was the lack of common processes to improve outcomes. Simple interventions, such as regular clinical updates, documentation of the handoff process, and obtaining objective information early in the process, were inconsistently adopted. Outcomes tracking and feedback are necessary components of team‐based quality improvement. Approximately half of the hospitals surveyed specifically tracked outcomes of transferred patients, and a minority had systems in place to provide feedback to referring centers.

Improving care delivery requires buy‐in from all participants, necessitating engagement of referring hospitals. Interventions such as frequent status updates and providing early documentation have the potential to increase the burden on referring providers when feedback or incentives are not commonplace. Moreover, the referring provider has the option of transferring a patient to a hospital with reduced handoff requirements, creating a disincentive for quality improvement. Quality metrics that incorporate outcomes of transferred patients may be necessary to better align the goals of sending and receiving physicians.

This study was intended to be a qualitative investigation and has some limitations. Any verbal qualitative study has the possibility of misinterpretation of information given by transfer center personnel. A single investigator performed most of the discussions and was able to clarify when needed, which provided a degree of consistency but may also have been a source of bias. Categorical answers and a team‐based approach to conceptualizing responses likely minimized this potential bias.

We selected hospitals from the U.S. News and World Report Honor Roll plus additional hospitals chosen based on similarity to our home institutions. This may be a skewed sample and may not represent other major US hospitals and networks. However, we chose to interview large academic tertiary care centers, many accepting more than 1000 patients monthly, as these are likely to be the most proficient at performing transfers, and responses may be generalizable.

CONCLUSIONS

Standardization of information exchange during interhospital transfers does not currently exist. Practices vary widely amongst academic tertiary care centers. There is a paucity of data to support the association of specific processes with patient outcomes. Ultimately, a multicenter study examining the impact of improved information transfer on patient outcomes is warranted, utilizing tracking resources already in place. Optimizing and aligning practices between sending and receiving hospitals may improve interhospital handover efficiency and patient safety.

Disclosures

Dr. Usher is supported by a National Institutes of Health Clinical and Translational Science Award at the University of Minnesota: UL1TR000114. Dr. Steinberg has received support from Arena Pharmaceuticals and Major League Baseball. Drs. Herrigel, Parikh, Fanning, and Carroll have no disclosures. A prior version of this article was presented as an abstract at the Society of General Internal Medicine Mid‐Atlantic Regional Meeting in April 2014 in New York, New York.

References

1. Doctors' handovers in hospitals: a literature review. BMJ Qual Saf. 2011;20(2):128–133.
2. Quality of inter‐hospital transport of critically ill patients: a prospective audit. Crit Care. 2005;9(4):R446–R451.
3. Interhospital transfer patients discharged by academic hospitalists and general internists: characteristics and outcomes [published online November 20, 2015]. J Hosp Med. doi: 10.1002/jhm.2515.
4. Evaluation of postoperative handover using a tool to assess information transfer and teamwork. Ann Surg. 2011;253(4):831–837.
5. Rates of medical errors and preventable adverse events among hospitalized children following implementation of a resident handoff bundle. JAMA. 2013;310(21):2262–2270.
6. Changes in medical errors after implementation of a handoff program. N Engl J Med. 2014;371(19):1803–1812.
7. HCUP National Inpatient Sample (NIS). Healthcare Cost and Utilization Project (HCUP). 2012. Agency for Healthcare Research and Quality, Rockville, MD. Available at: www.hcup-us.ahrq.gov/nisoverview.jsp. Accessed 26 May 2015.
8. Outcomes among patients discharged from busy intensive care units. Ann Intern Med. 2013;159(7):447–455.
9. Reasons underlying inter‐hospital transfers to an academic medical intensive care unit. J Crit Care. 2013;28(2):202–208.
10. Avoiding handover fumbles: a controlled trial of a structured handover tool versus traditional handover methods. BMJ Qual Saf. 2012;21(11):925–932.
11. Validation of a teamwork perceptions measure to increase patient safety. BMJ Qual Saf. 2014;23(9):718–726.
12. Development, implementation, and dissemination of the I‐PASS handoff curriculum: a multisite educational intervention to improve patient handoffs. Acad Med. 2014;89(6):876–884.
13. The interhospital medical intensive care unit transfer instrument facilitates early implementation of critical therapies and is associated with fewer emergent procedures upon arrival. J Intensive Care Med. 2015;30(6):351–357.

Transitions of care are major sources of preventable medical errors. Incomplete or inaccurate communication during handoffs is the root cause of many adverse events.[1] In a prospective study, adverse events were found to occur during interhospital transfer up to 30% of the time.[2] Furthermore, patients subject to interhospital transfer have longer length of stay and higher inpatient mortality, even after adjusting for mortality risk predictors.[3] Standardizing intrahospital handoff structures and communication practices has been shown to reduce medical errors.[4, 5, 6] Interhospital transfer is an understudied area among the transitions of care literature. Little is known about institutional variations in the process of information transfer and its association with patient outcomes. Although it is challenging to ascertain the total burden of transferred patients, it has been estimated that 1.6 million inpatients originated at another facility.[7] Additionally, approximately 5.9% of admissions to a representative sample of US intensive care units (ICU) originated from other hospitals.[8] Patients are transferred between hospitals for multiple reasons beyond medical necessity, for example, to adjust for patient preferences, bed availability, and hospital staffing patterns. This creates a setting in which complex and often critically ill patients are subject to variable and sometimes ambiguous handoff processes.[9]

This survey of 32 tertiary care centers in the United States was undertaken to identify common practices in communication and documentation during interhospital patient transfers. Additional goals were to understand the structure of the handoff process, the role of the transfer center, and how electronic medical records (EMR) and interhospital communication play a role in this care transition. Subsequently, common challenges in coordinating interhospital transfers were identified to provide a conceptual framework for process improvement.

METHODS

Survey Process

The survey was initiated in September 2013 and concluded in September 2015, and was designed to quantify patient volume and identify common as well as unique practices to improve communication across the transfer process. The respondents were transfer center directors or managers, typically with a nursing background. Mass e‐mail generated a very poor response rate and did not allow for discussion and clarification of responses. The strategy was then modified to contact individual institutions directly. The survey was performed via phone whenever possible. Figure 1 represents purposeful sampling conducted on 2 different groups of hospitals. These hospitals represent a convenience sample of institutions from a nationally ranked list of hospitals as well as others comparable to our own institutions. Hospitals were selected based on status as academic tertiary care centers with roughly similar bed sizes (600). Several were selected based on similar EMR capabilities. Geographic diversity was also taken into account. Thirty‐two academic tertiary care centers were ultimately included in the survey. Data were entered into a survey form and deidentified. The RutgersRobert Wood Johnson Medical School Institutional Review Board approved this study.

Survey Content

Qualitative and quantitative data were collected by the study team. Data included number and origin of transfers (including those from inpatient facilities and emergency departments), staff characteristics, transfer process, documentation received prior to transfer, EMR access and type, outcomes, and clinical status tracking (see Supporting Figure 1 in the online version of this article for the complete survey tool).

Measurement and Data Analysis

Descriptive statistics are presented in unweighted fashion as a number and percentage for dichotomous variables, or a numeric range for ordinal variables. When a range was given by survey participants, the lower end of the range was used to calculate the population median. Several institutions surveyed were unable to provide specific numeric values, but instead cited how many requests for transfer they received either daily or monthly; these were omitted from the demographics analysis.

Respondents also provided a description of their overall triage and acceptance process for qualitative analysis. Unique strategies were identified by the study personnel at the time of each interview and amassed at the end of the interview period. These strategies were then discussed by the study team, and separated into categories that addressed the main challenges associated with interhospital transfers. Five general tenants of the transfer process were identified: acceptance and transport, need for clinical updates, provider handoffs and coordination of care, information availability, and feedback.

RESULTS

Based on a survey question asking respondents to estimate the total number of interhospital transfers received per month, the annual burden of patients transferred into these 32 hospitals represented approximately 247,000 patients yearly. The median number of patients transferred per month, based on a point estimate if given or the lower end of the range if a range was provided, was 700 (range, 2502500). On average, 28% (range, 10%50%) were transferred directly to an ICU, representing approximately 69,000 critically ill patients. A majority of hospitals polled (65%) received patients from more than 100 referring institutions, and a minority (23%) identified EMR interoperability for more than a quarter of the sending facilities. The overall acceptance rate ranged from 50% to 95%.

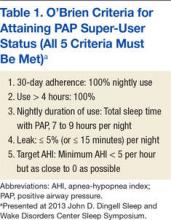

Table 1 represents common transition elements of participating institutions. Thirty‐eight percent of hospitals utilize a critical caretrained registered nurse as the initial triage point of contact. The process and quality controls for coordinating transfers from outside hospitals were highly variable. Although clinical updates from acceptance to arrival were required in a majority of hospitals (81%), the acceptable time interval was inconsistent, varying from 2 to 4 hours (13%) to 24 hours (38%). A mandatory 3‐way recorded discussion (between transfer center staff, and referring and accepting physician) was nearly uniform. Objective clinical information to assist the handoff (ie, current labs, radiology images, history and physical, progress notes, or discharge summary) was available in only 29% of hospitals. Only 23% of hospitals also recorded a 3‐way nursing handoff (bedside‐to‐bedside nursing report). A minority of hospitals utilized their principal EMR to document the transfer process and share incoming clinical information among providers (32%).

| Survey Question | Survey Response | N (%) |

|---|---|---|

| ||

| What is the training background of the staff member who takes the initial call and triages patients in your transfer center? | Critical care experienced RN | 12/32 (38%) |

| Other clinical background (EMT, RN) | 13/32 (41%) | |

| Nonclinical personnel | 7/32 (22%) | |

| Prior to the patient's arrival, do you require any documentation to be transmitted from the transferring institution? | Objective clinical data required | 9/32 (28%) |

| Objective clinical data not required | 23/32 (72%) | |

| Is a 3‐way recorded conversation facilitated by the transfer center required? | Initial physician‐to‐physician acceptance discussion | 27/32 (84%) |

| RN‐to‐RN report | 6/26 (23%) | |

| Are clinical status updates required? | Updates required every 24 hours | 12/32 (38%) |

| Updates required every 812 hours | 7/32 (22%) | |

| Updates required every 24 hours | 4/32 (13%) | |

| Updates required but timing not specified | 3/32 (9%) | |

| Clinical status updates not required | 6/32 (19%) | |

| Is any clinical information obtained by the transfer center available to the patient's providers in real time on your EMR system? | Yes | 10/31 (32%) |

| No | 21/31 (68%) | |

| Do you track the outcomes of patients you accept from outside hospitals? | Yes | 14/24 (58%) |

| No | 10/24 (42%) | |

Descriptions of the transfer process were conceptually evaluated by the study team, then divided into 5 common themes: acceptance and transport, clinical updates, coordination of care, information availability, and quality improvement (Table 2). Institutions devised novel approaches including providing high bed priority to expedite transit, a dedicated quarterback physician to coordinate safe transfer and uninterrupted communication, electronic transfer notes to share communication with all providers, and a standardized system of feedback to referring hospitals. Several institutions relied on an expect note, which could be a free‐text document or a form document in the EMR. This preserves verbal handoff information that may otherwise be lost if the accepting physician at the time of transfer is not the physician receiving the handoff.

| Challenges | Innovative Practices |
|---|---|
| Expedited acceptance and transport | Automatic acceptance for certain diagnoses (ie, neurosurgical indication for transfer) |
| | Transferred patients prioritized for hospital beds over all patients except codes |
| | Hospital controls transportation units, allowing for immediate dispatch and patient retrieval |
| | Outsourcing of transfer center and interfacility transfer to third party |
| Timeliness of clinical updates | Transfer center communicates with bedside RN for clinical updates at the time of transfer |
| | Clinical status updates every 24 hours for critical patients |
| | Daily reevaluation of clinical status |
| | Accepting physician alerted of changes in clinical status |
| Handoff and coordination of care | Physician accept tool in EMR |
| | Quarterback physician who triages and accepts all patients during a given time period |
| | Critical patients are accepted into a critical care resuscitation unit, an all‐purpose intensive care unit staffed by an intensivist who shares decision making with the referring provider and is involved in all communications regarding the transferred patient |
| Availability of protected clinical information | Scribed physician handoff imported into EMR |
| | Expect note in EMR: summary of clinical information documented by accepting physician |
| | PACS radiology cloud networks for hospital systems or statewide |
| | EMR interoperability: Care Everywhere module in Epic EMR |
| | Health and information management department responsible for obtaining and scanning outside records into EMR |
| Feedback and quality improvement | Automatic review if patient upgraded to ICU within 4 hours of arrival |
| | Departmental chair review of physician verbal handoff if poor outcome or difficulty with transfer |
| | Outcomes and quality of handoff reported back to referring hospital |
| | Discharge summary sent to referring hospital |
| | Referring hospital able to view patient's chart for 1 year |

Quality improvement occurred via both internal and external feedback at several institutions. There were two notable mechanisms of internal feedback. First, a department chair reviewed the recorded physician verbal handoff if an adverse event involved a transferred patient. Second, an automatic internal review was triggered if a patient was upgraded to a higher level of care within 4 hours of arrival. These advanced mechanisms require vigilance and dedication on the part of the transfer center and physicians involved in the transfer process. External feedback was provided to referring hospitals through both active and passive mechanisms. One advanced health system allowed referring providers to access the patient's inpatient medical record for 1 year and sent a discharge summary to all referring hospitals. Another hospital maintained a sophisticated scorecard, with key measures shared with internal stakeholders and referring hospitals. Metrics tracked included denials due to insufficient bed capacity, change in bed status within 12 hours of transfer, and duration of stay in the postanesthesia care unit or emergency department awaiting an inpatient bed. This organization also performed site visits to referring hospitals to address handoff quality improvement.
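The automatic review trigger described above can be expressed as a simple rule. The following is a minimal sketch only, not a description of any surveyed institution's software: the `Transfer` record, its field names, and the choice to treat an upgrade at exactly 4 hours as inside the window are all assumptions made for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional

# Review window reported by one surveyed institution (4 hours after arrival).
REVIEW_WINDOW = timedelta(hours=4)


@dataclass
class Transfer:
    """Hypothetical record of one accepted transfer (illustrative fields only)."""
    patient_id: str
    arrived_at: datetime
    # Time the patient was moved to a higher level of care, if that happened.
    upgraded_at: Optional[datetime] = None


def needs_internal_review(t: Transfer, window: timedelta = REVIEW_WINDOW) -> bool:
    """Return True if the patient was upgraded within `window` of arrival.

    Assumption: the boundary is inclusive, so an upgrade at exactly
    4 hours still triggers a review.
    """
    if t.upgraded_at is None:
        return False
    elapsed = t.upgraded_at - t.arrived_at
    return timedelta(0) <= elapsed <= window


def flag_for_review(transfers: List[Transfer]) -> List[str]:
    """Return the identifiers of transfers that meet the review rule."""
    return [t.patient_id for t in transfers if needs_internal_review(t)]


if __name__ == "__main__":
    arrival = datetime(2015, 3, 1, 14, 0)
    sample = [
        Transfer("A", arrival, arrival + timedelta(hours=2)),  # early upgrade
        Transfer("B", arrival, arrival + timedelta(hours=9)),  # late upgrade
        Transfer("C", arrival),                                # never upgraded
    ]
    print(flag_for_review(sample))
```

A rule of this kind only identifies cases for review; the review itself, and any feedback to the referring hospital, remains a manual process carried out by the transfer center and physicians.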

DISCUSSION

Standardizing intrahospital handoffs has been shown to decrease preventable medical errors and reduce possible near‐miss events.[6, 10] Interhospital care transitions are inherently more complex due to increased acuity and decreased continuity; yet, there is no universal standardization of these handovers. We found that practices vary widely among tertiary care centers, and the level of transfer center involvement in the verbal and written handoff is inconsistent.

Evidence‐based frameworks to improve healthcare delivery, such as TeamSTEPPS (Team Strategies and Tools to Enhance Performance and Patient Safety), first require an organizational assessment to identify barriers to effective communication.[11] Interhospital transfers present multiple unique barriers to continuity: physical distance, uncertainty in timing, incongruent treatment goals, disparate information sources, and distractions. This study provides the first step in conceptualizing the unique aspects of interhospital transfers and highlights strategies to improve care coordination (Table 2).

A tailored intervention needs not only to overcome the typical barriers to handoffs such as time constraints, information sharing, and ambiguity in provider roles, but also to overcome multiple systems barriers. Bed management systems add another time‐related variable due to fixed and frequently overburdened bed capacity. Prioritization of transfers depends upon an accurate clinical depiction of patient acuity as well as organizational strategies. For example, neurologic diagnoses are commonly a top priority and are triaged as such, sometimes instead of higher‐acuity patients with other principal diagnoses. The complexity of this process may lead to delays in high‐acuity transfers, and is contingent upon accurate and updated clinical information. Coordinating handovers amidst complex provider schedules is another systems barrier. The commonly adopted 7 on, 7 off model for hospitalists, and shift work for intensivists, may increase the possibility that a transfer occurs across multiple provider changes. Patient follow‐up and closed‐loop feedback are important components of intrahospital handovers, but are much more challenging to implement for interhospital handovers with incongruent information systems and providers.

Programs to improve intrahospital handovers (eg, I‐PASS) emphasize creating an accurate clinical depiction of a patient using both verbal and written handoffs.[12] This is arguably more difficult over the phone without a concurrent written handoff. Recording of 3‐way physician and nurse handoffs is common, but reviews of recorded conversations are often unavailable or cumbersome in real time. EMR documentation of verbal information exchanged during the handoff is a possible solution. However, there may be legal implications for a transcribed verbal handoff. Furthermore, transfer centers often work with a software program separate from the principal EMR, and documentation in real time is challenging. EMR integration could help reinforce a patient‐centered shared mental model by allowing visualization of lab trends, radiology, vitals, and other documentation during and after the verbal handoff.

Physician‐driven checklist accept tools are another solution. Usually the responsibility of the accepting attending or fellow, this type of document is most useful as a modifiable document in the EMR. Accept tools, such as the one created by Malpass et al., have demonstrated successful shared decision making, and have resulted in fewer emergent procedures and fewer antibiotic changes on arrival.[13] One of the challenges with this approach is the frequency of utilization. In the aforementioned study, the adoption rate of the accept tool was about 70% in a closed university medical ICU, a setting where providers may view these types of interventions as favorable rather than burdensome.[13]

The most consistent finding of this survey was the lack of common processes to improve outcomes. Simple interventions, such as regular clinical updates, documentation of the handoff process, and obtaining objective information early in the process, were inconsistently adopted. Outcomes tracking and feedback are necessary components of team‐based quality improvement. Approximately half of the hospitals surveyed specifically tracked outcomes of transferred patients, and a minority had systems in place to provide feedback to referring centers.

Improving care delivery requires buy‐in from all participants, necessitating engagement of referring hospitals. Interventions such as frequent status updates and providing early documentation have the potential to increase the burden on referring providers when feedback or incentives are not commonplace. Moreover, the referring provider has the option of transferring a patient to a hospital with reduced handoff requirements, creating a disincentive for quality improvement. Quality metrics that incorporate outcomes of transferred patients may be necessary to better align the goals of sending and receiving physicians.

This study was intended to be a qualitative investigation and has some limitations. Any verbal qualitative study carries the possibility of misinterpreting information given by transfer center personnel. A single investigator performed most of the discussions and was able to clarify when needed, which provided a degree of consistency but may also be a source of bias. Categorical answers and a team‐based approach to conceptualizing responses likely minimized this potential bias.

We selected hospitals from the U.S. News and World Report Honor Roll plus additional hospitals chosen based on similarity to our home institutions. This may be a skewed sample and may not represent other major US hospitals and networks. However, we chose to interview large academic tertiary care centers, many accepting more than 1000 patients monthly, as these are likely to be the most proficient at performing transfers, and responses may be generalizable.

CONCLUSIONS

Standardization of information exchange during interhospital transfers does not currently exist. Practices vary widely amongst academic tertiary care centers. There is a paucity of data to support the association of specific processes with patient outcomes. Ultimately, a multicenter study examining the impact of improved information transfer on patient outcomes is warranted, utilizing tracking resources already in place. Optimizing and aligning practices between sending and receiving hospitals may improve interhospital handover efficiency and patient safety.

Disclosures

Dr. Usher is supported by a National Institutes of Health Clinical and Translational Science Award at the University of Minnesota: UL1TR000114. Dr. Steinberg has received support from Arena Pharmaceuticals and Major League Baseball. Drs. Herrigel, Parikh, Fanning, and Carroll have no disclosures. A prior version of this article was presented as an abstract at the Society of General Internal Medicine Mid‐Atlantic Regional Meeting in April 2014 in New York, New York.

1. Doctors' handovers in hospitals: a literature review. BMJ Qual Saf. 2011;20(2):128–133.
2. Quality of inter‐hospital transport of critically ill patients: a prospective audit. Crit Care. 2005;9(4):R446–R451.
3. Interhospital transfer patients discharged by academic hospitalists and general internists: characteristics and outcomes [published online November 20, 2015]. J Hosp Med. doi: 10.1002/jhm.2515.
4. Evaluation of postoperative handover using a tool to assess information transfer and teamwork. Ann Surg. 2011;253(4):831–837.
5. Rates of medical errors and preventable adverse events among hospitalized children following implementation of a resident handoff bundle. JAMA. 2013;310(21):2262–2270.
6. Changes in medical errors after implementation of a handoff program. N Engl J Med. 2014;371(19):1803–1812.
7. HCUP National Inpatient Sample (NIS). Healthcare Cost and Utilization Project (HCUP). 2012. Agency for Healthcare Research and Quality, Rockville, MD. Available at: www.hcup‐us.ahrq.gov/nisoverview.jsp. Accessed 26 May 2015.
8. Outcomes among patients discharged from busy intensive care units. Ann Intern Med. 2013;159(7):447–455.
9. Reasons underlying inter‐hospital transfers to an academic medical intensive care unit. J Crit Care. 2013;28(2):202–208.
10. Avoiding handover fumbles: a controlled trial of a structured handover tool versus traditional handover methods. BMJ Qual Saf. 2012;21(11):925–932.
11. Validation of a teamwork perceptions measure to increase patient safety. BMJ Qual Saf. 2014;23(9):718–726.
12. Development, implementation, and dissemination of the I‐PASS handoff curriculum: a multisite educational intervention to improve patient handoffs. Acad Med. 2014;89(6):876–884.
13. The interhospital medical intensive care unit transfer instrument facilitates early implementation of critical therapies and is associated with fewer emergent procedures upon arrival. J Intensive Care Med. 2015;30(6):351–357.

© 2016 Society of Hospital Medicine

Evaluation of Internet Information About Rotator Cuff Repair

Patients are learning about health and disease more independently than before, but such self-education may pose a unique challenge for practicing physicians. Although educated patients can assist in the critical appraisal of treatment options,1 misinformed patients may have preconceived treatment biases and unrealistic expectations. More than 66 million Americans use the Internet daily, and recent surveys have shown 86% have used the Internet for health-related information.2,3 With Internet use increasing, the number of patients turning to the web for medical information is increasing as well.4 For many patients, this information can be useful in making decisions about their health and health care.5

Although accessing medical information from the Internet has grown exponentially, analysis of information quality has grown considerably more slowly.6 With no regulatory body monitoring content, there is easy circumvention of the peer review process, an essential feature of academic publishing.7 With no external regulation, the information retrieved may be incorrect, outdated, or misleading. Many orthopedic studies have analyzed Internet content about numerous diagnoses.3-6,8-18 Most of these studies have found this information highly variable and of poor quality.

We conducted a study to evaluate and analyze rotator cuff repair information available to the general public through the Internet; to assess changes in the quality of information over time; to determine if sites sponsored by academic institutions offered higher-quality information; and to assess whether the readability of the material varied according to DISCERN scores.

Rotator cuff repairs are among the most common surgeries performed by orthopedic surgeons. To our knowledge, this is the first study to assess the quality of web information about rotator cuff repairs. We hypothesized that the quality of information would positively correlate with the reading level of the material presented, that academic institutions would present the highest-quality information, and that the type of information presented would change over time.

Materials and Methods

We used the search phrase rotator cuff repair on the 3 most popular search engines: Google, Yahoo!, and Bing. Google is the dominant engine, taking 83.06% of total market share, followed by Yahoo! (6.86%) and Bing (4.27%).5 The first 50 websites identified by each search engine were selected for evaluation, excluding duplicates or overlapping websites. Similarly, advertisements and strictly video results lacking text were excluded. After each engine was queried, a master list of 150 websites was created for individual evaluation and assessment. To assess changes in results over time, we performed 2 searches, on November 16, 2011, and May 18, 2014.

The content of each website was analyzed for authorship, ability to contact the author, discussion of disorder, surgical treatment, complications, surgical eligibility, rehabilitation, other treatment options, and use of peer-reviewed sources. Authorship was placed in 1 of 6 categories:

1. Academic—university-affiliated physician or research group.

2. Private—physician or group without stated affiliation to an academic organization.

3. Industry—manufacturing or marketing company advertising a product or service for profit.

4. News source—bulletin or article without affiliation to a hospital or an academic institution.

5. Public education—individual or organization with noncommercial website providing third-party information (eg, Wikipedia, About.com).

6. Blog—website publishing an individual’s personal experiences in diary or journal form.

Websites were also assessed for accuracy and validity based on presence or absence of Health On the Net code (HONcode) certification and DISCERN score. Designed by the Health On the Net Foundation in 1996, HONcode provides a framework for disseminating high-quality medical information over the web.19 Website owners can request that their sites be evaluated for HONcode certification; a site that qualifies can display the HONcode seal.20 The DISCERN project, initially funded by the National Health Service in the United Kingdom, judges the quality of written information available on health-related websites.21 It determines the quality of a publication on the basis of 16 questions: The first 8 address the publication’s reliability, the next 7 involve specific details of treatment choices, and the last is an overall rating of the website.
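The 16-question DISCERN structure described above can be sketched in a few lines. This is only an illustration: the 1-to-5 rating per question comes from the DISCERN instrument itself and is not stated in the passage, summing the ratings into a total is an assumption about how mean scores such as those reported here were derived, and the names `score_discern` and `DiscernResult` are hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence

# Question groupings as described in the text (0-based indices).
RELIABILITY = slice(0, 8)   # questions 1-8: reliability of the publication
TREATMENT = slice(8, 15)    # questions 9-15: details of treatment choices
OVERALL = 15                # question 16: overall rating


@dataclass(frozen=True)
class DiscernResult:
    reliability: int
    treatment: int
    overall: int

    @property
    def total(self) -> int:
        # Assumption: the total is the plain sum of all 16 ratings (range 16-80).
        return self.reliability + self.treatment + self.overall


def score_discern(ratings: Sequence[int]) -> DiscernResult:
    """Split 16 ratings (each assumed to be 1-5) into the three DISCERN parts."""
    if len(ratings) != 16:
        raise ValueError("DISCERN has 16 questions")
    if any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("each rating must be between 1 and 5")
    return DiscernResult(
        reliability=sum(ratings[RELIABILITY]),
        treatment=sum(ratings[TREATMENT]),
        overall=ratings[OVERALL],
    )


# Hypothetical website rated 3 on every question.
example = score_discern([3] * 16)
print(example.total)  # 48
```

Keeping the three parts separate makes it possible to see whether a low total reflects weak reliability or thin treatment detail.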

Website readability was assessed with the Flesch-Kincaid test. This test, designed under contract with the US Navy in 1975, has been used in other orthopedic studies.19 Regression analysis was performed to check for correlation between website readability and DISCERN score. Analysis of variance was used to analyze differences between scores.
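For readers unfamiliar with the readability measures, the sketch below computes the Flesch Reading Ease score and the Flesch-Kincaid grade level using their standard published coefficients. The source does not say which software was used, so this is not the authors' tool; in particular, the syllable counter is a crude vowel-group heuristic introduced here as an assumption, and the function names are hypothetical.

```python
import re
from typing import Tuple


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption): count vowel groups, drop a silent final 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def readability(text: str) -> Tuple[float, float]:
    """Return (Flesch Reading Ease, Flesch-Kincaid grade level) for a text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    words_per_sentence = len(words) / len(sentences)
    syllables_per_word = syllables / len(words)
    # Standard coefficients for the two Flesch formulas.
    reading_ease = 206.835 - 1.015 * words_per_sentence - 84.6 * syllables_per_word
    grade_level = 0.39 * words_per_sentence + 11.8 * syllables_per_word - 15.59
    return reading_ease, grade_level


ease, grade = readability("The rotator cuff stabilizes the shoulder. Surgery can repair a torn tendon.")
print(round(ease, 1), round(grade, 1))
```

Higher Reading Ease values indicate easier text, whereas the grade level approximates the US school grade needed to understand it.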

Results

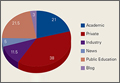

We performed a comprehensive analysis of the top 50 websites from each of the 3 search engines (N = 150 websites) (Figures 1–5, Table). Regarding authorship, our 2 searches demonstrated similar values (Figure 1). In 2011, 21% of websites were associated with an academic institution, 38% were authored by private physicians or hospital or physician groups not associated with an academic institution, 11.5% were industry-sponsored, 5% were news bulletins or media reports, 21.5% were public education websites, and 3% were personal blogs. Our 2014 search found a similar distribution of contributors. Between 2011 and 2014, the largest change was in academic authors, which decreased by 7 percentage points, from 21% to 14%. Percentage of websites authored by private physicians remained constant from the first to the second search: 38%.

When the 2011 and 2014 website content was compared, several changes were noted. Percentage of websites providing an author contact method increased from 21% to 50% (Figure 2), percentage detailing rotator cuff repairs increased from 82% to 91%, and percentage introducing treatment options in addition to surgical management increased from 11.5% to 61%. Percentage discussing surgical eligibility, however, decreased from 43% to 18%. Percentage citing peer-reviewed sources remained relatively constant (28%, 26%), as did percentage discussing surgical technique for rotator cuff repair (55%, 59%) (Figure 3). A major decrease was found in percentage of websites discussing surgical complications, 42% in 2011 down to 25% in 2014, whereas a major increase was found in percentage discussing rehabilitation, from 39% in 2011 up to 73% in 2014. In 2014, no websites discussed double- versus single-row surgery—compared with 6% in 2011. False claims remained low between 2011 and 2014. In both searches, no website guaranteed a return to sport, and few made claims of painless or bloodless surgery.

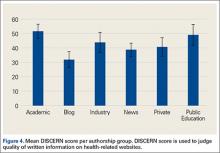

DISCERN scores for websites found during the 2014 search were averaged for each of the 6 authorship groups (Figure 4). The highest DISCERN scores were given to academic institution websites (51.6) and public education websites (49). For the academic websites, this difference was significant relative to news, blog, and private physician websites (Ps = .012, .001, .001). The lowest DISCERN scores were given to news organization websites and personal blogs. DISCERN scores were 43.8 for industry sources and 40.7 for private physician groups; the difference was not significant (P = .229). Overall mean DISCERN score for all websites was 44. Eleven percent of websites were HONcode-certified.

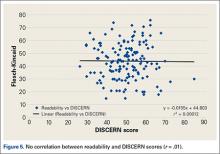

No correlation was found between website readability and DISCERN score: correlation coefficient r was .01, and coefficient of determination r² was 0.00012 (Figure 5). For the websites in 2014, mean Flesch-Kincaid readability score was 50.17, and mean grade level was 10.98.
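The relationship between the correlation coefficient and the coefficient of determination can be illustrated with a short sketch. It computes Pearson's r for two lists of paired values and squares it; the data are hypothetical placeholders, not values from this study, and the function name `pearson_r` is introduced here for illustration only.

```python
from math import sqrt
from typing import Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient for paired observations."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cov / sqrt(var_x * var_y)


# Hypothetical readability scores and DISCERN totals for five websites.
readability_scores = [42.0, 55.5, 61.2, 48.3, 50.1]
discern_totals = [44, 39, 51, 47, 40]

r = pearson_r(readability_scores, discern_totals)
print(round(r, 3), round(r * r, 5))  # r and r squared for the placeholder data
```

Because r² is simply r squared, a reported r of .01 corresponds to an r² of about 0.0001, which agrees with the reported 0.00012 once rounding of r is taken into account.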

The Table compares our data with data from other orthopedic studies that have analyzed the quality of Internet information about various orthopedic injuries, diseases, and procedures.3-6,8,9,11-18 With its mean DISCERN score of 44, the present rotator cuff tear study was ranked third of 6 studies that have used this scoring system to analyze website content. Of these 6 studies, those reviewing osteosarcoma and juvenile idiopathic arthritis were ranked highest (mean scores, 49.8 and 48.9, respectively), and the study reviewing scoliosis surgery was ranked lowest (38.9). Bruce-Brand and colleagues9 recently found a mean DISCERN score of 41 for anterior cruciate ligament (ACL) reconstruction. When considering HONcode-certified websites, our Internet search for rotator cuff tears found the third lowest percentage, 10.5%, compared with the other studies (Table); the highest percentage, 30%, was found for websites discussing concussions in athletes. When considering authorship, our rotator cuff study found the third highest percentage, 76%, authored by academic centers, physicians, and public education websites; the highest percentage was found in websites discussing ACL reconstruction. Websites discussing ACL reconstruction also had the highest percentage of websites authored by industry.9

Discussion

To our knowledge, this is the first study specifically analyzing the quality of Internet information about rotator cuff repairs. A similar study, conducted by Starman and colleagues15 in 2010, addressed the quality of web information about 10 common sports medicine diagnoses, one of which was rotator cuff tears. In that study, only 16 of the websites included discussed rotator cuff tears. In addition, the authors used a customized, HONcode-based grading system to analyze each website, making their data difficult to compare across studies.

Ideally, a high-quality medical website should be written by a credible source and should cover a disorder, treatment options, eligibility, rehabilitation, and complications. As there is no standard grading system for analyzing web content about rotator cuff repairs, we analyzed the websites for specific information we thought should be included in a high-quality website (Figures 2, 3). When considering authorship, we found academic centers, private physicians, and educational sources accounted for 76% of the sources; industry sources made up only 12%. Similar findings were noted by investigators analyzing Internet information about other orthopedic topics, including ACL reconstruction, lumbar arthroplasty, osteosarcoma, and cervical spine surgery.5,11,22 Studies analyzing websites for information on ACL tears and distal radius fractures found a higher percentage of industry-sponsored websites.9,10

DISCERN showed that the highest-quality information came from websites with academic affiliations, consistent with previous studies,3,9,17 and their mean score (51.6) was significantly higher than the scores for private physician websites, news sites, and blogs (Ps = .001, .016, .001); the least reliable information was from personal blogs and news outlets. Of note, mean DISCERN score was higher for the industry websites we found than for private physician websites (43.8 vs 40.7), though the difference was not significant (P = .229). Previous investigators considered number of industry-sponsored websites as a marker of poor quality of information relating to a given topic; however, given the DISCERN scores in our study, this might not necessarily be true.6 Based on the present study’s data, websites affiliated with academic institutions would be recommended for patients searching for high-quality information about rotator cuff tears.

Given DISCERN scores across studies, information about rotator cuff tears ranked below information about osteosarcoma and juvenile idiopathic arthritis but above information about scoliosis, cervical spine surgery, and ACL reconstruction (Table). DISCERN scores must be compared across studies, as there are no definitions for good and poor DISCERN scores.

Of the 4 studies that analyzed percentage of websites citing peer-reviewed sources, only our study and the study of cervical spine surgery18 analyzed that percentage as well as DISCERN score. Percentage citing peer-reviewed sources was 26% for rotator cuff tears and 24% for cervical spine surgery; the respective DISCERN scores were 44 and 43.6. As only these 2 studies could be compared, no real correlation between percentage of websites citing peer-reviewed sources and the quality of the content on a given topic can be assessed. More research into this relationship is needed. One already delineated association is the correlation between HONcode-certified sites and high DISCERN scores.21 For high-quality medical information, physicians can direct their patients both to academic institution websites and to HONcode-certified websites.

When we compared the present study with previous investigations, we found large differences among search results for a given topic. In 2013, Duncan and colleagues6 and Bruce-Brand and colleagues9 used similar study designs (eg, search terms, search engines) for their investigations of quality of web information. Their results, however, were widely different. For example, percentages of industry authorship were 4.5% (Duncan and colleagues6) and 64% (Bruce-Brand and colleagues9). This inconsistency between studies conducted during similar periods might be related to what appears at the top of the results queue for a search. Duncan and colleagues6 analyzed 200 websites, Bruce-Brand and colleagues9 only 45. Industry sponsors may have made financial arrangements and used search engine optimization techniques to have their websites listed first in search results.