Implications of Vancomycin Troughs Drawn Earlier Than Current Guidelines

Vancomycin was isolated in the 1950s, but its use declined sharply because impurities caused adverse events and semisynthetic penicillins came into production.1,2 About 30 years later, however, the medication gained popularity as a first-line treatment for methicillin-resistant Staphylococcus aureus infections.

In 2009, the Infectious Diseases Society of America (IDSA), the American Society of Health-System Pharmacists, and the Society of Infectious Diseases Pharmacists developed a consensus review of the therapeutic monitoring and dosing of vancomycin in adult patients.3 Trough serum concentrations are recommended as the most accurate and convenient method to monitor vancomycin. Per IDSA guidelines, an optimal trough is high enough to clear infections (> 10 mg/L) and to prevent the development of vancomycin-intermediate and vancomycin-resistant bacteria. In patients with normal renal function, troughs should be obtained just before the next dose under steady-state conditions (starting just before the fourth dose).

Since these guidelines were developed, vancomycin trough levels have often been drawn early.4-7 Early sampling may overestimate the true trough concentration. A study by Morrison and colleagues in Boston, Massachusetts, found that 41.3% of vancomycin troughs were drawn early and that, compared with correctly timed troughs, early troughs were associated with statistically significant increases in vancomycin concentrations, the rate of vancomycin regimen adjustments (decrease, discontinuation, or holding of dose), and repeat vancomycin level orders.5 The study authors noted that lowering the daily dose of vancomycin based on early trough levels could lead to underdosing of vancomycin and an increase in intermediate or resistant bacteria.

The prevalence and implications of early trough samples have been measured at only 1 facility, and it is unknown whether these data can be reproduced elsewhere.5 Thus, this study sought to determine the prevalence and corresponding clinical actions of early trough levels at the Captain James A. Lovell Federal Health Care Center (JALFHCC). This is a unique facility that in 2010 combined a VA hospital with a DoD hospital. This facility cares for 67,000 military and retiree beneficiaries each year from southwestern Wisconsin and northwestern Illinois.

The primary objective of this study was to measure the rate of early troughs drawn and their resultant effect on vancomycin regimens compared with correctly timed troughs. Secondarily, this study sought to compare the rate of repeated vancomycin trough levels in early vs correctly timed measurements.

Methods

This retrospective cohort analysis compared the outcomes of early and correctly timed vancomycin troughs. This study was approved by the Edward Hines, Jr. VA Hospital and JALFHCC Institutional Review Board. Veteran patients aged ≥ 18 years who were hospitalized at JALFHCC, received IV vancomycin at dosing intervals of 8, 12, 24, or 48 hours, and had trough levels measured between July 1, 2009, and July 1, 2013, were included in this study. Patients were excluded from analysis if vancomycin was given on any schedule other than the previously stated frequencies, if they received hemodialysis during the treatment period, or if their insurance coverage was through TRICARE (these patients had either active-duty or retired active-duty status).

Potentially eligible patients were identified via a Computerized Patient Records System (CPRS) search for laboratory vancomycin level measurements. The search supplied the researcher with the patient name, vancomycin level date and time, type of vancomycin level (trough or random), and vancomycin concentration. With this information, further data were gathered through CPRS: demographics, type of clinical infection, desired trough level (inferred if not listed in a CPRS note), and vancomycin administration time (through the bar code medication administration system [BCMA] in CPRS). The unit of analysis was the trough level, so multiple troughs may have originated from the same patient.

An early trough was defined as a trough taken more than 2 hours earlier than the next theoretical administration time or anytime before the third dose. After a trough was determined to be early or on time, the clinical actions taken during the dosing interval following sample collection were documented. A dose was considered held if this was stated in the BCMA or in a CPRS provider note. A dose was considered decreased if a change in frequency or strength resulted in an overall daily dose decrease. A trough was counted as recollected if it was redrawn within 24 hours of the original trough or if a CPRS note documented the recollection. Finally, observations that noted trends in vancomycin trough management were recorded.
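The early-trough definition above can be sketched as a small classification function. This is only an illustration under stated assumptions, not the procedure the researchers used: the function name, its parameters, and the choice to compute the next theoretical administration time as the last recorded dose plus the ordered interval are all hypothetical. "Before the third dose" is read here as fewer than 3 doses having been given before the draw.

```python
from datetime import datetime, timedelta

# Threshold from the study definition: a draw more than 2 hours before the
# next theoretical administration time counts as early.
EARLY_WINDOW = timedelta(hours=2)


def is_early_trough(draw_time, dose_times, interval_hours):
    """Return True if a trough draw would be classified as early.

    draw_time      -- datetime the sample was collected
    dose_times     -- datetimes of recorded vancomycin administrations
    interval_hours -- ordered dosing interval in hours (8, 12, 24, or 48)
    """
    # Only doses actually given before the sample are relevant.
    given = sorted(t for t in dose_times if t < draw_time)

    # Assumption: "before the third dose" means fewer than 3 doses had been
    # administered when the sample was drawn.
    if len(given) < 3:
        return True

    # Assumption: the next theoretical administration time is the last
    # recorded dose plus the ordered interval.
    next_theoretical = given[-1] + timedelta(hours=interval_hours)
    return next_theoretical - draw_time > EARLY_WINDOW


# Hypothetical every-12-hour regimen; these are not study data.
doses = [datetime(2013, 1, 1, 8), datetime(2013, 1, 1, 20), datetime(2013, 1, 2, 8)]
print(is_early_trough(datetime(2013, 1, 2, 19, 30), doses, 12))  # False: 30 minutes early
print(is_early_trough(datetime(2013, 1, 2, 16, 0), doses, 12))   # True: 4 hours early
```

A draw exactly 2 hours before the next theoretical dose is treated as on time, because the definition specifies "more than 2 hours."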

The chi-square test with a significance criterion of 0.05 was used to compare early and on-time troughs. Based on the results from the Boston, Massachusetts, study and 1 other study, about 780 vancomycin troughs would be required to detect a significant difference in the primary outcome.5,6
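As a rough illustration of the comparison described above, the following sketch computes a Pearson chi-square statistic and P value for a 2 × 2 table (group by whether a clinical action was taken). The article does not state which software was used or whether a continuity correction was applied, so this uncorrected version is an assumption. The function name and the example counts are hypothetical and are not the study data.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test (no continuity correction) for the table

                    action   no action
        early         a          b
        on time       c          d

    Returns (statistic, p_value) with 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column must contain at least one observation")

    # Shortcut formula for a 2 x 2 table.
    statistic = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)

    # For 1 degree of freedom, the upper-tail probability of the chi-square
    # distribution equals erfc(sqrt(statistic / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts chosen only to show the call; not taken from the study.
statistic, p_value = chi_square_2x2(20, 80, 40, 60)
print(f"chi-square = {statistic:.2f}, P = {p_value:.4f}")
print("significant at 0.05" if p_value < 0.05 else "not significant at 0.05")
```

Because multiple troughs could come from the same patient, the observations are not strictly independent; the sketch ignores this, as a plain chi-square test does.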

Results

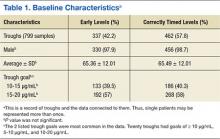

A total of 474 patient charts were reviewed, and 278 met inclusion criteria (196 were excluded). From the included patients, 799 trough levels were analyzed. Of these, 377 (42.2%) were drawn early. There was no significant difference in the baseline characteristics of the early group vs the correctly timed group (Table 1). Of the early troughs, 190 (56.3%) were drawn prior to the third dose of vancomycin. A large portion of these troughs were drawn after a vancomycin dose adjustment.

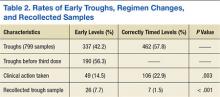

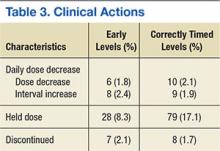

Clinical actions taken after sampling occurred at a rate of 14.5% in the early group and 22.9% in the correctly timed group (P = .003; Table 2). Early troughs led to a 7.7% rate of trough recollection, which was significantly greater than the 1.5% rate in the correctly timed group (P < .001). An analysis of each factor resulting in a clinical action illustrated that the rates of daily dose decrease and discontinued dose were similar between the groups (Table 3). However, the rate of held doses was 8.3% in the early group and 17.1% in the correctly timed group.

This research process yielded some observations. Occasionally a trough was drawn after vancomycin therapy was discontinued and when there was no concern for nephrotoxicity. After the guidelines were published, providers continued to document in CPRS notes to check troughs before the third dose. This incidence decreased over time. Troughs were taken often in patients who were receiving a short course of therapy or who were hemodynamically stable. Finally, documentation of vancomycin regimen changes occasionally did not match the record in the BCMA (in these situations, the BCMA record was used for this study).

Discussion

A large portion of trough levels at the JALFHCC were drawn early and did not adhere to the 2009 consensus guidelines. The rates of early troughs in this study and in the Boston, Massachusetts, study are similar.5 However, the 2 studies differed in 1 significant aspect: Clinical actions were taken less often in the early group at JALFHCC, whereas they were taken more often in the early group in the Boston, Massachusetts, study. This dissimilarity could be attributed to a difference in software between the hospitals. In the previous study, trough levels and the times at which they were drawn were not displayed together. Thus, clinicians may have been less able to gauge whether a trough was early. Because this information is available at the JALFHCC, clinicians may have been aware that a trough was early and avoided adjusting treatment (such as holding a dose, as illustrated in the data) based on a falsely elevated trough. This point is further supported by the significantly greater rate of trough recollection in the early group, which suggests an understanding that the trough was early.

The low trough recollection rate of 7.7% among early samples could be due to several factors that would prevent a trough redraw. First, when vancomycin was discontinued because the course was complete or sensitivity results were available, no further trough monitoring was required. Second, practitioners may have judged that a trough was not significantly early, and therefore not skewed, and elected not to redraw it. Third, a trough was sometimes drawn at the correct time, but the time was recorded incorrectly (for example, the record indicated that the sample was taken after vancomycin dosing when it was not); in this situation, a new trough level would not be necessary. Finally, a lack of sufficient staffing during nights and weekends may have delayed the interpretation of results, leading to a missed opportunity for recollection.

Specific observations regarding the timing of the trough indicate other possible concerns and areas for improvement. First, providers must cancel future trough orders concurrently with canceling treatment. Second, at the time of publication of the consensus, some providers were slow adopters of the new guidelines. Finally, the IDSA guidelines state that frequent monitoring for short course, lower intensity therapy, or in patients who are hemodynamically stable is not recommended.3 However, troughs were sometimes measured 2 to 3 times weekly in these patients.

The data and observations lead to the conclusion that although providers may be able to discern between early and correctly timed troughs, they were not consistently adherent to the 2009 IDSA guidelines. Pharmacist involvement in the care of Medicare patients with infections in the intensive care unit has been shown to lead to better clinical and monetary outcomes.8 Therefore, continued efforts by clinical pharmacists to monitor trough timing can be used to improve adherence and decrease costs (each trough is estimated to cost $16.97).

A study conducted in Australia demonstrated that pharmacist-led education on vancomycin dosing and monitoring (including when to measure a trough level) among prescribers and nurses led to improved adherence to the current guidelines and a greater number of patients treated within desired therapeutic ranges.9 In addition, a small study at the Atlanta VAMC in Georgia demonstrated that education of nurses, lab personnel, residents, ward clerks, and pharmacists led to a greater number of appropriately timed vancomycin and aminoglycoside levels.10 Thus, hospital personnel should receive an interdisciplinary review of the current IDSA guidelines, and a further review when the anticipated updated vancomycin guidelines are published, to aid in adoption of current dosing and monitoring recommendations.11

Limitations

This study is limited by the 4-year span of time that it encompassed, which may give a skewed depiction of current practices. Another limitation is that patients with fluctuating renal function were included in the analysis. A trough level order, rather than a random level order, was sometimes selected for these patients, so the actual rate of early troughs may be lower than reported. A third limitation is that this was a small and unblinded study. Also, the actual trough levels and the resulting changes that were made to specific regimens were not recorded. Thus, these data do not indicate whether the changes that were made reflected guideline recommendations. Finally, some clinical actions were taken after the dosing interval following the trough, often because lab results arrived during off-hours or because the team was waiting on attending physician or infectious disease guidance. These actions were not included in the analysis.

Conclusion

Vancomycin troughs were often drawn too early, which resulted in an increased rate of trough recollection. To improve adherence to the current IDSA consensus statement and its upcoming revision, providers should be educated and reeducated through interdisciplinary review sessions.

Author disclosures

The authors report no actual or potential conflicts of interest with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the U.S. Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review the complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

References

1. Moellering RC Jr. Vancomycin: a 50-year reassessment. Clin Infect Dis. 2006;42(suppl 1):S3-S4.

2. Levine DP. Vancomycin: a history. Clin Infect Dis. 2006;42(suppl 1):S5-S12.

3. Rybak MJ, Lomaestro BM, Rotschafer JC, et al. Therapeutic monitoring of vancomycin in adults summary of consensus recommendations from the American Society of Health-System Pharmacists, the Infectious Diseases Society of America, and the Society of Infectious Diseases Pharmacists. Pharmacotherapy. 2009;29(11):1275-1279.

4. Davis SL, Scheetz MH, Bosso JA, Goff DA, Rybak MJ. Adherence to the 2009 consensus guidelines for vancomycin dosing and monitoring practices: a cross-sectional survey of U.S. hospitals. Pharmacotherapy. 2013;33(12):1256-1263.

5. Morrison AP, Melanson SEF, Carty MG, Bates DW, Szumita PM, Tanasijevic MJ. What proportion of vancomycin trough levels are drawn too early? Frequency and impact on clinical actions. Am J Clin Pathol. 2012;137(3):472-478.

6. Traugott KA, Maxwell PR, Green K, Frei C, Lewis JS 2nd. Effects of therapeutic drug monitoring criteria in a computerized prescriber-order-entry system on the appropriateness of vancomycin level orders. Am J Health Syst Pharm. 2011;68(4):347-352.

7. Melanson SE, Mijailovic AS, Wright AP, Szumita PM, Bates DW, Tanasijevic MJ. An intervention to improve the timing of vancomycin levels. Am J Clin Pathol. 2013;140(6):801-806.

8. MacLaren R, Bond CA, Martin SJ, Fike D. Clinical and economic outcomes of involving pharmacists in the direct care of critically ill patients with infections. Crit Care Med. 2008;36(12):3184-3189.

9. Phillips CJ, Doan H, Quinn S, Kirkpatrick CM, Gordon DL, Doogue MP. An educational intervention to improve vancomycin prescribing and monitoring. Int J Antimicrob Agents. 2013;41(4):393-394.

10. Carroll DJ, Austin GE, Stajich GV, Miyrhaya RK, Murphy JE, Ward ES. Effect of education on the appropriateness of serum drug concentration determination. Ther Drug Monit. 1992;14(1):81-84.

11. Infectious Diseases Society of America (IDSA). IDSA practice guidelines: antimicrobial agent use. IDSA Website. 2015. http://www.idsociety.org/Antimicrobial_Agents. Accessed November 16, 2015.

Vancomycin was isolated in the 1950s, but due to impurities causing adverse events and semisynthetic penicillin production, its use was greatly reduced.1,2 However, this medication gained in popularity 30 years later as a first-line treatment for methicillin-resistant Staphylococcus aureus infections.

In 2009 the Infectious Diseases Society of America (IDSA), American Society of Health System Pharmacists, and Society of Infectious Diseases Pharmacists developed a consensus review of the therapeutic monitoring and dosing of vancomycin in adult patients.3 Trough serum concentration levels are recommended as the most accurate and convenient method to monitor vancomycin. Per IDSA guidelines, an optimal trough is intended to be high enough to clear infections (> 10 mg/L) and prevent the development of vancomycin intermediate and resistant bacteria. Troughs should be obtained just before the next dose in steady-state conditions (starting just before the fourth dose) in patients with normal renal function.

Since the development of these guidelines, vancomycin trough levels are often drawn early.4-7 This may lead to an overestimation of the true trough concentration. A study by Morrison and colleagues in Boston, Massachusetts, found that 41.3% of vancomycin troughs were drawn early, and this resulted in statistically significant increases in the vancomycin concentrations, the rate of vancomycin regimen adjustments (decrease, discontinuation, or holding of dose), and the repeat vancomycin level orders compared with correctly timed troughs.5 It was noted by the study authors that lowering the daily dose of vancomycin based on early trough levels could lead to an underdosing of vancomycin and an increase in intermediate or resistant bacteria.

Related: IDWEEK: Antibiotic ‘time-out’ cut vancomycin use

The prevalence and implications of early trough samples have been measured at only 1 facility, and it is unknown whether these data can be reproduced elsewhere.5 Thus, this study sought to determine the prevalence and corresponding clinical actions of early trough levels at the Captain James A. Lovell Federal Health Care Center (JALFHCC). This is a unique facility that in 2010 combined a VA hospital with a DoD hospital. This facility cares for 67,000 military and retiree beneficiaries each year from southwestern Wisconsin and northwestern Illinois.The primary objective of this study was to measure the rate of early troughs drawn and their resultant effect on vancomycin regimens compared with correctly timed troughs. Secondarily, this study sought to compare the rate of repeated vancomycin trough levels in early vs correctly timed measurements.

Methods

This retrospective cohort analysis compared the outcomes of early and correctly timed vancomycin troughs. This study was approved by the Edward Hines, Jr. VA Hospital and JALFHCC Institutional Review Board. Veteran patients aged ≥ 18 years, hospitalized at JALFHCC, and receiving IV vancomycin at dosing intervals of 8, 12, 24, and 48 hours with measured trough levels between July 1, 2009, and July 1, 2013, were included in this study. Patients were excluded from analysis if vancomycin was given at any schedule other than the previously stated frequencies, they received hemodialysis during the treatment period, or their insurance coverage was through TRICARE (these patients had either active-duty or retired active-duty status).

Potentially eligible patients were identified via a Computerized Patient Records System (CPRS) search for laboratory vancomycin level measurements. The search supplied the researcher with the patient name, vancomycin level date and time, type of vancomycin level (trough or random), and vancomycin concentration. With this information, further data were gathered through CPRS: demographics, type of clinical infection, desired trough level (inferred if not listed in CPRS note), and vancomycin administration time (through the bar code medication administration system [BCMA] in CPRS). This analysis was of troughs, and multiple troughs may have originated from the same patient.

An early trough was defined as a trough taken more than 2 hours earlier than the next theoretical administration time or anytime before the third dose. After a trough was determined to be early or on time, the clinical actions taken during the dosing interval following sample collection were documented. A dose was considered to be held if stated in the BCMA or in a CPRS provider note. A dose was considered to be decreased with a change in frequency or strength that resulted in an overall daily dose decrease. A recollected vancomycin trough was counted within 24 hours of the trough or per a note in CPRS. Finally, observations that noted trends in vancomycin trough management were recorded.

The chi-square test with a significance criterion of 0.05 was used to compare early and on time troughs. Based on the results from the Boston, Massachusetts, study and 1 other study, about 780 vancomycin troughs would be required to meet significance in the primary outcome.5,6

Results

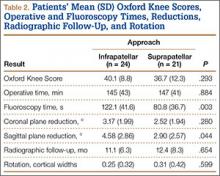

A total of 474 patient charts were reviewed, and 278 met inclusion criteria (196 were excluded). Of the included patients, 799 trough levels were analyzed. Of these, 377 (42.2%) were drawn early. There was no significant difference in the baseline characteristics of the early group vs the correctly timed group (Table 1). Of the early troughs, 190 (56.3%) were drawn prior to the third dose of vancomycin. It was observed that a large portion of these troughs occurred after a vancomycin dose adjustment.

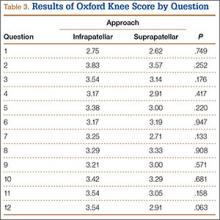

Clinical actions taken after sampling occurred at a rate of 14.5% in the early group and 22.9% in the correctly timed group (P = .003; Table 2). Early troughs led to a 7.7% rate of trough recollection, which was significantly greater than the 1.5% rate in the correctly timed group (P < .001). An analysis of each factor resulting in a clinical action illustrated that the rates of daily dose decrease and discontinued dose were similar between the groups (Table 3). However, the rate of held doses was 8.3% in the early group and 17.1% in the correctly timed group.

This research process yielded some observations. Occasionally a trough was drawn after vancomycin therapy was discontinued and when there was no concern for nephrotoxicity. After the guidelines were published, providers continued to document in CPRS notes to check troughs before the third dose. This incidence decreased over time. Troughs were taken often in patients who were receiving a short course of therapy or who were hemodynamically stable. Finally, documentation of vancomycin regimen changes occasionally did not match the record in the BCMA (in these situations, the BCMA record was used for this study).

Related: Assessment of High Staphylococcus aureus MIC and Poor Patient Outcomes

Discussion

A large portion of trough levels at the JALFHCC were drawn early and did not adhere to the 2009 consensus guidelines. The rates of early troughs in this study and in the Boston, Massachusetts, study are similar.5 However, the 2 studies differed in 1 significant aspect: Clinical actions were taken less often in the early group at JALFHCC, whereas they were taken more often in the early group in the Boston, Massachusetts, study. This dissimilarity could be attributed to a difference in software between the hospitals. In the previous study, trough levels and the time that they were drawn were not displayed together. Thus, clinicians may have been less likely to gauge whether a trough was early. Since this information is available at the JALFHCC, clinicians may have been aware that the trough was early and avoided adjusting treatment (such as holding a dose, as illustrated in the data) based on a falsely elevated trough. This point is further supported by significantly greater amounts of recollected troughs in the early group, suggesting an understanding that the trough was early.

The low trough recollection rate of 7.7% of all early samples could be due to several factors that would prevent a trough redraw. First, medication discontinuation resulting from course completion or sensitivity results would not require further trough monitoring. Second, practitioners may assess the early sample as insignificantly different from a correctly timed one and elect not to redraw the trough. Sometimes a trough was drawn at the correct time, but the time was recorded incorrectly. In this situation, a new trough level would not be necessary. Finally, a lack of sufficient staffing during nights and weekends may result in a delay in interpreting results leading to a missed opportunity for recollection. Additionally, some troughs may not have been redrawn based on a practitioner’s opinion that a trough was not significantly early and did not represent skewed results. Sometimes an incorrect recording of trough draw time reflected that it was taken after vancomycin dosing when it was not.

Specific observations regarding the timing of the trough indicate other possible concerns and areas for improvement. First, providers must cancel future trough orders concurrently with canceling treatment. Second, at the time of publication of the consensus, some providers were slow adopters of the new guidelines. Finally, the IDSA guidelines state that frequent monitoring for short course, lower intensity therapy, or in patients who are hemodynamically stable is not recommended.3 However, troughs were sometimes measured 2 to 3 times weekly in these patients.

Related: Results mixed in hospital efforts to tackle antimicrobial resistance

The data and observations lead to the conclusion that although providers may be able to discern between early and correctly timed troughs, they were not consistently adherent to the 2009 IDSA guidelines. It has been shown that pharmacy involvement of Medicare patients with infections in the intensive care unit has led to better clinical and monetary outcomes.8 Therefore, continued efforts by clinical pharmacists to monitor trough timing can be used to improve adherence and decrease costs (each trough is estimated to cost $16.97).

A study conducted in Australia demonstrated that pharmacist-led education of vancomycin dosing and monitoring (including when to measure a trough level) among prescribers and nurses led to improved adherence to the current guidelines and a greater number of patients treated within desired therapeutic ranges.9 In addition, a small study at the Atlanta VAMC in Georgia demonstrated that education of nurses, lab personnel, residents, ward clerks, and pharmacists led to a greater number of appropriately timed vancomycin and aminoglycoside levels.10 Thus, an interdisciplinary review of the current IDSA guidelines and review on the publication of the anticipated updated vancomycin guidelines should be provided to hospital personnel to aid in adoption of current dosing and monitoring recommendations.11

Limitations

This study is limited by the 4-year span of time that it encompassed, which may give a skewed depiction of current practices. Another limitation is that patients with fluctuating renal function were included in the analysis. Instead of selecting a random level order, a trough level order was sometimes selected for these patients. This could lead to a lower actual rate of early troughs. A third limitation is that this was a small and unblinded study. Also, the actual trough levels and the resulting changes that were made to specific regimens were not recorded. Thus, these data do not indicate whether the changes that were made reflected guideline recommendations. Finally, some clinical actions were taken after the dosing interval following the trough. This was often a result of off-hours lab results or waiting on attending physician or infectious disease guidance. These data were not included in the analysis.

Conclusion

Vancomycin troughs were often drawn too early and resulted in an increased rate of trough recollection. In an attempt to improve adherence to the current and the upcoming revised version of the IDSA consensus statement, it is recommended to educate and reeducate providers through interdisciplinary-led review sessions.

Author disclosures

The authors report no actual or potential conflicts of interest with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the U.S. Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review the complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

Vancomycin was isolated in the 1950s, but due to impurities causing adverse events and semisynthetic penicillin production, its use was greatly reduced.1,2 However, this medication gained in popularity 30 years later as a first-line treatment for methicillin-resistant Staphylococcus aureus infections.

In 2009 the Infectious Diseases Society of America (IDSA), American Society of Health System Pharmacists, and Society of Infectious Diseases Pharmacists developed a consensus review of the therapeutic monitoring and dosing of vancomycin in adult patients.3 Trough serum concentration levels are recommended as the most accurate and convenient method to monitor vancomycin. Per IDSA guidelines, an optimal trough is intended to be high enough to clear infections (> 10 mg/L) and prevent the development of vancomycin intermediate and resistant bacteria. Troughs should be obtained just before the next dose in steady-state conditions (starting just before the fourth dose) in patients with normal renal function.

Since the development of these guidelines, vancomycin trough levels are often drawn early.4-7 This may lead to an overestimation of the true trough concentration. A study by Morrison and colleagues in Boston, Massachusetts, found that 41.3% of vancomycin troughs were drawn early, and this resulted in statistically significant increases in the vancomycin concentrations, the rate of vancomycin regimen adjustments (decrease, discontinuation, or holding of dose), and the repeat vancomycin level orders compared with correctly timed troughs.5 It was noted by the study authors that lowering the daily dose of vancomycin based on early trough levels could lead to an underdosing of vancomycin and an increase in intermediate or resistant bacteria.

Related: IDWEEK: Antibiotic ‘time-out’ cut vancomycin use

The prevalence and implications of early trough samples have been measured at only 1 facility, and it is unknown whether these data can be reproduced elsewhere.5 Thus, this study sought to determine the prevalence and corresponding clinical actions of early trough levels at the Captain James A. Lovell Federal Health Care Center (JALFHCC). This is a unique facility that in 2010 combined a VA hospital with a DoD hospital. This facility cares for 67,000 military and retiree beneficiaries each year from southwestern Wisconsin and northwestern Illinois.The primary objective of this study was to measure the rate of early troughs drawn and their resultant effect on vancomycin regimens compared with correctly timed troughs. Secondarily, this study sought to compare the rate of repeated vancomycin trough levels in early vs correctly timed measurements.

Methods

This retrospective cohort analysis compared the outcomes of early and correctly timed vancomycin troughs. This study was approved by the Edward Hines, Jr. VA Hospital and JALFHCC Institutional Review Board. Veteran patients aged ≥ 18 years, hospitalized at JALFHCC, and receiving IV vancomycin at dosing intervals of 8, 12, 24, and 48 hours with measured trough levels between July 1, 2009, and July 1, 2013, were included in this study. Patients were excluded from analysis if vancomycin was given at any schedule other than the previously stated frequencies, they received hemodialysis during the treatment period, or their insurance coverage was through TRICARE (these patients had either active-duty or retired active-duty status).

Potentially eligible patients were identified via a Computerized Patient Records System (CPRS) search for laboratory vancomycin level measurements. The search supplied the researcher with the patient name, vancomycin level date and time, type of vancomycin level (trough or random), and vancomycin concentration. With this information, further data were gathered through CPRS: demographics, type of clinical infection, desired trough level (inferred if not listed in CPRS note), and vancomycin administration time (through the bar code medication administration system [BCMA] in CPRS). This analysis was of troughs, and multiple troughs may have originated from the same patient.

An early trough was defined as a trough taken more than 2 hours earlier than the next theoretical administration time or anytime before the third dose. After a trough was determined to be early or on time, the clinical actions taken during the dosing interval following sample collection were documented. A dose was considered to be held if stated in the BCMA or in a CPRS provider note. A dose was considered to be decreased with a change in frequency or strength that resulted in an overall daily dose decrease. A recollected vancomycin trough was counted within 24 hours of the trough or per a note in CPRS. Finally, observations that noted trends in vancomycin trough management were recorded.
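The early-trough definition above can be expressed as a short rule. The following is a minimal sketch, not the authors' actual procedure: the function name, its parameters, and the `doses_given` field are hypothetical, and "anytime before the third dose" is interpreted here as fewer than 3 doses having been administered at the time of the draw.

```python
from datetime import datetime, timedelta

EARLY_WINDOW = timedelta(hours=2)  # threshold stated in the Methods
MIN_DOSES_BEFORE_TROUGH = 3        # assumption: a draw before the third dose is early


def is_early_trough(draw_time, last_dose_time, interval_hours, doses_given):
    """Return True if a trough meets the study's definition of "early".

    draw_time:      when the sample was collected
    last_dose_time: administration time of the most recent dose (e.g., from BCMA)
    interval_hours: dosing interval (8, 12, 24, or 48 in this study)
    doses_given:    doses administered before the draw (hypothetical field)
    """
    # Criterion 1: drawn before the third dose was given.
    if doses_given < MIN_DOSES_BEFORE_TROUGH:
        return True
    # Criterion 2: drawn more than 2 hours before the next theoretical dose.
    next_dose_time = last_dose_time + timedelta(hours=interval_hours)
    return next_dose_time - draw_time > EARLY_WINDOW


# Hypothetical example: q12h regimen, third dose given at 08:00.
third_dose = datetime(2013, 1, 1, 8, 0)
print(is_early_trough(third_dose + timedelta(hours=9), third_dose, 12, 3))
```

A draw exactly 2 hours before the next theoretical dose is treated as on time, because the definition requires more than 2 hours.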

The chi-square test with a significance criterion of 0.05 was used to compare early and on-time troughs. Based on the results from the Boston, Massachusetts, study and 1 other study, about 780 vancomycin troughs would be required to meet significance in the primary outcome.5,6

Results

A total of 474 patient charts were reviewed, and 278 met inclusion criteria (196 were excluded). Of the included patients, 799 trough levels were analyzed. Of these, 337 (42.2%) were drawn early. There was no significant difference in the baseline characteristics of the early group vs the correctly timed group (Table 1). Of the early troughs, 190 (56.3%) were drawn prior to the third dose of vancomycin. It was observed that a large portion of these troughs occurred after a vancomycin dose adjustment.

Clinical actions taken after sampling occurred at a rate of 14.5% in the early group and 22.9% in the correctly timed group (P = .003; Table 2). Early troughs led to a 7.7% rate of trough recollection, which was significantly greater than the 1.5% rate in the correctly timed group (P < .001). An analysis of each factor resulting in a clinical action illustrated that the rates of daily dose decrease and discontinued dose were similar between the groups (Table 3). However, the rate of held doses was 8.3% in the early group and 17.1% in the correctly timed group.
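For readers who want to see how such a comparison is computed, the following is a minimal sketch of a Pearson chi-square test on a 2 × 2 table. The counts are assumptions: they are back-calculated from the reported percentages (14.5% and 22.9%), assuming 337 early and 462 correctly timed troughs, and are not taken from the study tables. Whether the authors applied a continuity correction is not stated; none is applied here.

```python
import math


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square (no continuity correction) for the table [[a, b], [c, d]].

    Returns the statistic and the upper-tail P value for 1 degree of freedom.
    """
    n = a + b + c + d
    row_totals = (a + b, c + d)
    col_totals = (a + c, b + d)
    observed = ((a, b), (c, d))
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # For 1 degree of freedom, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Approximate counts reconstructed from the reported rates (assumptions).
early_total, early_actions = 337, 49      # about 14.5%
ontime_total, ontime_actions = 462, 106   # about 22.9%

chi2, p = chi_square_2x2(
    early_actions, early_total - early_actions,
    ontime_actions, ontime_total - ontime_actions,
)
print(f"chi-square = {chi2:.2f}, P = {p:.3f}")
```

With these reconstructed counts, the sketch yields a P value of roughly .003, in line with the value reported for clinical actions.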

This research process yielded some observations. Occasionally a trough was drawn after vancomycin therapy was discontinued and when there was no concern for nephrotoxicity. After the guidelines were published, providers continued to document in CPRS notes to check troughs before the third dose. This incidence decreased over time. Troughs were taken often in patients who were receiving a short course of therapy or who were hemodynamically stable. Finally, documentation of vancomycin regimen changes occasionally did not match the record in the BCMA (in these situations, the BCMA record was used for this study).

Discussion

A large portion of trough levels at the JALFHCC were drawn early and did not adhere to the 2009 consensus guidelines. The rates of early troughs in this study and in the Boston, Massachusetts, study are similar.5 However, the 2 studies differed in 1 significant aspect: Clinical actions were taken less often in the early group at JALFHCC, whereas they were taken more often in the early group in the Boston, Massachusetts, study. This dissimilarity could be attributed to a difference in software between the hospitals. In the previous study, trough levels and the time that they were drawn were not displayed together. Thus, clinicians may have been less able to gauge whether a trough was early. Since this information is available at the JALFHCC, clinicians may have been aware that the trough was early and avoided adjusting treatment (such as holding a dose, as illustrated in the data) based on a falsely elevated trough. This point is further supported by the significantly greater number of recollected troughs in the early group, suggesting an understanding that the trough was early.

The low trough recollection rate of 7.7% of all early samples could be due to several factors that would prevent a trough redraw. First, medication discontinuation resulting from course completion or sensitivity results would not require further trough monitoring. Second, practitioners may have judged that a sample was not significantly early, and therefore not meaningfully skewed, and elected not to redraw the trough. Third, a trough was sometimes drawn at the correct time, but the draw time was recorded incorrectly (for example, the record indicated that the sample was taken after vancomycin dosing when it was not); in this situation, a new trough level would not be necessary. Finally, a lack of sufficient staffing during nights and weekends may result in a delay in interpreting results, leading to a missed opportunity for recollection.

Specific observations regarding the timing of the trough indicate other possible concerns and areas for improvement. First, providers must cancel future trough orders concurrently with canceling treatment. Second, at the time of publication of the consensus, some providers were slow adopters of the new guidelines. Finally, the IDSA guidelines state that frequent monitoring for short course, lower intensity therapy, or in patients who are hemodynamically stable is not recommended.3 However, troughs were sometimes measured 2 to 3 times weekly in these patients.

The data and observations lead to the conclusion that although providers may be able to discern between early and correctly timed troughs, they were not consistently adherent to the 2009 IDSA guidelines. It has been shown that pharmacist involvement in the care of Medicare patients with infections in the intensive care unit has led to better clinical and monetary outcomes.8 Therefore, continued efforts by clinical pharmacists to monitor trough timing can be used to improve adherence and decrease costs (each trough is estimated to cost $16.97).

A study conducted in Australia demonstrated that pharmacist-led education of vancomycin dosing and monitoring (including when to measure a trough level) among prescribers and nurses led to improved adherence to the current guidelines and a greater number of patients treated within desired therapeutic ranges.9 In addition, a small study at the Atlanta VAMC in Georgia demonstrated that education of nurses, lab personnel, residents, ward clerks, and pharmacists led to a greater number of appropriately timed vancomycin and aminoglycoside levels.10 Thus, an interdisciplinary review of the current IDSA guidelines and review on the publication of the anticipated updated vancomycin guidelines should be provided to hospital personnel to aid in adoption of current dosing and monitoring recommendations.11

Limitations

This study is limited by the 4-year span of time that it encompassed, which may give a skewed depiction of current practices. Another limitation is that patients with fluctuating renal function were included in the analysis. Instead of selecting a random level order, a trough level order was sometimes selected for these patients. This could lead to a lower actual rate of early troughs. A third limitation is that this was a small and unblinded study. Also, the actual trough levels and the resulting changes that were made to specific regimens were not recorded. Thus, these data do not indicate whether the changes that were made reflected guideline recommendations. Finally, some clinical actions were taken after the dosing interval following the trough. This was often a result of off-hours lab results or waiting on attending physician or infectious disease guidance. These data were not included in the analysis.

Conclusion

Vancomycin troughs were often drawn too early and resulted in an increased rate of trough recollection. In an attempt to improve adherence to the current and the upcoming revised version of the IDSA consensus statement, it is recommended to educate and reeducate providers through interdisciplinary-led review sessions.

Author disclosures

The authors report no actual or potential conflicts of interest with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the U.S. Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review the complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

1. Moellering RC Jr. Vancomycin: a 50-year reassessment. Clin Infect Dis. 2006;42(suppl 1):S3-S4.

2. Levine DP. Vancomycin: a history. Clin Infect Dis. 2006;42(suppl 1):S5-S12.

3. Rybak MJ, Lomaestro BM, Rotschafer JC, et al. Therapeutic monitoring of vancomycin in adults summary of consensus recommendations from the American Society of Health-System Pharmacists, the Infectious Diseases Society of America, and the Society of Infectious Diseases Pharmacists. Pharmacotherapy. 2009;29(11):1275-1279.

4. Davis SL, Scheetz MH, Bosso JA, Goff DA, Rybak MJ. Adherence to the 2009 consensus guidelines for vancomycin dosing and monitoring practices: a cross-sectional survey of U.S. hospitals. Pharmacotherapy. 2013;33(12):1256-1263.

5. Morrison AP, Melanson SEF, Carty MG, Bates DW, Szumita PM, Tanasijevic MJ. What proportion of vancomycin trough levels are drawn too early? Frequency and impact on clinical actions. Am J Clin Pathol. 2012;137(3):472-478.

6. Traugott KA, Maxwell PR, Green K, Frei C, Lewis JS 2nd. Effects of therapeutic drug monitoring criteria in a computerized prescriber-order-entry system on the appropriateness of vancomycin level orders. Am J Health Syst Pharm. 2011;68(4):347-352.

7. Melanson SE, Mijailovic AS, Wright AP, Szumita PM, Bates DW, Tanasijevic MJ. An intervention to improve the timing of vancomycin levels. Am J Clin Pathol. 2013;140(6):801-806.

8. MacLaren R, Bond CA, Martin SJ, Fike D. Clinical and economic outcomes of involving pharmacists in the direct care of critically ill patients with infections. Crit Care Med. 2008;36(12):3184-3189.

9. Phillips CJ, Doan H, Quinn S, Kirkpatrick CM, Gordon DL, Doogue MP. An educational intervention to improve vancomycin prescribing and monitoring. Int J Antimicrob Agents. 2013;41(4):393-394.

10. Carroll DJ, Austin GE, Stajich GV, Miyrhaya RK, Murphy JE, Ward ES. Effect of education on the appropriateness of serum drug concentration determination. Ther Drug Monit. 1992;14(1):81-84.

11. Infectious Diseases Society of America (IDSA). IDSA practice guidelines: antimicrobial agent use. IDSA Website. 2015. http://www.idsociety.org/Antimicrobial_Agents. Accessed November 16, 2015.

Shared Medical Appointments and Their Effects on Achieving Diabetes Mellitus Goals in a Veteran Population

In 2012, 9.3% of the U.S. population had diabetes mellitus (DM).1 According to the American Diabetes Association, in 2012, the total cost of diagnosed DM in the U.S. was $245 billion.2 Diabetes mellitus is a leading cause of blindness, end-stage renal disease, and amputation in the U.S.3 Up to 80% of patients with DM will develop or die of macrovascular disease, such as heart attack or stroke.3

Diabetes mellitus is a chronic disease of epidemic proportion with management complexity that threatens to overwhelm providers in the acute care and primary care settings. Limited specialist availability and increased wait times continue to afflict the VA health care system, prompting efforts to increase health care provider (HCP) access and improve clinic efficiency.4 One of the methods proposed to increase HCP access and maximize clinic efficiency is the shared medical appointment (SMA).5,6

The SMA was designed to improve access and quality of care through enhanced education and support. With the number of people living with chronic diseases on the rise, the current patient-provider model is unrealistic in today’s health care environment. Shared medical appointments offer a unique format for providing evidence-based chronic disease management in which patients and a multidisciplinary team of providers collaborate toward education, discussion, and medication management in a supportive environment.7 Research has suggested that SMAs are a successful way to manage type 2 DM (T2DM).8,9 However, there is uncertainty regarding the optimal model design. The goals of this study were to evaluate whether the diabetes SMA at the Adam Benjamin, Jr. (ABJ) community-based outpatient clinic (CBOC) was an effective practice model for achieving improvements in glycemic control and to use subgroup analyses to elucidate unique characteristics about SMAs that may have been correlated with clinical success. This study may provide valuable information for other facilities considering SMAs.

Overview

The Jesse Brown VAMC (JBVAMC) and the ABJ CBOC implemented a T2DM-focused SMA in 2011. The ABJ CBOC multidisciplinary SMA team consisted of a medical administration service clerk, a registered dietician, a certified DM educator, a registered nurse, a nurse practitioner (NP), and a clinical pharmacy specialist (CPS). This team collaborated to deliver high-quality care to patients with poorly controlled T2DM to improve their glycemic control as well as clinical knowledge of their disease. A private conference room at the ABJ CBOC served as the location for the SMAs. This room was divided into 2 adjacent areas: One area with tables was organized in a semicircle to promote group discussion as well as minimize isolated conversations; the other area had computer terminals to facilitate individualized medication management. Other equipment included a scale for obtaining patient weights and various audio-visual devices.

The ABJ CBOC offered monthly SMAs. The team made several attempts to maximize SMA show rates, as previous studies indicated that low SMA show rates were a barrier to success.3,4,7-9 One review reported no-show rates as high as 70% in certain group visit models.4 About 2 weeks prior to a session, prospective SMA patients received automated and customized preappointment letters. Automated and customized phone call reminders were made to prospective SMA patients a few days before each session. As many as 18 patients participated in a single ABJ SMA.

The ABJ SMAs lasted from 60 to 90 minutes, depending on the level of patient participation and the size of the group. The first half of the SMA was dedicated to a group discussion, which involved the SMA team, the patient, and the patient’s family (if desired). The topic of conversation was typically guided by patient curiosity and knowledge deficits in a spontaneous and free-flowing manner; for this reason, these sessions were considered to be open.

The team also engaged in more structured focused sessions, which limited the spontaneous flow of conversation and narrowed the scope to provide targeted education about various aspects of T2DM care. During focused sessions, services such as dental, optometry, podiatry, MOVE! (a VA self-management weight reduction program), and nutrition also participated. Focused sessions addressed topics such as hypoglycemia management, eating around the holidays, sick-day management of T2DM, grocery shopping, exercise, oral health, eye care, and foot care. The specialty services were encouraged to be creative and interactive during the SMA. Many of these services used supportive literature, demonstrations, diagrams, and props to enrich the educational experience. Group discussion typically lasted 30 to 40 minutes, after which patients met individually with either a CPS or NP for medication management.

Medication management focused on optimizing T2DM therapy (both oral and injectable) to improve glycemic control. Interventions outside of T2DM therapy (eg, cholesterol, hypertension, and other risk reduction modalities) were not made, due to time constraints. Once a patient demonstrated improved working knowledge of T2DM and a clinically significant reduction in glycosylated hemoglobin A1c (A1c), the patient was discharged from SMAs at the discretion of the SMA team. There was no set minimum or maximum duration for the SMAs.

Methods

This study was a retrospective chart review conducted at the JBVAMC and was approved by the institutional review board and the research and development committee. Patient confidentiality was maintained by identifying patients by means other than name or unique identifiers. Protected health information was accessible only by the aforementioned investigators. There was no direct patient contact during this study.

Patient lists were generated from the computerized patient record system (CPRS). Patients were tracked up to 6 months after SMA discharge or until the last SMA in which they participated. The control group was matched according to location, age, glycemic control, and time. The control group never attended an ABJ SMA but may have received regular care through their primary care provider, CPS, or endocrinologist. Prospective control group patients were randomized and reviewed sequentially to obtain the matched cohort.

The study took place at ABJ, an outpatient clinic serving veterans in northwest Indiana and surrounding areas. Inclusion criteria for the SMA group were patients with T2DM, aged ≥ 45 years, with an A1c ≥ 8.5% seen at ABJ for T2DM from May 1, 2011, to June 30, 2013. The control group included patients with T2DM, aged ≥ 45 years, with an A1c > 9% who never attended SMAs but may have received regular care at ABJ during the study period. The SMA group’s inclusion criteria threshold for A1c was lower in order to maximize sample size. The control group’s inclusion criteria threshold for A1c was higher due to use of a default reminder report called “A1c > 9%” to generate patient lists. Patients were excluded from the study if they did not meet inclusion criteria.

Baseline datum was the most recent parameter available in CPRS prior to enrollment. The endpoint datum was the parameter nearest the time of SMA discharge or the first available parameter within 6 months from the date of discharge. In the control group, the baseline datum was the initial parameter during the study period and the endpoint datum was the closest measure to 4 months after baseline. Four months was chosen to allow for at least 1 A1c measurement during the study period. In addition, it was estimated (prior to collecting any data) that 4 months was the average time a patient participated in SMAs. Serial A1c measurements were defined as values obtained at SMA discharge and 3- and 6-months postdischarge. These parameters were used to evaluate the sustainability of improvements in glycemic control. All values falling outside of these defined parameters were excluded.
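The control-group endpoint rule described above (the measurement closest to 4 months after baseline) can be sketched as follows. This is an illustrative sketch only: the function name and the tuple layout are hypothetical, "4 months" is approximated as 120 days, and the A1c values in the example are invented for demonstration.

```python
from datetime import date, timedelta

TARGET_OFFSET = timedelta(days=120)  # assumption: "4 months" taken as 120 days


def pick_control_endpoint(baseline_date, measurements):
    """Return the (date, a1c) pair closest to 4 months after baseline.

    measurements: list of (date, a1c) tuples; values on or before the
    baseline date are ignored. Returns None if nothing qualifies.
    """
    target = baseline_date + TARGET_OFFSET
    later = [m for m in measurements if m[0] > baseline_date]
    if not later:
        return None
    # Smallest absolute distance in time from the 4-month target.
    return min(later, key=lambda m: abs(m[0] - target))


# Hypothetical measurements for one control patient.
baseline = date(2012, 1, 10)
values = [
    (date(2012, 2, 15), 9.8),
    (date(2012, 5, 20), 9.1),
    (date(2012, 9, 1), 8.9),
]
print(pick_control_endpoint(baseline, values))
```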

The data analysis compared A1c change from baseline to endpoint for the SMA and control groups. Data collection included baseline characteristics, SMA show rate, number of SMA patients seen by a CPS or NP, number and type of SMA interventions made by a CPS or NP, and the number and type of non-SMA interventions made during the study period. Intervention types were medications: added, discontinued, or titrated; and other, defined as referrals made to specialty services (such as dental, optometry, and podiatry).

Secondary endpoints included the number of SMAs and glycemic improvement, SMA format style (open vs focused session) and glycemic improvement, SMA provider (CPS vs NP) and glycemic improvement, the change in A1c stratified by baseline A1c (A1c ≥ 10% vs < 10%), the change in actual body weight (ABW) and body mass index (BMI), and maintenance of A1c (3 and 6 months postdischarge).

The primary endpoint was evaluated using a 2-sample Student t test. Secondary endpoints were evaluated using the independent t test. Statistical significance was defined as P < .05.
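As an illustration of the test named above, the following minimal sketch computes the pooled-variance (Student) 2-sample t statistic and its degrees of freedom. The function name and the sample values are hypothetical and are not study data; converting the statistic to a P value would require a t distribution, which is outside the scope of this sketch.

```python
import math
from statistics import mean, variance


def student_t(sample_a, sample_b):
    """Pooled-variance 2-sample t statistic and degrees of freedom."""
    n_a, n_b = len(sample_a), len(sample_b)
    df = n_a + n_b - 2
    # Pooled variance weights each sample variance by its degrees of freedom.
    pooled_var = ((n_a - 1) * variance(sample_a)
                  + (n_b - 1) * variance(sample_b)) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t_stat = (mean(sample_a) - mean(sample_b)) / standard_error
    return t_stat, df


# Hypothetical A1c changes (percentage points) for two small groups.
sma_change = [-2.1, -1.4, -0.9, -1.8, -1.2]
control_change = [-0.7, -0.2, -1.0, -0.5, -0.6]
t_stat, df = student_t(sma_change, control_change)
print(f"t = {t_stat:.2f}, df = {df}")
```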

Results

A total of 129 unique patients were scheduled for SMAs, 62 of whom met inclusion criteria and were included in the SMA group. During enrollment, 67 patients were excluded: 55 never participated in SMAs, 6 had baseline A1c values < 8.5%, 4 had insufficient data, and 2 were aged < 45 years. A total of 29 SMAs were conducted during the study period, and patients attended an average of 3.15 ± 2.14 (SD) SMAs. The average attendance at each SMA was 7.1 ± 2.62 (SD) patients. For the control group, 754 unique patients were identified and randomized. A total of 90 charts were sequentially reviewed in order to obtain the 62 patients for the control group.

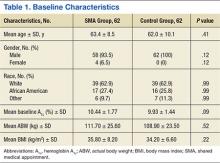

Baseline characteristics were balanced between groups. However, there were more women in the SMA group vs the control group (Table 1). Within the control group, there were a total of 107 appointments that addressed T2DM, which averaged 1.72 ± 1.51 (SD) appointments per patient. The total number of interventions made in the SMA group was 192: 64.6% (124) by a CPS and 35.4% (68) by an NP. For the CPS, the most frequent intervention was medication titration (69.5%), followed by other (23.5%), medication addition (4%), and medication discontinuation (3%). Of note, 53.2% (33) of the SMA patients were seen an average of 1.2 times by non-SMA providers. The SMA patients had a total of 45 non-SMA interventions (0.73 per patient) during the study period.

For the primary endpoint, the SMA group had a 1.48% ± 0.02 (SD) reduction in A1c compared with a 0.6% ± 0.02 (SD) decrease in the control group (P = .01). When evaluating mean changes in A1c by the number of SMAs attended, it was noted that participation in ≥ 6 SMAs led to the greatest reduction in A1c of 2.08%. In the SMA group, it was noted that patients with higher A1c values at baseline demonstrated greater improvements in glycemic control compared with patients with lower baseline A1c values. The mean change in A1c, stratified by baseline A1c, was -2.26% for those with baseline A1c values ≥ 10% and -0.87% for those with baseline A1c values < 10%.

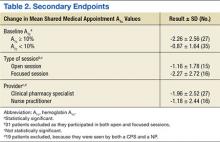

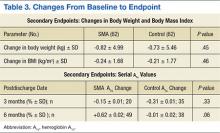

In evaluating the format style (open vs focused session), it was observed that participation in focused sessions led to greater improvements in glycemic control. Furthermore, when stratified by provider, greater improvements in glycemic control were demonstrated when medication management was completed by a CPS vs an NP (Table 2). The average number of interventions per SMA patient was 3.1 ± 2.22 (SD). For the control group, the total number of interventions made was 86, with an average of 1.37 ± 1.51 (SD) per patient. The overall show rate was 49% ± 16 (SD), 52% ± 16 (SD) for open visits, and 46% ± 15 (SD) for focused visits. The mean change in ABW and BMI from baseline to endpoint was no different between the SMA and control groups (Table 3). The SMA group participants demonstrated a decrease in A1c at 3 months postdischarge, and a moderate increase in A1c was noted at 6 months postdischarge.

Discussion

Shared medical appointments provide an effective alternative to standards of care in order to obtain improvements in glycemic control. Consistent with previous studies, this study reported significant improvements in glycemic control in the SMA group vs the control group. This study also elucidated unique characteristics about SMAs that may have been correlated with clinical success.

Although the greatest improvements in glycemic control were noted for those who participated in ≥ 6 SMAs, it was observed that participation in only 1 SMA also led to improvements. For a site with limited staff and a high volume of patients waiting to participate in SMAs, it may be mutually beneficial to offer only 1 SMA per patient. In addition, patients with ≥ 10% A1c at baseline demonstrated greater improvements in glycemic control compared to those with < 10% A1c at baseline. The reasons the higher baseline A1c subgroup responded to interventions more robustly are unclear and likely multifactorial. Nonetheless, factors such as psychosocial influences (eg, peer pressure to get healthy) may have increased motivation to prevent complications and improved medication adherence in the setting of closer follow-up. Additionally, hyperresponsiveness to drug therapy may have played a role. Regardless, for new SMA programs interested in making an immediate impact, it may be advantageous to initially select patients with very poorly controlled DM.

A unique aspect of the ABJ SMA was the variety of focused sessions offered. Previous studies did not demonstrate such a variety of focused sessions, nor did they evaluate the impact of a focused visit on the patient’s T2DM control. Participation in focused ABJ SMA sessions may have led to improved T2DM control, which may be attributed to the value patients assigned to specialty care and an increased motivation to get healthy.

Another factor that may have led to improved T2DM control was CPS involvement with medication management. The presence of an NP was highly valued, both from a group discussion and medication management standpoint; still, it is a good idea to involve a CPS who has a strong command of DM pharmacotherapy. One shortcoming of this SMA program was the inability for patients to maintain glycemic improvements 6 months after discharge. This pitfall was likely the result of suboptimal coordination of care after SMA discharge and may be avoided by asking the medical administration service clerk to promptly schedule discharged SMA patients for a general medicine clinic T2DM follow-up.

The SMA patients had more T2DM interventions within the same time frame compared with the control patients. Although not causative, the increased number of interventions in addition to the bolstered support of the SMA may have correlated with glycemic improvements.

An important finding of this study was the SMA show rate and how it compared with attendance rates found in other group models. The favorable ABJ SMA show rate could have been due to the rigorous attention paid to reminder letters and phone calls. The literature has not established a standard approach to increasing SMA show rates; however, the current data suggest that increased reminders may have increased attendance.

Limitations

This study had several limitations. The external validity was weakened by the modest sample size and the homogeneous baseline characteristics of those enrolled. Another limitation was inconsistent documentation of laboratory parameters. The inability to obtain A1c values exactly at enrollment and discharge could have potentially skewed the results. In addition, incomplete documentation of interventions for dual-care patients (ie, those who obtained care outside of the VA) was an unavoidable challenge. Last, this study did not perform an assessment of SMA patient satisfaction, cost-benefit, or safety.

Conclusion

The ABJ SMA was an effective addition to standards of care in order to achieve improvements in glycemic control in a veteran population with poorly controlled T2DM. Furthermore, the data suggest that a successful program should be multidisciplinary, select poorly controlled patients, offer focused sessions, have a CPS participate in medication management, and encourage patients to complete ≥ 6 sessions. Future studies should be conducted to include more diverse patients to see whether the efficacy of this SMA format is maintained.

A safety analysis should also be conducted to ensure that the SMA format is not only effective, but also a safe means to manage medical conditions. In addition, the scope of the ABJ SMAs should be expanded to allow for evaluation of other diseases. An evaluation of patient satisfaction and cost-benefit could provide additional support for the implementation of SMAs, as improvements in quality of life and cost savings are endpoints to be desired.

Author disclosures

The authors report no actual or potential conflicts of interest with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the U.S. Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

1. Centers for Disease Control and Prevention. National Diabetes Statistics Report: Estimate of Diabetes and Its Burden in the United States, 2014. Atlanta, GA: U.S. Department of Health and Human Services; 2014.

2. American Diabetes Association. Economic costs of diabetes in the U.S. in 2012. Diabetes Care. 2013;36(4):1033-1046.

3. Kirsh SR, Watts S, Pascuzzi K, et al. Shared medical appointments based on the chronic care model: a quality improvement project to address the challenges of patients with diabetes with high cardiovascular risk. Qual Saf Health Care. 2007;16(5):349-353.

4. Jaber R, Braksmajer A, Trilling JS. Group visits: a qualitative review of current research. J Am Board Fam Med. 2006;19(3):276-290.

5. Klein S. The Veterans Health Administration: implementing patient-centered medical homes in the nation's largest integrated delivery system. Commonwealth Fund Website. http://www.commonwealthfund.org/publications/case-studies/2011/sep/va-medical-homes. Published September 2011. Accessed November 11, 2015.

6. U.S. Department of Veterans Affairs. VA Shared Medical Appointments for Patients With Diabetes: Maximizing Patient & Provider Expertise to Strengthen Patient Management. Washington, DC: U.S. Department of Veterans Affairs; 2008.

7. Bronson DL, Maxwell RA. Shared medical appointments: increasing patient access without increasing physician hours. Cleve Clin J Med. 2004;71(5):369-370, 372, 374 passim.

8. Cohen LB, Taveira T, Khatana SA, Dooley AG, Pirraglia PA, Wu WC. Pharmacist-led shared medical appointments for multiple cardiovascular risk reduction in patients with type 2 diabetes. Diabetes Educ. 2011;37(6):801-812.

9. Naik AD, Palmer N, Petersen NJ, et al. Comparative effectiveness of goal setting in diabetes mellitus group clinics: randomized clinical trial. Arch Intern Med. 2011;171(5):453-459.

In 2012, 9.3% of the U.S. population had diabetes mellitus (DM).1 According to the American Diabetes Association, in 2012, the total cost of diagnosed DM in the U.S. was $245 billion.2 Diabetes mellitus is a leading cause of blindness, end-stage renal disease, and amputation in the U.S.3 Up to 80% of patients with DM will develop or die of macrovascular disease, such as heart attack or stroke.3

Diabetes mellitus is a chronic disease of epidemic proportion with management complexity that threatens to overwhelm providers in the acute care and primary care settings. Limited specialist availability and increased wait times continue to afflict the VA health care system, prompting efforts to increase health care provider (HCP) access and improve clinic efficiency.4 One of the methods proposed to increase HCP access and maximize clinic efficiency is the shared medical appointment (SMA).5,6

The SMA was designed to improve access and quality of care through enhanced education and support. With the number of people living with chronic diseases on the rise, the current patient-provider model is unrealistic in today’s health care environment. Shared medical appointments offer a unique format for providing evidence-based chronic disease management in which patients and a multidisciplinary team of providers collaborate toward education, discussion, and medication management in a supportive environment.7 Research has suggested that SMAs are a successful way to manage type 2 DM (T2DM).8,9 However, there is uncertainty regarding the optimal model design. The goals of this study were to evaluate whether the diabetes SMA at the Adam Benjamin, Jr. (ABJ) community-based outpatient clinic (CBOC) was an effective practice model for achieving improvements in glycemic control and to use subgroup analyses to elucidate unique characteristics about SMAs that may have been correlated with clinical success. This study may provide valuable information for other facilities considering SMAs.

Overview

The Jesse Brown VAMC (JBVAMC) and the ABJ CBOC implemented a T2DM-focused SMA in 2011. The ABJ CBOC multidisciplinary SMA team consisted of a medical administration service clerk, a registered dietician, a certified DM educator, a registered nurse, a nurse practitioner (NP), and a clinical pharmacy specialist (CPS). This team collaborated to deliver high-quality care to patients with poorly controlled T2DM to improve their glycemic control as well as clinical knowledge of their disease. A private conference room at the ABJ CBOC served as the location for the SMAs. This room was divided into 2 adjacent areas: One area with tables was organized in a semicircle to promote group discussion as well as minimize isolated conversations; the other area had computer terminals to facilitate individualized medication management. Other equipment included a scale for obtaining patient weights and various audio-visual devices.

The ABJ CBOC offered monthly SMAs. The team made several attempts to maximize SMA show rates, as previous studies indicated that low SMA show rates were a barrier to success.3,4,7-9 One review reported no-show rates as high as 70% in certain group visit models.4 About 2 weeks prior to a session, prospective SMA patients received automated and customized preappointment letters. Automated and customized phone call reminders were made to prospective SMA patients a few days before each session. As many as 18 patients participated in a single ABJ SMA.

The ABJ SMAs lasted from 60 to 90 minutes, depending on the level of patient participation and the size of the group. The first half of the SMA was dedicated to a group discussion, which involved the SMA team, the patient, and the patient’s family (if desired). The topic of conversation was typically guided by patient curiosity and knowledge deficits in a spontaneous and free-flowing manner; for this reason, these sessions were considered to be open.

The team also engaged in more structured focused sessions, which limited the spontaneous flow of conversation and narrowed the scope to provide targeted education about various aspects of T2DM care. During focused sessions, services such as dental, optometry, podiatry, MOVE! (a VA self-management weight reduction program), and nutrition also participated. Focused sessions addressed topics such as hypoglycemia management, eating around the holidays, sick-day management of T2DM, grocery shopping, exercise, oral health, eye care, and foot care. The specialty services were encouraged to be creative and interactive during the SMA. Many of these services used supportive literature, demonstrations, diagrams, and props to enrich the educational experience. Group discussion typically lasted 30 to 40 minutes, after which patients met individually with either a CPS or NP for medication management.

Medication management focused on optimizing T2DM therapy (both oral and injectable) to improve glycemic control. Interventions outside of T2DM therapy (eg, cholesterol, hypertension, and other risk reduction modalities) were not made because of time constraints. Once a patient demonstrated improved working knowledge of T2DM and a clinically significant reduction in their glycosylated hemoglobin A1c (A1c), they were discharged from SMAs at the discretion of the SMA team. There was no set minimum or maximum duration for the SMAs.

Methods

This study was a retrospective chart review conducted at the JBVAMC and was approved by the institutional review board and the research and development committee. Patient confidentiality was maintained by identifying patients by means other than name or unique identifiers. Protected health information was accessible only to the study investigators. There was no direct patient contact during this study.

Patient lists were generated from the computerized patient record system (CPRS). Patients were tracked up to 6 months after SMA discharge or until the last SMA in which they participated. The control group was matched according to location, age, glycemic control, and time. The control group never attended an ABJ SMA but may have received regular care through their primary care provider, CPS, or endocrinologist. Prospective control group patients were randomized and reviewed sequentially to obtain the matched cohort.

The study took place at ABJ, an outpatient clinic serving veterans in northwest Indiana and surrounding areas. Inclusion criteria for the SMA group were patients with T2DM, aged ≥ 45 years, with an A1c ≥ 8.5% seen at ABJ for T2DM from May 1, 2011, to June 30, 2013. The control group included patients with T2DM, aged ≥ 45 years, with an A1c > 9% who never attended SMAs but may have received regular care at ABJ during the study period. The SMA group’s inclusion criteria threshold for A1c was lower in order to maximize sample size. The control group’s inclusion criteria threshold for A1c was higher due to use of a default reminder report called “A1c > 9%” to generate patient lists. Patients were excluded from the study if they did not meet inclusion criteria.

The baseline datum was the most recent parameter available in CPRS prior to enrollment. The endpoint datum was the parameter nearest the time of SMA discharge or the first available parameter within 6 months from the date of discharge. In the control group, the baseline datum was the initial parameter during the study period and the endpoint datum was the closest measure to 4 months after baseline. Four months was chosen to allow for at least 1 A1c measurement during the study period. In addition, it was estimated (prior to collecting any data) that 4 months was the average time a patient participated in SMAs. Serial A1c measurements were defined as values obtained at SMA discharge and at 3 and 6 months postdischarge. These parameters were used to evaluate the sustainability of improvements in glycemic control. All values falling outside of these defined parameters were excluded.

The data analysis compared A1c change from baseline to endpoint for the SMA and control groups. Data collection included baseline characteristics, SMA show rate, number of SMA patients seen by a CPS or NP, number and type of SMA interventions made by a CPS or NP, and the number and type of non-SMA interventions made during the study period. Intervention types were medications: added, discontinued, or titrated; and other, defined as referrals made to specialty services (such as dental, optometry, and podiatry).

Secondary endpoints included the number of SMAs and glycemic improvement, SMA format style (open vs focused session) and glycemic improvement, SMA provider (CPS vs NP) and glycemic improvement, the change in A1c stratified by baseline A1c (A1c ≥ 10% vs < 10%), the change in actual body weight (ABW) and body mass index (BMI), and maintenance of A1c (3 and 6 months postdischarge).

The primary endpoint was evaluated using a 2-sample Student t test. Secondary endpoints were evaluated using the independent t test. Statistical significance was defined as P < .05.
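The comparison described above can be illustrated with a minimal sketch of a 2-sample Student t statistic. It assumes the pooled (equal-variance) form of the test, which the article does not specify. The function name `pooled_t_statistic` and the A1c changes are hypothetical and are not study data.

```python
import math
import statistics


def pooled_t_statistic(group_a, group_b):
    """Return (t, degrees_of_freedom) for a 2-sample Student t test.

    Assumes equal variances in both groups (pooled-variance form).
    """
    n_a, n_b = len(group_a), len(group_b)
    mean_a, mean_b = statistics.mean(group_a), statistics.mean(group_b)
    # statistics.variance returns the sample variance (n - 1 denominator).
    var_a, var_b = statistics.variance(group_a), statistics.variance(group_b)
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * var_a + (n_b - 1) * var_b) / df
    std_err = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / std_err, df


# Hypothetical A1c changes in percentage points, NOT study data.
sma_change = [-2.1, -1.4, -0.9, -1.8, -1.2]
control_change = [-0.7, -0.3, -1.0, -0.5, -0.6]

t_stat, df = pooled_t_statistic(sma_change, control_change)
print(f"t = {t_stat:.2f}, df = {df}")
```

The resulting t statistic would then be compared against the t distribution with the returned degrees of freedom to obtain a P value, which is judged against the P < .05 threshold stated above.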

Results

A total of 129 unique patients were scheduled for SMAs, 62 of whom met inclusion criteria and were included in the SMA group. During enrollment, 67 patients were excluded: 55 never participated in SMAs, 6 had baseline A1c values < 8.5%, 4 had insufficient data, and 2 were aged < 45 years. A total of 29 SMAs were conducted during the study period, and patients attended an average of 3.15 ± 2.14 (SD) SMAs. The average attendance at each SMA was 7.1 ± 2.62 (SD) patients. For the control group, 754 unique patients were identified and randomized. A total of 90 charts were sequentially reviewed in order to obtain the 62 patients for the control group.
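The enrollment counts reported above can be checked with a few lines of arithmetic. The sketch below uses only the figures given in this paragraph; the variable names are illustrative.

```python
# Enrollment figures as reported in the Results paragraph.
scheduled = 129
exclusions = {
    "never participated in SMAs": 55,
    "baseline A1c < 8.5%": 6,
    "insufficient data": 4,
    "aged < 45 years": 2,
}

total_excluded = sum(exclusions.values())
included = scheduled - total_excluded

# Control group: charts reviewed vs charts retained for the matched cohort.
charts_reviewed = 90
control_included = 62
charts_not_matched = charts_reviewed - control_included

print(total_excluded, included, charts_not_matched)
```

The exclusion reasons sum to the 67 excluded patients, which leaves the 62 patients in the SMA group.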

Baseline characteristics were balanced between groups, except that there were more women in the SMA group than in the control group (Table 1). Within the control group, there were a total of 107 appointments that addressed T2DM, which averaged 1.72 ± 1.51 (SD) appointments per patient. The total number of interventions made in the SMA group was 192: 64.6% (124) by a CPS and 35.4% (68) by an NP. For the CPS, the most frequent intervention was medication titration (69.5%), followed by other (23.5%), medication addition (4%), and medication discontinuation (3%). Of note, 53.2% (33) of the SMA patients were seen an average of 1.2 times by non-SMA providers. The SMA patients had a total of 45 non-SMA interventions (0.73 per patient) during the study period.
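As a rough check on the proportions in this paragraph, the sketch below recomputes the provider shares and per-patient rates from the reported counts. The variable names are illustrative, and the group size of 62 is taken from the enrollment figures.

```python
# Counts as reported for the SMA group.
sma_patients = 62
cps_interventions = 124
np_interventions = 68
non_sma_interventions = 45
seen_by_non_sma_provider = 33

total_interventions = cps_interventions + np_interventions

# Share of SMA interventions made by each provider type, in percent.
cps_share = 100 * cps_interventions / total_interventions
np_share = 100 * np_interventions / total_interventions

# Per-patient figures for care received outside the SMA.
non_sma_per_patient = non_sma_interventions / sma_patients
pct_seen_elsewhere = 100 * seen_by_non_sma_provider / sma_patients

print(f"CPS {cps_share:.1f}%, NP {np_share:.1f}%")
print(f"Non-SMA interventions per patient: {non_sma_per_patient:.2f}")
print(f"Seen by non-SMA providers: {pct_seen_elsewhere:.1f}%")
```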

For the primary endpoint, the SMA group had a 1.48% ± 0.02 (SD) reduction in A1c compared with a 0.6% ± 0.02 (SD) decrease in the control group (P = .01). When evaluating mean changes in A1c by the number of SMAs attended, it was noted that participation in ≥ 6 SMAs led to the greatest reduction in A1c of 2.08%. In the SMA group, it was noted that patients with higher A1c values at baseline demonstrated greater improvements in glycemic control compared with patients with lower baseline A1c values. The mean change in A1c, stratified by baseline A1c, was -2.26% for those with baseline A1c values ≥ 10% and -0.87% for those with baseline A1c values < 10%.

In evaluating the format style (open vs focused session), it was observed that participation in focused sessions led to greater improvements in glycemic control. Furthermore, when stratified by provider, greater improvements in glycemic control were demonstrated when medication management was completed by a CPS vs an NP (Table 2). The average number of interventions per SMA patient was 3.1 ± 2.22 (SD). For the control group, the total number of interventions made was 86, with an average of 1.37 ± 1.51 (SD) per patient. The overall show rate was 49% ± 16 (SD), 52% ± 16 (SD) for open visits, and 46% ± 15 (SD) for focused visits. The mean change in ABW and BMI from baseline to endpoint was no different between the SMA and control groups (Table 3). The SMA group participants demonstrated a decrease in A1c at 3 months postdischarge, and a moderate increase in A1c was noted at 6 months postdischarge.

Discussion

Shared medical appointments provide an effective alternative to standards of care in order to obtain improvements in glycemic control. Consistent with previous studies, this study reported significant improvements in glycemic control in the SMA group vs the control group. This study also elucidated unique characteristics about SMAs that may have been correlated with clinical success.

Although the greatest improvements in glycemic control were noted for those who participated in ≥ 6 SMAs, it was observed that participation in only 1 SMA also led to improvements. For a site with limited staff and a high volume of patients waiting to participate in SMAs, it may be mutually beneficial to offer only 1 SMA per patient. In addition, patients with ≥ 10% A1c at baseline demonstrated greater improvements in glycemic control compared with those with < 10% A1c at baseline. The reasons the higher baseline A1c subgroup responded to interventions more robustly are unclear and likely multifactorial. Nonetheless, factors such as psychosocial influences (eg, peer pressure to get healthy) may have increased motivation to prevent complications and improved medication adherence in the setting of closer follow-up. Additionally, hyperresponsiveness to drug therapy may have played a role. Regardless, for new SMA programs interested in making an immediate impact, it may be advantageous to initially select patients with very poorly controlled DM.

A unique aspect of the ABJ SMA was the variety of focused sessions offered. Previous studies did not demonstrate such a variety of focused sessions, nor did they evaluate the impact of a focused visit on the patient’s T2DM control. Participation in focused ABJ SMA sessions may have led to improved T2DM control, which may be attributed to the value patients assigned to specialty care and an increased motivation to get healthy.
