
How coffee and cigarettes can affect the response to psychopharmacotherapy

When a patient who smokes enters a tobacco-free medical facility and has access to caffeinated beverages, he or she might experience toxicity from many medications and from caffeine. Similarly, if a patient is discharged from a smoke-free environment on a newly adjusted medication regimen and resumes smoking or caffeine consumption, changes in enzyme activity might reduce the therapeutic efficacy of prescribed medications. These effects result from changes in the hepatic cytochrome P450 (CYP) enzyme system.

Taking a careful history of tobacco and caffeine use, and knowing the effects that these substances will have on specific medications, will help guide treatment and management decisions.

The role of CYP enzymes

CYP hepatic enzymes metabolize drugs and a variety of environmental agents into more water-soluble compounds that are excreted in urine. CYP1A2 metabolizes 20% of drugs handled by the CYP system and accounts for 13% of all the CYP enzymes expressed in the liver. The wide interindividual variation in CYP1A2 activity is influenced by a combination of genetic, epigenetic, ethnic, and environmental variables.1

Influence of tobacco on CYP

The polycyclic aromatic hydrocarbons in tobacco smoke induce the hepatic enzymes CYP1A2 and CYP2B6.2 Smokers exhibit increased activity of these enzymes, which results in faster clearance of many drugs, lower blood concentrations, and diminished clinical response. The Table lists psychotropic medicines that are metabolized by CYP1A2 and CYP2B6. Co-administration of these substrates could slow the elimination of other drugs also metabolized by CYP1A2, because the drugs compete for the same enzyme. Nicotine, whether from tobacco or from nicotine replacement therapy, does not play a role in inducing CYP enzymes.

Psychiatric patients smoke at a higher rate than the general population.2 One study found that approximately 70% of patients with schizophrenia and as many as 45% of those with bipolar disorder smoke enough cigarettes (7 to 20 a day) to induce CYP1A2 and CYP2B6 activity.2 Patients who smoke and are given clozapine, haloperidol, or olanzapine have lower serum concentrations than non-smokers; in fact, the clozapine level can be reduced as much as 2.4-fold.2-5 Consequently, patients can experience a diminished clinical response to these 3 psychotropics.3

The turnover time for CYP1A2 is rapid (approximately 3 days), and a new steady-state level of CYP1A2 activity is reached after approximately 1 week.4 This is important to remember when managing inpatients in a smoke-free facility: as induction wears off, clearance of CYP1A2 substrates falls, so patients could experience drug toxicity during acute hospitalization if the outpatient dosage is maintained.5

When patients resume smoking after being discharged on a stabilized dosage of any of the medications listed in the Table, enzyme activity rebounds to its previous, induced level and might reduce the drug level, potentially leading to inadequate clinical response.

Caffeine and other substances

Asking about the patient’s caffeine intake is necessary because coffee consumption is prevalent among smokers, and caffeine is metabolized by CYP1A2. Smokers need to consume as much as 4 times the amount of caffeine that non-smokers consume to achieve a similar caffeine serum concentration.2 Caffeine also can form an insoluble precipitate with some antipsychotic medications in the gut, which decreases their absorption. When forced smoking cessation during hospitalization removes smoking-related induction of CYP1A2, continued caffeine consumption at the patient’s usual level could lead to caffeine toxicity.4,5
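The toxicity risk described above follows from a standard pharmacokinetic relationship: at steady state, the average concentration equals the bioavailable dosing rate divided by clearance. A minimal sketch, assuming linear kinetics, unchanged absorption, and full reversal of induction after cessation (individual patients will vary): if smoking raises clearance by some factor, stopping smoking while intake stays the same raises the steady-state level by that same factor. The function names and relative units are illustrative, not clinical values.

```python
def steady_state_concentration(dose_rate: float, clearance: float,
                               bioavailability: float = 1.0) -> float:
    """Average steady-state concentration under linear kinetics: Css = F * R / CL.
    Units are whatever the caller uses consistently (eg, mg/h and L/h give mg/L)."""
    if clearance <= 0:
        raise ValueError("clearance must be positive")
    return bioavailability * dose_rate / clearance


def fold_rise_after_cessation(induction_fold: float) -> float:
    """Fold-rise in Css when intake is unchanged and clearance falls from the
    induced (smoking) level back to baseline; assumes induction fully reverses."""
    dose_rate = 1.0                 # unchanged intake, relative units
    induced_cl = induction_fold     # smoker clearance relative to baseline
    baseline_cl = 1.0               # non-smoker clearance
    before = steady_state_concentration(dose_rate, induced_cl)
    after = steady_state_concentration(dose_rate, baseline_cl)
    return after / before


# Caffeine: up to 4x the intake for a similar level implies clearance up to ~4x baseline
print(fold_rise_after_cessation(4.0))            # 4.0
# Clozapine: levels up to 2.4-fold lower in smokers
print(round(fold_rise_after_cessation(2.4), 2))  # 2.4
```

Because the relationship is proportional, the same reasoning runs in reverse at discharge: resuming smoking on an unchanged dosage lowers the level by the induction factor.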

Other common inducers of CYP1A2 are insulin, cabbage, cauliflower, broccoli, and charcoal-grilled meat. Conversely, cumin and turmeric inhibit CYP1A2 activity, which might help explain differences in drug tolerance across ethnic groups. Additionally, certain genetic polymorphisms, which have specific ethnic distributions, alter the potential for tobacco smoke to induce CYP1A2.6

Some of these polymorphisms can be genotyped for clinical application.3

Asking about a patient’s tobacco and caffeine use and understanding their interactions with specific medications provides guidance when prescribing antipsychotic medications and adjusting dosage for inpatients and during clinical follow-up care.

Disclosures

The authors report no financial relationships with any company whose products are mentioned in this article or with manufacturers of competing products.

1. Wang B, Zhou SF. Synthetic and natural compounds that interact with human cytochrome P450 1A2 and implications in drug development. Curr Med Chem. 2009;16(31):4066-4218.

2. Lucas C, Martin J. Smoking and drug interactions. Aust Prescr. 2013;36(3):102-104.

3. Eap CB, Bender S, Jaquenoud Sirot E, et al. Nonresponse to clozapine and ultrarapid CYP1A2 activity: clinical data and analysis of CYP1A2 gene. J Clin Psychopharmacol. 2004;24(2):214-219.

4. Faber MS, Fuhr U. Time response of cytochrome P450 1A2 activity on cessation of heavy smoking. Clin Pharmacol Ther. 2004;76(2):178-184.

5. Berk M, Ng F, Wang WV, et al. Going up in smoke: tobacco smoking is associated with worse treatment outcomes in mania. J Affect Disord. 2008;110(1-2):126-134.

6. Zhou SF, Yang LP, Zhou ZW, et al. Insights into the substrate specificity, inhibitors, regulation, and polymorphisms and the clinical impact of human cytochrome P450 1A2. AAPS J. 2009;11(3):481-494.

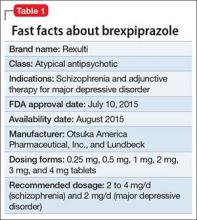

Brexpiprazole for schizophrenia and as adjunct for major depressive disorder

Brexpiprazole, FDA-approved in July 2015 to treat schizophrenia and as an adjunct for major depressive disorder (MDD) (Table 1), has shown efficacy in 2 phase-III acute trials for each indication.1-6 Although brexpiprazole is a dopamine D2 partial agonist, it differs from aripiprazole, the other available D2 partial agonist, because it is more potent at serotonin 5-HT1A and 5-HT2A receptors and displays less intrinsic activity at D2 receptors,7 which could mean better tolerability.

Clinical implications

Schizophrenia is heterogeneous, and individual response and tolerability to antipsychotics vary greatly8; therefore, new drug options are useful. For MDD, before the availability of brexpiprazole, only 3 antipsychotics were FDA-approved for adjunctive use with antidepressant therapy9; brexpiprazole represents another agent for patients whose depressive symptoms persist after standard antidepressant treatment.

Variables that limit the use of antipsychotics include extrapyramidal symptoms (EPS), akathisia, sedation/somnolence, weight gain, metabolic abnormalities, and hyperprolactinemia. If post-marketing studies and clinical experience confirm that brexpiprazole has an overall favorable side-effect profile regarding these tolerability obstacles, brexpiprazole would potentially have advantages over some other available agents, including aripiprazole.

How it works

In addition to subnanomolar binding affinity (Ki < 1 nM) for dopamine D2 receptors, where it acts as a partial agonist, brexpiprazole exhibits similar binding affinities for serotonin 5-HT1A (partial agonist), 5-HT2A (antagonist), and adrenergic α1B (antagonist) and α2C (antagonist) receptors.7

Brexpiprazole also has high affinity (Ki < 5 nM) for dopamine D3 (partial agonist), serotonin 5-HT2B (antagonist) and 5-HT7 (antagonist), and adrenergic α1A (antagonist) and α1D (antagonist) receptors. It has moderate affinity for histamine H1 receptors (Ki = 19 nM, antagonist) and low affinity for muscarinic M1 receptors (Ki > 1000 nM, antagonist).

Brexpiprazole’s pharmacodynamic profile differs from that of other available antipsychotics, including aripiprazole. Whether this translates to meaningful differences in efficacy and tolerability will depend on the outcomes of specifically designed clinical trials as well as “real-world” experience. Animal models have suggested amelioration of schizophrenia-like behavior, depression-like behavior, and anxiety-like behavior with brexpiprazole.6

Pharmacokinetics

At 91 hours, brexpiprazole’s half-life is relatively long; a steady-state concentration therefore is attained in approximately 2 weeks.1 In the phase-III clinical trials, brexpiprazole was titrated to target dosages, and therefore the product label recommends the same. Brexpiprazole can be administered with or without food.
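Why roughly 2 weeks: with regular dosing and first-order elimination, the fraction of the eventual steady-state level reached after time t is 1 − 2^(−t/t½). A minimal sketch, assuming linear kinetics (consistent with the dose-proportional exposure noted below) and using only the 91-hour half-life; it illustrates accumulation at a fixed dosage and does not model the labeled titration schedule. The function name is hypothetical.

```python
def fraction_of_steady_state(hours: float, half_life_h: float) -> float:
    """Fraction of the eventual steady-state level reached after `hours` of
    regular dosing at a fixed dosage, assuming first-order elimination."""
    return 1.0 - 2.0 ** (-hours / half_life_h)


HALF_LIFE_H = 91.0  # brexpiprazole half-life cited in the text

for days in (7, 14, 19):
    pct = 100 * fraction_of_steady_state(days * 24, HALF_LIFE_H)
    print(f"day {days}: {pct:.0f}% of steady state")
```

This prints roughly 72%, 92%, and 97%: about 92% of steady state is reached at 2 weeks, and about 5 half-lives (19 days) bring the level within a few percent of it.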

In a study of brexpiprazole excretion, after a single oral dose of [14C]-labeled brexpiprazole, approximately 25% and 46% of the administered radioactivity was recovered in urine and feces, respectively. Less than 1% of the dose was excreted unchanged in urine, and approximately 14% of the oral dose was recovered unchanged in feces.

Exposure, as measured by maximum concentration and area under the concentration-time curve, is dose proportional.

Metabolism of brexpiprazole is mediated principally by cytochrome P450 (CYP) 3A4 and CYP2D6. Based on in vitro data, brexpiprazole shows little or no inhibition of CYP450 isozymes.

Efficacy

FDA approval of brexpiprazole for schizophrenia and for adjunctive use in MDD was based on 4 phase-III pivotal acute clinical trials conducted in adults, 2 for each disorder.1-6 These studies are described in Table 2.2-5

Schizophrenia. The primary outcome measure for the acute schizophrenia trials was change in Positive and Negative Syndrome Scale (PANSS) total score from baseline to 6-week endpoint. Statistically significant reductions in PANSS total score were observed for brexpiprazole dosages of 2 mg/d and 4 mg/d in one study,2 and 4 mg/d in another study.3 Responder rates also were measured, with response defined as a reduction of ≥30% from baseline in PANSS total score or a Clinical Global Impressions-Improvement score of 1 (very much improved) or 2 (much improved).2,3 Pooling the available data for the recommended target dosage of brexpiprazole for schizophrenia (2 to 4 mg/d) from the 2 phase-III studies, 45.5% of patients responded to the drug, compared with 31% for the pooled placebo groups, yielding a number needed to treat (NNT) of 7 (95% CI, 5-12).6

Although not described in product labeling, a phase-III 52-week maintenance study demonstrated brexpiprazole’s efficacy in preventing exacerbation of psychotic symptoms and impending relapse in patients with schizophrenia.10 Time from randomization to exacerbation of psychotic symptoms or impending relapse favored brexpiprazole over placebo (hazard ratio = 0.292; log-rank test, P < .0001). Significantly fewer patients in the brexpiprazole group relapsed compared with placebo (13.5% vs 38.5%, P < .0001), resulting in a NNT of 4 (95% CI, 3-8).

Major depressive disorder. The primary outcome measure for the acute MDD studies was change in Montgomery-Åsberg Depression Rating Scale (MADRS) scores from baseline to 6-week endpoint of the randomized treatment phase. All patients were required to have a history of inadequate response to 1 to 3 treatment trials of standard antidepressants for their current depressive episode. In addition, patients entered the randomized phase only if they had an inadequate response to antidepressant therapy during an 8-week prospective treatment trial of standard antidepressant treatment plus single-blind placebo.

Participants who responded adequately to the antidepressant in the prospective single-blind phase were not randomized, but instead continued on antidepressant treatment plus single-blind placebo for 6 weeks.

The phase-III studies showed positive results for brexpiprazole, 2 mg/d and 3 mg/d, with change in MADRS from baseline to endpoint superior to that observed with placebo.4,5

When treatment response was defined as a reduction of ≥50% in MADRS total score from baseline, the NNT vs placebo for response was 12 at all dosages tested; however, NNT values vs placebo for remission (defined as MADRS total score ≤10 and ≥50% improvement from baseline) ranged from 17 to 31 and were not statistically significant.6 When the results for brexpiprazole, 1 mg/d, 2 mg/d, and 3 mg/d, from the 2 phase-III trials are pooled, 23.2% of patients receiving brexpiprazole were responders, vs 14.5% for placebo, yielding a NNT of 12 (95% CI, 8-26); 14.4% of brexpiprazole-treated patients met remission criteria, vs 9.6% for placebo, resulting in a NNT of 21 (95% CI, 12-138).6
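The NNT point estimates quoted above follow directly from the reported percentages: NNT is the reciprocal of the absolute difference between the treatment and placebo rates, conventionally rounded up to the next whole number. A minimal sketch (the function name is hypothetical); it reproduces point estimates only, because the confidence intervals also require group sizes, which this section does not give. It takes the absolute difference, so whether the result is a benefit (NNT) or a harm (NNH) is read from context.

```python
import math
from fractions import Fraction


def nnt(rate_a_pct: str, rate_b_pct: str) -> int:
    """Number needed to treat (or harm): the reciprocal of the absolute difference
    between two event rates, rounded up to the next whole number.

    Rates are percentages passed as strings so that Fraction stores them exactly;
    with floats, a difference whose reciprocal should be a whole number can come
    out fractionally above it, and math.ceil would then round up one too many.
    """
    diff = abs(Fraction(rate_a_pct) - Fraction(rate_b_pct)) / 100
    if diff == 0:
        raise ValueError("rates are equal; NNT is undefined")
    return math.ceil(1 / diff)


print(nnt("45.5", "31"))    # schizophrenia response, pooled 2-4 mg/d vs placebo -> 7
print(nnt("13.5", "38.5"))  # maintenance: relapse, brexpiprazole vs placebo -> 4
print(nnt("23.2", "14.5"))  # MDD response, pooled -> 12
print(nnt("14.4", "9.6"))   # MDD remission, pooled -> 21
```

Applied to adverse-event rates, the same calculation gives the NNH values in the Tolerability section; for example, akathisia in the MDD trials (8.6% vs 1.7%) yields 15, and weight gain in the schizophrenia trials (4% vs 2%) yields 50.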

Tolerability

Overall tolerability can be evaluated by examining the percentage of patients who discontinued the clinical trials because of an adverse event (AE). In the acute schizophrenia double-blind trials, for the recommended dosage range of 2 to 4 mg/d, discontinuation rates were lower overall for patients receiving brexpiprazole than for those receiving placebo.2,3 In the acute MDD trials, 32.6% of brexpiprazole-treated patients and 10.7% of placebo-treated patients discontinued because of AEs,4,5 yielding a number needed to harm (NNH) of 53 (95% CI, 30-235).6

The most commonly encountered AEs for MDD (incidence ≥5% and at least twice the rate for placebo) were akathisia (8.6% vs 1.7% for brexpiprazole vs placebo, and dose-related) and weight gain (6.7% vs 1.9%),1 with NNH values of 15 (95% CI, 11-23) and 22 (95% CI, 15-42), respectively.6 The most commonly encountered AE for schizophrenia (incidence ≥4% and at least twice the rate for placebo) was weight gain (4% vs 2%),1 with a NNH of 50 (95% CI, 26-1773).6

Of note, rates of akathisia in the schizophrenia trials were 5.5% for brexpiprazole and 4.6% for placebo,1 yielding a non-statistically significant NNH of 112.6 In a 6-week exploratory study,11 the incidence of EPS-related AEs including akathisia was lower for brexpiprazole-treated patients (14.1%) compared with those receiving aripiprazole (30.3%), for a NNT advantage for brexpiprazole of 7 (not statistically significant).

Short-term weight gain appears modest; however, outliers with an increase of ≥7% of body weight were evident in open-label long-term safety studies.1,6 Effects on glucose and lipids were small. Minimal effects on prolactin were observed, and no clinically relevant effects on the QT interval were evident.

Contraindications

The only absolute contraindication for brexpiprazole is known hypersensitivity to brexpiprazole or any of its components. Reactions have included rash, facial swelling, urticaria, and anaphylaxis.1

The product label carries 2 bolded boxed warnings:

• as with all antipsychotics, a warning regarding increased mortality in geriatric patients with dementia-related psychosis. Brexpiprazole is not approved for treating patients with dementia-related psychosis.

• as with all antipsychotics that have an indication for a depressive disorder, a warning about suicidal thoughts and behaviors in patients age ≤24. The safety and efficacy of brexpiprazole have not been established in pediatric patients.

Dosing

Schizophrenia. The recommended starting dosage for brexpiprazole for schizophrenia is 1 mg/d on Days 1 to 4. Brexpiprazole can be titrated to 2 mg/d on Day 5 through Day 7, then to 4 mg/d on Day 8 based on the patient’s response and ability to tolerate the medication. The recommended target dosage is 2 to 4 mg/d with a maximum recommended daily dosage of 4 mg.

Major depressive disorder. The recommended starting dosage for brexpiprazole as adjunctive treatment for MDD is 0.5 or 1 mg/d. Brexpiprazole can be titrated to 1 mg/d, then up to the target dosage of 2 mg/d, with dosage increases occurring at weekly intervals based on the patient’s clinical response and ability to tolerate the agent; the maximum recommended dosage is 3 mg/d.

Other considerations. For patients with moderate to severe hepatic impairment, or moderate, severe, or end-stage renal impairment, the maximum recommended dosage is 3 mg/d for patients with schizophrenia, and 2 mg/d for patients with MDD.
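The titration steps above can be laid out day by day. A minimal sketch, assuming escalation at every step the label permits (in practice, each increase depends on response and tolerability) and capping each step at the reduced maximum for hepatic or renal impairment; the label does not spell out a titration path to 3 mg/d, so capping the Day 8 step is this sketch's simplification. The function `daily_doses` and its parameters are hypothetical.

```python
from typing import List


def daily_doses(indication: str, days: int, start_mg: float = 1.0,
                impaired: bool = False) -> List[float]:
    """Hypothetical day-by-day brexpiprazole dosages (mg/d), escalating at every
    permitted step. `impaired` means moderate-to-severe hepatic or moderate,
    severe, or end-stage renal impairment, which lowers the maximum dosage."""
    doses: List[float] = []
    if indication == "schizophrenia":
        cap = 3.0 if impaired else 4.0
        for day in range(1, days + 1):
            if day <= 4:
                dose = 1.0   # Days 1-4
            elif day <= 7:
                dose = 2.0   # Days 5-7
            else:
                dose = 4.0   # Day 8 onward, if response and tolerability allow
            doses.append(min(dose, cap))
    elif indication == "mdd":
        if start_mg not in (0.5, 1.0):
            raise ValueError("MDD starting dosage is 0.5 or 1 mg/d")
        # Weekly increases: to 1 mg/d (if starting at 0.5), then to the 2 mg/d target
        steps = [0.5, 1.0, 2.0] if start_mg == 0.5 else [1.0, 2.0]
        cap = 2.0 if impaired else 3.0
        for day in range(1, days + 1):
            week = (day - 1) // 7
            doses.append(min(steps[min(week, len(steps) - 1)], cap))
    else:
        raise ValueError("indication must be 'schizophrenia' or 'mdd'")
    return doses


print(daily_doses("schizophrenia", 9))
print(daily_doses("mdd", 15, start_mg=0.5))
```

Representing the schedule as data makes the two indications easy to compare: schizophrenia reaches its upper target within 8 days, whereas MDD titration moves in weekly steps toward a lower target.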

In general, dosage adjustments are recommended in patients who are known CYP2D6 poor metabolizers and in those taking concomitant CYP3A4 inhibitors or CYP2D6 inhibitors or strong CYP3A4 inducers1:

• for known CYP2D6 poor metabolizers, or for strong CYP2D6 or CYP3A4 inhibitors, administer one-half the usual dosage

• for strong/moderate CYP2D6 with strong/moderate CYP3A4 inhibitors, administer one-quarter of the usual dosage

• for known CYP2D6 poor metabolizers taking strong/moderate CYP3A4 inhibitors, also administer one-quarter of the usual dosage

• for strong CYP3A4 inducers, double the usual dosage and further adjust based on clinical response.
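The adjustment rules above amount to a small decision procedure. A minimal sketch, assuming a hypothetical three-level strength scale (`NONE`, `MODERATE`, `STRONG`) and a hypothetical `Regimen` record, neither of which comes from the label. Besides the listed rules, it encodes 2 details stated in the product label and in the next paragraph: one-half the usual dosage for known CYP2D6 poor metabolizers taking no interacting drug, and no adjustment for strong CYP2D6 inhibitors in MDD. Combinations the rules do not address raise an error rather than guessing.

```python
from dataclasses import dataclass
from fractions import Fraction

# Hypothetical encoding of interacting-drug strength
NONE, MODERATE, STRONG = 0, 1, 2


@dataclass
class Regimen:
    indication: str                        # "schizophrenia" or "mdd"
    cyp2d6_poor_metabolizer: bool = False
    cyp2d6_inhibitor: int = NONE           # strength of any CYP2D6 inhibitor taken
    cyp3a4_inhibitor: int = NONE           # strength of any CYP3A4 inhibitor taken
    strong_cyp3a4_inducer: bool = False


def dose_multiplier(r: Regimen) -> Fraction:
    """Factor applied to the usual brexpiprazole dosage under the rules in the text."""
    reduced_2d6 = r.cyp2d6_poor_metabolizer or r.cyp2d6_inhibitor >= MODERATE
    if r.strong_cyp3a4_inducer:
        if reduced_2d6 or r.cyp3a4_inhibitor != NONE:
            raise ValueError("inducer combined with inhibitor or poor metabolizer: not covered")
        return Fraction(2)                 # double, then adjust to clinical response
    # Strong/moderate CYP3A4 inhibitor plus strong/moderate CYP2D6 inhibitor or poor metabolizer
    if r.cyp3a4_inhibitor >= MODERATE and reduced_2d6:
        return Fraction(1, 4)
    if r.cyp3a4_inhibitor == STRONG:
        return Fraction(1, 2)
    if r.cyp2d6_poor_metabolizer:
        return Fraction(1, 2)
    if r.cyp2d6_inhibitor == STRONG:
        # MDD trials did not adjust for strong CYP2D6 inhibitors (eg, paroxetine, fluoxetine)
        return Fraction(1) if r.indication == "mdd" else Fraction(1, 2)
    return Fraction(1)                     # no listed adjustment applies


usual_mg = Fraction(4)  # illustrative usual schizophrenia dosage, mg/d
print(usual_mg * dose_multiplier(Regimen("schizophrenia", cyp3a4_inhibitor=STRONG)))  # 2
print(dose_multiplier(Regimen("mdd", cyp2d6_inhibitor=STRONG)))                       # 1
```

Checking the combined-inhibition rule first matters: a patient on both a strong CYP3A4 inhibitor and a CYP2D6 inhibitor must receive one-quarter, not the one-half that either inhibitor alone would trigger.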

In clinical trials for MDD, brexpiprazole dosage was not adjusted for strong CYP2D6 inhibitors (eg, paroxetine, fluoxetine). Therefore, this interaction is already factored into the general MDD dosing recommendations, and brexpiprazole can be administered without dosage adjustment in patients with MDD who take these antidepressants; however, under these circumstances, it would be prudent to start brexpiprazole at 0.5 mg/d, which, although “on-label,” represents a low starting dosage. (Whenever 2 drugs are co-administered and 1 agent can disturb the metabolism of the other, titrating to the target dosage in smaller increments, and possibly waiting longer between dosage adjustments, could help avoid potential drug–drug interactions.)

No dosage adjustment for brexpiprazole is required on the basis of sex, race or ethnicity, or smoking status. Although clinical studies did not include patients age ≥65, the product label recommends that in general, dose selection for a geriatric patient should be cautious, usually starting at the low end of the dosing range, reflecting the greater frequency of decreased hepatic, renal, and cardiac function, concomitant diseases, and other drug therapy.

Bottom Line

Brexpiprazole, an atypical antipsychotic, is FDA-approved for schizophrenia and as an adjunct to antidepressants in major depressive disorder. For both indications, brexpiprazole demonstrated positive results compared with placebo in phase-III trials. Brexpiprazole is more potent at serotonin 5-HT1A and 5-HT2A receptors and displays less intrinsic activity at D2 receptors than aripiprazole, which could mean better tolerability.

Related Resources

• Citrome L. Brexpiprazole: a new dopamine D2 receptor partial agonist for the treatment of schizophrenia and major depressive disorder. Drugs Today (Barc). 2015;51(7):397-414.

• Citrome L, Stensbøl TB, Maeda K. The preclinical profile of brexpiprazole: what is its clinical relevance for the treatment of psychiatric disorders? Expert Rev Neurother. In press.

Drug Brand Names

Aripiprazole • Abilify

Brexpiprazole • Rexulti

Fluoxetine • Prozac

Paroxetine • Paxil

Disclosure

Dr. Citrome is a consultant to Alexza Pharmaceuticals, Alkermes, Allergan, Boehringer Ingelheim, Bristol-Myers Squibb, Eli Lilly and Company, Forum Pharmaceuticals, Genentech, Janssen, Jazz Pharmaceuticals, Lundbeck, Merck, Medivation, Mylan, Novartis, Noven, Otsuka, Pfizer, Reckitt Benckiser, Reviva, Shire, Sunovion, Takeda, Teva, and Valeant Pharmaceuticals; and is a speaker for Allergan, AstraZeneca, Janssen, Jazz Pharmaceuticals, Lundbeck, Merck, Novartis, Otsuka, Pfizer, Shire, Sunovion, Takeda, and Teva.

1. Rexulti [package insert]. Rockville, MD: Otsuka; 2015.

2. Correll CU, Skuban A, Ouyang J, et al. Efficacy and safety of brexpiprazole for the treatment of acute schizophrenia: a 6-week randomized, double-blind, placebo-controlled trial. Am J Psychiatry. 2015;172(9):870-880.

3. Kane JM, Skuban A, Ouyang J, et al. A multicenter, randomized, double-blind, controlled phase 3 trial of fixed-dose brexpiprazole for the treatment of adults with acute schizophrenia. Schizophr Res. 2015;164(1-3):127-135.

4. Thase ME, Youakim JM, Skuban A, et al. Adjunctive brexpiprazole 1 and 3 mg for patients with major depressive disorder following inadequate response to antidepressants: a phase 3, randomized, double-blind study [published online August 4, 2015]. J Clin Psychiatry. doi:10.4088/JCP.14m09689.

5. Thase ME, Youakim JM, Skuban A, et al. Efficacy and safety of adjunctive brexpiprazole 2 mg in major depressive disorder: a phase 3, randomized, placebo-controlled study in patients with inadequate response to antidepressants [published online August 4, 2015]. J Clin Psychiatry. doi:10.4088/JCP.14m09688.

6. Citrome L. Brexpiprazole for schizophrenia and as adjunct for major depressive disorder: a systematic review of the efficacy and safety profile for this newly approved antipsychotic—what is the number needed to treat, number needed to harm and likelihood to be helped or harmed? Int J Clin Pract. 2015;69(9):978-997.

7. Maeda K, Sugino H, Akazawa H, et al. Brexpiprazole I: in vitro and in vivo characterization of a novel serotonin-dopamine activity modulator. J Pharmacol Exp Ther. 2014;350(3):589-604.

8. Volavka J, Citrome L. Oral antipsychotics for the treatment of schizophrenia: heterogeneity in efficacy and tolerability should drive decision-making. Expert Opin Pharmacother. 2009;10(12):1917-1928.

9. Citrome L. Adjunctive aripiprazole, olanzapine, or quetiapine for major depressive disorder: an analysis of number needed to treat, number needed to harm, and likelihood to be helped or harmed. Postgrad Med. 2010;122(4):39-48.

10. Hobart M, Ouyang J, Forbes A, et al. Efficacy and safety of brexpiprazole (OPC-34712) as maintenance treatment in adults with schizophrenia: a randomized, double-blind, placebo-controlled study. Poster presented at: the American Society of Clinical Psychopharmacology Annual Meeting; June 22 to 25, 2015; Miami, FL.

11. Citrome L, Ota A, Nagamizu K, Perry P, et al. The effect of brexpiprazole (OPC‐34712) versus aripiprazole in adult patients with acute schizophrenia: an exploratory study. Poster presented at: the Society of Biological Psychiatry Annual Scientific Meeting and Convention; May 15, 2015; Toronto, Ontario, Canada.

Brexpiprazole, FDA-approved in July 2015 to treat schizophrenia and as an adjunct for major depressive disorder (MDD) (Table 1), has shown efficacy in 2 phase-III acute trials for each indication.1-6 Although brexpiprazole is a dopamine D2 partial agonist, it differs from aripiprazole, the other available D2 partial agonist, because it is more potent at serotonin 5-HT1A and 5-HT2A receptors and displays less intrinsic activity at D2 receptors,7 which could mean better tolerability.

Clinical implications

Schizophrenia is heterogeneous, and individual response and tolerability to antipsychotics vary greatly8; therefore, new drug options are useful. For MDD, before the availability of brexpiprazole, only 3 antipsychotics were FDA-approved for adjunctive use with antidepressant therapy9; brexpiprazole represents another agent for patients whose depressive symptoms persist after standard antidepressant treatment.

Factors that limit the use of antipsychotics include extrapyramidal symptoms (EPS), akathisia, sedation/somnolence, weight gain, metabolic abnormalities, and hyperprolactinemia. If post-marketing studies and clinical experience confirm that brexpiprazole has a favorable overall side-effect profile with respect to these tolerability concerns, it could have advantages over some other available agents, including aripiprazole.

How it works

In addition to subnanomolar binding affinity (Ki < 1 nM) for dopamine D2 receptors, at which it acts as a partial agonist, brexpiprazole exhibits similarly high affinity for serotonin 5-HT1A (partial agonist), 5-HT2A (antagonist), and adrenergic α1B (antagonist) and α2C (antagonist) receptors.7

Brexpiprazole also has high affinity (Ki < 5 nM) for dopamine D3 (partial agonist), serotonin 5-HT2B (antagonist) and 5-HT7 (antagonist), and adrenergic α1A (antagonist) and α1D (antagonist) receptors. It has moderate affinity for histamine H1 receptors (Ki = 19 nM, antagonist) and low affinity for muscarinic M1 receptors (Ki > 1000 nM, antagonist).
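The affinity tiers above can be made more concrete with the simple single-site equilibrium binding relation, occupancy = C / (C + Ki). This is a minimal sketch under stated assumptions. The Ki values are the boundary values quoted in the text (1 nM for D2, 5 nM for D3, 19 nM for H1, and 1000 nM for M1). The free concentration of 1 nM is purely hypothetical. The model ignores competition with endogenous neurotransmitters. The output therefore shows only relative ordering, not predicted clinical occupancy. The names `occupancy` and `KI_NM` are illustrative.

```python
# Sketch: fractional receptor occupancy under simple single-site equilibrium
# binding (law of mass action): occupancy = C / (C + Ki).
# ASSUMPTIONS: Ki values are the boundary values quoted in the text, the free
# concentration is hypothetical, and competition with endogenous ligands is ignored.

def occupancy(free_conc_nm: float, ki_nm: float) -> float:
    """Fraction of receptors occupied at a free drug concentration (both in nM)."""
    if free_conc_nm < 0 or ki_nm <= 0:
        raise ValueError("concentration must be >= 0 and Ki must be > 0")
    return free_conc_nm / (free_conc_nm + ki_nm)


# Boundary values from the text (nM): D2 and D3 are upper bounds (Ki < 1, Ki < 5),
# H1 is the quoted value, and M1 is a lower bound (Ki > 1000).
KI_NM = {"D2": 1.0, "D3": 5.0, "H1": 19.0, "M1": 1000.0}

HYPOTHETICAL_FREE_CONC_NM = 1.0  # illustrative only, not a measured value

if __name__ == "__main__":
    for receptor, ki in KI_NM.items():
        print(f"{receptor}: {occupancy(HYPOTHETICAL_FREE_CONC_NM, ki):.1%} occupied")
```

At the hypothetical 1-nM concentration, the sketch gives 50% occupancy for D2 but only 5% for H1 and about 0.1% for M1. This is the practical meaning of the affinity tiers: the weaker the affinity, the more drug is needed for the same occupancy. Because the D2 value is an upper bound, true D2 occupancy at that concentration would be higher still.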

Brexpiprazole’s pharmacodynamic profile differs from other available antipsychotics, including aripiprazole. Whether this translates to meaningful differences in efficacy and tolerability will depend on the outcomes of specifically designed clinical trials as well as “real-world” experience. Animal models have suggested amelioration of schizophrenia-like, depression-like, and anxiety-like behavior with brexpiprazole.6

Pharmacokinetics

Brexpiprazole’s half-life is relatively long (91 hours); steady-state concentrations are therefore attained in approximately 2 weeks.1 Because brexpiprazole was titrated to target dosages in the phase-III clinical trials, the product label also recommends titration. Brexpiprazole can be administered with or without food.
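The link between a 91-hour half-life and a roughly 2-week wait for steady state can be checked with the standard first-order accumulation relation. With regular dosing, the fraction of the eventual steady-state level reached after time t is approximately 1 − 2^(−t/t½). This is a sketch that assumes one-compartment, first-order elimination with constant dosing. That is a simplification of brexpiprazole’s actual pharmacokinetics. The function names are illustrative.

```python
import math

HALF_LIFE_H = 91.0  # half-life quoted in the text, in hours


def fraction_of_steady_state(hours: float, half_life_h: float = HALF_LIFE_H) -> float:
    """Approximate fraction of steady state reached after `hours` of regular
    dosing, assuming one-compartment, first-order elimination."""
    return 1.0 - 0.5 ** (hours / half_life_h)


def hours_to_fraction(target: float, half_life_h: float = HALF_LIFE_H) -> float:
    """Hours of regular dosing needed to reach `target` (0 < target < 1) of steady state."""
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    return -half_life_h * math.log2(1.0 - target)


if __name__ == "__main__":
    print(f"After 14 days: {fraction_of_steady_state(14 * 24):.0%} of steady state")
    print(f"90% of steady state after about {hours_to_fraction(0.90) / 24:.1f} days")
```

Under these assumptions, about 92% of steady state is reached after 14 days, and 90% is reached after about 12.6 days. This is consistent with the approximately 2-week estimate. The common rule of thumb of 4 to 5 half-lives corresponds to about 15 to 19 days.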

In a study of brexpiprazole excretion, after a single oral dose of [14C]-labeled brexpiprazole, approximately 25% and 46% of the administered radioactivity was recovered in urine and feces, respectively. Less than 1% of unchanged brexpiprazole was excreted in the urine, and approximately 14% of the oral dose was recovered unchanged in the feces.

Exposure, as measured by maximum concentration (Cmax) and area under the concentration-time curve (AUC), is dose-proportional.

Metabolism of brexpiprazole is mediated principally by cytochrome P450 (CYP) 3A4 and CYP2D6. Based on in vitro data, brexpiprazole shows little or no inhibition of CYP450 isozymes.

Efficacy

FDA approval of brexpiprazole for schizophrenia and for adjunctive use in MDD was based on 4 pivotal phase-III acute clinical trials conducted in adults, 2 for each disorder.1-6 These studies are described in Table 2.2-5

Schizophrenia. The primary outcome measure for the acute schizophrenia trials was change in Positive and Negative Syndrome Scale (PANSS) total score from baseline to the 6-week endpoint. Statistically significant reductions in PANSS total score were observed with brexpiprazole, 2 mg/d and 4 mg/d, in one study,2 and with 4 mg/d in the other.3 Responder rates also were measured, with response defined as a reduction of ≥30% from baseline in PANSS total score or a Clinical Global Impressions-Improvement score of 1 (very much improved) or 2 (much improved).2,3 When data for the recommended target dosage range for schizophrenia (2 to 4 mg/d) from the 2 phase-III studies were pooled, 45.5% of patients responded to brexpiprazole, compared with 31% in the pooled placebo groups, yielding a number needed to treat (NNT) of 7 (95% CI, 5-12).6

Although not described in the product labeling, a phase-III 52-week maintenance study demonstrated brexpiprazole’s efficacy in preventing exacerbation of psychotic symptoms and impending relapse in patients with schizophrenia.10 Time from randomization to exacerbation of psychotic symptoms or impending relapse favored brexpiprazole over placebo (hazard ratio = 0.292; log-rank P < .0001). Significantly fewer patients relapsed in the brexpiprazole group than in the placebo group (13.5% vs 38.5%, P < .0001), resulting in an NNT of 4 (95% CI, 3-8).

Major depressive disorder. The primary outcome measure for the acute MDD studies was change in Montgomery-Åsberg Depression Rating Scale (MADRS) scores from baseline to 6-week endpoint of the randomized treatment phase. All patients were required to have a history of inadequate response to 1 to 3 treatment trials of standard antidepressants for their current depressive episode. In addition, patients entered the randomized phase only if they had an inadequate response to antidepressant therapy during an 8-week prospective treatment trial of standard antidepressant treatment plus single-blind placebo.

Participants who responded adequately to the antidepressant in the prospective single-blind phase were not randomized, but instead continued on antidepressant treatment plus single-blind placebo for 6 weeks.

The phase-III studies showed positive results for brexpiprazole, 2 mg/d and 3 mg/d, with change in MADRS from baseline to endpoint superior to that observed with placebo.4,5

When treatment response was defined as a reduction of ≥50% in MADRS total score from baseline, NNTs vs placebo for response were 12 at all dosages tested. However, NNTs vs placebo for remission (defined as MADRS total score ≤10 and ≥50% improvement from baseline) ranged from 17 to 31 and were not statistically significant.6 When the results for brexpiprazole, 1 mg/d, 2 mg/d, and 3 mg/d, from the 2 phase-III trials are pooled, 23.2% of patients receiving brexpiprazole were responders, vs 14.5% for placebo, yielding an NNT of 12 (95% CI, 8-26). In addition, 14.4% of brexpiprazole-treated patients met remission criteria, vs 9.6% for placebo, resulting in an NNT of 21 (95% CI, 12-138).6
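The NNT values in this section follow from the difference in event rates between brexpiprazole and placebo. NNT = 1 / |absolute risk difference|, conventionally rounded up to the next whole patient. The sketch below reproduces the point estimates from the percentages quoted above. It does not reproduce the 95% CIs, which require the underlying patient counts. The helper name `number_needed` is hypothetical. Percentages are parsed as exact fractions so that a value such as 100/25 is not nudged past a whole number by floating-point error before rounding up.

```python
import math
from fractions import Fraction


def number_needed(drug_pct: str, placebo_pct: str) -> int:
    """NNT from two event rates given as percentage strings (eg, "45.5").

    Computes 1 / |drug - placebo| on the proportion scale and rounds up to
    the next whole patient. Strings are parsed exactly by Fraction.
    """
    difference = abs(Fraction(drug_pct) - Fraction(placebo_pct)) / 100
    if difference == 0:
        raise ValueError("rates are equal; NNT is undefined (infinite)")
    return math.ceil(1 / difference)


if __name__ == "__main__":
    # (comparison, brexpiprazole %, placebo %) as quoted in the text
    comparisons = [
        ("Schizophrenia response, 2-4 mg/d", "45.5", "31"),
        ("Schizophrenia maintenance, relapse", "13.5", "38.5"),
        ("MDD response, pooled", "23.2", "14.5"),
        ("MDD remission, pooled", "14.4", "9.6"),
    ]
    for label, drug, placebo in comparisons:
        print(f"{label}: NNT = {number_needed(drug, placebo)}")
```

The computed values (7, 4, 12, and 21) match the NNTs reported in the text. For the relapse comparison, the drug rate is lower than placebo, so the absolute value keeps the result positive.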

Tolerability

Overall tolerability can be evaluated by examining the percentage of patients who discontinued the clinical trials because of an adverse event (AE). In the acute schizophrenia double-blind trials, discontinuation rates at the recommended dosage range of 2 to 4 mg/d were lower overall for patients receiving brexpiprazole than for those receiving placebo.2,3 In the acute MDD trials, discontinuation because of AEs was more frequent with brexpiprazole than with placebo,4,5 yielding a number needed to harm (NNH) of 53 (95% CI, 30-235).6 This NNH corresponds to an absolute difference of only about 2 percentage points.

The most common AEs in the MDD trials (incidence ≥5% and at least twice the placebo rate) were akathisia (8.6% vs 1.7% for brexpiprazole vs placebo; dose-related) and weight gain (6.7% vs 1.9%),1 with NNH values of 15 (95% CI, 11-23) and 22 (95% CI, 15-42), respectively.6 The most common AE in the schizophrenia trials (incidence ≥4% and at least twice the placebo rate) was weight gain (4% vs 2%),1 with an NNH of 50 (95% CI, 26-1773).6

Of note, rates of akathisia in the schizophrenia trials were 5.5% for brexpiprazole and 4.6% for placebo,1 yielding a nonsignificant NNH of 112.6 In a 6-week exploratory study,11 the incidence of EPS-related AEs, including akathisia, was lower among brexpiprazole-treated patients (14.1%) than among those receiving aripiprazole (30.3%). This corresponds to an NNT of 7 favoring brexpiprazole, although the difference was not statistically significant.
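For adverse events, the same rate-difference arithmetic is read in the other direction. When the drug has the higher event rate, 1 / |difference| is a number needed to harm. When the drug has the lower rate (as with EPS-related AEs vs aripiprazole), it is an NNT favoring the drug. The sketch below encodes that rule and checks it against several of the point estimates above. The function name is hypothetical, and CIs are not computed. Values derived from rounded percentages may occasionally differ by 1 from published values if those were calculated from unrounded patient counts.

```python
import math
from fractions import Fraction


def adverse_event_number(drug_pct: str, comparator_pct: str) -> tuple[str, int]:
    """Express an adverse-event rate difference as ("NNH", n) or ("NNT", n).

    A higher event rate on the drug gives a number needed to harm; a lower
    rate gives a number needed to treat. Both are 1 / |difference| on the
    proportion scale, rounded up to the next whole patient.
    """
    difference = (Fraction(drug_pct) - Fraction(comparator_pct)) / 100
    if difference == 0:
        raise ValueError("rates are equal; NNH/NNT is undefined (infinite)")
    label = "NNH" if difference > 0 else "NNT"
    return label, math.ceil(1 / abs(difference))


if __name__ == "__main__":
    # (comparison, brexpiprazole %, comparator %) as quoted in the text
    comparisons = [
        ("MDD akathisia vs placebo", "8.6", "1.7"),
        ("Schizophrenia weight gain vs placebo", "4", "2"),
        ("Schizophrenia akathisia vs placebo", "5.5", "4.6"),
        ("EPS-related AEs vs aripiprazole", "14.1", "30.3"),
    ]
    for label, drug, comparator in comparisons:
        kind, n = adverse_event_number(drug, comparator)
        print(f"{label}: {kind} = {n}")
```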

Short-term weight gain appears modest; however, outliers with an increase of ≥7% of body weight were evident in open-label long-term safety studies.1,6 Effects on glucose and lipids were small. Minimal effects on prolactin were observed, and no clinically relevant effects on the QT interval were evident.
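The ≥7% threshold is relative to each patient’s baseline weight. For example, a hypothetical 80-kg patient crosses it at 85.6 kg. This minimal sketch shows the check. The function names and example weights are illustrative assumptions, not values from the trials.

```python
def percent_weight_change(baseline_kg: float, current_kg: float) -> float:
    """Percent change in body weight relative to baseline."""
    if baseline_kg <= 0:
        raise ValueError("baseline weight must be positive")
    # Multiply before dividing to keep simple cases (eg, 8 kg of 80 kg) exact.
    return (current_kg - baseline_kg) * 100.0 / baseline_kg


def meets_weight_gain_threshold(baseline_kg: float, current_kg: float,
                                threshold_pct: float = 7.0) -> bool:
    """True if weight gain from baseline is at or above the threshold (default 7%)."""
    return percent_weight_change(baseline_kg, current_kg) >= threshold_pct


if __name__ == "__main__":
    for current in (85.0, 86.0):
        change = percent_weight_change(80.0, current)
        flagged = meets_weight_gain_threshold(80.0, current)
        print(f"80 kg -> {current} kg: {change:.2f}% (>=7%: {flagged})")
```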

Contraindications

The only absolute contraindication for brexpiprazole is known hypersensitivity to brexpiprazole or any of its components. Reactions have included rash, facial swelling, urticaria, and anaphylaxis.1

As with all antipsychotics (for the first warning below) and all antipsychotics with an indication for a depressive disorder (for the second):

• there is a bolded boxed warning in the product label regarding increased mortality in geriatric patients with dementia-related psychosis. Brexpiprazole is not approved for treating patients with dementia-related psychosis.

• there is a bolded boxed warning in the product label about suicidal thoughts and behaviors in patients age ≤24. The safety and efficacy of brexpiprazole have not been established in pediatric patients.

Dosing

Schizophrenia. The recommended starting dosage for brexpiprazole for schizophrenia is 1 mg/d on Days 1 to 4. Brexpiprazole can be titrated to 2 mg/d on Day 5 through Day 7, then to 4 mg/d on Day 8 based on the patient’s response and ability to tolerate the medication. The recommended target dosage is 2 to 4 mg/d with a maximum recommended daily dosage of 4 mg.
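The schizophrenia titration schedule described above can be written as a day-by-day lookup. This is an illustrative encoding of the schedule as stated here, not a prescribing tool. The `escalate_to_4mg` flag is a hypothetical stand-in for the clinical decision, based on response and tolerability, whether to move from 2 mg/d to 4 mg/d on Day 8. Staying at 2 mg/d remains within the 2 to 4 mg/d target range.

```python
def schizophrenia_dose_mg(day: int, escalate_to_4mg: bool = True) -> int:
    """Daily brexpiprazole dose (mg) on a given treatment day (day 1 = first dose).

    Days 1-4: 1 mg/d; days 5-7: 2 mg/d; from day 8: 4 mg/d if escalation is
    clinically appropriate, otherwise remain at 2 mg/d.
    """
    if day < 1:
        raise ValueError("treatment days start at 1")
    if day <= 4:
        return 1
    if day <= 7:
        return 2
    return 4 if escalate_to_4mg else 2


if __name__ == "__main__":
    schedule = [schizophrenia_dose_mg(d) for d in range(1, 11)]
    print("Days 1-10 (mg/d):", schedule)
```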

Major depressive disorder. The recommended starting dosage for brexpiprazole as adjunctive treatment for MDD is 0.5 or 1 mg/d. Brexpiprazole can be titrated to 1 mg/d, then up to the target dosage of 2 mg/d, with dosage increases at weekly intervals based on the patient’s clinical response and ability to tolerate the agent. The maximum recommended dosage is 3 mg/d.

Other considerations. For patients with moderate to severe hepatic impairment, or moderate, severe, or end-stage renal impairment, the maximum recommended dosage is 3 mg/d for patients with schizophrenia, and 2 mg/d for patients with MDD.
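The maximum recommended dosages depend on both the indication and the presence of hepatic or renal impairment, which amounts to a small lookup table. In this sketch, the boolean `impairment` stands for "moderate to severe hepatic impairment, or moderate, severe, or end-stage renal impairment" as defined above. The table and function names are hypothetical.

```python
# Maximum recommended brexpiprazole dosage (mg/d) by indication and by whether
# moderate-to-severe hepatic or moderate/severe/end-stage renal impairment is present.
MAX_DAILY_DOSE_MG = {
    ("schizophrenia", False): 4,
    ("schizophrenia", True): 3,
    ("MDD", False): 3,
    ("MDD", True): 2,
}


def max_daily_dose_mg(indication: str, impairment: bool) -> int:
    """Look up the maximum recommended daily dosage in mg."""
    try:
        return MAX_DAILY_DOSE_MG[(indication, impairment)]
    except KeyError:
        raise ValueError(f"unknown indication: {indication!r}") from None


def cap_dose_mg(requested_mg: float, indication: str, impairment: bool) -> float:
    """Limit a requested daily dosage to the applicable maximum."""
    return min(requested_mg, max_daily_dose_mg(indication, impairment))


if __name__ == "__main__":
    print(cap_dose_mg(4, "schizophrenia", impairment=True))
    print(cap_dose_mg(3, "MDD", impairment=True))
```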

In general, dosage adjustments are recommended in patients who are known CYP2D6 poor metabolizers and in those taking concomitant CYP3A4 inhibitors or CYP2D6 inhibitors or strong CYP3A4 inducers1:

• for known CYP2D6 poor metabolizers, or for strong CYP2D6 or CYP3A4 inhibitors, administer one-half the usual dosage

• for strong/moderate CYP2D6 inhibitors combined with strong/moderate CYP3A4 inhibitors, administer one-quarter of the usual dosage

• for known CYP2D6 poor metabolizers taking strong/moderate CYP3A4 inhibitors, also administer one-quarter of the usual dosage

• for strong CYP3A4 inducers, double the usual dosage and further adjust based on clinical response.

In clinical trials for MDD, brexpiprazole dosage was not adjusted for strong CYP2D6 inhibitors (eg, paroxetine, fluoxetine). Therefore, CYP considerations are already factored into general dosing recommendations and brexpiprazole could be administered without dosage adjustment in patients with MDD; however, under these circumstances, it would be prudent to start brexpiprazole at 0.5 mg, which, although “on-label,” represents a low starting dosage. (Whenever 2 drugs are co-administered and 1 agent has the ability to disturb the metabolism of the other, using smaller increments to the target dosage and possibly waiting longer between dosage adjustments could help avoid potential drug–drug interactions.)
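The CYP-based adjustments above reduce to a multiplier on the usual dosage. This is an illustrative encoding of the rules as stated here, not a prescribing tool. The `Inhibitor` enum and `cyp_dose_factor` are hypothetical names. The one-half rule for known CYP2D6 poor metabolizers not taking interacting drugs follows the product label’s dosage-modification table.1 The MDD exception for strong CYP2D6 inhibitors follows the paragraph above. Combinations of a strong CYP3A4 inducer with inhibitors or poor-metabolizer status are not addressed in the text, so the sketch raises an error for them rather than guessing.

```python
from enum import Enum


class Inhibitor(Enum):
    NONE = "none"
    MODERATE = "moderate"
    STRONG = "strong"


def cyp_dose_factor(
    cyp2d6_inhibitor: Inhibitor = Inhibitor.NONE,
    cyp3a4_inhibitor: Inhibitor = Inhibitor.NONE,
    cyp2d6_poor_metabolizer: bool = False,
    strong_cyp3a4_inducer: bool = False,
    indication: str = "schizophrenia",
) -> float:
    """Multiplier applied to the usual brexpiprazole dosage."""
    any_inhibitor = (cyp2d6_inhibitor is not Inhibitor.NONE
                     or cyp3a4_inhibitor is not Inhibitor.NONE)
    if strong_cyp3a4_inducer:
        if any_inhibitor or cyp2d6_poor_metabolizer:
            raise ValueError("inducer combinations are not covered by these rules")
        return 2.0  # double, then adjust based on clinical response
    # Both pathways impaired: CYP3A4 inhibitor plus CYP2D6 inhibitor or poor metabolizer.
    if cyp3a4_inhibitor is not Inhibitor.NONE and (
        cyp2d6_inhibitor is not Inhibitor.NONE or cyp2d6_poor_metabolizer
    ):
        return 0.25
    # One pathway impaired: strong CYP3A4 inhibitor alone or CYP2D6 poor metabolizer.
    if cyp3a4_inhibitor is Inhibitor.STRONG or cyp2d6_poor_metabolizer:
        return 0.5
    if cyp2d6_inhibitor is Inhibitor.STRONG:
        # MDD trials already included strong CYP2D6 inhibitors (eg, paroxetine, fluoxetine).
        return 1.0 if indication == "MDD" else 0.5
    return 1.0  # moderate inhibitors alone: no adjustment


if __name__ == "__main__":
    usual_mg = 2.0
    adjusted = usual_mg * cyp_dose_factor(cyp3a4_inhibitor=Inhibitor.STRONG)
    print(f"Usual {usual_mg} mg/d with a strong CYP3A4 inhibitor -> {adjusted} mg/d")
```

Returning a multiplier rather than a dose keeps the CYP rules separate from the indication-specific titration and maximum-dose limits, which would still apply to the adjusted result.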

No dosage adjustment for brexpiprazole is required on the basis of sex, race or ethnicity, or smoking status. Although clinical studies did not include patients age ≥65, the product label recommends that in general, dose selection for a geriatric patient should be cautious, usually starting at the low end of the dosing range, reflecting the greater frequency of decreased hepatic, renal, and cardiac function, concomitant diseases, and other drug therapy.

Bottom Line

Brexpiprazole, an atypical antipsychotic, is FDA-approved for schizophrenia and as an adjunct to antidepressants in major depressive disorder. For both indications, brexpiprazole demonstrated positive results compared with placebo in phase-III trials. Brexpiprazole is more potent at serotonin 5-HT1A and 5-HT2A receptors and displays less intrinsic activity at D2 receptors than aripiprazole, which could translate into better tolerability.

Related Resources

• Citrome L. Brexpiprazole: a new dopamine D2 receptor partial agonist for the treatment of schizophrenia and major depressive disorder. Drugs Today (Barc). 2015;51(7):397-414.

• Citrome L, Stensbøl TB, Maeda K. The preclinical profile of brexpiprazole: what is its clinical relevance for the treatment of psychiatric disorders? Expert Rev Neurother. In press.

Drug Brand Names

Aripiprazole • Abilify

Brexpiprazole • Rexulti

Fluoxetine • Prozac

Paroxetine • Paxil

Disclosure

Dr. Citrome is a consultant to Alexza Pharmaceuticals, Alkermes, Allergan, Boehringer Ingelheim, Bristol-Myers Squibb, Eli Lilly and Company, Forum Pharmaceuticals, Genentech, Janssen, Jazz Pharmaceuticals, Lundbeck, Merck, Medivation, Mylan, Novartis, Noven, Otsuka, Pfizer, Reckitt Benckiser, Reviva, Shire, Sunovion, Takeda, Teva, and Valeant Pharmaceuticals; and is a speaker for Allergan, AstraZeneca, Janssen, Jazz Pharmaceuticals, Lundbeck, Merck, Novartis, Otsuka, Pfizer, Shire, Sunovion, Takeda, and Teva.

What to do when your depressed patient develops mania

When a known depressed patient newly develops signs of mania or hypomania, a cascade of diagnostic and therapeutic questions ensues: Does the event “automatically” signify the presence of bipolar disorder (BD), or could manic symptoms be secondary to another underlying medical problem, a prescribed antidepressant or non-psychotropic medication, or illicit substances?

Even more questions confront the clinician: If mania symptoms are nothing more than an adverse drug reaction, will they go away by stopping the presumed offending agent? Or do symptoms always indicate the unmasking of a bipolar diathesis? Should anti-manic medication be prescribed immediately? If so, which one(s) and for how long? How extensive a medical or neurologic workup is indicated?

And how do you differentiate ambiguous hypomania symptoms (irritability, insomnia, agitation) from other phenomena, such as akathisia, anxiety, and overstimulation?

In this article, we present an overview of how to approach and answer these key questions, so that you can identify, comprehend, and manage manic symptoms that arise in the course of your patient’s treatment for depression (Box).

Does disease exist on a unipolar-bipolar continuum?

There has been a resurgence of interest in Kraepelin’s original notion of mania and depression as falling along a continuum, rather than being distinct categories of pathology. True bipolar mania has its own identifiable epidemiology, familiality, and treatment, but symptomatic shades of gray often pose a formidable diagnostic and therapeutic challenge.

For example, DSM-5 replaced the “mixed episode” of BD with a “with mixed features” specifier that also can be applied to unipolar depression, capturing subsyndromal mania features. When a patient with unipolar depression develops a full, unequivocal manic episode, there usually isn’t much ambiguity or confusion about initial management: ensure a safe environment, stop any antidepressants, rule out drug- or medically induced causes, and begin an acute anti-manic medication.

Next steps can sometimes be murkier:

• formulate a definitive, overarching diagnosis

• provide psycho-education

• forecast return to work or school

• discuss prognosis and likelihood of relapse

• address necessary lifestyle modifications (eg, sleep hygiene, elimination of alcohol and illicit drug use)

• determine whether indefinite maintenance pharmacotherapy is indicated and, if so, with which medication(s).

CASE A diagnostic formulation isn’t always black and white

Ms. J, age 56, a medically healthy woman, has a 10-year history of depression and anxiety that has been treated effectively for most of that time with venlafaxine, 225 mg/d. The mother of 4 grown children, Ms. J has worked steadily for more than 20 years as a flight attendant for an international airline.

Today, Ms. J is brought by ambulance from work to the emergency department in a paranoid and agitated state. The admission follows her having e-mailed a voluminous manifesto to the airline’s corporate executives. She had worked on it around the clock the preceding week, and in it she laid out bold ideas to revolutionize the airline industry under her leadership.

Ms. J’s family history is unremarkable for psychiatric illness.

How does one approach a case such as Ms. J’s?

Stark examples of classical mania, such as the one depicted in this case vignette, are easy to recognize but not necessarily straightforward to classify nosologically. Consider the following not-so-straightforward elements of Ms. J’s case:

• a first-lifetime episode of mania or hypomania is rare after age 50

• Ms. J took a serotonin-norepinephrine reuptake inhibitor (SNRI) for many years without evidence of mood destabilization

• years of repetitive chronobiological stress (including probable frequent time zone changes with likely sleep disruption) apparently did not trigger mood destabilization

• none of Ms. J’s 4 pregnancies led to postpartum mood episodes

• at least on the surface, there are no obvious features that point to likely causes of a secondary mania (eg, drug-induced, toxic, metabolic, or medical)

• Ms. J has no known family history of BD or any other mood disorder.

Approaching a case such as Ms. J’s calls for a systematic strategy that is best broken into 2 phases: (1) acute initial assessment and treatment and (2) later efforts focused on broader diagnostic evaluation and longer-term relapse prevention.

Initial assessment and treatment

Immediate assessment and management hinge on initial triage and forming a working diagnostic impression. Although full-blown mania usually is obvious (sometimes even without a formal interview), be alert to patients who might minimize or altogether disavow mania symptoms, often because of denial of illness, misidentification of symptoms, or impaired insight about changes in thinking, mood, or behavior.

Because florid mania, by definition, impairs psychosocial functioning, the context of an initial presentation often holds diagnostic relevance. Manic patients who display disruptive behaviors often are brought to treatment by a third party, whereas a less severely ill patient might be more inclined to seek treatment for herself (himself) when psychosis is absent and insight is less compromised or when the patient feels she (he) might be depressed.

It is not uncommon for a manic patient to report “depression” as the chief complaint or to omit elements related to psychomotor acceleration (such as racing thoughts or psychomotor agitation) when describing symptoms. An accurate diagnosis often requires clinical probing and clarification of symptoms, such as differentiating simple insomnia with consequent next-day fatigue from loss of the need for sleep with intact or even enhanced next-day energy, or discriminating racing thoughts from anxious ruminations that might be more intrusive than rapid.

Frank mania also can come to light through the consequences of symptoms rather than the symptoms per se (eg, conflict in relationships, problems at work, financial reversals).

Particularly in patients who do not have a history of mania, avoid the temptation to begin or modify existing pharmacotherapy until you have performed a basic initial evaluation. Immediate considerations for initial assessment and management include the following:

Provide containment. Ensure a safe setting, an appropriate level of care, and adequate frequency of monitoring. Evaluate suicide risk (particularly when mixed features are present) and the risk of withdrawal from any psychoactive substances.

Engage significant others. Close family members can provide essential history, particularly when a patient’s insight about her illness and need for treatment is impaired. Family members and significant others also often play important roles in helping to restrict access to finances, fostering medication adherence, preventing access to weapons in the home, and sharing information with providers about substance use or high-risk behavior.

Systematically assess for DSM-5 symptoms of mania and depression. DSM-5 modified the criteria for mania/hypomania to require increased activity or energy, in addition to a change in mood, for a syndromal diagnosis. During a clinical interview, the popular mnemonic DIGFAST can aid recognition of core mania symptoms (see footnote a):

• Distractibility

• Indiscretion/impulsivity

• Grandiosity

• Flight of ideas

• Activity increase

• Sleep deficit

• Talkativeness.

a Also see: “Mnemonics in a mnutshell: 32 aids to psychiatric diagnosis,” in the October 2008 issue of Current Psychiatry and in the archive at CurrentPsychiatry.com.

These symptoms should represent a departure from normal baseline characteristics; it often is helpful to ask a significant other or collateral historian how the present symptoms differ from the patient’s usual state.

Assess for unstable medical conditions or toxicity states. Toxicology screening is relatively standard when evaluating an acute change in mental status, and the absence of illicit substances should seldom, if ever, be taken for granted, especially because occult substance use can lead to false-positive BD “cases.”1

Stop any antidepressant. During a manic episode, continuing antidepressant medication serves no purpose other than to contribute to or exacerbate mania symptoms. Nonetheless, observational studies demonstrate that approximately 15% of syndromally manic patients continue to receive an antidepressant, often when a clinician perceives more severe depression during mania, multiple prior depressive episodes, current anxiety, or rapid cycling.2

Importantly, antidepressants have been shown to worsen, rather than alleviate, presentations that involve a mixed state,3 and they have no demonstrated value in preventing post-manic depression. Simply stopping an antidepressant might ease symptoms during a manic or mixed episode.4

In some cases, it might be advisable to taper, rather than abruptly stop, a serotonergic antidepressant with a short half-life, even in the setting of mania, to minimize the risk of aggravating the autonomic dysregulation that can result from antidepressant discontinuation effects.

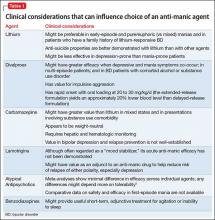

Begin anti-manic pharmacotherapy. Initiation of an anti-manic mood stabilizer, such as lithium or divalproex, has been standard in the treatment of acute mania.

In the 1990s, protocols for oral loading of divalproex (20 to 30 mg/kg/d) gained popularity because they achieved more rapid symptom improvement than might occur with lithium. In the current era, atypical antipsychotics have all but replaced mood stabilizers as an initial intervention to contain mania symptoms quickly, and they carry less risk of acute adverse motor effects than so-called rapid neuroleptization with first-generation antipsychotics.
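As a purely arithmetic illustration of the weight-based loading range quoted above, the daily divalproex loading dose $D$ is body weight $w$ multiplied by the per-kilogram rate $r$. The 70-kg body weight below is a hypothetical value chosen only to show the calculation; it is not drawn from the source, and the example says nothing about target serum levels or tolerability in an individual patient.

```latex
\begin{aligned}
D &= w \times r \\
D_{\min} &= 70\,\text{kg} \times 20\,\text{mg/kg/d} = 1400\,\text{mg/d} \\
D_{\max} &= 70\,\text{kg} \times 30\,\text{mg/kg/d} = 2100\,\text{mg/d}
\end{aligned}
```

Because the relationship is linear, the range shifts proportionally with body weight.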

Because atypical antipsychotics often rapidly subdue mania, psychosis, and agitation, regardless of the underlying process, many practitioners might feel more comfortable initiating them than a mood stabilizer when the diagnosis is ambiguous or provisional, although their longer-term efficacy and safety, relative to traditional mood stabilizers, remain contested. Considerations for choosing from among feasible anti-manic pharmacotherapies are summarized in Table 1.5

Normalize the sleep-wake cycle. Chronobiological and circadian variables, such as irregular sleep patterns, are thought to contribute to the pathophysiology of affective switching in BD. Behavioral and pharmacotherapeutic efforts to impose a normal sleep-wake schedule are considered fundamental to stabilizing acute mania.

Facilitate next steps after acute stabilization. For inpatients, this might involve step-down to a partial hospitalization or intensive outpatient program, alongside taking steps to ensure continued treatment adherence and minimize relapse.

What medical and neurologic workup is appropriate?

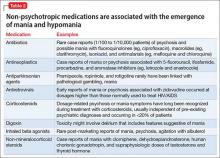

Not every first-lifetime presentation of mania requires an extensive medical and neurologic workup, particularly among patients who have a history of depression and those whose presentation neatly fits the demographic and clinical profile of newly emergent BD. Basic assessment should determine whether any new medication has been started that could plausibly contribute to an abnormal mental status (Table 2).

Nevertheless, evaluation of almost all first presentations of mania should include:

• urine toxicology screen

• complete blood count

• comprehensive metabolic panel

• thyroid-stimulating hormone assay

• serum vitamin B12 level assay

• serum folic acid level assay

• rapid plasma reagin test.

Clinical features that usually lead a clinician to pursue a more detailed medical and neurologic evaluation of first-episode mania include:

• onset age >40

• absence of a family history of mood disorder

• symptoms arising during a major medical illness

• multiple medications

• suspicion of a degenerative or hereditary neurologic disorder

• altered state of consciousness

• signs of cortical or diffuse subcortical dysfunction (eg, cognitive deficits, motor deficits, tremor)

• abnormal vital signs.

Depending on the presentation, additional testing might include:

• tests for HIV antibodies, autoantibodies, and Lyme disease antibodies

• heavy metal screening (when suggested by environmental exposure)

• lumbar puncture (eg, in a setting of manic delirium or suspected central nervous system infection or paraneoplastic syndrome)

• neuroimaging (note: MRI provides better visualization of white matter pathology and small-vessel cerebrovascular disease than CT)

• electroencephalography.

Making an overarching diagnosis: Is mania always bipolar disorder?

Mania is considered a manifestation of BD when symptoms cannot be attributed to another psychiatric condition, another underlying medical or neurologic condition, or a toxic-metabolic state (Table 3 and Table 4).6-9 Classification of mania that occurs soon after antidepressant exposure in patients without a known history of BD continues to be the subject of debate, varying in its conceptualization across editions of DSM.