
Impact of HOCDI on Sepsis Patients

There are approximately 3 million cases of Clostridium difficile infection (CDI) per year in the United States.[1, 2, 3, 4] Of these, 10% result in a hospitalization or occur as a consequence of the exposures and treatments associated with hospitalization.[1, 2, 3, 4] Some patients with CDI experience mild diarrhea that is responsive to therapy, but other patients experience severe, life‐threatening disease that is refractory to treatment, leading to pseudomembranous colitis, toxic megacolon, and sepsis with a 60‐day mortality rate that exceeds 12%.[5, 6, 7, 8, 9, 10, 11, 12, 13, 14]

Hospital‐onset CDI (HOCDI), defined as C difficile‐associated diarrhea and related symptoms with onset more than 48 hours after admission to a healthcare facility,[15] represents a unique marriage of CDI risk factors.[5] A vulnerable patient is introduced into an environment that contains both exposure to C difficile (through other patients or healthcare workers) and treatment with antibacterial agents that may diminish normal flora. Consequently, CDI is common among hospitalized patients.[16, 17, 18] A particularly important group for understanding the burden of disease is patients who initially present to the hospital with sepsis and subsequently develop HOCDI. Sepsis patients are often critically ill and are universally treated with antibiotics.

Determining the incremental cost and mortality risk attributable to HOCDI is methodologically challenging. Because HOCDI is associated with presenting severity, the sickest patients are also the most likely to contract the disease. HOCDI is also associated with time of exposure or length of stay (LOS). Because LOS is a risk factor, comparing LOS between those with and without HOCDI will overestimate the impact if the time to diagnosis is not taken into account.[16, 17, 19, 20] We aimed to examine the impact of HOCDI in hospitalized patients with sepsis using a large, multihospital database with statistical methods that took presenting severity and time to diagnosis into account.

METHODS

Data Source and Subjects

Permission to conduct this study was obtained from the institutional review board at Baystate Medical Center. We used the Premier Healthcare Informatics database, a voluntary, fee‐supported database created to measure quality and healthcare utilization, which has been used extensively in health services research.[21, 22, 23] In addition to the elements found in hospital claims derived from the Uniform Billing 04 (UB‐04) form, Premier data include an itemized, date‐stamped log of all items and services charged to the patient or their insurer, including medications, laboratory tests, and diagnostic and therapeutic services. Approximately 75% of hospitals that submit data also provide information on actual hospital costs, taken from internal cost accounting systems. The rest provide cost estimates based on Medicare cost‐to‐charge ratios. The mix of participating hospitals resembles that of acute care hospitals nationwide, although participating hospitals are more commonly small‐ to midsized nonteaching facilities and are more likely to be located in the southern United States.

We included medical (nonsurgical) adult patients with sepsis who were admitted to a participating hospital between July 1, 2004 and December 31, 2010. Because we sought to focus on the care of patients who present to the hospital with sepsis, we defined sepsis as the presence of a diagnosis of sepsis plus evidence of both blood cultures and antibiotic treatment within the first 2 days of hospitalization. We used the first 2 days of hospitalization rather than just the first day because, in administrative datasets, the first hospital day is often a partial day of variable length. We excluded patients who died or were discharged prior to day 3, because HOCDI is defined as onset after 48 hours in a healthcare facility.[15] We also excluded surviving patients who received fewer than 3 consecutive days of antibiotics, and patients who were transferred from or to another acute‐care facility; the latter exclusion criterion was used because we could not accurately determine the onset or subsequent course of their illness.
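The inclusion and exclusion rules above can be read as a single eligibility check. The following is a minimal sketch in Python, not the study's actual code (the analyses were run in Stata). The `Admission` record and all of its field names are hypothetical and stand in for values that would have to be derived from the billing data.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Admission:
    """Hypothetical admission record; field names are illustrative only."""
    adult: bool
    surgical: bool
    sepsis_diagnosis: bool
    first_blood_culture_day: Optional[int]  # hospital day, 1-based; None if never drawn
    first_antibiotic_day: Optional[int]     # hospital day, 1-based; None if never given
    longest_antibiotic_run_days: int        # longest run of consecutive antibiotic days
    last_hospital_day: int                  # day of discharge or death
    died: bool
    transferred: bool                       # from or to another acute-care facility


def within_first_two_days(day: Optional[int]) -> bool:
    """True when the event happened on hospital day 1 or 2."""
    return day is not None and day <= 2


def in_sepsis_cohort(a: Admission) -> bool:
    """Apply the cohort definition described in the text, in order."""
    presents_with_sepsis = (
        a.sepsis_diagnosis
        and within_first_two_days(a.first_blood_culture_day)
        and within_first_two_days(a.first_antibiotic_day)
    )
    if not (a.adult and not a.surgical and presents_with_sepsis):
        return False
    if a.last_hospital_day < 3:  # died or discharged before day 3
        return False
    if not a.died and a.longest_antibiotic_run_days < 3:
        return False             # survivor with fewer than 3 consecutive antibiotic days
    return not a.transferred
```

Note that the antibiotic-duration rule is applied only to survivors, as in the text, so a patient who died on day 3 after 2 days of antibiotics would still be eligible under this reading.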

Identification of Patients at Risk for and Diagnosed With HOCDI

Among eligible patients with sepsis, we aimed to identify a cohort at risk for developing CDI during the hospital stay. We excluded patients: (1) with a diagnosis indicating that diarrhea was present on admission, (2) with a diagnosis of CDI that was indicated to be present on admission, (3) who were tested for CDI on the first or second hospital day, and (4) who received an antibiotic that could be consistent with treatment for CDI (oral or intravenous [IV] metronidazole or oral vancomycin) on hospital days 1 or 2.

Next, we aimed to identify sepsis patients at risk for HOCDI who developed HOCDI during their hospital stay. Among the eligible patients described above, we considered a patient to have HOCDI if they had: (1) an International Classification of Diseases, Ninth Revision, Clinical Modification (ICD‐9‐CM) diagnosis of CDI (primary or secondary, but not present on admission), (2) evidence of testing for CDI after hospital day 2, (3) treatment with oral vancomycin or oral or IV metronidazole that was started after hospital day 2 and within 2 days of the C difficile test, and (4) evidence of treatment for CDI for at least 3 days, unless the patient was discharged or died.
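The at‐risk exclusions and the HOCDI case definition can be sketched as two predicates. This is an illustrative Python sketch under stated assumptions, not the authors' implementation: the `Stay` record and its fields are hypothetical, "within 2 days of the test" is read as 2 days on either side of the test, and the 3‐day treatment requirement is waived when the stay ended before 3 treatment days were possible.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Stay:
    """Hypothetical per-admission summary; all field names are illustrative."""
    cdi_code_not_poa: bool    # ICD-9-CM CDI diagnosis, not present on admission
    cdi_code_poa: bool        # CDI diagnosis flagged present on admission
    diarrhea_poa: bool        # diarrhea diagnosis present on admission
    cdi_test_days: List[int]  # hospital days (1-based) with a C difficile test
    cdi_drug_days: List[int]  # days with oral vancomycin or oral/IV metronidazole
    last_hospital_day: int    # day of discharge or death


def at_risk_for_hocdi(s: Stay) -> bool:
    """Apply the four exclusions: no diarrhea or CDI on admission, and no CDI
    testing or CDI-consistent antibiotic on hospital day 1 or 2."""
    early = {1, 2}
    return not (
        s.diarrhea_poa
        or s.cdi_code_poa
        or early & set(s.cdi_test_days)
        or early & set(s.cdi_drug_days)
    )


def has_hocdi(s: Stay) -> bool:
    """Apply the four-part case definition to an at-risk stay."""
    if not (at_risk_for_hocdi(s) and s.cdi_code_not_poa):
        return False
    late_tests = [d for d in s.cdi_test_days if d > 2]
    if not late_tests or not s.cdi_drug_days:
        return False
    # Simplification: treatment start is the first drug day; the at-risk
    # filter already guarantees that this is after hospital day 2.
    start = min(s.cdi_drug_days)
    # Assumption: "within 2 days of the test" means either side of the test.
    near_test = any(abs(start - t) <= 2 for t in late_tests)
    # Assumption: the 3-day rule is waived if the stay ended before day start+2.
    enough_days = len(set(s.cdi_drug_days)) >= 3 or s.last_hospital_day < start + 2
    return near_test and enough_days
```

A real implementation would also need to separate CDI treatment from metronidazole given for other indications, which this sketch does not attempt.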

Patient Information

We recorded patient age, gender, marital status, insurance status, race, and ethnicity. Using software provided by the Healthcare Costs and Utilization Project of the Agency for Healthcare Research and Quality, we categorized information on 30 comorbid conditions. We also created a single numerical comorbidity score based on a previously published and validated combined comorbidity score that predicts 1‐year mortality.[24] Based on a previously described algorithm,[25] we used diagnosis codes to assess the source (lung, abdomen, urinary tract, blood, other) and type of sepsis (Gram positive, Gram negative, mixed, anaerobic, fungal). Because patients can have more than 1 potential source of sepsis (eg, pneumonia and urinary tract infection) and more than 1 organism causing infection (eg, urine with Gram negative rods and blood culture with Gram positive cocci), these categories are not mutually exclusive (see Supporting Table 1 in the online version of this article). We used billing codes to identify the use of therapies, monitoring devices, and pharmacologic treatments to characterize both initial severity of illness and severity at the time of CDI diagnosis. These therapies are included in a validated sepsis mortality prediction model (designed for administrative datasets) with similar discrimination and calibration to clinical intensive care unit (ICU) risk‐adjustment models such as the mortality probability model, version III.[26, 27]

Outcomes

Our primary outcome of interest was in‐hospital mortality. Secondary outcomes included LOS and costs for survivors only and for all patients.

Statistical Methods

We calculated patient‐level summary statistics for all patients using frequencies for binary variables and medians and interquartile ranges for continuous variables. P values <0.05 were considered statistically significant.

To account for presenting severity and time to diagnosis, we used methods that have been described elsewhere.[12, 13, 18, 20, 28] First, we identified patients who were eligible to develop HOCDI. Second, for all eligible patients, we identified a date of disease onset (index date). For patients who met criteria for HOCDI, this was the date on which the patient was tested for CDI. For eligible patients without disease, this was a date randomly assigned to any time during the hospital stay.[29] Next, we developed a nonparsimonious propensity score model that included all patient characteristics (demographics, comorbidities, sepsis source, and severity of illness on presentation and on the index date; all variables listed in Table 1 were included in the propensity model). Some of the variables for this model (eg, mechanical ventilation and vasopressors) were derived from a validated severity model.[26] We adjusted for correlation within hospital when creating the propensity score using Huber‐White robust standard error estimators clustered at the hospital level.[30] We then created matched pairs with the same LOS prior to the index date and similar propensity for developing CDI. We first matched on index date, and then, within each index‐date–matched subset, matched patients with and without HOCDI by their propensity score using a 5‐to‐1 greedy match algorithm.[31] We used the differences in LOS between the cases and controls after the index date to calculate the additional attributable LOS estimates. We also separately estimated the impact on cost and LOS in a group limited to those who survived to discharge, because of concerns that death could shorten LOS and reduce costs.
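The matching steps above can be sketched as follows. This is a simplified Python illustration, not the study's Stata code. It assumes that "5‐to‐1" refers to matching on the propensity score rounded to 5 decimal places first and then relaxing to 4, 3, 2, and 1 places, as in the greedy matching approach of the cited SAS paper. The tuple layout and function names are hypothetical, and propensity scores are taken as already estimated.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Patient = Tuple[str, int, float]  # (patient_id, index_day, propensity score)


def random_index_day(length_of_stay: int, rng: random.Random) -> int:
    """Index day for a patient without HOCDI: uniform over the hospital stay."""
    return rng.randint(1, length_of_stay)


def greedy_match(cases: List[Patient], controls: List[Patient]):
    """Match each case to one unused control with the same index day, trying the
    tightest propensity agreement (5 digits) first and relaxing down to 1 digit."""
    pairs: List[Tuple[str, str]] = []
    unmatched = list(cases)
    available = list(controls)
    for digits in (5, 4, 3, 2, 1):
        # Bucket the remaining controls by (index day, rounded propensity).
        buckets: Dict[Tuple[int, float], List[Patient]] = defaultdict(list)
        for ctrl in available:
            buckets[(ctrl[1], round(ctrl[2], digits))].append(ctrl)
        used, remaining = set(), []
        for case in unmatched:
            bucket = buckets.get((case[1], round(case[2], digits)))
            if bucket:
                ctrl = bucket.pop()  # greedy: take a control and never revisit
                pairs.append((case[0], ctrl[0]))
                used.add(ctrl[0])
            else:
                remaining.append(case)
        unmatched = remaining
        available = [c for c in available if c[0] not in used]
    return pairs, [c[0] for c in unmatched]


def mean_excess_los(pairs: List[Tuple[str, str]], los_after_index: Dict[str, int]) -> float:
    """Average case-minus-control difference in LOS after the index day."""
    diffs = [los_after_index[case] - los_after_index[ctrl] for case, ctrl in pairs]
    return sum(diffs) / len(diffs)
```

Because each pair shares an index day, both members have the same LOS before the index date, so only the days after the index date contribute to the attributable difference.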

| Characteristic | Before matching: HOCDI, n=2,368, % | Before matching: No CDI, n=216,547, % | Before matching: P | After matching: HOCDI, n=2,368, % | After matching: No CDI, n=2,368, % | After matching: P |
|---|---|---|---|---|---|---|
| Age, y | 70.9 (15.1) | 68.6 (16.8) | <0.01 | 70.9 (15.1) | 69.8 (15.9) | 0.02 |
| Male | 46.8 | 46.0 | 0.44 | 46.8 | 47.2 | 0.79 |
| Race | | | <0.01 | | | 0.11 |
| White | 61.0 | 63.3 | | 61.0 | 58.1 | |
| Black | 15.6 | 14.5 | | 15.6 | 17.0 | |
| Hispanic | 3.2 | 5.4 | | 3.2 | 4.1 | |
| Other race | 20.2 | 16.8 | | 20.2 | 20.9 | |
| Marital status | | | <0.01 | | | 0.74 |
| Married | 31.6 | 36.3 | | 31.6 | 32.6 | |
| Single/divorced | 52.8 | 51.1 | | 52.8 | 52.0 | |
| Other/unknown | 15.7 | 12.6 | | 15.7 | 14.5 | |
| Insurance status | | | <0.01 | | | 0.50 |
| Medicare traditional | 63.2 | 59.5 | | 63.2 | 60.3 | |
| Medicare managed | 10.6 | 10.1 | | 10.6 | 10.9 | |
| Medicaid traditional | 7.6 | 6.9 | | 7.6 | 8.2 | |
| Medicaid managed | 1.8 | 2.0 | | 1.8 | 1.8 | |
| Managed care | 10.8 | 12.3 | | 10.8 | 12.0 | |
| Commercial | 2.0 | 3.5 | | 2.0 | 2.2 | |
| Self‐pay/other/unknown | 4.0 | 5.7 | | 4.0 | 4.7 | |
| Infection source | | | | | | |
| Respiratory | 46.5 | 37.0 | <0.01 | 46.5 | 49.6 | 0.03 |
| Skin/bone | 10.1 | 8.6 | 0.01 | 10.1 | 11.2 | 0.21 |
| Urinary | 52.2 | 51.3 | 0.38 | 52.2 | 50.3 | 0.18 |
| Blood | 11.1 | 15.1 | <0.01 | 11.1 | 11.5 | 0.65 |
| Infecting organism | | | | | | |
| Gram negative | 35.0 | 36.6 | <0.01 | 35.0 | 33.1 | 0.18 |
| Anaerobe | 1.4 | 0.7 | <0.01 | 1.4 | 1.1 | 0.24 |
| Fungal | 17.5 | 7.5 | <0.01 | 17.5 | 18.3 | 0.44 |
| Most common comorbid conditions | | | | | | |
| Congestive heart failure | 35.1 | 24.6 | <0.01 | 35.1 | 37.5 | 0.06 |
| Chronic lung disease | 31.6 | 27.6 | <0.01 | 31.6 | 32.1 | 0.71 |
| Hypertension | 31.5 | 37.7 | <0.01 | 31.5 | 29.7 | 0.16 |
| Renal failure | 29.7 | 23.8 | <0.01 | 29.7 | 31.2 | 0.28 |
| Weight loss | 27.7 | 13.3 | <0.01 | 27.7 | 29.4 | 0.17 |
| Treatments by day 2 | | | | | | |
| ICU admission | 40.0 | 29.5 | <0.01 | 40.0 | 40.7 | 0.64 |
| Use of bicarbonate | 12.2 | 7.1 | <0.01 | 12.2 | 13.6 | 0.15 |
| Fresh frozen plasma | 1.4 | 1.0 | 0.03 | 1.4 | 1.1 | 0.36 |
| Inotropes | 1.4 | 0.9 | 0.01 | 1.4 | 2.2 | 0.04 |
| Hydrocortisone | 6.7 | 4.7 | <0.01 | 6.7 | 7.4 | 0.33 |
| Thiamine | 4.2 | 3.3 | 0.01 | 4.2 | 4.1 | 0.83 |
| Psychotropics (eg, haldol for delirium) | 10.0 | 9.2 | 0.21 | 10.0 | 10.8 | 0.36 |
| Restraints (eg, for delirium) | 2.0 | 1.5 | 0.05 | 2.0 | 2.5 | 0.29 |
| Angiotensin‐converting enzyme inhibitors | 12.1 | 13.2 | 0.12 | 12.1 | 10.9 | 0.20 |
| Statins | 18.8 | 21.1 | 0.01 | 18.8 | 16.9 | 0.09 |
| Drotrecogin alfa | 0.6 | 0.3 | <0.01 | 0.6 | 0.6 | 0.85 |
| Foley catheter | 19.2 | 19.8 | 0.50 | 19.2 | 22.0 | 0.02 |
| Diuretics | 28.5 | 25.4 | 0.01 | 28.5 | 29.6 | 0.42 |
| Red blood cells | 15.5 | 10.6 | <0.01 | 15.5 | 15.8 | 0.81 |
| Calcium channel blockers | 19.3 | 16.8 | 0.01 | 19.3 | 19.1 | 0.82 |
| β‐Blockers | 32.7 | 29.6 | 0.01 | 32.7 | 30.6 | 0.12 |
| Proton pump inhibitors | 59.6 | 53.1 | <0.01 | 59.6 | 61.0 | 0.31 |

Analysis Across Clinical Subgroups

In a secondary analysis, we examined heterogeneity in the association between HOCDI and outcomes within subsets of patients defined by age, combined comorbidity score, and admission to the ICU by day 2. We created separate propensity scores using the same covariates in the primary analysis, but limited matches to within these subsets. For each group, we examined how the covariates in the HOCDI and control groups differed after matching with inference tests that took the paired nature of the data into account. All analyses were carried out using Stata/SE 11.1 (StataCorp, College Station, TX).

RESULTS

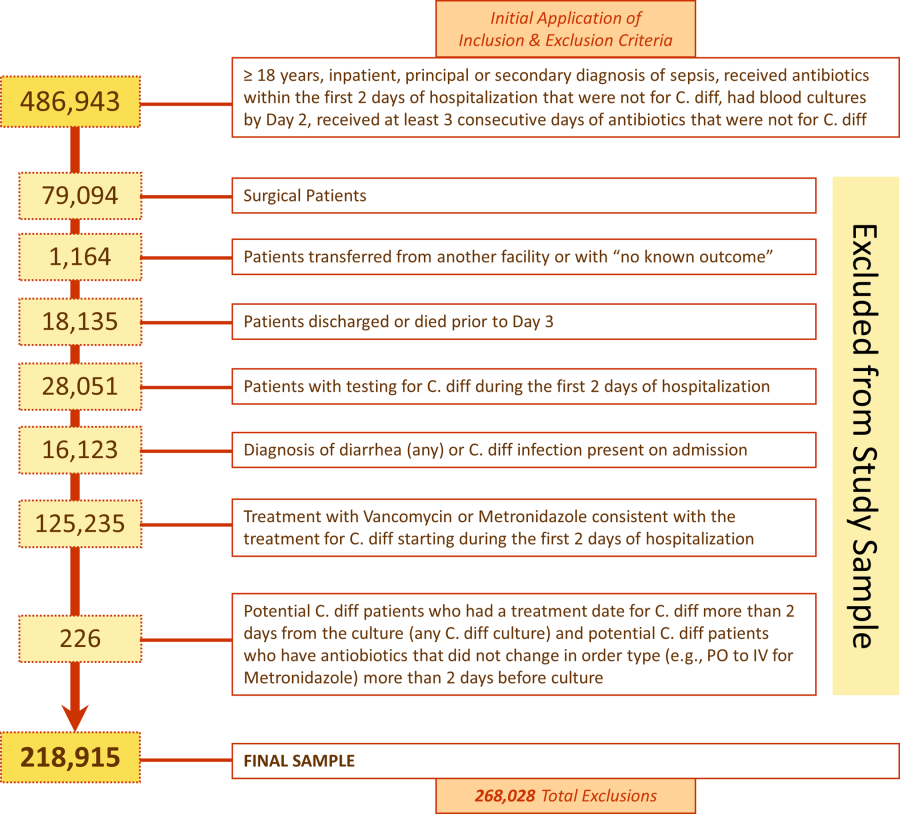

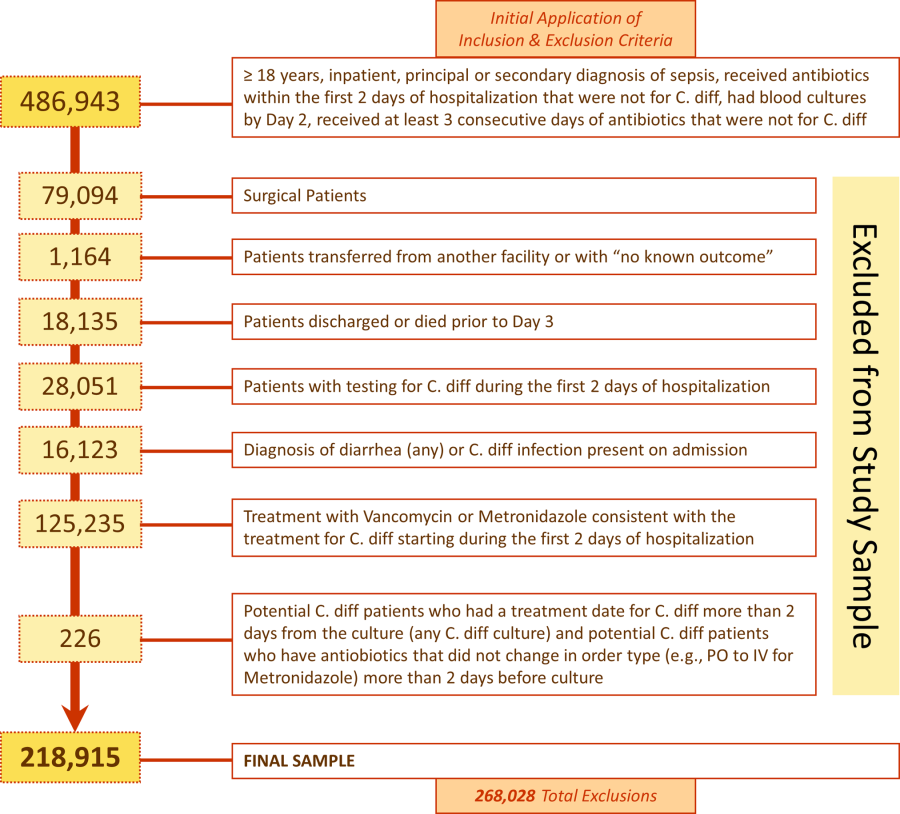

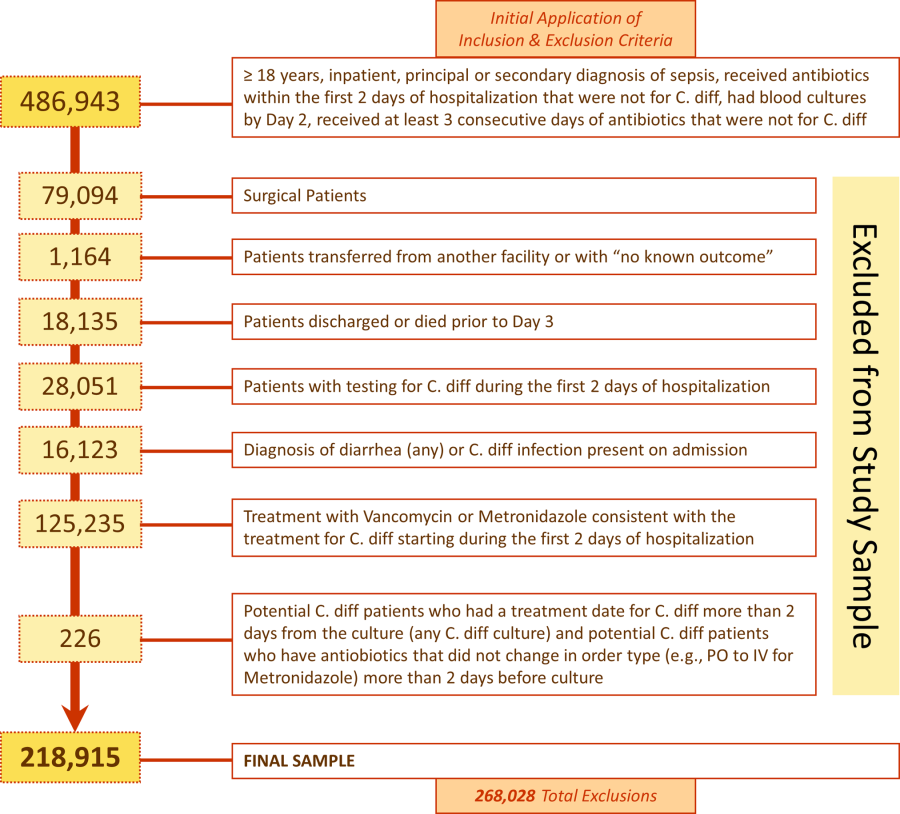

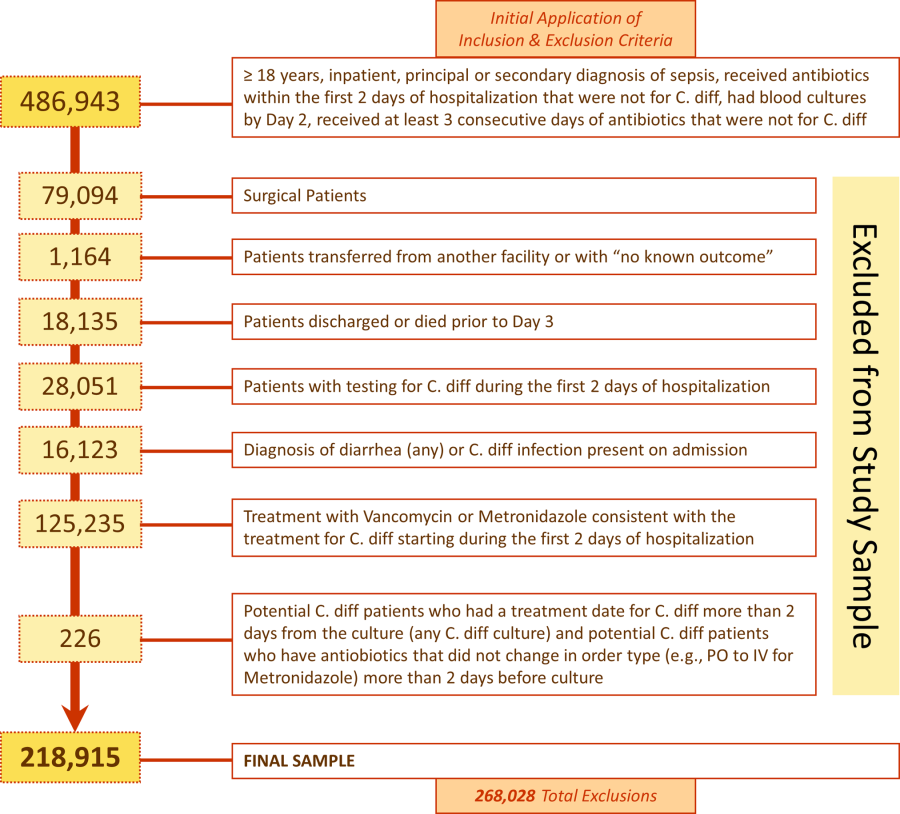

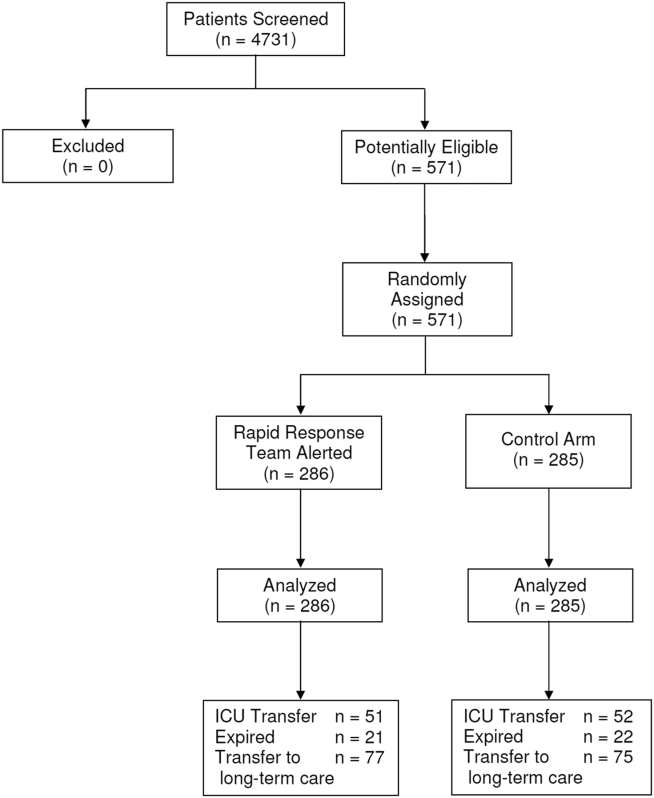

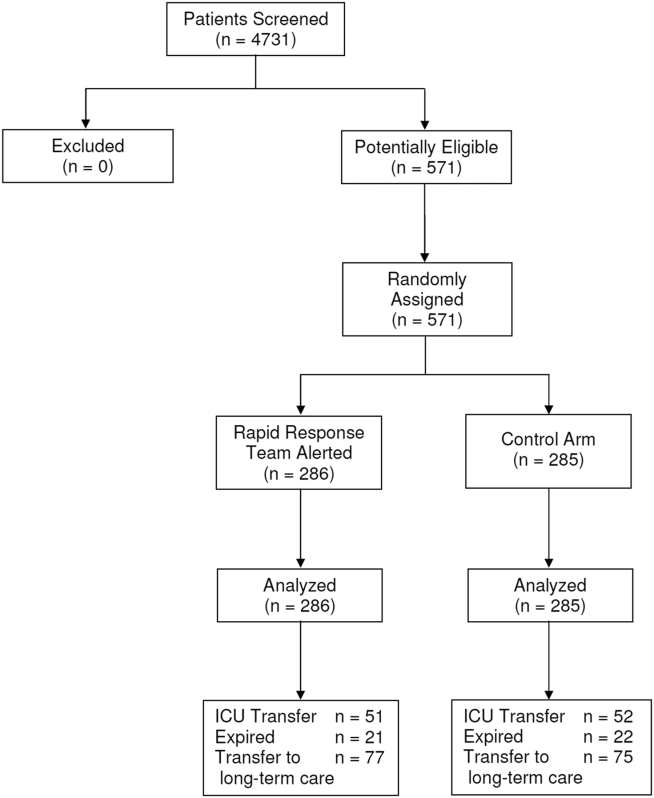

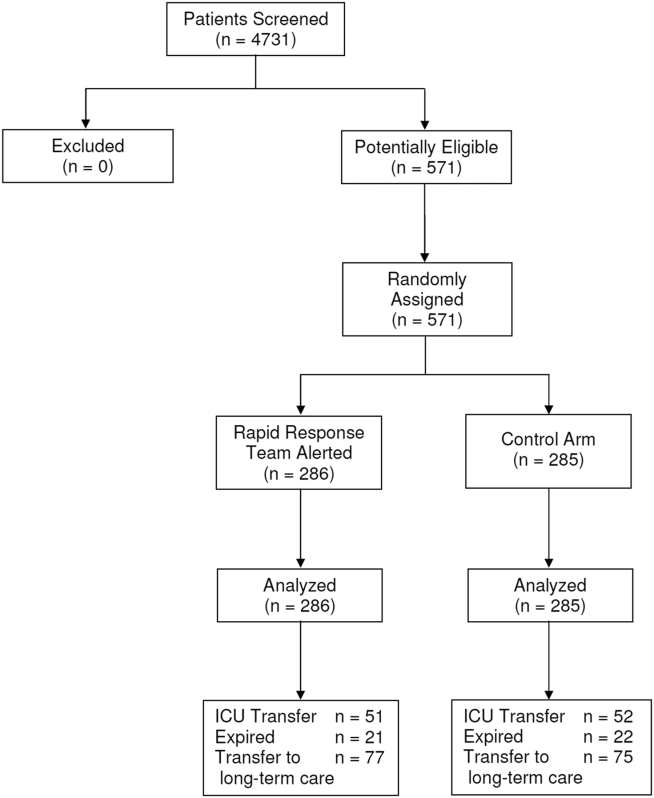

We identified 486,943 adult sepsis admissions to a Premier hospital between July 1, 2004 and December 31, 2010. After applying all exclusion criteria, we had a final sample of 218,915 admissions with sepsis (from 400 hospitals) at risk for HOCDI (Figure 1). Of these, 2368 (1.08%) met criteria for diagnosis of CDI after hospital day 2 and were matched to controls using index date and propensity score.

Patient and Hospital Factors

After matching, the median age was 71 years in cases and 70 years in controls (Table 1). Less than half (46%) of the population was male. Most cases (61%) and controls (58%) were white. Heart failure, hypertension, chronic lung disease, renal failure, and weight loss were the most common comorbid conditions. Our propensity model, which had a C statistic of 0.75, identified patients whose risk varied from a mean of 0.1% in the first decile to a mean of 3.8% in the tenth decile. Before matching, 40% of cases and 29% of controls were treated in the ICU by hospital day 2; after matching, 40% of both cases and controls were treated in the ICU by hospital day 2.

Distribution by LOS, Index Day, and Risk for Mortality

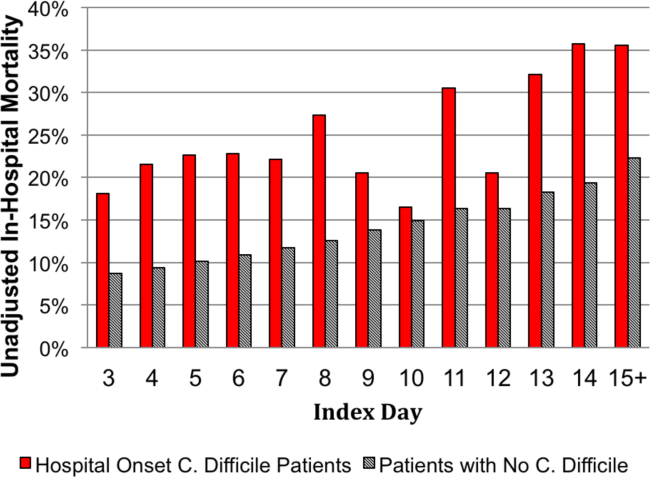

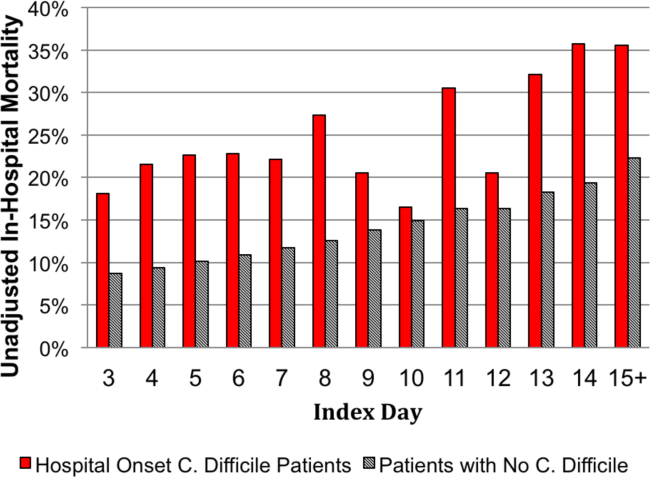

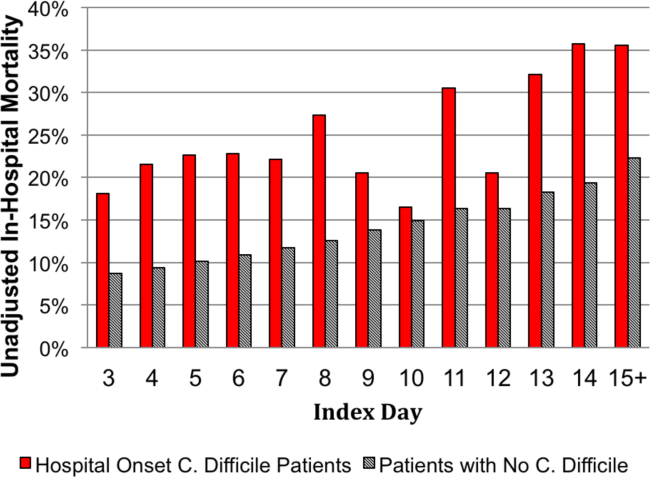

The unadjusted and unmatched LOS was longer for cases than controls (19 days vs 8 days, Table 2) (see Supporting Figure 1 in the online version of this article). Approximately 90% of the patients had an index day of 14 or less (Figure 2). Among patients both with and without CDI, the unadjusted mortality risk increased as the index day (and thus the total LOS) increased.

| Outcome | HOCDI | No HOCDI | Difference (95% CI) | P |
|---|---|---|---|---|
| Length of stay, d | | | | |
| Raw results | 19.2 | 8.3 | 8.4 (8.4–8.5) | <0.01 |
| Raw results for survivors only | 18.6 | 8.0 | 10.6 (10.3–11.0) | <0.01 |
| Matched results | 19.2 | 14.2 | 5.1 (4.4–5.7) | <0.01 |
| Matched results for survivors only | 18.6 | 13.6 | 5.1 (4.4–5.8) | <0.01 |
| Mortality, % | | | | |
| Raw results | 24.0 | 10.1 | 13.9 (12.6–15.1), RR=2.4 (2.2–2.5) | <0.01 |
| Matched results | 24.0 | 15.4 | 8.6 (6.4–10.9), RR=1.6 (1.4–1.8) | <0.01 |
| Costs, US$ | | | | |
| Raw results median costs [interquartile range] | $26,187 [$15,117–$46,273] | $9,988 [$6,296–$17,351] | $16,190 ($15,826–$16,555) | <0.01 |
| Raw results for survivors only [interquartile range] | $24,038 [$14,169–$41,654] | $9,429 [$6,070–$15,875] | $14,620 ($14,246–$14,996) | <0.01 |
| Matched results [interquartile range] | $26,187 [$15,117–$46,273] | $19,160 [$12,392–$33,777] | $5,308 ($4,521–$6,108) | |
| Matched results for survivors only [interquartile range] | $24,038 [$14,169–$41,654] | $17,811 [$11,614–$29,298] | $4,916 ($4,088–$5,768) | <0.01 |

Adjusted Results

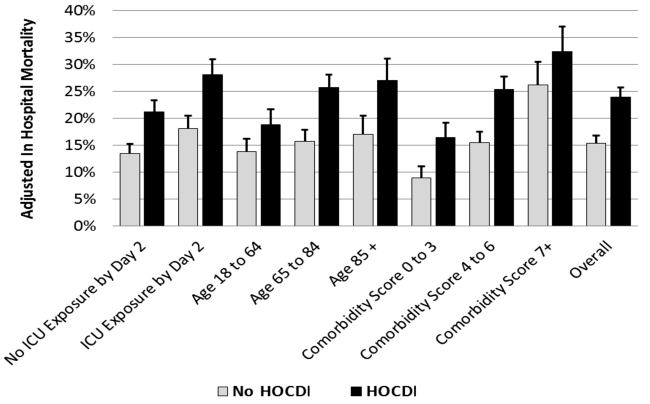

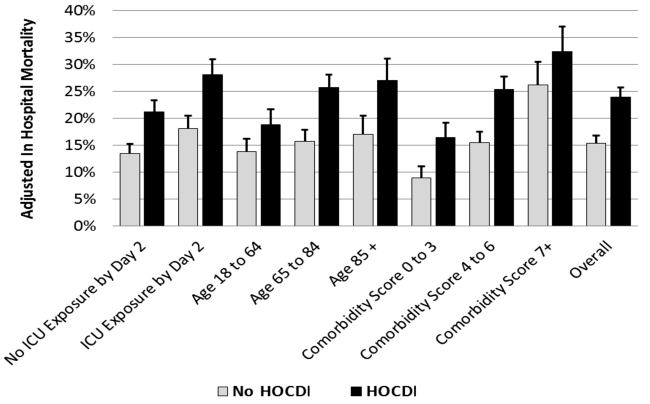

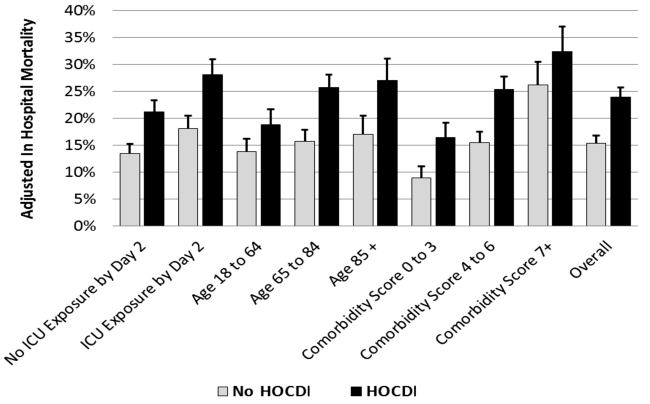

Compared to patients without disease, HOCDI patients had an increased unadjusted mortality (24% vs 10%, P<0.001). This translates into a relative risk of 2.4 (95% confidence interval [CI]: 2.2–2.5). In the matched cohort, the difference in the mortality rates was attenuated, but still significantly higher in the HOCDI patients (24% versus 15%, P<0.001, an absolute difference of 9%; 95% CI: 6.4–10.8). The adjusted relative risk of mortality for HOCDI was 1.6 (95% CI: 1.4–1.8; Table 2). After matching, patients with CDI had a LOS of 19.2 days versus 14.2 days in matched controls (difference of 5.1 days; 95% CI: 4.4–5.7; P<0.001). When the LOS analysis was limited to survivors only, this difference of 5 days remained (P<0.001). In an analysis limited to survivors only, the difference in median costs between cases and controls was $4916 (95% CI: $4088–$5768; P<0.001). In a secondary analysis examining heterogeneity between HOCDI and outcomes across clinical subgroups, the absolute difference in mortality and costs between cases and controls varied across demographics, comorbidity, and ICU admission, but the relative risks were similar (Figure 3) (see Supporting Figure 3 in the online version of this article).
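As a rough check on the matched mortality estimates, the absolute difference and relative risk can be recomputed from the rounded proportions in Table 2 (24.0% vs 15.4%, with 2368 patients per group). The Python sketch below uses standard unpaired Wald intervals, which is an assumption made for illustration; the study used inference that accounted for matching, so the intervals should be expected to agree only approximately with the reported ones.

```python
import math


def risk_difference_and_ratio(p_case, p_control, n_case, n_control, z=1.96):
    """Unpaired Wald intervals for a risk difference and a relative risk.

    Illustrative only: inputs are the rounded proportions from Table 2, and the
    pairing of cases and controls is ignored.
    """
    rd = p_case - p_control
    se_rd = math.sqrt(
        p_case * (1 - p_case) / n_case + p_control * (1 - p_control) / n_control
    )
    rr = p_case / p_control
    # Standard error of log(RR) by the usual large-sample formula.
    se_log_rr = math.sqrt(
        (1 - p_case) / (n_case * p_case) + (1 - p_control) / (n_control * p_control)
    )
    return {
        "rd": rd,
        "rd_ci": (rd - z * se_rd, rd + z * se_rd),
        "rr": rr,
        "rr_ci": (rr * math.exp(-z * se_log_rr), rr * math.exp(z * se_log_rr)),
    }


matched = risk_difference_and_ratio(0.240, 0.154, 2368, 2368)
```

With these inputs, the point estimates reproduce the 8.6 percentage point difference and a relative risk that rounds to 1.6.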

DISCUSSION

In this large cohort of patients with sepsis, we found that approximately 1 in 100 patients with sepsis developed HOCDI. Even after matching with controls based on the date of symptom onset and propensity score, patients who developed HOCDI were approximately 1.6 times as likely to die in the hospital. HOCDI also added 5 days to the average hospitalization for patients with sepsis and increased median costs by approximately $5000. These findings suggest that a hospital that prevents 1 case of HOCDI per month in sepsis patients could avoid 1 death and 60 inpatient days annually, achieving an approximate yearly savings of $60,000.
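The annual figures in the preceding paragraph follow from multiplying the per‐case estimates by 12 prevented cases per year. A small Python sketch of that arithmetic is shown here; the function name and argument names are illustrative, and the inputs are the matched estimates reported in Table 2, with LOS and cost rounded as in the text.

```python
def annual_impact(cases_per_month, excess_mortality, excess_los_days, excess_cost_usd):
    """Scale per-case attributable estimates to one year of prevented cases."""
    cases = 12 * cases_per_month
    return {
        "cases": cases,
        "deaths_avoided": cases * excess_mortality,
        "inpatient_days_avoided": cases * excess_los_days,
        "savings_usd": cases * excess_cost_usd,
    }


# 8.6 percentage points excess mortality, about 5 days and about $5000 per case.
impact = annual_impact(1, 0.086, 5, 5000)
```

Twelve prevented cases at an absolute mortality difference of 8.6 percentage points correspond to roughly 1 death, and 12 cases at 5 days and $5000 each give 60 inpatient days and $60,000.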

Until now, the incremental cost and mortality attributable to HOCDI in sepsis patients have been poorly understood. Attributing outcomes can be methodologically challenging because patients who are at greatest risk for poor outcomes are the most likely to contract the disease and are at risk for longer periods of time. Therefore, it is necessary to take into account differences in severity of illness and time at risk between diseased and nondiseased populations and to ensure that outcomes attributed to the disease occur after disease onset.[28, 32] The majority of prior studies examining the impact of CDI on hospitalized patients have been limited by a lack of adequate matching to controls, small sample size, or failure to take into account time to infection.[16, 17, 19, 20]

A few studies have taken into account severity, time to infection, or both in estimating the impact of HOCDI. Using a time‐dependent Cox model that accounted for time to infection, Micek et al. found no difference in mortality but a longer LOS in mechanically ventilated patients (not limited to sepsis) with CDI.[33] However, their study was conducted at only 3 centers, did not take into account severity at the time of diagnosis, and did not clearly distinguish between community‐onset CDI and HOCDI. Oake et al. and Forster et al. examined the impact of CDI on patients hospitalized in a 2‐hospital health system in Canada.[12, 13] Using the baseline mortality estimate in a Cox multivariate proportional hazards regression model that accounted for the time‐varying nature of CDI, they found that HOCDI increased absolute risk of death by approximately 10%. Also, notably similar to our study were their findings that HOCDI occurred in approximately 1 in 100 patients and that the attributable median increase in LOS due to hospital‐onset CDI was 6 days. Although methodologically rigorous, these 2 small studies did not assess the impact of CDI on costs of care, were not focused on sepsis patients or even patients who received antibiotics, and also did not clearly distinguish between community‐onset CDI and HOCDI.

Our study therefore has important strengths. It is the first to examine the impact of HOCDI, including costs, on the outcomes of patients hospitalized with sepsis. The fact that we took into account both time to diagnosis and severity at the time of diagnosis (by using an index date for both cases and controls and determining severity on that date) prevented us from overestimating the impact of HOCDI on outcomes. The large differences in outcomes we observed in unadjusted and unmatched data were tempered after multivariate adjustment (eg, difference in LOS from 10.6 days to 5.1 additional days, costs from $14,620 to $4916 additional costs after adjustment). Our patient sample was derived from a large, multihospital database that contains actual hospital costs as derived from internal accounting systems. The fact that our study used data from hundreds of hospitals means that our estimates of cost, LOS, and mortality may be more generalizable than the work of Micek et al., Oake et al., and Forster et al.

This work also has important implications. First, hospital administrators, clinicians, and researchers can use our results to evaluate the cost‐effectiveness of HOCDI prevention measures (eg, hand hygiene programs, antibiotic stewardship). By quantifying the cost per case in sepsis patients, we allow administrators and researchers to compare the incremental costs of HOCDI prevention programs to the dollars and lives saved due to prevention efforts. Second, we found that our propensity model identified patients whose risk varied greatly. This suggests that an opportunity exists to identify subgroups of patients that are at highest risk. Identifying high‐risk subgroups will allow for targeted risk reduction interventions and the opportunity to reduce transmission (eg, by placing high‐risk patients in a private room). Finally, we have reaffirmed that time to diagnosis and presenting severity need to be rigorously addressed prior to making estimates of the impact of CDI burden and other hospital‐acquired conditions and injuries.

There are limitations to this study as well. We did not have access to microbiological data. However, we required a diagnosis code of CDI, evidence of testing, and treatment after the date of testing to confirm a diagnosis. We also adopted detailed exclusion criteria to ensure that CDI was not present on admission and that controls did not have CDI. These stringent inclusion and exclusion criteria strengthened the internal validity of our estimates of disease impact. We used administrative claims data, which limited our ability to adjust for severity. However, the detailed nature of the database allowed us to use treatments, such as vasopressors and antibiotics, to identify cases; treatments were also used as a validated indicator of severity,[26] which may have helped to reduce some of this potential bias. Our propensity model included many predictors of CDI, such as use of proton pump inhibitors and factors associated with mortality, but not every confounder was completely balanced after propensity matching. The remaining statistical differences may have been related to our large sample size and therefore might not be clinically significant. We also may have failed to include all possible predictors of CDI in the propensity model.

In a large, diverse cohort of hospitalized patients with sepsis, we found that HOCDI lengthened hospital stay by approximately 5 days, increased the absolute risk of in‐hospital mortality by 9 percentage points, and increased hospital cost by approximately $5000 per patient. These findings highlight the importance of identifying effective prevention measures and of determining the patient populations at greatest risk for HOCDI.

Disclosures: The study was conducted with funding from the Division of Critical Care and the Center for Quality of Care Research at Baystate Medical Center. Dr. Lagu is supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under award number K01HL114745. Dr. Stefan is supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under award number K01HL114631. Drs. Lagu and Lindenauer had full access to all of the data in the study; they take responsibility for the integrity of the data and the accuracy of the data analysis. Drs. Lagu, Lindenauer, Steingrub, Higgins, Stefan, Haessler, and Rothberg conceived of the study. Dr. Lindenauer acquired the data. Drs. Lagu, Lindenauer, Rothberg, Steingrub, Nathanson, Stefan, Haessler, Higgins, and Mr. Hannon analyzed and interpreted the data. Dr. Lagu drafted the manuscript. Drs. Lagu, Lindenauer, Rothberg, Steingrub, Nathanson, Stefan, Haessler, Higgins, and Mr. Hannon critically reviewed the manuscript for important intellectual content. Dr. Nathanson carried out the statistical analyses. Dr. Nathanson, through his company OptiStatim LLC, was paid by the investigators with funding from the Department of Medicine at Baystate Medical Center to assist in conducting the statistical analyses in this study. The authors report no further conflicts of interest.

1. Increasing prevalence and severity of Clostridium difficile colitis in hospitalized patients in the United States. Arch Surg. 2007;142(7):624–631; discussion 631.

2. The changing epidemiology of Clostridium difficile infections. Clin Microbiol Rev. 2010;23(3):529–549.

3. Clostridium Difficile‐Associated Disease in U.S. Hospitals, 1993–2005. HCUP Statistical Brief #50. April 2008. Agency for Healthcare Research and Quality, Rockville, MD. Available at: http://www.hcup‐us.ahrq.gov/reports/statbriefs/sb50.pdf. Accessed April 4, 2014.

4. National point prevalence of Clostridium difficile in US health care facility inpatients, 2008. Am J Infect Control. 2009;37(4):263–270.

5. A 76‐year‐old man with recurrent Clostridium difficile‐associated diarrhea: review of C. difficile infection. JAMA. 2009;301(9):954–962.

6. Recurrent Clostridium difficile disease: epidemiology and clinical characteristics. Infect Control Hosp Epidemiol. 1999;20(1):43–50.

7. Recurrent Clostridium difficile diarrhea: characteristics of and risk factors for patients enrolled in a prospective, randomized, double‐blinded trial. Clin Infect Dis. 1997;24(3):324–333.

8. Narrative review: the new epidemic of Clostridium difficile‐associated enteric disease. Ann Intern Med. 2006;145(10):758–764.

9. Impact of emergency colectomy on survival of patients with fulminant Clostridium difficile colitis during an epidemic caused by a hypervirulent strain. Ann Surg. 2007;245(2):267–272.

10. Hospital‐acquired Clostridium difficile‐associated disease in the intensive care unit setting: epidemiology, clinical course and outcome. BMC Infect Dis. 2007;7:42.

11. Factors associated with prolonged symptoms and severe disease due to Clostridium difficile. Age Ageing. 1999;28(2):107–113.

12. The effect of hospital‐acquired Clostridium difficile infection on in‐hospital mortality. Arch Intern Med. 2010;170(20):1804–1810.

13. The effect of hospital‐acquired infection with Clostridium difficile on length of stay in hospital. CMAJ. 2012;184(1):37–42.

14. Clostridium difficile: more difficult than ever. N Engl J Med. 2008;359(18):1932–1940.

15. Clinical practice guidelines for Clostridium difficile infection in adults: 2010 update by the Society for Healthcare Epidemiology of America (SHEA) and the Infectious Diseases Society of America (IDSA). Infect Control Hosp Epidemiol. 2010;31(5):431–455.

16. Health care costs and mortality associated with nosocomial diarrhea due to Clostridium difficile. Clin Infect Dis. 2002;34(3):346–353.

17. Short‐ and long‐term attributable costs of Clostridium difficile‐associated disease in nonsurgical inpatients. Clin Infect Dis. 2008;46(4):497–504.

18. Estimation of extra hospital stay attributable to nosocomial infections: heterogeneity and timing of events. J Clin Epidemiol. 2000;53(4):409–417.

19. Attributable outcomes of endemic Clostridium difficile‐associated disease in nonsurgical patients. Emerging Infect Dis. 2008;14(7):1031–1038.

20. Excess length of stay, charges, and mortality attributable to medical injuries during hospitalization. JAMA. 2003;290(14):1868–1874.

21. Association of corticosteroid dose and route of administration with risk of treatment failure in acute exacerbation of chronic obstructive pulmonary disease. JAMA. 2010;303(23):2359–2367.

22. The relationship between hospital spending and mortality in patients with sepsis. Arch Intern Med. 2011;171(4):292–299.

23. Comparative effectiveness of macrolides and quinolones for patients hospitalized with acute exacerbations of chronic obstructive pulmonary disease (AECOPD). J Hosp Med. 2010;5(5):261–267.

24. A combined comorbidity score predicted mortality in elderly patients better than existing scores. J Clin Epidemiol. 2011;64(7):749–759.

25. Epidemiology of severe sepsis in the United States: analysis of incidence, outcome, and associated costs of care. Crit Care Med. 2001;29(7):1303–1310.

26. Development and validation of a model that uses enhanced administrative data to predict mortality in patients with sepsis. Crit Care Med. 2011;39(11):2425–2430.

27. Incorporating initial treatments improves performance of a mortality prediction model for patients with sepsis. Pharmacoepidemiol Drug Saf. 2012;21(suppl 2):44–52.

28. Nosocomial infection, length of stay, and time‐dependent bias. Infect Control Hosp Epidemiol. 2009;30(3):273–276.

29. Length of stay and hospital costs among high‐risk patients with hospital‐origin Clostridium difficile‐associated diarrhea. J Med Econ. 2013;16(3):440–448.

30. Rogers. Regression standard errors in clustered samples. Stata Technical Bulletin. 1993;13(13):19–23.

31. Reducing bias in a propensity score matched‐pair sample using greedy matching techniques. In: Proceedings of the 26th Annual SAS Users Group International Conference; April 22–25, 2001; Long Beach, CA. Paper 214‐26. Available at: http://www2.sas.com/proceedings/sugi26/p214‐26.pdf. Accessed April 4, 2014.

32. Prolongation of length of stay and Clostridium difficile infection: a review of the methods used to examine length of stay due to healthcare associated infections. Antimicrob Resist Infect Control. 2012;1(1):14.

33. Clostridium difficile infection: a multicenter study of epidemiology and outcomes in mechanically ventilated patients. Crit Care Med. 2013;41(8):1968–1975.


Identification of Patients at Risk for and Diagnosed With HOCDI

Among eligible patients with sepsis, we aimed to identify a cohort at risk for developing CDI during the hospital stay. We excluded patients: (1) with a diagnosis indicating that diarrhea was present on admission, (2) with a diagnosis of CDI that was indicated to be present on admission, (3) who were tested for CDI on the first or second hospital day, and (4) who received an antibiotic that could be consistent with treatment for CDI (oral or intravenous [IV] metronidazole or oral vancomycin) on hospital days 1 or 2.

Next, we aimed to identify sepsis patients at risk for HOCDI who developed HOCDI during their hospital stay. Among eligible patients described above, we considered a patient to have HOCDI if they had an International Classification of Diseases, Ninth Revision, Clinical Modification diagnosis of CDI (primary or secondary but not present on admission), plus evidence of testing for CDI after hospital day 2, and treatment with oral vancomycin or oral or IV metronidazole that was started after hospital day 2 and within 2 days of the C difficile test, and evidence of treatment for CDI for at least 3 days unless the patient was discharged or died.
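The at‐risk exclusions and the HOCDI case definition above can be read as two predicates over an admission record. The following is a minimal sketch only: the `Admission` fields are hypothetical names (not Premier database fields), hospital days are assumed to be numbered from 1, and "within 2 days of the C difficile test" is interpreted as an absolute difference of at most 2 days.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Admission:
    # All field names are hypothetical; hospital days are numbered from 1.
    diarrhea_on_admission: bool
    cdi_present_on_admission: bool
    cdi_diagnosis_code: bool           # ICD-9-CM CDI code, primary or secondary
    first_cdi_test_day: Optional[int]  # None if never tested for CDI
    first_cdi_drug_day: Optional[int]  # oral vancomycin or oral/IV metronidazole
    cdi_drug_days: int                 # days of CDI-directed treatment
    treatment_cut_short: bool          # discharge or death ended treatment early


def at_risk(a: Admission) -> bool:
    """Apply the four exclusions that define the at-risk cohort."""
    tested_early = a.first_cdi_test_day is not None and a.first_cdi_test_day <= 2
    treated_early = a.first_cdi_drug_day is not None and a.first_cdi_drug_day <= 2
    return not (a.diarrhea_on_admission or a.cdi_present_on_admission
                or tested_early or treated_early)


def has_hocdi(a: Admission) -> bool:
    """Diagnosis code + test after day 2 + timely treatment of adequate length."""
    if not (at_risk(a) and a.cdi_diagnosis_code):
        return False
    if a.first_cdi_test_day is None or a.first_cdi_drug_day is None:
        return False
    # Assumption: "within 2 days of the test" means |drug day - test day| <= 2.
    timely = abs(a.first_cdi_drug_day - a.first_cdi_test_day) <= 2
    long_enough = a.cdi_drug_days >= 3 or a.treatment_cut_short
    return timely and long_enough
```

Keeping the cohort filter separate from the case definition mirrors the two‐step description in the text: controls are at‐risk admissions for which `has_hocdi` is false.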

Patient Information

We recorded patient age, gender, marital status, insurance status, race, and ethnicity. Using software provided by the Healthcare Costs and Utilization Project of the Agency for Healthcare Research and Quality, we categorized information on 30 comorbid conditions. We also created a single numerical comorbidity score based on a previously published and validated combined comorbidity score that predicts 1‐year mortality.[24] Based on a previously described algorithm,[25] we used diagnosis codes to assess the source (lung, abdomen, urinary tract, blood, other) and type of sepsis (Gram positive, Gram negative, mixed, anaerobic, fungal). Because patients can have more than 1 potential source of sepsis (eg, pneumonia and urinary tract infection) and more than 1 organism causing infection (eg, urine with Gram negative rods and blood culture with Gram positive cocci), these categories are not mutually exclusive (see Supporting Table 1 in the online version of this article). We used billing codes to identify the use of therapies, monitoring devices, and pharmacologic treatments to characterize both initial severity of illness and severity at the time of CDI diagnosis. These therapies are included in a validated sepsis mortality prediction model (designed for administrative datasets) with similar discrimination and calibration to clinical intensive care unit (ICU) risk‐adjustment models such as the mortality probability model, version III.[26, 27]

Outcomes

Our primary outcome of interest was in‐hospital mortality. Secondary outcomes included LOS and costs for survivors only and for all patients.

Statistical Methods

We calculated patient‐level summary statistics for all patients using frequencies for binary variables and medians and interquartile ranges for continuous variables. P values <0.05 were considered statistically significant.

To account for presenting severity and time to diagnosis, we used methods that have been described elsewhere.[12, 13, 18, 20, 28] First, we identified patients who were eligible to develop HOCDI. Second, for all eligible patients, we identified a date of disease onset (index date). For patients who met criteria for HOCDI, this was the date on which the patient was tested for CDI. For eligible patients without disease, this was a date randomly assigned to any time during the hospital stay.[29] Next, we developed a nonparsimonious propensity score model that included all patient characteristics (demographics, comorbidities, sepsis source, and severity of illness on presentation and on the index date; all variables listed in Table 1 were included in the propensity model). Some of the variables for this model (eg, mechanical ventilation and vasopressors) were derived from a validated severity model.[26] We adjusted for correlation within hospital when creating the propensity score using Huber‐White robust standard error estimators clustered at the hospital level.[30] We then created matched pairs with the same LOS prior to the index date and similar propensity for developing CDI. We first matched on index date, and then, within each index‐date–matched subset, matched patients with and without HOCDI by their propensity score using a 5‐to‐1 greedy match algorithm.[31] We used the differences in LOS between the cases and controls after the index date to calculate the additional attributable LOS estimates; we also separately estimated the impact on cost and LOS in a group limited to those who survived to discharge because of concerns that death could shorten LOS and reduce costs.
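The index‐date assignment and matching steps described above can be sketched in a few lines. This is an illustration under stated assumptions, not the study's Stata code: the function names and tuple layout are hypothetical, the propensity scores are taken as already estimated, and the 5‐to‐1 digit procedure is approximated by rounding scores to 5, 4, 3, 2, and then 1 decimal places.

```python
import random
from collections import defaultdict


def assign_index_day(los_days, test_day=None, rng=random):
    """Cases use the CDI test day; controls get a random day of their stay."""
    if test_day is not None:
        return test_day
    return rng.randint(1, los_days)


def greedy_match(cases, controls, max_digits=5):
    """Pair each case with one control that shares its index day.

    cases, controls: lists of (patient_id, index_day, propensity) tuples.
    Pass d pairs patients whose scores agree when rounded to d decimals,
    for d = max_digits, ..., 1; matched patients leave the pool.
    """
    pairs, unmatched, used = [], list(cases), set()
    for digits in range(max_digits, 0, -1):
        pool = defaultdict(list)
        for pid, day, ps in controls:
            if pid not in used:
                pool[(day, round(ps, digits))].append(pid)
        still_unmatched = []
        for pid, day, ps in unmatched:
            bucket = pool.get((day, round(ps, digits)))
            if bucket:
                control_id = bucket.pop()
                used.add(control_id)
                pairs.append((pid, control_id))
            else:
                still_unmatched.append((pid, day, ps))
        unmatched = still_unmatched
    return pairs, unmatched


def attributable_los(pairs, los, index_day):
    """Mean case-minus-control difference in days spent after the index day."""
    diffs = [(los[c] - index_day[c]) - (los[k] - index_day[k]) for c, k in pairs]
    return sum(diffs) / len(diffs)
```

Because both members of a pair share the same index day, the post‐index difference equals the difference in total LOS within the pair; the point of the index day is to make sure only controls who were still hospitalized on that day's schedule are compared with each case.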

| Characteristic | Before matching: HOCDI, n=2,368, % | Before matching: No CDI, n=216,547, % | P | After matching: HOCDI, n=2,368, % | After matching: No CDI, n=2,368, % | P |
|---|---|---|---|---|---|---|
| Age, y | 70.9 (15.1) | 68.6 (16.8) | <0.01 | 70.9 (15.1) | 69.8 (15.9) | 0.02 |
| Male | 46.8 | 46.0 | 0.44 | 46.8 | 47.2 | 0.79 |
| Race | | | <0.01 | | | 0.11 |
| White | 61.0 | 63.3 | | 61.0 | 58.1 | |
| Black | 15.6 | 14.5 | | 15.6 | 17.0 | |
| Hispanic | 3.2 | 5.4 | | 3.2 | 4.1 | |
| Other race | 20.2 | 16.8 | | 20.2 | 20.9 | |
| Marital status | | | <0.01 | | | 0.74 |
| Married | 31.6 | 36.3 | | 31.6 | 32.6 | |
| Single/divorced | 52.8 | 51.1 | | 52.8 | 52.0 | |
| Other/unknown | 15.7 | 12.6 | | 15.7 | 14.5 | |
| Insurance status | | | <0.01 | | | 0.50 |
| Medicare traditional | 63.2 | 59.5 | | 63.2 | 60.3 | |
| Medicare managed | 10.6 | 10.1 | | 10.6 | 10.9 | |
| Medicaid traditional | 7.6 | 6.9 | | 7.6 | 8.2 | |
| Medicaid managed | 1.8 | 2.0 | | 1.8 | 1.8 | |
| Managed care | 10.8 | 12.3 | | 10.8 | 12.0 | |
| Commercial | 2.0 | 3.5 | | 2.0 | 2.2 | |
| Self‐pay/other/unknown | 4.0 | 5.7 | | 4.0 | 4.7 | |
| Infection source | | | | | | |
| Respiratory | 46.5 | 37.0 | <0.01 | 46.5 | 49.6 | 0.03 |
| Skin/bone | 10.1 | 8.6 | 0.01 | 10.1 | 11.2 | 0.21 |
| Urinary | 52.2 | 51.3 | 0.38 | 52.2 | 50.3 | 0.18 |
| Blood | 11.1 | 15.1 | <0.01 | 11.1 | 11.5 | 0.65 |
| Infecting organism | | | | | | |
| Gram negative | 35.0 | 36.6 | <0.01 | 35.0 | 33.1 | 0.18 |
| Anaerobe | 1.4 | 0.7 | <0.01 | 1.4 | 1.1 | 0.24 |
| Fungal | 17.5 | 7.5 | <0.01 | 17.5 | 18.3 | 0.44 |
| Most common comorbid conditions | | | | | | |
| Congestive heart failure | 35.1 | 24.6 | <0.01 | 35.1 | 37.5 | 0.06 |
| Chronic lung disease | 31.6 | 27.6 | <0.01 | 31.6 | 32.1 | 0.71 |
| Hypertension | 31.5 | 37.7 | <0.01 | 31.5 | 29.7 | 0.16 |
| Renal Failure | 29.7 | 23.8 | <0.01 | 29.7 | 31.2 | 0.28 |
| Weight Loss | 27.7 | 13.3 | <0.01 | 27.7 | 29.4 | 0.17 |
| Treatments by day 2 | | | | | | |
| ICU admission | 40.0 | 29.5 | <0.01 | 40.0 | 40.7 | 0.64 |
| Use of bicarbonate | 12.2 | 7.1 | <0.01 | 12.2 | 13.6 | 0.15 |
| Fresh frozen plasma | 1.4 | 1.0 | 0.03 | 1.4 | 1.1 | 0.36 |
| Inotropes | 1.4 | 0.9 | 0.01 | 1.4 | 2.2 | 0.04 |
| Hydrocortisone | 6.7 | 4.7 | <0.01 | 6.7 | 7.4 | 0.33 |
| Thiamine | 4.2 | 3.3 | 0.01 | 4.2 | 4.1 | 0.83 |
| Psychotropics (eg, haldol for delirium) | 10.0 | 9.2 | 0.21 | 10.0 | 10.8 | 0.36 |
| Restraints (eg, for delirium) | 2.0 | 1.5 | 0.05 | 2.0 | 2.5 | 0.29 |
| Angiotensin‐converting enzyme inhibitors | 12.1 | 13.2 | 0.12 | 12.1 | 10.9 | 0.20 |
| Statins | 18.8 | 21.1 | 0.01 | 18.8 | 16.9 | 0.09 |
| Drotrecogin alfa | 0.6 | 0.3 | 0.00 | 0.6 | 0.6 | 0.85 |
| Foley catheter | 19.2 | 19.8 | 0.50 | 19.2 | 22.0 | 0.02 |
| Diuretics | 28.5 | 25.4 | 0.01 | 28.5 | 29.6 | 0.42 |
| Red blood cells | 15.5 | 10.6 | <0.01 | 15.5 | 15.8 | 0.81 |
| Calcium channel blockers | 19.3 | 16.8 | 0.01 | 19.3 | 19.1 | 0.82 |
| β‐Blockers | 32.7 | 29.6 | 0.01 | 32.7 | 30.6 | 0.12 |
| Proton pump inhibitors | 59.6 | 53.1 | <0.01 | 59.6 | 61.0 | 0.31 |

Analysis Across Clinical Subgroups

In a secondary analysis, we examined heterogeneity in the association between HOCDI and outcomes within subsets of patients defined by age, combined comorbidity score, and admission to the ICU by day 2. We created separate propensity scores using the same covariates as in the primary analysis, but limited matches to within these subsets. For each group, we examined how the covariates in the HOCDI and control groups differed after matching with inference tests that took the paired nature of the data into account. All analyses were carried out using Stata/SE 11.1 (StataCorp, College Station, TX).

RESULTS

We identified 486,943 adult sepsis admissions to a Premier hospital between July 1, 2004 and December 31, 2010. After applying all exclusion criteria, we had a final sample of 218,915 admissions with sepsis (from 400 hospitals) at risk for HOCDI (Figure 1). Of these, 2368 (1.08%) met criteria for diagnosis of CDI after hospital day 2 and were matched to controls using index date and propensity score.

Patient and Hospital Factors

After matching, the median age was 71 years in cases and 70 years in controls (Table 1). Less than half (46%) of the population was male. Most cases (61%) and controls (58%) were white. Heart failure, hypertension, chronic lung disease, renal failure, and weight loss were the most common comorbid conditions. Our propensity model, which had a C statistic of 0.75, identified patients whose risk varied from a mean of 0.1% in the first decile to a mean of 3.8% in the tenth decile. Before matching, 40% of cases and 29% of controls were treated in the ICU by hospital day 2; after matching, 40% of both cases and controls were treated in the ICU by hospital day 2.

Distribution by LOS, Index Day, and Risk for Mortality

The unadjusted and unmatched LOS was longer for cases than controls (19 days vs 8 days, Table 2) (see Supporting Figure 1 in the online version of this article). Approximately 90% of the patients had an index day of 14 or less (Figure 2). Among patients both with and without CDI, the unadjusted mortality risk increased as the index day (and thus the total LOS) increased.

| Outcome | HOCDI | No HOCDI | Difference (95% CI) | P |
|---|---|---|---|---|
| Length of stay, d | | | | |
| Raw results | 19.2 | 8.3 | 8.4 (8.4–8.5) | <0.01 |
| Raw results for survivors only | 18.6 | 8.0 | 10.6 (10.3–11.0) | <0.01 |
| Matched results | 19.2 | 14.2 | 5.1 (4.4–5.7) | <0.01 |
| Matched results for survivors only | 18.6 | 13.6 | 5.1 (4.4–5.8) | <0.01 |
| Mortality, % | | | | |
| Raw results | 24.0 | 10.1 | 13.9 (12.6–15.1), RR=2.4 (2.2–2.5) | <0.01 |
| Matched results | 24.0 | 15.4 | 8.6 (6.4–10.9), RR=1.6 (1.4–1.8) | <0.01 |
| Costs, US$ | | | | |
| Raw results median costs [interquartile range] | $26,187 [$15,117–$46,273] | $9,988 [$6,296–$17,351] | $16,190 ($15,826–$16,555) | <0.01 |
| Raw results for survivors only [interquartile range] | $24,038 [$14,169–$41,654] | $9,429 [$6,070–$15,875] | $14,620 ($14,246–$14,996) | <0.01 |
| Matched results [interquartile range] | $26,187 [$15,117–$46,273] | $19,160 [$12,392–$33,777] | $5,308 ($4,521–$6,108) | |
| Matched results for survivors only [interquartile range] | $24,038 [$14,169–$41,654] | $17,811 [$11,614–$29,298] | $4,916 ($4,088–$5,768) | <0.01 |

Adjusted Results

Compared to patients without disease, HOCDI patients had an increased unadjusted mortality (24% vs 10%, P<0.001). This translates into a relative risk of 2.4 (95% confidence interval [CI]: 2.2–2.5). In the matched cohort, the difference in the mortality rates was attenuated, but still significantly higher in the HOCDI patients (24% versus 15%, P<0.001, an absolute difference of 9%; 95% CI: 6.4–10.8). The adjusted relative risk of mortality for HOCDI was 1.6 (95% CI: 1.4–1.8; Table 2). After matching, patients with CDI had a LOS of 19.2 days versus 14.2 days in matched controls (difference of 5.1 days; 95% CI: 4.4–5.7; P<0.001). When the LOS analysis was limited to survivors only, this difference of 5 days remained (P<0.001). In an analysis limited to survivors only, the difference in median costs between cases and controls was $4916 (95% CI: $4088–$5768; P<0.001). In a secondary analysis examining heterogeneity between HOCDI and outcomes across clinical subgroups, the absolute difference in mortality and costs between cases and controls varied across demographics, comorbidity, and ICU admission, but the relative risks were similar (Figure 3) (see Supporting Figure 3 in the online version of this article).
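The relative risks and absolute differences quoted above follow directly from the mortality percentages and group sizes reported in the tables. The sketch below recomputes them; the function name is hypothetical, and the confidence interval is a simple unpaired Wald interval chosen for illustration, so it should not be expected to reproduce the published intervals, which may rest on paired methods.

```python
from math import sqrt


def risk_summary(p_cases, p_controls, n_cases, n_controls, z=1.96):
    """Relative risk, risk difference, and an unpaired Wald CI for the difference."""
    diff = p_cases - p_controls
    se = sqrt(p_cases * (1 - p_cases) / n_cases
              + p_controls * (1 - p_controls) / n_controls)
    return {
        "rr": p_cases / p_controls,
        "diff": diff,
        "ci": (diff - z * se, diff + z * se),
    }


# Mortality proportions and group sizes as reported in Tables 1 and 2.
raw = risk_summary(0.240, 0.101, 2368, 216547)
matched = risk_summary(0.240, 0.154, 2368, 2368)

for label, result in (("raw", raw), ("matched", matched)):
    low, high = result["ci"]
    print(f"{label}: RR={result['rr']:.1f}, "
          f"difference={100 * result['diff']:.1f} points "
          f"(Wald 95% CI {100 * low:.1f} to {100 * high:.1f})")
```

The drop in relative risk between the raw and matched comparisons is the attenuation the text describes: matching on index date and propensity score raises control‐group mortality because the matched controls are sicker and have been hospitalized longer than the average patient without CDI.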

DISCUSSION

In this large cohort of patients with sepsis, we found that approximately 1 in 100 patients with sepsis developed HOCDI. Even after matching with controls based on the date of symptom onset and propensity score, patients who developed HOCDI were 1.6 times as likely to die in the hospital. HOCDI also added 5 days to the average hospitalization for patients with sepsis and increased median costs by approximately $5000. These findings suggest that a hospital that prevents 1 case of HOCDI per month in sepsis patients could avoid 1 death and 60 inpatient days annually, achieving an approximate yearly savings of $60,000.
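The annual projection in the preceding paragraph is simple multiplication of the per‐case estimates by the number of cases prevented. A minimal sketch follows; the function and parameter names are hypothetical, and the inputs are the rounded figures quoted in the text (5 days and $5000 per case) together with the matched absolute mortality difference of 8.6% from Table 2.

```python
def annual_benefit(cases_prevented, excess_mortality, excess_los_days, excess_cost):
    """Scale per-case attributable outcomes to a yearly total."""
    return {
        "deaths_avoided": cases_prevented * excess_mortality,
        "inpatient_days_avoided": cases_prevented * excess_los_days,
        "savings_usd": cases_prevented * excess_cost,
    }


# One prevented case per month, using the rounded per-case estimates.
projection = annual_benefit(
    cases_prevented=12,
    excess_mortality=0.086,
    excess_los_days=5,
    excess_cost=5000,
)
print(projection)
```

A hospital with a different prevention target can substitute its own `cases_prevented`; the projection assumes the per‐case estimates apply unchanged to the prevented cases.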

Until now, the incremental cost and mortality attributable to HOCDI in sepsis patients have been poorly understood. Attributing outcomes can be methodologically challenging because patients who are at greatest risk for poor outcomes are the most likely to contract the disease and are at risk for longer periods of time. Therefore, it is necessary to take into account differences in severity of illness and time at risk between diseased and nondiseased populations and to ensure that outcomes attributed to the disease occur after disease onset.[28, 32] The majority of prior studies examining the impact of CDI on hospitalized patients have been limited by a lack of adequate matching to controls, small sample size, or failure to take into account time to infection.[16, 17, 19, 20]

A few studies have taken into account severity, time to infection, or both in estimating the impact of HOCDI. Using a time‐dependent Cox model that accounted for time to infection, Micek et al. found no difference in mortality but a longer LOS in mechanically ventilated patients (not limited to sepsis) with CDI.[33] However, their study was conducted at only 3 centers, did not take into account severity at the time of diagnosis, and did not clearly distinguish between community‐onset CDI and HOCDI. Oake et al. and Forster et al. examined the impact of CDI on patients hospitalized in a 2‐hospital health system in Canada.[12, 13] Using the baseline mortality estimate in a Cox multivariate proportional hazards regression model that accounted for the time‐varying nature of CDI, they found that HOCDI increased absolute risk of death by approximately 10%. Also, notably similar to our study were their findings that HOCDI occurred in approximately 1 in 100 patients and that the attributable median increase in LOS due to hospital‐onset CDI was 6 days. Although methodologically rigorous, these 2 small studies did not assess the impact of CDI on costs of care, were not focused on sepsis patients or even patients who received antibiotics, and also did not clearly distinguish between community‐onset CDI and HOCDI.

Our study therefore has important strengths. It is the first to examine the impact of HOCDI, including costs, on the outcomes of patients hospitalized with sepsis. The fact that we took into account both time to diagnosis and severity at the time of diagnosis (by using an index date for both cases and controls and determining severity on that date) prevented us from overestimating the impact of HOCDI on outcomes. The large differences in outcomes we observed in unadjusted and unmatched data were tempered after multivariate adjustment (eg, difference in LOS from 10.6 days to 5.1 additional days, costs from $14,620 to $4916 additional costs after adjustment). Our patient sample was derived from a large, multihospital database that contains actual hospital costs as derived from internal accounting systems. The fact that our study used data from hundreds of hospitals means that our estimates of cost, LOS, and mortality may be more generalizable than the work of Micek et al., Oake et al., and Forster et al.

This work also has important implications. First, hospital administrators, clinicians, and researchers can use our results to evaluate the cost‐effectiveness of HOCDI prevention measures (eg, hand hygiene programs, antibiotic stewardship). By quantifying the cost per case in sepsis patients, we allow administrators and researchers to compare the incremental costs of HOCDI prevention programs to the dollars and lives saved due to prevention efforts. Second, we found that our propensity model identified patients whose risk varied greatly. This suggests that an opportunity exists to identify subgroups of patients that are at highest risk. Identifying high‐risk subgroups will allow for targeted risk reduction interventions and the opportunity to reduce transmission (eg, by placing high‐risk patients in a private room). Finally, we have reaffirmed that time to diagnosis and presenting severity need to be rigorously addressed prior to making estimates of the impact of CDI burden and other hospital‐acquired conditions and injuries.

There are limitations to this study as well. We did not have access to microbiological data. However, we required a diagnosis code of CDI, evidence of testing, and treatment after the date of testing to confirm a diagnosis. We also adopted detailed exclusion criteria to ensure that CDI was not present on admission and that controls did not have CDI. These stringent inclusion and exclusion criteria strengthened the internal validity of our estimates of disease impact. We used administrative claims data, which limited our ability to adjust for severity. However, the detailed nature of the database allowed us to use treatments, such as vasopressors and antibiotics, to identify cases; treatments were also used as a validated indicator of severity,[26] which may have helped to reduce some of this potential bias. Although our propensity model included many predictors of CDI, such as use of proton pump inhibitors and factors associated with mortality, not every confounder was completely balanced after propensity matching, although the statistical differences may have been related to our large sample size and therefore might not be clinically significant. We also may have failed to include all possible predictors of CDI in the propensity model.

In a large, diverse cohort of hospitalized patients with sepsis, we found that HOCDI lengthened hospital stay by approximately 5 days, increased the absolute risk of in‐hospital mortality by 9 percentage points, and increased hospital cost by approximately $5000 per patient. These findings highlight the importance of identifying effective prevention measures and of determining the patient populations at greatest risk for HOCDI.

Disclosures: The study was conducted with funding from the Division of Critical Care and the Center for Quality of Care Research at Baystate Medical Center. Dr. Lagu is supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under award number K01HL114745. Dr. Stefan is supported by the National Heart, Lung, and Blood Institute of the National Institutes of Health under award number K01HL114631. Drs. Lagu and Lindenauer had full access to all of the data in the study; they take responsibility for the integrity of the data and the accuracy of the data analysis. Drs. Lagu, Lindenauer, Steingrub, Higgins, Stefan, Haessler, and Rothberg conceived of the study. Dr. Lindenauer acquired the data. Drs. Lagu, Lindenauer, Rothberg, Steingrub, Nathanson, Stefan, Haessler, Higgins, and Mr. Hannon analyzed and interpreted the data. Dr. Lagu drafted the manuscript. Drs. Lagu, Lindenauer, Rothberg, Steingrub, Nathanson, Stefan, Haessler, Higgins, and Mr. Hannon critically reviewed the manuscript for important intellectual content. Dr. Nathanson carried out the statistical analyses. Dr. Nathanson, through his company OptiStatim LLC, was paid by the investigators with funding from the Department of Medicine at Baystate Medical Center to assist in conducting the statistical analyses in this study. The authors report no further conflicts of interest.

There are approximately 3 million cases of Clostridium difficile infection (CDI) per year in the United States.[1, 2, 3, 4] Of these, 10% result in a hospitalization or occur as a consequence of the exposures and treatments associated with hospitalization.[1, 2, 3, 4] Some patients with CDI experience mild diarrhea that is responsive to therapy, but other patients experience severe, life‐threatening disease that is refractory to treatment, leading to pseudomembranous colitis, toxic megacolon, and sepsis with a 60‐day mortality rate that exceeds 12%.[5, 6, 7, 8, 9, 10, 11, 12, 13, 14]

Hospital‐onset CDI (HOCDI), defined as C difficile‐associated diarrhea and related symptoms with onset more than 48 hours after admission to a healthcare facility,[15] represents a unique marriage of CDI risk factors.[5] A vulnerable patient is introduced into an environment that contains both exposure to C difficile (through other patients or healthcare workers) and treatment with antibacterial agents that may diminish normal flora. Consequently, CDI is common among hospitalized patients.[16, 17, 18] A particularly important group for understanding the burden of disease is patients who initially present to the hospital with sepsis and subsequently develop HOCDI. Sepsis patients are often critically ill and are universally treated with antibiotics.

Determining the incremental cost and mortality risk attributable to HOCDI is methodologically challenging. Because HOCDI is associated with presenting severity, the sickest patients are also the most likely to contract the disease. HOCDI is also associated with time of exposure or length of stay (LOS). Because LOS is a risk factor, comparing LOS between those with and without HOCDI will overestimate the impact if the time to diagnosis is not taken into account.[16, 17, 19, 20] We aimed to examine the impact of HOCDI in hospitalized patients with sepsis using a large, multihospital database with statistical methods that took presenting severity and time to diagnosis into account.

METHODS

Data Source and Subjects

Permission to conduct this study was obtained from the institutional review board at Baystate Medical Center. We used the Premier Healthcare Informatics database, a voluntary, fee‐supported database created to measure quality and healthcare utilization, which has been used extensively in health services research.[21, 22, 23] In addition to the elements found in hospital claims derived from the uniform billing 04 form, Premier data include an itemized, date‐stamped log of all items and services charged to the patient or their insurer, including medications, laboratory tests, and diagnostic and therapeutic services. Approximately 75% of hospitals that submit data also provide information on actual hospital costs, taken from internal cost accounting systems. The rest provide cost estimates based on Medicare cost‐to‐charge ratios. Participating hospitals are similar to the composition of acute care hospitals nationwide, although they are more commonly small‐ to midsized nonteaching facilities and are more likely to be located in the southern United States.

We included medical (nonsurgical) adult patients with sepsis who were admitted to a participating hospital between July 1, 2004 and December 31, 2010. Because we sought to focus on the care of patients who present to the hospital with sepsis, we defined sepsis as the presence of a diagnosis of sepsis plus evidence of both blood cultures and antibiotic treatment within the first 2 days of hospitalization; we used the first 2 days of hospitalization rather than just the first day because, in administrative datasets, the duration of the first hospital day includes partial days that can vary in length. We excluded patients who died or were discharged prior to day 3, because HOCDI is defined as onset after 48 hours in a healthcare facility.[15] We also excluded surviving patients who received less than 3 consecutive days of antibiotics, and patients who were transferred from or to another acute‐care facility; the latter exclusion criterion was used because we could not accurately determine the onset or subsequent course of their illness.

Identification of Patients at Risk for and Diagnosed With HOCDI

Among eligible patients with sepsis, we aimed to identify a cohort at risk for developing CDI during the hospital stay. We excluded patients: (1) with a diagnosis indicating that diarrhea was present on admission, (2) with a diagnosis of CDI that was indicated to be present on admission, (3) who were tested for CDI on the first or second hospital day, and (4) who received an antibiotic that could be consistent with treatment for CDI (oral or intravenous [IV] metronidazole or oral vancomycin) on hospital days 1 or 2.

Next, we aimed to identify sepsis patients at risk for HOCDI who developed HOCDI during their hospital stay. Among eligible patients described above, we considered a patient to have HOCDI if they had an International Classification of Diseases, Ninth Revision, Clinical Modification diagnosis of CDI (primary or secondary but not present on admission), plus evidence of testing for CDI after hospital day 2, and treatment with oral vancomycin or oral or IV metronidazole that was started after hospital day 2 and within 2 days of the C difficile test, and evidence of treatment for CDI for at least 3 days unless the patient was discharged or died.

Patient Information

We recorded patient age, gender, marital status, insurance status, race, and ethnicity. Using software provided by the Healthcare Costs and Utilization Project of the Agency for Healthcare Research and Quality, we categorized information on 30 comorbid conditions. We also created a single numerical comorbidity score based on a previously published and validated combined comorbidity score that predicts 1‐year mortality.[24] Based on a previously described algorithm,[25] we used diagnosis codes to assess the source (lung, abdomen, urinary tract, blood, other) and type of sepsis (Gram positive, Gram negative, mixed, anaerobic, fungal). Because patients can have more than 1 potential source of sepsis (eg, pneumonia and urinary tract infection) and more than 1 organism causing infection (eg, urine with Gram negative rods and blood culture with Gram positive cocci), these categories are not mutually exclusive (see Supporting Table 1 in the online version of this article). We used billing codes to identify the use of therapies, monitoring devices, and pharmacologic treatments to characterize both initial severity of illness and severity at the time of CDI diagnosis. These therapies are included in a validated sepsis mortality prediction model (designed for administrative datasets) with similar discrimination and calibration to clinical intensive care unit (ICU) risk‐adjustment models such as the mortality probability model, version III.[26, 27]

Outcomes

Our primary outcome of interest was in‐hospital mortality. Secondary outcomes included LOS and costs for survivors only and for all patients.

Statistical Methods

We calculated patient‐level summary statistics for all patients using frequencies for binary variables and medians and interquartile percentiles for continuous variables. P values <0.05 were considered statistically significant.

To account for presenting severity and time to diagnosis, we used methods that have been described elsewhere.[12, 13, 18, 20, 28] First, we identified patients who were eligible to develop HOCDI. Second, for all eligible patients, we identified a date of disease onset (index date). For patients who met criteria for HOCDI, this was the date on which the patient was tested for CDI. For eligible patients without disease, this was a date randomly assigned to any time during the hospital stay.[29] Next, we developed a nonparsimonious propensity score model that included all patient characteristics (demographics, comorbidities, sepsis source, and severity of illness on presentation and on the index date; all variables listed in Table 1 were included in the propensity model). Some of the variables for this model (eg, mechanical ventilation and vasopressors) were derived from a validated severity model.[26] We adjusted for correlation within hospital when creating the propensity score using Huber‐White robust standard error estimators clustered at the hospital level.[30] We then created matched pairs with the same LOS prior to the index date and similar propensity for developing CDI. We first matched on index date, and then, within each index‐datematched subset, matched patients with and without HOCDI by their propensity score using a 5‐to‐1 greedy match algorithm.[31] We used the differences in LOS between the cases and controls after the index date to calculate the additional attributable LOS estimates; we also separately estimated the impact on cost and LOS in a group limited to those who survived after discharge because of concerns that death could shorten LOS and reduce costs.

| Characteristic | Before matching: HOCDI, n=2,368, % | Before matching: No CDI, n=216,547, % | Before matching: P | After matching: HOCDI, n=2,368, % | After matching: No CDI, n=2,368, % | After matching: P |
|---|---|---|---|---|---|---|
| Age, y | 70.9 (15.1) | 68.6 (16.8) | <0.01 | 70.9 (15.1) | 69.8 (15.9) | 0.02 |
| Male | 46.8 | 46.0 | 0.44 | 46.8 | 47.2 | 0.79 |
| Race | | | <0.01 | | | 0.11 |
| White | 61.0 | 63.3 | | 61.0 | 58.1 | |
| Black | 15.6 | 14.5 | | 15.6 | 17.0 | |
| Hispanic | 3.2 | 5.4 | | 3.2 | 4.1 | |
| Other race | 20.2 | 16.8 | | 20.2 | 20.9 | |
| Marital status | | | <0.01 | | | 0.74 |
| Married | 31.6 | 36.3 | | 31.6 | 32.6 | |
| Single/divorced | 52.8 | 51.1 | | 52.8 | 52.0 | |
| Other/unknown | 15.7 | 12.6 | | 15.7 | 14.5 | |
| Insurance status | | | <0.01 | | | 0.50 |
| Medicare traditional | 63.2 | 59.5 | | 63.2 | 60.3 | |
| Medicare managed | 10.6 | 10.1 | | 10.6 | 10.9 | |
| Medicaid traditional | 7.6 | 6.9 | | 7.6 | 8.2 | |
| Medicaid managed | 1.8 | 2.0 | | 1.8 | 1.8 | |
| Managed care | 10.8 | 12.3 | | 10.8 | 12.0 | |
| Commercial | 2.0 | 3.5 | | 2.0 | 2.2 | |
| Self‐pay/other/unknown | 4.0 | 5.7 | | 4.0 | 4.7 | |
| Infection source | | | | | | |
| Respiratory | 46.5 | 37.0 | <0.01 | 46.5 | 49.6 | 0.03 |
| Skin/bone | 10.1 | 8.6 | 0.01 | 10.1 | 11.2 | 0.21 |
| Urinary | 52.2 | 51.3 | 0.38 | 52.2 | 50.3 | 0.18 |
| Blood | 11.1 | 15.1 | <0.01 | 11.1 | 11.5 | 0.65 |
| Infecting organism | | | | | | |
| Gram negative | 35.0 | 36.6 | <0.01 | 35.0 | 33.1 | 0.18 |
| Anaerobe | 1.4 | 0.7 | <0.01 | 1.4 | 1.1 | 0.24 |
| Fungal | 17.5 | 7.5 | <0.01 | 17.5 | 18.3 | 0.44 |
| Most common comorbid conditions | | | | | | |
| Congestive heart failure | 35.1 | 24.6 | <0.01 | 35.1 | 37.5 | 0.06 |
| Chronic lung disease | 31.6 | 27.6 | <0.01 | 31.6 | 32.1 | 0.71 |
| Hypertension | 31.5 | 37.7 | <0.01 | 31.5 | 29.7 | 0.16 |
| Renal failure | 29.7 | 23.8 | <0.01 | 29.7 | 31.2 | 0.28 |
| Weight loss | 27.7 | 13.3 | <0.01 | 27.7 | 29.4 | 0.17 |
| Treatments by day 2 | | | | | | |
| ICU admission | 40.0 | 29.5 | <0.01 | 40.0 | 40.7 | 0.64 |
| Use of bicarbonate | 12.2 | 7.1 | <0.01 | 12.2 | 13.6 | 0.15 |
| Fresh frozen plasma | 1.4 | 1.0 | 0.03 | 1.4 | 1.1 | 0.36 |
| Inotropes | 1.4 | 0.9 | 0.01 | 1.4 | 2.2 | 0.04 |
| Hydrocortisone | 6.7 | 4.7 | <0.01 | 6.7 | 7.4 | 0.33 |
| Thiamine | 4.2 | 3.3 | 0.01 | 4.2 | 4.1 | 0.83 |
| Psychotropics (eg, haldol for delirium) | 10.0 | 9.2 | 0.21 | 10.0 | 10.8 | 0.36 |
| Restraints (eg, for delirium) | 2.0 | 1.5 | 0.05 | 2.0 | 2.5 | 0.29 |
| Angiotensin‐converting enzyme inhibitors | 12.1 | 13.2 | 0.12 | 12.1 | 10.9 | 0.20 |
| Statins | 18.8 | 21.1 | 0.01 | 18.8 | 16.9 | 0.09 |
| Drotrecogin alfa | 0.6 | 0.3 | 0.00 | 0.6 | 0.6 | 0.85 |
| Foley catheter | 19.2 | 19.8 | 0.50 | 19.2 | 22.0 | 0.02 |
| Diuretics | 28.5 | 25.4 | 0.01 | 28.5 | 29.6 | 0.42 |
| Red blood cells | 15.5 | 10.6 | <0.01 | 15.5 | 15.8 | 0.81 |
| Calcium channel blockers | 19.3 | 16.8 | 0.01 | 19.3 | 19.1 | 0.82 |
| β‐Blockers | 32.7 | 29.6 | 0.01 | 32.7 | 30.6 | 0.12 |
| Proton pump inhibitors | 59.6 | 53.1 | <0.01 | 59.6 | 61.0 | 0.31 |

Analysis Across Clinical Subgroups

In a secondary analysis, we examined heterogeneity in the association between HOCDI and outcomes within subsets of patients defined by age, combined comorbidity score, and admission to the ICU by day 2. We created separate propensity scores using the same covariates in the primary analysis, but limited matches to within these subsets. For each group, we examined how the covariates in the HOCDI and control groups differed after matching with inference tests that took the paired nature of the data into account. All analyses were carried out using Stata/SE 11.1 (StataCorp, College Station, TX).

RESULTS

We identified 486,943 adult sepsis admissions to a Premier hospital between July 1, 2004 and December 31, 2010. After applying all exclusion criteria, we had a final sample of 218,915 admissions with sepsis (from 400 hospitals) at risk for HOCDI (Figure 1). Of these, 2368 (1.08%) met criteria for diagnosis of CDI after hospital day 2 and were matched to controls using index date and propensity score.

Patient and Hospital Factors

After matching, the median age was 71 years in cases and 70 years in controls (Table 1). Less than half (46%) of the population was male. Most cases (61%) and controls (58%) were white. Heart failure, hypertension, chronic lung disease, renal failure, and weight loss were the most common comorbid conditions. Our propensity model, which had a C statistic of 0.75, identified patients whose risk varied from a mean of 0.1% in the first decile to a mean of 3.8% in the tenth decile. Before matching, 40% of cases and 29% of controls were treated in the ICU by hospital day 2; after matching, 40% of both cases and controls were treated in the ICU by hospital day 2.
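For readers less familiar with the C statistic reported above, the following Python sketch shows what it measures: the proportion of case‐control pairs in which the case received the higher predicted risk, with ties counted as half. The function name and the toy scores are hypothetical and are not study data.

```python
def c_statistic(scores, outcomes):
    """Concordance (C) statistic for predicted risks.

    scores:   predicted probabilities of developing the condition
    outcomes: 1 if the patient developed the condition, else 0
    """
    concordant = 0.0
    n_pairs = 0
    for case_score, case_outcome in zip(scores, outcomes):
        if case_outcome != 1:
            continue
        for control_score, control_outcome in zip(scores, outcomes):
            if control_outcome != 0:
                continue
            n_pairs += 1
            if case_score > control_score:
                concordant += 1.0   # case ranked above control
            elif case_score == control_score:
                concordant += 0.5   # ties count as half
    return concordant / n_pairs


# Toy example (hypothetical): 2 controls, then 2 cases
toy_c = c_statistic([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])
```

A value of 0.5 corresponds to chance‐level discrimination and 1.0 to perfect separation of cases from controls.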

Distribution by LOS, Index Day, and Risk for Mortality

The unadjusted and unmatched LOS was longer for cases than controls (19 days vs 8 days, Table 2) (see Supporting Figure 1 in the online version of this article). Approximately 90% of the patients had an index day of 14 or less (Figure 2). Among patients both with and without CDI, the unadjusted mortality risk increased as the index day (and thus the total LOS) increased.

| Outcome | HOCDI | No HOCDI | Difference (95% CI) | P |
|---|---|---|---|---|
| Length of stay, d | | | | |
| Raw results | 19.2 | 8.3 | 8.4 (8.4–8.5) | <0.01 |
| Raw results for survivors only | 18.6 | 8.0 | 10.6 (10.3–11.0) | <0.01 |
| Matched results | 19.2 | 14.2 | 5.1 (4.4–5.7) | <0.01 |
| Matched results for survivors only | 18.6 | 13.6 | 5.1 (4.4–5.8) | <0.01 |
| Mortality, % | | | | |
| Raw results | 24.0 | 10.1 | 13.9 (12.6–15.1), RR=2.4 (2.2–2.5) | <0.01 |
| Matched results | 24.0 | 15.4 | 8.6 (6.4–10.9), RR=1.6 (1.4–1.8) | <0.01 |
| Costs, US$ | | | | |
| Raw results median costs [interquartile range] | $26,187 [$15,117–$46,273] | $9,988 [$6,296–$17,351] | $16,190 ($15,826–$16,555) | <0.01 |
| Raw results for survivors only [interquartile range] | $24,038 [$14,169–$41,654] | $9,429 [$6,070–$15,875] | $14,620 ($14,246–$14,996) | <0.01 |
| Matched results [interquartile range] | $26,187 [$15,117–$46,273] | $19,160 [$12,392–$33,777] | $5,308 ($4,521–$6,108) | |
| Matched results for survivors only [interquartile range] | $24,038 [$14,169–$41,654] | $17,811 [$11,614–$29,298] | $4,916 ($4,088–$5,768) | <0.01 |

Adjusted Results

Compared to patients without disease, HOCDI patients had an increased unadjusted mortality (24% vs 10%, P<0.001). This translates into a relative risk of 2.4 (95% confidence interval [CI]: 2.2–2.5). In the matched cohort, the difference in mortality was attenuated, but mortality remained significantly higher in the HOCDI patients (24% versus 15%, P<0.001, an absolute difference of 9 percentage points; 95% CI: 6.4–10.8). The adjusted relative risk of mortality for HOCDI was 1.6 (95% CI: 1.4–1.8; Table 2). After matching, patients with CDI had a LOS of 19.2 days versus 14.2 days in matched controls (difference of 5.1 days; 95% CI: 4.4–5.7; P<0.001). When the LOS analysis was limited to survivors only, this difference of 5 days remained (P<0.001). In an analysis limited to survivors only, the difference in median costs between cases and controls was $4916 (95% CI: $4088–$5768; P<0.001). In a secondary analysis examining heterogeneity in the association between HOCDI and outcomes across clinical subgroups, the absolute difference in mortality and costs between cases and controls varied across demographics, comorbidity, and ICU admission, but the relative risks were similar (Figure 3) (see Supporting Figure 3 in the online version of this article).
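The relationship between the matched mortality rates, the absolute difference, and the relative risk can be checked with a short calculation. The Python sketch below is a simplified illustration, not the authors' method: it uses standard Wald intervals and treats the two groups as independent samples, ignoring the paired design and hospital clustering that the study's inference accounted for. The function name is hypothetical; the inputs are the matched mortality rates and group sizes from Table 2.

```python
import math


def risk_comparison(p_exposed, p_unexposed, n_exposed, n_unexposed, z=1.96):
    """Risk difference and relative risk with Wald 95% intervals.

    Assumes independent groups (a simplification of a matched design).
    """
    rd = p_exposed - p_unexposed
    se_rd = math.sqrt(
        p_exposed * (1 - p_exposed) / n_exposed
        + p_unexposed * (1 - p_unexposed) / n_unexposed
    )
    rr = p_exposed / p_unexposed
    # Standard error of log(RR) for two independent proportions.
    se_log_rr = math.sqrt(
        (1 - p_exposed) / (n_exposed * p_exposed)
        + (1 - p_unexposed) / (n_unexposed * p_unexposed)
    )
    return {
        "rd": rd,
        "rd_ci": (rd - z * se_rd, rd + z * se_rd),
        "rr": rr,
        "rr_ci": (
            math.exp(math.log(rr) - z * se_log_rr),
            math.exp(math.log(rr) + z * se_log_rr),
        ),
    }


# Matched cohort: 24.0% vs 15.4% mortality, 2,368 patients per group
matched = risk_comparison(0.240, 0.154, 2368, 2368)
```

With these inputs the risk difference is 8.6 percentage points and the relative risk is about 1.6, and the simplified intervals fall close to those reported in Table 2.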

DISCUSSION

In this large cohort of patients with sepsis, we found that approximately 1 in 100 patients with sepsis developed HOCDI. Even after matching with controls based on the date of symptom onset and propensity score, patients who developed HOCDI were approximately 1.6 times as likely to die in the hospital. HOCDI also added 5 days to the average hospitalization for patients with sepsis and increased median costs by approximately $5000. These findings suggest that a hospital that prevents 1 case of HOCDI per month in sepsis patients could avoid 1 death and 60 inpatient days annually, achieving an approximate yearly savings of $60,000.
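The annual estimates in the preceding paragraph follow from simple multiplication of the per‐case effects. The Python sketch below reproduces that arithmetic using the rounded matched estimates (8.6 percentage points of excess mortality, 5 extra days, and approximately $5000 per case). The variable names are illustrative, and the calculation assumes that every prevented case would have carried the average attributable burden.

```python
# Per-case attributable effects (rounded matched estimates from the text)
absolute_mortality_difference = 0.086   # 8.6 percentage points
extra_los_days = 5                      # additional inpatient days per case
extra_cost_usd = 5000                   # additional median cost per case

# Scenario from the text: 1 prevented case per month
cases_prevented_per_year = 12

deaths_avoided = cases_prevented_per_year * absolute_mortality_difference
days_avoided = cases_prevented_per_year * extra_los_days
savings_usd = cases_prevented_per_year * extra_cost_usd

print(f"Deaths avoided per year: {deaths_avoided:.2f}")
print(f"Inpatient days avoided per year: {days_avoided}")
print(f"Savings per year: ${savings_usd:,}")
```

The deaths figure comes out slightly above 1 and is rounded to 1 death in the text.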

Until now, the incremental cost and mortality attributable to HOCDI in sepsis patients have been poorly understood. Attributing outcomes can be methodologically challenging because patients who are at greatest risk for poor outcomes are the most likely to contract the disease and are at risk for longer periods of time. Therefore, it is necessary to take into account differences in severity of illness and time at risk between diseased and nondiseased populations and to ensure that outcomes attributed to the disease occur after disease onset.[28, 32] The majority of prior studies examining the impact of CDI on hospitalized patients have been limited by a lack of adequate matching to controls, small sample size, or failure to take into account time to infection.[16, 17, 19, 20]

A few studies have taken into account severity, time to infection, or both in estimating the impact of HOCDI. Using a time‐dependent Cox model that accounted for time to infection, Micek et al. found no difference in mortality but a longer LOS in mechanically ventilated patients (not limited to sepsis) with CDI.[33] However, their study was conducted at only 3 centers, did not take into account severity at the time of diagnosis, and did not clearly distinguish between community‐onset CDI and HOCDI. Oake et al. and Forster et al. examined the impact of CDI on patients hospitalized in a 2‐hospital health system in Canada.[12, 13] Using the baseline mortality estimate in a Cox multivariate proportional hazards regression model that accounted for the time‐varying nature of CDI, they found that HOCDI increased absolute risk of death by approximately 10%. Also, notably similar to our study were their findings that HOCDI occurred in approximately 1 in 100 patients and that the attributable median increase in LOS due to hospital‐onset CDI was 6 days. Although methodologically rigorous, these 2 small studies did not assess the impact of CDI on costs of care, were not focused on sepsis patients or even patients who received antibiotics, and also did not clearly distinguish between community‐onset CDI and HOCDI.

Our study therefore has important strengths. It is the first to examine the impact of HOCDI, including costs, on the outcomes of patients hospitalized with sepsis. The fact that we took into account both time to diagnosis and severity at the time of diagnosis (by using an index date for both cases and controls and determining severity on that date) prevented us from overestimating the impact of HOCDI on outcomes. The large differences in outcomes we observed in unadjusted and unmatched data were tempered after multivariate adjustment (eg, difference in LOS from 10.6 days to 5.1 additional days, costs from $14,620 to $4916 additional costs after adjustment). Our patient sample was derived from a large, multihospital database that contains actual hospital costs as derived from internal accounting systems. The fact that our study used data from hundreds of hospitals means that our estimates of cost, LOS, and mortality may be more generalizable than the work of Micek et al., Oake et al., and Forster et al.

This work also has important implications. First, hospital administrators, clinicians, and researchers can use our results to evaluate the cost‐effectiveness of HOCDI prevention measures (eg, hand hygiene programs, antibiotic stewardship). By quantifying the cost per case in sepsis patients, we allow administrators and researchers to compare the incremental costs of HOCDI prevention programs to the dollars and lives saved due to prevention efforts. Second, we found that our propensity model identified patients whose risk varied greatly. This suggests that an opportunity exists to identify subgroups of patients that are at highest risk. Identifying high‐risk subgroups will allow for targeted risk reduction interventions and the opportunity to reduce transmission (eg, by placing high‐risk patients in a private room). Finally, we have reaffirmed that time to diagnosis and presenting severity need to be rigorously addressed prior to making estimates of the impact of CDI burden and other hospital‐acquired conditions and injuries.

There are limitations to this study as well. We did not have access to microbiological data. However, we required a diagnosis code of CDI, evidence of testing, and treatment after the date of testing to confirm a diagnosis. We also adopted detailed exclusion criteria to ensure that CDI was not present on admission and that controls did not have CDI. These stringent inclusion and exclusion criteria strengthened the internal validity of our estimates of disease impact. We used administrative claims data, which limited our ability to adjust for severity. However, the detailed nature of the database allowed us to use treatments, such as vasopressors and antibiotics, to identify cases; treatments were also used as a validated indicator of severity,[26] which may have helped to reduce some of this potential bias. Our propensity model included many predictors of CDI, such as use of proton pump inhibitors and factors associated with mortality, but not every confounder was completely balanced after propensity matching. The remaining statistical differences may reflect our large sample size and therefore might not be clinically significant. We also may have failed to include all possible predictors of CDI in the propensity model.

In a large, diverse cohort of hospitalized patients with sepsis, we found that HOCDI lengthened hospital stay by approximately 5 days, increased the absolute risk of in‐hospital mortality by approximately 9 percentage points, and increased hospital cost by approximately $5000 per patient. These findings highlight the importance of identifying effective prevention measures and of determining the patient populations at greatest risk for HOCDI.