
Central Line Insertion Using Simulators

The Accreditation Council for Graduate Medical Education mandates that internal medicine residents develop technical proficiency in performing central venous catheterization (CVC).1 Likewise in Canada, technical expertise in performing CVC is a specific competency requirement in the training objectives in internal medicine by the Royal College of Physicians and Surgeons of Canada.2 For certification in Internal Medicine, the American Board of Internal Medicine expects its candidates to be competent with respect to knowledge and understanding of the indications, contraindications, recognition, and management of complications of CVC.3 Despite the importance of procedural teaching in medical education, most internal medicine residency programs do not have formal procedural teaching.4 A number of potential barriers to teaching CVC exist. First, a recent study by the American College of Physicians found that fewer general internists perform procedures than did internists in the 1980s.5 With fewer internists performing procedures, there may be fewer educators with adequate confidence in teaching these procedures.6 Second, with increasing recognition of patient safety, patients are increasingly reluctant to serve as teaching subjects for those who are learning CVC.7 Not surprisingly, residents report low comfort in performing CVC.8

Teaching procedures on simulators allows both teaching and evaluation of procedures without jeopardizing patient safety9 and is a welcome variation from the traditional "see one, do one, teach one" approach.10 Despite the theoretical benefits of simulators in medical education, the efficacy of their use in teaching internal medicine residents remains understudied.

The purpose of our study was to evaluate the benefits of using simulator models in teaching CVC to internal medicine residents. We hypothesized that participation in a simulator‐based teaching session on CVC would improve knowledge of the procedure, performance of the procedure, and confidence in performing it.

Methods

Participants

The study was approved by our university and hospital ethics review board. All first‐year internal medicine residents at a single academic institution who provided written informed consent were included and enrolled in a simulator curriculum on CVC.

Intervention

All participants completed a baseline multiple‐choice knowledge test on anatomy, procedural technique, and complications of CVC, as well as a self‐assessment questionnaire on confidence and prior experience of CVC. Upon completion of these, participants were provided access to Internet links to multimedia educational materials on CVC, including references for suggested reading.11, 12 One week later, participants, in small groups of 3 to 5 subjects, attended a 2‐hour simulation session on CVC. For each participant, the baseline performance of an internal jugular CVC on a simulator (Laerdal IV Torso; Laerdal Medical Corp, Wappingers Falls, NY) was videotaped in a blinded fashion. Blinding was done by assigning participants study numbers. No personal identifying information was recorded, as only gowned and gloved hands, arms, and parts of the simulator were recorded. A faculty member and a senior medical resident then demonstrated to each participant the proper CVC techniques on the simulator, which was followed by a 1‐hour practice session with the simulator. Feedback was provided by the supervising faculty member and senior resident during the practice session. Ultrasound‐guided CVC was demonstrated during the session, but not included for evaluation. Postsession, each participant's repeat performance of an internal jugular CVC was videotaped in a blinded fashion, similar to that previously described. All participants then repeated the multiple‐choice knowledge assessment 1 week after the simulation session. For assessment of knowledge retention and confidence, all participants were invited to complete the multiple‐choice knowledge assessment at 18 months after training on simulation.

Measurements and Outcomes

We assessed knowledge on CVC based on performance on a 20‐item multiple‐choice test (scored out of 20, presented as a percentage). Questions were constructed based on information from published literature,11 covering areas of anatomy, procedural technique, and complications of CVC. Procedural skills were assessed by 2 raters using a previously validated modified global rating scale (5‐point Likert scale design)13, 14 during review of videotaped performances (Appendix A). Our primary outcome was an overall global rating score. Review was done by 2 independent, blinded raters who were not aware of whether the performances were recorded presimulation or postsimulation training sessions. Weighted Kappa scores for interobserver agreement between video reviewers ranged from 0.35 (95% confidence interval, 0.25–0.60) for the global average rating score to 0.43 (95% confidence interval, 0.17–0.53) for the procedural checklist. These Kappa scores indicate fair to moderate agreement, respectively.15
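For readers who want to reproduce this kind of agreement statistic, the following is a minimal sketch of Cohen's weighted kappa for 2 raters. It assumes linear disagreement weights by default; the weighting scheme used in the study is not stated, and the function name, parameters, and example ratings are hypothetical and are not study data.

```python
def weighted_kappa(rater_a, rater_b, categories, weight="linear"):
    """Cohen's weighted kappa for two raters scoring the same performances.

    `categories` is the ordered list of possible scores (e.g. 1..5 on a
    5-point scale). `weight` is "linear" or "quadratic"; the choice is an
    assumption of this sketch, not something reported in the study.
    """
    k = len(categories)
    n = len(rater_a)
    if k < 2 or n == 0 or n != len(rater_b):
        raise ValueError("need >= 2 categories and two equal-length, non-empty rating lists")
    index = {c: i for i, c in enumerate(categories)}

    # Observed joint proportions and each rater's marginal proportions.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1.0 / n
    row = [sum(observed[i]) for i in range(k)]
    col = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    def disagreement(i, j):
        d = abs(i - j) / (k - 1)
        return d if weight == "linear" else d * d

    cells = [(i, j) for i in range(k) for j in range(k)]
    obs = sum(disagreement(i, j) * observed[i][j] for i, j in cells)
    exp = sum(disagreement(i, j) * row[i] * col[j] for i, j in cells)
    if exp == 0:
        raise ValueError("kappa is undefined when both raters use a single identical score")
    return 1.0 - obs / exp


# Hypothetical global rating scores from two video raters (not study data).
scores_a = [3, 4, 4, 5, 3, 4]
scores_b = [3, 4, 5, 5, 4, 4]
print(round(weighted_kappa(scores_a, scores_b, [1, 2, 3, 4, 5]), 3))
```

Weighted kappa gives partial credit when raters differ by a single scale point, which is why it suits ordinal scales such as the global rating scale better than unweighted kappa.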

Secondary outcomes included other domains on the global rating scale such as: time and motion, instrument handling, flow of operation, knowledge of instruments,13, 14 checklist score, number of attempts to locate vein and insert catheter, time to complete the procedure, and self‐reported confidence. The criteria of this checklist score were based on a previously published 14‐item checklist,16 assessing for objective completion of specific tasks (Appendix B). Participants were scored based on 10 items, as the remaining 4 items on the original checklist were not applicable to our simulation session (that is, the items on site selection, catheter selection, Trendelenburg positioning, and sterile sealing of site). In our study, all participants were asked to complete an internal jugular catheterization with a standard kit provided, without the need to dress the site after line insertion. Confidence was assessed by self‐report on a 6‐point Likert scale ranging from none to complete.

Statistical Analysis

Comparisons between pretraining and posttraining were made with the use of McNemar's test for paired data and Wilcoxon signed‐rank test where appropriate. Usual descriptive statistics using mean ± standard deviation (SD), median, and interquartile range (IQR) are reported. Comparisons between presession, postsession, and 18‐month data were analyzed using 1‐way analysis of variance (ANOVA), repeated measures design. Homogeneity of covariance assumption test indicated no evidence of nonsphericity in data (Mauchly's criterion; P = 0.53). All reported P values are 2‐sided. Analyses were conducted using SAS version 9.1 (SAS Institute, Cary, NC).
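To illustrate how a paired binary outcome (for example, whether a resident located the vein on the first attempt before and after training) can be compared, the following is a minimal sketch of McNemar's test in its exact binomial form. Whether the study used the exact or the chi‐square version is not stated, so the exact form is an assumption; the function name and the discordant‐pair counts are hypothetical and are not study data.

```python
from math import comb


def mcnemar_exact(b, c):
    """Two-sided exact McNemar P value from the two discordant-pair counts.

    b: pairs that changed in one direction (e.g. >1 attempt before, 1 after)
    c: pairs that changed in the other direction
    Concordant pairs carry no information for this test and are ignored.
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of change
    k = min(b, c)
    # Under the null hypothesis each discordant pair is a fair coin flip.
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2 * tail)


# Hypothetical discordant counts (not reported in the study).
print(mcnemar_exact(11, 1))
```

Only the discordant pairs enter the calculation, so the paired 2 × 2 table, and not just the marginal pre and post counts, is needed to reproduce a reported P value.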

Results

Of the 33 residents in the first‐year internal medicine program, all consented to participate. Thirty participants (91%) completed the study protocol between January and June 2007. The remaining 3 residents completed the presession assessments and the simulation session, but did not complete the postsession assessments. They were excluded from our analysis. At baseline, 20 participants had rotated through a previous 1‐month intensive care unit (ICU) block, 8 residents had no prior ICU training, and 2 residents had 2 months of ICU training. Table 1 summarizes the residents' baseline CVC experience prior to the simulation training. In general, participants had limited experience with CVC at baseline, having placed very few internal jugular CVCs (median = 4).

Table 1. Baseline CVC experience prior to simulation training, reported as median (IQR)

| | Femoral Lines | Internal Jugular Lines | Subclavian Lines |
|---|---|---|---|
| Number observed (IQR) | 4 (2–5) | 5 (3–7) | 2 (1–3) |
| Number attempted or performed (IQR) | 3 (1–5) | 4 (2–8) | 0 (0–2) |
| Number performed successfully without assistance (IQR) | 2 (0–3) | 4 (1–5) | 0 (0–1) |

Simulation training was associated with an increase in knowledge. Mean scores on multiple‐choice tests increased from 65.7 ± 11.9% to 81.2 ± 10.7% (P < 0.001). Furthermore, simulation training was associated with improvement in CVC performance. Median global average rating score increased from 3.5 (IQR = 3–4) to 4.5 (IQR = 4–4.5) (P < 0.001).

The procedural checklist median score increased from 9 (IQR = 6–9.5) to 9.5 (IQR = 9–9.5) (P < 0.001), and the median time required for completion of CVC decreased from 8 minutes 22 seconds (IQR = 7 minutes 37 seconds to 12 minutes 1 second) to 6 minutes 43 seconds (IQR = 6 minutes to 7 minutes 25 seconds) (P = 0.002). Other performance measures are summarized in Table 2. Last, simulation training was associated with an increase in self‐rated confidence. The median confidence rating (0 lowest, 5 highest) increased from 3 (moderate, IQR = 2–3) to 4 (good, IQR = 3–4) (P < 0.001). The overall satisfaction with the CVC simulation course was good (Table 3) and all 30 participants felt this course should continue to be offered.

Table 2. Other performance measures before (Pre) and after (Post) simulation training

| | Pre | Post | P Value |
|---|---|---|---|
| Number of attempts to locate vein; n | | | 0.01 |
| 1 | 16 | 26 | |
| 2 | 14 | 4 | |
| Number of attempts to insert catheter; n | | | 0.32 |
| 1 | 27 | 29 | |
| >1 | 3 | 1 | |
| Median time and motion score (IQR) | 4 (3–4) | 5 (4–5) | <0.001 |
| Median instrument handling score (IQR) | 4 (3–4) | 5 (4–5) | <0.001 |
| Median flow of operation score (IQR) | 4 (3–5) | 5 (4–5) | <0.001 |
| Median knowledge of instruments score (IQR) | 4 (3–5) | 5 (4–5) | 0.004 |

Table 3. Participant satisfaction with the CVC simulation course (number of participants)

| | Agree Strongly | Agree | Neutral | Disagree | Disagree Strongly |
|---|---|---|---|---|---|
| Course improved technical ability to insert central lines | 3 | 21 | 6 | 0 | 0 |
| Course decreased anxiety related to line placement | 6 | 22 | 2 | 0 | 0 |
| Course improved ability to deliver patient care | 6 | 22 | 2 | 0 | 0 |

Knowledge Retention

Sixteen participants completed the knowledge‐retention multiple‐choice tests. No significant differences in baseline characteristics were found between those who completed the knowledge retention tests (n = 16) vs. those who did not (n = 17) in baseline knowledge scores, previous ICU training, gender, and baseline self‐rated confidence.

Mean baseline multiple‐choice test score in the 16 participants who completed the knowledge retention tests was 65.3 ± 10.6%. Mean score 1 week posttraining was 80.3 ± 9.4%. Mean score at 18 months was 78.4 ± 9.6%. Results from 1‐way ANOVA, repeated‐measures design showed a significant improvement in knowledge scores, F(2, 30) = 14.83, P < 0.0001. Contrasts showed that scores at 1 week were significantly higher than baseline scores (P < 0.0001), and improvement in scores at 18 months was retained, remaining significantly higher than baseline scores (P = 0.002). Median confidence level increased from 3 (moderate) at baseline, to 4 (good) at 1‐week posttraining. Confidence level remained 4 (good) at 18 months (P < 0.0001).
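As a consistency check on the reported F statistic, the degrees of freedom can be derived from the design. This assumes the standard univariate repeated‐measures ANOVA; the symbols n (participants, here 16) and k (time points, here 3: baseline, 1 week, and 18 months) are introduced only for illustration.

$$
\mathrm{df}_{\text{time}} = k - 1 = 3 - 1 = 2, \qquad
\mathrm{df}_{\text{error}} = (k - 1)(n - 1) = 2 \times 15 = 30
$$

These values agree with the reported F(2, 30).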

Discussion

CVC is an important procedural competency for internists to master. Indications for CVC range from measurement of central venous pressure to administration of specific medications or solutions such as inotropic agents and total parenteral nutrition. Its use is important in the care of those who are acutely or critically ill and those who are chronically ill. CVC is associated with a number of potentially serious mechanical complications, including arterial puncture, pneumothorax, and death.11, 17 Proficiency in CVC insertion is closely related to operator experience: catheter insertion by physicians who have performed 50 or more CVCs is one‐half as likely to result in complications as catheter insertion by less experienced physicians.18 Furthermore, training has been found to be associated with a decrease in risk for catheter‐associated infection19 and pneumothorax.20 Therefore, cumulative experience from repeated clinical encounters and education on CVC play an important role in optimizing patient safety and reducing potential errors associated with CVC. It is well documented that patients are generally reluctant to allow medical trainees to learn or practice procedures on them,7 especially in the early phases of training. Indeed, close to 50% of internal medicine residents in 1 study reported poor comfort in performing CVCs.8

Deficiencies in procedural teaching in internal medicine residency curricula have long been recognized.4 Over the past 20 years, there has been a decrease in the percentage of internists who place central lines in practice.5 In the United States, more than 5 million central venous catheters are inserted every year.21 Presumably, concurrent with this trend is an increasing reliance on other specialists such as radiologists or surgical colleagues in the placement of CVCs. Without a concerted effort by medical educators to improve the quality of procedural teaching, this alarming trend is unlikely to be halted.

With advances in modern technology and medical education, patient simulation offers a new platform for procedural teaching: a way for trainees to practice without jeopardizing patient safety, as well as an opportunity for formal teaching and supervision to occur,9, 22 serving as a welcome departure from the traditional "see one, do one, teach one" model of procedural teaching. While there are data to support the role of simulation teaching in surgical training programs,16, 23 less objective evidence exists to support its use in internal medicine residency training programs.

Our study supports the use of simulation in training first‐year internal medicine residents in CVC. Our formal curriculum on CVC involving simulation was associated with an increase in knowledge of the procedure, observed procedural performance, and subjective confidence. Furthermore, this improvement in knowledge and confidence was maintained at 18 months.

Our study has several limitations. First, it shares the limitations inherent to all studies using a prestudy and poststudy design. Improvement in performance under study may be a result of the Hawthorne effect. We are not able to isolate the effect of self‐directed learning from the benefits of training using simulators. Furthermore, improvement in knowledge and confidence may be a function of time rather than training. However, rather than comparing our results with those from a historical cohort, we had participants serve as their own controls in the hope of minimizing confounders. Results from our study would be strengthened by the introduction of a control group and a randomized design. Future study should include a randomized, controlled crossover educational trial.

The second limitation of our study is the low interobserver agreement between video raters, indicating only fair to moderate agreement. Although our video raters were trained senior medical residents and faculty members who frequently supervise and evaluate procedures, we did not specifically train them for the purposes of scoring videotaped performances. Further faculty development is needed. Overall, the magnitudes of the interobserver differences were small (mean global rating scale between raters differed by 1 ± 0.9), and may not translate into clinically important differences.

Third, competence in performing CVC on simulators has not been directly correlated with clinical competence on real‐life patients, although a previous study on surgical interns suggested that simulator training was associated with clinical competence on real patients.16 Future studies are warranted to investigate the association between educational benefits from simulation and actual error reductions from CVC during patient encounters. Fourth, our study did not examine residents in the use of ultrasound during simulator training. Use of ultrasound devices has been shown to decrease complication rates of CVC insertion24 and increase rates of success,25 and is recommended as the preferred method for insertion of elective internal jugular CVCs.26 However, because our course was introduced during first‐year residency, when the majority of the residents had performed fewer than 5 internal jugular CVCs prior to their simulation training session, the objective of our introductory course was to first ensure technical competency by landmark techniques. Once learners have mastered the technical aspect of line insertion, ultrasound‐guided techniques are introduced later in the residency training curriculum.

Fifth, only 48% of participants completed the knowledge assessment at 18 months. Selection bias may be present in our long‐term data. However, baseline comparisons between those who completed the 18‐month assessment vs. those who did not demonstrated no obvious differences between the 2 groups. Performance was not examined in our long‐term data. Demonstration of retention of psychomotor skills would be reassuring. However, by 18 months, many of our learners had completed a number of procedurally intensive rotations. Therefore, because retention of skills could not be reliably attributed to our initial training in the simulator laboratory, the participants were not retested in their performance of CVC. Future study should include a control group that undergoes delayed training at 18 months to best assess the effect of simulation training on performance.

Sixth, only overall confidence in performing CVC was captured. Confidence in each venous access site pretraining and posttraining was not assessed and warrants further study. Last, our study did not document overall costs of delivering the program, including cost of the simulator, facility, supplies, and stipends for teaching faculty. One full‐time equivalent (FTE) staff member has previously been estimated to be a requirement for the success of the program.9 Our program required 3 medical educators, all of whom are clinical faculty members, as well as the help of 4 chief medical residents.

Despite these limitations, our study has a number of strengths. First, to our knowledge, our study is the first of its kind examining the use of simulators on CVC procedural performance, with the use of video‐recording, in an independent and blinded manner. Blinding should minimize bias associated with assessments of resident competency by evaluators who may be aware of residents' previous experience. Second, we were able to recruit all of our first‐year residents for our pretests and posttests, although 3 did not complete the study in its entirety due to on‐call or post‐call schedules. We were able to obtain detailed assessment of knowledge, performance, and subjective confidence before and after the simulation training on our cohort of residents and were able to demonstrate an improvement in knowledge, skills, and confidence. Furthermore, we were able to demonstrate that benefits in knowledge and confidence were retained at 18 months. Last, we readily implemented our formal CVC curriculum into the existing residency training schedule. It requires a single, 2‐hour session in addition to brief pretest and posttest surveys. It requires minimal equipment, using only 2 CVC mannequins, 4 to 6 central line kits, and an ultrasound machine. The simulation training adheres to and incorporates key elements that have been previously identified as effective teaching tools for simulators: feedback, repetitive practice, and curriculum integration.27 With increasing interest in the use of medical simulation for training and competency assessment in medical education,10 our study provides evidence in support of its use in improving knowledge, skills, and confidence. One of the most important goals of using simulators as an educational tool is to improve patient safety. While assessing whether training on simulators leads to improved patient outcomes is beyond the scope of our study, this question should be the focus of future studies.

Conclusions

Training on CVC simulators was associated with an improvement in performance and with increases in knowledge assessment scores and self‐reported confidence among internal medicine residents. Improvement in knowledge and confidence was maintained at 18 months.

Acknowledgements

The authors acknowledge the help of Dr. Valentyna Koval and the staff at the Center of Excellence in Surgical Education and Innovations, as well as Drs. Matt Bernard, Raheem B. Kherani, John Staples, Hin Hin Ko, and Dan Renouf for their help with reviewing videotapes and with simulation teaching.

Appendix A

Modified Global Rating Scale

Appendix B

Procedural Checklist

References

- Accreditation Council of Graduate Medical Education. ACGME Program Requirements for Residency Education in Internal Medicine. Available at: http://www.acgme.org/acWebsite/downloads/RRC_progReq/140_im_07012007.pdf. Accessed June 2009.

- The Royal College of Physicians and Surgeons of Canada. Objectives of training in internal medicine. Available at: http://rcpsc.medical.org/information/index.php?specialty=13615(6):432–433.

- The declining number and variety of procedures done by general internists: a resurvey of members of the American College of Physicians. Ann Intern Med. 2007;146(5):355–360.

- Confidence of academic general internists and family physicians to teach ambulatory procedures. J Gen Intern Med. 2000;15(6):353–360.

- Patients' willingness to allow residents to learn to practice medical procedures. Acad Med. 2004;79(2):144–147.

- Beyond the comfort zone: residents assess their comfort performing inpatient medical procedures. Am J Med. 2006;119(1):71.e17–e24.

- Clinical simulation: importance to the internal medicine educational mission. Am J Med. 2007;120(9):820–824.

- Simulation technology for skills training and competency assessment in medical education. J Gen Intern Med. 2008;23(suppl 1):46–49.

- Preventing complications of central venous catheterization. N Engl J Med. 2003;348(12):1123–1133.

- Videos in clinical medicine. Central venous catheterization. N Engl J Med. 2007;356(21):e21.

- Testing technical skill via an innovative “bench station” examination. Am J Surg. 1997;173(3):226–230.

- Objective assessment of technical skills in surgery. BMJ. 2003;327(7422):1032–1037.

- Diagnosis. Measuring agreement beyond chance. In: Guyatt G, Rennie D, eds. User's Guides to the Medical Literature: A Manual for Evidence‐Based Clinical Practice. Chicago: American Medical Association Press; 2002:461–470.

- Cognitive task analysis for teaching technical skills in an inanimate surgical skills laboratory. Am J Surg. 2004;187(1):114–119.

- Central venous catheterization. Crit Care Med. 2007;35(5):1390–1396.

- Central vein catheterization. Failure and complication rates by three percutaneous approaches. Arch Intern Med. 1986;146(2):259–261.

- Education of physicians‐in‐training can decrease the risk for vascular catheter infection. Ann Intern Med. 2000;132(8):641–648.

- Training fourth‐year medical students in critical invasive skills improves subsequent patient safety. Am Surg. 2003;69(5):437–440.

- Intravascular‐catheter‐related infections. Lancet. 1998;351(9106):893–898.

- Procedural simulation's developing role in medicine. Lancet. 2007;369(9574):1671–1673.

- Teaching surgical skills—changes in the wind. N Engl J Med. 2006;355(25):2664–2669.

- Effect of the implementation of NICE guidelines for ultrasound guidance on the complication rates associated with central venous catheter placement in patients presenting for routine surgery in a tertiary referral centre. Br J Anaesth. 2007;99(5):662–665.

- Ultrasonic locating devices for central venous cannulation: meta‐analysis. BMJ. 2003;327(7411):361.

- National Institute for Clinical Excellence. Guidance on the use of ultrasound locating devices for placing central venous catheters. Available at: http://www.nice.org.uk/Guidance/TA49/Guidance/pdf/English. Accessed June 2009.

- Features and uses of high‐fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27(1):10–28.

The Accreditation Council for Graduate Medical Education mandates that internal medicine residents develop technical proficiency in performing central venous catheterization (CVC).1 Likewise in Canada, technical expertise in performing CVC is a specific competency requirement in the training objectives in internal medicine by the Royal College of Physicians and Surgeons of Canada.2 For certification in Internal Medicine, the American Board of Internal Medicine expects its candidates to be competent with respect to knowledge and understanding of the indications, contraindications, recognition, and management of complications of CVC.3 Despite the importance of procedural teaching in medical education, most internal medicine residency programs do not have formal procedural teaching.4 A number of potential barriers to teaching CVC exist. A recent study by the American College of Physicians found that fewer general internists perform procedures, compared with internists in the 1980s.5 With fewer internists performing procedures, there may be fewer educators with adequate confidence in teaching these procedures.6 Second, with increasing recognition of patient safety, there is a growing reluctance of patients to be used as teaching subjects for those who are learning CVC.7 Not surprisingly, residents report low comfort in performing CVC.8

Teaching procedures on simulators offers the benefits of allowing both teaching and evaluation of procedures without jeopardizing patient safety9 and is a welcomed variation from the traditional see 1, do 1, teach 1 approach.10 Despite the theoretical benefits of the use of simulators in medical education, the efficacy of their use in teaching internal medicine residents remains understudied.

The purpose of our study was to evaluate the benefits of using simulator models in teaching CVC to internal medicine residents. We hypothesized that participation in a simulator‐based teaching session on CVC would result in improved knowledge and performance of the procedure and confidence.

Methods

Participants

The study was approved by our university and hospital ethics review board. All first‐year internal medicine residents in a single academic institution, who provided written informed consent, were included and enrolled in a simulator curriculum on CVC.

Intervention

All participants completed a baseline multiple choice knowledge test on anatomy, procedural technique, and complications of CVC, as well as a self‐assessment questionnaire on confidence and prior experience of CVC. Upon completion of these, participants were provided access to Internet links of multimedia educational materials on CVC, including references for suggested reading.11, 12 One week later, participants, in small groups of 3 to 5 subjects, attended a 2‐hour simulation session on CVC. For each participant, the baseline performance of an internal jugular CVC on a simulator (Laerdal IV Torso; Laerdal Medical Corp, Wappingers Falls, NY) was videotaped in a blinded fashion. Blinding was done by assigning participants study numbers. No personal identifying information was recorded, as only gowned and gloved hands, arms, and parts of the simulator were recorded. A faculty member and a senior medical resident then demonstrated to each participant the proper CVC techniques on the simulator, which was followed by an 1‐hour practice session with the simulator. Feedback was provided by the supervising faculty member and senior resident during the practice session. Ultrasound‐guided CVC was demonstrated during the session, but not included for evaluation. postsession, each participant's repeat performance of an internal jugular CVC was videotaped in a blinded fashion, similar to that previously described. All participants then repeated the multiple‐choice knowledge assessment 1 week after the simulation session. For assessment of knowledge retention and confidence, all participants were invited to complete the multiple‐choice knowledge assessment at 18 months after training on simulation.

Measurements and Outcomes

We assessed knowledge on CVC based on performance on a 20‐item multiple‐choice test (scored out of 20, presented as a percentage). Questions were constructed based on information from published literature,11 covering areas of anatomy, procedural technique, and complications of CVC. Procedural skills were assessed by 2 raters using a previously validated modified global rating scale (5‐point Likert scale design)13, 14 during review of videotaped performances (Appendix A). Our primary outcome was an overall global rating score. Review was done by 2 independent, blinded raters who were not aware of whether the performances were recorded presimulation or postsimulation training sessions. Weighted Kappa scores for interobserver agreement between video reviewers ranged between 0.35 (95% confidence interval, 0.250.60) for the global average rating score to 0.43 (95% confidence interval, 0.170.53) for the procedural checklist. These Kappa scores indicate fair to moderate agreement, respectively.15

Secondary outcomes included other domains on the global rating scale such as: time and motion, instrument handling, flow of operation, knowledge of instruments,13, 14 checklist score, number of attempts to locate vein and insert catheter, time to complete the procedure, and self‐reported confidence. The criteria of this checklist score were based on a previously published 14‐item checklist,16 assessing for objective completion of specific tasks (Appendix B). Participants were scored based on 10 items, as the remaining 4 items on the original checklist were not applicable to our simulation session (that is, the items on site selection, catheter selection, Trendelenburg positioning, and sterile sealing of site). In our study, all participants were asked to complete an internal jugular catheterization with a standard kit provided, without the need to dress the site after line insertion. Confidence was assessed by self‐report on a 6‐point Likert scale ranging from none to complete.

Statistical Analysis

Comparisons between pretraining and posttraining were made with the use of McNemar's test for paired data and Wilcoxon signed‐rank test where appropriate. Usual descriptive statistics using mean standard deviation (SD), median and interquartile range (IQR) are reported. Comparisons between presession, postsession, and 18‐month data were analyzed using 1‐way analysis of variance (ANOVA), repeated measures design. Homogeneity of covariance assumption test indicated no evidence of nonsphericity in data (Mauchly's criterion; P = 0.53). All reported P values are 2‐sided. Analyses were conducted using the SAS version 9.1 (SAS Institute, Cary, NC).

Results

Of the 33 residents in the first‐year internal medicine program, all consented to participate. Thirty participants (91%) completed the study protocol between January and June 2007. The remaining three residents completed the presession assessments and the simulation session, but did not complete the postsession assessments. These were excluded from our analysis. At baseline, 20 participants had rotated through a previous 1‐month intensive care unit (ICU) block, 8 residents had no prior ICU training, and 2 residents had 2 months of ICU training. Table 1 summarizes the residents' baseline CVC experience prior to the simulation training. In general, participants had limited experience with CVC at baseline, having placed very few internal jugular CVCs (median = 4).

| Femoral Lines | Internal Jugular Lines | Subclavian Lines | |

|---|---|---|---|

| |||

| Number observed (IQR) | 4 (25) | 5 (37) | 2 (13) |

| Number attempted or performed (IQR) | 3 (15) | 4 (28) | 0 (02) |

| Number performed successfully without assistance (IQR) | 2 (03) | 4 (15) | 0 (01) |

Simulation training was associated with an increase in knowledge. Mean scores on multiple‐choice tests increased from 65.7 11.9% to 81.2 10.7 (P < 0.001). Furthermore, simulation training was associated with improvement in CVC performance. Median global average rating score increased from 3.5 (IQR = 34) to 4.5 (IQR = 44.5) (P < 0.001).

The procedural checklist median score increased from 9 (IQR = 6–9.5) to 9.5 (IQR = 9–9.5) (P < 0.001). Median time required for completion of CVC decreased from 8 minutes 22 seconds (IQR = 7 minutes 37 seconds to 12 minutes 1 second) to 6 minutes 43 seconds (IQR = 6 minutes to 7 minutes 25 seconds) (P = 0.002). Other performance measures are summarized in Table 2. Last, simulation training was associated with an increase in self‐rated confidence. The median confidence rating (0 lowest, 5 highest) increased from 3 (moderate, IQR = 2–3) to 4 (good, IQR = 3–4) (P < 0.001). The overall satisfaction with the CVC simulation course was good (Table 3), and all 30 participants felt this course should continue to be offered.

| | Pre | Post | P Value |
|---|---|---|---|
| Number of attempts to locate vein; n (%) | | | 0.01 |
| 1 | 16 | 26 | |
| 2 | 14 | 4 | |
| Number of attempts to insert catheter; n (%) | | | 0.32 |
| 1 | 27 | 29 | |
| >1 | 3 | 1 | |
| Median time and motion score (IQR) | 4 (3–4) | 5 (4–5) | <0.001 |
| Median instrument handling score (IQR) | 4 (3–4) | 5 (4–5) | <0.001 |
| Median flow of operation score (IQR) | 4 (3–5) | 5 (4–5) | <0.001 |
| Median knowledge of instruments score (IQR) | 4 (3–5) | 5 (4–5) | 0.004 |

| | Agree Strongly | Agree | Neutral | Disagree | Disagree Strongly |
|---|---|---|---|---|---|
| Course improved technical ability to insert central lines | 3 | 21 | 6 | 0 | 0 |
| Course decreased anxiety related to line placement | 6 | 22 | 2 | 0 | 0 |
| Course improved ability to deliver patient care | 6 | 22 | 2 | 0 | 0 |

Knowledge Retention

Sixteen participants completed the knowledge‐retention multiple‐choice tests. No significant differences in baseline characteristics were found between those who completed the knowledge retention tests (n = 16) vs. those who did not (n = 17) in baseline knowledge scores, previous ICU training, gender, and baseline self‐rated confidence.

Mean baseline multiple‐choice test score in the 16 participants who completed the knowledge retention tests was 65.3% ± 10.6%. Mean score 1 week posttraining was 80.3% ± 9.4%. Mean score at 18 months was 78.4% ± 9.6%. Results from 1‐way ANOVA, repeated‐measures design showed a significant improvement in knowledge scores, F(2, 30) = 14.83, P < 0.0001. Contrasts showed that scores at 1 week were significantly higher than baseline scores (P < 0.0001), and improvement in scores at 18 months was retained, remaining significantly higher than baseline scores (P = 0.002). Median confidence level increased from 3 (moderate) at baseline to 4 (good) at 1 week posttraining. Confidence level remained 4 (good) at 18 months (P < 0.0001).
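As a rough consistency check on the reported ANOVA result, the degrees of freedom and the upper‐tail P value can be recomputed from the figures above. This is only a sketch, not the authors' analysis: it assumes a 1‐way repeated‐measures design with 16 participants and 3 time points and no sphericity correction, and the helper names `rm_anova_df` and `f_upper_tail_df1_2` are illustrative. It relies on the closed form of the F distribution's upper tail when the numerator has 2 degrees of freedom, P(F > f) = (1 + 2f/d2)^(−d2/2).

```python
def rm_anova_df(n_subjects, n_timepoints):
    """Degrees of freedom for a 1-way repeated-measures ANOVA (assumed design)."""
    df_effect = n_timepoints - 1
    df_error = (n_timepoints - 1) * (n_subjects - 1)
    return df_effect, df_error


def f_upper_tail_df1_2(f_value, df_error):
    """Upper-tail probability P(F > f_value) for an F(2, df_error) distribution.

    Valid only when the numerator degrees of freedom equal 2, where the
    survival function has the closed form (1 + 2f/d2) ** (-d2/2).
    """
    return (1.0 + 2.0 * f_value / df_error) ** (-df_error / 2.0)


df_effect, df_error = rm_anova_df(n_subjects=16, n_timepoints=3)
p_value = f_upper_tail_df1_2(14.83, df_error)

print(df_effect, df_error)          # degrees of freedom for the F statistic
print(f"P = {p_value:.1e}")         # should fall below the reported 0.0001
```

Under these assumptions the degrees of freedom come out as (2, 30), matching the reported F(2, 30), and the tail probability is on the order of 10⁻⁵, in line with P < 0.0001.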

Discussion

CVC is an important procedural competency for internists to master. Indications for CVC range from measurement of central venous pressure to administration of specific medications or solutions such as inotropic agents and total parenteral nutrition. Its use is important in the care of those who are acutely or critically ill and those who are chronically ill. CVC is associated with a number of potentially serious mechanical complications, including arterial puncture, pneumothorax, and death.11, 17 Proficiency in CVC insertion is closely related to operator experience: catheter insertion by physicians who have performed 50 or more CVCs is one‐half as likely to result in complications as catheter insertion by less experienced physicians.18 Furthermore, training has been found to be associated with a decrease in risk for catheter‐associated infection19 and pneumothorax.20 Therefore, cumulative experience from repeated clinical encounters and education on CVC play an important role in optimizing patient safety and reducing potential errors associated with CVC. It is well‐documented that patients are generally reluctant to allow medical trainees to learn or practice procedures on them,7 especially in the early phases of training. Indeed, close to 50% of internal medicine residents in 1 study reported poor comfort in performing CVCs.8

Deficiencies in procedural teaching in internal medicine residency curricula have long been recognized.4 Over the past 20 years, there has been a decrease in the percentage of internists who place central lines in practice.5 In the United States, more than 5 million central venous catheters are inserted every year.21 Presumably, concurrent with this trend is an increasing reliance on other specialists such as radiologists or surgical colleagues in the placement of CVCs. Without a concerted effort by medical educators to improve the quality of procedural teaching, this alarming trend is unlikely to be halted.

With the advance of modern technology and medical education, patient simulation offers a new platform for procedural teaching: a way for trainees to practice without jeopardizing patient safety, as well as an opportunity for formal teaching and supervision to occur,9, 22 serving as a welcome departure from the traditional see 1, do 1, teach 1 model of procedural teaching. While there are data to support the role of simulation teaching in surgical training programs,16, 23 less objective evidence exists to support its use in internal medicine residency training programs.

Our study supports the use of simulation in training first‐year internal medicine residents in CVC. Our formal curriculum on CVC involving simulation was associated with an increase in knowledge of the procedure, observed procedural performance, and subjective confidence. Furthermore, this improvement in knowledge and confidence was maintained at 18 months. Our study has several limitations, such as those inherent to all studies using the prestudy and poststudy design. Improvement in performance under study may be a result of the Hawthorne effect. We are not able to isolate the effect of self‐directed learning from the benefits of training using simulators. Furthermore, improvement in knowledge and confidence may be a function of time rather than training. However, by having participants serve as their own controls rather than comparing our results with those from a historical cohort, we hoped to minimize confounders. Results from our study would be strengthened by the introduction of a control group and a randomized design. Future study should include a randomized, controlled crossover educational trial. The second limitation of our study is the low interobserver agreement between video raters, indicating only fair to moderate agreement. Although our video raters were trained senior medical residents and faculty members who frequently supervise and evaluate procedures, we did not specifically train them for the purposes of scoring videotaped performances. Further faculty development is needed. Overall, the magnitudes of the interobserver differences were small (mean global rating scores differed between raters by 1 ± 0.9), and may not translate into clinically important differences. 
Third, competence in performing CVC on simulators has not been directly correlated with clinical competence on real‐life patients, although a previous study on surgical interns suggested that simulator training was associated with clinical competence on real patients.16 Future studies are warranted to investigate the association between educational benefits from simulation and actual error reductions from CVC during patient encounters. Fourth, our study did not examine residents in the use of ultrasound during simulator training. Use of ultrasound devices has been shown to decrease complication rates of CVC insertion24 and increase rates of success,25 and is recommended as the preferred method for insertion of elective internal jugular CVCs.26 However, because our course was introduced during first‐year residency, when the majority of residents had performed fewer than 5 internal jugular CVCs prior to their simulation training session, the objective of our introductory course was to first ensure technical competency by landmark techniques. Once learners had mastered the technical aspect of line insertion, ultrasound‐guided techniques were introduced later in the residency training curriculum. Fifth, only 48% of participants completed the knowledge assessment at 18 months. Selection bias may be present in our long‐term data. However, baseline comparisons between those who completed the 18‐month assessment vs. those who did not demonstrated no obvious differences between the 2 groups. Performance was not examined in our long‐term data. Demonstration of retention of psychomotor skills would be reassuring. However, by 18 months, many of our learners had completed a number of procedurally intensive rotations. Therefore, because retention of skills could not be reliably attributed to our initial training in the simulator laboratory, the participants were not retested in their performance of the CVC. 
Future study should include a control group that undergoes delayed training at 18 months to best assess the effect of simulation training on performance. Sixth, only overall confidence in performing CVC was captured. Confidence in each venous access site pretraining and posttraining was not assessed and warrants further study. Last, our study did not document overall costs of delivering the program, including cost of the simulator, facility, supplies, and stipends for teaching faculty. One full‐time equivalent (FTE) staff has previously been estimated to be a requirement for the success of the program.9 Our program required 3 medical educators, all of whom are clinical faculty members, as well as the help of 4 chief medical residents.

Despite these limitations, our study has a number of strengths. To our knowledge, our study is the first of its kind examining the use of simulators on CVC procedural performance, with the use of video‐recording, in an independent and blinded manner. Blinding should minimize bias associated with assessments of resident competency by evaluators who may be aware of residents' previous experience. Second, we were able to recruit all of our first‐year residents for our pretests and posttests, although 3 did not complete the study in its entirety due to on‐call or post‐call schedules. We were able to obtain detailed assessment of knowledge, performance, and subjective confidence before and after the simulation training on our cohort of residents and were able to demonstrate an improvement in knowledge, skills, and confidence. Furthermore, we were able to demonstrate that benefits in knowledge and confidence were retained at 18 months. Last, we readily implemented our formal CVC curriculum into the existing residency training schedule. It requires a single, 2‐hour session in addition to brief pretest and posttest surveys. It requires minimal equipment, using only 2 CVC mannequins, 4 to 6 central line kits, and an ultrasound machine. The simulation training adheres to and incorporates key elements that have been previously identified as effective teaching tools for simulators: feedback, repetitive practice, and curriculum integration.27 With increasing interest in the use of medical simulation for training and competency assessment in medical education,10 our study provides evidence in support of its use in improving knowledge, skills, and confidence. One of the most important goals of using simulators as an educational tool is to improve patient safety. While assessing whether training on simulators leads to improved patient outcomes is beyond the scope of our study, this question should be the focus of future studies.

Conclusions

Training on CVC simulators was associated with an improvement in performance and an increase in knowledge assessment scores and self‐reported confidence in internal medicine residents. Improvement in knowledge and confidence was maintained at 18 months.

Acknowledgements

The authors acknowledge the help of Dr. Valentyna Koval and the staff at the Center of Excellence in Surgical Education and Innovations, as well as Drs. Matt Bernard, Raheem B. Kherani, John Staples, Hin Hin Ko, and Dan Renouf for their help in reviewing videotapes and in simulation teaching.

Appendix A

Modified Global Rating Scale

Appendix B

Procedural Checklist

The Accreditation Council for Graduate Medical Education mandates that internal medicine residents develop technical proficiency in performing central venous catheterization (CVC).1 Likewise in Canada, technical expertise in performing CVC is a specific competency requirement in the training objectives in internal medicine by the Royal College of Physicians and Surgeons of Canada.2 For certification in Internal Medicine, the American Board of Internal Medicine expects its candidates to be competent with respect to knowledge and understanding of the indications, contraindications, recognition, and management of complications of CVC.3 Despite the importance of procedural teaching in medical education, most internal medicine residency programs do not have formal procedural teaching.4 A number of potential barriers to teaching CVC exist. A recent study by the American College of Physicians found that fewer general internists perform procedures, compared with internists in the 1980s.5 With fewer internists performing procedures, there may be fewer educators with adequate confidence in teaching these procedures.6 Second, with increasing recognition of patient safety, there is a growing reluctance of patients to be used as teaching subjects for those who are learning CVC.7 Not surprisingly, residents report low comfort in performing CVC.8

Teaching procedures on simulators offers the benefits of allowing both teaching and evaluation of procedures without jeopardizing patient safety9 and is a welcomed variation from the traditional see 1, do 1, teach 1 approach.10 Despite the theoretical benefits of the use of simulators in medical education, the efficacy of their use in teaching internal medicine residents remains understudied.

The purpose of our study was to evaluate the benefits of using simulator models in teaching CVC to internal medicine residents. We hypothesized that participation in a simulator‐based teaching session on CVC would result in improved knowledge and performance of the procedure and confidence.

Methods

Participants

The study was approved by our university and hospital ethics review board. All first‐year internal medicine residents in a single academic institution, who provided written informed consent, were included and enrolled in a simulator curriculum on CVC.

Intervention

All participants completed a baseline multiple choice knowledge test on anatomy, procedural technique, and complications of CVC, as well as a self‐assessment questionnaire on confidence and prior experience of CVC. Upon completion of these, participants were provided access to Internet links of multimedia educational materials on CVC, including references for suggested reading.11, 12 One week later, participants, in small groups of 3 to 5 subjects, attended a 2‐hour simulation session on CVC. For each participant, the baseline performance of an internal jugular CVC on a simulator (Laerdal IV Torso; Laerdal Medical Corp, Wappingers Falls, NY) was videotaped in a blinded fashion. Blinding was done by assigning participants study numbers. No personal identifying information was recorded, as only gowned and gloved hands, arms, and parts of the simulator were recorded. A faculty member and a senior medical resident then demonstrated to each participant the proper CVC techniques on the simulator, which was followed by an 1‐hour practice session with the simulator. Feedback was provided by the supervising faculty member and senior resident during the practice session. Ultrasound‐guided CVC was demonstrated during the session, but not included for evaluation. postsession, each participant's repeat performance of an internal jugular CVC was videotaped in a blinded fashion, similar to that previously described. All participants then repeated the multiple‐choice knowledge assessment 1 week after the simulation session. For assessment of knowledge retention and confidence, all participants were invited to complete the multiple‐choice knowledge assessment at 18 months after training on simulation.

Measurements and Outcomes

We assessed knowledge on CVC based on performance on a 20‐item multiple‐choice test (scored out of 20, presented as a percentage). Questions were constructed based on information from published literature,11 covering areas of anatomy, procedural technique, and complications of CVC. Procedural skills were assessed by 2 raters using a previously validated modified global rating scale (5‐point Likert scale design)13, 14 during review of videotaped performances (Appendix A). Our primary outcome was an overall global rating score. Review was done by 2 independent, blinded raters who were not aware of whether the performances were recorded presimulation or postsimulation training sessions. Weighted Kappa scores for interobserver agreement between video reviewers ranged between 0.35 (95% confidence interval, 0.250.60) for the global average rating score to 0.43 (95% confidence interval, 0.170.53) for the procedural checklist. These Kappa scores indicate fair to moderate agreement, respectively.15

Secondary outcomes included other domains on the global rating scale such as: time and motion, instrument handling, flow of operation, knowledge of instruments,13, 14 checklist score, number of attempts to locate vein and insert catheter, time to complete the procedure, and self‐reported confidence. The criteria of this checklist score were based on a previously published 14‐item checklist,16 assessing for objective completion of specific tasks (Appendix B). Participants were scored based on 10 items, as the remaining 4 items on the original checklist were not applicable to our simulation session (that is, the items on site selection, catheter selection, Trendelenburg positioning, and sterile sealing of site). In our study, all participants were asked to complete an internal jugular catheterization with a standard kit provided, without the need to dress the site after line insertion. Confidence was assessed by self‐report on a 6‐point Likert scale ranging from none to complete.

Statistical Analysis

Comparisons between pretraining and posttraining were made with the use of McNemar's test for paired data and Wilcoxon signed‐rank test where appropriate. Usual descriptive statistics using mean standard deviation (SD), median and interquartile range (IQR) are reported. Comparisons between presession, postsession, and 18‐month data were analyzed using 1‐way analysis of variance (ANOVA), repeated measures design. Homogeneity of covariance assumption test indicated no evidence of nonsphericity in data (Mauchly's criterion; P = 0.53). All reported P values are 2‐sided. Analyses were conducted using the SAS version 9.1 (SAS Institute, Cary, NC).

Results

Of the 33 residents in the first‐year internal medicine program, all consented to participate. Thirty participants (91%) completed the study protocol between January and June 2007. The remaining three residents completed the presession assessments and the simulation session, but did not complete the postsession assessments. These were excluded from our analysis. At baseline, 20 participants had rotated through a previous 1‐month intensive care unit (ICU) block, 8 residents had no prior ICU training, and 2 residents had 2 months of ICU training. Table 1 summarizes the residents' baseline CVC experience prior to the simulation training. In general, participants had limited experience with CVC at baseline, having placed very few internal jugular CVCs (median = 4).

| Femoral Lines | Internal Jugular Lines | Subclavian Lines | |

|---|---|---|---|

| |||

| Number observed (IQR) | 4 (25) | 5 (37) | 2 (13) |

| Number attempted or performed (IQR) | 3 (15) | 4 (28) | 0 (02) |

| Number performed successfully without assistance (IQR) | 2 (03) | 4 (15) | 0 (01) |

Simulation training was associated with an increase in knowledge. Mean scores on multiple‐choice tests increased from 65.7 11.9% to 81.2 10.7 (P < 0.001). Furthermore, simulation training was associated with improvement in CVC performance. Median global average rating score increased from 3.5 (IQR = 34) to 4.5 (IQR = 44.5) (P < 0.001).

The procedural checklist median score increased from 9 (IQR = 69.5) to 9.5 (IQR = 99.5) (P <.001), median time required for completion of CVC decreased from 8 minutes 22 seconds (IQR = 7 minutes 37 seconds to 12 minutes 1 second) to 6 minutes 43 seconds (IQR = 6 minutes to 7 minutes 25 seconds) (P = 0.002). Other performance measures are summarized in Table 2. Last, simulation training was associated with an increase in self‐rated confidence. The median confidence rating (0 lowest, 5 highest) increased from 3 (moderate, IQR = 23) to 4 (good, IQR = 34) (P < 0.001). The overall satisfaction with the CVC simulation course was good (Table 3) and all 30 participants felt this course should continue to be offered.

| Pre | Post | P Value | |

|---|---|---|---|

| |||

| Number of attempts to locate vein; n (%) | 0.01 | ||

| 1 | 16 | 26 | |

| 2 | 14 | 4 | |

| Number of attempts to insert catheter; n (%) | 0.32 | ||

| 1 | 27 | 29 | |

| >1 | 3 | 1 | |

| Median time and motion score (IQR) | 4 (34) | 5 (45) | <0.001 |

| Median instrument handling score (IQR) | 4 (34) | 5 (45) | <0.001 |

| Median flow of operation score (IQR) | 4 (35) | 5 (45) | <0.001 |

| Median knowledge of instruments score (IQR) | 4 (35) | 5 (45) | 0.004 |

| Agree Strongly | Agree | Neutral | Disagree | Disagree Strongly | |

|---|---|---|---|---|---|

| |||||

| Course improved technical ability to insert central lines | 3 | 21 | 6 | 0 | 0 |

| Course decreased anxiety related to line placement | 6 | 22 | 2 | 0 | 0 |

| Course improved ability to deliver patient care | 6 | 22 | 2 | 0 | 0 |

Knowledge Retention

Sixteen participants completed the knowledge‐retention multiple‐choice tests. No significant differences in baseline characteristics were found between those who completed the knowledge retention tests (n = 16) vs. those who did not (n = 17) in baseline knowledge scores, previous ICU training, gender, and baseline self‐rated confidence.

Mean baseline multiple‐choice test score in the 16 participants who completed the knowledge retention tests was 65.3% 10.6%. Mean score 1 week posttraining was 80.3 9.4%. Mean score at 18 months was 78.4 9.6%. Results from 1‐way ANOVA, repeated‐measures design showed a significant improvement in knowledge scores, F(2, 30) = 14.83, P < 0.0001. Contrasts showed that scores at 1 week were significantly higher than baseline scores (P < 0.0001), and improvement in scores at 18 months was retained, remaining significantly higher than baseline scores (P = 0.002). Median confidence level increased from 3 (moderate) at baseline, to 4 (good) at 1‐week posttraining. Confidence level remained 4 (good) at 18 months (P < 0.0001).

Discussion

CVC is an important procedural competency for internists to master. Indications for CVC range from measuring of central venous pressure to administration of specific medications or solutions such as inotropic agents and total parental nutrition. Its use is important in the care of those who are acutely or critically ill and those who are chronically ill. CVC is associated with a number of potentially serious mechanical complications, including arterial puncture, pneumothorax, and death.11, 17 Proficiency in CVC insertion is found to be closely related to operator experience: catheter insertion by physicians who have performed 50 or more CVC are one‐half as likely to sustain complications as catheter insertion by less experienced physicians.18 Furthermore, training has been found to be associated with a decrease in risk for catheter associated infection19 and pneumothorax.20 Therefore, cumulative experience from repeated clinical encounters and education on CVC play an important role in optimizing patient safety and reducing potential errors associated with CVC. It is well‐documented that patients are generally reluctant to allow medical trainees to learn or practice procedures on them,7 especially in the early phases of training. Indeed, close to 50% of internal medicine residents in 1 study reported poor comfort in performing CVCs.8

Deficiencies in procedural teaching in internal medicine residency curriculum have long been recognized.4 Over the past 20 years, there has been a decrease in the percentage of internists who place central lines in practice.5 In the United States, more than 5 million central venous catheters are inserted every year.21 Presumably, concurrent with this trend is an increasing reliance on other specialists such as radiologists or surgical colleagues in the placement of CVCs. Without a concerted effort for medical educators in improving the quality of procedural teaching, this alarming trend is unlikely to be halted.

With the advance of modern technology and medical education, patient simulation offers a new platform for procedural teaching to take place: a way for trainees to practice without jeopardizing patient safety, as well as an opportunity for formal teaching and supervision to occur,9, 22 serving as a welcomed departure from the traditional see 1, do 1, teach 1 model to procedural teaching. While there is data to support the role of simulation teaching in the surgical training programs,16, 23 less objective evidence exists to support its use in internal medicine residency training programs.

Our study supports the use of simulation in training first‐year internal medicine residents in CVC. Our formal curriculum on CVC involving simulation was associated with an increase in knowledge of the procedure, observed procedural performance, and subjective confidence. Furthermore, this improvement in knowledge and confidence was maintained at 18 months. Our study has several limitations, such as those inherent to all studies using the prestudy and poststudy design. Improvement in performance under study may be a result of the Hawthorne effect. We are not able to isolate the effect of self‐directed learning from the benefits from training using simulators. Furthermore, improvement in knowledge and confidence may be a function of time rather than training. However, by not comparing our results to that from a historical cohort, we hope to minimize confounders by having participants serve as their own control. Results from our study would be strengthened by the introduction of a control group and a randomized design. Future study should include a randomized, controlled crossover educational trial. The second limitation of our study is the low interobserver agreement between video raters, indicating only fair to moderate agreement. Although our video raters were trained senior medical residents and faculty members who frequently supervise and evaluate procedures, we did not specifically train them for the purposes of scoring videotaped performances. Further faculty development is needed. Overall, the magnitudes of the interobserver differences were small (mean global rating scale between raters differed by 1 0.9), and may not translate into clinically important differences. 
Third, competence on performing CVC on simulators has not been directly correlated with clinical competence on real‐life patients, although a previous study on surgical interns suggested that simulator training was associated with clinical competence on real patients.16 Future studies are warranted to investigate the association between educational benefits from simulation and actual error reductions from CVC during patient encounters. Fourth, our study did not examine residents in the use of ultrasound during simulator training. Use of ultrasound devices has been shown to decrease complication rates of CVC insertion24 and increase rates of success,25 and is recommended as the preferred method for insertion of elective internal jugular CVCs.26 However, because our course was introduced during first‐year residency, when majority of the residents have performed fewer than 5 internal jugular CVCs prior to their simulation training session, the objective of our introductory course was to first ensure technical competency by landmark techniques. Once learners have mastered the technical aspect of line insertion, ultrasound‐guided techniques were introduced later in the residency training curriculum. Fifth, only 48% of participants completed the knowledge assessment at 18 months. Selection bias may be present in our long‐term data. However, baseline comparisons between those who completed the 18 month assessment vs. those who did not demonstrated no obvious differences between the 2 groups. Performance was not examined in our long‐term data. Demonstration of retention of psychomotor skills would be reassuring. However, by 18 months, many of our learners had completed a number of procedurally‐intensive rotations. Therefore, because retention of skills could not be reliably attributed to our initial training in the simulator laboratory, the participants were not retested in their performance of the CVC. 
Future study should include a control group that undergoes delayed training at 18 months to best assess the effect of simulation training on performance. Sixth, only overall confidence in performing CVC was captured. Confidence in each venous access site pretraining and posttraining was not assessed and warrants further study. Last, our study did not document overall costs of delivering the program, including cost of the simulator, facility, supplies, and stipends for teaching faculty. One full‐time equivalent (FTE) staff has previously been estimated to be a requirement for the success of the program.9 Our program required 3 medical educators, all of whom are clinical faculty members, as well as the help of 4 chief medical residents.

Despite our study's limitations, our study has a number of strengths. To our knowledge, our study is the first of its kind examining the use of simulators on CVC procedural performance, with the use of video‐recording, in an independent and blinded manner. Blinding should minimize bias associated with assessments of resident competency by evaluators who may be aware of residents' previous experience. Second, we were able to recruit all of our first‐year residents for our pretests and posttests, although 3 did not complete the study in its entirety due to on‐call or post‐call schedules. We were able to obtain detailed assessment of knowledge, performance, and subjective confidence before and after the simulation training on our cohort of residents and were able to demonstrate an improvement in knowledge, skills, and confidence. Furthermore, we were able to demonstrate that benefits in knowledge and confidence were retained at 18 months. Last, we readily implemented our formal CVC curriculum into the existing residency training schedule. It requires a single, 2‐hour session in addition to brief pretest and posttest surveys. It requires minimal equipment, using only 2 CVC mannequins, 4 to 6 central line kits, and an ultrasound machine. The simulation training adheres to and incorporates key elements that have been previously identified as effective teaching tools for simulators: feedback, repetitive practice, and curriculum integration.27 With increasing interest in the use of medical simulation for training and competency assessment in medical education,10 our study provides evidence in support of its use in improving knowledge, skills, and confidence. One of the most important goals of using simulators as an educational tool is to improve patient safety. While assessing for whether or not training on simulators lead to improved patient outcomes is beyond the scope of our study, this question merits further study and should be the focus of future studies.

Conclusions

Training on CVC simulators was associated with improved performance and with increases in knowledge assessment scores and self‐reported confidence among internal medicine residents. Improvement in knowledge and confidence was maintained at 18 months.

Acknowledgements

The authors acknowledge the help of Dr. Valentyna Koval and the staff at the Center of Excellence in Surgical Education and Innovations, as well as Drs. Matt Bernard, Raheem B. Kherani, John Staples, Hin Hin Ko, and Dan Renouf for their help with reviewing videotapes and with simulation teaching.

Appendix

Modified Global Rating Scale

Appendix

Procedural Checklist

- Accreditation Council of Graduate Medical Education. ACGME Program Requirements for Residency Education in Internal Medicine. Available at: http://www.acgme.org/acWebsite/downloads/RRC_progReq/140_im_07012007.pdf. Accessed June 2009.

- The Royal College of Physicians and Surgeons of Canada. Objectives of training in internal medicine. Available at: http://rcpsc.medical.org/information/index.php?specialty=13615(6):432–433.

- The declining number and variety of procedures done by general internists: a resurvey of members of the American College of Physicians. Ann Intern Med. 2007;146(5):355–360.

- Confidence of academic general internists and family physicians to teach ambulatory procedures. J Gen Intern Med. 2000;15(6):353–360.

- Patients' willingness to allow residents to learn to practice medical procedures. Acad Med. 2004;79(2):144–147.

- Beyond the comfort zone: residents assess their comfort performing inpatient medical procedures. Am J Med. 2006;119(1):71.e17–e24.

- Clinical simulation: importance to the internal medicine educational mission. Am J Med. 2007;120(9):820–824.

- Simulation technology for skills training and competency assessment in medical education. J Gen Intern Med. 2008;23(suppl 1):46–49.

- Preventing complications of central venous catheterization. N Engl J Med. 2003;348(12):1123–1133.

- Videos in clinical medicine. Central venous catheterization. N Engl J Med. 2007;356(21):e21.

- Testing technical skill via an innovative “bench station” examination. Am J Surg. 1997;173(3):226–230.

- Objective assessment of technical skills in surgery. BMJ. 2003;327(7422):1032–1037.

- Diagnosis. Measuring agreement beyond chance. In: Guyatt G, Rennie D, eds. User's Guides to the Medical Literature: A Manual for Evidence‐Based Clinical Practice. Chicago: American Medical Association Press; 2002:461–470.

- Cognitive task analysis for teaching technical skills in an inanimate surgical skills laboratory. Am J Surg. 2004;187(1):114–119.

- Central venous catheterization. Crit Care Med. 2007;35(5):1390–1396.

- Central vein catheterization. Failure and complication rates by three percutaneous approaches. Arch Intern Med. 1986;146(2):259–261.

- Education of physicians‐in‐training can decrease the risk for vascular catheter infection. Ann Intern Med. 2000;132(8):641–648.

- Training fourth‐year medical students in critical invasive skills improves subsequent patient safety. Am Surg. 2003;69(5):437–440.

- Intravascular‐catheter‐related infections. Lancet. 1998;351(9106):893–898.

- Procedural simulation's developing role in medicine. Lancet. 2007;369(9574):1671–1673.

- Teaching surgical skills—changes in the wind. N Engl J Med. 2006;355(25):2664–2669.

- Effect of the implementation of NICE guidelines for ultrasound guidance on the complication rates associated with central venous catheter placement in patients presenting for routine surgery in a tertiary referral centre. Br J Anaesth. 2007;99(5):662–665.

- Ultrasonic locating devices for central venous cannulation: meta‐analysis. BMJ. 2003;327(7411):361.

- National Institute for Clinical Excellence. Guidance on the use of ultrasound locating devices for placing central venous catheters. Available at: http://www.nice.org.uk/Guidance/TA49/Guidance/pdf/English. Accessed June 2009.

- Features and uses of high‐fidelity medical simulations that lead to effective learning: a BEME systematic review. Med Teach. 2005;27(1):10–28.

Copyright © 2009 Society of Hospital Medicine

Improving Insulin Ordering Safely

The benefits of glycemic control include decreased patient morbidity, mortality, length of stay, and reduced hospital costs. In 2004, the American College of Endocrinology (ACE) issued glycemic guidelines for non‐critical‐care units (fasting glucose <110 mg/dL, nonfasting glucose <180 mg/dL).1 A comprehensive review of inpatient glycemic management called for development and evaluation of inpatient programs and tools.2 The 2006 ACE/American Diabetes Association (ADA) Statement on Inpatient Diabetes and Glycemic Control identified key components of an inpatient glycemic control program as: (1) solid administrative support; (2) a multidisciplinary committee; (3) assessment of current processes, care, and barriers; (4) development and implementation of order sets, protocols, policies, and educational efforts; and (5) metrics for evaluation.3

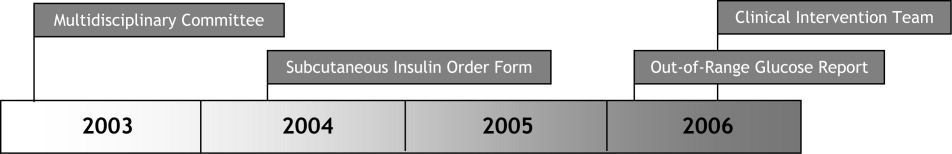

In 2003, Harborview Medical Center (HMC) formed a multidisciplinary committee to institute a Glycemic Control Program. The early goals were to decrease the use of sliding‐scale insulin, increase the appropriate use of basal and prandial insulin, and to avoid hypoglycemia. Here we report our program design and trends in physician insulin ordering from 2003 through 2006.

Patients and Methods

Setting

Seattle's HMC is a 400‐bed level‐1 regional trauma center managed by the University of Washington. The hospital's mission includes serving at‐risk populations. Based on illness, the University HealthSystem Consortium (UHC) assigns HMC the highest predicted mortality among its 131 affiliated hospitals nationwide.4

Patients

We included all patients hospitalized in non‐critical‐care wards (medical, surgical, and psychiatric). Patients were categorized as dysglycemic if they: (1) received subcutaneous insulin or oral diabetic medications; or (2) had any single glucose level outside the normal range (≥125 mg/dL or <60 mg/dL). Patients not meeting these criteria were classified as euglycemic. Approval was obtained from the University of Washington Human Subjects Review Committee.
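The classification rule above can be sketched in a few lines of Python. This is an illustration only, not the program's actual tooling: the `Patient` record and its field names are hypothetical, and the sketch treats the upper cut-off as inclusive (at or above 125 mg/dL), which is an assumption.

```python
from dataclasses import dataclass, field
from typing import List

UPPER_MG_DL = 125  # upper cut-off; inclusive comparison is an assumption
LOWER_MG_DL = 60   # any reading below this is out of range


@dataclass
class Patient:
    """Hypothetical record holding only what the rule needs."""
    on_insulin_or_oral_agent: bool
    glucose_mg_dl: List[float] = field(default_factory=list)


def classify(patient: Patient) -> str:
    """Return 'dysglycemic' or 'euglycemic' for one hospitalized patient."""
    # Criterion 1: received subcutaneous insulin or oral diabetic medication.
    if patient.on_insulin_or_oral_agent:
        return "dysglycemic"
    # Criterion 2: any single glucose level outside the normal range.
    if any(g >= UPPER_MG_DL or g < LOWER_MG_DL for g in patient.glucose_mg_dl):
        return "dysglycemic"
    return "euglycemic"
```

A patient with no glucose readings and no diabetic medication falls through to euglycemic, which matches the statement that patients not meeting either criterion were classified as euglycemic.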

Program Description

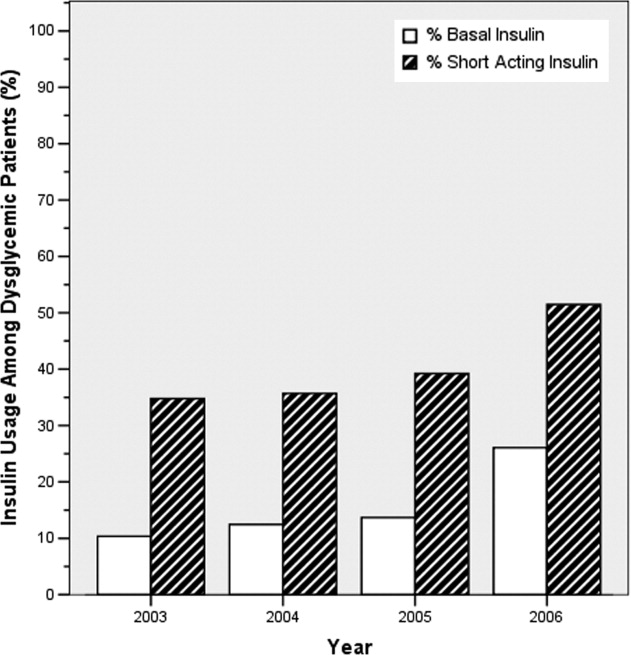

Since 2003, the multidisciplinary committee (physicians, nurses, pharmacy representatives, and dietary and administrative representatives) has directed the development of the Glycemic Control Program with support from hospital administration and the Department of Quality Improvement. Funding for this program has been provided by the hospital based on the prominence of glycemic control among quality and safety measures, a projected decrease in costs, and the high incidence of diabetes in our patient population. Figure 1 outlines the program's key interventions.

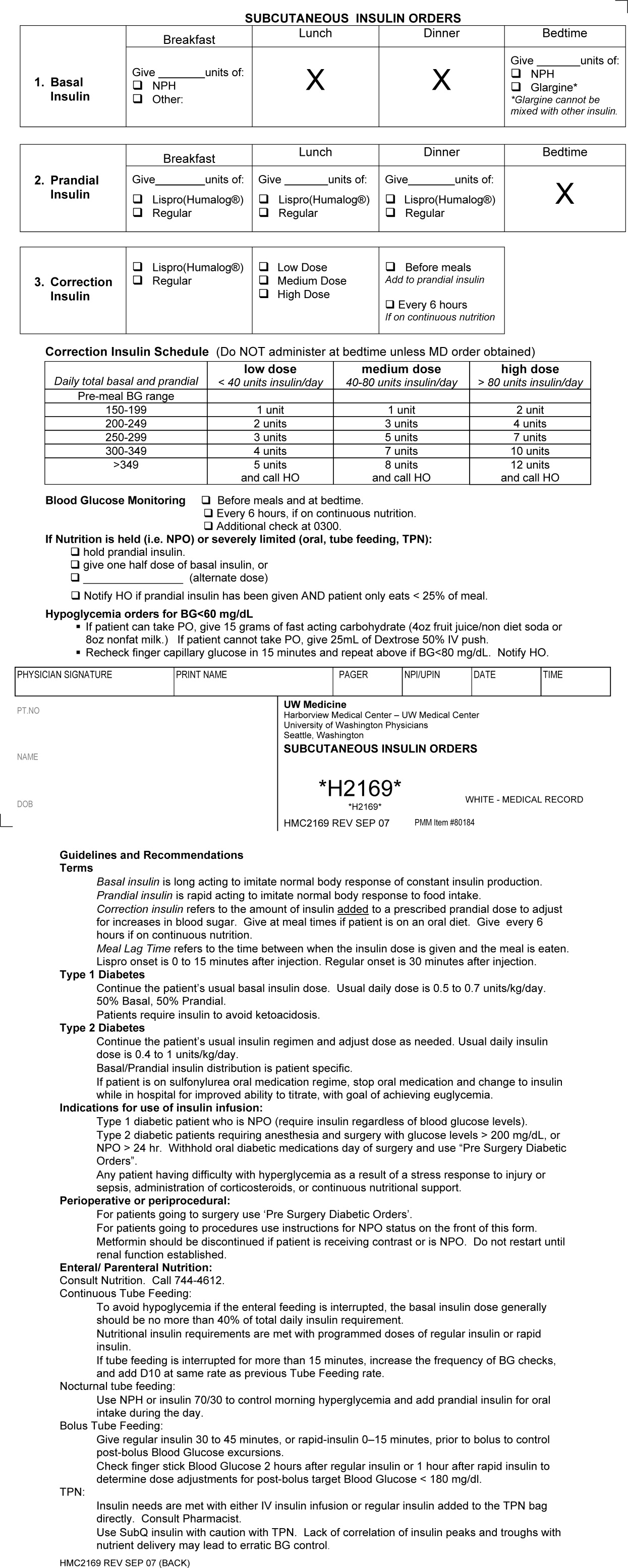

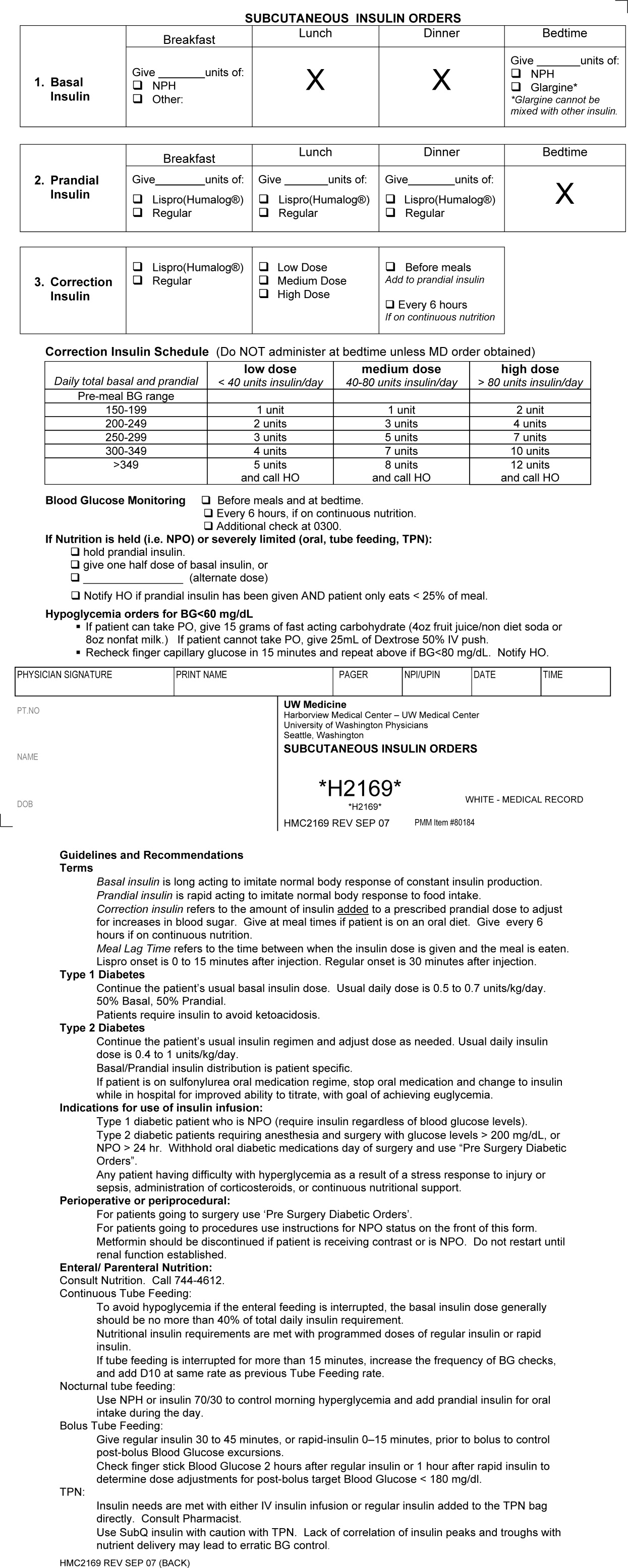

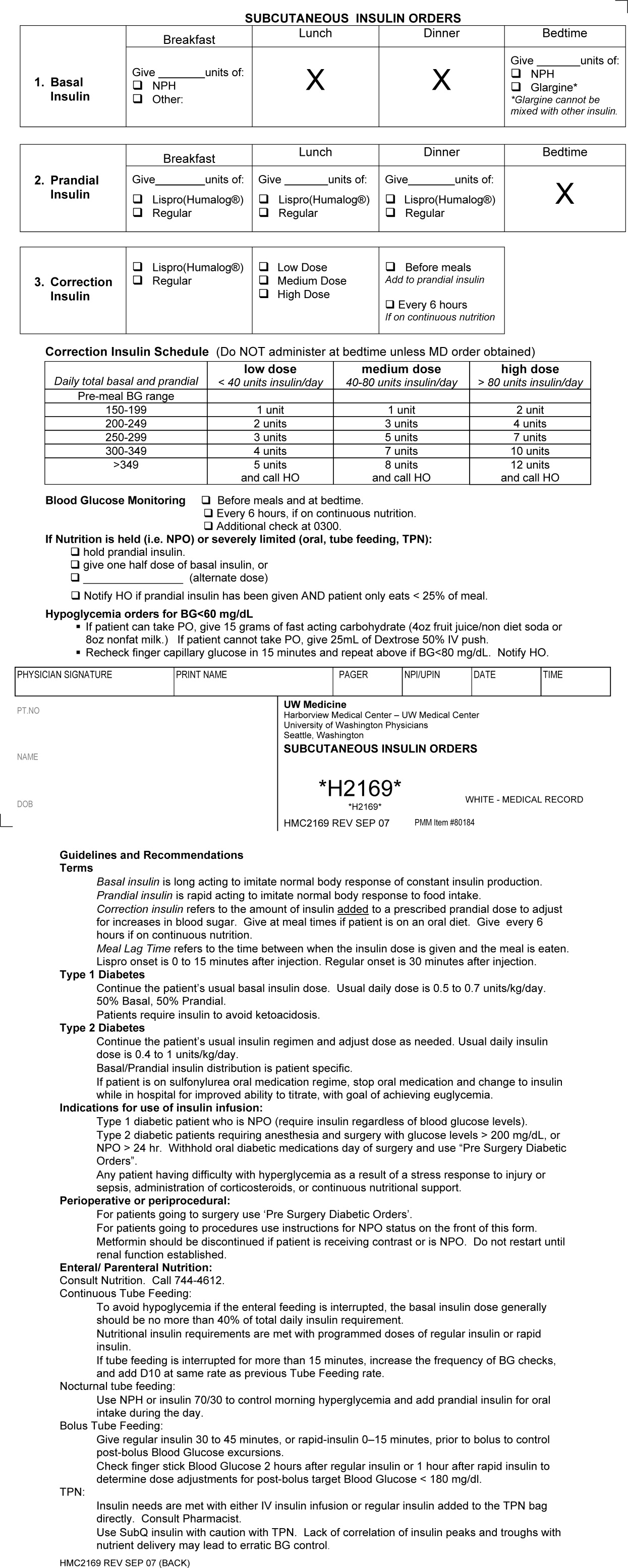

First, a Subcutaneous Insulin Order Form was released for elective use in May 2004 (Figure 2). This form incorporated the 3 components of quality insulin ordering (basal, scheduled prandial, and prandial correction dosing) and provided prompts and education. A Diabetes Nurse Specialist trained nursing staff on the use of the form.

Second, we developed an automated daily data report identifying patients with out‐of‐range glucose levels, defined as any single glucose reading <60 mg/dL or any 2 readings ≥180 mg/dL within the prior 24 hours. In February 2006, this daily report became available to the clinicians on the committee.

Third, the Glycemic Control Program recruited a full‐time clinical Advanced Registered Nurse Practitioner (ARNP) and part‐time supervising physician to provide directed intervention and education for patients and medical personnel. Since August 2006, the ARNP has reviewed the out‐of‐range report daily, performed assessments, refined insulin orders, and educated clinicians. The assessments include chart review (of history and glycemic control), discussion with the primary physician and nurse (and often the dietician), and interview of the patient and/or family. This leads to development and implementation of a glycemic control plan. Clinician education is performed both as direct education of the primary physician at the time of intervention and as didactic sessions.
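As an illustration of the out‐of‐range report that the ARNP reviews, the selection rule could be expressed as follows. This is a minimal sketch and not the hospital's actual report: the function names and the input layout (patient identifier mapped to timestamped readings) are hypothetical, and the high threshold is treated as inclusive (at or above 180 mg/dL), which is an assumption.

```python
from datetime import datetime, timedelta
from typing import Dict, List, Tuple

Reading = Tuple[datetime, float]  # (time drawn, glucose in mg/dL)


def out_of_range(readings: List[Reading], now: datetime) -> bool:
    """True if the prior 24 hours hold 1 low reading or 2 high readings."""
    window_start = now - timedelta(hours=24)
    recent = [value for when, value in readings if window_start <= when <= now]
    low_count = sum(1 for value in recent if value < 60)
    high_count = sum(1 for value in recent if value >= 180)  # inclusive: assumption
    return low_count >= 1 or high_count >= 2


def daily_report(patients: Dict[str, List[Reading]], now: datetime) -> List[str]:
    """Return the sorted identifiers of patients flagged for review."""
    return sorted(pid for pid, readings in patients.items()
                  if out_of_range(readings, now))
```

The asymmetry in the rule (one low value is enough, two high values are required) is taken directly from the definition above.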

Outcomes

Physician Insulin Ordering

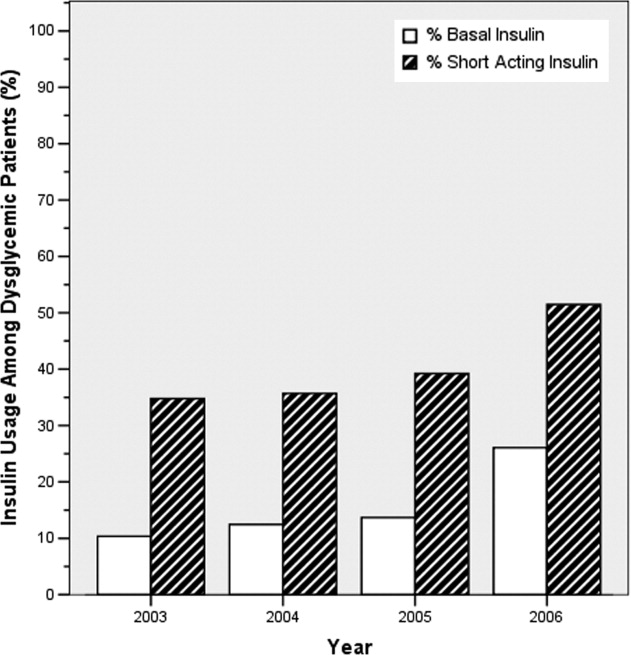

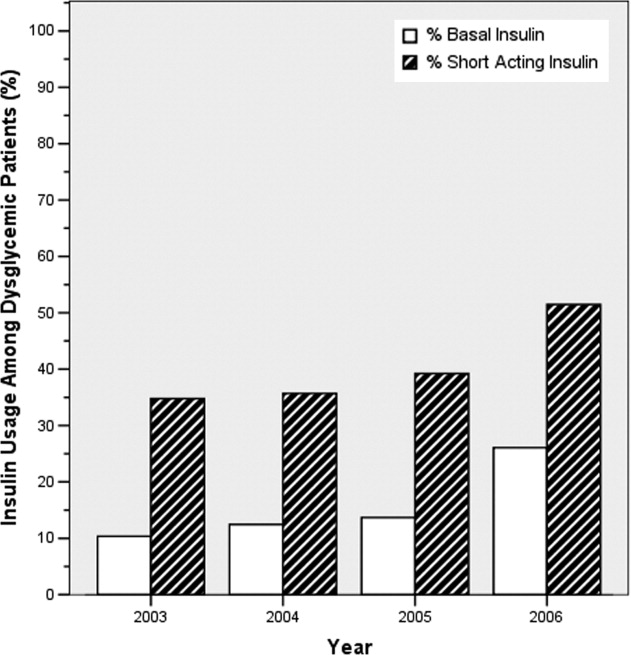

The numbers of patients receiving basal and short‐acting insulin were identified from the electronic medication record. Basal insulin included glargine and neutral protamine Hagedorn (NPH). Short‐acting insulin (lispro or regular) could be ordered as scheduled prandial, prandial correction, or sliding scale. The distinction between prandial correction and sliding scale is that correction precedes meals exclusively and is not intended for use without food; in contrast, sliding scale is given regardless of food being consumed and is considered substandard. Quality insulin ordering is defined as having basal, prandial scheduled, and prandial correction doses.

In the electronic record, however, we were unable to distinguish the intent of short‐acting insulin orders in the larger data set. Thus, we reviewed a subset of 100 randomly selected charts (25 from each year from 2003 through 2006) to differentiate scheduled prandial, prandial correction, and sliding scale.
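Drawing an equal number of charts from each year is a stratified random sample. The sketch below shows one way such a draw could be made; the source does not describe how the charts were actually selected, so the function name, the chart identifiers, and the fixed seed are all hypothetical.

```python
import random
from typing import Dict, List


def sample_charts(charts_by_year: Dict[int, List[str]],
                  per_year: int = 25,
                  seed: int = 0) -> Dict[int, List[str]]:
    """Pick `per_year` charts at random, without replacement, from each year."""
    rng = random.Random(seed)  # fixed seed so the draw can be reproduced
    return {year: rng.sample(chart_ids, per_year)
            for year, chart_ids in sorted(charts_by_year.items())}
```

With 4 years (2003 through 2006) and 25 charts per year, the result contains 100 charts in total.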

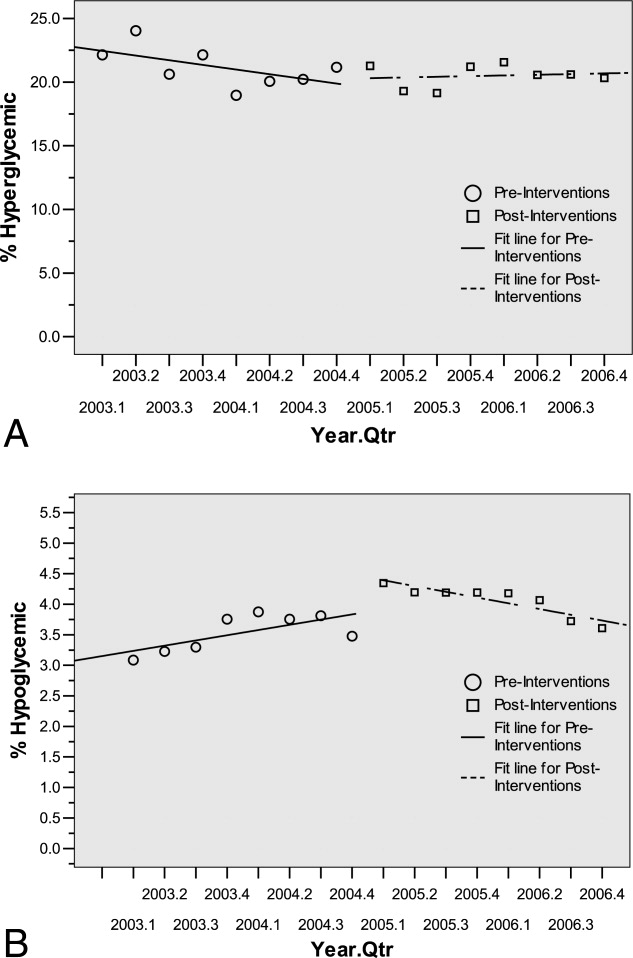

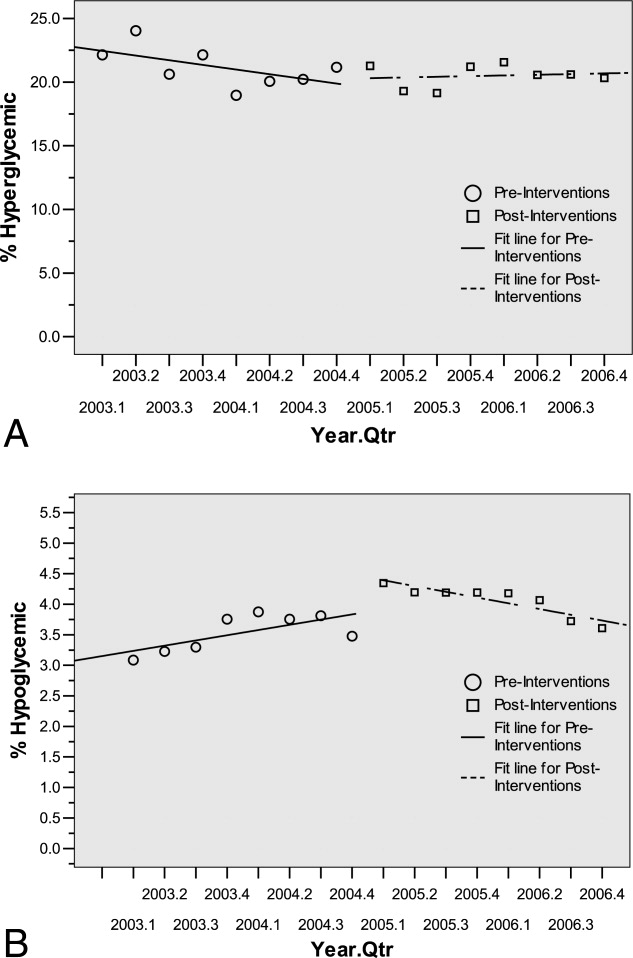

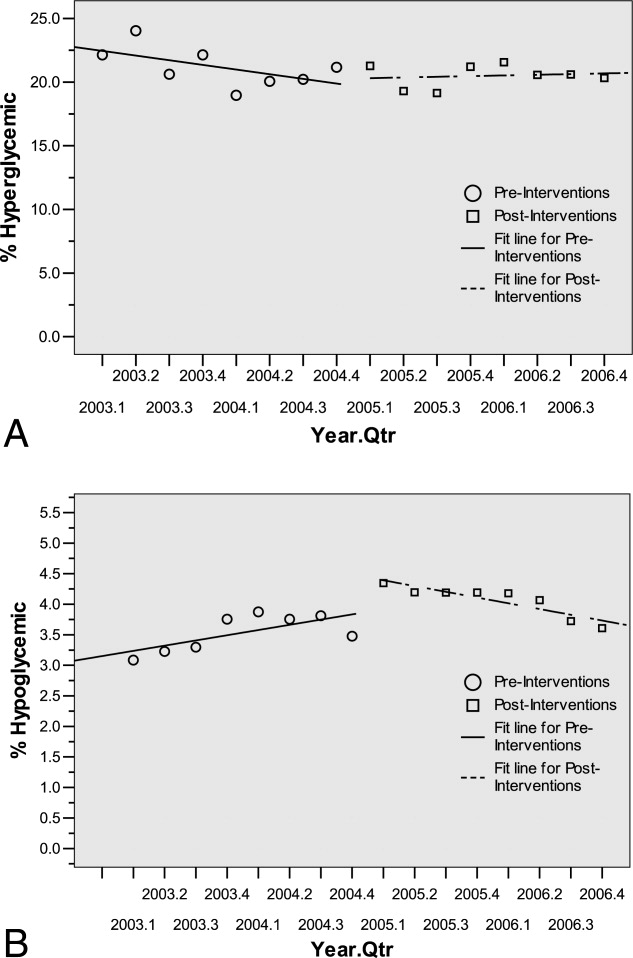

Hyperglycemia

Hyperglycemia was defined as glucose ≥180 mg/dL. The proportion of dysglycemic patients with hyperglycemia was calculated daily as the percent of dysglycemic patients with any 2 glucose levels ≥180 mg/dL. Daily values were averaged for quarterly measures.

Hypoglycemia

Hypoglycemia was defined as glucose <60 mg/dL. The proportion of all dysglycemic patients with hypoglycemia was calculated daily as the percent of dysglycemic patients with a single glucose level of <60 mg/dL. Daily values were averaged for quarterly measures.
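Both measures follow the same arithmetic: a daily percent among dysglycemic patients, then an average of the daily values within each quarter. A minimal sketch of that calculation is shown here. The function names and the input layout are hypothetical, and skipping days with no dysglycemic patients is an assumption, since the source does not say how such days were handled.

```python
from collections import defaultdict
from datetime import date
from statistics import mean
from typing import Dict, Iterable, List, Tuple

Quarter = Tuple[int, int]  # (year, quarter number 1 to 4)


def quarter_of(day: date) -> Quarter:
    """Map a calendar date to its (year, quarter)."""
    return day.year, (day.month - 1) // 3 + 1


def quarterly_percent(daily: Iterable[Tuple[date, int, int]]) -> Dict[Quarter, float]:
    """Average daily percents by quarter.

    Each input row is (day, patients meeting the definition that day,
    dysglycemic patients that day).
    """
    by_quarter: Dict[Quarter, List[float]] = defaultdict(list)
    for day, flagged, dysglycemic in daily:
        if dysglycemic == 0:
            continue  # no denominator that day; skipping is an assumption
        by_quarter[quarter_of(day)].append(100.0 * flagged / dysglycemic)
    return {q: mean(values) for q, values in by_quarter.items()}
```

Note that this averages the daily percents rather than pooling counts over the quarter, so each day carries equal weight regardless of census.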

Data Collection

Data were retrieved from electronic medical records, hospital administrative decision support, and risk‐adjusted5 UHC clinical database information. Glucose data were obtained from laboratory records (venous) and nursing data from bedside chemsticks (capillary).

Statistical Analyses

Data were analyzed using SAS 9.1 (SAS Institute, Cary, NC) and SPSS 13.0 (SPSS, Chicago, IL). The mean and standard deviation (SD) for continuous variables and proportions for categorical variables were calculated. Data were examined, plotted, and trended over time. Where applicable, linear regression trend lines were fitted and tested for statistical significance (P value <0.05).
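The analyses were run in SAS and SPSS; the Python sketch below is only an illustration of what fitting and testing a linear trend line involves, and it is not the authors' code. It fits an ordinary least‐squares line and returns the t statistic for the slope. The function name is hypothetical.

```python
from math import sqrt
from typing import Sequence, Tuple


def linear_trend(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float, float]:
    """Return (slope, intercept, t statistic for the slope) of a least-squares line."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need at least 3 paired observations")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares around the fitted line.
    sse = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    std_err = sqrt(sse / (n - 2) / sxx)  # standard error of the slope
    t_stat = slope / std_err if std_err > 0 else float("inf")
    return slope, intercept, t_stat
```

Here x would be the time index (for example, quarter number) and y the quarterly measure. The two‐sided P value is obtained by referring the t statistic to a t distribution with n − 2 degrees of freedom, a step a statistics package performs; the trend is called significant when that P value is <0.05.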

Results

Patients