
Smartwatches able to detect very early signs of Parkinson’s

Smartwatches may be able to detect very early signs of Parkinson’s disease (PD), new research shows.

An analysis of wearable motion-tracking data from UK Biobank participants showed a strong correlation between reduced daytime movement over 1 week and a clinical diagnosis of PD up to 7 years later.

“Smartwatch data is easily accessible and low cost. By using this type of data, we would potentially be able to identify individuals in the very early stages of Parkinson’s disease within the general population,” lead researcher Cynthia Sandor, PhD, from Cardiff (Wales) University, said in a statement.

“We have shown here that a single week of data captured can predict events up to 7 years in the future. With these results we could develop a valuable screening tool to aid in the early detection of Parkinson’s,” she added.

“This has implications both for research, in improving recruitment into clinical trials, and in clinical practice, in allowing patients to access treatments at an earlier stage, in future when such treatments become available,” said Dr. Sandor.

The study was published online in Nature Medicine.

Novel biomarker for PD

Using machine learning, the researchers analyzed accelerometry data from 103,712 UK Biobank participants who wore a medical-grade smartwatch for a 7-day period during 2013-2016.

At the time of or within 2 years after accelerometry data collection, 273 participants were diagnosed with PD. An additional 196 individuals received a new PD diagnosis more than 2 years after accelerometry data collection (the prodromal group).

The patients with prodromal symptoms of PD and those who were diagnosed with PD showed a significantly reduced daytime acceleration profile up to 7 years before diagnosis, compared with age- and sex-matched healthy control persons, the researchers found.

The reduction in acceleration both before and following diagnosis was unique to patients with PD, “suggesting this measure to be disease specific with potential for use in early identification of individuals likely to be diagnosed with PD,” they wrote.

Accelerometry data proved more accurate than other risk factors (lifestyle, genetics, blood chemistry) or recognized prodromal symptoms of PD in predicting whether an individual would develop PD.

“Our results suggest that accelerometry collected with wearable devices in the general population could be used to identify those at elevated risk for PD on an unprecedented scale and, importantly, individuals who will likely convert within the next few years can be included in studies for neuroprotective treatments,” the researchers conclude in their article.

High-quality research

In a statement from the U.K.-based nonprofit Science Media Centre, José López Barneo, MD, PhD, with the University of Seville (Spain), said this “good quality” study “fits well with current knowledge.”

Dr. Barneo noted that other investigators have also observed that slowness of movement is a characteristic feature of some people who subsequently develop PD.

But these studies involved preselected cohorts of persons at risk of developing PD, or they were carried out in a hospital that required healthcare staff to conduct the movement analysis. In contrast, the current study was conducted in a very large cohort from the general U.K. population.

Also weighing in, José Luis Lanciego, MD, PhD, with the University of Navarra (Spain), said the “main value of this study is that it has demonstrated that accelerometry measurements obtained using wearable devices (such as a smartwatch or other similar devices) are more useful than the assessment of any other potentially prodromal symptom in identifying which people in the [general] population are at increased risk of developing Parkinson’s disease in the future, as well as being able to estimate how many years it will take to start suffering from this neurodegenerative process.

“In these diseases, early diagnosis is to some extent questionable, as early diagnosis is of little use if neuroprotective treatment is not available,” Dr. Lanciego noted.

“However, it is of great importance for use in clinical trials aimed at evaluating the efficacy of new potentially neuroprotective treatments whose main objective is to slow down – and, ideally, even halt – the clinical progression that typically characterizes Parkinson’s disease,” Dr. Lanciego added.

The study was funded by the UK Dementia Research Institute, the Welsh government, and Cardiff University. Dr. Sandor, Dr. Barneo, and Dr. Lanciego have no relevant disclosures.

A version of this article originally appeared on Medscape.com.

FROM NATURE MEDICINE

Coffee’s brain-boosting effect goes beyond caffeine

“There is a widespread anticipation that coffee boosts alertness and psychomotor performance. By gaining a deeper understanding of the mechanisms underlying this biological phenomenon, we pave the way for investigating the factors that can influence it and even exploring the potential advantages of those mechanisms,” study investigator Nuno Sousa, MD, PhD, with the University of Minho, Braga, Portugal, said in a statement.

The study was published online in Frontiers in Behavioral Neuroscience.

Caffeine can’t take all the credit

Certain compounds in coffee, including caffeine and chlorogenic acids, have well-documented psychoactive effects, but the psychological impact of coffee/caffeine consumption as a whole remains a matter of debate.

The researchers investigated the neurobiological impact of coffee drinking on brain connectivity using resting-state functional MRI (fMRI).

They recruited 47 generally healthy adults (mean age, 30 years; 31 women) who regularly drank a minimum of one cup of coffee per day. Participants refrained from eating or drinking caffeinated beverages for at least 3 hours prior to undergoing fMRI.

To tease out the specific impact of caffeinated coffee intake, 30 habitual coffee drinkers (mean age, 32 years; 27 women) were given hot water containing the same amount of caffeine, but they were not given coffee.

The investigators conducted two fMRI scans – one before, and one 30 minutes after drinking coffee or caffeine-infused water.

Both drinking coffee and drinking plain caffeine in water led to a decrease in functional connectivity of the brain’s default mode network, which is typically active during self-reflection in resting states.

This finding suggests that consuming either coffee or caffeine heightened individuals’ readiness to transition from a state of rest to engaging in task-related activities, the researchers noted.

However, drinking a cup of coffee also boosted connectivity in the higher visual network and the right executive control network, which are linked to working memory, cognitive control, and goal-directed behavior – something that did not occur from drinking caffeinated water.

“Put simply, individuals exhibited a heightened state of preparedness, being more responsive and attentive to external stimuli after drinking coffee,” said first author Maria Picó-Pérez, PhD, with the University of Minho.

Given that some of the effects of coffee also occurred with caffeine alone, it’s “plausible to assume that other caffeinated beverages may share similar effects,” she added.

Still, certain effects were specific to coffee drinking, “likely influenced by factors such as the distinct aroma and taste of coffee or the psychological expectations associated with consuming this particular beverage,” the researchers wrote.

The investigators report that the observations could provide a scientific foundation for the common belief that coffee increases alertness and cognitive functioning. Further research is needed to differentiate the effects of caffeine from the overall experience of drinking coffee.

A limitation of the study is the absence of a nondrinker control sample (to rule out the withdrawal effect) or an alternative group that consumed decaffeinated coffee (to rule out the placebo effect of coffee intake) – something that should be considered in future studies, the researchers noted.

The study was funded by the Institute for the Scientific Information on Coffee. The authors declared no relevant conflicts of interest.

A version of this article originally appeared on Medscape.com.

FROM FRONTIERS IN BEHAVIORAL NEUROSCIENCE

Medical cannabis does not reduce use of prescription meds

TOPLINE:

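Medical cannabis laws do not reduce the use of prescription opioid or nonopioid pain treatments among patients with chronic noncancer pain, according to a new study published in Annals of Internal Medicine.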

METHODOLOGY:

- Cannabis advocates suggest that legal medical cannabis can be a partial solution to the opioid overdose crisis in the United States, which claimed more than 80,000 lives in 2021.

- Current research on whether legalized cannabis reduces dependence on prescription pain medication is inconclusive.

- Researchers examined insurance data for the period 2010-2022 from 583,820 adults with chronic noncancer pain.

- To emulate a hypothetical randomized trial, they drew on data from 12 states in which medical cannabis is legal and 17 in which it is not. The control group simulated prescription rates where medical cannabis was not available.

- Authors evaluated prescription rates for opioids, nonopioid painkillers, and pain interventions, such as physical therapy.

TAKEAWAY:

In a given month during the first 3 years after legalization, for states with medical cannabis, the investigators found the following:

- There was an average decrease of 1.07 percentage points in the proportion of patients who received any opioid prescription, compared to a 1.12 percentage point decrease in the control group.

- There was an average increase of 1.14 percentage points in the proportion of patients who received any nonopioid prescription painkiller, compared to a 1.19 percentage point increase in the control group.

- There was a 0.17 percentage point decrease in the proportion of patients who received any pain procedure, compared to a 0.001 percentage point decrease in the control group.

IN PRACTICE:

“This study did not identify important effects of medical cannabis laws on receipt of opioid or nonopioid pain treatment among patients with chronic noncancer pain,” according to the researchers.

SOURCE:

The study was led by Emma E. McGinty, PhD, of Weill Cornell Medicine, New York, and was funded by the National Institute on Drug Abuse.

LIMITATIONS:

The investigators used a simulated, hypothetical control group that was based on untestable assumptions. They also drew data solely from insured individuals, so the study does not necessarily represent uninsured populations.

DISCLOSURES:

Dr. McGinty reports receiving a grant from NIDA. Her coauthors reported receiving support from NIDA and the National Institutes of Health.

A version of this article first appeared on Medscape.com.

Lean muscle mass protective against Alzheimer’s?

Investigators analyzed data on more than 450,000 participants in the UK Biobank as well as two independent samples of more than 320,000 individuals with and without AD, and more than 260,000 individuals participating in a separate genes and intelligence study.

They estimated lean muscle and fat tissue in the arms and legs and found, in adjusted analyses, over 500 genetic variants associated with lean mass.

On average, higher genetically proxied lean mass was associated with a “modest but statistically robust” reduction in AD risk and with superior performance on cognitive tasks.

“Using human genetic data, we found evidence for a protective effect of lean mass on risk of Alzheimer’s disease,” study investigator Iyas Daghlas, MD, a resident in the department of neurology, University of California, San Francisco, said in an interview.

Although “clinical intervention studies are needed to confirm this effect, this study supports current recommendations to maintain a healthy lifestyle to prevent dementia,” he said.

The study was published online in BMJ Medicine.

Naturally randomized research

Several measures of body composition have been investigated for their potential association with AD. Lean mass – a “proxy for muscle mass, defined as the difference between total mass and fat mass” – has been shown to be reduced in patients with AD compared with controls, the researchers noted.

“Previous research studies have tested the relationship of body mass index with Alzheimer’s disease and did not find evidence for a causal effect,” Dr. Daghlas said. “We wondered whether BMI was an insufficiently granular measure and hypothesized that disaggregating body mass into lean mass and fat mass could reveal novel associations with disease.”

Most studies have used case-control designs, which might be biased by “residual confounding or reverse causality.” Naturally randomized data “may be used as an alternative to conventional observational studies to investigate causal relations between risk factors and diseases,” the researchers wrote.

In particular, the Mendelian randomization (MR) paradigm randomly allocates germline genetic variants and uses them as proxies for a specific risk factor.

MR “is a technique that permits researchers to investigate cause-and-effect relationships using human genetic data,” Dr. Daghlas explained. “In effect, we’re studying the results of a naturally randomized experiment whereby some individuals are genetically allocated to carry more lean mass.”

The current study used MR to investigate the effect of genetically proxied lean mass on the risk of AD and the “related phenotype” of cognitive performance.
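
As a rough illustration of the MR logic, the Python sketch below combines per-variant associations into a single estimate using an inverse-variance weighted (IVW) ratio, a commonly used MR estimator. The article does not say which estimator the investigators used, and the three variants and their effect sizes are invented purely for illustration.

```python
import math

# Hypothetical summary statistics for three variants (NOT from the study).
# Each tuple: (effect on lean mass in SD units,
#              effect on AD as a log odds ratio,
#              standard error of the AD effect)
variants = [
    (0.05, -0.006, 0.004),
    (0.08, -0.011, 0.005),
    (0.03, -0.003, 0.006),
]


def ivw_estimate(stats):
    """Inverse-variance weighted MR estimate.

    Returns the log odds ratio of the outcome per 1-SD increase in the
    exposure, weighting each variant by the precision of its outcome effect.
    """
    numerator = sum(bx * by / se ** 2 for bx, by, se in stats)
    denominator = sum(bx ** 2 / se ** 2 for bx, by, se in stats)
    return numerator / denominator


log_or = ivw_estimate(variants)
odds_ratio = math.exp(log_or)
print(f"IVW log odds ratio per SD of lean mass: {log_or:.3f}")
print(f"Corresponding odds ratio: {odds_ratio:.2f}")
```

With a single variant the IVW estimate reduces to the simple ratio of the variant's effect on the outcome to its effect on the exposure, which is the core idea behind using a genetic variant as a proxy for a risk factor.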

Genetic proxy

As genetic proxies for lean mass, the researchers chose single nucleotide polymorphisms (genetic variants) that were associated, in a genome-wide association study (GWAS), with appendicular lean mass.

Appendicular lean mass “more accurately reflects the effects of lean mass than whole body lean mass, which includes smooth and cardiac muscle,” the authors explained.

This GWAS used phenotypic and genetic data from 450,243 participants in the UK Biobank cohort (mean age 57 years). All participants were of European ancestry.

The researchers adjusted for age, sex, and genetic ancestry. They measured appendicular lean mass using bioimpedance, a technique in which a weak electric current flows at different rates through the body, depending on its composition.

In addition to the UK Biobank participants, the researchers drew on an independent sample of 21,982 people with AD; a control group of 41,944 people without AD; a replication sample of 7,329 people with and 252,879 people without AD to validate the findings; and 269,867 people taking part in a genome-wide study of cognitive performance.

The researchers identified 584 variants that met criteria for use as genetic proxies for lean mass. None were located within the APOE gene region. In the aggregate, these variants explained 10.3% of the variance in appendicular lean mass.

Each standard deviation increase in genetically proxied lean mass was associated with a 12% reduction in AD risk (odds ratio [OR], 0.88; 95% confidence interval [CI], 0.82-0.95; P < .001). This finding was replicated in the independent consortium (OR, 0.91; 95% CI, 0.83-0.99; P = .02).
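
The percentage figure follows directly from the odds ratio. The minimal Python sketch below shows the arithmetic; the function name `percent_reduction_in_odds` is an illustrative assumption. Strictly speaking, the result is a reduction in odds, which approximates a reduction in risk when the outcome is uncommon.

```python
def percent_reduction_in_odds(odds_ratio):
    """Convert an odds ratio below 1 into a percentage reduction in odds."""
    return round((1 - odds_ratio) * 100, 1)


print(percent_reduction_in_odds(0.88))  # primary analysis
print(percent_reduction_in_odds(0.91))  # replication sample
```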

The findings remained “consistent” in sensitivity analyses.

A modifiable risk factor?

Higher appendicular lean mass was associated with higher levels of cognitive performance, with each SD increase in lean mass associated with a 0.09-SD increase in cognitive performance (95% CI, 0.06-0.11; P = .001).

“Adjusting for potential mediation through performance did not reduce the association between appendicular lean mass and risk of AD,” the authors wrote.

They obtained similar results using genetically proxied trunk and whole-body lean mass, after adjusting for fat mass.

The authors noted several limitations. The bioimpedance measures “only predict, but do not directly measure, lean mass.” Moreover, the approach didn’t examine whether a “critical window of risk factor timing” exists, during which lean mass might play a role in influencing AD risk and after which “interventions would no longer be effective.” Nor could the study determine whether increasing lean mass could reverse AD pathology in patients with preclinical disease or mild cognitive impairment.

Nevertheless, the findings suggest “that lean mass might be a possible modifiable protective factor for Alzheimer’s disease,” the authors wrote. “The mechanisms underlying this finding, as well as the clinical and public health implications, warrant further investigation.”

Novel strategies

In a comment, Iva Miljkovic, MD, PhD, associate professor, department of epidemiology, University of Pittsburgh, said the investigators used “very rigorous methodology.”

The finding suggesting that lean mass is associated with better cognitive function is “important, as cognitive impairment can become stable rather than progress to a pathological state; and, in some cases, can even be reversed.”

In those cases, “identifying the underlying cause – e.g., low lean mass – can significantly improve cognitive function,” said Dr. Miljkovic, senior author of a study showing muscle fat as a risk factor for cognitive decline.

More research will enable us to “expand our understanding” of the mechanisms involved and determine whether interventions aimed at preventing muscle loss and/or increasing muscle fat may have a beneficial effect on cognitive function, she said. “This might lead to novel strategies to prevent AD.”

Dr. Daghlas is supported by the British Heart Foundation Centre of Research Excellence at Imperial College, London, and is employed part-time by Novo Nordisk. Dr. Miljkovic reports no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM BMJ MEDICINE

New data on traumatic brain injury show it’s chronic, evolving

The data show that patients with TBI may continue to improve or decline during a period of up to 7 years after injury, making it more of a chronic condition, the investigators report.

“Our results dispute the notion that TBI is a discrete, isolated medical event with a finite, static functional outcome following a relatively short period of upward recovery (typically up to 1 year),” Benjamin Brett, PhD, assistant professor, departments of neurosurgery and neurology, Medical College of Wisconsin, Milwaukee, told this news organization.

“Rather, individuals continue to exhibit improvement and decline across a range of domains, including psychiatric, cognitive, and functional outcomes, even 2-7 years after their injury,” Dr. Brett said.

“Ultimately, our findings support conceptualizing TBI as a chronic condition for many patients, which requires routine follow-up, medical monitoring, responsive care, and support, adapting to their evolving needs many years following injury,” he said.

Results of the TRACK TBI LONG (Transforming Research and Clinical Knowledge in TBI Longitudinal study) were published online in Neurology.

Chronic and evolving

The results are based on 1,264 adults (mean age at injury, 41 years) from the initial TRACK TBI study, including 917 with mild TBI (mTBI) and 193 with moderate/severe TBI (msTBI), who were matched to 154 control patients who had experienced orthopedic trauma without evidence of head injury (OTC).

The participants were followed annually for up to 7 years after injury using the Glasgow Outcome Scale–Extended (GOSE), Brief Symptom Inventory–18 (BSI), and the Brief Test of Adult Cognition by Telephone (BTACT), as well as a self-reported perception of function. The researchers calculated rates of change (classified as stable, improved, or declined) for individual outcomes at each long-term follow-up.

In general, “stable” was the most frequent change outcome for the individual measures from postinjury baseline assessment to 7 years post injury.

However, a substantial proportion of patients with TBI (regardless of severity) experienced changes in psychiatric status, cognition, and functional outcomes over the years.

When the GOSE, BSI, and BTACT were considered collectively, rates of decline were 21% for mTBI, 26% for msTBI, and 15% for OTC.

The highest rates of decline were in functional outcomes (GOSE scores). On average, over the course of 2-7 years post injury, 29% of patients with mTBI and 23% of those with msTBI experienced a decline in the ability to function with daily activities.

A pattern of improvement on the GOSE was noted in 36% of patients with msTBI and 22% of patients with mTBI.

Notably, said Dr. Brett, patients who experienced greater difficulties near the time of injury showed improvement for a period of 2-7 years post injury. Patient factors, such as older age at the time of the injury, were associated with greater risk of long-term decline.

“Our findings highlight the need to embrace conceptualization of TBI as a chronic condition in order to establish systems of care that provide continued follow-up with treatment and supports that adapt to evolving patient needs, regardless of the directions of change,” Dr. Brett told this news organization.

Important and novel work

In a linked editorial, Robynne Braun, MD, PhD, with the department of neurology, University of Maryland, Baltimore, notes that there have been “few prospective studies examining postinjury outcomes on this longer timescale, especially in mild TBI, making this an important and novel body of work.”

The study “effectively demonstrates that changes in function across multiple domains continue to occur well beyond the conventionally tracked 6- to 12-month period of injury recovery,” Dr. Braun writes.

The observation that over the 7-year follow-up, a substantial proportion of patients with mTBI and msTBI exhibited a pattern of decline on the GOSE suggests that they “may have needed more ongoing medical monitoring, rehabilitation, or supportive services to prevent worsening,” Dr. Braun adds.

At the same time, the improvement pattern on the GOSE suggests “opportunities for recovery that further rehabilitative or medical services might have enhanced.”

The study was funded by the National Institute of Neurological Disorders and Stroke, the National Institute on Aging, the National Football League Scientific Advisory Board, and the U.S. Department of Defense. Dr. Brett and Dr. Braun have disclosed no relevant financial relationships.

A version of this article originally appeared on Medscape.com.

The data show that patients with TBI may continue to improve or decline during a period of up to 7 years after injury, making it more of a chronic condition, the investigators report.

“Our results dispute the notion that TBI is a discrete, isolated medical event with a finite, static functional outcome following a relatively short period of upward recovery (typically up to 1 year),” Benjamin Brett, PhD, assistant professor, departments of neurosurgery and neurology, Medical College of Wisconsin, Milwaukee, told this news organization.

“Rather, individuals continue to exhibit improvement and decline across a range of domains, including psychiatric, cognitive, and functional outcomes, even 2-7 years after their injury,” Dr. Brett said.

“Ultimately, our findings support conceptualizing TBI as a chronic condition for many patients, which requires routine follow-up, medical monitoring, responsive care, and support, adapting to their evolving needs many years following injury,” he said.

Results of the TRACK TBI LONG (Transforming Research and Clinical Knowledge in TBI Longitudinal study) were published online in Neurology.

Chronic and evolving

The results are based on 1,264 adults (mean age at injury, 41 years) from the initial TRACK TBI study, including 917 with mild TBI (mTBI) and 193 with moderate/severe TBI (msTBI), who were matched to 154 control patients who had experienced orthopedic trauma without evidence of head injury (OTC).

The participants were followed annually for up to 7 years after injury using the Glasgow Outcome Scale–Extended (GOSE), Brief Symptom Inventory–18 (BSI), and the Brief Test of Adult Cognition by Telephone (BTACT), as well as a self-reported perception of function. The researchers calculated rates of change (classified as stable, improved, or declined) for individual outcomes at each long-term follow-up.

In general, “stable” was the most frequent change outcome for the individual measures from postinjury baseline assessment to 7 years post injury.

However, a substantial proportion of patients with TBI (regardless of severity) experienced changes in psychiatric status, cognition, and functional outcomes over the years.

When the GOSE, BSI, and BTACT were considered collectively, rates of decline were 21% for mTBI, 26% for msTBI, and 15% for OTC.

The highest rates of decline were in functional outcomes (GOSE scores). On average, over the course of 2-7 years post injury, 29% of patients with mTBI and 23% of those with msTBI experienced a decline in the ability to function with daily activities.

A pattern of improvement on the GOSE was noted in 36% of patients with msTBI and 22% patients with mTBI.

Notably, said Dr. Brett, patients who experienced greater difficulties near the time of injury showed improvement for a period of 2-7 years post injury. Patient factors, such as older age at the time of the injury, were associated with greater risk of long-term decline.

“Our findings highlight the need to embrace conceptualization of TBI as a chronic condition in order to establish systems of care that provide continued follow-up with treatment and supports that adapt to evolving patient needs, regardless of the directions of change,” Dr. Brett told this news organization.

Important and novel work

In a linked editorial, Robynne Braun, MD, PhD, with the department of neurology, University of Maryland, Baltimore, notes that there have been “few prospective studies examining postinjury outcomes on this longer timescale, especially in mild TBI, making this an important and novel body of work.”

The study “effectively demonstrates that changes in function across multiple domains continue to occur well beyond the conventionally tracked 6- to 12-month period of injury recovery,” Dr. Braun writes.

The observation that over the 7-year follow-up, a substantial proportion of patients with mTBI and msTBI exhibited a pattern of decline on the GOSE suggests that they “may have needed more ongoing medical monitoring, rehabilitation, or supportive services to prevent worsening,” Dr. Braun adds.

At the same time, the improvement pattern on the GOSE suggests “opportunities for recovery that further rehabilitative or medical services might have enhanced.”

The study was funded by the National Institute of Neurological Disorders and Stroke, the National Institute on Aging, the National Football League Scientific Advisory Board, and the U.S. Department of Defense. Dr. Brett and Dr. Braun have disclosed no relevant financial relationships.

A version of this article originally appeared on Medscape.com.

The data show that patients with TBI may continue to improve or decline during a period of up to 7 years after injury, making it more of a chronic condition, the investigators report.

“Our results dispute the notion that TBI is a discrete, isolated medical event with a finite, static functional outcome following a relatively short period of upward recovery (typically up to 1 year),” Benjamin Brett, PhD, assistant professor, departments of neurosurgery and neurology, Medical College of Wisconsin, Milwaukee, told this news organization.

“Rather, individuals continue to exhibit improvement and decline across a range of domains, including psychiatric, cognitive, and functional outcomes, even 2-7 years after their injury,” Dr. Brett said.

“Ultimately, our findings support conceptualizing TBI as a chronic condition for many patients, which requires routine follow-up, medical monitoring, responsive care, and support, adapting to their evolving needs many years following injury,” he said.

Results of the TRACK TBI LONG (Transforming Research and Clinical Knowledge in TBI Longitudinal study) were published online in Neurology.

Chronic and evolving

The results are based on 1,264 adults (mean age at injury, 41 years) from the initial TRACK TBI study, including 917 with mild TBI (mTBI) and 193 with moderate/severe TBI (msTBI), who were matched to 154 control patients who had experienced orthopedic trauma without evidence of head injury (OTC).

The participants were followed annually for up to 7 years after injury using the Glasgow Outcome Scale–Extended (GOSE), Brief Symptom Inventory–18 (BSI), and the Brief Test of Adult Cognition by Telephone (BTACT), as well as a self-reported perception of function. The researchers calculated rates of change (classified as stable, improved, or declined) for individual outcomes at each long-term follow-up.

In general, “stable” was the most frequent change outcome for the individual measures from postinjury baseline assessment to 7 years post injury.

However, a substantial proportion of patients with TBI (regardless of severity) experienced changes in psychiatric status, cognition, and functional outcomes over the years.

When the GOSE, BSI, and BTACT were considered collectively, rates of decline were 21% for mTBI, 26% for msTBI, and 15% for OTC.

The highest rates of decline were in functional outcomes (GOSE scores). On average, over the course of 2-7 years post injury, 29% of patients with mTBI and 23% of those with msTBI experienced a decline in the ability to function with daily activities.

A pattern of improvement on the GOSE was noted in 36% of patients with msTBI and 22% of patients with mTBI.

Notably, said Dr. Brett, patients who experienced greater difficulties near the time of injury showed improvement for a period of 2-7 years post injury. Patient factors, such as older age at the time of the injury, were associated with greater risk of long-term decline.

“Our findings highlight the need to embrace conceptualization of TBI as a chronic condition in order to establish systems of care that provide continued follow-up with treatment and supports that adapt to evolving patient needs, regardless of the directions of change,” Dr. Brett told this news organization.

Important and novel work

In a linked editorial, Robynne Braun, MD, PhD, with the department of neurology, University of Maryland, Baltimore, notes that there have been “few prospective studies examining postinjury outcomes on this longer timescale, especially in mild TBI, making this an important and novel body of work.”

The study “effectively demonstrates that changes in function across multiple domains continue to occur well beyond the conventionally tracked 6- to 12-month period of injury recovery,” Dr. Braun writes.

The observation that over the 7-year follow-up, a substantial proportion of patients with mTBI and msTBI exhibited a pattern of decline on the GOSE suggests that they “may have needed more ongoing medical monitoring, rehabilitation, or supportive services to prevent worsening,” Dr. Braun adds.

At the same time, the improvement pattern on the GOSE suggests “opportunities for recovery that further rehabilitative or medical services might have enhanced.”

The study was funded by the National Institute of Neurological Disorders and Stroke, the National Institute on Aging, the National Football League Scientific Advisory Board, and the U.S. Department of Defense. Dr. Brett and Dr. Braun have disclosed no relevant financial relationships.

A version of this article originally appeared on Medscape.com.

FROM NEUROLOGY

Can a repurposed Parkinson’s drug slow ALS progression?

However, at least one expert believes the study has “significant flaws.”

Investigators randomly assigned 20 individuals with sporadic ALS to receive either ropinirole or placebo for 24 weeks. During the double-blind period, there was no difference between the groups in terms of decline in functional status.

However, during a further open-label extension period, the ropinirole group showed significant suppression of functional decline and an average of an additional 7 months of progression-free survival.

The researchers were able to predict clinical responsiveness to ropinirole in vitro by analyzing motor neurons derived from participants’ stem cells.

“We found that ropinirole is safe and tolerable for ALS patients and shows therapeutic promise at helping them sustain daily activity and muscle strength,” first author Satoru Morimoto, MD, of the department of physiology, Keio University School of Medicine, Tokyo, said in a news release.

The study was published online in Cell Stem Cell.

Feasibility study

“ALS is totally incurable and it’s a very difficult disease to treat,” senior author Hideyuki Okano, MD, PhD, professor, department of physiology, Keio University, said in the news release.

Preclinical animal models have “limited translational potential” for identifying drug candidates, but induced pluripotent stem cell (iPSC)–derived motor neurons (MNs) from ALS patients can “overcome these limitations for drug screening,” the authors write.

“We previously identified ropinirole [a dopamine D2 receptor agonist] as a potential anti-ALS drug in vitro by iPSC drug discovery,” Dr. Okano said.

The current trial was a randomized, placebo-controlled phase 1/2a feasibility trial that evaluated the safety, tolerability, and efficacy of ropinirole in patients with ALS, using several parameters:

- The revised ALS functional rating scale (ALSFRS-R) score.

- Composite functional endpoints.

- Event-free survival.

- Time to ≤ 50% forced vital capacity (FVC).

The trial consisted of a 12-week run-in period, a 24-week double-blind period, an open-label extension period that lasted from 4 to 24 weeks, and a 4-week follow-up period after administration.

Thirteen patients were assigned to receive ropinirole (23.1% women; mean age, 65.2 ± 12.6 years; 7.7% with clinically definite and 76.9% with clinically probable ALS); seven were assigned to receive placebo (57.1% women; mean age, 66.3 ± 7.5 years; 14.3% with clinically definite and 85.7% with clinically probable ALS).

Of the treatment group, 30.8% had a bulbar onset lesion vs. 57.1% in the placebo group. At baseline, the mean FVC was 94.4% ± 14.9 and 81.5% ± 23.2 in the ropinirole and placebo groups, respectively. The mean body mass index (BMI) was 22.91 ± 3.82 and 19.69 ± 2.63, respectively.
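Because the groups are so small, the baseline percentages above correspond to only a handful of participants each. The following Python sketch converts the reported percentages back into approximate head counts. It uses only the figures given in the text; the function and variable names are illustrative, and the rounding to whole participants is an assumption rather than data taken from the paper.

```python
def implied_count(percent: float, group_size: int) -> int:
    """Nearest whole number of participants implied by a reported percentage."""
    return round(percent / 100 * group_size)

# Group sizes as reported: 13 assigned to ropinirole, 7 to placebo.
GROUP_SIZES = {"ropinirole": 13, "placebo": 7}

# Baseline percentages as reported in the article.
REPORTED = {
    "women": {"ropinirole": 23.1, "placebo": 57.1},
    "clinically definite ALS": {"ropinirole": 7.7, "placebo": 14.3},
    "clinically probable ALS": {"ropinirole": 76.9, "placebo": 85.7},
    "bulbar onset": {"ropinirole": 30.8, "placebo": 57.1},
}

counts = {
    label: {arm: implied_count(pct, GROUP_SIZES[arm]) for arm, pct in by_arm.items()}
    for label, by_arm in REPORTED.items()
}

for label, by_arm in counts.items():
    print(f"{label}: {by_arm['ropinirole']} of 13 vs. {by_arm['placebo']} of 7")
```

Read this way, a single participant moving between categories shifts a percentage by about 8 points in the ropinirole group and about 14 points in the placebo group.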

Of the participants, 12 in the ropinirole group and six in the placebo group completed the full 24-week treatment protocol; 12 in the ropinirole group and five in the placebo group completed the open-label extension (participants who had received placebo were switched to the active drug).

However, only seven participants in the ropinirole group and one participant in the placebo group completed the full 1-year trial.

‘Striking correlation’

“During the double-blind period, muscle strength and daily activity were maintained, but a decline in the ALSFRS-R … was not different from that in the placebo group,” the researchers write.

In the open-label extension period, the ropinirole group showed “significant suppression of ALSFRS-R decline,” with an ALSFRS-R score change of only 7.75 (95% confidence interval, 10.66-4.63) for the treatment group vs. 17.51 (95% CI, 22.46-12.56) for the placebo group.

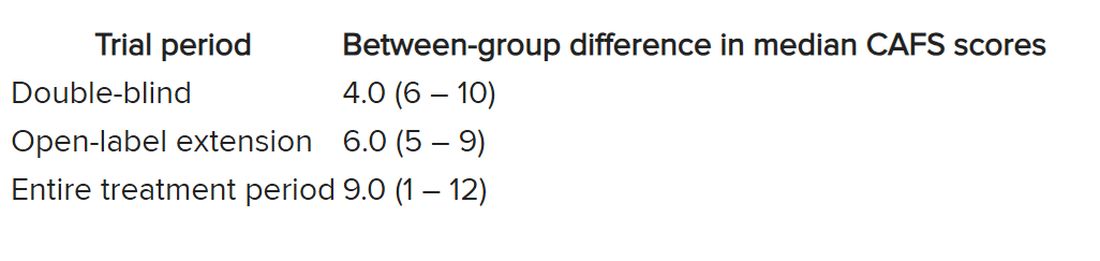

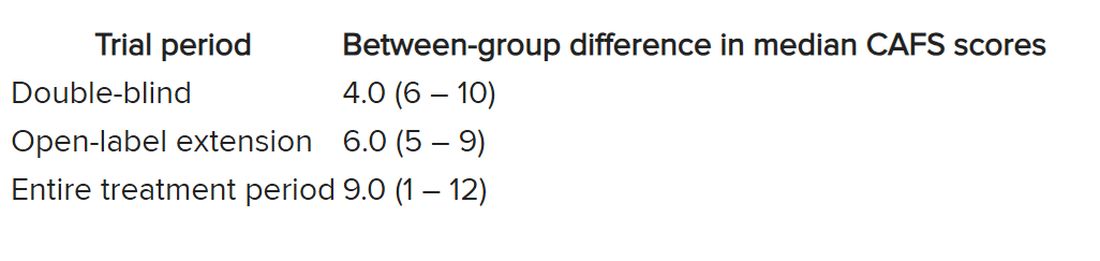

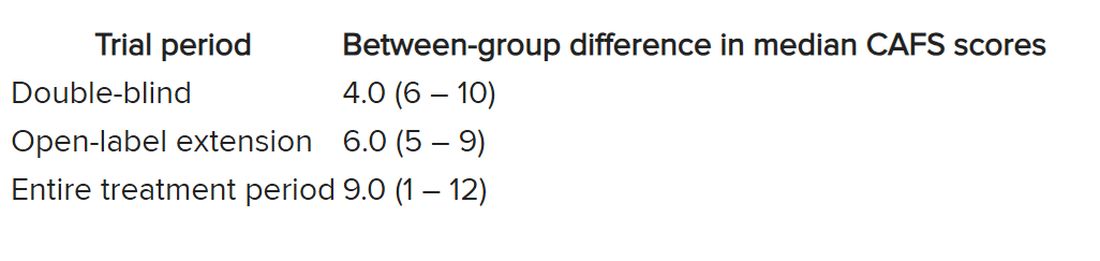

The researchers used the combined assessment of function and survival (CAFS) score, which adjusts the ALSFRS-R score against mortality, to see whether functional benefits translated into improved survival.

The score “favored ropinirole” in the open-label extension period and the entire treatment period but not in the double-blind period.

Disease progression events occurred in 7 of 7 (100%) participants in the placebo group and 7 of 13 (54%) in the ropinirole group, “suggesting a twofold decrease in disease progression” in the treatment group.
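The “twofold” figure can be checked with simple arithmetic on the counts reported above. The Python sketch below computes the crude event proportions and their ratio. The names are illustrative, and this is a back-of-the-envelope comparison, not the statistical analysis the investigators performed.

```python
from fractions import Fraction

def event_proportion(events: int, total: int) -> Fraction:
    """Share of participants who had a disease progression event."""
    return Fraction(events, total)

placebo = event_proportion(7, 7)       # 7 of 7 participants
ropinirole = event_proportion(7, 13)   # 7 of 13 participants

# Crude relative risk: event proportion on ropinirole divided by placebo.
relative_risk = ropinirole / placebo

print(f"placebo: {float(placebo):.0%}")
print(f"ropinirole: {float(ropinirole):.0%}")
print(f"relative risk: {float(relative_risk):.2f}")
```

A crude relative risk of about 0.54 means events were a little under half as frequent in the ropinirole group, which is roughly the twofold difference quoted.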

The ropinirole group experienced an additional 27.9 weeks of disease progression–free survival, compared with the placebo group.

“No participant discontinued treatment because of adverse experiences in either treatment group,” the authors report.

The analysis of iPSC-derived motor neurons from participants showed dopamine D2 receptor expression, as well as the potential involvement of the cholesterol pathway SREBP2 in the therapeutic effects of ropinirole. Lipid peroxide was also identified as a good “surrogate clinical marker to assess disease progression and drug efficacy.”

“We found a very striking correlation between a patient’s clinical response and the response of their motor neurons in vitro,” said Dr. Morimoto. “Patients whose motor neurons responded robustly to ropinirole in vitro had a much slower clinical disease progression with ropinirole treatment, while suboptimal responders showed much more rapid disease progression, despite taking ropinirole.”

Limitations include “small sample sizes and high attrition rates in the open-label extension period,” so “further validation” is required, the authors state.

Significant flaws

Commenting for this article, Carmel Armon, MD, MHS, professor of neurology, Loma Linda (Calif.) University, said the study “falls short of being a credible 1/2a clinical trial.”

Although the “intentions were good and the design not unusual,” the two groups were not “balanced on risk factors for faster progressing disease.” Rather, the placebo group was “tilted towards faster progressing disease” because it included a higher proportion of patients with clinically definite and probable ALS than the treatment group and a higher proportion of patients with bulbar onset.

Participants in the placebo group also had shorter median disease duration, lower BMI, and lower FVC, noted Dr. Armon, who was not involved with the study.

And only 1 of 7 control patients completed the full 1-year trial, compared with 7 of 13 patients in the intervention group.

“With these limitations, I would be disinclined to rely on the findings to justify a larger clinical trial,” Dr. Armon concluded.

The trial was sponsored by K Pharma. The study drug, active drugs, and placebo were supplied free of charge by GlaxoSmithKline K.K. Dr. Okano received grants from JSPS and AMED and grants and personal fees from K Pharma during the conduct of the study and personal fees from Sanbio, outside the submitted work. Dr. Okano has a patent on a therapeutic agent for ALS and composition for treatment licensed to K Pharma. The other authors’ disclosures and additional information are available in the original article. Dr. Armon reports no relevant financial relationships.

A version of this article first appeared on Medscape.com.
