Safest, most effective medications for spine-related pain in older adults?

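Certain medications offer a better balance of safety and efficacy than others for spine-related pain in older adults, a new comprehensive literature review suggests.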

Investigators assessed the evidence for medications used for this indication in older adults by reviewing 138 double-blind, placebo-controlled trials.

Among their key findings and recommendations: Acetaminophen has a favorable safety profile for spine-related pain, but nonsteroidal anti-inflammatory drugs (NSAIDs) are more effective.

However, NSAIDs should be used at lower doses and for short periods only, with gastrointestinal precautions, the researchers note.

Corticosteroids have the least evidence for treating nonspecific back pain, they add.

“Most older people experience neck or low back pain at some point, bothersome enough to see their doctor,” coinvestigator Michael Perloff, MD, PhD, department of neurology, Boston University, said in a news release.

“Our findings provide a helpful medication guide for physicians to use for spine pain in an older population that can have a complex medical history,” Dr. Perloff added.

The results were published online in Drugs and Aging.

Recommendations, warnings

With the graying of the U.S. population, spine-related pain is increasingly common, the investigators note.

Medications play an important role in pain management, but their use has limitations in the elderly, owing to reduced liver and renal function, comorbid medical problems, and polypharmacy.

Other key findings include that, although the nerve pain medications gabapentin and pregabalin may cause dizziness or difficulty walking, they have demonstrated some benefit for neck and back nerve pain, such as sciatica, in older adults.

These agents should be used at lower doses, with smaller dose adjustments, the researchers note.

They caution that the muscle relaxants carisoprodol, chlorzoxazone, cyclobenzaprine, metaxalone, methocarbamol, and orphenadrine should be avoided in older adults because these drugs are associated with an increased risk for sedation and falls.

‘Rational therapeutic choices’

Three other muscle relaxants (tizanidine, baclofen, and dantrolene) may be helpful for neck and back pain, with the most evidence favoring tizanidine and baclofen. These agents should be used at reduced doses. Tizanidine should be avoided in patients with liver disease, and baclofen doses should be reduced in patients with kidney disease, the investigators write.

Other findings include the following:

- Older tricyclic antidepressants should typically be avoided in this population because of their side effects, but nortriptyline and desipramine may be better tolerated for neck and back nerve pain at lower doses.

- Newer antidepressants, particularly the selective serotonin-norepinephrine reuptake inhibitor duloxetine, have a better safety profile and good efficacy for spine-related nerve pain.

- Traditional opioids are typically avoided in the treatment of spine-related pain in older adults, owing to their associated risks.

However, low-dose opioid therapy may be helpful for severe refractory pain, with close monitoring of patients, the researchers note.

Weaker opioids, such as tramadol, may be better tolerated by older patients. They work well in combination with acetaminophen but carry a risk for sedation, upset stomach, and constipation.

“Medications used at the correct dose, for the correct diagnosis, adjusting for preexisting medical problems can result in better use of treatments for spine pain,” coinvestigator Jonathan Fu, MD, also with the department of neurology, Boston University, said in the release.

“Rational therapeutic choices should be targeted to spine pain diagnosis, such as NSAIDs and acetaminophen for arthritic and myofascial-based complaints, gabapentinoids or duloxetine for neuropathic and radicular symptoms, antispastic agents for myofascial-based pain, and combination therapy for mixed etiologies,” the investigators write.

They also emphasize that medications should be coupled with physical therapy and exercise programs, as well as treatment of the underlying degenerative disease process and medical illness, while keeping in mind the need for possible interventions and/or corrective surgery.

The research had no specific funding. The investigators have reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM DRUGS AND AGING

Alcohol’s detrimental impact on the brain explained?

Results of a large observational study suggest brain iron accumulation is a “plausible pathway” through which alcohol negatively affects cognition, study author Anya Topiwala, MD, PhD, senior clinical researcher, Nuffield Department of Population Health, University of Oxford, England, said in an interview.

Study participants who drank more than 56 grams of alcohol a week had higher brain iron levels. The U.K. guideline for “low risk” alcohol consumption is no more than 14 units weekly, or 112 grams.

“We are finding harmful associations with iron within those low-risk alcohol intake guidelines,” said Dr. Topiwala.

The study was published online in PLOS Medicine.

Early intervention opportunity?

Previous research suggests higher brain iron may be involved in the pathophysiology of Alzheimer’s and Parkinson’s diseases. However, it’s unclear whether iron deposition plays a role in alcohol’s effect on the brain and, if it does, whether this could present an opportunity for early intervention with, for example, chelating agents.

The study included 20,729 participants in the UK Biobank study, which recruited volunteers from 2006 to 2010. Participants had a mean age of 54.8 years, and 48.6% were female.

Participants self-identified as current, never, or previous alcohol consumers. For current drinkers, researchers calculated the total number of U.K. units of alcohol consumed weekly. One U.K. unit is 8 grams of alcohol; by comparison, a standard drink in the United States contains 14 grams. The researchers categorized weekly consumption into quintiles and used the lowest quintile as the reference category.
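For readers comparing U.K. and U.S. figures, the unit conversions above can be sketched in Python. This is a minimal illustration that assumes only the two factors stated in the article (8 grams per U.K. unit, 14 grams per U.S. standard drink); the constant and function names are hypothetical.

```python
# Conversion factors as stated in the article (assumed fixed for illustration).
GRAMS_PER_UK_UNIT = 8.0
GRAMS_PER_US_STANDARD_DRINK = 14.0


def uk_units_to_grams(units: float) -> float:
    """Convert U.K. alcohol units to grams of pure alcohol."""
    return units * GRAMS_PER_UK_UNIT


def grams_to_us_drinks(grams: float) -> float:
    """Convert grams of pure alcohol to U.S. standard drinks."""
    return grams / GRAMS_PER_US_STANDARD_DRINK


# Weekly intakes mentioned in the article, in U.K. units.
weekly_intakes = {
    "threshold for higher brain iron": 7,
    "U.K. low-risk guideline": 14,
    "sample mean": 17.7,
}

for label, units in weekly_intakes.items():
    grams = uk_units_to_grams(units)
    drinks = grams_to_us_drinks(grams)
    print(f"{label}: {units} units = {grams:.1f} g = {drinks:.1f} U.S. drinks")
```

Run as written, it expresses the 7-unit threshold, the 14-unit U.K. guideline, and the 17.7-unit sample mean in grams and in approximate U.S. standard drinks (for example, 14 U.K. units correspond to 8 U.S. standard drinks).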

Participants underwent MRI to estimate brain iron levels using magnetic susceptibility, an imaging marker of tissue iron. The regions of interest were deep brain structures, including those of the basal ganglia.

Mean weekly alcohol consumption was 17.7 units, which is higher than U.K. guidelines for low-risk consumption. “Half of the sample were drinking above what is recommended,” said Dr. Topiwala.

Alcohol consumption was associated with markers of higher iron in the bilateral putamen (beta, 0.08 standard deviation; 95% confidence interval, 0.06-0.09; P < .001), caudate (beta, 0.05; 95% CI, 0.04-0.07; P < .001), and substantia nigra (beta, 0.03; 95% CI, 0.02-0.05; P < .001).

Poorer performance

Drinking more than 7 units (56 grams) weekly was associated with higher susceptibility in all brain regions except the thalamus.

Controlling for menopause status did not alter the associations between alcohol and susceptibility in any brain region, nor did excluding blood pressure and cholesterol as covariates.

There were significant interactions with age in the bilateral putamen and caudate, but not with sex, smoking, or the Townsend Deprivation Index, a measure of deprivation that includes such factors as unemployment and living conditions.

To gather data on liver iron levels, participants underwent abdominal imaging at the same time as brain imaging. Dr. Topiwala explained that the liver is a primary storage center for iron, so it was used as “a kind of surrogate marker” of iron in the body.

The researchers showed an indirect effect of alcohol through systemic iron. A 1 SD increase in weekly alcohol consumption was associated with a 0.05 mg/g (95% CI, 0.02-0.07; P < .001) increase in liver iron. In addition, a 1 mg/g increase in liver iron was associated with a 0.44 (95% CI, 0.35-0.52; P < .001) SD increase in left putamen susceptibility.

In this sample, 32% (95% CI, 22-49; P < .001) of alcohol’s total effect on left putamen susceptibility was mediated via higher systemic iron levels.
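One common way to express this kind of indirect effect, assuming a simple product-of-coefficients mediation model (the article does not specify the exact model the researchers used), is to multiply the two path estimates reported above, where $a$ is the change in liver iron per SD of weekly alcohol and $b$ is the change in left putamen susceptibility per mg/g of liver iron:

$$
\begin{aligned}
\text{indirect effect} &= a \times b = 0.05\ \tfrac{\text{mg/g}}{\text{SD alcohol}} \times 0.44\ \tfrac{\text{SD susceptibility}}{\text{mg/g}} \approx 0.022\ \text{SD susceptibility per SD alcohol},\\
\text{proportion mediated} &= \frac{\text{indirect effect}}{\text{total effect}}.
\end{aligned}
$$

The total effect of alcohol on the left putamen alone is not reported here, so the 32% figure cannot be rederived from these numbers.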

To minimize the influence of other factors on the association between alcohol consumption and brain iron, and to address the possibility that people with more brain iron drink more, the researchers used Mendelian randomization, which relies on genetically predicted alcohol intake. This analysis supported the association between alcohol consumption and brain iron.

Participants completed a cognitive battery, which included trail-making tests that reflect executive function, puzzle tests that assess fluid intelligence or logic and reasoning, and task-based tests using the “Snap” card game to measure reaction time.

Investigators found that the more iron present in certain brain regions, the poorer participants’ cognitive performance.

Patients should know about the risks of moderate alcohol intake so they can make decisions about drinking, said Dr. Topiwala. “They should be aware that 14 units of alcohol per week is not a zero risk.”

Novel research

Commenting for this news organization, Heather Snyder, PhD, vice president of medical and scientific relations, Alzheimer’s Association, noted the study’s large size as a strength of the research.

She noted that previous research has linked higher iron levels to alcohol dependence and to worse cognitive function, but the potential connection among brain iron levels, moderate alcohol consumption, and cognition had not previously been studied.

“This paper aims to look at whether there is a potential biological link between moderate alcohol consumption and cognition through iron-related pathways.”

The authors suggest more work is needed to understand whether alcohol consumption affects iron-related biology in ways that influence cognition downstream, said Dr. Snyder. “Although this study does not answer that question, it does highlight some important questions.”

Study authors received funding from Wellcome Trust, UK Medical Research Council, National Institute for Health Research (NIHR) Oxford Biomedical Research Centre, BHF Centre of Research Excellence, British Heart Foundation, NIHR Cambridge Biomedical Research Centre, U.S. Department of Veterans Affairs, China Scholarship Council, and Li Ka Shing Centre for Health Information and Discovery. Dr. Topiwala has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM PLOS MEDICINE

Sleep-deprived physicians less empathetic to patient pain?

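Sleep-deprived physicians may be less empathetic to patients’ pain and less likely to prescribe pain medication, new research suggests.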

In the first of two studies, resident physicians were presented with two hypothetical scenarios involving patients who complained of pain and were asked how likely they would be to prescribe pain medication. The test was given to one group of residents who were just starting their day and to another group who were finishing a night shift after being on call for 26 hours.

Results showed that the night shift residents were less likely than their daytime counterparts to say they would prescribe pain medication to the patients.

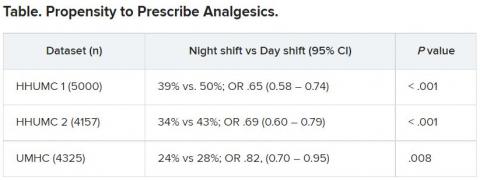

In a second analysis, of discharge notes from more than 13,000 electronic records of patients presenting with pain complaints at hospitals in Israel and the United States, the likelihood of an analgesic being prescribed was 11% lower during night shifts than during day shifts in Israel and 9% lower in the United States.

“Pain management is a major challenge, and a doctor’s perception of a patient’s subjective pain is susceptible to bias,” coinvestigator David Gozal, MD, the Marie M. and Harry L. Smith Endowed Chair of Child Health, University of Missouri–Columbia, said in a press release.

“This study demonstrated that night shift work is an important and previously unrecognized source of bias in pain management, likely stemming from impaired perception of pain,” Dr. Gozal added.

The findings were published online in the Proceedings of the National Academy of Sciences.

‘Directional’ differences

Senior investigator Alex Gileles-Hillel, MD, senior pediatric pulmonologist and sleep researcher at Hadassah University Medical Center, Jerusalem, said in an interview that physicians must make “complex assessments of patients’ subjective pain experience” – and the “subjective nature of pain management decisions can give rise to various biases.”

Dr. Gileles-Hillel has previously researched the cognitive toll of night shift work on physicians.

“It’s pretty established, for example, not to drive when sleep deprived because cognition is impaired,” he said. The current study explored whether sleep deprivation could affect areas other than cognition, including emotions and empathy.

The researchers used “two complementary approaches.” First, they administered tests to measure empathy and pain management decisions in 67 resident physicians at Hadassah Medical Centers either following a 26-hour night shift that began at 8:00 a.m. the day before (n = 36) or immediately before starting the workday (n = 31).

There were no significant differences in demographic, sleep, or burnout measures between the two groups, except that night shift physicians had slept less than those in the daytime group (2.93 vs. 5.96 hours).

Participants completed two tasks. In the empathy-for-pain task, they rated their emotional reactions to pictures of individuals in pain. In the empathy accuracy task, they were asked to assess the feelings of videotaped individuals telling emotional stories.

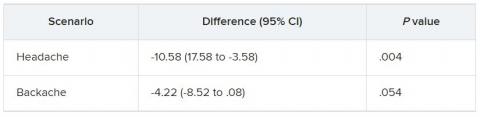

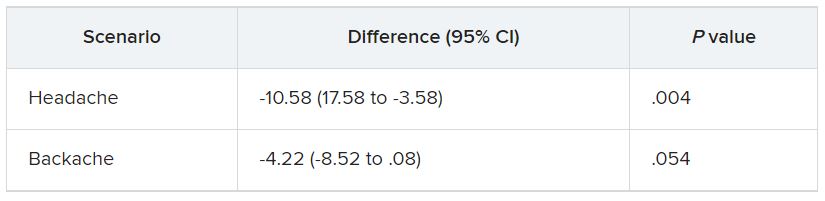

They were then presented with two clinical scenarios: a female patient with a headache and a male patient with a backache. Following that, they were asked to assess the magnitude of the patients’ pain and how likely they would be to prescribe pain medication.

In the empathy-for-pain task, physicians’ empathy scores were significantly lower in the night shift group than in the day group (difference, –0.83; 95% CI, –1.55 to –0.10; P = .026). There were no significant differences between the groups in the empathy accuracy task.

In both scenarios, physicians in the night shift group assessed the patient’s pain as weaker in comparison with physicians in the day group. There was a statistically significant difference in the headache scenario but not the backache scenario.

In the headache scenario, the propensity of the physicians to prescribe analgesics was “directionally lower” but did not reach statistical significance. In the backache scenario, there was no significant difference between the groups’ prescribing propensities.

In both scenarios, pain assessment was positively correlated with the propensity to prescribe analgesics.

Although not every difference reached statistical significance, the findings “documented a negative effect of night shift work on physician empathy for pain and a positive association between physician assessment of patient pain and the propensity to prescribe analgesics,” the investigators wrote.

Need for naps?

The researchers then analyzed analgesic prescription patterns drawn from three datasets of discharge notes of patients presenting to the emergency department with pain complaints (n = 13,482) at two branches of Hadassah-Hebrew University Medical Center and the University of Missouri Health Center.

The researchers collected data including discharge time, the medications patients were prescribed at discharge, and patients’ subjective pain ratings on a 0-10 visual analogue scale (VAS).

Although patients’ VAS scores did not differ by time or shift, patients discharged during the night shift were prescribed significantly fewer analgesics than those discharged during the day shift.

No similar differences in prescriptions between night shifts and day shifts were found for nonanalgesic medications, such as for diabetes or blood pressure. This suggests “the effect was specific to pain,” Dr. Gileles-Hillel said.

The pattern remained significant after controlling for potential confounders, including patient and physician variables and emergency department characteristics.

In addition, patients seen during night shifts received fewer analgesics, particularly opioids, than recommended by the World Health Organization for pain management.

“The first study enabled us to measure empathy for pain directly and examine our hypothesis in a controlled environment, while the second enabled us to test the implications by examining real-life pain management decisions,” Dr. Gileles-Hillel said.

“Physicians need to be aware of this,” he noted. “I try to be aware when I’m taking calls [at night] that I’m less empathetic to others and I might be more brief or angry with others.”

On a “house management level, perhaps institutions should try to schedule naps either before or during overnight call. A nap might give a boost and reboot not only to cognitive but also to emotional resources,” Dr. Gileles-Hillel added.

Compromised safety

In a comment, Eti Ben Simon, PhD, a postdoctoral fellow at the Center for Human Sleep Science, University of California, Berkeley, called the study “an important contribution to a growing list of studies that reveal how long night shifts reduce overall safety” for both patients and clinicians.

“It’s time to abandon the notion that the human brain can function as normal after being deprived of sleep for 24 hours,” said Dr. Ben Simon, who was not involved with the research.

“This is especially true in medicine, where we trust others to take care of us and feel our pain. These functions are simply not possible without adequate sleep,” she added.

Also commenting, Kannan Ramar, MD, president of the American Academy of Sleep Medicine, suggested that being cognizant of these findings “may help providers to mitigate this bias” of underprescribing pain medications when treating their patients.

Dr. Ramar, who is also a critical care specialist, pulmonologist, and sleep medicine specialist at Mayo Clinic, Rochester, Minn., was not involved with the research.

He noted that “further studies that systematically evaluate this further in a prospective and blinded way will be important.”

The research was supported in part by grants from the Israel Science Foundation, Joy Ventures, and the Recanati Fund at the Jerusalem School of Business at the Hebrew University, and by a fellowship from the Azrieli Foundation. Various investigators received grant support from the NIH, the Leda J. Sears Foundation, and the University of Missouri. The investigators, Dr. Ramar, and Dr. Ben Simon have reported no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM THE PROCEEDINGS OF THE NATIONAL ACADEMY OF SCIENCES

‘Striking’ jump in cost of brand-name epilepsy meds

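Costs of brand-name antiseizure medications (ASMs) for Medicare beneficiaries with epilepsy rose steeply from 2008 to 2018, even as generic costs fell, a new analysis shows.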

After adjustment for inflation, the cost of a 1-year supply of brand-name ASMs grew 277%, while generics became 42% less expensive.

“Our study makes transparent striking trends in brand name prescribing patterns,” the study team wrote.

Since 2010, the costs for brand-name ASMs have “consistently” increased. Costs were particularly boosted by increases in prescriptions for lacosamide (Vimpat), in addition to a “steep increase in the cost per pill, with brand-name drugs costing 10 times more than their generic counterparts,” first author Samuel Waller Terman, MD, of the University of Michigan, Ann Arbor, added in a news release.

The study was published online in Neurology.

Is a 10-fold increase in cost worth it?

To evaluate trends in ASM prescriptions and costs, the researchers used a 20% random sample of Medicare beneficiaries with coverage from 2008 to 2018. The sample included 77,000 to 133,000 patients with epilepsy each year.

Over time, likely because of the increasing availability of generics, brand-name ASMs’ share of prescribed pills fell from 56% in 2008 to 14% in 2018, yet they still accounted for 79% of prescription drug costs in 2018.

The annual cost of brand-name ASMs rose from $2,800 in 2008 to $10,700 in 2018, while the cost of generic drugs decreased from $800 to $460 during that time.

An increased number of prescriptions for lacosamide was responsible for 45% of the total increase in brand-name costs.

As of 2018, lacosamide accounted for 30% of all brand-name pill supply (followed by pregabalin, at 15%) and 30% of all brand-name costs (followed by clobazam and pregabalin, both at 9%), the investigators reported.

Brand-name ASM costs decreased from 2008 to 2010, but after the introduction of lacosamide, total brand-name costs rose steadily from $72 million in 2010 (in 2018 dollars) to $256 million in 2018, they noted.

Because the dataset consists of a 20% random Medicare sample, total Medicare costs for brand-name ASMs for beneficiaries with epilepsy alone likely rose from roughly $360 million in 2010 to $1.3 billion in 2018, they added.
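The Medicare-wide estimates follow from scaling the sample totals by the inverse of the sampling fraction (1/0.20 = 5). The short Python sketch below illustrates that arithmetic under the assumption that the 20% sample is representative of all beneficiaries with epilepsy. The function name `extrapolate` is hypothetical and is not taken from the study.

```python
# Sketch: scale a total observed in a 20% random sample up to the full
# population by dividing by the sampling fraction.
# Assumption: the sample is representative, as the extrapolation implies.
SAMPLING_FRACTION = 0.20


def extrapolate(sample_total_millions: float, fraction: float = SAMPLING_FRACTION) -> float:
    """Estimate a full-population total (in $ millions) from a sample total."""
    return sample_total_millions / fraction


# Brand-name ASM costs observed in the sample, in millions of 2018 dollars.
sample_costs = {2010: 72.0, 2018: 256.0}

for year, cost in sample_costs.items():
    print(f"{year}: about ${extrapolate(cost):,.0f} million")
```

Here $256 million × 5 works out to $1.28 billion, which rounds to the reported $1.3 billion.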

“Clinicians must remain cognizant of this societal cost magnitude when judging whether the 10-fold increased expense per pill for brand name medications is worth the possible benefits,” they wrote.

“While newer-generation drugs have potential advantages such as limited drug interactions and different side effect profiles, there have been conflicting studies on whether they are cost effective,” Dr. Terman noted in a news release.

A barrier to treatment

The authors of an accompanying editorial propose that the problem of prescription drug costs could be solved through a combination of competition and government regulation of prices. They add that patients and physicians are the most important stakeholders in this issue.

“When something represents 14% of the total use, but contributes 79% of the cost, it would be wise to consider alternatives, assuming that these alternatives are not of lower quality,” wrote Wyatt Bensken, with Case Western Reserve University, Cleveland, and Iván Sánchez Fernández, MD, with Boston Medical Center.

“When there are several ASMs with a similar mechanism of action, similar efficacy, similar safety and tolerability profile, and different costs, it would be unwise to choose the more expensive alternative just because it is newer,” they said.

This study, they added, provides data to “understand, and begin to act, on the challenging problem of the cost of prescription ASMs. After all, what is the point of having a large number of ASMs if their cost severely limits their use?”

A limitation of the study is that only Medicare prescription claims were included, so the results may not apply to younger patients with private insurance.

The study received no direct funding. The authors and editorialists have disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

, a new analysis shows.

After adjustment for inflation, the cost of a 1-year supply of brand-name ASMs grew 277%, while generics became 42% less expensive.

“Our study makes transparent striking trends in brand name prescribing patterns,” the study team wrote.

Since 2010, the costs for brand-name ASMs have “consistently” increased. Costs were particularly boosted by increases in prescriptions for lacosamide (Vimpat), in addition to a “steep increase in the cost per pill, with brand-name drugs costing 10 times more than their generic counterparts,” first author Samuel Waller Terman, MD, of the University of Michigan, Ann Arbor, added in a news release.

The study was published online in Neurology.

Is a 10-fold increase in cost worth it?

To evaluate trends in ASM prescriptions and costs, the researchers used a random sample of 20% of Medicare beneficiaries with coverage from 2008 to 2018. There were 77,000 to 133,000 patients with epilepsy each year.

Over time, likely because of increasing availability of generics, brand-name ASMs made up a smaller proportion of pills prescribed, from 56% in 2008 to 14% in 2018, but still made up 79% of prescription drug costs in 2018.

The annual cost of brand-name ASMs rose from $2,800 in 2008 to $10,700 in 2018, while the cost of generic drugs decreased from $800 to $460 during that time.

An increased number of prescriptions for lacosamide was responsible for 45% of the total increase in brand-name costs.

As of 2018, lacosamide comprised 30% of all brand-name pill supply (followed by pregabalin, at 15%) and 30% of all brand-name costs (followed by clobazam and pregabalin, both at 9%), the investigators reported.

Brand-name antiepileptic drug costs decreased from 2008 to 2010, but after the introduction of lacosamide, total brand-name costs steadily rose from $72 million in 2010 (in 2018 dollars) to $256 million in 2018, they noted.

Because the dataset consists of a 20% random Medicare sample, total Medicare costs for brand-name ASMs for beneficiaries with epilepsy alone likely rose from roughly $360 million in 2010 to $1.3 billion in 2018, they added.

“Clinicians must remain cognizant of this societal cost magnitude when judging whether the 10-fold increased expense per pill for brand name medications is worth the possible benefits,” they wrote.

“While newer-generation drugs have potential advantages such as limited drug interactions and different side effect profiles, there have been conflicting studies on whether they are cost effective,” Dr. Terman noted in a news release.

A barrier to treatment

The authors of an accompanying editorial propose that the problem of prescription drug costs could be solved through a combination of competition and government regulation of prices. Patients and physicians are the most important stakeholders in this issue.

“When something represents 14% of the total use, but contributes 79% of the cost, it would be wise to consider alternatives, assuming that these alternatives are not of lower quality,” wrote Wyatt Bensken, with Case Western Reserve University, Cleveland, and Iván Sánchez Fernández, MD, with Boston Medical Center.

“When there are several ASMs with a similar mechanism of action, similar efficacy, similar safety and tolerability profile, and different costs, it would be unwise to choose the more expensive alternative just because it is newer,” they said.

This study, they added, provides data to “understand, and begin to act, on the challenging problem of the cost of prescription ASMs. After all, what is the point of having a large number of ASMs if their cost severely limits their use?”

A limitation of the study is that only Medicare prescription claims were included, so the results may not apply to younger patients with private insurance.

The study received no direct funding. The authors and editorialists have disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

, a new analysis shows.

After adjustment for inflation, the cost of a 1-year supply of brand-name ASMs grew 277%, while generics became 42% less expensive.

“Our study makes transparent striking trends in brand name prescribing patterns,” the study team wrote.

Since 2010, the costs for brand-name ASMs have “consistently” increased. Costs were particularly boosted by increases in prescriptions for lacosamide (Vimpat), in addition to a “steep increase in the cost per pill, with brand-name drugs costing 10 times more than their generic counterparts,” first author Samuel Waller Terman, MD, of the University of Michigan, Ann Arbor, added in a news release.

The study was published online in Neurology.

Is a 10-fold increase in cost worth it?

To evaluate trends in ASM prescriptions and costs, the researchers used a random sample of 20% of Medicare beneficiaries with coverage from 2008 to 2018. There were 77,000 to 133,000 patients with epilepsy each year.

Over time, likely because of increasing availability of generics, brand-name ASMs made up a smaller proportion of pills prescribed, from 56% in 2008 to 14% in 2018, but still made up 79% of prescription drug costs in 2018.

The annual cost of brand-name ASMs rose from $2,800 in 2008 to $10,700 in 2018, while the cost of generic drugs decreased from $800 to $460 during that time.

Growth in the number of lacosamide prescriptions accounted for 45% of the total increase in brand-name costs.

As of 2018, lacosamide comprised 30% of all brand-name pill supply (followed by pregabalin, at 15%) and 30% of all brand-name costs (followed by clobazam and pregabalin, both at 9%), the investigators reported.

Brand-name antiepileptic drug costs decreased from 2008 to 2010, but after the introduction of lacosamide, total brand-name costs steadily rose from $72 million in 2010 (in 2018 dollars) to $256 million in 2018, they noted.

Because the dataset consists of a 20% random Medicare sample, total Medicare costs for brand-name ASMs for beneficiaries with epilepsy alone likely rose from roughly $360 million in 2010 to $1.3 billion in 2018, they added.

“Clinicians must remain cognizant of this societal cost magnitude when judging whether the 10-fold increased expense per pill for brand name medications is worth the possible benefits,” they wrote.

“While newer-generation drugs have potential advantages such as limited drug interactions and different side effect profiles, there have been conflicting studies on whether they are cost effective,” Dr. Terman noted in a news release.

A barrier to treatment

The authors of an accompanying editorial propose that the problem of prescription drug costs could be solved through a combination of competition and government regulation of prices. They also argue that patients and physicians are the most important stakeholders in this issue.

“When something represents 14% of the total use, but contributes 79% of the cost, it would be wise to consider alternatives, assuming that these alternatives are not of lower quality,” wrote Wyatt Bensken, with Case Western Reserve University, Cleveland, and Iván Sánchez Fernández, MD, with Boston Medical Center.

Scientists find brain mechanism behind age-related memory loss

Scientists at Johns Hopkins University have identified a mechanism in the brain behind age-related memory loss, expanding our knowledge of the inner workings of the aging brain and possibly opening the door to new Alzheimer’s treatments.

The researchers looked at the hippocampus, a part of the brain thought to store long-term memories.

Neurons there are responsible for a pair of memory functions – called pattern separation and pattern completion – that work together in young, healthy brains. These functions can swing out of balance with age, impacting memory.

The Johns Hopkins team may have discovered what causes this imbalance. Their findings, reported in a paper in the journal Current Biology, may not only help improve dementia treatments but also help prevent or delay a loss of thinking skills in the first place, the researchers say.

Pattern separation vs. pattern completion

To understand how the hippocampus changes with age, the researchers looked at rats’ brains. Both rats and humans have pattern separation and pattern completion, and in both species these functions are controlled by neurons in the hippocampus.

As the name suggests, pattern completion is when you take a few details or fragments of information – a few notes of music, or the start of a famous movie quote – and your brain retrieves the full memory. Pattern separation, on the other hand, is being able to tell similar observations or experiences apart (like two visits to the same restaurant) to be stored as separate memories.

These functions occur along a gradient across a tiny region called CA3. That gradient, the study found, disappears with aging, said lead study author Hey-Kyoung Lee, PhD, an assistant research scientist at the university’s Zanvyl Krieger Mind/Brain Institute. “The main consequence of the loss,” Dr. Lee said, “is that pattern completion becomes more dominant in rats as they age.”

What’s happening in the brain

Neurons responsible for pattern completion occupy the “distal” end of CA3, while those in charge of pattern separation reside at the “proximal” end. Dr. Lee said prior studies had not examined the proximal and distal regions separately, as she and her team did in this study.

What was surprising, said Dr. Lee, “was that hyperactivity in aging was observed toward the proximal CA3 region, not the expected distal region.” Contrary to their expectations, that hyperactivity did not enhance function in that area but rather dampened it. Hence: “There is diminished pattern separation and augmented pattern completion,” she said.

This may help explain why older adults can have trouble telling similar memories apart: they may recall a certain restaurant they had been to but not be able to separate what happened during one visit versus another.

Why do some older adults stay sharp?

That memory impairment does not happen to everyone, and it doesn’t happen to all rats either. In fact, the researchers found that some older rats performed spatial-learning tasks as well as young rats did – even though their brains were already beginning to favor pattern completion.

If we can better understand why this happens, we may uncover new therapies for age-related memory loss, Dr. Lee said.

Coauthor Michela Gallagher’s team previously demonstrated that the anti-epilepsy drug levetiracetam improves memory performance by reducing hyperactivity in the hippocampus.

The extra detail this study adds may allow scientists to better aim such drugs in the future, Dr. Lee speculated. “It would give us better control of where we could possibly target the deficits we see.”

A version of this article first appeared on WebMD.com.

FROM CURRENT BIOLOGY

Can bone density scans help predict dementia risk?

Bone density scans may help identify people at higher risk for dementia, new research suggests.