Patterns and Appropriateness of Thrombophilia Testing in an Academic Medical Center

Thrombophilia is a prothrombotic state, either acquired or inherited, leading to a thrombotic predisposition.1 The most common heritable thrombophilias include factor V Leiden (FVL) and prothrombin G20210A. The most common acquired thrombophilia is the presence of phospholipid antibodies.1 Thrombotic risk varies with thrombophilia type. For example, deficiencies of antithrombin, protein C and protein S, and the presence of phospholipid antibodies, confer higher risk than FVL and prothrombin G20210A.2-5 Other thrombophilias (eg, methylenetetrahydrofolate reductase mutation, increased factor VIII activity) are relatively uncommon and/or their impact on thrombosis risk appears to be either minimal or unknown.1-6 There is little clinical evidence that testing for thrombophilia impacts subsequent thrombosis prevention.5,7,8 Multiple clinical guidelines and medical societies recommend against the routine and indiscriminate use of thrombophilia testing.8-13 In general, thrombophilia testing should be considered only if the result would lead to changes in anticoagulant initiation, intensity, and/or duration, or might inform interventions to prevent thrombosis in asymptomatic family members.8-13 However, thrombophilia testing rarely changes the acute management of a thrombotic event and may have harmful effects on patients and their family members because positive results may unnecessarily increase anxiety and negative results may provide false reassurance.6,14-18 The cost-effectiveness of thrombophilia testing is unknown. Economic models have sought to quantify cost-effectiveness, but conclusions from these studies are limited.7

The utility of thrombophilia testing in emergency department (ED) and inpatient settings is further limited because patients are often treated and discharged before thrombophilia test results are available. Additionally, in these settings, multiple factors increase the risk of false-positive or false-negative results (eg, acute thrombosis, acute illness, pregnancy, and anticoagulant therapy).19,20 The purpose of this study was to systematically assess thrombophilia testing patterns among ED and hospitalized patients at an academic medical center and to quantify the proportion of tests associated with minimal clinical utility. We hypothesized that the majority of thrombophilia tests completed in the inpatient setting are associated with minimal clinical utility.

METHODS

Setting and Patients

This study was conducted at University of Utah Health Care (UUHC) University Hospital, a 488-bed academic medical center with a level I trauma center, primary stroke center, and 50-bed ED. Laboratory services for UUHC, including thrombophilia testing, are provided by a national reference laboratory, Associated Regional and University Pathologists Laboratories. This study included patients ≥18 years of age who received thrombophilia testing (Supplementary Table 1) during an ED visit or inpatient admission at University Hospital between July 1, 2014 and December 31, 2014. There were no exclusion criteria. An institutional electronic data repository was used to identify patients matching inclusion criteria. All study activities were reviewed and approved by the UUHC Institutional Review Board with a waiver of informed consent.

Outcomes

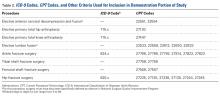

An electronic database query was used to identify patients, collect patient demographic information, and collect test characteristics. Each patient’s electronic medical record was manually reviewed to collect all other outcomes. Indication for thrombophilia testing was identified by manual review of provider notes. Thrombophilia tests occurring in situations associated with minimal clinical utility were defined as tests meeting at least one of the following criteria: patient discharged before test results were available for review; test type not recommended by published guidelines or by UUHC Thrombosis Service physicians for thrombophilia testing (Supplementary Table 2); test performed in situations associated with decreased accuracy; test was a duplicate test as a result of different thrombophilia panels containing identical tests; and test followed a provoked venous thromboembolism (VTE). Situations associated with decreased accuracy are summarized in Supplementary Table 3 and included at least one of the following at the time of the test: anticoagulant therapy, acute thrombosis, pregnancy or <8 weeks postpartum, and use of estrogen-containing medications. Only test types known to be affected by the respective situation were included. Testing following a provoked VTE was defined as testing prompted by an acute thrombosis and performed within 3 months following major surgery (defined administratively as any surgery performed in an operating room), during pregnancy, <8 weeks postpartum, or while on estrogen-containing medications. Thrombophilia testing during anticoagulant therapy was defined as testing within 4 half-lives of anticoagulant administration based on medication administration records. Anticoagulant therapy changes were identified by comparing prior-to-admission and discharge medication lists.
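The outcome definition above can be read as a rule applied to each test. The following Python sketch is one possible encoding of the five criteria and the 4-half-life window; it is not the authors' abstraction tool. All field names, the function names, and the 12-hour half-life in the usage note are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class ThrombophiliaTest:
    # Hypothetical schema; the study does not publish its data structure.
    collected_at: datetime
    resulted_at: datetime
    discharged_at: datetime
    recommended_type: bool   # test type is on the recommended list
    duplicate: bool          # same test ordered through overlapping panels
    # Situations present at collection that are known to affect this test type
    accuracy_risks: set = field(default_factory=set)
    prompted_by_acute_thrombosis: bool = False
    # Provoking factors present, e.g. {"major_surgery_within_3_months"}
    provoking_factors: set = field(default_factory=set)


def within_four_half_lives(last_dose_at, collected_at, half_life_hours):
    """True when the sample was drawn within 4 half-lives of the last dose."""
    window = timedelta(hours=4 * half_life_hours)
    return timedelta(0) <= collected_at - last_dose_at <= window


def minimal_utility_criteria(test):
    """Return the names of the criteria met; an empty list means none applied."""
    met = []
    if test.discharged_at < test.resulted_at:
        met.append("discharged_before_result")
    if not test.recommended_type:
        met.append("test_type_not_recommended")
    if test.accuracy_risks:
        met.append("decreased_accuracy")
    if test.duplicate:
        met.append("duplicate")
    if test.prompted_by_acute_thrombosis and test.provoking_factors:
        met.append("provoked_vte")
    return met
```

Under these assumptions, a test resulted two days after collection for a patient discharged after one day returns `["discharged_before_result"]`, and a sample drawn 40 hours after the last dose of a drug with an assumed 12-hour half-life falls inside the 48-hour window. A test counts toward the minimal-clinical-utility outcome whenever the returned list is non-empty.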

Data Analysis

Patient and laboratory characteristics were summarized using descriptive statistics, including mean and standard deviation (SD) for continuous variables and proportions for categorical variables. Data analysis was performed using Excel (Version 2013, Microsoft Corporation, Redmond, Washington).
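For readers who want to reproduce this kind of summary outside a spreadsheet, the short Python sketch below computes a mean, an SD, and a proportion. The input values are illustrative only and are not study data. The sketch assumes the sample SD (n − 1 denominator), because the analysis description does not state which form was used.

```python
import statistics

# Illustrative values only; these are not data from the study.
tests_per_patient = [4, 8, 12]
is_female = [True, True, False, True]

mean_tests = statistics.mean(tests_per_patient)
# Sample SD (n - 1 denominator); an assumption, not stated in the article.
sd_tests = statistics.stdev(tests_per_patient)
proportion_female = sum(is_female) / len(is_female)

print(f"{mean_tests:.1f} ± {sd_tests:.1f} tests per patient")
print(f"{proportion_female:.0%} female")
```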

RESULTS

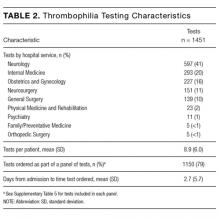

During the 6-month study period, 163 patients received at least 1 thrombophilia test during an ED visit or inpatient admission. Patient characteristics are summarized in Table 1. Tested patients were most commonly inpatients (96%) and female (71%). A total of 1451 thrombophilia tests were performed with a mean (± SD) of 8.9 ± 6.0 tests per patient. Testing characteristics are summarized in Table 2. Of the 39 different test types performed, the most commonly ordered were cardiolipin IgG and IgM antibodies (9% each), lupus anticoagulant (9%), and β2-glycoprotein 1 IgG and IgM antibodies (8% each). When combined with testing for phosphatidyl antibodies, antiphospholipid tests accounted for 70% of all tests. Overall, 134 (9%) test results were positive. The mean time for results to become available was 2.2 ± 2.5 days. The frequency of test types with corresponding positivity rates and mean time for results to become available are summarized in Supplementary Table 4.

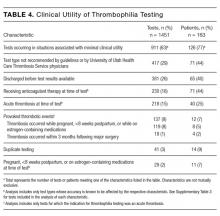

The indications for thrombophilia testing are summarized in Table 3. Ischemic stroke was the most common indication for testing (50% of tests; 35% of patients), followed by VTE (21% of tests; 21% of patients), and pregnancy-related conditions (eg, preeclampsia, intrauterine fetal demise; 15% of tests; 25% of patients). Overall, 911 tests (63%) occurred in situations associated with minimal clinical utility, with 126 patients (77%) receiving at least one of these tests (Table 4).

Anticoagulant therapy was changed in 43 patients (26%) in the following ways: initiated in 35 patients (21%), transitioned to a different anticoagulant in 6 patients (4%), and discontinued in 2 patients (1%). Of the 35 patients initiating anticoagulant therapy, 29 had documented thrombosis (24 had VTE, 4 had cerebral venous sinus thrombosis [CVST], and 1 had basilar artery thrombosis). Overall, 2 instances were identified in which initiation of anticoagulant therapy at discharge was in response to thrombophilia test results. In the first instance, warfarin without a parenteral anticoagulant bridge was initiated for a 54-year-old patient with a cryptogenic stroke who tested positive for β2-glycoprotein 1 IgG antibodies, lupus anticoagulant, and protein S deficiency. In the second instance, warfarin with an enoxaparin bridge was initiated for a 26-year-old patient with a cryptogenic stroke who tested positive for β2-glycoprotein 1 IgG and IgM antibodies, cardiolipin IgG antibodies, lupus anticoagulant, protein C deficiency, and antithrombin deficiency. Of the 163 patients receiving thrombophilia testing, only 2 patients (1%) had clear documentation of being offered genetic consultation.
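The percentages reported in this section follow directly from the stated counts. As a reader's cross-check, the Python sketch below recomputes them from the numerators and denominators given in the text; the function name `pct` and the dictionary layout are illustrative choices, not part of the study.

```python
def pct(numerator, denominator):
    """Percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


PATIENTS = 163
TESTS = 1451

# Each entry: label -> (numerator, denominator, percentage reported in the text)
reported = {
    "positive test results": (134, TESTS, 9),
    "tests with minimal clinical utility": (911, TESTS, 63),
    "patients with >=1 such test": (126, PATIENTS, 77),
    "anticoagulant therapy changed": (43, PATIENTS, 26),
    "anticoagulant initiated": (35, PATIENTS, 21),
    "anticoagulant switched": (6, PATIENTS, 4),
    "anticoagulant discontinued": (2, PATIENTS, 1),
}

mean_tests_per_patient = round(TESTS / PATIENTS, 1)
print("mean tests per patient:", mean_tests_per_patient)

for label, (n, d, stated) in reported.items():
    computed = pct(n, d)
    status = "matches" if computed == stated else "differs"
    print(f"{label}: {n}/{d} = {computed}% ({status} reported {stated}%)")
```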

DISCUSSION

In this retrospective analysis, 1451 thrombophilia tests were performed in 163 patients over 6 months. Tested patients were relatively young, which is likely explained by the number of patients tested for pregnancy-related conditions and the fact that a stroke or VTE in younger patients more frequently prompted providers to suspect thrombophilia. Nearly three-fourths of patients were female, which is likely due to testing for pregnancy-related conditions and possibly diagnostic suspicion bias given the comparative predilection of antiphospholipid syndrome for women. The patient characteristics in our study are consistent with other studies evaluating thrombophilia testing.21,22

Thrombophilia testing was most frequently prompted by stroke, VTE, and pregnancy-related conditions. Only 26% of patients had acute thrombosis identified during the admission, primarily because of the high proportion of tests for cryptogenic strokes and pregnancy-related conditions. Thrombophilia testing is recommended in patients who have had a stroke when the stroke is considered to be cryptogenic after a standard stroke evaluation.23 Thrombophilia testing in pregnancy-related conditions is controversial but is often considered in situations such as stillbirths with severe placental pathology and/or significant growth restriction, or in mothers with a personal or family history of thrombosis.24 The proportion of testing for pregnancy-related conditions may be greater than at other institutions because UUHC Maternal Fetal Medicine is a referral center for women with conditions associated with hypercoagulability. Anticoagulant therapy was initiated in 21% of patients, but specifically in response to thrombophilia testing in only 2 instances; in most cases, anticoagulant therapy was initiated regardless of thrombophilia test results.

The results of this study confirm our hypothesis because the majority of thrombophilia tests occurred in situations associated with minimal clinical utility. Testing in these situations was not isolated to specific patients or medical services because 77% of tested patients received at least 1 test associated with minimal clinical utility. Our study took a conservative approach in defining scenarios associated with minimal clinical utility because other situations can also affect testing accuracy (eg, hepatic disease, nephrotic syndrome) but were not included in our analysis of this outcome.

The results of this study highlight opportunities to improve thrombophilia testing practices at our institution and may be generalizable to institutions with similar testing patterns. Because multiple medical services order thrombophilia tests, strategies to improve testing practices are still being determined. The results of this study can serve as a baseline for comparison after strategies are implemented. The most common situation associated with minimal clinical utility was the use of test types not generally recommended by guidelines or UUHC Thrombosis Service physicians for thrombophilia testing (eg, β2-glycoprotein 1 IgA antibodies, phosphatidyl antibodies). We intend to require a hematology or thrombosis specialty consult prior to ordering these tests. This intervention alone could potentially decrease unnecessary testing by a third. Another consideration is to require a specialty consult prior to any inpatient thrombophilia testing. This strategy has been found to decrease inappropriate testing at other institutions.21 We also intend to streamline available thrombophilia testing panels because a poorly designed panel could lead to ordering of multiple tests associated with minimal clinical utility. At least 12 different thrombophilia panels are currently available in our computerized physician order entry system (see Supplementary Table 5). We hypothesize that current panel designs contribute to providers inadvertently ordering unintended or duplicate tests and that reducing the number of available panels and clearly delineating what tests are contained in each panel is likely to reduce unnecessary testing. Other strategies being considered include using electronic clinical decision support tools, implementing strict ordering criteria for all inpatient testing, and establishing a thrombosis stewardship program.

Our study was unique in at least 2 ways. First, previous studies describing thrombophilia testing have described testing patterns for patients with specific indications (eg, VTE), whereas our study described all thrombophilia tests regardless of indication. This allows for testing pattern comparisons across indications and medical services, increasing the generalizability of our results. Second, this study quantifies tests occurring in situations associated with a practical definition of minimal clinical utility.

Our study has several limitations: (1) Many variables were reliant on provider notes and other documentation, which allows for potential misclassification of variables. (2) It was not always possible to determine the ultimate utility of each test in clinical management decisions, and our study did not investigate the impact of thrombophilia testing on duration of anticoagulant therapy. Additionally, select situations could benefit from testing regardless of whether anticoagulant therapy is altered (eg, informing contraceptive choices). (3) Testing performed following a provoked acute thrombosis was defined as testing within 3 months following administratively defined major surgery. This definition could have included some minor procedures that do not substantially increase VTE risk, resulting in underestimated clinical utility. (4) The UUHC University Hospital serves as a referral hospital for a large geographical area, and investigators did not have access to outpatient records for a large proportion of discharged patients. As a result, frequency of repeat testing could not be assessed, possibly resulting in overestimated clinical utility. (5) In categorizing indications for testing, testing for CVST was subcategorized under testing for ischemic stroke based on presenting symptoms rather than on underlying pathophysiology. The rationale for this categorization is that patients with CVST were often tested based on presenting symptoms. Additionally, tests for CVST were ordered by the neurology service, which also ordered tests for all other ischemic stroke indications. (6) The purpose of our study was to investigate the subset of the hospital’s patient population that received thrombophilia testing, and patients were identified by tests received and not by diagnosis codes. As a result, we are unable to provide the proportion of total patients treated at the hospital for specific conditions who were tested (eg, the proportion of stroke patients who received thrombophilia testing). (7) Current practice guidelines do not recommend testing for phosphatidyl antibodies, even when traditional antiphospholipid testing is negative.25-27 Although expert panels continue to explore associations between phosphatidyl antibodies and pregnancy morbidity and thrombotic events, the low level of evidence is insufficient to guide clinical management.28 Therefore, we categorized all phosphatidyl testing as associated with minimal clinical utility.

CONCLUSIONS

In a large academic medical center, the majority of tests occurred in situations associated with minimal clinical utility. Strategies to improve thrombophilia testing practices are needed in order to minimize potentially inappropriate testing, provide more cost-effective care, and promote value-driven outcomes.

Disclosure

S.W. received financial support for this submitted work via a Bristol-Myers-Squibb grant. G.F. received financial support from Portola Pharmaceuticals for consulting and lectures that were not related to this submitted work.

1. Franco RF, Reitsma PH. Genetic risk factors of venous thrombosis. Hum Genet. 2001;109(4):369-384. PubMed

2. Ridker PM, Hennekens CH, Lindpaintner K, Stampfer MJ, Eisenberg PR, Miletich JP. Mutation in the gene coding for coagulation factor V and the risk of myocardial infarction, stroke, and venous thrombosis in apparently healthy men. N Engl J Med. 1995;332(14):912-917. PubMed

3. Koster T, Rosendaal FR, de Ronde H, Briët E, Vandenbroucke JP, Bertina RM. Venous thrombosis due to poor anticoagulant response to activated protein C: Leiden Thrombophilia Study. Lancet. 1993;342(8886-8887):1503-1506. PubMed

4. Margaglione M, Brancaccio V, Giuliani N, et al. Increased risk for venous thrombosis in carriers of the prothrombin G-->A20210 gene variant. Ann Intern Med. 1998;129(2):89-93. PubMed

5. De Stefano V, Martinelli I, Mannucci PM, et al. The risk of recurrent deep venous thrombosis among heterozygous carriers of both factor V Leiden and the G20210A prothrombin mutation. N Engl J Med. 1999;341:801-806. PubMed

6. Dickey TL. Can thrombophilia testing help to prevent recurrent VTE? Part 2. JAAPA. 2002;15(12):23-24, 27-29. PubMed

7. Simpson EL, Stevenson MD, Rawdin A, Papaioannou D. Thrombophilia testing in people with venous thromboembolism: systematic review and cost-effectiveness analysis. Health Technol Assess. 2009;13(2):iii, ix-x, 1-91. PubMed

8. National Institute for Health and Clinical Excellence. Venous thromboembolic disease: the management of venous thromboembolic diseases and the role of thrombophilia testing. NICE clinical guideline 144. https://www.nice.org.uk/guidance/cg144. Accessed on June 30, 2017.

9. Evaluation of Genomic Applications in Practice and Prevention (EGAPP) Working Group. Recommendations from the EGAPP Working Group: routine testing for factor V Leiden (R506Q) and prothrombin (20210G>A) mutations in adults with a history of idiopathic venous thromboembolism and their adult family members. Genet Med. 2011;13(1):67-76.

10. Kearon C, Akl EA, Comerota AJ, et al. Antithrombotic therapy for VTE disease: antithrombotic therapy and prevention of thrombosis, 9th ed: American College of Chest Physicians evidence-based clinical practice guidelines. Chest. 2012;141(2 Suppl):e419S-494S. PubMed

11. Baglin T, Gray E, Greaves M, et al. Clinical guidelines for testing for heritable thrombophilia. Br J Haematol. 2010;149(2):209-220. PubMed

12. Hicks LK, Bering H, Carson KR, et al. The ASH Choosing Wisely® campaign: five hematologic tests and treatments to question. Hematology Am Soc Hematol Educ Program. 2013;2013:9-14. PubMed

13. Stevens SM, Woller SC, Bauer KA, et al. Guidance for the evaluation and treatment of hereditary and acquired thrombophilia. J Thromb Thrombolysis. 2016;41(1):154-164. PubMed

14. Christiansen SC, Cannegieter SC, Koster T, Vandenbroucke JP, Rosendaal FR. Thrombophilia, clinical factors, and recurrent venous thrombotic events. JAMA. 2005;293(19):2352-2361. PubMed

15. Prandoni P, Lensing AW, Cogo A, et al. The long-term clinical course of acute deep venous thrombosis. Ann Intern Med. 1996;125(1):1-7. PubMed

16. Miles JS, Miletich JP, Goldhaber SZ, Hennekens CH, Ridker PM. G20210A mutation in the prothrombin gene and the risk of recurrent venous thromboembolism. J Am Coll Cardiol. 2001;37(1):215-218. PubMed

17. Eichinger S, Weltermann A, Mannhalter C, et al. The risk of recurrent venous thromboembolism in heterozygous carriers of factor V Leiden and a first spontaneous venous thromboembolism. Arch Intern Med. 2002;162(20):2357-2360. PubMed

18. Mazzolai L, Duchosal MA. Hereditary thrombophilia and venous thromboembolism: critical evaluation of the clinical implications of screening. Eur J Vasc Endovasc Surg. 2007;34(4):483-488. PubMed

19. Merriman L, Greaves M. Testing for thrombophilia: an evidence‐based approach. Postgrad Med J. 2006;82(973):699-704. PubMed

20. Favaloro EJ, McDonald D, Lippi G. Laboratory investigation of thrombophilia: the good, the bad, and the ugly. Semin Thromb Hemost. 2009;35(7):695-710. PubMed

21. Shen YM, Tsai J, Taiwo E, et al. Analysis of thrombophilia test ordering practices at an academic center: a proposal for appropriate testing to reduce harm and cost. PLoS One. 2016;11(5):e0155326. PubMed

22. Meyer MR, Witt DM, Delate T, et al. Thrombophilia testing patterns amongst patients with acute venous thromboembolism. Thromb Res. 2015;136(6):1160-1164. PubMed

23. Saver JL. Clinical practice: cryptogenic stroke. N Engl J Med. 2016;374(21):2065-2074. PubMed

24. ACOG practice bulletin no. 102: management of stillbirth. Obstet Gynecol. 2009;113(3):748-761. PubMed

25. Miyakis S, Lockshin MD, Atsumi T, et al. International consensus statement on an update of the classification criteria for definite antiphospholipid syndrome (APS). J Thromb Haemost. 2006;4(2):295-306. PubMed

26. Keeling D, Mackie I, Moore GW, Greer IA, Greaves M, British Committee for Standards in Haematology. Guidelines on the investigation and management of antiphospholipid syndrome. Br J Haematol. 2012;157(1):47-58. PubMed

27. Committee on Practice Bulletins—Obstetrics, American College of Obstetricians and Gynecologists. Practice bulletin no. 132: antiphospholipid syndrome. Obstet Gynecol. 2012;120(6):1514-1521. PubMed

28. Bertolaccini ML, Amengual O, Andreoli L, et al. 14th International Congress on Antiphospholipid Antibodies Task Force. Report on antiphospholipid syndrome laboratory diagnostics and trends. Autoimmun Rev. 2014;13(9):917-930. PubMed

Thrombophilia is a prothrombotic state, either acquired or inherited, leading to a thrombotic predisposition.1 The most common heritable thrombophilias include factor V Leiden (FVL) and prothrombin G20210A. The most common acquired thrombophilia is the presence of phospholipid antibodies.1 Thrombotic risk varies with thrombophilia type. For example, deficiencies of antithrombin, protein C and protein S, and the presence of phospholipid antibodies, confer higher risk than FVL and prothrombin G20210A.2-5 Other thrombophilias (eg, methylenetetrahydrofolate reductase mutation, increased factor VIII activity) are relatively uncommon and/or their impact on thrombosis risk appears to be either minimal or unknown.1-6 There is little clinical evidence that testing for thrombophilia impacts subsequent thrombosis prevention.5,7,8 Multiple clinical guidelines and medical societies recommend against the routine and indiscriminate use of thrombophilia testing.8-13 In general, thrombophilia testing should be considered only if the result would lead to changes in anticoagulant initiation, intensity, and/or duration, or might inform interventions to prevent thrombosis in asymptomatic family members.8-13 However, thrombophilia testing rarely changes the acute management of a thrombotic event and may have harmful effects on patients and their family members because positive results may unnecessarily increase anxiety and negative results may provide false reassurance.6,14-18 The cost-effectiveness of thrombophilia testing is unknown. Economic models have sought to quantify cost-effectiveness, but conclusions from these studies are limited.7

The utility of thrombophilia testing in emergency department (ED) and inpatient settings is further limited because patients are often treated and discharged before thrombophilia test results are available. Additionally, in these settings, multiple factors increase the risk of false-positive or false-negative results (eg, acute thrombosis, acute illness, pregnancy, and anticoagulant therapy).19,20 The purpose of this study was to systematically assess thrombophilia testing patterns in the ED and hospitalized patients at an academic medical center and to quantify the proportion of tests associated with minimal clinical utility. We hypothesize that the majority of thrombophilia tests completed in the inpatient setting are associated with minimal clinical utility.

METHODS

Setting and Patients

This study was conducted at University of Utah Health Care (UUHC) University Hospital, a 488-bed academic medical center with a level I trauma center, primary stroke center, and 50-bed ED. Laboratory services for UUHC, including thrombophilia testing, are provided by a national reference laboratory, Associated Regional and University Pathologists Laboratories. This study included patients ≥18 years of age who received thrombophilia testing (Supplementary Table 1) during an ED visit or inpatient admission at University Hospital between July 1, 2014 and December 31, 2014. There were no exclusion criteria. An institutional electronic data repository was used to identify patients matching inclusion criteria. All study activities were reviewed and approved by the UUHC Institutional Review Board with a waiver of informed consent.

Outcomes

An electronic database query was used to identify patients, collect patient demographic information, and collect test characteristics. Each patient’s electronic medical record was manually reviewed to collect all other outcomes. Indication for thrombophilia testing was identified by manual review of provider notes. Thrombophilia tests occurring in situations associated with minimal clinical utility were defined as tests meeting at least one of the following criteria: patient discharged before test results were available for review; test type not recommended by published guidelines or by UUHC Thrombosis Service physicians for thrombophilia testing (Supplementary Table 2); test performed in situations associated with decreased accuracy; test was a duplicate test as a result of different thrombophilia panels containing identical tests; and test followed a provoked venous thromboembolism (VTE). Testing in situations associated with decreased accuracy are summarized in Supplementary Table 3 and included at least one of the following at the time of the test: anticoagulant therapy, acute thrombosis, pregnant or <8 weeks postpartum, and receiving estrogen-containing medications. Only test types known to be affected by the respective situation were included. Testing following a provoked VTE was defined as testing prompted by an acute thrombosis and performed within 3 months following major surgery (defined administratively as any surgery performed in an operating room), during pregnancy, <8 weeks postpartum, or while on estrogen-containing medications. Thrombophilia testing during anticoagulant therapy was defined as testing within 4 half-lives of anticoagulant administration based on medication administration records. Anticoagulant therapy changes were identified by comparing prior-to-admission and discharge medication lists.

Data Analysis

Patient and laboratory characteristics were summarized using descriptive statistics, including mean and standard deviation (SD) for continuous variables and proportions for categorical variables. Data analysis was performed using Excel (Version 2013, Microsoft Corporation. Redmond, Washington).

RESULTS

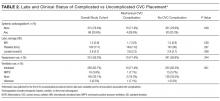

During the 6-month study period, 163 patients received at least 1 thrombophilia test during an ED visit or inpatient admission. Patient characteristics are summarized in Table 1. Tested patients were most commonly inpatients (96%) and female (71%). A total of 1451 thrombophilia tests were performed with a mean (± SD) of 8.9 ± 6.0 tests per patient. Testing characteristics are summarized in Table 2. Of the 39 different test types performed, the most commonly ordered were cardiolipin IgG and IgM antibodies (9% each), lupus anticoagulant (9%), and β2-glycoprotein 1 IgG and IgM antibodies (8% each). When combined with testing for phosphatidyl antibodies, antiphospholipid tests accounted for 70% of all tests. Overall, 134 (9%) test results were positive. The mean time for results to become available was 2.2 ± 2.5 days. The frequency of test types with corresponding positivity rates and mean time for results to become available are summarized in Supplementary Table 4.

The indications for thrombophilia testing are summarized in Table 3. Ischemic stroke was the most common indication for testing (50% of tests; 35% of patients), followed by VTE (21% of tests; 21% of patients), and pregnancy-related conditions (eg, preeclampsia, intrauterine fetal demise; 15% of tests; 25% of patients). Overall, 911 tests (63%) occurred in situations associated with minimal clinical utility, with 126 patients (77%) receiving at least one of these tests (Table 4).

Anticoagulant therapy was changed in 43 patients (26%) in the following ways: initiated in 35 patients (21%), transitioned to a different anticoagulant in 6 patients (4%), and discontinued in 2 patients (1%). Of the 35 patients initiating anticoagulant therapy, 29 had documented thrombosis (24 had VTE, 4 had cerebral venous sinus thrombosis [CVST], and 1 had basilar artery thrombosis). Overall, 2 instances were identified in which initiation of anticoagulant therapy at discharge was in response to thrombophilia test results. In the first instance, warfarin without a parenteral anticoagulant bridge was initiated for a 54-year-old patient with a cryptogenic stroke who tested positive for β2-glycoprotein 1 IgG antibodies, lupus anticoagulant, and protein S deficiency. In the second instance, warfarin with an enoxaparin bridge was initiated for a 26-year-old patient with a cryptogenic stroke who tested positive for β2-glycoprotein 1 IgG and IgM antibodies, cardiolipin IgG antibodies, lupus anticoagulant, protein C deficiency, and antithrombin deficiency. Of the 163 patients receiving thrombophilia testing, only 2 patients (1%) had clear documentation of being offered genetic consultation.

DISCUSSION

In this retrospective analysis, 1451 thrombophilia tests were performed in 163 patients over 6 months. Tested patients were relatively young, which is likely explained by the number of patients tested for pregnancy-related conditions and the fact that a stroke or VTE in younger patients more frequently prompted providers to suspect thrombophilia. Nearly three-fourths of patients were female, which is likely due to testing for pregnancy-related conditions and possibly diagnostic suspicion bias given the comparative predilection of antiphospholipid syndrome for women. The patient characteristics in our study are consistent with other studies evaluating thrombophilia testing.21,22

Thrombophilia testing was most frequently prompted by stroke, VTE, and pregnancy-related conditions. Only 26% of patients had acute thrombosis identified during the admission, primarily because of the high proportion of tests for cryptogenic strokes and pregnancy-related conditions. Thrombophilia testing is recommended in patients who have had a stroke when the stroke is considered to be cryptogenic after a standard stroke evaluation.23 Thrombophilia testing in pregnancy-related conditions is controversial but is often considered in situations such as stillbirths with severe placental pathology and/or significant growth restriction, or in mothers with a personal or family history of thrombosis.24 The proportion of testing for pregnancy-related conditions may be greater than at other institutions because UUHC Maternal Fetal Medicine is a referral center for women with conditions associated with hypercoagulability. Anticoagulant therapy was initiated in 21% of patients, but specifically in response to thrombophilia testing in only 2 instances; in most cases, anticoagulant therapy was initiated regardless of thrombophilia test results.

The results of this study confirm our hypothesis because the majority of thrombophilia tests occurred in situations associated with minimal clinical utility. Testing in these situations was not isolated to specific patients or medical services because 77% of tested patients received at least 1 test associated with minimal clinical utility. Our study took a conservative approach in defining scenarios associated with minimal clinical utility because other situations can also affect testing accuracy (eg, hepatic disease, nephrotic syndrome) but were not included in our analysis of this outcome.

The results of this study highlight opportunities to improve thrombophilia testing practices at our institution and may be generalizable to institutions with similar testing patterns. Because multiple medical services order thrombophilia tests, strategies to improve testing practices are still being determined. The results of this study can serve as a baseline for comparison after strategies are implemented. The most common situation associated with minimal clinical utility was the use of test types not generally recommended by guidelines or UUHC Thrombosis Service physicians for thrombophilia testing (eg, β2-glycoprotein 1 IgA antibodies, phosphatidyl antibodies). We intend to require a hematology or thrombosis specialty consult prior to ordering these tests. This intervention alone could potentially decrease unnecessary testing by a third. Another consideration is to require a specialty consult prior to any inpatient thrombophilia testing. This strategy has been found to decrease inappropriate testing at other institutions.21 We also intend to streamline available thrombophilia testing panels because a poorly designed panel could lead to ordering of multiple tests associated with minimal clinical utility. At least 12 different thrombophilia panels are currently available in our computerized physician order entry system (see Supplementary Table 5). We hypothesize that current panel designs contribute to providers inadvertently ordering unintended or duplicate tests and that reducing the number of available panels and clearly delineating what tests are contained in each panel is likely to reduce unnecessary testing. Other strategies being considered include using electronic clinical decision support tools, implementing strict ordering criteria for all inpatient testing, and establishing a thrombosis stewardship program.

Our study was unique in at least 2 ways. First, previous studies describing thrombophilia testing have described testing patterns for patients with specific indications (eg, VTE), whereas our study described all thrombophilia tests regardless of indication. This allows for testing pattern comparisons across indications and medical services, increasing the generalizability of our results. Second, this study quantifies tests occurring in situations associated with a practical definition of minimal clinical utility.

Our study has several limitations: (1) Many variables were reliant on provider notes and other documentation, which allows for potential misclassification of variables. (2) It was not always possible to determine the ultimate utility of each test in clinical management decisions, and our study did not investigate the impact of thrombophilia testing on duration of anticoagulant therapy. Additionally, select situations could benefit from testing regardless of whether anticoagulant therapy is altered (eg, informing contraceptive choices). (3) Testing performed following a provoked acute thrombosis was defined as testing within 3 months following administratively defined major surgery. This definition could have included some minor procedures that do not substantially increase VTE risk, resulting in underestimated clinical utility. (4) The UUHC University Hospital serves as a referral hospital for a large geographical area, and investigators did not have access to outpatient records for a large proportion of discharged patients. As a result, frequency of repeat testing could not be assessed, possibly resulting in overestimated clinical utility. (5) In categorizing indications for testing, testing for CVST was subcategorized under testing for ischemic stroke based on presenting symptoms rather than on underlying pathophysiology. The rationale for this categorization is that patients with CVST were often tested based on presenting symptoms. Additionally, tests for CVST were ordered by the neurology service, which also ordered tests for all other ischemic stroke indications. (6) The purpose of our study was to investigate the subset of the hospital’s patient population that received thrombophilia testing, and patients were identified by tests received and not by diagnosis codes. As a result, we are unable to provide the proportion of total patients treated at the hospital for specific conditions who were tested (eg, the proportion of stroke patients who received thrombophilia testing). (7) Current practice guidelines do not recommend testing for phosphatidyl antibodies, even when traditional antiphospholipid testing is negative.25-27 Although expert panels continue to explore associations between phosphatidyl antibodies and pregnancy morbidity and thrombotic events, the low level of evidence is insufficient to guide clinical management.28 Therefore, we categorized all phosphatidyl testing as associated with minimal clinical utility.

CONCLUSIONS

In a large academic medical center, the majority of thrombophilia tests occurred in situations associated with minimal clinical utility. Strategies to improve thrombophilia testing practices are needed to minimize potentially inappropriate testing, provide more cost-effective care, and promote value-driven outcomes.

Disclosure

S.W. received financial support for this submitted work via a Bristol-Myers-Squibb grant. G.F. received financial support from Portola Pharmaceuticals for consulting and lectures that were not related to this submitted work.

METHODS

Setting and Patients

This study was conducted at University of Utah Health Care (UUHC) University Hospital, a 488-bed academic medical center with a level I trauma center, primary stroke center, and 50-bed ED. Laboratory services for UUHC, including thrombophilia testing, are provided by a national reference laboratory, Associated Regional and University Pathologists Laboratories. This study included patients ≥18 years of age who received thrombophilia testing (Supplementary Table 1) during an ED visit or inpatient admission at University Hospital between July 1, 2014 and December 31, 2014. There were no exclusion criteria. An institutional electronic data repository was used to identify patients matching inclusion criteria. All study activities were reviewed and approved by the UUHC Institutional Review Board with a waiver of informed consent.

Outcomes

An electronic database query was used to identify patients, collect patient demographic information, and collect test characteristics. Each patient’s electronic medical record was manually reviewed to collect all other outcomes. Indication for thrombophilia testing was identified by manual review of provider notes. Thrombophilia tests occurring in situations associated with minimal clinical utility were defined as tests meeting at least one of the following criteria: patient discharged before test results were available for review; test type not recommended by published guidelines or by UUHC Thrombosis Service physicians for thrombophilia testing (Supplementary Table 2); test performed in situations associated with decreased accuracy; test was a duplicate test as a result of different thrombophilia panels containing identical tests; and test followed a provoked venous thromboembolism (VTE). Testing in situations associated with decreased accuracy are summarized in Supplementary Table 3 and included at least one of the following at the time of the test: anticoagulant therapy, acute thrombosis, pregnant or <8 weeks postpartum, and receiving estrogen-containing medications. Only test types known to be affected by the respective situation were included. Testing following a provoked VTE was defined as testing prompted by an acute thrombosis and performed within 3 months following major surgery (defined administratively as any surgery performed in an operating room), during pregnancy, <8 weeks postpartum, or while on estrogen-containing medications. Thrombophilia testing during anticoagulant therapy was defined as testing within 4 half-lives of anticoagulant administration based on medication administration records. Anticoagulant therapy changes were identified by comparing prior-to-admission and discharge medication lists.

Data Analysis

Patient and laboratory characteristics were summarized using descriptive statistics, including mean and standard deviation (SD) for continuous variables and proportions for categorical variables. Data analysis was performed using Excel (Version 2013, Microsoft Corporation. Redmond, Washington).

RESULTS

During the 6-month study period, 163 patients received at least 1 thrombophilia test during an ED visit or inpatient admission. Patient characteristics are summarized in Table 1. Tested patients were most commonly inpatients (96%) and female (71%). A total of 1451 thrombophilia tests were performed with a mean (± SD) of 8.9 ± 6.0 tests per patient. Testing characteristics are summarized in Table 2. Of the 39 different test types performed, the most commonly ordered were cardiolipin IgG and IgM antibodies (9% each), lupus anticoagulant (9%), and β2-glycoprotein 1 IgG and IgM antibodies (8% each). When combined with testing for phosphatidyl antibodies, antiphospholipid tests accounted for 70% of all tests. Overall, 134 (9%) test results were positive. The mean time for results to become available was 2.2 ± 2.5 days. The frequency of test types with corresponding positivity rates and mean time for results to become available are summarized in Supplementary Table 4.

The indications for thrombophilia testing are summarized in Table 3. Ischemic stroke was the most common indication for testing (50% of tests; 35% of patients), followed by VTE (21% of tests; 21% of patients), and pregnancy-related conditions (eg, preeclampsia, intrauterine fetal demise; 15% of tests; 25% of patients). Overall, 911 tests (63%) occurred in situations associated with minimal clinical utility, with 126 patients (77%) receiving at least one of these tests (Table 4).

Anticoagulant therapy was changed in 43 patients (26%) in the following ways: initiated in 35 patients (21%), transitioned to a different anticoagulant in 6 patients (4%), and discontinued in 2 patients (1%). Of the 35 patients initiating anticoagulant therapy, 29 had documented thrombosis (24 had VTE, 4 had cerebral venous sinus thrombosis [CVST], and 1 had basilar artery thrombosis). Overall, 2 instances were identified in which initiation of anticoagulant therapy at discharge was in response to thrombophilia test results. In the first instance, warfarin without a parenteral anticoagulant bridge was initiated for a 54-year-old patient with a cryptogenic stroke who tested positive for β2-glycoprotein 1 IgG antibodies, lupus anticoagulant, and protein S deficiency. In the second instance, warfarin with an enoxaparin bridge was initiated for a 26-year-old patient with a cryptogenic stroke who tested positive for β2-glycoprotein 1 IgG and IgM antibodies, cardiolipin IgG antibodies, lupus anticoagulant, protein C deficiency, and antithrombin deficiency. Of the 163 patients receiving thrombophilia testing, only 2 patients (1%) had clear documentation of being offered genetic consultation.
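The percentages reported in this section follow directly from the stated counts. As a quick arithmetic check, the sketch below recomputes them in Python; the counts are copied from the text above, while the helper name and dictionary layout are illustrative assumptions (the authors report using Excel).

```python
# Counts are taken from the Results text; the structure here is illustrative only.
TOTAL_TESTS = 1451
TOTAL_PATIENTS = 163


def pct(count: int, total: int) -> int:
    """Percentage of total, rounded to the nearest whole number."""
    return round(100 * count / total)


summary = {
    "tests per patient (mean)": round(TOTAL_TESTS / TOTAL_PATIENTS, 1),
    "positive results (% of tests)": pct(134, TOTAL_TESTS),
    "minimal-utility tests (% of tests)": pct(911, TOTAL_TESTS),
    "patients with >=1 minimal-utility test (%)": pct(126, TOTAL_PATIENTS),
    "anticoagulant therapy changed (% of patients)": pct(43, TOTAL_PATIENTS),
    "anticoagulant therapy initiated (% of patients)": pct(35, TOTAL_PATIENTS),
}

for label, value in summary.items():
    print(f"{label}: {value}")
```

The recomputed values agree with the reported 8.9 tests per patient and the 9%, 63%, 77%, 26%, and 21% proportions.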

1. Franco RF, Reitsma PH. Genetic risk factors of venous thrombosis. Hum Genet. 2001;109(4):369-384.

2. Ridker PM, Hennekens CH, Lindpaintner K, Stampfer MJ, Eisenberg PR, Miletich JP. Mutation in the gene coding for coagulation factor V and the risk of myocardial infarction, stroke, and venous thrombosis in apparently healthy men. N Engl J Med. 1995;332(14):912-917.

3. Koster T, Rosendaal FR, de Ronde H, Briët E, Vandenbroucke JP, Bertina RM. Venous thrombosis due to poor anticoagulant response to activated protein C: Leiden Thrombophilia Study. Lancet. 1993;342(8886-8887):1503-1506.

4. Margaglione M, Brancaccio V, Giuliani N, et al. Increased risk for venous thrombosis in carriers of the prothrombin G-->A20210 gene variant. Ann Intern Med. 1998;129(2):89-93.

5. De Stefano V, Martinelli I, Mannucci PM, et al. The risk of recurrent deep venous thrombosis among heterozygous carriers of both factor V Leiden and the G20210A prothrombin mutation. N Engl J Med. 1999;341:801-806.

6. Dickey TL. Can thrombophilia testing help to prevent recurrent VTE? Part 2. JAAPA. 2002;15(12):23-24, 27-29.

7. Simpson EL, Stevenson MD, Rawdin A, Papaioannou D. Thrombophilia testing in people with venous thromboembolism: systematic review and cost-effectiveness analysis. Health Technol Assess. 2009;13(2):iii, ix-x, 1-91.

8. National Institute for Health and Clinical Excellence. Venous thromboembolic disease: the management of venous thromboembolic diseases and the role of thrombophilia testing. NICE clinical guideline 144. https://www.nice.org.uk/guidance/cg144. Accessed on June 30, 2017.

9. Evaluation of Genomic Applications in Practice and Prevention (EGAPP) Working Group. Recommendations from the EGAPP Working Group: routine testing for factor V Leiden (R506Q) and prothrombin (20210G>A) mutations in adults with a history of idiopathic venous thromboembolism and their adult family members. Genet Med. 2011;13(1):67-76.

10. Kearon C, Akl EA, Comerota AJ, et al. Antithrombotic therapy for VTE disease: antithrombotic therapy and prevention of thrombosis, 9th ed: American College of Chest Physicians evidence-based clinical practice guidelines. Chest. 2012;141(2 Suppl):e419S-494S.

11. Baglin T, Gray E, Greaves M, et al. Clinical guidelines for testing for heritable thrombophilia. Br J Haematol. 2010;149(2):209-220.

12. Hicks LK, Bering H, Carson KR, et al. The ASH Choosing Wisely® campaign: five hematologic tests and treatments to question. Hematology Am Soc Hematol Educ Program. 2013;2013:9-14.

13. Stevens SM, Woller SC, Bauer KA, et al. Guidance for the evaluation and treatment of hereditary and acquired thrombophilia. J Thromb Thrombolysis. 2016;41(1):154-164.

14. Christiansen SC, Cannegieter SC, Koster T, Vandenbroucke JP, Rosendaal FR. Thrombophilia, clinical factors, and recurrent venous thrombotic events. JAMA. 2005;293(19):2352-2361.

15. Prandoni P, Lensing AW, Cogo A, et al. The long-term clinical course of acute deep venous thrombosis. Ann Intern Med. 1996;125(1):1-7.

16. Miles JS, Miletich JP, Goldhaber SZ, Hennekens CH, Ridker PM. G20210A mutation in the prothrombin gene and the risk of recurrent venous thromboembolism. J Am Coll Cardiol. 2001;37(1):215-218.

17. Eichinger S, Weltermann A, Mannhalter C, et al. The risk of recurrent venous thromboembolism in heterozygous carriers of factor V Leiden and a first spontaneous venous thromboembolism. Arch Intern Med. 2002;162(20):2357-2360.

18. Mazzolai L, Duchosal MA. Hereditary thrombophilia and venous thromboembolism: critical evaluation of the clinical implications of screening. Eur J Vasc Endovasc Surg. 2007;34(4):483-488.

19. Merriman L, Greaves M. Testing for thrombophilia: an evidence-based approach. Postgrad Med J. 2006;82(973):699-704.

20. Favaloro EJ, McDonald D, Lippi G. Laboratory investigation of thrombophilia: the good, the bad, and the ugly. Semin Thromb Hemost. 2009;35(7):695-710.

21. Shen YM, Tsai J, Taiwo E, et al. Analysis of thrombophilia test ordering practices at an academic center: a proposal for appropriate testing to reduce harm and cost. PLoS One. 2016;11(5):e0155326.

22. Meyer MR, Witt DM, Delate T, et al. Thrombophilia testing patterns amongst patients with acute venous thromboembolism. Thromb Res. 2015;136(6):1160-1164.

23. Saver JL. Clinical practice: cryptogenic stroke. N Engl J Med. 2016;374(21):2065-2074.

24. ACOG practice bulletin no. 102: management of stillbirth. Obstet Gynecol. 2009;113(3):748-761.

25. Miyakis S, Lockshin MD, Atsumi T, et al. International consensus statement on an update of the classification criteria for definite antiphospholipid syndrome (APS). J Thromb Haemost. 2006;4(2):295-306.

26. Keeling D, Mackie I, Moore GW, Greer IA, Greaves M, British Committee for Standards in Haematology. Guidelines on the investigation and management of antiphospholipid syndrome. Br J Haematol. 2012;157(1):47-58.

27. Committee on Practice Bulletins—Obstetrics, American College of Obstetricians and Gynecologists. Practice bulletin no. 132: antiphospholipid syndrome. Obstet Gynecol. 2012;120(6):1514-1521.

28. Bertolaccini ML, Amengual O, Andreoli L, et al. 14th International Congress on Antiphospholipid Antibodies Task Force. Report on antiphospholipid syndrome laboratory diagnostics and trends. Autoimmun Rev. 2014;13(9):917-930.

© 2017 Society of Hospital Medicine

A Randomized Controlled Trial of a CPR Decision Support Video for Patients Admitted to the General Medicine Service

Discussions about cardiopulmonary resuscitation (CPR) can be difficult due to their association with end of life. The Patient Self-Determination Act (H.R.4449, 101st Congress [1989-1990]) and institutional standards mandate collaboration between care providers and patients regarding goals of care in emergency situations such as cardiopulmonary arrest. The default option is to provide CPR, which may involve chest compressions, intubation, and/or defibrillation. Yet numerous studies show that a significant number of patients have no code preference documented in their medical chart, and even fewer report a conversation with their care provider about their wishes regarding CPR.1-3 CPR is an invasive and potentially painful procedure with a higher chance of failure than success,4 and yet many patients report that their provider did not discuss with them the risks and benefits of resuscitation.5,6 The importance of individual discussions about CPR preferences is further underscored by the fact that factors such as age and disease burden skew the likelihood of survival after cardiopulmonary arrest.7

Complicating the lack of appropriate provider and patient discussion of the risks and benefits of resuscitation are significant misunderstandings about CPR in the lay population. Patients routinely overestimate the likelihood of survival following CPR.8,9 This may be partially due to the portrayal of CPR in the lay media as highly efficacious.10 Other factors known to prevent effective provider-and-patient discussions about CPR preferences are providers’ discomfort with the subject11 and perceived time constraints.12

Informational videos have been developed to assist patients with decision making about CPR and have been shown to impact patients’ choices in the setting of life-limiting diseases such as advanced cancer,13,14 serious illness with a prognosis of less than 1 year,15 and dementia.16 While discussion of code status is vitally important in end-of-life planning for seriously ill individuals, delayed discussion of CPR preferences is associated with a significant increase in the number of invasive procedures performed at the end of life, increased length of stay in the hospital, and increased medical cost.17 Despite clear evidence that earlier discussion of resuscitation options is valuable, no studies have examined the impact of a video about code status options in the general patient population.

Here we present our findings of a randomized trial in patients hospitalized on the general medicine wards who were 65 years of age or older, regardless of illness severity or diagnosis. The video tool was a supplement to, rather than a replacement for, standard provider and patient communication about code preferences, and we compared patients who watched the video against controls who had standard discussions with their providers. Our video detailed the process of chest compressions and intubation during CPR and explained the differences between the code statuses: full code, do not resuscitate (DNR), and do not resuscitate/do not intubate (DNR/DNI). We found a significant difference between the 2 groups, with more individuals in the video group choosing DNR/DNI. These findings suggest that video support tools may be a useful supplement to traditional provider discussions about code preferences in the general patient population.

METHODS

We enrolled patients from the general medicine wards at the Minneapolis VA Hospital from September 28, 2015 to October 23, 2015. Eligibility criteria included age 65 years or older, ability to provide informed consent, and ability to communicate in English. Study recruitment and data collection were performed by a study coordinator who was a house staff physician and had no role in the care of the participants. The medical charts of all general medicine patients were reviewed to determine if they met the age criteria. The physician of record for potential participants was contacted to assess if the patient was able to provide informed consent and communicate in English. Eligible patients were approached and informed consent was obtained from those who chose to participate in the study. After obtaining informed consent, patients were randomized using a random number generator to the intervention or usual-care arm of the study.
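The allocation step described above can be sketched as follows. This is a minimal illustration of simple (unrestricted) randomization, assuming each consented patient is assigned independently with equal probability; the source states only that a random number generator was used, so the function name `randomize`, the seed, and the 1:1 scheme are hypothetical.

```python
import random

ARMS = ("intervention", "usual care")

def randomize(n_participants, seed):
    """Assign each participant to an arm with probability 1/2 (simple randomization).

    Hypothetical sketch: the study's actual allocation procedure is not described
    beyond the use of a random number generator.
    """
    rng = random.Random(seed)  # a fixed seed makes the allocation list reproducible
    return [ARMS[0] if rng.random() < 0.5 else ARMS[1] for _ in range(n_participants)]

# Example: allocate 10 hypothetical participants.
assignments = randomize(10, seed=2015)
for participant_id, arm in enumerate(assignments, start=1):
    print(participant_id, arm)
```

Simple randomization does not guarantee equal arm sizes, so small imbalances between arms are expected under a scheme like this one.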

Those who were assigned to the intervention arm watched a 6-minute video explaining the code-preference choices of full code, DNR, or DNR/DNI. Full code was described as possibly including CPR, intubation, and/or defibrillation depending on the clinical situation. Do not resuscitate was described as meaning no CPR or defibrillation but possible intubation in the case of respiratory failure. Do not resuscitate/do not intubate was explained as meaning no CPR, no defibrillation, and no intubation but rather permitting “natural death” to occur. The video showed a mock code with chest compressions, defibrillation, and intubation on a mannequin as well as palliative care specialists who discussed potential complications and survival rates of in-hospital resuscitation.

The video was created at the University of Minnesota with the departments of palliative care and internal medicine (www.mmcgmeservices.org/codestat.html). After viewing the video, participants in the intervention arm filled out a questionnaire designed to assess their knowledge and beliefs about CPR and trust in their medical care providers. They were asked to circle their code preference. The participants’ medical teams were made aware of the code preferences and were counseled to discuss code preferences further if they differed from the previously documented code preference.

Participants in the control arm were assigned to usual care. At the institution where this study occurred, a discussion about code preferences between the patient and their medical team is considered the standard of care. After informed consent was obtained, participants filled out the same questionnaire as the participants in the intervention arm. They were asked to circle their code status preference. If they chose to ask questions about resuscitation, these were answered, but the study coordinator did not volunteer information about resuscitation or intervene in the medical care of the participants in any way.

All participants’ demographic characteristics and outcomes were described using proportions for categorical variables and means ± standard deviation for continuous variables. The primary outcome was participants’ stated code preference (full code, DNR, or DNR/DNI). Secondary outcomes included comparison of trust in medical providers, resuscitation beliefs, and desire for life-prolonging interventions as obtained from the questionnaire.

We analyzed code preferences between the intervention and control groups using the Fisher exact test. We used analysis of variance (ANOVA) to compare questionnaire responses between the 2 groups. All reported P values are 2-sided with P < 0.05 considered significant. The project originally targeted a sample size of 194 participants for 80% power to detect a 20% difference in the code preference choices between intervention and control groups. Given the short time frame available to enroll participants, the target sample size was not reached. However, the observed effect size was greater than originally expected.
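To make the sample-size reasoning concrete, the sketch below applies the standard normal-approximation formula for comparing 2 independent proportions. The source does not report which formula or which baseline proportions were used, so the inputs here (40% vs 60%, read as an absolute 20-percentage-point difference) and the function name `n_per_arm` are assumptions for illustration only.

```python
from math import ceil, sqrt
from statistics import NormalDist

def n_per_arm(p1, p2, alpha=0.05, power=0.80):
    """Participants needed per arm to detect p1 vs p2 with a 2-sided test.

    Uses the pooled-variance normal approximation; no continuity correction.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the 2-sided test
    z_beta = NormalDist().inv_cdf(power)           # quantile matching the desired power
    p_bar = (p1 + p2) / 2                          # pooled proportion under the null
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return ceil(numerator / (p1 - p2) ** 2)

# Hypothetical inputs: 40% vs 60% choosing a given code status.
per_arm = n_per_arm(0.40, 0.60)
print(per_arm, 2 * per_arm)
```

With these illustrative inputs the formula gives 97 per arm, or 194 in total, which coincides with the stated target. That agreement does not establish that the authors used these particular proportions or this formula.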

RESULTS

Study Participants

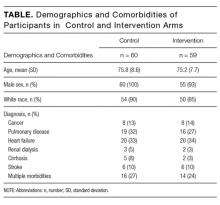

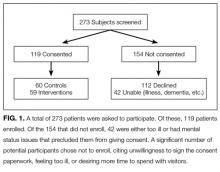

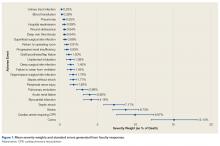

A total of 273 potentially eligible patients were approached to participate and 119 (44%) enrolled (Figure 1). Of the 154 patients who were deemed eligible after initial screening but did not enroll, 42 were unable to give consent due to the severity of their illness or because of their mental status. Another 112 patients declined participation in the study, citing reasons such as lack of interest in the consent paperwork, desire to spend time with visitors, and unease with the subject matter. Patients who declined participation did not differ significantly by age, sex, or race from those enrolled in the study.

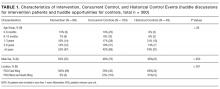

Among the 119 participants, 60 were randomized to the control arm, and 59 were randomized to the intervention arm. Participants in the 2 arms did not differ significantly in age, sex, or race (P > 0.05), although all 4 women in the study were randomized to the intervention arm. Eighty-seven percent of the study population identified as white, with the remainder identifying as black, Asian, Pacific Islander, or Native American, or declining to answer. The mean age was 75.8 years in the control arm vs. 75.2 years in the intervention arm.

Primary diagnoses in the study group ranged widely from relatively minor skin infections to acute pancreatitis. The control arm and the intervention arm did not differ significantly in the incidence of heart failure, pulmonary disease, renal dialysis, cirrhosis, stroke, or active cancer (P > 0.05). Patients were considered as having a stroke if they had suffered a stroke during their hospital admission or if they had long-term sequelae of prior stroke. Patients were considered as having active cancer if they were currently undergoing treatment or had metastases. Participants were considered as having multiple morbidities if they possessed 2 or more of the listed conditions. Between the control arm and the intervention arm, there was no significant difference in the number of participants with multiple morbidities (27% in the control group and 24% in the video group).

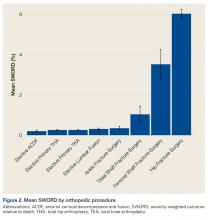

Code Status Preference

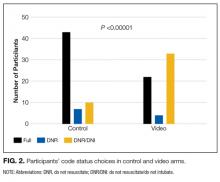

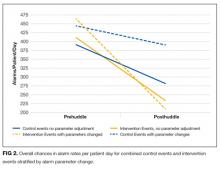

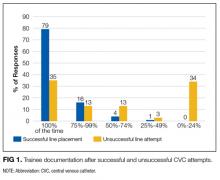

There was a significant difference in the code status preferences of the intervention arm and the control arm (P < 0.00001; Figure 2). In the control arm, 71% of participants chose full code, 12% chose DNR, and 17% chose DNR/DNI. In the intervention arm, only 37% chose full code, 7% chose DNR, and 56% chose DNR/DNI.
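The comparison above involves a 2 × 3 table (arm by code status), for which the Fisher exact test generalizes to the Fisher-Freeman-Halton test. The sketch below is a minimal pure-Python version, not the authors' analysis code. The cell counts are not reported in the text; the ones used here are approximate back-calculations from the reported percentages and arm sizes (60 and 59) and should be treated as assumptions, so the resulting P value need not match the published one.

```python
from math import comb

def first_rows(col_totals, row_total):
    """Yield every possible first row of a 2 x k table with the given margins."""
    if len(col_totals) == 1:
        if 0 <= row_total <= col_totals[0]:
            yield (row_total,)
        return
    for count in range(min(col_totals[0], row_total) + 1):
        for rest in first_rows(col_totals[1:], row_total - count):
            yield (count,) + rest

def exact_p_value(table):
    """2-sided exact P value for a 2 x k table, conditioning on both margins.

    Sums the probabilities of all tables that are no more likely than the
    observed one. Integer weights keep the comparison free of rounding error.
    """
    row1, row2 = table
    col_totals = [a + b for a, b in zip(row1, row2)]
    row_total = sum(row1)

    def weight(first_row):
        # Numerator of the multivariate hypergeometric probability.
        w = 1
        for count, col_total in zip(first_row, col_totals):
            w *= comb(col_total, count)
        return w

    observed = weight(row1)
    extreme = sum(w for w in map(weight, first_rows(col_totals, row_total)) if w <= observed)
    return extreme / comb(sum(col_totals), row_total)

# ASSUMED counts (full code, DNR, DNR/DNI), reconstructed from percentages.
control = [43, 7, 10]       # approx. 71%, 12%, 17% of 60
intervention = [22, 4, 33]  # approx. 37%, 7%, 56% of 59
p_value = exact_p_value([control, intervention])
print(f"P = {p_value:.2g}")
```

Enumerating every table is feasible here because the table is small; for larger tables a statistics package with a dedicated exact or Monte Carlo routine would be the more practical choice.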

Secondary Outcomes

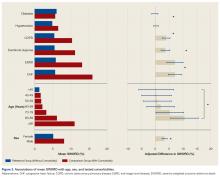

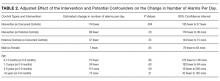

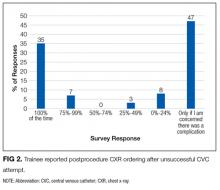

Participants in the control and intervention arms were asked about their trust in their medical team (Question 1, Figure 3). There was no significant difference, but a trend towards less trust in the intervention group (P = 0.083) was seen with 93% of the control arm and 76% of the intervention arm agreeing with the statement “My doctors and healthcare team want what is best for me.”

Question 2, “If I choose to avoid resuscitation efforts, I will not receive care,” was designed to assess participants’ knowledge and perception about the care they would receive if they chose DNR/DNI as their code status. No significant difference was seen between the control and intervention arms, with 28% of the control group agreeing with the statement, compared to 22% of the video group.

For question 3, participants were asked to respond to the statement “I would like to live as long as possible, even if I never leave the hospital.” No significant differences were seen between the control and the intervention arms, with 22% of both groups agreeing with the statement.

When we examined participant responses by the code status chosen, a significantly higher percentage of participants who chose full code agreed with the statement in question 3 (P = 0.0133). Of participants who chose full code, 27% agreed with the statement, compared to 18% of participants who chose DNR and 12% of participants who chose DNR/DNI. There was no significant association (P > 0.05) between participants’ code status choice and their responses to either Question 1 or Question 2.

DISCUSSION

This study examined the effect of watching a video about CPR and intubation on the code status preferences of hospitalized patients. Participants who viewed a video about CPR and intubation were more likely to choose to forgo these treatments. Participants who chose CPR and intubation were more likely to agree that they would want to live as long as possible even if that time were spent in a medical setting.

To our knowledge, this is the first study to examine the role of a video decision support tool about code choices in the general hospital population, regardless of prognosis. Previous work has trialed the use of video support tools in hospitalized patients with a prognosis of less than 1 year,15 patients admitted to the ICU,18 outpatients with cancer,18 and those with dementia.16 Unlike previous studies, our study included patients with a wide range of illness severity.

Discussions about resuscitation are important for all adults admitted to the hospital because of the unpredictable nature of illness and the importance of providing high-quality care at the end of life. A recent study indicates that in-hospital cardiopulmonary arrest occurs in almost 1 per 1000 hospital days.19 These discussions are particularly salient for patients 65 years and older because of the higher incidence of death in this group. Inpatient admission is often a result of a change in health status, making it an important time for patients to reassess their resuscitation preferences based on their physical state and known comorbidities.

Video tools supplement the traditional code status discussion in several key ways. They provide a visual simulation of the procedures that occur during a typical resuscitation. These tools can help patients understand what CPR and intubation entail and transmit information that might be missed in verbal discussions. Visual media is now a common way for patients to obtain medical information20-22 and may be particularly helpful to patients who have low health literacy.23 Video tools also help ensure that patients receive all the facts about resuscitation irrespective of how busy their provider may be or how comfortable the provider is with the topic. Lastly, video tools can reinforce information that is shared in the initial code status discussion. Given the significant differences in code status preference between our control and video arms, it is clear that the video tool has a significant impact on patient choices.