
Do HCAHPS doctor communication scores reflect the communication skills of the attending on record? A cautionary tale from a tertiary-care medical service

Communication is the foundation of medical care.1 Effective communication can improve health outcomes, safety, adherence, satisfaction, and trust, and can enable genuine informed consent and decision-making.2-9 Furthermore, high-quality communication increases provider engagement and workplace satisfaction while reducing stress and malpractice risk.10-15

Direct measurement of communication in the healthcare setting can be challenging. The “Four Habits Model,” which is derived from a synthesis of empiric studies8,16-20 and theoretical models21-24 of communication, offers 1 framework for assessing healthcare communication. The conceptual model underlying the 4 habits has been validated in studies of physician and patient satisfaction.1,4,25-27 The 4 habits are: investing in the beginning, eliciting the patient’s perspective, demonstrating empathy, and investing in the end. Each habit is divided into several identifiable tasks or skill sets, which can be reliably measured using validated tools and checklists.28 One such instrument, the Four Habits Coding Scheme (4HCS), has been evaluated against other tools and demonstrated overall satisfactory inter-rater reliability and validity.29,30

The Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey, developed under the direction of the Centers for Medicare and Medicaid Services (CMS) and the Agency for Healthcare Research and Quality, is an established national standard for measuring patient perceptions of care. HCAHPS retrospectively measures global perceptions of communication, support and empathy from physicians and staff, processes of care, and the overall patient experience. HCAHPS scores were first collected nationally in 2006 and have been publicly reported since 2008.31 With the introduction of value-based purchasing in 2012, health system revenues are now tied to HCAHPS survey performance.32 As a result, hospitals are financially motivated to improve HCAHPS scores but lack evidence-based methods for doing so. Some healthcare organizations have invested in communication training programs based on the available literature and best practices.2,33-35 However, it is not known how, if at all, HCAHPS scores relate to physicians’ real-time observed communication skills.

To examine the relationship between physician communication, as reported by global HCAHPS scores, and the quality of physician communication skills in specific encounters, we observed hospitalist physicians during inpatient bedside rounds and measured their communication skills using the 4HCS.

METHODS

Study Design

The study used a cross-sectional design: physicians who consented were observed on rounds during 3 separate encounters, and we compared hospitalists’ 4HCS scores with their HCAHPS scores to assess the correlation. The study was approved by the Institutional Review Board of the Cleveland Clinic.

Population

The study was conducted at the main campus of the Cleveland Clinic. All physicians specializing in hospital medicine who had received 10 or more completed HCAHPS survey responses while rounding on a medicine service in the past year were invited to participate in the study. Participation was voluntary; night hospitalists were excluded. A research nurse was trained in the Four Habits Model28 and in the use of the 4HCS coding scheme by the principal investigator. The nurse observed each physician and ascertained the presence of communication behaviors using the 4HCS tool. Physicians were observed between August 2013 and August 2014. Multiple observations per physician could occur on the same day, but only 1 observation per patient was used for analysis. Observations consisted of a physician’s first encounter with a hospitalized patient, with the patient’s consent. Observations were conducted during encounters with English-speaking and cognitively intact patients only. Resident physicians were permitted to stay and conduct rounds per their normal routine. Patient information was not collected as part of the study.

Measures

HCAHPS. For each physician, we extracted all HCAHPS scores that were collected from our hospital’s Press Ganey database. The HCAHPS survey contains 22 core questions divided into 7 themes or domains, 1 of which is doctor communication. The survey uses frequency-based questions with possible answers fixed on a 4-point scale (4=always, 3=usually, 2=sometimes, 1=never). Our primary outcome was the doctor communication domain, which comprises 3 questions: 1) During this hospital stay, how often did the doctors treat you with respect? 2) During this hospital stay, how often did the doctors listen to you? and 3) During this hospital stay, how often did the doctors explain things in a language you can understand? Because CMS counts only the percentage of responses that are graded “always,” so-called “top box” scoring, we used the same measure.
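As a minimal illustration of the top-box scoring described above, the following Python sketch computes the percentage of responses graded “always” for a single question. The function name and the example responses are hypothetical and are not drawn from the study data.

```python
from typing import Iterable

# Response coding from the survey's 4-point scale:
# 4 = always, 3 = usually, 2 = sometimes, 1 = never
ALWAYS = 4


def top_box_percent(responses: Iterable[int]) -> float:
    """Return the percentage of responses graded "always" (top box)."""
    answers = list(responses)
    if not answers:
        raise ValueError("at least one response is required")
    top_box_count = sum(1 for answer in answers if answer == ALWAYS)
    return 100.0 * top_box_count / len(answers)


# Hypothetical responses to one doctor communication question
example_responses = [4, 4, 3, 4, 2, 4, 4, 1, 4, 4]
print(top_box_percent(example_responses))  # 7 of 10 are "always" -> 70.0
```

Under this scoring, a response of “usually” counts the same as “never,” which is why top-box percentages can differ markedly from mean scores on the same 4-point scale.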

The HCAHPS scores are always attributed to the physician at the time of discharge, even if that physician was not responsible for the care of the patient during the entire hospital course. To mitigate contamination from patients seen by multiple providers, we cross-matched length of stay (LOS) data with billing data to determine the proportion of days a patient was seen by a single provider during the entire LOS. We stratified patients by the proportion of the LOS during which they were seen by the attending provider: less than 50%, 50% to less than 100%, and 100%. However, we were unable to identify which patients were seen by other consultants or by residents because of limitations in data gathering and the nature of the database.
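The stratification by attending involvement can be sketched as follows. This is an illustrative assumption about how the three strata might be assigned from a count of days billed by the discharging attending and the total LOS in days; the function and argument names are hypothetical, and the study's actual matching of billing data is not reproduced here.

```python
def los_stratum(days_seen_by_attending: int, los_days: int) -> str:
    """Assign a patient to one of the three strata of attending involvement."""
    if los_days <= 0:
        raise ValueError("length of stay must be positive")
    if not 0 <= days_seen_by_attending <= los_days:
        raise ValueError("days seen must be between 0 and the length of stay")
    if days_seen_by_attending == los_days:
        return "100%"
    # Integer comparison avoids floating-point issues at the 50% boundary.
    if 2 * days_seen_by_attending >= los_days:
        return "50% to <100%"
    return "<50%"


# Hypothetical patients: (days seen by the discharging attending, LOS in days)
for days_seen, los in [(5, 5), (3, 5), (2, 5)]:
    print(days_seen, los, los_stratum(days_seen, los))
```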

The Four Habits. The Four Habits are: invest in the beginning, elicit the patient’s perspective, demonstrate empathy, and invest in the end (Figure 1). Specific behaviors for Habits 1 to 4 are outlined in the Appendix, but we briefly describe the themes here. Habit 1, invest in the beginning, describes the ability of the physician to set a welcoming environment for the patient, establish rapport, and collaborate on an agenda for the visit. Habit 2, elicit the patient’s perspective, describes the ability of the physician to explore the patients’ worries, ideas, expectations, and the impact of the illness on their lifestyle. Habit 3, demonstrate empathy, describes the physician’s openness to the patient’s emotions as well as the ability to explore, validate, express curiosity about, and openly accept these feelings. Habit 4, invest in the end, is a measure of the physician’s ability to counsel patients in a language built around their original concerns or worries, as well as the ability to check the patients’ understanding of the plan.2,29,30

4HCS. The 4HCS tool (Appendix) measures discrete behaviors and phrases based on each of the Four Habits (Figure 1). The rater at the bedside assigns each habit a score on a scale of 1 to 5, so the total score ranges from a low of 4 to a high of 20. It is an instrument based on a teaching model used widely throughout Kaiser Permanente to improve clinicians’ communication skills. The 4HCS was first tested for interrater reliability and validity against the Roter Interaction Analysis System using 100 videotaped primary care physician encounters.29 It was further evaluated in a randomized controlled trial. Videotapes from 497 hospital encounters involving 71 doctors from a variety of clinical specialties were rated by 4 trained raters using the coding scheme. The total score Pearson’s r and intraclass correlation coefficient (ICC) exceeded 0.70 for all pairs of raters, and the interrater reliability was satisfactory for the 4HCS as applied to heterogeneous material.30

Statistical Analysis

Physician characteristics were summarized with standard descriptive statistics. Pearson correlation coefficients were computed between HCAHPS and 4HCS scores. All analyses were performed with RStudio (Boston, MA). The Pearson correlation between the averaged HCAHPS and 4HCS scores was also computed. A correlation with a P value less than 0.05 was considered statistically significant. With 28 physicians, the study had a power of 88% to detect a moderate correlation (greater than 0.50) with a 2-sided alpha of 0.05. We also computed the correlations based on the subgroups of data with patients seen by providers for less than 50%, 50% to less than 100%, and 100% of LOS. All analyses were conducted in SAS 9.2 (SAS Institute Inc., Cary, NC).36

RESULTS

There were 31 physicians who met our inclusion criteria. Of 29 volunteers, 28 were observed during 3 separate inpatient encounters and made up the final sample. A total of 1003 HCAHPS survey responses were available for these physicians. Participants were predominantly female (60.7%), with an average age of 39 years. They were in practice for an average of 4 years (12 were in practice more than 5 years), and 9 were observed on a teaching rotation.

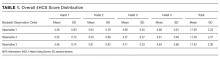

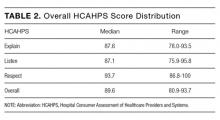

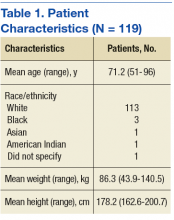

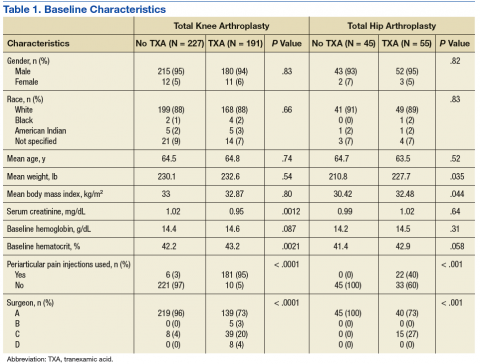

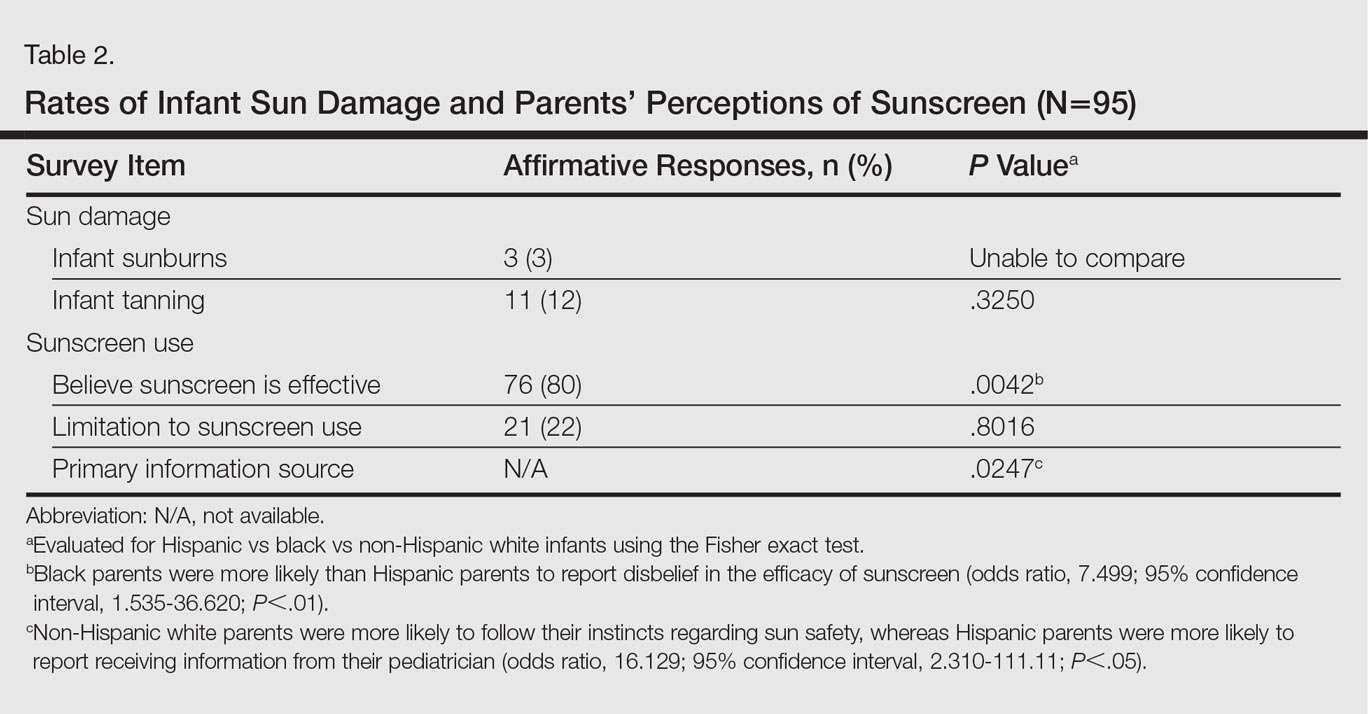

The means of the overall 4HCS scores per observation were 17.39 ± 2.33 for the first, 17.00 ± 2.37 for the second, and 17.43 ± 2.36 for the third bedside observation. The mean 4HCS scores per observation, broken down by habit, appear in Table 1. The ICC among the repeated scores within the same physician was 0.81. The median number of HCAHPS survey returns was 32 (range = [8, 85], with mean = 35.8, interquartile range = [16, 54]). The median overall HCAHPS doctor communication score was 89.6 (range = 80.9-93.7). Participants scored the highest in the respect subdomain and the lowest in the explain subdomain. Median HCAHPS scores and ranges appear in Table 2.

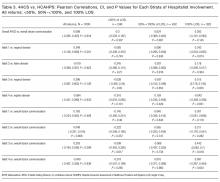

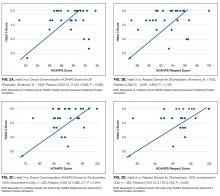

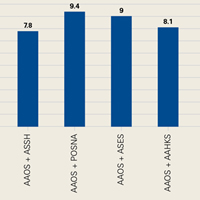

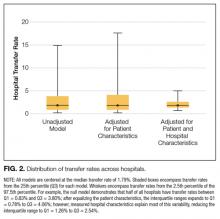

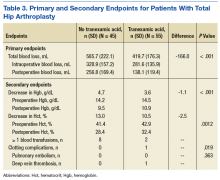

Because there were no significant associations between 4HCS scores or HCAHPS scores and physician age, sex, years in practice, or teaching site, correlations were not adjusted. Figures 2A and 2B show the association between mean 4HCS scores and HCAHPS scores by physician. There was no significant correlation between overall 4HCS and HCAHPS doctor communication scores (Pearson correlation coefficient 0.098; 95% confidence interval [CI], -0.285, 0.455). The individual habits also were not correlated with overall HCAHPS scores or with their corresponding HCAHPS domain (Table 3).
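For readers who wish to see how a confidence interval of this kind can be obtained, the sketch below applies the standard Fisher z-transformation to a Pearson correlation coefficient. That this method was used in the study is an assumption; the text does not state how the intervals were computed. With r = 0.098 and n = 28 physicians, the result agrees with the reported interval to about 2 decimal places, and small differences are expected because the published coefficient is rounded.

```python
import math
from typing import Tuple


def pearson_ci(r: float, n: int, z_crit: float = 1.959964) -> Tuple[float, float]:
    """Approximate CI for a Pearson correlation via the Fisher z-transformation.

    z_crit is the standard normal critical value (1.959964 for a 95% interval).
    """
    if n <= 3:
        raise ValueError("n must be greater than 3")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    z = math.atanh(r)                         # Fisher transformation of r
    half_width = z_crit / math.sqrt(n - 3)    # standard error is 1 / sqrt(n - 3)
    return math.tanh(z - half_width), math.tanh(z + half_width)


lower, upper = pearson_ci(0.098, 28)
print(f"95% CI: {lower:.3f}, {upper:.3f}")
```

Because the standard error depends only on the sample size, every correlation estimated from 28 physicians carries a wide interval, which is consistent with the width of the intervals reported here.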

For 325 patients, 1 hospitalist was present for the entire LOS. In sensitivity analysis limiting observations to these patients (Figure 2C, Figure 2D, Table 3), we found a moderate correlation between habit 3 and the HCAHPS respect score (Pearson correlation coefficient 0.515; 95% CI, 0.176, 0.745; P = 0.005), and a weaker correlation between habit 3 and the HCAHPS overall doctor communication score (0.442; 95% CI, 0.082, 0.7; P = 0.019). There were no other significant correlations between specific habits and HCAHPS scores.

DISCUSSION

In this observational study of hospitalist physicians at a large tertiary care center, we found that communication skills, as measured by the 4HCS, varied substantially among physicians but were highly correlated within patients of the same physician. However, there was virtually no correlation between the attending physician of record’s 4HCS scores and their HCAHPS communication scores. When we limited our analysis to patients who saw only 1 hospitalist throughout their stay, there were moderate correlations between demonstration of empathy and both the HCAHPS respect score and overall doctor communication score. There were no trends across the strata of hospitalist involvement. It is important to note that the addition of even 1 different hospitalist to the LOS removes any association. Habits 1 and 2 are close to significance in the 100% subgroup, with a weak correlation. Interestingly, Habit 4, which focuses on creating a plan with the patient, showed no correlation at all with patients reporting that doctors explained things in language they could understand.

Development and testing of the HCAHPS survey began in 2002, commissioned by CMS and the Agency for Healthcare Research and Quality for the purpose of measuring patient experience in the hospital. The HCAHPS survey was endorsed by the National Quality Forum in 2005, with final approval of the national implementation granted by the Office of Management and Budget later that year. The CMS began implementation of the HCAHPS survey in 2006, with the first required public reporting of all hospitals taking place in March 2008.37-41 Based on CMS’ value-based purchasing initiative, hospitals with low HCAHPS scores have faced substantial penalties since 2012. Under these circumstances, it is important that the HCAHPS measures what it purports to measure. Because HCAHPS was designed to compare hospitals, testing was limited to assessment of internal reliability, hospital-level reliability, and construct validity. External validation with known measures of physician communication was not performed.41 Our study appears to be the first to compare HCAHPS scores to directly observed measures of physician communication skills. The lack of association between the 2 should sound a cautionary note to hospitals that seek to tie individual compensation to HCAHPS scores in order to improve them. In particular, the survey asks for a rating of all the patient’s doctors, not just the primary hospitalist. We found that, for hospital stays with just 1 hospitalist, the HCAHPS score reflected observed expression of empathy, although the correlation was only moderate, and HCAHPS scores were not correlated with other communication skills. Of all communication skills, empathy may be the most important. Almost the entire body of research on physician communication cites empathy as a central skill. Empathy improves patient outcomes1-9,13-14,16-18,42 such as adherence to treatment, loyalty, and perception of care, and provider outcomes10-12,15 such as reduced burnout and a decreased likelihood of malpractice litigation.

It is less clear why other communication skills did not correlate with HCAHPS, but several differences in the measures themselves and how they were obtained might be responsible. First, it is possible that HCAHPS measures something broader than physician communication. In addition, the 4HCS was developed and normed on outpatient encounters, as is true for virtually all doctor-patient coding schemes.43 Little is known about inpatient communication best practices. Second, the timing of HCAHPS may degrade the relationship between observed and reported communication. The HCAHPS questionnaires, collected after discharge, are retrospective reconstructions that are subject to recall bias and recency effects.44,45 In contrast, our observations took place in real time and were specific to the face-to-face interactions that take place when physicians engage patients at the bedside. Third, the response rate for HCAHPS surveys is only 30%, leading to potential sampling bias.46 Respondents represent discharged patients who are willing and able to answer surveys, and may not be representative of all hospitalized patients. Finally, as with all global questions, the meaning any individual patient assigns to terms like “respect” may vary.

Our study has several limitations. The HCAHPS and 4HCS scores were not obtained from the same sample of patients. It is possible that the patients who were observed were not representative of the patients who completed the HCAHPS surveys. In addition, the only type of encounter observed was the initial visit between the hospitalist and the patient, and did not include communication during follow-up visits or on the day of discharge. However, there was a strong ICC among the 4HCS scores, implying that the 4HCS measures an inherent physician skill, which should be consistent across patients and encounters. Coding bias of the habits by a single observer could not be excluded. High intra-class correlation could be due in part to observer preferences for particular communication styles. Our sample included only 28 physicians. Although our study was powered to rule out a moderate correlation between 4HCS scores and HCAHPS scores (Pearson correlation coefficient greater than 0.5), we cannot exclude weaker correlations. Most correlations that we observed were so small that they would not be clinically meaningful, even in a much larger sample.

CONCLUSIONS

Our finding that HCAHPS scores did not correlate with the communication skills of the attending of record has some important implications. In an environment of value-based purchasing, most hospital systems are interested in identifying modifiable provider behaviors that optimize efficiency and payment structures. This study shows that directly measured communication skills do not correlate with HCAHPS scores as generally reported, indicating that HCAHPS may be measuring a broader domain than only physician communication skills. Better attribution based on the proportion of care provided by an individual physician could make the scores more useful for individual comparisons, but most institutions do not report their data in this way. Given this limitation, hospitals should refrain from comparing and incentivizing individual physicians based on their HCAHPS scores, because this measure was not designed for this purpose and does not appear to reflect an individual’s skills. This is important in the current environment, in which hospitals face substantial penalties for underperformance but lack specific tools to improve their scores. Furthermore, there is concern that this type of measurement creates perverse incentives that may adversely alter clinical practice with the aim of improving scores.46

Training clinicians in communication and teaming skills is one potential means of increasing overall scores.15 Improving doctor-patient and team relationships is also the right thing to do. It is increasingly being demanded by patients and has always been a deep source of satisfaction for physicians.15,47 Moreover, there is an increasingly robust literature that relates face-to-face communication to biomedical and psychosocial outcomes of care.48 Identifying individual physicians who need help with communication skills is a worthwhile goal. Unfortunately, the HCAHPS survey does not appear to be the appropriate tool for this purpose.

Disclosure

The Cleveland Clinic Foundation, Division of Clinical Research, Research Programs Committees provided funding support. No funding source had any role in the study design; in the collection, analysis, and interpretation of data; in the writing of the report; or in the decision to submit the article for publication. The authors have no conflicts of interest for this study.

1. Glass RM. The patient-physician relationship. JAMA focuses on the center of medicine. JAMA. 1996;275(2):147-148. PubMed

2. Stein T, Frankel RM, Krupat E. Enhancing clinician communication skills in a large healthcare organization: a longitudinal case study. Patient Educ Couns. 2005;58(1):4-12. PubMed

3. Stewart M, Brown JB, Donner A, et al. The impact of patient-centered care on outcomes. J Fam Pract. 2000;49(9):796-804. PubMed

4. Safran DG, Taira DA, Rogers WH, Kosinski M, Ware JE, Tarlov AR. Linking primary care performance to outcomes of care. J Fam Pract. 1998;47(3):213-220. PubMed

5. Like R, Zyzanski SJ. Patient satisfaction with the clinical encounter: social psychological determinants. Soc Sci Med. 1987;24(4):351-357. PubMed

6. Williams S, Weinman J, Dale J. Doctor-patient communication and patient satisfaction: a review. Fam Pract. 1998;15(5):480-492. PubMed

7. Ciechanowski P, Katon WJ. The interpersonal experience of health care through the eyes of patients with diabetes. Soc Sci Med. 2006;63(12):3067-3079. PubMed

8. Stewart MA. Effective physician-patient communication and health outcomes: a review. CMAJ. 1995;152(9):1423-1433. PubMed

9. Hojat M, Louis DZ, Markham FW, Wender R, Rabinowitz C, Gonnella JS. Physicians’ empathy and clinical outcomes for diabetic patients. Acad Med. 2011;86(3):359-364. PubMed

10. Levinson W, Roter DL, Mullooly JP, Dull VT, Frankel RM. Physician-patient communication. The relationship with malpractice claims among primary care physicians and surgeons. JAMA. 1997;277(7):553-559. PubMed

11. Ambady N, Laplante D, Nguyen T, Rosenthal R, Chaumeton N, Levinson W. Surgeons’ tone of voice: a clue to malpractice history. Surgery. 2002;132(1):5-9. PubMed

12. Weng HC, Hung CM, Liu YT, et al. Associations between emotional intelligence and doctor burnout, job satisfaction and patient satisfaction. Med Educ. 2011;45(8):835-842. PubMed

13. Mauksch LB, Dugdale DC, Dodson S, Epstein R. Relationship, communication, and efficiency in the medical encounter: creating a clinical model from a literature review. Arch Intern Med. 2008;168(13):1387-1395. PubMed

14. Suchman AL, Roter D, Green M, Lipkin M Jr. Physician satisfaction with primary care office visits. Collaborative Study Group of the American Academy on Physician and Patient. Med Care. 1993;31(12):1083-1092. PubMed

15. Boissy A, Windover AK, Bokar D, et al. Communication skills training for physicians improves patient satisfaction. J Gen Intern Med. 2016;31(7):755-761. PubMed

16. Brody DS, Miller SM, Lerman CE, Smith DG, Lazaro CG, Blum MJ. The relationship between patients’ satisfaction with their physicians and perceptions about interventions they desired and received. Med Care. 1989;27(11):1027-1035. PubMed

17. Wasserman RC, Inui TS, Barriatua RD, Carter WB, Lippincott P. Pediatric clinicians’ support for parents makes a difference: an outcome-based analysis of clinician-parent interaction. Pediatrics. 1984;74(6):1047-1053. PubMed

18. Greenfield S, Kaplan S, Ware JE Jr. Expanding patient involvement in care. Effects on patient outcomes. Ann Intern Med. 1985;102(4):520-528. PubMed

19. Inui TS, Carter WB. Problems and prospects for health services research on provider-patient communication. Med Care. 1985;23(5):521-538. PubMed

20. Beckman H, Frankel R, Kihm J, Kulesza G, Geheb M. Measurement and improvement of humanistic skills in first-year trainees. J Gen Intern Med. 1990;5(1):42-45. PubMed

21. Keller S, O’Malley AJ, Hays RD, et al. Methods used to streamline the CAHPS Hospital Survey. Health Serv Res. 2005;40(6 pt 2):2057-2077. PubMed

22. Engel GL. The clinical application of the biopsychosocial model. Am J Psychiatry. 1980;137(5):535-544. PubMed

23. Lazare A, Eisenthal S, Wasserman L. The customer approach to patienthood. Attending to patient requests in a walk-in clinic. Arch Gen Psychiatry. 1975;32(5):553-558. PubMed

24. Eisenthal S, Lazare A. Evaluation of the initial interview in a walk-in clinic. The clinician’s perspective on a “negotiated approach”. J Nerv Ment Dis. 1977;164(1):30-35. PubMed

25. Kravitz RL, Callahan EJ, Paterniti D, Antonius D, Dunham M, Lewis CE. Prevalence and sources of patients’ unmet expectations for care. Ann Intern Med. 1996;125(9):730-737. PubMed

26. Froehlich GW, Welch HG. Meeting walk-in patients’ expectations for testing. Effects on satisfaction. J Gen Intern Med. 1996;11(8):470-474. PubMed

27. DiMatteo MR, Taranta A, Friedman HS, Prince LM. Predicting patient satisfaction from physicians’ nonverbal communication skills. Med Care. 1980;18(4):376-387. PubMed

28. Frankel RM, Stein T. Getting the most out of the clinical encounter: the four habits model. J Med Pract Manage. 2001;16(4):184-191. PubMed

29. Krupat E, Frankel R, Stein T, Irish J. The Four Habits Coding Scheme: validation of an instrument to assess clinicians’ communication behavior. Patient Educ Couns. 2006;62(1):38-45. PubMed

30. Fossli Jensen B, Gulbrandsen P, Benth JS, Dahl FA, Krupat E, Finset A. Interrater reliability for the Four Habits Coding Scheme as part of a randomized controlled trial. Patient Educ Couns. 2010;80(3):405-409. PubMed

31. Giordano LA, Elliott MN, Goldstein E, Lehrman WG, Spencer PA. Development, implementation, and public reporting of the HCAHPS survey. Med Care Res Rev. 2010;67(1):27-37. PubMed

32. Anonymous. CMS continues to shift emphasis to quality of care. Hosp Case Manag. 2012;20(10):150-151. PubMed

33. The R.E.D.E. Model 2015. Cleveland Clinic Center for Excellence in Healthcare Communication. http://healthcarecommunication.info/. Accessed April 3, 2016.

34. Empathetics, Inc. A Boston-based empathy training firm raises $1.5 million in Series A Financing 2015. Empathetics Inc. http://www.prnewswire.com/news-releases/empathetics-inc----a-boston-based-empathy-training-firm-raises-15-million-in-series-a-financing-300072696.html. Accessed April 3, 2016.

35. Intensive Communication Skills 2016. Institute for Healthcare Communication. http://healthcarecomm.org/. Accessed April 3, 2016.

36. Hu B, Palta M, Shao J. Variability explained by covariates in linear mixed-effect models for longitudinal data. Canadian Journal of Statistics. 2010;38:352-368.

37. O’Malley AJ, Zaslavsky AM, Elliott MN, Zaborski L, Cleary PD. Case-mix adjustment of the CAHPS Hospital Survey. Health Serv Res. 2005;40(6 pt 2):2162-2181. PubMed

38. O’Malley AJ, Zaslavsky AM, Hays RD, Hepner KA, Keller S, Cleary PD. Exploratory factor analyses of the CAHPS Hospital Pilot Survey responses across and within medical, surgical, and obstetric services. Health Serv Res. 2005;40(6 pt 2):2078-2095. PubMed

39. Goldstein E, Farquhar M, Crofton C, Darby C, Garfinkel S. Measuring hospital care from the patients’ perspective: an overview of the CAHPS Hospital Survey development process. Health Serv Res. 2005;40(6 pt 2):1977-1995. PubMed

40. Darby C, Hays RD, Kletke P. Development and evaluation of the CAHPS hospital survey. Health Serv Res. 2005;40(6 pt 2):1973-1976. PubMed

41. Keller VF, Carroll JG. A new model for physician-patient communication. Patient Educ Couns. 1994;23(2):131-140. PubMed

42. Quirk M, Mazor K, Haley HL, et al. How patients perceive a doctor’s caring attitude. Patient Educ Couns. 2008;72(3):359-366. PubMed

43. Frankel RM, Levinson W. Back to the future: Can conversation analysis be used to judge physicians’ malpractice history? Commun Med. 2014;11(1):27-39. PubMed

44. Furnham A. Response bias, social desirability and dissimulation. Personality and Individual Differences. 1986;7(3):385-400.

45. Shteingart H, Neiman T, Loewenstein Y. The role of first impression in operant learning. J Exp Psychol Gen. 2013;142(2):476-488. PubMed

46. Tefera L, Lehrman WG, Conway P. Measurement of the patient experience: clarifying facts, myths, and approaches. JAMA. 2016;315(2):2167-2168. PubMed

47. Horowitz CR, Suchman AL, Branch WT Jr, Frankel RM. What do doctors find meaningful about their work? Ann Intern Med. 2003;138(9):772-775. PubMed

48. Rao JK, Anderson LA, Inui TS, Frankel RM. Communication interventions make a difference in conversations between physicians and patients: a systematic review of the evidence. Med Care. 2007;45(4):340-349. PubMed

Communication is the foundation of medical care.1 Effective communication can improve health outcomes, safety, adherence, satisfaction, trust, and enable genuine informed consent and decision-making.2-9 Furthermore, high-quality communication increases provider engagement and workplace satisfaction, while reducing stress and malpractice risk.10-15

Direct measurement of communication in the healthcare setting can be challenging. The “Four Habits Model,” which is derived from a synthesis of empiric studies8,16-20 and theoretical models21-24 of communication, offers 1 framework for assessing healthcare communication. The conceptual model underlying the 4 habits has been validated in studies of physician and patient satisfaction.1,4,25-27 The 4 habits are: investing in the beginning, eliciting the patient’s perspective, demonstrating empathy, and investing in the end. Each habit is divided into several identifiable tasks or skill sets, which can be reliably measured using validated tools and checklists.28 One such instrument, the Four Habits Coding Scheme (4HCS), has been evaluated against other tools and demonstrated overall satisfactory inter-rater reliability and validity.29,30

The Hospital Consumer Assessment of Healthcare Providers and Systems (HCAHPS) survey, developed under the direction of the Centers for Medicare and Medicaid Services (CMS) and the Agency for Healthcare Research and Quality, is an established national standard for measuring patient perceptions of care. HCAHPS retrospectively measures global perceptions of communication, support and empathy from physicians and staff, processes of care, and the overall patient experience. HCAHPS scores were first collected nationally in 2006 and have been publicly reported since 2008.31 With the introduction of value-based purchasing in 2012, health system revenues are now tied to HCAHPS survey performance.32 As a result, hospitals are financially motivated to improve HCAHPS scores but lack evidence-based methods for doing so. Some healthcare organizations have invested in communication training programs based on the available literature and best practices.2,33-35 However, it is not known how, if at all, HCAHPS scores relate to physicians’ real-time observed communication skills.

To examine the relationship between physician communication, as reported by global HCAHPS scores, and the quality of physician communication skills in specific encounters, we observed hospitalist physicians during inpatient bedside rounds and measured their communication skills using the 4HCS.

METHODS

Study Design

The study utilized a cross sectional design; physicians who consented were observed on rounds during 3 separate encounters, and we compared hospitalists’ 4HCS scores to their HCAHPS scores to assess the correlation. The study was approved by the Institutional Review Board of the Cleveland Clinic.

Population

The study was conducted at the main campus of the Cleveland Clinic. All physicians specializing in hospital medicine who had received 10 or more completed HCAHPS survey responses while rounding on a medicine service in the past year were invited to participate in the study. Participation was voluntary; night hospitalists were excluded. A research nurse was trained in the Four Habits Model28 and in the use of the 4HCS coding scheme by the principal investigator. The nurse observed each physician and ascertained the presence of communication behaviors using the 4HCS tool. Physicians were observed between August 2013 and August 2014. Multiple observations per physician could occur on the same day, but only 1 observation per patient was used for analysis. Observations consisted of a physician’s first encounter with a hospitalized patient, with the patient’s consent. Observations were conducted during encounters with English-speaking and cognitively intact patients only. Resident physicians were permitted to stay and conduct rounds per their normal routine. Patient information was not collected as part of the study.

Measures

HCAHPS. For each physician, we extracted all HCAHPS scores that were collected from our hospital’s Press Ganey database. The HCAHPS survey contains 22 core questions divided into 7 themes or domains, 1 of which is doctor communication. The survey uses frequency-based questions with possible answers fixed on a 4-point scale (4=always, 3=usually, 2=sometimes, 1=never). Our primary outcome was the doctor communication domain, which comprises 3 questions: 1) During this hospital stay, how often did the doctors treat you with respect? 2) During this hospital stay, how often did the doctors listen to you? and 3) During this hospital stay, how often did the doctors explain things in a language you can understand? Because CMS counts only the percentage of responses that are graded “always,” so-called “top box” scoring, we used the same measure.

The HCAHPS scores are always attributed to the physician at the time of discharge, even if that physician was not responsible for the care of the patient during the entire hospital course. To mitigate contamination from patients seen by multiple providers, we cross-matched length of stay (LOS) data with billing data to determine the proportion of days a patient was seen by a single provider during the entire LOS. We stratified patients by the proportion of the LOS during which they were seen by the attending provider: less than 50%, 50% to less than 100%, and 100%. However, we were unable to identify which patients were seen by other consultants or by residents due to limitations in data gathering and the nature of the database.
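The stratification by attending involvement can be sketched as follows. This is an illustration only: the function and parameter names are hypothetical, and it assumes that billing data yield a count of days on which the attending saw the patient.

```python
def los_stratum(days_seen_by_attending, length_of_stay_days):
    """Assign a patient to one of the three LOS strata.

    Both arguments are hypothetical inputs: the number of days the
    attending of record saw the patient and the total LOS in days.
    """
    if length_of_stay_days <= 0:
        raise ValueError("length of stay must be positive")
    if not 0 <= days_seen_by_attending <= length_of_stay_days:
        raise ValueError("days seen must be between 0 and the LOS")
    if days_seen_by_attending == length_of_stay_days:
        return "100%"
    proportion = days_seen_by_attending / length_of_stay_days
    if proportion >= 0.5:
        return "50% to <100%"
    return "<50%"


print(los_stratum(2, 5))  # attending present for 2 of 5 days
print(los_stratum(3, 6))  # attending present for 3 of 6 days
print(los_stratum(4, 4))  # attending present for the entire stay
```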

The Four Habits. The Four Habits are: invest in the beginning, elicit the patient’s perspective, demonstrate empathy, and invest in the end (Figure 1). Specific behaviors for Habits 1 to 4 are outlined in the Appendix, but we briefly describe the themes here. Habit 1, invest in the beginning, describes the ability of the physician to set a welcoming environment for the patient, establish rapport, and collaborate on an agenda for the visit. Habit 2, elicit the patient’s perspective, describes the ability of the physician to explore the patient’s worries, ideas, expectations, and the impact of the illness on the patient’s lifestyle. Habit 3, demonstrate empathy, describes the physician’s openness to the patient’s emotions as well as the ability to explore, validate, express curiosity about, and openly accept these feelings. Habit 4, invest in the end, is a measure of the physician’s ability to counsel patients in language built around their original concerns or worries, as well as the ability to check the patient’s understanding of the plan.2,29-30

4HCS. The 4HCS tool (Appendix) measures discrete behaviors and phrases based on each of the Four Habits (Figure 1). The rater at the bedside scores each habit on a scale of 1 to 5, yielding a total score that ranges from a low of 4 to a high of 20. It is an instrument based on a teaching model used widely throughout Kaiser Permanente to improve clinicians’ communication skills. The 4HCS was first tested for interrater reliability and validity against the Roter Interaction Analysis System using 100 videotaped primary care physician encounters.29 It was further evaluated in a randomized controlled trial. Videotapes from 497 hospital encounters involving 71 doctors from a variety of clinical specialties were rated by 4 trained raters using the coding scheme. The total score Pearson’s r and intraclass correlation coefficient (ICC) exceeded 0.70 for all pairs of raters, and the interrater reliability was satisfactory for the 4HCS as applied to heterogeneous material.30
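The scoring arithmetic described above (4 habits, each scored 1 to 5, summed to a total of 4 to 20) can be sketched as below. The function name and the example scores are hypothetical; the item-level checklist in the Appendix is not modeled here.

```python
HABITS = (
    "invest in the beginning",
    "elicit the patient's perspective",
    "demonstrate empathy",
    "invest in the end",
)


def total_4hcs(habit_scores):
    """Sum four per-habit scores (each 1 to 5) into a total of 4 to 20."""
    if len(habit_scores) != len(HABITS):
        raise ValueError("exactly 4 habit scores are required")
    for habit, score in zip(HABITS, habit_scores):
        if not 1 <= score <= 5:
            raise ValueError(f"score for '{habit}' must be between 1 and 5")
    return sum(habit_scores)


# Hypothetical scores from a single bedside observation.
print(total_4hcs([4, 5, 4, 4]))
```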

Statistical Analysis

Physician characteristics were summarized with standard descriptive statistics. Pearson correlation coefficients were computed between HCAHPS and 4HCS scores. The Pearson correlation between the averaged HCAHPS and 4HCS scores was also computed. A correlation with a P value less than 0.05 was considered statistically significant. With 28 physicians, the study had a power of 88% to detect a moderate correlation (greater than 0.50) with a 2-sided alpha of 0.05. We also computed the correlations based on the subgroups of data with patients seen by providers for less than 50%, 50% to less than 100%, and 100% of LOS. Analyses were conducted in RStudio (Boston, MA) and SAS 9.2 (SAS Institute Inc., Cary, NC).36

RESULTS

There were 31 physicians who met our inclusion criteria. Of 29 volunteers, 28 were observed during 3 separate inpatient encounters and made up the final sample. A total of 1003 HCAHPS survey responses were available for these physicians. Participants were predominantly female (60.7%), with an average age of 39 years. They had been in practice for an average of 4 years (12 had been in practice more than 5 years), and 9 were observed on a teaching rotation.

The mean overall 4HCS scores per observation were 17.39 ± 2.33 for the first, 17.00 ± 2.37 for the second, and 17.43 ± 2.36 for the third bedside observation. The mean 4HCS scores per observation, broken down by habit, appear in Table 1. The ICC among the repeated scores within the same physician was 0.81. The median number of HCAHPS survey returns was 32 (range, 8-85; mean, 35.8; interquartile range, 16-54). The median overall HCAHPS doctor communication score was 89.6 (range, 80.9-93.7). Participants scored the highest in the respect subdomain and the lowest in the explain subdomain. Median HCAHPS scores and ranges appear in Table 2.

Because there were no significant associations between 4HCS scores or HCAHPS scores and physician age, sex, years in practice, or teaching site, correlations were not adjusted. Figures 2A and 2B show the association between mean 4HCS scores and HCAHPS scores by physician. There was no significant correlation between overall 4HCS and HCAHPS doctor communication scores (Pearson correlation coefficient 0.098; 95% confidence interval [CI], -0.285, 0.455). The individual habits also were not correlated with overall HCAHPS scores or with their corresponding HCAHPS domain (Table 3).

For 325 patients, 1 hospitalist was present for the entire LOS. In a sensitivity analysis limiting observations to these patients (Figure 2C, Figure 2D, Table 3), we found a moderate correlation between Habit 3 and the HCAHPS respect score (Pearson correlation coefficient 0.515; 95% CI, 0.176, 0.745; P = 0.005), and a weaker correlation between Habit 3 and the HCAHPS overall doctor communication score (Pearson correlation coefficient 0.442; 95% CI, 0.082, 0.700; P = 0.019). There were no other significant correlations between specific habits and HCAHPS scores.
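The text does not state how the confidence intervals were computed. As a sketch under the assumption that they follow the standard Fisher z-transformation with n = 28 physicians, the snippet below computes a 95% CI for a Pearson correlation coefficient. The function name is hypothetical. Under this assumption, the intervals it yields agree with the reported ones to within about 0.001.

```python
import math
from statistics import NormalDist


def fisher_ci(r, n, confidence=0.95):
    """Confidence interval for a Pearson r via the Fisher z-transformation.

    Assumes n independent pairs (here, physicians) and |r| < 1.
    """
    if n <= 3:
        raise ValueError("n must be greater than 3")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    z = math.atanh(r)                  # transform r to the z scale
    se = 1.0 / math.sqrt(n - 3)        # standard error on the z scale
    crit = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    # Back-transform the interval limits to the correlation scale.
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


# Correlation coefficients reported in the Results, with n = 28 physicians.
for r in (0.098, 0.515, 0.442):
    lower, upper = fisher_ci(r, 28)
    print(f"r = {r:.3f}: 95% CI ({lower:.3f}, {upper:.3f})")
```

With only 28 physicians the standard error on the z scale is 0.2, which is why even the moderate correlation of 0.515 carries a wide interval.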

DISCUSSION

In this observational study of hospitalist physicians at a large tertiary care center, we found that communication skills, as measured by the 4HCS, varied substantially among physicians but were highly correlated across patients of the same physician. However, there was virtually no correlation between the 4HCS scores of the attending physician of record and that physician’s HCAHPS communication scores. When we limited our analysis to patients who saw only 1 hospitalist throughout their stay, there were moderate correlations between demonstration of empathy and both the HCAHPS respect score and the overall doctor communication score. There were no trends across the strata of hospitalist involvement. Notably, the involvement of even 1 additional hospitalist during the stay removed any association. Habits 1 and 2 approached significance in the 100% subgroup, with weak correlations. Interestingly, Habit 4, which focuses on creating a plan with the patient, showed no correlation at all with patients reporting that doctors explained things in language they could understand.

Development and testing of the HCAHPS survey began in 2002, commissioned by CMS and the Agency for Healthcare Research and Quality for the purpose of measuring patient experience in the hospital. The HCAHPS survey was endorsed by the National Quality Forum in 2005, with final approval of the national implementation granted by the Office of Management and Budget later that year. CMS began implementation of the HCAHPS survey in 2006, with the first required public reporting of all hospitals taking place in March 2008.37-41 Under CMS’ value-based purchasing initiative, hospitals with low HCAHPS scores have faced substantial penalties since 2012. Under these circumstances, it is important that HCAHPS measures what it purports to measure. Because HCAHPS was designed to compare hospitals, testing was limited to assessment of internal reliability, hospital-level reliability, and construct validity. External validation with known measures of physician communication was not performed.41 Our study appears to be the first to compare HCAHPS scores to directly observed measures of physician communication skills. The lack of association between the 2 should sound a cautionary note to hospitals that seek to tie individual compensation to HCAHPS scores in order to improve them. In particular, the survey asks for a rating of all the patient’s doctors, not just the primary hospitalist. We found that, for hospital stays with just 1 hospitalist, the HCAHPS score reflected observed expression of empathy, although the correlation was only moderate, and HCAHPS scores were not correlated with other communication skills. Of all communication skills, empathy may be the most important. Almost the entire body of research on physician communication cites empathy as a central skill. Empathy improves patient outcomes1-9,13-14,16-18,42 such as adherence to treatment, loyalty, and perception of care, as well as provider outcomes10-12,15 such as reduced burnout and a decreased likelihood of malpractice litigation.

It is less clear why other communication skills did not correlate with HCAHPS, but several differences in the measures themselves and how they were obtained might be responsible. It is possible that HCAHPS measures something broader than physician communication. In addition, the 4HCS was developed and normed on outpatient encounters, as is true for virtually all doctor-patient coding schemes.43 Little is known about inpatient communication best practices. The timing of HCAHPS may also degrade the relationship between observed and reported communication. The HCAHPS questionnaires, collected after discharge, are retrospective reconstructions that are subject to recall bias and recency effects.44,45 In contrast, our observations took place in real time and were specific to the face-to-face interactions that take place when physicians engage patients at the bedside. Furthermore, the response rate for HCAHPS surveys is only 30%, leading to potential sampling bias.46 Respondents represent discharged patients who are willing and able to answer surveys, and may not be representative of all hospitalized patients. Finally, as with all global questions, the meaning any individual patient assigns to terms like “respect” may vary.

Our study has several limitations. The HCAHPS and 4HCS scores were not obtained from the same sample of patients. It is possible that the patients who were observed were not representative of the patients who completed the HCAHPS surveys. In addition, the only type of encounter observed was the initial visit between the hospitalist and the patient; we did not observe communication during follow-up visits or on the day of discharge. However, there was a strong ICC among the 4HCS scores, implying that the 4HCS measures an inherent physician skill, which should be consistent across patients and encounters. Coding bias of the habits by a single observer could not be excluded. The high ICC could be due in part to observer preferences for particular communication styles. Our sample included only 28 physicians. Although our study was powered to rule out a moderate correlation between 4HCS scores and HCAHPS scores (Pearson correlation coefficient greater than 0.5), we cannot exclude weaker correlations. Most correlations that we observed were so small that they would not be clinically meaningful, even in a much larger sample.

CONCLUSIONS

Our finding that HCAHPS scores did not correlate with the communication skills of the attending of record has some important implications. In an environment of value-based purchasing, most hospital systems are interested in identifying modifiable provider behaviors that optimize efficiency and payment structures. This study shows that directly measured communication skills do not correlate with HCAHPS scores as generally reported, indicating that HCAHPS may be measuring a broader domain than only physician communication skills. Better attribution based on the proportion of care provided by an individual physician could make the scores more useful for individual comparisons, but most institutions do not report their data in this way. Given this limitation, hospitals should refrain from comparing and incentivizing individual physicians based on their HCAHPS scores, because this measure was not designed for this purpose and does not appear to reflect an individual’s skills. This is important in the current environment in which hospitals face substantial penalties for underperformance but lack specific tools to improve their scores. Furthermore, there is concern that this type of measurement creates perverse incentives that may adversely alter clinical practice with the aim of improving scores.46

Training clinicians in communication and teaming skills is one potential means of increasing overall scores.15 Improving doctor-patient and team relationships is also the right thing to do. It is increasingly being demanded by patients and has always been a deep source of satisfaction for physicians.15,47 Moreover, there is an increasingly robust literature that relates face-to-face communication to biomedical and psychosocial outcomes of care.48 Identifying individual physicians who need help with communication skills is a worthwhile goal. Unfortunately, the HCAHPS survey does not appear to be the appropriate tool for this purpose.

Disclosure

The Cleveland Clinic Foundation, Division of Clinical Research, Research Programs Committees provided funding support. No funding source had any role in the study design; in the collection, analysis, and interpretation of data; in the writing of the report; or in the decision to submit the article for publication. The authors have no conflicts of interest for this study.

1. Glass RM. The patient-physician relationship. JAMA focuses on the center of medicine. JAMA. 1996;275(2):147-148. PubMed

2. Stein T, Frankel RM, Krupat E. Enhancing clinician communication skills in a large healthcare organization: a longitudinal case study. Patient Educ Couns. 2005;58(1):4-12. PubMed

3. Stewart M, Brown JB, Donner A, et al. The impact of patient-centered care on outcomes. J Fam Pract. 2000;49(9):796-804. PubMed

4. Safran DG, Taira DA, Rogers WH, Kosinski M, Ware JE, Tarlov AR. Linking primary care performance to outcomes of care. J Fam Pract. 1998;47(3):213-220. PubMed

5. Like R, Zyzanski SJ. Patient satisfaction with the clinical encounter: social psychological determinants. Soc Sci Med. 1987;24(4):351-357. PubMed

6. Williams S, Weinman J, Dale J. Doctor-patient communication and patient satisfaction: a review. Fam Pract. 1998;15(5):480-492. PubMed

7. Ciechanowski P, Katon WJ. The interpersonal experience of health care through the eyes of patients with diabetes. Soc Sci Med. 2006;63(12):3067-3079. PubMed

8. Stewart MA. Effective physician-patient communication and health outcomes: a review. CMAJ. 1995;152(9):1423-1433. PubMed

9. Hojat M, Louis DZ, Markham FW, Wender R, Rabinowitz C, Gonnella JS. Physicians’ empathy and clinical outcomes for diabetic patients. Acad Med. 2011;86(3):359-364. PubMed

10. Levinson W, Roter DL, Mullooly JP, Dull VT, Frankel RM. Physician-patient communication. The relationship with malpractice claims among primary care physicians and surgeons. JAMA. 1997;277(7):553-559. PubMed

11. Ambady N, Laplante D, Nguyen T, Rosenthal R, Chaumeton N, Levinson W. Surgeons’ tone of voice: a clue to malpractice history. Surgery. 2002;132(1):5-9. PubMed

12. Weng HC, Hung CM, Liu YT, et al. Associations between emotional intelligence and doctor burnout, job satisfaction and patient satisfaction. Med Educ. 2011;45(8):835-842. PubMed

13. Mauksch LB, Dugdale DC, Dodson S, Epstein R. Relationship, communication, and efficiency in the medical encounter: creating a clinical model from a literature review. Arch Intern Med. 2008;168(13):1387-1395. PubMed

14. Suchman AL, Roter D, Green M, Lipkin M Jr. Physician satisfaction with primary care office visits. Collaborative Study Group of the American Academy on Physician and Patient. Med Care. 1993;31(12):1083-1092. PubMed

15. Boissy A, Windover AK, Bokar D, et al. Communication skills training for physicians improves patient satisfaction. J Gen Intern Med. 2016;31(7):755-761. PubMed

16. Brody DS, Miller SM, Lerman CE, Smith DG, Lazaro CG, Blum MJ. The relationship between patients’ satisfaction with their physicians and perceptions about interventions they desired and received. Med Care. 1989;27(11):1027-1035. PubMed

17. Wasserman RC, Inui TS, Barriatua RD, Carter WB, Lippincott P. Pediatric clinicians’ support for parents makes a difference: an outcome-based analysis of clinician-parent interaction. Pediatrics. 1984;74(6):1047-1053. PubMed

18. Greenfield S, Kaplan S, Ware JE Jr. Expanding patient involvement in care. Effects on patient outcomes. Ann Intern Med. 1985;102(4):520-528. PubMed

19. Inui TS, Carter WB. Problems and prospects for health services research on provider-patient communication. Med Care. 1985;23(5):521-538. PubMed

20. Beckman H, Frankel R, Kihm J, Kulesza G, Geheb M. Measurement and improvement of humanistic skills in first-year trainees. J Gen Intern Med. 1990;5(1):42-45. PubMed

21. Keller S, O’Malley AJ, Hays RD, et al. Methods used to streamline the CAHPS Hospital Survey. Health Serv Res. 2005;40(6 pt 2):2057-2077. PubMed

22. Engel GL. The clinical application of the biopsychosocial model. Am J Psychiatry. 1980;137(5):535-544. PubMed

23. Lazare A, Eisenthal S, Wasserman L. The customer approach to patienthood. Attending to patient requests in a walk-in clinic. Arch Gen Psychiatry. 1975;32(5):553-558. PubMed

24. Eisenthal S, Lazare A. Evaluation of the initial interview in a walk-in clinic. The clinician’s perspective on a “negotiated approach”. J Nerv Ment Dis. 1977;164(1):30-35. PubMed

25. Kravitz RL, Callahan EJ, Paterniti D, Antonius D, Dunham M, Lewis CE. Prevalence and sources of patients’ unmet expectations for care. Ann Intern Med. 1996;125(9):730-737. PubMed

26. Froehlich GW, Welch HG. Meeting walk-in patients’ expectations for testing. Effects on satisfaction. J Gen Intern Med. 1996;11(8):470-474. PubMed

27. DiMatteo MR, Taranta A, Friedman HS, Prince LM. Predicting patient satisfaction from physicians’ nonverbal communication skills. Med Care. 1980;18(4):376-387. PubMed

28. Frankel RM, Stein T. Getting the most out of the clinical encounter: the four habits model. J Med Pract Manage. 2001;16(4):184-191. PubMed

29. Krupat E, Frankel R, Stein T, Irish J. The Four Habits Coding Scheme: validation of an instrument to assess clinicians’ communication behavior. Patient Educ Couns. 2006;62(1):38-45. PubMed

30. Fossli Jensen B, Gulbrandsen P, Benth JS, Dahl FA, Krupat E, Finset A. Interrater reliability for the Four Habits Coding Scheme as part of a randomized controlled trial. Patient Educ Couns. 2010;80(3):405-409. PubMed

31. Giordano LA, Elliott MN, Goldstein E, Lehrman WG, Spencer PA. Development, implementation, and public reporting of the HCAHPS survey. Med Care Res Rev. 2010;67(1):27-37. PubMed

32. Anonymous. CMS continues to shift emphasis to quality of care. Hosp Case Manag. 2012;20(10):150-151. PubMed

33. The R.E.D.E. Model 2015. Cleveland Clinic Center for Excellence in Healthcare Communication. http://healthcarecommunication.info/. Accessed April 3, 2016.

34. Empathetics, Inc. A Boston-based empathy training firm raises $1.5 million in Series A Financing 2015. Empathetics Inc. http://www.prnewswire.com/news-releases/empathetics-inc----a-boston-based-empathy-training-firm-raises-15-million-in-series-a-financing-300072696.html. Accessed April 3, 2016.

35. Intensive Communication Skills 2016. Institute for Healthcare Communication. http://healthcarecomm.org/. Accessed April 3, 2016.

36. Hu B, Palta M, Shao J. Variability explained by covariates in linear mixed-effect models for longitudinal data. Can J Stat. 2010;38:352-368.

37. O’Malley AJ, Zaslavsky AM, Elliott MN, Zaborski L, Cleary PD. Case-mix adjustment of the CAHPS Hospital Survey. Health Serv Res. 2005;40(6 pt 2):2162-2181. PubMed

38. O’Malley AJ, Zaslavsky AM, Hays RD, Hepner KA, Keller S, Cleary PD. Exploratory factor analyses of the CAHPS Hospital Pilot Survey responses across and within medical, surgical, and obstetric services. Health Serv Res. 2005;40(6 pt 2):2078-2095. PubMed

39. Goldstein E, Farquhar M, Crofton C, Darby C, Garfinkel S. Measuring hospital care from the patients’ perspective: an overview of the CAHPS Hospital Survey development process. Health Serv Res. 2005;40(6 pt 2):1977-1995. PubMed

40. Darby C, Hays RD, Kletke P. Development and evaluation of the CAHPS hospital survey. Health Serv Res. 2005;40(6 pt 2):1973-1976. PubMed

41. Keller VF, Carroll JG. A new model for physician-patient communication. Patient Educ Couns. 1994;23(2):131-140. PubMed

42. Quirk M, Mazor K, Haley HL, et al. How patients perceive a doctor’s caring attitude. Patient Educ Couns. 2008;72(3):359-366. PubMed

43. Frankel RM, Levinson W. Back to the future: Can conversation analysis be used to judge physicians’ malpractice history? Commun Med. 2014;11(1):27-39. PubMed

44. Furnham A. Response bias, social desirability and dissimulation. Pers Individ Dif. 1986;7(3):385-400.

45. Shteingart H, Neiman T, Loewenstein Y. The role of first impression in operant learning. J Exp Psychol Gen. 2013;142(2):476-488. PubMed

46. Tefera L, Lehrman WG, Conway P. Measurement of the patient experience: clarifying facts, myths, and approaches. JAMA. 2016;315(2):2167-2168. PubMed

47. Horowitz CR, Suchman AL, Branch WT Jr, Frankel RM. What do doctors find meaningful about their work? Ann Intern Med. 2003;138(9):772-775. PubMed

48. Rao JK, Anderson LA, Inui TS, Frankel RM. Communication interventions make a difference in conversations between physicians and patients: a systematic review of the evidence. Med Care. 2007;45(4):340-349. PubMed
