Fiduciary Services for Veterans With Psychiatric Disabilities

Veterans with psychiatric disabilities who are found incompetent to manage their finances are assigned trustees to directly receive and disburse their disability funds. The term representative payee refers to trustees assigned by the Social Security Administration (SSA), and the term for those assigned by the Veterans Benefits Administration (VBA) is fiduciaries. The generic term trustee will be used when referring to an individual responsible for managing another person’s benefits, regardless of the source of those benefits.

Because a trustee assignment is associated with the loss of legal rights and personal autonomy, the clinical utility of appointing trustees has been extensively researched.1-7 However, almost all the literature on trustees for adults with psychiatric disabilities has focused on services within the civilian sector, whereas little is known about military veterans with similar arrangements.

Veterans with psychiatric disabilities face challenges in managing money on a daily basis. Like other individuals with serious mental illnesses, they may have limitations in basic monetary skills associated with mild to severe cognitive deficits, experience difficulties in budgeting finances, and have impulsive spending habits during periods of acute psychosis, mania, or depression. Unlike civilians with severe mental illness, veterans are able to receive disability benefits from both the VBA and the SSA, thus having the potential for substantially greater income than is typical among nonveterans with psychiatric disabilities.

This higher income can raise veterans’ risk of debt through greater capacity to obtain credit cards and other unsecured loans, and it can make them more vulnerable to financial exploitation and victimization. Veterans with incomes from both VBA and SSA face the added complication of dealing with 2 distinct, ever-changing, and often difficult-to-navigate benefit systems.

This article compares the VBA fiduciary program with the better-known SSA representative payment program, then discusses in detail the fiduciary program administered by the VBA, highlighting areas of particular relevance to clinicians, and ends with a review of the published literature on the VBA fiduciary program for individuals with severe mental illness.

Federal Trustee Programs

The magnitude of the 2 main federal trustee systems is remarkable. In 2010, 1.5 million adult beneficiaries who received Supplemental Security Income (SSI) had representative payees responsible for managing about $4 billion per month.8,9 Likewise, in 2010, almost 100,000 individuals receiving VBA benefits had fiduciaries responsible for overseeing about $100 million per month in disability compensation or pension benefits.10

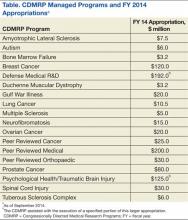

The SSA has a single arrangement for provision of representative payee services in which the payee assignment can be indefinite, the responsibility for modification of the arrangement lies with the beneficiary, and oversight is minimal in both policy and practice.9 In contrast, the VBA, which oversees veterans’ pensions and disability benefits, administers several fiduciary arrangements that range in permanency and level of oversight (Table).

Permanent fiduciary appointments can be either federal or court appointed. Federal fiduciaries manage only VBA-appointed benefits, whereas court-appointed trustees (also known as guardians, fiduciaries, conservators, or curators, depending on the state) are appointed by the state to supervise all the financial assets of an incompetent beneficiary, potentially including both VBA and SSA benefits. Court-appointed trustees are usually designated when broader trust powers are needed to protect the beneficiary’s interests.11

A final VBA fiduciary arrangement is called a Supervised Direct Payment. The payment is made directly to a veteran with periodic supervision by a field examiner who assesses the veteran’s use of funds. This arrangement is used when a veteran has future potential to be deemed competent and released from VBA supervision. It allows the veteran a trial period of managing her/his funds generally for about a year but no longer than 36 months before transitioning to direct pay.11

Unlike SSA, which compensates total disability only, VBA has a rating system that estimates the degree to which a veteran is disabled and grants disability compensation accordingly.12 In 2009, the average monthly payment for all SSA recipients of SSI was $474; the average monthly payment for all recipients of disability benefits from VBA in that year was $925.13,14 For 2009, the federal maximum an SSA recipient could receive was only $674, although this could be supplemented by state funds. On the other hand, there is no set maximum for veterans’ benefits, which are determined through a formula that includes both percentage disability and number of dependents.12,13 In 2011, the average monthly payment for disabled veterans with fiduciaries was $2,540.12 In a study of 49 veterans with trustees, the mean benefit from VBA was twice that from the SSA.15

Because VBA benefits are typically higher than those from SSA and because veterans can receive both SSA and VBA benefits, disabled veterans tend to have higher incomes than do civilians receiving disability benefits. Veterans also may receive lump sum payouts for past benefits, which can be substantial (often $20,000 to $40,000 and sometimes up to $100,000).16 For these reasons, identifying individuals who need a fiduciary and overseeing the management of funds once a fiduciary is assigned are critical.

Referral and Evaluation

The process through which a civilian SSA beneficiary is referred and evaluated for a representative payee is arguably less rigorous than is the referral of a veteran for the VBA fiduciary program. In the former, the treating clinician’s response to a single question, “In your opinion, is the beneficiary capable of managing his/her funds?” on the application for disability benefits often serves as the impetus for payee assignment.

In the latter, the VBA uses a rating agency to make determinations of a veteran’s capacity to handle VBA benefits either after receiving a request for such a determination or after receiving notice that a state court has determined the person is incompetent and/or has appointed a guardian to the person. The Code of Federal Regulations defines the criteria for finding a veteran with a psychiatric disability incompetent to manage his or her finances as follows: “a mentally incompetent person is one who because of injury or disease lacks the mental capacity to contract or to manage his or her own affairs, including disbursement of funds without limitation.”17 As such, if a veteran with mental illness is to be assigned a fiduciary, there needs to be evidence that the mental illness causes financial incompetence.

To assign a fiduciary, multiple sources of evidence are considered in demonstrating behaviors indicating financial incapacity. To illustrate, in Sanders v Principi, the VBA reviewed a veteran’s psychiatric history and weighed the opinion of a psychiatrist that the veteran’s mental illness was in remission against the opinion of family members that the veteran did not possess the ability to “conduct business transactions as his cognitive skills were severely impaired.”18

The VBA is expected to conduct a thorough review of the record and provide reasoned analysis in support of its conclusions, as discussed in Sims v Nicholson.19 The Sims court asserted that to render its decision, the VBA can consider a wide array of information sources, including field examination reports, private psychiatric examinations, medical examiners’ reports, and private physicians. Veterans are informed of the reasons behind the need for a fiduciary, which less commonly occurs in assigning representative payees in the SSA. Although the documented policy for evaluating and determining need for a fiduciary is impressive in its rigor, it is unknown to what extent these standards are put into actual practice.

For health care clinicians, deciding when to request formal assessment by the VBA rating agency of a veteran’s capacity to manage benefits can be challenging to both clinical judgment and to the therapeutic relationship. Although clinicians such as primary care providers, nurses, social workers, and case managers often hear information from the veteran and his/her family about the veteran’s day-to-day management of funds, most of these providers are not necessarily qualified to make a formal assessment of financial capacity.

Black and colleagues developed a measure to assess money mismanagement in a population composed primarily of veterans.20 Although this measure was correlated with client Global Assessment of Functioning scores and client-rated assessment of money mismanagement, it was not correlated with clinician judgment of the individual’s inability to manage funds. Rosen and colleagues similarly found that clinician assessment of whether a veteran would benefit from a trustee arrangement was not associated with the veteran meeting more stringent objective criteria, such as evidence that mismanagement of funds had resulted in the veteran’s inability to meet basic needs or had substantially harmed the veteran.21 Recognizing that their clinical judgment has limitations without external guidance, clinicians may postpone referral, particularly if there is also concern that the veteran may misunderstand the referral decision as a personal judgment, possibly impairing future relationships with the clinician or clinical team.

One option a clinician can consider prior to an official request to the VBA rating agency is to refer the veteran to a trained neuropsychologist for a financial capacity evaluation. The information obtained normally includes a detailed clinical interview, standardized performance measures, and neuropsychological testing.22 This evaluation may allow the clinician to feel more confident about his/her decision and provide a nonjudgmental way of initiating discussion with the veteran. Clinicians may also want to discuss the situation with staff of the Fiduciary Program prior to making a referral. The VBA website (http://benefits.va.gov/fiduciary) provides information about the fiduciary process, including regional contact information for fiduciary services, which clinicians and family members may find useful.

The Fiduciary Role

Once an individual has been determined to need a formal trustee, the decision of who will assume this role differs for SSA and VBA systems. Whereas over 70% of SSA-appointed representative payees for individuals are family members, the majority of fiduciaries for veterans are attorneys or paralegals.23,24 The ultimate designation of a trustee can have critical consequences for both beneficiaries and their families. Some studies have shown that people with psychiatric disabilities who are financially dependent on family members are significantly more likely to be aggressive and even violent toward those family members, with an even greater risk of conflict if the disabled person has more education, or even better money management skills, than the assigned family trustee.25-27 Although there are fewer family fiduciaries in the VBA system, it is still possible that veterans with psychiatric disabilities will have these conflicts.

The significant amount of money veterans receive may put them at higher risk for financial exploitation. Given that the VBA disability payment is a reliable source of income and that many veterans with psychiatric disabilities live in environments of lower socioeconomic status, the veteran with a psychiatric disability may be especially vulnerable to financial manipulation. In an environment where many individuals have limited monetary resources, experience financial strain, and are frequently unemployed, it is unsurprising that, at best, family and friends may seek help and assistance from the veteran, and at worst, may maliciously exploit him or her. As a disinterested third party, the clinician may find it helpful to explore potential disparities between veterans’ disability benefits and the income of individuals with whom the veteran resides.

Additionally, the amount of compensation fiduciaries can receive for their role can be significant. Fiduciaries can receive up to 4% of the yearly VBA benefits of a veteran for whom they are managing money, although family members and court-appointed fiduciaries are not allowed to receive such a commission without a special exception.11 Because large retroactive payments may be disbursed all at once, 4% of the total can be substantial.16
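To give a sense of scale, the following is a minimal arithmetic sketch, not a statement of VBA policy. It applies the 4% ceiling described above to figures quoted elsewhere in this article: the 2011 average monthly payment of $2,540 for veterans with fiduciaries, and lump sum payouts of $40,000 and $100,000. The function name `max_commission` is hypothetical, and applying the percentage directly to a lump sum is an assumption based on the description above.

```python
# Illustrative arithmetic only. The dollar figures are those quoted in the
# article; applying the 4% ceiling to a lump sum is an assumption made here.
COMMISSION_RATE = 0.04  # maximum fiduciary commission cited in the article


def max_commission(amount: float, rate: float = COMMISSION_RATE) -> float:
    """Return the largest commission on a benefit amount, in dollars."""
    return round(amount * rate, 2)


# 2011 average monthly payment for veterans with fiduciaries, annualized.
annual_benefits = 2540 * 12

print(max_commission(annual_benefits))  # one year of average benefits
print(max_commission(40_000))           # a typical large lump sum payout
print(max_commission(100_000))          # the upper end of reported payouts
```

Under these assumptions, the ceiling works out to about $1,219 on a year of average benefits, $1,600 on a $40,000 lump sum, and $4,000 on a $100,000 lump sum.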

Unsurprisingly, the VBA fiduciary system suffers from a certain amount of fraud, prompting recent efforts in Congress to investigate the program more closely.28 Particular concern has been expressed by the House Committee on Veterans Affairs about misuse of funds by so-called professional fiduciaries who provide services for multiple veterans.29 Recent audits estimated that over $400 million in payments and estates were at risk for misuse and over $80 million might be subject to fraud.16 Until 2004, there was no policy in place to replace a veteran’s funds if those funds had been misused by her/his fiduciary.30 However, this was corrected when Congress passed the Veterans Benefits Improvement Act, and the VBA now reissues benefits if they were misused and the VBA was found negligent in its monitoring of the fiduciary.31 Unfortunately, it is also the VBA that makes the determination of negligence, raising concerns about conflict of interest.

Clinicians may contact their VBA Regional Office to request an evaluation of a veteran’s situation if they have concerns about the fiduciary arrangement, either based on their own observations or on complaints received from the veteran. A field examiner is required to investigate concerns about misuse of veteran funds.11

Fiduciary Oversight

The SSA has been criticized for its lack of close oversight of representative payees. In a recent report on the SSA representative payee program, the evaluators noted, “More broadly, the [SSA] program does not require careful accounting and reporting by payees, nor does the current system appear to be useful in detecting possible misuse of benefits by payees.”9

In contrast, the VBA fiduciary program has designated field examiners who play a role in the initial competence determination, fiduciary arrangement and selection, and oversight of the fiduciary arrangement. Once the VBA has been alerted that a veteran may require a fiduciary, a field examiner is dispatched to observe the individual’s living conditions, fund requirements, and capacity to handle benefits.11 After the initial contact, the field examiner makes a recommendation of the appropriate financial arrangement and prospective fiduciary.

Regardless of the type of fiduciary arrangement in place, the field examiner makes periodic follow-up visits to the beneficiary based on the individual situation. Contacts are generally required at least once per year.11 However, visits can occur as infrequently as once every 36 months in particular situations (Table). During follow-up visits, the field examiner evaluates the beneficiary’s welfare, the performance of the fiduciary, the use of funds, the competency of the beneficiary, and the necessity to continue the fiduciary relationship.11

Although detailed oversight of fiduciaries is technically required, there are a limited number of field examiners to provide that oversight. In 2006, caseloads for field examiners ranged from 132 to 592 cases per employee. Recent auditing showed that programs with the highest staff caseloads also had the highest number of deficiencies, suggesting that some field examiners may be unable to provide sufficient oversight to all their clients.16 The effectiveness of field examiners may suffer when they are responsible for very high numbers of veterans.16 Improving oversight of fiduciaries is a stated goal of the VA Office of Inspector General, although increasing the number of field examiners is not mentioned as a means to achieve this goal.32

The SSA does not systematically assess whether a beneficiary is able to resume control over his or her finances. Responsibility lies with the beneficiary to initiate a request to become his/her own payee by demonstrating ability to care for self by means of any evidence, including providing a doctor’s statement or an official copy of a court order. The SSA further cautions beneficiaries who are considering submitting proof of their capability to manage their money as a result of improvement in their condition that, “If SSA believes your condition has improved to the point that you no longer need a payee, we may reevaluate your eligibility for disability payments.”33 This may discourage beneficiaries from attempting to rescind the payeeship, as they potentially risk losing their disability benefits as well.

In contrast, VBA requires regular assessment by a field examiner for continuation of the fiduciary arrangement.11 It is possible to rescind this arrangement if the veteran is found to be competent to handle his/her own funds, understands his/her financial situation, is applying funds to his/her needs appropriately, and would not benefit from further VBA supervision. Additionally, a trial period of limited fund disbursement for 3 to 5 months can be recommended in order to determine how well the veteran manages his/her money. This is commonly done when there are substantial amounts of money being held in trust for the veteran.11

Trustee Effectiveness

Considerable research has examined the effectiveness of the SSA representative payee program as well as potential benefits and risks to the payee. For example, in beneficiaries with psychiatric disabilities, payees can be instrumental in promoting residential stability, basic health care, and psychiatric treatment engagement.6 In addition, representative payeeship has been shown to be associated with reduced hospitalization, victimization, and homelessness.34,35 Finally, research has found better treatment adherence among consumers with payees compared with those without.5

On the other hand, risks noted in some studies suggest payeeship may be used coercively, thwart self-determination, and increase conflict.25 Additionally, payeeship was not associated with a differential reduction in substance use compared with SSA beneficiaries without a payee, nor did it have any effect on clinical outcomes.36-38 These studies may or may not be applicable to the veteran population: Few studies of SSA payeeship include veterans, and there are no studies examining the effectiveness of the VBA fiduciary program exclusively.

Conrad and colleagues reported on a randomized trial of a community trustee and case management program integrated with psychiatric care provided by the VHA.4 Twelve-month outcomes favored the use of the more integrated program, which showed a reduction in substance use, money mismanagement, and days homeless, along with an increased quality of life. However, the study did not distinguish between funding source (VBA, SSA, or both) and trustee status (SSA representative payee or VBA fiduciary). A voluntary program in which veterans worked with money managers who helped them manage funds and held their check books/bank cards also resulted in some improvement in substance use and money management, but this program did not involve either the formal SSA payee or VBA fiduciary systems.39

Although there is a perception that fiduciaries are unwanted impositions on individuals with mental illness, many veterans who have difficulty managing their money seem to want assistance. In one study, nearly 75% of the veterans interviewed agreed with the statement, “Someone who would give me advice around my funds would be helpful to me.” Thirty-four percent agreed with the statement, “Someone who would receive my check and control my funds would be helpful to me,” and 22% reported that they thought a money manager would have helped prevent their hospitalization.40 Additionally, veterans who had payees reported generally high levels of satisfaction and trust with their payee, as well as low feelings of coercion.15 Although similarities with the SSA system may allow some generalizing of findings across SSA and VBA, significant differences in how the programs are administered and the amount of money at stake justify independent evaluation of the VBA fiduciary program.

Conclusion

Veterans with psychiatric disabilities who are deemed incompetent to manage their finances are typically assigned a trustee to disburse disability funds. Both the VBA and SSA provide disability compensation and have a process for providing formal money management services for those determined to be financially incapacitated. However, these 2 federal programs are complex and have many differences.

Clinicians may come into contact with these programs when referring a veteran for services or when a veteran complains about their existing services. The decision of when to refer a veteran for evaluation for a fiduciary is challenging. Once a veteran is referred to the VBA rating agency, the VBA completes a more formalized evaluation to determine whether the beneficiary meets the criteria for a fiduciary. The VBA also has outlined more rigorous ongoing assessment requirements than has the SSA and has designated field examiners to complete these; however, in practice, field examiners’ heavy caseloads may make it more challenging for the VBA to achieve this rigor.

The VBA provides a formal means of evaluating a veteran’s ability to manage his or her funds through Supervised Direct Payment, which can allow a veteran to demonstrate the ability to manage money and thus end a fiduciary relationship that is no longer needed. In contrast, SSA has no formal evaluation program. Additionally, requesting an end to a payeeship for SSA funds can potentially trigger the loss of benefits, discouraging recipients from ever managing their money independently again.

Ultimately, assigning a fiduciary involves a complex decision weighing values of autonomy (veteran’s freedom to manage his or her own money) and social welfare (veteran’s safety if genuinely vulnerable to financial exploitation).

Author disclosures

The authors report no actual or potential conflicts of interest with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the U.S. Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review the complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

1. Elbogen EB, Swanson JW, Swartz MS. Psychiatric disability, the use of financial leverage, and perceived coercion in mental health services. Int J Forensic Ment Health. 2003;2(2):119-127.

2. Rosen MI, Bailey M, Dombrowski E, Ablondi K, Rosenheck RA. A comparison of satisfaction with clinician, family members/friends and attorneys as payees. Community Ment Health J. 2005;41(3):291-306.

3. Rosenheck R. Disability payments and chemical dependence: Conflicting values and uncertain effects. Psychiatr Serv. 1997;48(6):789-791.

4. Conrad KJ, Lutz G, Matters MD, Donner L, Clark E, Lynch P. Randomized trial of psychiatric care with representative payeeship for persons with serious mental illness. Psychiatr Serv. 2006;57(2):197-204.

5. Elbogen EB, Swanson JW, Swartz MS. Effects of legal mechanisms on perceived coercion and treatment adherence among persons with severe mental illness. J Nerv Ment Dis. 2003;191(10):629-637.

6. Luchins DJ, Roberts DL, Hanrahan P. Representative payeeship and mental illness: A review. Adm Policy Ment Health. 2003;30(4):341-353.

7. Rosenheck R, Lam J, Randolph F. Impact of representative payees on substance abuse by homeless persons with serious mental illness. Psychiatr Serv. 1997;48(6):800-806.

8. Social Security Administration. 2010 Annual Report of the Supplemental Security Income Program. Washington, DC: Social Security Administration; 2010.

9. National Research Council Committee on Social Security Representative Payees. Improving the Social Security Representative Payee Program: Serving Beneficiaries and Minimizing Misuses. Washington, DC: Division of Behavioral and Social Sciences and Education; 2007.

10. Department of Veterans Affairs. Veterans Benefits Administration Annual Benefits Report Fiscal Year 2010. Washington, DC: Department of Veterans Affairs, Under Secretary of Veterans Affairs for Benefits; 2010.

11. Department of Veterans Affairs. Fiduciary Program Manual. Washington, DC: Department of Veterans Affairs, Under Secretary of Veterans Affairs for Benefits; 2005.

12. Department of Veterans Affairs. Veterans Benefits Administration Annual Benefits Report Fiscal Year 2011. Washington, DC: Department of Veterans Affairs, Under Secretary of Veterans Affairs for Benefits; 2011.

13. Social Security Administration. 2009 Annual Report of the Supplemental Security Income Program. Washington, DC: Social Security Administration; 2009.

14. Department of Veterans Affairs. Veterans Benefits Administration Annual Benefits Report Fiscal Year 2009. Washington, DC: Department of Veterans Affairs, Under Secretary of Veterans Affairs for Benefits; 2009.

15. Rosen MI, Rosenheck R, Shaner A, Eckman T, Gamache G, Krebs C. Payee relationships: Institutional payees versus personal acquaintances. Psychiatr Rehabil J. 2003;26(3):262-267.

16. Department of Veterans Affairs. Audit of Veterans Benefits Administration Fiduciary Program Operations. Document Number 05-01931-158. Washington, DC: Department of Veterans Affairs Office of Inspector General; 2006.

17. Calvert v Mansfield, 38 CFR § 3.353 (A) (2006).

18. Sanders v Principi, 17 Vet App 232 (2003).

19. Sims v Nicholson, 19 Vet App 453, 456 (2006).

20. Black RA, Rounsaville BJ, Rosenheck RA, Conrad KJ, Ball SA, Rosen MI. Measuring money mismanagement among dually diagnosed clients. J Nerv Ment Dis. 2008;196(7):576-579.

21. Rosen MI, Rosenheck RA, Shaner A, Eckman T, Gamache G, Krebs C. Veterans who may need a payee to prevent misuse of funds for drugs. Psychiatr Serv. 2002;53(8):995-1000.

22. American Bar Association Commission on Law and Aging/American Psychological Association. Assessment Of Older Adults With Diminished Capacity: A Handbook for Psychologists. Washington, DC: American Psychological Association; 2008.

23. Elbogen EB, Swanson JW, Swartz MS, Wagner HR. Characteristics of third-party money management for persons with psychiatric disabilities. Psychiatr Serv. 2003;54(8):1136-1141.

24. Social Security Administration. Annual Statistical Report on the Social Security Disability Insurance Program, 2006. Washington, DC: Social Security Administration; 2006.

25. Elbogen EB, Swanson JW, Swartz MS, Van Dorn R. Family representative payeeship and violence risk in severe mental illness. Law Hum Behav. 2005;29(5):563-574.

26. Estroff SE, Swanson JW, Lachicotte WS, Swartz M, Bolduc M. Risk reconsidered: Targets of violence in the social networks of people with serious psychiatric disorders. Soc Psychiatry Psychiatr Epidemiol. 1998;33(suppl 1):S95-S101.

27. Elbogen EB, Ferron JC, Swartz MS, Wilder CM, Swanson JW, Wagner HR. Characteristics of representative payeeship involving families of beneficiaries with psychiatric disabilities. Psychiatr Serv. 2007;58(11):1433-1440.

28. Mitchell A. VA fiduciary system seriously flawed. House Committee on Veterans Affairs Website. http://veterans.house.gov/press-release/va-fiduciary-system-seriously-flawed. Published February 9, 2012. Accessed November 25, 2014.

29. Subcommittee on Disability Assistance and Memorial Affairs, Committee on Veterans’ Affairs. Examining the U.S. Department of Veterans Affairs Fiduciary Program: How Can VA Better Protect Vulnerable Veterans and Their Families? Document 111-72. Washington, DC: U.S. Government Printing Office; 2010.

30. Subcommittee on Benefits Committee on Veterans Affairs. Hearing on Department of Veterans Affairs’ Fiduciary Program. Document Number 108-21. Washington, DC: U.S. Government Printing Office; 2003.

31. Thakker N. The state of veterans’ fiduciary programs: What is needed to protect our nation’s incapacitated veterans? Bifocal. 2006;28(2):19-27.

32. Department of Veterans Affairs. Semiannual Report to Congress: April 1, 2006-September 30, 2006. Washington, DC: Office of Inspector General, Department of Veterans Affairs; 2006.

33. Social Security. FAQs for beneficiaries who have a payee. Social Security Website. http://www.socialsecurity.gov/payee/faqbene.htm. Accessed November 25, 2014.

34. Hanrahan P, Luchins DJ, Savage C, Patrick G, Roberts D, Conrad KJ. Representative payee programs for persons with mental illness in Illinois. Psychiatr Serv. 2002;53(2):190-194.

35. Stoner MR. Money management services for the homeless mentally ill. Hosp Community Psychiatry. 1989;40(7):751-753.

36. Rosen MI, McMahon TJ, Rosenheck R. Does assigning a representative payee reduce substance abuse? Drug Alcohol Dependence. 2007;86(2-3):115-122.

37. Rosen MI. The ‘check effect’ reconsidered. Addiction. 2011;106(6):1071-1077.

38. Swartz JA, Hsieh CM, Baumohl J. Disability payments, drug use and representative payees: An analysis of the relationships. Addiction. 2003;98(7):965-975.

39. Rosen MI, Carroll KM, Stefanovics E, Rosenheck RA. A randomized controlled trial of a money management-based substance use intervention. Psychiatr Serv. 2009;60(4):498-504.

40. Rosen MI, Rosenheck R, Shaner A, Eckman T, Gamache G, Krebs C. Do patients who mismanage their funds use more health services? Adm Policy Ment Health. 2003;31(2):131-140.


Unlike SSA, which compensates total disability only, VBA has a rating system that estimates the degree to which a veteran is disabled and grants disability compensation accordingly.12 In 2009, the average monthly payment for all SSA recipients of SSI was $474; the average monthly payment for all recipients of disability benefits from VBA in that year was $925.13,14 For 2009, the federal maximum an SSA recipient could receive was only $674, although this could be supplemented by state funds. On the other hand, there is no set maximum for veterans’ benefits, which are determined through a formula that includes both percentage disability and number of dependents.12,13 In 2011, the average monthly payment for disabled veterans with fiduciaries was $2,540.12 In a study of 49 veterans with trustees, the mean benefit from VBA was twice that from the SSA.15

Because VBA benefits are typically higher than those from SSA and because veterans can receive both SSA and VBA benefits, disabled veterans tend to have higher incomes than do civilians receiving disability benefits. Veterans also may receive lump sum payouts for past benefits, which can be substantial (often $20,000 to $40,000 and sometimes up to $100,000).16 For these reasons, identifying individuals who need a fiduciary and overseeing the management of funds once a fiduciary is assigned are critical.

Referral and Evaluation

The process through which a civilian SSA beneficiary is referred and evaluated for a representative payee is arguably less rigorous than is the referral of a veteran for the VBA fiduciary program. In the former, the treating clinician’s response to a single question, “In your opinion, is the beneficiary capable of managing his/her funds?” on the application for disability benefits often serves as the impetus for payee assignment.

In the latter, the VBA uses a rating agency to make determinations of a veteran’s capacity to handle VBA benefits either after receiving a request for such a determination or after receiving notice that a state court has determined the person is incompetent and/or has appointed a guardian to the person. The Code of Federal Regulations defines the criteria for finding a veteran with a psychiatric disability incompetent to manage his or her finances as follows: “a mentally incompetent person is one who because of injury or disease lacks the mental capacity to contract or to manage his or her own affairs, including disbursement of funds without limitation.”17 As such, if a veteran with mental illness is to be assigned a fiduciary, there needs to be evidence that the mental illness causes financial incompetence.

To assign a fiduciary, multiple sources of evidence are considered in demonstrating behaviors indicating financial incapacity. To illustrate, in Sanders v Principi, the VBA reviewed a veteran’s psychiatric history and weighed the opinion of a psychiatrist that the veteran’s mental illness was in remission against the opinion of family members that the veteran did not possess the ability to “conduct business transactions as his cognitive skills were severely impaired.”18

The VBA is expected to conduct a thorough review of the record and provide reasoned analysis in support of its conclusions, as discussed in Sims v Nicholson.19 The Sims court asserted that to render its decision, the VBA can consider a wide array of information sources, including field examination reports, private psychiatric examinations, medical examiners’ reports, and private physicians. Veterans are informed of the reasons behind the need for a fiduciary, which less commonly occurs in assigning representative payees in the SSA. Although the documented policy for evaluating and determining need for a fiduciary is impressive in its rigor, it is unknown to what extent these standards are put into actual practice.

For health care clinicians, deciding when to request formal assessment by the VBA rating agency of a veteran’s capacity to manage benefits can challenge both clinical judgment and the therapeutic relationship. Although clinicians such as primary care providers, nurses, social workers, and case managers often hear information from the veteran and his/her family about the veteran’s day-to-day management of funds, most of these providers are not necessarily qualified to make a formal assessment of financial capacity.

Black and colleagues developed a measure to assess money mismanagement in a population composed primarily of veterans.20 Although this measure was correlated with client Global Assessment of Functioning scores and client-rated assessment of money mismanagement, it was not correlated with clinician judgment of the individual’s inability to manage funds. Rosen and colleagues similarly found that clinician assessment of whether a veteran would benefit from a trustee arrangement was not associated with the veteran meeting more stringent objective criteria, such as evidence that mismanagement of funds had resulted in the veteran’s inability to meet basic needs or had substantially harmed the veteran.21 Recognizing that their clinical judgment has limitations without external guidance, clinicians may postpone referral, particularly if there is also concern that the veteran may misunderstand the referral decision as a personal judgment, possibly impairing future relationships with the clinician or clinical team.

One option a clinician can consider prior to an official request to the VBA rating agency is to refer the veteran to a trained neuropsychologist for a financial capacity evaluation. The information obtained normally includes a detailed clinical interview, standardized performance measures, and neuropsychological testing.22 This evaluation may allow the clinician to feel more confident about his/her decision and provide a nonjudgmental way of initiating discussion with the veteran. Clinicians may also want to discuss the situation with staff of the Fiduciary Program prior to making a referral. The VBA website (http://benefits.va.gov/fiduciary) provides information about the fiduciary process, including regional contact information for fiduciary services, which clinicians and family members may find useful.

The Fiduciary Role

Once an individual has been determined to need a formal trustee, the decision of who will assume this role differs for SSA and VBA systems. Whereas over 70% of SSA-appointed representative payees for individuals are family members, the majority of fiduciaries for veterans are attorneys or paralegals.23,24 The ultimate designation of a trustee can have critical consequences for both beneficiaries and their families. Some studies have shown that people with psychiatric disabilities who are financially dependent on family members are significantly more likely to be aggressive and even violent toward those family members, with an even greater risk of conflict if the disabled person has more education, or even better money management skills, than the assigned family trustee.25-27 Although there are fewer family fiduciaries in the VBA system, it is still possible that veterans with psychiatric disabilities will have these conflicts.

The significant amount of money veterans receive may put them at higher risk for financial exploitation. Given that the VBA disability payment is a reliable source of income and that many veterans with psychiatric disabilities live in environments of lower socioeconomic status, the veteran with a psychiatric disability may be especially vulnerable to financial manipulation. In an environment where many individuals have limited monetary resources, experience financial strain, and are frequently unemployed, it is unsurprising that, at best, family and friends may seek help and assistance from the veteran, and at worst, may maliciously exploit him or her. As a disinterested third party, the clinician may find it helpful to explore potential disparities between veterans’ disability benefits and the income of individuals with whom the veteran resides.

Additionally, the amount of compensation fiduciaries can receive for their role can be significant. Fiduciaries can receive up to 4% of the yearly VBA benefits of a veteran for whom they are managing money, although family members and court-appointed fiduciaries are not allowed to receive such a commission without a special exception.11 Because large retroactive payments may be disbursed all at once, 4% of the total can be substantial.16
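To give a sense of scale, the following minimal sketch works through the 4% ceiling using two figures cited elsewhere in this article: the 2011 average monthly payment of $2,540 for veterans with fiduciaries and a $100,000 retroactive lump sum. The function name is hypothetical, and applying the 4% rate to a lump sum in the same way as to yearly benefits is an assumption made for illustration only, not a statement of VBA procedure.

```python
from decimal import Decimal

# The 4% ceiling is taken from the article; everything else here is illustrative.
MAX_COMMISSION_RATE = Decimal("0.04")


def max_fiduciary_commission(benefits_managed: Decimal) -> Decimal:
    """Return the upper bound on a commission for a given amount of VBA benefits.

    Hypothetical helper: assumes the 4% ceiling applies uniformly to the
    amount passed in, rounded to the cent.
    """
    return (benefits_managed * MAX_COMMISSION_RATE).quantize(Decimal("0.01"))


# Yearly benefits at the 2011 average monthly payment cited in the article.
yearly_benefits = Decimal("2540") * 12

# A large retroactive payment at the upper end of the range cited in the article.
lump_sum = Decimal("100000")

print(max_fiduciary_commission(yearly_benefits))  # 1219.20
print(max_fiduciary_commission(lump_sum))         # 4000.00
```

Under these assumptions, the ceiling on a single large retroactive payment is more than 3 times the ceiling on a full year of average benefits.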

Unsurprisingly, the VBA fiduciary system suffers from a certain amount of fraud, prompting recent efforts in Congress to investigate the program more closely.28 Particular concern has been expressed by the House Committee on Veterans Affairs about misuse of funds by so-called professional fiduciaries who provide services for multiple veterans.29 Recent audits estimated that over $400 million in payments and estates were at risk for misuse and over $80 million might be subject to fraud.16 Until 2004, there was no policy in place to replace a veteran’s funds if those funds had been misused by her/his fiduciary.30 However, this was corrected when Congress passed the Veterans Benefits Improvement Act, and the VBA now reissues benefits if they were misused and the VBA was found negligent in its monitoring of the fiduciary.31 Unfortunately, it is also the VBA that makes the determination of negligence, raising concerns about conflict of interest.

Clinicians may contact their VBA Regional Office to request an evaluation of a veteran’s situation if they have concerns about the fiduciary arrangement, either based on their own observations or on complaints received from the veteran. A field examiner is required to investigate concerns about misuse of veteran funds.11

Fiduciary Oversight

The SSA has been criticized for its lack of close oversight of representative payees. In a recent report on the SSA representative payee program, the evaluators noted, “More broadly, the [SSA] program does not require careful accounting and reporting by payees, nor does the current system appear to be useful in detecting possible misuse of benefits by payees.”9

In contrast, the VBA fiduciary program has designated field examiners who play a role in the initial competence determination, fiduciary arrangement and selection, and oversight of the fiduciary arrangement. Once the VBA has been alerted that a veteran may require a fiduciary, a field examiner is dispatched to observe the individual’s living conditions, fund requirements, and capacity to handle benefits.11 After the initial contact, the field examiner makes a recommendation of the appropriate financial arrangement and prospective fiduciary.

Regardless of the type of fiduciary arrangement in place, the field examiner makes periodic follow-up visits to the beneficiary based on the individual situation. Contacts are generally required at least once per year.11 However, visits can occur as infrequently as every 36 months in particular situations (Table). During follow-up visits, the field examiner evaluates the beneficiary’s welfare, the performance of the fiduciary, the use of funds, the competency of the beneficiary, and the necessity to continue the fiduciary relationship.11

Although detailed oversight of fiduciaries is technically required, there are a limited number of field examiners to provide that oversight. In 2006, caseloads for field examiners ranged from 132 to 592 cases per employee. Recent auditing showed that programs with the highest staff caseloads also had the highest number of deficiencies, suggesting that some field examiners may be unable to provide sufficient oversight to all their clients.16 The effectiveness of field examiners may suffer when they are responsible for very high numbers of veterans.16 Improving oversight of fiduciaries is a stated goal of the VA Office of Inspector General, although increasing the number of field examiners is not mentioned as a means to achieve this goal.32

The SSA does not systematically assess whether a beneficiary is able to resume control over his or her finances. Responsibility lies with the beneficiary to initiate a request to become his/her own payee by demonstrating ability to care for self by means of any evidence, including providing a doctor’s statement or an official copy of a court order. The SSA further cautions beneficiaries who are considering submitting proof of their capability to manage their money as a result of improvement in their condition that, “If SSA believes your condition has improved to the point that you no longer need a payee, we may reevaluate your eligibility for disability payments.”33 This may discourage beneficiaries from attempting to rescind the payeeship, as they potentially risk losing their disability benefits as well.

In contrast, VBA requires regular assessment by a field examiner for continuation of the fiduciary arrangement.11 It is possible to rescind this arrangement if the veteran is found to be competent to handle his/her own funds, understands his/her financial situation, is applying funds to his/her needs appropriately, and would not benefit from further VBA supervision. Additionally, a trial period of limited fund disbursement for 3 to 5 months can be recommended in order to determine how well the veteran manages his/her money. This is commonly done when there are substantial amounts of money being held in trust for the veteran.11

Trustee Effectiveness

Considerable research has examined the effectiveness of the SSA representative payee program as well as potential benefits and risks to the payee. For example, in beneficiaries with psychiatric disabilities, payees can be instrumental in promoting residential stability, basic health care, and psychiatric treatment engagement.6 In addition, representative payeeship has been shown to be associated with reduced hospitalization, victimization, and homelessness.34,35 Finally, research has found better treatment adherence among consumers with payees compared with those without.5

On the other hand, risks noted in some studies suggest payeeship may be used coercively, thwart self-determination, and increase conflict.25 Additionally, payeeship was not associated with a differential reduction in substance use compared with SSA beneficiaries without a payee, nor did it have any effect on clinical outcomes.36-38 These studies may or may not be applicable to the veteran population: Few studies of SSA payeeship include veterans, and there are no studies examining the effectiveness of the VBA fiduciary program exclusively.

Conrad and colleagues reported on a randomized trial of a community trustee and case management program integrated with psychiatric care provided by the VHA.4 Twelve-month outcomes favored the use of the more integrated program, which showed a reduction in substance use, money mismanagement, and days homeless, along with an increased quality of life. However, the study did not distinguish between funding source (VBA, SSA, or both) and trustee status (SSA representative payee or VBA fiduciary). A voluntary program in which veterans worked with money managers who helped them manage funds and held their check books/bank cards also resulted in some improvement in substance use and money management, but this program did not involve either the formal SSA payee or VBA fiduciary systems.39

Although there is a perception that fiduciaries are unwanted impositions on individuals with mental illness, many veterans who have difficulty managing their money seem to want assistance. In one study, nearly 75% of the veterans interviewed agreed with the statement, “Someone who would give me advice around my funds would be helpful to me.” Thirty-four percent agreed with the statement, “Someone who would receive my check and control my funds would be helpful to me,” and 22% reported that they thought a money manager would have helped prevent their hospitalization.40 Additionally, veterans who had payees reported generally high levels of satisfaction and trust with their payee, as well as low feelings of coercion.15 Although similarities with the SSA system may allow some generalizing of findings across SSA and VBA, significant differences in how the programs are administered and the amount of money at stake justify independent evaluation of the VBA fiduciary program.

Conclusion

Veterans with psychiatric disabilities who are deemed incompetent to manage their finances are typically assigned a trustee to disburse disability funds. Both the VBA and SSA provide disability compensation and have a process for providing formal money management services for those determined to be financially incapacitated. However, these 2 federal programs are complex and have many differences.

Clinicians may come into contact with these programs when referring a veteran for services or when a veteran complains about their existing services. The decision of when to refer a veteran for evaluation for a fiduciary is challenging. Once a veteran is referred to the VBA rating agency, the VBA completes a more formalized evaluation to determine whether the beneficiary meets the criteria for a fiduciary. The VBA also has outlined more rigorous ongoing assessment requirements than has the SSA and has designated field examiners to complete these; however, in practice, field examiners’ heavy caseloads may make it more challenging for the VBA to achieve this rigor.

The VBA provides a formal means of evaluating a veteran’s ability to manage his or her funds through Supervised Direct Payment, which can allow a veteran to demonstrate the ability to manage money and thus end a fiduciary relationship that is no longer needed. In contrast, SSA has no formal evaluation program. Additionally, requesting an end to a payeeship for SSA funds can potentially trigger the loss of benefits, discouraging recipients from ever managing their money independently again.

Ultimately, assigning a fiduciary involves a complex decision weighing values of autonomy (veteran’s freedom to manage his or her own money) and social welfare (veteran’s safety if genuinely vulnerable to financial exploitation).

Author disclosures

The authors report no actual or potential conflicts of interest with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the U.S. Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review the complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

Veterans with psychiatric disabilities who are found incompetent to manage their finances are assigned trustees to directly receive and disburse their disability funds. The term representative payee refers to trustees assigned by the Social Security Administration (SSA), and the term for those assigned by the Veterans Benefits Administration (VBA) is fiduciaries. The generic term trustee will be used when referring to an individual responsible for managing another person’s benefits, regardless of the source of those benefits.

Because a trustee assignment is associated with the loss of legal rights and personal autonomy, the clinical utility of appointing trustees has been extensively researched.1-7 However, almost all the literature on trustees for adults with psychiatric disabilities has focused on services within the civilian sector, whereas little is known about military veterans with similar arrangements.

Veterans with psychiatric disabilities face challenges in managing money on a daily basis. Like other individuals with serious mental illnesses, they may have limitations in basic monetary skills associated with mild to severe cognitive deficits, experience difficulties in budgeting finances, and have impulsive spending habits during periods of acute psychosis, mania, or depression. Unlike civilians with severe mental illness, veterans are able to receive disability benefits from both the VBA and the SSA, thus having the potential for substantially greater income than is typical among nonveterans with psychiatric disabilities.

This increased income can increase veterans’ risk of debt through increased capacity to obtain credit cards and other unsecured loans as well as make them more vulnerable to financial exploitation and victimization. Veterans with incomes from both VBA and SSA face the added complication of dealing with 2 distinct, ever-changing, and often difficult-to-navigate benefit systems.

This article compares the VBA fiduciary program with the better-known SSA representative payment program, then discusses in detail the fiduciary program administered by the VBA, highlighting areas of particular relevance to clinicians, and ends with a review of the published literature on the VBA fiduciary program for individuals with severe mental illness.

Federal Trustee Programs

The magnitude of the 2 main federal trustee systems is remarkable. In 2010, 1.5 million adult beneficiaries who received Supplemental Security Income (SSI) had representative payees responsible for managing about $4 billion per month.8,9 Likewise, in 2010, almost 100,000 individuals receiving VBA benefits had fiduciaries responsible for overseeing about $100 million per month in disability compensation or pension benefits.10

The SSA has a single arrangement for provision of representative payee services in which the payee assignment can be indefinite, the responsibility for modification of the arrangement lies with the beneficiary, and oversight is minimal in both policy and practice.9 In contrast, the VBA, which oversees veterans’ pensions and disability benefits, administers several fiduciary arrangements that range in permanency and level of oversight (Table).

Permanent fiduciary appointments can be either federal or court appointed. Federal fiduciaries manage only VBA-appointed benefits, whereas court-appointed trustees (also known as guardians, fiduciaries, conservators, or curators, depending on the state) are appointed by the state to supervise all the financial assets of an incompetent beneficiary, potentially including both VBA and SSA benefits. Court-appointed trustees are usually designated when broader trust powers are needed to protect the beneficiary’s interests.11

A final VBA fiduciary arrangement is called a Supervised Direct Payment. The payment is made directly to a veteran with periodic supervision by a field examiner who assesses the veteran’s use of funds. This arrangement is used when a veteran has future potential to be deemed competent and released from VBA supervision. It allows the veteran a trial period of managing her/his funds generally for about a year but no longer than 36 months before transitioning to direct pay.11

Unlike SSA, which compensates total disability only, VBA has a rating system that estimates the degree to which a veteran is disabled and grants disability compensation accordingly.12 In 2009, the average monthly payment for all SSA recipients of SSI was $474; the average monthly payment for all recipients of disability benefits from VBA in that year was $925.13,14 For 2009, the federal maximum a SSA recipient could receive was only $674, although this could be supplemented by state funds. On the other hand, there is no set maximum for veterans’ benefits, which are determined through a formula that includes both percentage disability and number of dependents.12,13 In 2011, the average monthly payment for disabled veterans with fiduciaries was $2,540 per month.12 In a study of 49 veterans with trustees, the mean benefit from VBA was twice that of the SSA.15

Because VBA benefits are typically higher than those from SSA and because veterans can receive both SSA and VBA benefits, disabled veterans tend to have higher incomes than do civilians receiving disability benefits. Veterans also may receive lump sum payouts for past benefits, which can be substantial (often $20,000 to $40,000 and sometimes up to $100,000).16 For these reasons, identifying individuals who need a fiduciary and overseeing the management of funds once a fiduciary is assigned are critical.

Referral and Evaluation

The process through which a civilian SSA beneficiary is referred and evaluated for a representative payee is arguably less rigorous than is the referral of a veteran for the VBA fiduciary program. In the former, the treating clinician’s response to a single question, “In your opinion, is the beneficiary capable of managing his/her funds?” on the application for disability benefits often serves as the impetus for payee assignment.

In the latter, the VBA uses a rating agency to make determinations of a veteran’s capacity to handle VBA benefits either after receiving a request for such a determination or after receiving notice that a state court has determined the person is incompetent and/or has appointed a guardian to the person. The Code of Federal Regulations defines the criteria for finding a veteran with a psychiatric disability incompetent to manage his or her finances as follows: “a mentally incompetent person is one who because of injury or disease lacks the mental capacity to contract or to manage his or her own affairs, including disbursement of funds without limitation.”17 As such, if a veteran with mental illness is to be assigned a fiduciary, there needs to be evidence that the mental illness causes financial incompetence.

To assign a fiduciary, multiple sources of evidence are considered in demonstrating behaviors indicating financial incapacity. To illustrate, in Sanders v Principi, the VBA reviewed a veteran’s psychiatric history and weighed the opinion of a psychiatrist that the veteran’s mental illness was in remission against the opinion of family members that the veteran did not possess the ability to “conduct business transactions as his cognitive skills were severely impaired.”18

The VBA is expected to conduct a thorough review of the record and provide reasoned analysis in support of its conclusions, as discussed in Sims v Nicholson.19 The Sims court asserted that to render its decision, the VBA can consider a wide array of information sources, including field examination reports, private psychiatric examinations, medical examiners’ reports, and private physicians. Veterans are informed of the reasons behind the need for a fiduciary, which less commonly occurs in assigning representative payees in the SSA. Although the documented policy for evaluating and determining need for a fiduciary is impressive in its rigor, it is unknown to what extent these standards are put into actual practice.

For health care clinicians, deciding when to request formal assessment by the VBA rating agency of a veteran’s capacity to manage benefits can be challenging to both clinical judgment and to the therapeutic relationship. Although clinicians such as primary care providers, nurses, social workers, and case managers often hear information from the veteran and his/her family about the veteran’s day-to-day management of funds, most of these providers are not necessarily qualified to make a formal assessment of financial capacity.

Black and colleagues developed a measure to assess money mismanagement in a population composed primarily of veterans.20 Although this measure was correlated with client Global Assessment of Functioning scores and client-rated assessment of money mismanagement, it was not correlated with clinician judgment of the individual’s inability to manage funds. Rosen and colleagues similarly found that clinician assessment of whether a veteran would benefit from a trustee arrangement was not associated with the veteran meeting more stringent objective criteria, such as evidence that mismanagement of funds had resulted in the veteran’s inability to meet basic needs or had substantially harmed the veteran.21 Recognizing that their clinical judgment has limitations without external guidance, clinicians may postpone referral, particularly if there is also concern that the veteran may misunderstand the referral decision as a personal judgment, possibly impairing future relationships with the clinician or clinical team.

One option a clinician can consider prior to an official request to the VBA rating agency is to refer the veteran to a trained neuropsychologist for a financial capacity evaluation. The information obtained normally includes a detailed clinical interview, standardized performance measures, and neuropsychological testing.22 This evaluation may allow the clinician to feel more confident about his/her decision and provide a nonjudgmental way of initiating discussion with the veteran. Clinicians may also want to discuss the situation with staff of the Fiduciary Program prior to making a referral. The VBA website (http://benefits.va.gov/fiduciary) provides information about the fiduciary process, including regional contact information for fiduciary services, which clinicians and family members may find useful.

The Fiduciary Role

Once an individual has been determined to need a formal trustee, the decision of who will assume this role differs for SSA and VBA systems. Whereas over 70% of SSA-appointed representative payees for individuals are family members, the majority of fiduciaries for veterans are attorneys or paralegals.23,24 The ultimate designation of a trustee can have critical consequences for both beneficiaries and their families. Some studies have shown that people with psychiatric disabilities who are financially dependent on family members are significantly more likely to be aggressive and even violent toward those family members, with a greater elevated risk of conflict if the disabled person has more education, or even better money management skills, than the assigned family trustee.25-27 Although there are fewer family fiduciaries in the VBA system, it is still possible that veterans with psychiatric disabilities will have these conflicts.

The significant amount of money veterans receive may put them at higher risk for financial exploitation. Given that the VBA disability payment is a reliable source of income and that many veterans with psychiatric disabilities live in environments of lower socioeconomic status, the veteran with a psychiatric disability may be especially vulnerable to financial manipulation. In an environment where many individuals have limited monetary resources, experience financial strain, and are frequently unemployed, it is unsurprising that, at best, family and friends may seek help and assistance from the veteran, and at worst, may maliciously exploit him or her. As a disinterested third party, the clinician may find it helpful to explore potential disparities between the veteran’s disability benefits and the income of individuals with whom the veteran resides.

Additionally, the amount of compensation fiduciaries can receive for their role can be significant. Fiduciaries can receive up to 4% of the yearly VBA benefits of a veteran for whom they are managing money, although family members and court-appointed fiduciaries are not allowed to receive such a commission without a special exception.11 Because large retroactive payments may be disbursed all at once, 4% of the total can be substantial.16

Unsurprisingly, the VBA fiduciary system suffers from a certain amount of fraud, prompting recent efforts in Congress to investigate the program more closely.28 Particular concern has been expressed by the House Committee on Veterans Affairs about misuse of funds by so-called professional fiduciaries who provide services for multiple veterans.29 Recent audits estimated that over $400 million in payments and estates were at risk for misuse and over $80 million might be subject to fraud.16 Until 2004, there was no policy in place to replace a veteran’s funds if those funds had been misused by his/her fiduciary.30 However, this was corrected when Congress passed the Veterans Benefits Improvement Act, and the VBA now reissues benefits if they were misused and the VBA was found negligent in its monitoring of the fiduciary.31 Unfortunately, it is also the VBA that makes the determination of negligence, raising concerns about conflict of interest.

Clinicians may contact their VBA Regional Office to request an evaluation of a veteran’s situation if they have concerns about the fiduciary arrangement, either based on their own observations or on complaints received from the veteran. A field examiner is required to investigate concerns about misuse of veteran funds.11

Fiduciary Oversight

The SSA has been criticized for its lack of close oversight of representative payees. In a recent report on the SSA representative payee program, the evaluators noted, “More broadly, the [SSA] program does not require careful accounting and reporting by payees, nor does the current system appear to be useful in detecting possible misuse of benefits by payees.”9

In contrast, the VBA fiduciary program has designated field examiners who play a role in the initial competence determination, fiduciary arrangement and selection, and oversight of the fiduciary arrangement. Once the VBA has been alerted that a veteran may require a fiduciary, a field examiner is dispatched to observe the individual’s living conditions, fund requirements, and capacity to handle benefits.11 After the initial contact, the field examiner makes a recommendation of the appropriate financial arrangement and prospective fiduciary.

Regardless of the type of fiduciary arrangement in place, the field examiner makes periodic follow-up visits to the beneficiary based on the individual situation. Contacts are generally required at least once per year.11 However, in particular situations, visits can occur as infrequently as once every 36 months (Table). During follow-up visits, the field examiner evaluates the beneficiary’s welfare, the performance of the fiduciary, the use of funds, the competency of the beneficiary, and the necessity to continue the fiduciary relationship.11

Although detailed oversight of fiduciaries is technically required, there are a limited number of field examiners to provide that oversight. In 2006, caseloads for field examiners ranged from 132 to 592 cases per employee. Recent auditing showed that programs with the highest staff caseloads also had the highest number of deficiencies, suggesting that some field examiners may be unable to provide sufficient oversight to all their clients.16 The effectiveness of field examiners may suffer when they are responsible for very high numbers of veterans.16 Improving oversight of fiduciaries is a stated goal of the VA Office of Inspector General, although increasing the number of field examiners is not mentioned as a means to achieve this goal.32

The SSA does not systematically assess whether a beneficiary is able to resume control over his or her finances. Responsibility lies with the beneficiary to initiate a request to become his/her own payee by demonstrating ability to care for self by means of any evidence, including providing a doctor’s statement or an official copy of a court order. The SSA further cautions beneficiaries who are considering submitting proof of their capability to manage their money as a result of improvement in their condition that, “If SSA believes your condition has improved to the point that you no longer need a payee, we may reevaluate your eligibility for disability payments.”33 This may discourage beneficiaries from attempting to rescind the payeeship, as they potentially risk losing their disability benefits as well.

In contrast, VBA requires regular assessment by a field examiner for continuation of the fiduciary arrangement.11 It is possible to rescind this arrangement if the veteran is found to be competent to handle his/her own funds, understands his/her financial situation, is applying funds to his/her needs appropriately, and would not benefit from further VBA supervision. Additionally, a trial period of limited fund disbursement for 3 to 5 months can be recommended in order to determine how well the veteran manages his/her money. This is commonly done when there are substantial amounts of money being held in trust for the veteran.11

Trustee Effectiveness

Considerable research has examined the effectiveness of the SSA representative payee program as well as potential benefits and risks to the payee. For example, in beneficiaries with psychiatric disabilities, payees can be instrumental in promoting residential stability, basic health care, and psychiatric treatment engagement.6 In addition, representative payeeship has been shown to be associated with reduced hospitalization, victimization, and homelessness.34,35 Finally, research has found better treatment adherence among consumers with payees compared with those without.5

On the other hand, risks noted in some studies suggest payeeship may be used coercively, thwart self-determination, and increase conflict.25 Additionally, payeeship was not associated with a differential reduction in substance use compared with SSA beneficiaries without a payee, nor did it have any effect on clinical outcomes.36-38 These studies may or may not be applicable to the veteran population: Few studies of SSA payeeship include veterans, and there are no studies examining the effectiveness of the VBA fiduciary program exclusively.

Conrad and colleagues reported on a randomized trial of a community trustee and case management program integrated with psychiatric care provided by the VHA.4 Twelve-month outcomes favored the use of the more integrated program, which showed a reduction in substance use, money mismanagement, and days homeless, along with an increased quality of life. However, the study did not distinguish between funding source (VBA, SSA, or both) and trustee status (SSA representative payee or VBA fiduciary). A voluntary program in which veterans worked with money managers who helped them manage funds and held their checkbooks/bank cards also resulted in some improvement in substance use and money management, but this program did not involve either the formal SSA payee or VBA fiduciary systems.39

Although there is a perception that fiduciaries are unwanted impositions on individuals with mental illness, many veterans who have difficulty managing their money seem to want assistance. In one study, nearly 75% of the veterans interviewed agreed with the statement, “Someone who would give me advice around my funds would be helpful to me.” Thirty-four percent agreed with the statement, “Someone who would receive my check and control my funds would be helpful to me,” and 22% reported that they thought a money manager would have helped prevent their hospitalization.40 Additionally, veterans who had payees reported generally high levels of satisfaction and trust with their payee, as well as low feelings of coercion.15 Although similarities with the SSA system may allow some generalizing of findings across SSA and VBA, significant differences in how the programs are administered and the amount of money at stake justify independent evaluation of the VBA fiduciary program.

Conclusion

Veterans with psychiatric disabilities who are deemed incompetent to manage their finances are typically assigned a trustee to disburse disability funds. Both the VBA and SSA provide disability compensation and have a process for providing formal money management services for those determined to be financially incapacitated. However, these 2 federal programs are complex and have many differences.

Clinicians may come into contact with these programs when referring a veteran for services or when a veteran complains about his or her existing services. The decision of when to refer a veteran for evaluation for a fiduciary is challenging. Once a veteran is referred to the VBA rating agency, the VBA completes a more formalized evaluation to determine whether the beneficiary meets the criteria for a fiduciary. The VBA also has outlined more rigorous ongoing assessment requirements than has the SSA and has designated field examiners to complete these; however, in practice, heavy field examiner caseloads may make it more challenging for the VBA to achieve this rigor.

The VBA provides a formal means of evaluating a veteran’s ability to manage his or her funds through Supervised Direct Payment, which can allow a veteran to demonstrate the ability to manage money and thus end a fiduciary relationship that is no longer needed. In contrast, SSA has no formal evaluation program. Additionally, requesting an end to a payeeship for SSA funds can potentially trigger the loss of benefits, discouraging recipients from ever managing their money independently again.

Ultimately, assigning a fiduciary involves a complex decision weighing values of autonomy (veteran’s freedom to manage his or her own money) and social welfare (veteran’s safety if genuinely vulnerable to financial exploitation).

Author disclosures

The authors report no actual or potential conflicts of interest with regard to this article.

Disclaimer

The opinions expressed herein are those of the authors and do not necessarily reflect those of Federal Practitioner, Frontline Medical Communications Inc., the U.S. Government, or any of its agencies. This article may discuss unlabeled or investigational use of certain drugs. Please review the complete prescribing information for specific drugs or drug combinations—including indications, contraindications, warnings, and adverse effects—before administering pharmacologic therapy to patients.

1. Elbogen EB, Swanson JW, Swartz MS. Psychiatric disability, the use of financial leverage, and perceived coercion in mental health services. Int J Forensic Ment Health. 2003;2(2):119-127.

2. Rosen MI, Bailey M, Dombrowski E, Ablondi K, Rosenheck RA. A comparison of satisfaction with clinician, family members/friends and attorneys as payees. Community Ment Health J. 2005;41(3):291-306.

3. Rosenheck R. Disability payments and chemical dependence: Conflicting values and uncertain effects. Psychiatr Serv. 1997;48(6):789-791.

4. Conrad KJ, Lutz G, Matters MD, Donner L, Clark E, Lynch P. Randomized trial of psychiatric care with representative payeeship for persons with serious mental illness. Psychiatr Serv. 2006;57(2):197-204.

5. Elbogen EB, Swanson JW, Swartz MS. Effects of legal mechanisms on perceived coercion and treatment adherence among persons with severe mental illness. J Nerv Ment Dis. 2003;191(10):629-637.

6. Luchins DJ, Roberts DL, Hanrahan P. Representative payeeship and mental illness: A review. Adm Policy Ment Health. 2003;30(4):341-353.

7. Rosenheck R, Lam J, Randolph F. Impact of representative payees on substance abuse by homeless persons with serious mental illness. Psychiatr Serv. 1997;48(6):800-806.

8. Social Security Administration. 2010 Annual Report of the Supplemental Security Income Program. Washington, DC: Social Security Administration; 2010.

9. National Research Council Committee on Social Security Representative Payees. Improving the Social Security Representative Payee Program: Serving Beneficiaries and Minimizing Misuses. Washington, DC: Division of Behavioral and Social Sciences and Education; 2007.

10. Department of Veterans Affairs. Veterans Benefits Administration Annual Benefits Report Fiscal Year 2010. Washington, DC: Department of Veterans Affairs, Under Secretary of Veterans Affairs for Benefits; 2010.

11. Department of Veterans Affairs. Fiduciary Program Manual. Washington, DC: Department of Veterans Affairs, Under Secretary of Veterans Affairs for Benefits; 2005.

12. Department of Veterans Affairs. Veterans Benefits Administration Annual Benefits Report Fiscal Year 2011. Washington, DC: Department of Veterans Affairs, Under Secretary of Veterans Affairs for Benefits; 2011.

13. Social Security Administration. 2009 Annual Report of the Supplemental Security Income Program. Washington, DC: Social Security Administration; 2009.

14. Department of Veterans Affairs. Veterans Benefits Administration Annual Benefits Report Fiscal Year 2009. Washington, DC: Department of Veterans Affairs, Under Secretary of Veterans Affairs for Benefits; 2009.

15. Rosen MI, Rosenheck R, Shaner A, Eckman T, Gamache G, Krebs C. Payee relationships: Institutional payees versus personal acquaintances. Psychiatr Rehabil J. 2003;26(3):262-267.