
Evaluation of Spinal Epidural Abscess

Spinal epidural abscess (SEA) is caused by a suppurative infection in the epidural space. The mass effect of the abscess can compress and reduce blood flow to the spinal cord, conus medullaris, or cauda equina. Left untreated, the infection can lead to sensory loss, muscle weakness, visceral dysfunction, sepsis, and even death. Early diagnosis is essential to limit morbidity and neurologic injury. The classic triad of fever, axial pain, and neurological deficit occurs in as few as 13% of patients, highlighting the diagnostic challenge associated with SEA.[1]

This review summarizes the current literature on SEA epidemiology, clinical findings, laboratory data, and treatment, with particular focus on nonsurgical versus surgical management. Our primary objective is to help clinicians recognize when to suspect SEA and how to carry out an appropriate diagnostic evaluation.

INCIDENCE AND EPIDEMIOLOGY

In 1975, the incidence of SEA was reported to be 0.2 to 2.0 per 10,000 hospital admissions.[2] Two decades later, a study of tertiary referral centers documented a much higher rate of 12.5 per 10,000 admissions.[3] The reported incidence continues to rise and has doubled in the past decade, with SEA currently representing approximately 10% of all primary spine infections.[4, 5] Potential explanations for this increasing incidence include aging of the population, an increasing prevalence of diabetes, increasing intravenous drug abuse, more widespread use of advanced immunosuppressive regimens, and an increased rate of invasive spinal procedures.[6, 7, 8] Other factors contributing to the rising incidence include increased detection due to the greater accessibility of magnetic resonance imaging (MRI) and increased reporting as a result of the concentration of cases at tertiary referral centers.

SEA is most common in patients older than 60 years[7] and in those with multiple medical comorbidities. A review of over 30,000 patients found that patients who underwent surgical intervention for SEA had an average of 6 comorbidities (range, 0 to 20).[5] The same study noted that diabetes was the most frequently associated disease (30% of patients), followed by chronic lung disease (19%), renal failure (13%), and obesity (13%) (Table 1).[5] A history of invasive spine interventions is an additional risk factor; between 14% and 22% of cases occur after spine surgery or percutaneous spine procedures (eg, epidural steroid injections).[8, 9] Regardless of pathogenesis, the rate of permanent neurologic injury after SEA is 30% to 50%, and the mortality rate ranges from 10% to 20%.[4, 5, 8, 10]

| Medical Comorbidity | Prevalence (%) |
|---|---|
| Diabetes mellitus | 15–46 |
| IV drug use | 4–37 |
| Spinal trauma | 5–33 |
| End‐stage renal disease | 2–13 |
| Immunosuppressant therapy | 7–16 |
| Cancer | 2–15 |
| HIV/AIDS | 2–9 |

MISSED DIAGNOSIS

Despite the availability of advanced imaging, rates of misdiagnosis at initial presentation remain substantial, with current estimates ranging from 11% to 75%.[4, 9] Back and neck pain are ubiquitous and nonspecific complaints, which often makes the diagnosis difficult. Repeated emergency room visits for pain are common in patients who are eventually diagnosed with SEA. Davis et al. found that 51% of 63 patients presented to the emergency room 2 or more times before diagnosis, and 11% presented 3 or more times.[1]

PATHOPHYSIOLOGY

Microbiology

Staphylococcus aureus, including methicillin‐resistant S aureus (MRSA), accounts for two‐thirds of all infections.[3] S aureus infection of the spine may occur in the setting of surgical intervention or concomitant skin infection, although it often occurs without an identifiable source. Historically, MRSA has been reported to be responsible for 15% of all staphylococcal infections of the epidural space; some institutions report MRSA rates as high as 40%.[4] S epidermidis is another common pathogen, most often encountered following spinal surgery, epidural catheter insertion, and spinal injections.[11] Gram‐negative infections are less common. Escherichia coli is characteristically isolated in patients with active urinary tract infections, and Pseudomonas aeruginosa is more common in intravenous (IV) drug users.[12] Rare causes of SEA include anaerobes such as Bacteroides,[13] various parasites, fungi, and other organisms such as Actinomyces, Nocardia, and mycobacteria.[8]

Mechanism of Inoculation

Infection may reach the epidural space by 4 mechanisms: hematogenous spread, direct extension, inoculation during a spinal procedure, and trauma. Hematogenous spread from an existing infection is the most common mechanism.[14] Seeding of the epidural space from transient bacteremia after dental procedures has also been reported.[13]

The second most common mechanism of infection is direct spread from an infected component of the vertebral column or paraspinal soft tissues. Most commonly, this takes the form of an anterior SEA in association with vertebral body osteomyelitis. Septic arthritis of a facet joint can also cause a secondary infection of the posterior epidural space.[4, 15] Direct spread from other adjacent structures (eg, retropharyngeal, psoas, or paraspinal muscle abscess) may cause SEA as well.[16]

Less‐frequent mechanisms include direct inoculation and trauma. Infection of the epidural space can occur in association with spinal surgery, placement of an epidural catheter, or spinal injections. Grewal et al. reported in 2006 that SEA developed in 1 in 1000 surgical and 1 in 2000 obstetric patients following epidural nerve block.[17] Hematoma secondary to an osseous or ligamentous injury can become seeded by bacteria, leading to abscess formation.[18]

Development of Neurologic Symptoms

There are several proposed mechanisms by which SEA can produce neurologic dysfunction. The first is that the compressive effect of an expanding abscess decreases blood flow to the neural tissue.[4] Improvement of neurologic function following surgical decompression lends credence to this theory. A second potential mechanism is loss of blood flow due to local vascular inflammation: local arteritis may decrease inflow to the cord parenchyma, which could explain the rapid onset of profound neurologic compromise in some cases.[19, 20] Infection can also cause venous thrombophlebitis, producing ischemic injury through impaired outflow. Postmortem findings support this hypothesis; autopsy has revealed local thrombosis of the leptomeningeal vessels adjacent to the level of the SEA.[4] All of these mechanisms probably contribute to some degree in any given case of neurologic compromise.

PATIENT HISTORY

Patients present with a wide variety of complaints and confounding variables that complicate the diagnosis (eg, medical comorbidities, psychiatric disease, chronic pain, dementia, or preexisting nonambulatory status). Ninety‐five percent of patients with SEA have a chief complaint of axial spinal pain.[1] Approximately half of patients report fever, and 47% complain of weakness in the upper or lower extremities.[9] The classic triad of fever, spine pain, and neurological deficits is present in only a minority of patients, with rates ranging from 13% to 37%.[1, 3] Additionally, 1 study found that the sensitivity of this triad was only 8%.[1]

The physician should inquire about comorbid conditions associated with SEA, including diabetes, kidney disease, and history of drug use. Any recent infection at a remote site, such as cellulitis or urinary tract infection, should also be investigated. As many as 44% of patients with vertebral osteomyelitis have an associated SEA; conversely, osteomyelitis may be present in up to 80% of patients with SEA.[2, 13] Spinal procedures such as epidural[13] or facet joint injections[14] have also been implicated as risk factors, as have placement of hemodialysis catheters,[21] acupuncture,[22] and tattooing.[23]

PHYSICAL EXAM

Physical exam findings range from subtle back tenderness to severe neurologic deficits and complete paralysis. Spinal tenderness is elicited in up to 75% of patients, with equal rates of focal and diffuse tenderness.[1, 4] Radicular symptoms are evident in 12% to 47% of patients, weakness in 26% to 60%, and altered sensation in up to 67%.[1, 4, 8, 11, 19] One study found that 71% of patients had an abnormal neurologic exam at presentation, including paresthesias (39%), motor weakness (39%), and loss of bladder and bowel control (27%).[3] A thorough neurologic exam is therefore vital in the evaluation of SEA.

Heusner proposed a staging system based on clinical findings (Table 2).[24] Because symptoms can evolve unpredictably and progress rapidly to stage III or IV,[4, 24] it is important to document subtle abnormalities on the initial exam and to monitor for changes. Many patients initially present in stage I or II but remain undiagnosed until they progress to stage III or IV.[24]

| Stage | Symptoms |
|---|---|
| I | Back pain |
| II | Radiculopathy, neck pain, reflex changes |
| III | Paresthesia, weakness, bladder symptoms |
| IV | Paralysis |

DIAGNOSTIC WORKUP

Laboratory Testing

Routine tests should include a white blood cell (WBC) count, erythrocyte sedimentation rate (ESR), and C‐reactive protein (CRP). ESR has been shown to be the most sensitive and specific marker of SEA (Table 3). In a study of 63 patients matched with 126 controls, ESR was greater than 20 mm/h in 98% of cases but in only 21% of controls.[1] Another study of 55 patients found the sensitivity and specificity of ESR to be 100% and 67%, respectively.[25] The WBC count is less useful, because leukocytosis is present in only approximately two‐thirds of patients.[1, 25] CRP rises faster than ESR in the setting of inflammation and also returns to baseline faster; CRP is therefore useful for monitoring the response to treatment.[1, 19]
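As a worked illustration, the proportions reported by Davis et al. can be restated as standard diagnostic‐accuracy measures. This sketch treats the reported percentages as exact; the likelihood ratios (LR) are derived here for illustration only and are not values reported by the study.

$$
\begin{aligned}
\text{Sensitivity} &= P(\text{ESR} > 20\ \text{mm/h} \mid \text{SEA}) = 0.98 \\
\text{Specificity} &= 1 - P(\text{ESR} > 20\ \text{mm/h} \mid \text{no SEA}) = 1 - 0.21 = 0.79 \\
\text{LR}^{+} &= \frac{\text{Sensitivity}}{1 - \text{Specificity}} = \frac{0.98}{0.21} \approx 4.7 \\
\text{LR}^{-} &= \frac{1 - \text{Sensitivity}}{\text{Specificity}} = \frac{0.02}{0.79} \approx 0.025
\end{aligned}
$$

The very small negative likelihood ratio is what makes ESR useful for screening: a normal value substantially lowers the probability of SEA, whereas an elevated value is much less conclusive on its own.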

| Step | Recommendation |
|---|---|
| 1. Patient assessment | Evaluate for symptom triad (back pain, fever, neurologic dysfunction) |
| | Assess for common comorbidities (diabetes, IV drug abuse, spinal trauma, ESRD, immunosuppressant therapy, recent spine procedure, systemic or local infection) |
| 2. Laboratory evaluation | Essential: ESR (most sensitive and specific), CRP, WBC count, blood cultures, UA |
| | As indicated: echocardiogram, TB evaluation |
| | No role: lumbar puncture |
| 3. Imaging | Gold standard: MRI with gadolinium (90% sensitive) |
| | If MRI contraindicated: CT myelogram |
| 4. Obtain tissue sample | Gold standard: open surgical biopsy and debridement, with fixation if needed |
| | If patient unstable, diagnosis indeterminate, or no neuro symptoms: IR‐guided biopsy before surgery |
| 5. Antibiotics (once tissue sample obtained) | Empiric vancomycin plus third‐generation cephalosporin or aminoglycoside |
| | Vancomycin monotherapy acceptable only in patients with early diagnosis and no neurologic symptoms |
| 6. Surgical management (only if not done as step 4) | Early surgical consult recommended. Pursue surgical intervention if patient is stable for OR and neuro symptoms are progressing or abscess is refractory to antibiotics. Best outcomes occur with combined antibiotics and surgical debridement. |

Blood cultures should be obtained at initial evaluation. Bacteremia from the offending organism may be present in up to 60% of cases; 60% to 90% of cases of bacteremia are caused by MRSA.[26] Urine culture should also be obtained in all patients. Sputum cultures should not be obtained routinely, but they may be beneficial in select patients with a history of chronic obstructive pulmonary disease, new cough, or abnormal chest radiographs. Transthoracic or transesophageal echocardiography may be recommended for bacteremic patients, those with a new heart murmur, and those with a history of IV drug abuse.

In patients at risk for tuberculosis, a tuberculin skin test with purified protein derivative can help screen for this organism. However, false‐negative results may occur in up to 15% of patients, particularly those who are immunocompromised.[27] Patients with a positive result that may be falsely positive, including those with prior bacille Calmette‐Guérin (BCG) vaccination, can be tested with an interferon‐gamma release assay such as QuantiFERON‐TB Gold. These time‐consuming and expensive tests should not delay empiric treatment if tuberculosis is suspected on the basis of patient risk factors or exposure, although an infectious disease specialist should be consulted before treatment for tuberculosis is initiated.

Lumbar puncture should not be performed routinely in cases of suspected SEA, primarily due to the risk of bacterial contamination of the cerebrospinal fluid (CSF). Additionally, the increased protein and inflammatory cells seen in the CSF are nonspecific markers of parameningeal inflammation.[15]

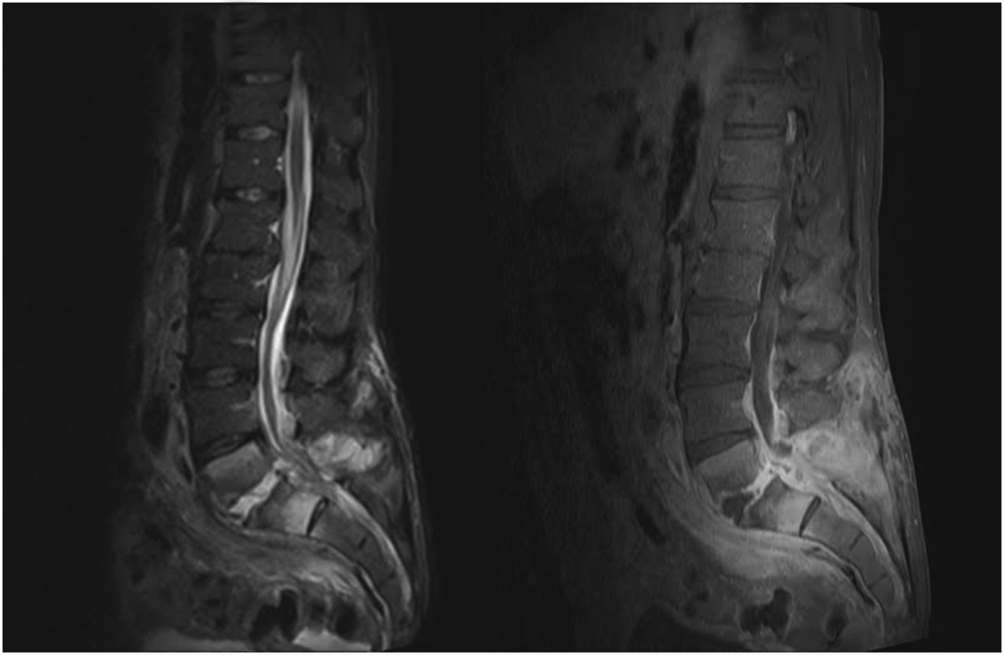

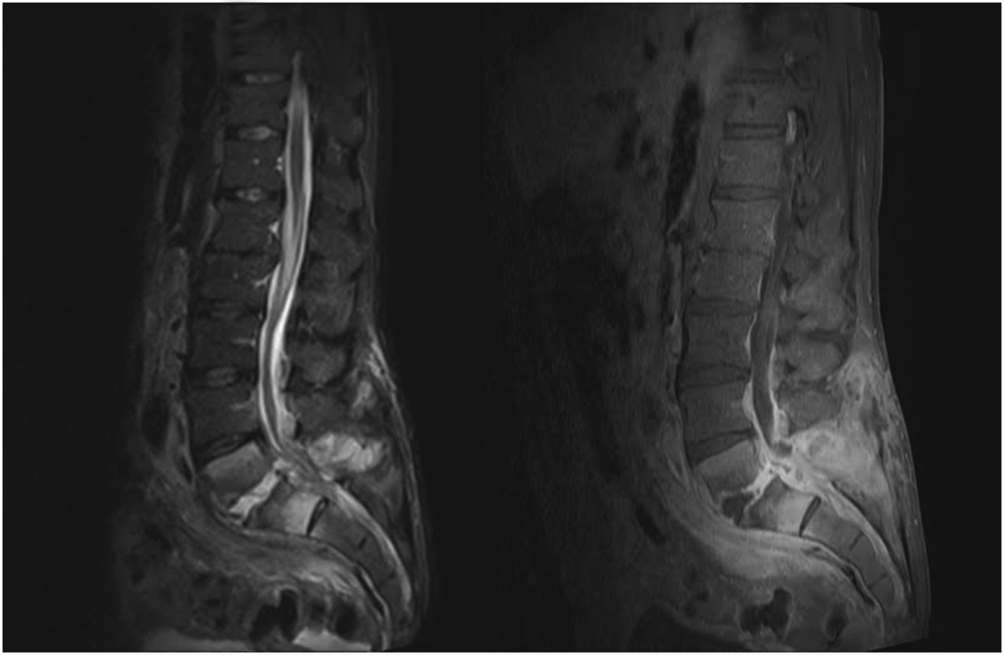

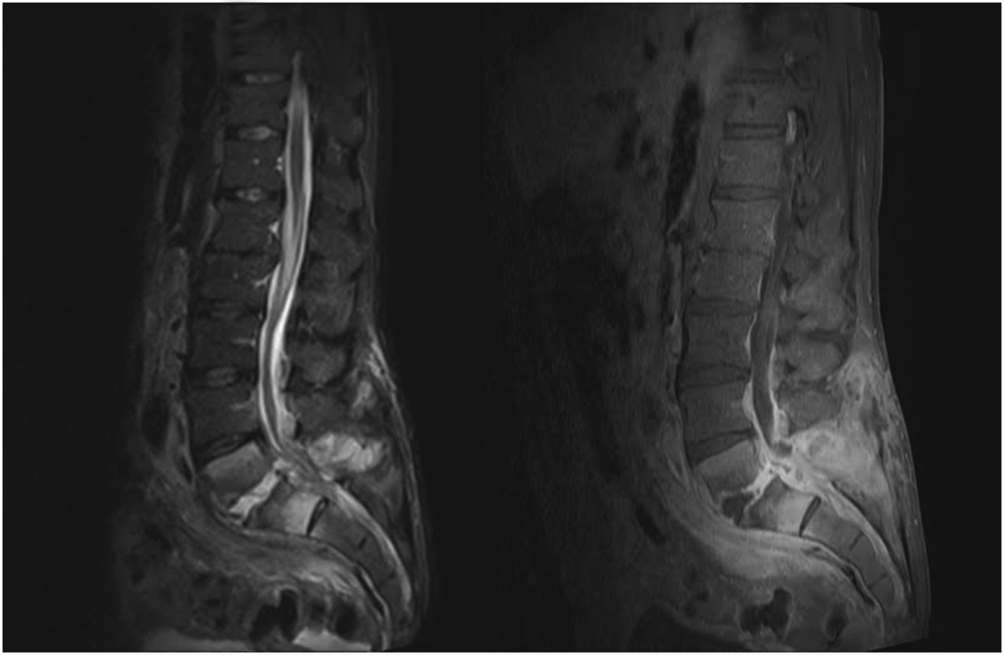

Imaging

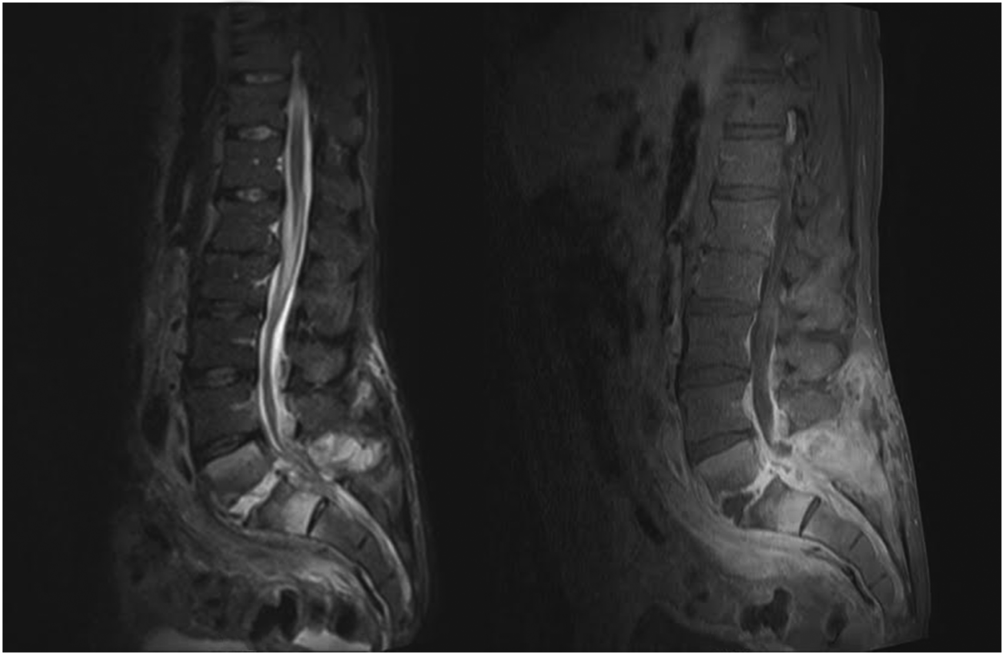

When SEA is suspected on clinical grounds, MRI with gadolinium should be obtained first, as it is 90% sensitive for the diagnosis (Figure 1).[3] In patients unable to undergo MRI (eg, those with a pacemaker), a computed tomography (CT) myelogram should be obtained instead. The study should be performed emergently, and the entire spine should be imaged because of the risk of noncontiguous lesions. Patients with plain radiograph or CT findings of bone lysis suggestive of vertebral osteomyelitis should also be evaluated with MRI when possible.

MRI with contrast can often differentiate SEA from malignancy and other space‐occupying lesions.[28] Plain radiography can help identify other causes of back pain, such as trauma and degenerative disc disease, but it cannot demonstrate SEA. One study found that radiographs showed pathology in only 16.6% of patients with SEA.[29]

Patients with exam findings and laboratory studies concerning for SEA who present to a community hospital without MRI capability should be transferred to a tertiary care center for advanced imaging and potential emergent treatment.

Tissue Culture

Although MRI is essential to the workup of SEA, biopsy with cultures allows a definitive diagnosis.[30] Cultures may be obtained in the operating room or, when available, in the interventional radiology (IR) suite via CT‐guided fine‐needle aspiration or core‐needle biopsy.[30, 31] CT‐guided bone biopsy, which has a sensitivity of 81% and specificity of 100%, may also be performed when vertebral osteomyelitis is present.[32] IR biopsy should be considered before surgical intervention when the patient has no evidence of progressive neurologic deficit, the diagnosis is unclear, or the patient is too high risk for surgery. In very high‐risk surgical patients, IR aspiration may be curative: Lyu et al. describe a case of refractory SEA treated with percutaneous CT‐guided needle aspiration alone, though they note that surgical debridement is preferred when possible.[31]

NONSURGICAL VERSUS SURGICAL MANAGEMENT

SEA may be treated without surgery in carefully selected patients. Savage et al. studied 52 patients and found that nonsurgical management was often effective in patients who were completely neurologically intact at initial presentation; only 3 patients required surgery because of new neurologic symptoms.[33] However, such patients may be a minority, as other studies report neurologic findings in 71% of patients at initial diagnosis.[3]

Adogwa et al. reviewed surgical versus nonsurgical management in patients aged 50 years and older over a 15‐year period.[10] Their study included 30 patients treated operatively and 52 who received antibiotics alone. The decision for surgical management was at the surgeons' discretion; most patients with grade 2 or 3 symptoms underwent surgery, whereas those with paraplegia or quadriplegia for more than 48 hours did not. The authors found no clinically significant difference in outcome between these 2 groups and cautioned against surgical intervention in elderly patients with multiple comorbidities. Notably, however, 7 of 30 (23%) patients treated surgically versus 5 of 52 (10%) treated nonsurgically had improved neurological outcomes (P = 0.03).[10] Numerous other studies have found that neurologic status at presentation is the most important predictor of surgical outcome.[7, 15, 34]

Patel et al. analyzed risk factors for failure of medical management and identified diabetes mellitus, CRP >115 mg/L, WBC count >12.5 × 10^9/L, and positive blood cultures as predictors of failure.[9] Patients with 3 or more of these risk factors required surgery 76.9% of the time, compared with 40.2% of those with 2, 35.4% with 1, and 8.3% with none.[9] The authors also found that patients treated surgically at the outset experienced greater mean improvement than patients who failed nonsurgical treatment and subsequently underwent surgical decompression.[9]
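For illustration only, the risk‐factor count described by Patel et al. can be expressed as a small lookup. This is a hypothetical sketch, not a validated clinical tool: the names `Presentation`, `count_risk_factors`, and `reported_failure_rate` are invented here, the thresholds are the ones quoted for this study, the units (mg/L for CRP, × 10^9/L for WBC) are assumptions, and the highest reported group is treated as "3 or more" because only 4 risk factors exist. The returned value is simply the reported cohort rate, not an individual patient's probability.

```python
from dataclasses import dataclass

# Reported rates of failed nonsurgical management (surgery required),
# keyed by number of risk factors present. Key 3 means "3 or more".
REPORTED_FAILURE_RATE = {0: 0.083, 1: 0.354, 2: 0.402, 3: 0.769}


@dataclass
class Presentation:
    """Hypothetical container for the four reported predictors."""
    diabetes: bool
    crp_mg_per_l: float          # C-reactive protein; unit assumed to be mg/L
    wbc_10e9_per_l: float        # WBC count; unit assumed to be x10^9/L
    positive_blood_culture: bool


def count_risk_factors(p: Presentation) -> int:
    """Count how many of the four predictors are present (strict thresholds)."""
    return sum([
        p.diabetes,
        p.crp_mg_per_l > 115,
        p.wbc_10e9_per_l > 12.5,
        p.positive_blood_culture,
    ])


def reported_failure_rate(p: Presentation) -> float:
    """Look up the published cohort failure rate for this risk-factor count."""
    n = count_risk_factors(p)
    # 3 and 4 risk factors share the "3 or more" group.
    return REPORTED_FAILURE_RATE[min(n, 3)]


if __name__ == "__main__":
    patient = Presentation(diabetes=True, crp_mg_per_l=140.0,
                           wbc_10e9_per_l=10.0, positive_blood_culture=False)
    print(count_risk_factors(patient), reported_failure_rate(patient))
```

Summing a list of booleans keeps each predictor visible on its own line, which makes it easy to check the rule against the published thresholds.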

In a study by Alton et al., 62 patients with cervical epidural abscess were treated with either nonsurgical or surgical management. Indications for surgery included a neurologic deficit at initial presentation or the development of a new deficit during treatment. Twenty‐four patients presented without deficits and underwent nonsurgical management, but only 6 (25%) were treated successfully, defined as stable or improved neurological status following therapy. In contrast, none of the 38 patients who received both IV antibiotics and emergent surgery within 24 hours experienced neurologic deterioration. The 18 patients who failed nonsurgical management underwent surgery after an average of 7 days; they improved following surgery, but less than those who underwent early surgery.[35]

These studies suggest that medical management may be an option for patients who are diagnosed early and present without neurologic deficits, but surgery is the mainstay of treatment for most patients. Additionally, patients whose operative management is delayed often do not recover function as well as those who undergo emergent surgical debridement.[35] Diagnostic delay also carries medicolegal consequences: French et al. found that verdicts against the provider were more frequent when diagnosis was delayed by more than 48 hours, irrespective of the degree of permanent neurologic dysfunction.[36]

TREATMENT AND FOLLOW‐UP

Unless the patient is septic and hemodynamically unstable, antibiotics should be held until a tissue sample is obtained for culture and speciation, either in the IR suite or the operating room. Once cultures have been obtained, broad‐spectrum IV antibiotics should be started, usually vancomycin for gram‐positive coverage combined with a third‐generation cephalosporin or aminoglycoside for gram‐negative coverage, and continued until organism sensitivities are determined and targeted antibiotics can be administered.[3] Antibiotic therapy should continue for 4 to 6 weeks, although 8 weeks or longer may be required in patients with vertebral osteomyelitis and in immunocompromised patients.[19] An infectious disease specialist should be involved to determine the duration of therapy and the timing of transition to oral antibiotics.

As noted earlier, IR biopsy should be considered when a trial of nonsurgical management is planned for a patient with no evidence of neurologic involvement, when the diagnosis is unclear after imaging, or when the patient is too high risk for surgery. Otherwise, surgical management is standard and should involve extensive debridement, with fusion if there is considerable structural compromise of the spinal column. Routine repeat MRI is not recommended after definitive treatment; however, it is useful when recurrence is suspected, as indicated by new fevers or a rising leukocyte count or inflammatory markers, especially CRP. Inflammatory markers should be checked every 1 to 2 weeks to monitor resolution of the infection.
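The tissue‐sampling and antibiotic‐timing rules in the two paragraphs above can be summarized as a small decision function. This is a hypothetical sketch for illustration, not a clinical decision tool: the type and function names are invented here, each indication is reduced to a yes/no flag, and the clinical judgment behind each flag (for example, what counts as "too high risk for surgery") is outside its scope.

```python
from dataclasses import dataclass
from enum import Enum


class SamplingRoute(Enum):
    """Where the tissue sample is obtained (labels paraphrase the text)."""
    IR_BIOPSY = "IR-guided aspiration or biopsy"
    SURGERY = "open surgical biopsy and debridement"


@dataclass
class Findings:
    """Hypothetical yes/no summary of the factors named in the text."""
    neurologic_deficit: bool
    nonsurgical_trial_planned: bool
    diagnosis_unclear_after_imaging: bool
    too_high_risk_for_surgery: bool
    septic_and_unstable: bool


def sampling_route(f: Findings) -> SamplingRoute:
    """IR biopsy if any of the three listed indications applies, else surgery."""
    # First indication: a planned nonsurgical trial in a neurologically intact patient.
    intact_nonsurgical_trial = f.nonsurgical_trial_planned and not f.neurologic_deficit
    if (intact_nonsurgical_trial
            or f.diagnosis_unclear_after_imaging
            or f.too_high_risk_for_surgery):
        return SamplingRoute.IR_BIOPSY
    return SamplingRoute.SURGERY


def antibiotics_before_sampling(f: Findings) -> bool:
    """Antibiotics are held until tissue is obtained unless septic and unstable."""
    return f.septic_and_unstable


if __name__ == "__main__":
    case = Findings(neurologic_deficit=True, nonsurgical_trial_planned=False,
                    diagnosis_unclear_after_imaging=False,
                    too_high_risk_for_surgery=False, septic_and_unstable=False)
    print(sampling_route(case).value, antibiotics_before_sampling(case))
```

Keeping the three IR indications in a single `or` expression mirrors the way the text lists them, so each condition can be checked against the prose one for one.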

CONCLUSION

Spinal epidural abscess is a potentially devastating condition that can be difficult to diagnose. Although uncommon, the triad of axial spine pain, fever, and new‐onset neurologic dysfunction is highly concerning when present. Other factors that increase the likelihood of SEA include a history of diabetes, IV drug abuse, spinal trauma, end‐stage renal disease, immunosuppressant therapy, recent invasive spine procedures, and concurrent infection of the skin or urinary tract. In patients with suspected SEA, inflammatory laboratory studies should be obtained along with gadolinium‐enhanced MRI of the entire spinal axis. Once the diagnosis is established, spine surgery and infectious disease consultation are mandatory, and IR biopsy may be appropriate in some cases. Rapid diagnosis and prompt initiation of treatment are critical to optimizing patient outcome.

Disclosures

Mark A. Palumbo, MD, receives research funding from Globus Medical and is a paid consultant for Stryker. Alan H. Daniels, MD, is a paid consultant for Stryker and Osseous. No funding was obtained in support of this work.

1. The clinical presentation and impact of diagnostic delays on emergency department patients with spinal epidural abscess. J Emerg Med. 2004;26:285–291.

2. Spinal epidural abscess. N Engl J Med. 1975;293:463–468.

3. Spinal epidural abscess: contemporary trends in etiology, evaluation, and management. Surg Neurol. 1999;52:189–196; discussion 197.

4. Spinal epidural abscess. N Engl J Med. 2006;355:2012–2020.

5. Mortality, complication risk, and total charges after the treatment of epidural abscess. Spine J. 2015;15:249–255.

6. Spinal epidural abscess. Curr Infect Dis Rep. 2004;16:436.

7. Spinal epidural abscess: a ten‐year perspective. Neurosurgery. 1980;27:177–184.

8. Spinal epidural abscess: a meta‐analysis of 915 patients. Neurosurg Rev. 2000;23:175–204; discussion 205.

9. Spinal epidural abscesses: risk factors, medical versus surgical management, a retrospective review of 128 cases. Spine J. 2014;14:326–330.

10. Spontaneous spinal epidural abscess in patients 50 years of age and older: a 15‐year institutional perspective and review of the literature: clinical article. J Neurosurg Spine. 2014;20:344–349.

11. Spinal epidural abscesses: clinical manifestations, prognostic factors, and outcomes. Neurosurgery. 2002;51:79–85; discussion 86–87.

12. Infectious agents in spinal epidural abscesses. Neurology. 1980;30:844–850.

13. Cervical epidural abscess after epidural steroid injection. Spine (Phila Pa 1976). 2004;29:E7–E9.

14. Spinal epidural abscesses in adults: review and report of iatrogenic cases. Scand J Infect Dis. 1990;22:249–257.

15. Bacterial spinal epidural abscess. Review of 43 cases and literature survey. Medicine (Baltimore). 1992;71:369–385.

16. Spinal epidural abscess: the importance of early diagnosis and treatment. J Neurol Neurosurg Psychiatry. 1998;65:209–212.

17. Epidural abscesses. Br J Anaesth. 2006;96:292–302.

18. Spinal epidural abscess. Med Clin North Am. 1985;69:375–384.

19. Spinal epidural abscess. J Emerg Med. 2010;39:384–390.

20. Spinal epidural abscess: clinical presentation, management and outcome [comment on Curry WT, Hoh BL, Hanjani SA, et al. Surg Neurol. 2005;63:364–371]. Surg Neurol. 2005;64:279.

21. Routine replacement of tunneled, cuffed, hemodialysis catheters eliminates paraspinal/vertebral infections in patients with catheter‐associated bacteremia. Am J Nephrol. 2003;23:202–207.

22. Paraplegia caused by spinal infection after acupuncture. Spinal Cord. 2006;44:258–259.

23. Spinal epidural abscess after tattooing. Clin Infect Dis. 1999;29:225–226.

24. Nontuberculous spinal epidural infections. N Engl J Med. 1948;239:845–854.

25. Prospective evaluation of a clinical decision guideline to diagnose spinal epidural abscess in patients who present to the emergency department with spine pain. J Neurosurg Spine. 2011;14:765–770.

26. Spinal epidural abscess: clinical presentation, management, and outcome. Surg Neurol. 2005;63:364–371; discussion 371.

27. Bone and joint tuberculosis. Eur Spine J. 2013;22:556–566.

28. Surgical management of spinal epidural abscess: selection of approach based on MRI appearance. J Clin Neurosci. 2004;11:130–133.

29. Infection as a cause of spinal cord compression: a review of 36 spinal epidural abscess cases. Acta Neurochir (Wien). 2000;142:17–23.

30. Spondyloarthropathy from long‐term hemodialysis. Radiology. 1988;167:761–764.

31. Spinal epidural abscess successfully treated with percutaneous, computed tomography‐guided, needle aspiration and parenteral antibiotic therapy: case report and review of the literature. Neurosurgery. 2002;51:509–512; discussion 512.

32. CT‐guided core biopsy of subchondral bone and intervertebral space in suspected spondylodiskitis. AJR Am J Roentgenol. 2006;186:977–980.

33. Spinal epidural abscess: early clinical outcome in patients treated medically. Clin Orthop. 2005;439:56–60.

34. Update on spinal epidural abscess: 35 cases and review of the literature. Rev Infect Dis. 1987;9:265–274.

35. Is there a difference in neurologic outcome in medical versus early operative management of cervical epidural abscesses? Spine J. 2015;15:10–17.

36. Medicolegal cases for spinal epidural hematoma and spinal epidural abscess. Orthopedics. 2013;36:48–53.

37. Spinal epidural abscess—experience with 46 patients and evaluation of prognostic factors. J Infect. 2002;45:76–81.

Spinal epidural abscess (SEA) is caused by a suppurative infection in the epidural space. The mass effect of the abscess can compress and reduce blood flow to the spinal cord, conus medullaris, or cauda equina. Left untreated, the infection can lead to sensory loss, muscle weakness, visceral dysfunction, sepsis, and even death. Early diagnosis is essential to limit morbidity and neurologic injury. The classic triad of fever, axial pain, and neurological deficit occurs in as few as 13% of patients, highlighting the diagnostic challenge associated with SEA.[1]

This investigation reviews the current literature on SEA epidemiology, clinical findings, laboratory data, and treatment methods, with particular focus on nonsurgical versus surgical treatment. Our primary objective was to educate the clinician on when to suspect SEA and how to execute an appropriate diagnostic evaluation.

INCIDENCE AND EPIDEMIOLOGY

In 1975, the incidence of SEA was reported to be 0.2 to 2.0 per 10,000 hospital admissions.[2] Two decades later, a study of tertiary referral centers documented a much higher rate of 12.5 per 10,000 admissions.[3] The reported incidence continues to rise and has doubled in the past decade, with SEA currently representing approximately 10% of all primary spine infections.[4, 5] Potential explanations for this increasing incidence include aging of the population, an increasing prevalence of diabetes, increasing intravenous drug abuse, more widespread use of advanced immunosuppressive regimens, and an increased rate of invasive spinal procedures.[6, 7, 8] Other factors contributing to the rising incidence include increased detection due to the greater accessibility of magnetic resonance imaging (MRI) and increased reporting as a result of the concentration of cases at tertiary referral centers.

SEA is most common in patients older than 60 years[7] and those with multiple medical comorbidities. A review of over 30,000 patients found that the average number of comorbidities in patients who underwent surgical intervention for SEA was 6, ranging from 0 to 20.[5] The same study noted that diabetes was the most frequently associated disease (30% of patients), followed by chronic lung disease (19%), renal failure (13%), and obesity (13%) (Table 1).[5] A history of invasive spine interventions is an additional risk factor; between 14% and 22% occur as a result of spine surgery or percutaneous spine procedures (eg, epidural steroid injections).[8, 9] Regardless of pathogenesis, the rate of permanent neurologic injury after SEA is 30% to 50%, and the mortality rate ranges from 10% to 20%.[4, 5, 8, 10]

| Medical Comorbidity | Prevalence (%) |

|---|---|

| |

| Diabetes mellitus | 1546 |

| IV drug use | 437 |

| Spinal trauma | 533 |

| End‐stage renal disease | 213 |

| Immunosuppressant therapy | 716 |

| Cancer | 215 |

| HIV/AIDS | 29 |

MISSED DIAGNOSIS

Despite the availability of advanced imaging, rates of misdiagnosis at initial presentation remain substantial, with current estimates ranging from 11% to 75%.[4, 9] Back and neck pain symptoms are ubiquitous and nonspecific, often making the diagnosis difficult. Repeated emergency room visits for pain are common in patients who are eventually diagnosed with SEA. Davis et al. found that 51% of 63 patients present to the emergency room at least 2 or more times prior to diagnosis; 11% present 3 or more times.[1]

PATHOPHYSIOLOGY

Microbiology

Staphylococcus aureus, including methicillin resistant S aureus (MRSA), accounts for two‐thirds of all infections.[3] S aureus infection of the spine may occur in the setting of surgical intervention or concomitant skin infection, although it often occurs without an identifiable source. Historically, MRSA has been reported to be responsible for 15% of all staphylococcal infections of the epidural space; some institutions report MRSA rates as high as 40%.[4] S epidermidis is another common pathogen, which is most often encountered following spinal surgery, epidural catheter insertion, and spinal injections.[11] Gram‐negative infections are less common. Escherichia coli is characteristically isolated in patients with active urinary tract infections, and Pseudomonas aeruginosa is more common in intravenous (IV) drug users.[12] Rare causes of SEA include anaerobes such as Bacteroides,[13] various parasites, and fungal organisms such as actinomyces, nocardia, and mycobacteria.[8]

Mechanism of Inoculation

Infections may enter the epidural space by 4 mechanisms: hematogenous spread, direct extension, inoculation via spinal procedure, and trauma. Hematogenous spread from an existing infection is the most common mechanism.[14] Seeding of the epidural space from transient bacteremia after dental procedures has also been reported.[13]

The second most common mechanism of infection is direct spread from an infected component of the vertebral column or paraspinal soft tissues. Most commonly, this takes the form of an anterior SEA in association with vertebral body osteomyelitis. Septic arthritis of a facet joint can also cause a secondary infection of the posterior epidural space.[4, 15] Direct spread from other posterior structures (eg, retropharyngeal, psoas, or paraspinal muscle abscess) may cause SEA as well.[16]

Less‐frequent mechanisms include direct inoculation and trauma. Infection of the epidural space can occur in association with spinal surgery, placement of an epidural catheter, or spinal injections. Grewal et al. reported in 2006 that 1 in 1000 surgical and 1 in 2000 obstetric patients develop SEA following epidural nerve block.[17] Hematoma secondary to an osseous or ligamentous injury can become seeded by bacteria, leading to abscess formation.[18]

Development of Neurologic Symptoms

There are several proposed mechanisms by which SEA can produce neurologic dysfunction. The first theory is that the compressive effect of an expanding abscess decreases blood flow to the neuronal tissue.[4] Improvement of neurologic function following surgical decompression lends credence to this theory. A second potential mechanism is a loss of blood flow due to local vascular inflammation from the infection. Local arteritis may decrease inflow to the cord parenchyma. This theoretical mechanism offers an explanation for the rapid onset of profound neurologic compromise in some cases.[19, 20] Infection can also result in venous thrombophlebitis, which produces ischemic injury due to impaired outflow. Postmortem examination supports this hypothesis; autopsy has revealed local thrombosis of the leptomeningeal vessels adjacent to the level of SEA.[4] All of these mechanisms are probably involved to some degree in any given case of neurologic compromise.

PATIENT HISTORY

Patients present with a wide variety of complaints and confounding variables that complicate the diagnosis (eg, medical comorbidities, psychiatric disease, chronic pain, dementia, or preexistent nonambulatory status). Ninety‐five percent of patients with SEA have a chief complaint of axial spinal pain.[1] Approximately half of patients report fever, and 47% complain of weakness in either the upper or lower extremities.[9] The classic triad of fever, spine pain, and neurological deficits presents in only a minority of patients, with rates ranging from 13% to 37%.[1, 3] Additionally, 1 study found that the sensitivity of this triad was a mere 8%.[1]

The physician should inquire about comorbid conditions associated with SEA, including diabetes, kidney disease, and a history of drug use. Any recent infection at a remote site, such as cellulitis or urinary tract infection, should also be investigated. As many as 44% of patients with vertebral osteomyelitis have an associated SEA; conversely, osteomyelitis may be present in up to 80% of patients with SEA.[2, 13] Spinal procedures such as epidural[13] or facet joint injections,[14] as well as placement of hemodialysis catheters,[21] acupuncture,[22] and tattoos,[23] have also been implicated as risk factors.

PHYSICAL EXAM

Physical exam findings range from subtle back tenderness to severe neurologic deficits and complete paralysis. Spinal tenderness is elicited in up to 75% of patients, with equal rates of focal and diffuse back tenderness.[1, 4] Radicular symptoms are evident in 12% to 47% of patients, weakness is identified in 26% to 60%, and altered sensation is present in up to 67%.[1, 4, 8, 11, 19] One study found that 71% of patients had an abnormal neurologic exam at presentation, including paresthesias (39%), motor weakness (39%), and loss of bladder and bowel control (27%).[3] A thorough neurologic exam is therefore vital in the evaluation of SEA.

Heusner staged patients based on their clinical findings (Table 2). Because the evolution of symptoms can be variable and rapidly progressive[4, 24] to stage 3 or 4, documenting subtle abnormalities on initial exam and monitoring for changes are important. Many patients initially present in stage 1 or 2 but remain undiagnosed until progression to stage 3 or 4.[24]

| Stage | Symptoms |
|---|---|
| I | Back pain |
| II | Radiculopathy, neck pain, reflex changes |
| III | Paresthesia, weakness, bladder symptoms |
| IV | Paralysis |
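Because the stage is driven by the most advanced finding present, serial exams can be recorded in a structured way so that progression from one stage to the next is easy to spot. The Python sketch below assumes that a patient's stage equals the highest stage of any documented finding; the finding labels and the `heusner_stage` and `has_progressed` functions are hypothetical names introduced for illustration, not part of Heusner's original description.

```python
from __future__ import annotations

from enum import IntEnum


class HeusnerStage(IntEnum):
    I = 1    # back pain
    II = 2   # radiculopathy, neck pain, reflex changes
    III = 3  # paresthesia, weakness, bladder symptoms
    IV = 4   # paralysis


# Hypothetical labels for documented findings, keyed to the stage in the table.
FINDING_STAGE = {
    "back_pain": HeusnerStage.I,
    "radiculopathy": HeusnerStage.II,
    "neck_pain": HeusnerStage.II,
    "reflex_changes": HeusnerStage.II,
    "paresthesia": HeusnerStage.III,
    "weakness": HeusnerStage.III,
    "bladder_symptoms": HeusnerStage.III,
    "paralysis": HeusnerStage.IV,
}


def heusner_stage(findings: set[str]) -> HeusnerStage | None:
    """Most advanced stage supported by the findings; unknown labels are ignored."""
    stages = [FINDING_STAGE[f] for f in findings if f in FINDING_STAGE]
    return max(stages) if stages else None


def has_progressed(earlier: set[str], later: set[str]) -> bool:
    """True if the later exam maps to a higher stage than the earlier exam."""
    before, after = heusner_stage(earlier), heusner_stage(later)
    return after is not None and (before is None or after > before)


stage = heusner_stage({"back_pain", "reflex_changes"})
print(stage.name if stage else "no stage")
```

For example, an initial exam documenting only back pain (stage I) followed by an exam with new bladder symptoms (stage III) makes `has_progressed` return `True`, which is the kind of change the text recommends monitoring for.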

DIAGNOSTIC WORKUP

Laboratory Testing

Routine tests should include a white blood cell (WBC) count, erythrocyte sedimentation rate (ESR), and C‐reactive protein (CRP). ESR has been shown to be the most sensitive and specific marker of SEA (Table 3). In a study of 63 patients matched with 126 controls, ESR was greater than 20 mm/h in 98% of cases and in only 21% of controls.[1] Another study of 55 patients found the sensitivity and specificity of ESR to be 100% and 67%, respectively.[25] WBC count is less reliable: leukocytosis is present in only approximately two‐thirds of patients.[1, 25] CRP rises faster than ESR in the setting of inflammation and also returns to baseline faster. As such, CRP is useful for monitoring the response to treatment.[1, 19]
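The ESR figures above can be converted into likelihood ratios, which do not depend on the 1:2 case-control ratio of the study. The Python sketch below uses the standard definitions LR+ = sensitivity / (1 - specificity) and LR- = (1 - sensitivity) / specificity, taking specificity as 1 - 0.21 from the control group. The 5% pre-test probability is a hypothetical value chosen only for illustration; it is not reported in the source.

```python
from __future__ import annotations


def likelihood_ratios(sensitivity: float, specificity: float) -> tuple[float, float]:
    """Return (LR+, LR-) for a dichotomous test."""
    lr_pos = sensitivity / (1 - specificity) if specificity < 1 else float("inf")
    lr_neg = (1 - sensitivity) / specificity
    return lr_pos, lr_neg


def post_test_probability(pre_test: float, lr: float) -> float:
    """Bayes' theorem in odds form: post-test odds = pre-test odds x LR."""
    pre_odds = pre_test / (1 - pre_test)
    post_odds = pre_odds * lr
    return post_odds / (1 + post_odds)


# ESR > 20 mm/h in 98% of cases and 21% of controls [1].
sens, spec = 0.98, 1 - 0.21
lr_pos, lr_neg = likelihood_ratios(sens, spec)
print(f"LR+ = {lr_pos:.2f}, LR- = {lr_neg:.3f}")

# Hypothetical pre-test probability of 5%, for illustration only.
pre = 0.05
print(f"Post-test probability if ESR elevated: {post_test_probability(pre, lr_pos):.1%}")
print(f"Post-test probability if ESR normal:   {post_test_probability(pre, lr_neg):.2%}")
```

With the second study's values (sensitivity 100%, specificity 67%),[25] the same function gives an LR+ of about 3.0 and an LR- of 0. A low LR- is what makes a normal ESR reassuring, though a single small cohort cannot by itself establish that a normal value rules out SEA.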

| Step | Recommendation |
|---|---|
| 1. Patient assessment | Evaluate for symptom triad (back pain, fever, neurologic dysfunction). Assess for common comorbidities (diabetes, IV drug abuse, spinal trauma, ESRD, immunosuppressant therapy, recent spine procedure, systemic or local infection). |
| 2. Laboratory evaluation | Essential: ESR (most sensitive and specific), CRP, WBC count, blood cultures, UA. As indicated: echocardiogram, TB evaluation. No role: lumbar puncture. |
| 3. Imaging | Gold standard: MRI with gadolinium (90% sensitive). If MRI contraindicated: CT myelogram. |
| 4. Obtain tissue sample | Gold standard: open surgical biopsy and debridement ± fixation. If patient unstable, diagnosis indeterminate, or no neurologic symptoms: IR‐guided biopsy before surgery. |
| 5. Antibiotics (once tissue sample obtained) | Empiric vancomycin plus third‐generation cephalosporin or aminoglycoside. Vancomycin monotherapy acceptable only in patients with early diagnosis and no neurologic symptoms. |
| 6. Surgical management (only if not done as step 4) | Early surgical consult recommended. Pursue surgical intervention if the patient is stable for the OR and neurologic symptoms are progressing or the abscess is refractory to antibiotics. Best outcomes occur with combined antibiotics and surgical debridement. |
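Because the six steps above form a decision sequence, they can be expressed as a small rule-based function. The Python sketch below is illustrative only: the `Presentation` fields and the `workup_plan` function are hypothetical, the rules are simplified from the table, and the code is not a validated clinical decision tool.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Presentation:
    """Hypothetical, simplified inputs; not a validated clinical data model."""
    mri_contraindicated: bool = False
    neuro_symptoms: bool = False
    neuro_progressing: bool = False
    stable_for_or: bool = True
    diagnosis_indeterminate: bool = False
    echo_indicated: bool = False  # eg, bacteremia, new murmur, IV drug abuse
    tb_risk: bool = False
    refractory_to_antibiotics: bool = False


def workup_plan(p: Presentation) -> list[str]:
    """Return the actions from the six-step table, in order."""
    # Step 1: patient assessment.
    plan = ["Assess symptom triad and comorbidities"]
    # Step 2: essential labs, then studies ordered only when indicated.
    plan.append("ESR, CRP, WBC count, blood cultures, UA")
    if p.echo_indicated:
        plan.append("Echocardiogram")
    if p.tb_risk:
        plan.append("TB evaluation")
    # Lumbar puncture is intentionally never added (no role).
    # Step 3: imaging of the entire spine.
    plan.append("CT myelogram" if p.mri_contraindicated else "MRI with gadolinium")
    # Step 4: tissue sample; IR-guided biopsy first in the listed situations.
    ir_first = (not p.stable_for_or) or p.diagnosis_indeterminate or not p.neuro_symptoms
    plan.append("IR-guided biopsy" if ir_first else "Open surgical biopsy and debridement")
    # Step 5: empiric antibiotics only after tissue has been obtained.
    plan.append("Vancomycin plus third-generation cephalosporin or aminoglycoside")
    # Step 6: surgery, only if it was not already done in step 4.
    if ir_first and p.stable_for_or and (p.neuro_progressing or p.refractory_to_antibiotics):
        plan.append("Surgical debridement")
    return plan


print(workup_plan(Presentation(neuro_symptoms=True)))
```

In this sketch a stable patient with neurologic symptoms and a clear diagnosis goes straight to open biopsy and debridement in step 4, so step 6 is skipped, mirroring the table's "only if not done as step 4" note.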

Blood cultures are important to obtain at initial evaluation. Bacteremia from the offending organism may be present in up to 60% of cases; 60% to 90% of cases of bacteremia are caused by MRSA.[26] Urine culture should also be obtained in all patients. Sputum cultures should not be obtained routinely, but they may be beneficial in select patients with a history of chronic obstructive pulmonary disease, a new cough, or an abnormality on chest radiographs. Transthoracic or transesophageal echocardiography may be recommended for patients who are bacteremic, have a new heart murmur, or have a history of IV drug abuse.

In patients at risk for tuberculosis, a tuberculin skin test with purified protein derivative (PPD) can help rule out this organism. However, false‐negative results may occur in up to 15% of patients, particularly those who are immunocompromised.[27] Patients with suspected false‐positive results or prior vaccination with bacille Calmette‐Guérin (BCG) can be tested with the QuantiFERON‐TB Gold assay. These time‐consuming and expensive tests should not delay empiric treatment if tuberculosis is suspected on the basis of patient risk factors or exposure, though an infectious disease specialist should be consulted before treatment for tuberculosis is initiated.

Lumbar puncture should not be performed routinely in cases of suspected SEA, primarily due to the risk of bacterial contamination of the cerebrospinal fluid (CSF). Additionally, the increased protein and inflammatory cells seen in the CSF are nonspecific markers of parameningeal inflammation.[15]

Imaging

When SEA is suspected on clinical grounds, MRI with gadolinium should be obtained first, as it is 90% sensitive for diagnosing SEA (Figure 1).[3] In patients unable to undergo MRI (eg, those with a pacemaker), a computed tomography (CT) myelogram should be obtained instead. The study should be performed emergently, and the entire spine should be imaged because of the risk of noncontiguous lesions. Patients with plain radiograph and/or CT findings of bone lysis suggestive of vertebral osteomyelitis should also be evaluated with MRI when possible.

MRI with contrast can often differentiate SEA from malignancy and other space‐occupying lesions.[28] Plain radiography can rule out other causes of back pain, such as trauma and degenerative disc disease, but it cannot demonstrate SEA. One study found that x‐rays showed pathology in only 16.6% of patients with SEA.[29]

Patients with exam findings and laboratory studies concerning for SEA who present to a community hospital without MRI capability should be transferred to a tertiary care center for advanced imaging and potential emergent treatment.

Tissue Culture

Though MRI is essential to the workup of SEA, biopsy with cultures allows for a definitive diagnosis.[30] Cultures may be obtained in the operating room or in the interventional radiology (IR) suite via fine‐needle aspiration or core‐needle biopsy under CT guidance, if available.[30, 31] CT‐guided bone biopsy, which has a sensitivity of 81% and specificity of 100%, may also be performed when vertebral osteomyelitis is present.[32] Biopsy by IR should be considered before surgical intervention when the patient has no evidence of progressive neurologic deficit, when the diagnosis is unclear, or when the patient is too high risk for surgery. In very high‐risk surgical patients, IR aspiration may be curative. Lyu et al. describe a case of refractory SEA treated with percutaneous CT‐guided needle aspiration alone, though they note that surgical debridement is preferred when possible.[31]

NONSURGICAL VERSUS SURGICAL MANAGEMENT

SEA may be treated without surgery in carefully selected patients. Savage et al. studied 52 patients and found that nonsurgical management was often effective in patients who were completely neurologically intact at initial presentation. In their study, only 3 patients needed surgery because of new neurologic symptoms.[33] However, other studies report neurologic symptoms in 71% of patients at initial diagnosis.[3]

Adogwa et al. reviewed surgical versus nonsurgical management in patients older than 50 years over a 15‐year period.[10] Their study included 30 patients treated operatively and 52 who received antibiotics alone. The decision for surgical management was at the surgeons' discretion; however, most patients with grade 2 or 3 symptoms underwent surgery, and those with paraplegia or quadriplegia for >48 hours did not. The authors found no clinically significant difference in outcome between the 2 groups and cautioned against surgical intervention for elderly patients with multiple comorbidities. It is worth noting, however, that 7/30 (23%) patients treated surgically versus 5/52 (10%) treated nonsurgically had improved neurological outcomes (P = 0.03).[10] Numerous other studies have found that neurologic status at presentation is the most important predictor of surgical outcome.[7, 15, 34]

Patel et al. analyzed risk factors for failure of medical management and found that diabetes mellitus, CRP >115, WBC >12.5, and positive blood cultures were predictors of failure.[9] Patients with 3 or more of these risk factors required surgery 76.9% of the time, compared with 40.2% of those with 2, 35.4% of those with 1, and 8.3% of those with none.[9] The authors also found that patients treated surgically from the outset had greater mean improvement than patients who failed nonsurgical treatment and subsequently underwent surgical decompression.[9]
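Patel et al.'s predictors lend themselves to a simple count-and-look-up calculation. In this Python sketch, the thresholds come from the text, while the units (CRP in mg/L, WBC count in 10^9/L), the function names, and the example patient are assumptions made for illustration. Because there are only four predictors, the top category is treated here as three or more factors.

```python
from __future__ import annotations

# Proportion of patients requiring surgery by number of risk factors, as
# reported by Patel et al. [9]. Key 3 is assumed to mean "3 or more".
OBSERVED_SURGERY_RATE = {0: 0.083, 1: 0.354, 2: 0.402, 3: 0.769}


def count_risk_factors(diabetes: bool, crp: float, wbc: float,
                       positive_blood_culture: bool) -> int:
    """Count predictors of failed medical management.

    Assumed units (not stated in the text): CRP in mg/L, WBC in 10^9/L.
    """
    return sum([diabetes, crp > 115, wbc > 12.5, positive_blood_culture])


def observed_surgery_rate(n_factors: int) -> float:
    """Look up the reported rate, capping the count at the top category."""
    return OBSERVED_SURGERY_RATE[min(n_factors, 3)]


# Hypothetical patient: diabetic, CRP 140, WBC 11.0, positive blood cultures.
n = count_risk_factors(diabetes=True, crp=140.0, wbc=11.0, positive_blood_culture=True)
print(n, observed_surgery_rate(n))
```

The returned value is the proportion of patients in that cohort who went on to need surgery, not an individualized probability for the patient being evaluated.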

In a follow‐up study by Alton et al., 62 patients were treated with either nonsurgical or surgical management. Indications for surgery included a neurologic deficit at initial presentation or the development of a new neurologic deficit during treatment. Twenty‐four patients presented without deficits and underwent nonsurgical management, but only 6 (25%) were treated successfully, with success defined as stable or improved neurologic status following therapy. In contrast, none of the 38 patients treated with both IV antibiotics and emergent surgery within 24 hours experienced neurologic deterioration. In the 18 patients who failed nonsurgical management, surgery was performed after an average of 7 days. This group improved after surgery, but less than the patients who underwent early surgery.[35]

These recent studies demonstrate that medical management may be an option for patients who are diagnosed early and present without neurologic deficits, but surgery is the mainstay of treatment for most patients. Additionally, patients whose operative management is delayed often do not recover function as well as those who undergo emergent surgical debridement.[35] Delayed diagnosis has also been associated with an increase in lawsuits against providers. French et al. found that verdicts against the provider were more common when diagnosis was delayed by more than 48 hours, irrespective of the degree of permanent neurologic dysfunction.[36]

TREATMENT AND FOLLOW‐UP

Unless the patient is septic and hemodynamically unstable, antibiotics should be held until a tissue sample is obtained for speciation and culture, either in the IR suite or in the operating room. Once cultures have been obtained, broad‐spectrum IV antibiotics should be started, usually vancomycin for gram‐positive coverage combined with a third‐generation cephalosporin or aminoglycoside for gram‐negative coverage, and continued until organism sensitivities are determined and targeted antibiotics can be administered.[3] Antibiotic therapy should continue for 4 to 6 weeks, though it may be extended to 8 weeks or longer in patients with vertebral osteomyelitis or immunocompromise.[19] An infectious disease specialist should be involved to determine the duration of therapy and the timing of transition to oral antibiotics.

As noted earlier, biopsy by IR should be considered when a trial of nonsurgical management is planned for a patient with no evidence of neurologic involvement, when the diagnosis is unclear after imaging, or when the patient is too high risk for surgery. Otherwise, surgical management is standard and should involve extensive debridement, with possible fusion if there is considerable structural compromise of the spinal column. After definitive treatment, routine repeat MRI is not recommended; however, it is useful if there is concern for recurrence, as indicated by new fevers or a rising leukocyte count or inflammatory markers, especially CRP. Inflammatory labs should be obtained every 1 to 2 weeks to monitor for resolution of the infection.
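The follow‐up recommendations can be sketched as a lab schedule plus a simple trend check. This Python example assumes a 6‐week antibiotic course with labs every 2 weeks (both within the ranges given above) and treats any rise between the two most recent values as a signal; the text gives no numeric threshold, so that rule, the function names, and the example values are hypothetical.

```python
from __future__ import annotations

from datetime import date, timedelta


def lab_schedule(start: date, course_weeks: int = 6, interval_weeks: int = 2) -> list[date]:
    """Dates for inflammatory labs every `interval_weeks` through the antibiotic course."""
    return [start + timedelta(weeks=w)
            for w in range(interval_weeks, course_weeks + 1, interval_weeks)]


def is_rising(values: list[float]) -> bool:
    """True if the most recent value is higher than the one before it."""
    return len(values) >= 2 and values[-1] > values[-2]


def concern_for_recurrence(new_fever: bool, crp: list[float], wbc: list[float]) -> bool:
    """Hypothetical trigger for considering repeat MRI: new fever or a rising CRP or WBC."""
    return new_fever or is_rising(crp) or is_rising(wbc)


# Illustrative values only: CRP falls and then rises again.
print(lab_schedule(date(2024, 1, 1)))
print(concern_for_recurrence(False, crp=[120.0, 45.0, 60.0], wbc=[14.0, 9.0, 8.5]))
```

A single rise can reflect laboratory variation, so in practice such a flag would prompt clinical reassessment rather than trigger imaging automatically.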

CONCLUSION

Spinal epidural abscess is a potentially devastating condition that can be difficult to diagnose. Although uncommon, the triad of axial spine pain, fever, and new‐onset neurologic dysfunction should raise concern for SEA. Other factors that increase the likelihood of SEA include diabetes, IV drug abuse, spinal trauma, end‐stage renal disease, immunosuppressant therapy, recent invasive spine procedures, and concurrent infection of the skin or urinary tract. In patients with suspected SEA, inflammatory laboratory studies should be obtained along with gadolinium‐enhanced MRI of the entire spinal axis. Once the diagnosis is established, spine surgery and infectious disease consultations are mandatory, and IR biopsy may be appropriate in some cases. Rapid diagnosis and prompt initiation of treatment are critical to optimizing patient outcome.

Disclosures

Mark A. Palumbo, MD, receives research funding from Globus Medical and is a paid consultant for Stryker. Alan H. Daniels, MD, is a paid consultant for Stryker and Osseous. No funding was obtained in support of this work.

Spinal epidural abscess (SEA) is caused by a suppurative infection in the epidural space. The mass effect of the abscess can compress and reduce blood flow to the spinal cord, conus medullaris, or cauda equina. Left untreated, the infection can lead to sensory loss, muscle weakness, visceral dysfunction, sepsis, and even death. Early diagnosis is essential to limit morbidity and neurologic injury. The classic triad of fever, axial pain, and neurological deficit occurs in as few as 13% of patients, highlighting the diagnostic challenge associated with SEA.[1]

This investigation reviews the current literature on SEA epidemiology, clinical findings, laboratory data, and treatment methods, with particular focus on nonsurgical versus surgical treatment. Our primary objective was to educate the clinician on when to suspect SEA and how to execute an appropriate diagnostic evaluation.

INCIDENCE AND EPIDEMIOLOGY

In 1975, the incidence of SEA was reported to be 0.2 to 2.0 per 10,000 hospital admissions.[2] Two decades later, a study of tertiary referral centers documented a much higher rate of 12.5 per 10,000 admissions.[3] The reported incidence continues to rise and has doubled in the past decade, with SEA currently representing approximately 10% of all primary spine infections.[4, 5] Potential explanations for this increasing incidence include aging of the population, an increasing prevalence of diabetes, increasing intravenous drug abuse, more widespread use of advanced immunosuppressive regimens, and an increased rate of invasive spinal procedures.[6, 7, 8] Other factors contributing to the rising incidence include increased detection due to the greater accessibility of magnetic resonance imaging (MRI) and increased reporting as a result of the concentration of cases at tertiary referral centers.

SEA is most common in patients older than 60 years[7] and those with multiple medical comorbidities. A review of over 30,000 patients found that the average number of comorbidities in patients who underwent surgical intervention for SEA was 6, ranging from 0 to 20.[5] The same study noted that diabetes was the most frequently associated disease (30% of patients), followed by chronic lung disease (19%), renal failure (13%), and obesity (13%) (Table 1).[5] A history of invasive spine interventions is an additional risk factor; between 14% and 22% occur as a result of spine surgery or percutaneous spine procedures (eg, epidural steroid injections).[8, 9] Regardless of pathogenesis, the rate of permanent neurologic injury after SEA is 30% to 50%, and the mortality rate ranges from 10% to 20%.[4, 5, 8, 10]

| Medical Comorbidity | Prevalence (%) |

|---|---|

| |

| Diabetes mellitus | 1546 |

| IV drug use | 437 |

| Spinal trauma | 533 |

| End‐stage renal disease | 213 |

| Immunosuppressant therapy | 716 |

| Cancer | 215 |

| HIV/AIDS | 29 |

MISSED DIAGNOSIS

Despite the availability of advanced imaging, rates of misdiagnosis at initial presentation remain substantial, with current estimates ranging from 11% to 75%.[4, 9] Back and neck pain symptoms are ubiquitous and nonspecific, often making the diagnosis difficult. Repeated emergency room visits for pain are common in patients who are eventually diagnosed with SEA. Davis et al. found that 51% of 63 patients present to the emergency room at least 2 or more times prior to diagnosis; 11% present 3 or more times.[1]

PATHOPHYSIOLOGY

Microbiology

Staphylococcus aureus, including methicillin resistant S aureus (MRSA), accounts for two‐thirds of all infections.[3] S aureus infection of the spine may occur in the setting of surgical intervention or concomitant skin infection, although it often occurs without an identifiable source. Historically, MRSA has been reported to be responsible for 15% of all staphylococcal infections of the epidural space; some institutions report MRSA rates as high as 40%.[4] S epidermidis is another common pathogen, which is most often encountered following spinal surgery, epidural catheter insertion, and spinal injections.[11] Gram‐negative infections are less common. Escherichia coli is characteristically isolated in patients with active urinary tract infections, and Pseudomonas aeruginosa is more common in intravenous (IV) drug users.[12] Rare causes of SEA include anaerobes such as Bacteroides,[13] various parasites, and fungal organisms such as actinomyces, nocardia, and mycobacteria.[8]

Mechanism of Inoculation

Infections may enter the epidural space by 4 mechanisms: hematogenous spread, direct extension, inoculation via spinal procedure, and trauma. Hematogenous spread from an existing infection is the most common mechanism.[14] Seeding of the epidural space from transient bacteremia after dental procedures has also been reported.[13]

The second most common mechanism of infection is direct spread from an infected component of the vertebral column or paraspinal soft tissues. Most commonly, this takes the form of an anterior SEA in association with vertebral body osteomyelitis. Septic arthritis of a facet joint can also cause a secondary infection of the posterior epidural space.[4, 15] Direct spread from other posterior structures (eg, retropharyngeal, psoas, or paraspinal muscle abscess) may cause SEA as well.[16]

Less‐frequent mechanisms include direct inoculation and trauma. Infection of the epidural space can occur in association with spinal surgery, placement of an epidural catheter, or spinal injections. Grewal et al. reported in 2006 that 1 in 1000 surgical and 1 in 2000 obstetric patients develop SEA following epidural nerve block.[17] Hematoma secondary to an osseous or ligamentous injury can become seeded by bacteria, leading to abscess formation.[18]

Development of Neurologic Symptoms

There are several proposed mechanisms by which SEA can produce neurologic dysfunction. The first theory is that the compressive effect of an expanding abscess decreases blood flow to the neuronal tissue.[4] Improvement of neurologic function following surgical decompression lends credence to this theory. A second potential mechanism is a loss of blood flow due to local vascular inflammation from the infection. Local arteritis may decrease inflow to the cord parenchyma. This theoretical mechanism offers an explanation for the rapid onset of profound neurologic compromise in some cases.[19, 20] Infection can also result in venous thrombophlebitis, which produces ischemic injury due to impaired outflow. Postmortem examination supports this hypothesis; autopsy has revealed local thrombosis of the leptomeningeal vessels adjacent to the level of SEA.[4] All of these mechanisms are probably involved to some degree in any given case of neurologic compromise.

PATIENT HISTORY

Patients present with a wide variety of complaints and confounding variables that complicate the diagnosis (eg, medical comorbidities, psychiatric disease, chronic pain, dementia, or preexistent nonambulatory status). Ninety‐five percent of patients with SEA have a chief complaint of axial spinal pain.[1] Approximately half of patients report fever, and 47% complain of weakness in either the upper or lower extremities.[9] The classic triad of fever, spine pain, and neurological deficits presents in only a minority of patients, with rates ranging from 13% to 37%.[1, 3] Additionally, 1 study found that the sensitivity of this triad was a mere 8%.[1]

The physician should inquire about comorbid conditions associated with SEA, including diabetes, kidney disease, and history of drug use. Any recent infection at a remote site, such as cellulitis or urinary tract infection, should also be investigated. As many as 44% of patients with vertebral osteomyelitis have an associated SEA; conversely, osteomyelitis may be present in up to 80% of patients with SEA.[2, 13] Spinal procedures such as epidural[13] or facet joint injections,[14] placement of hemodialysis catheters,[21] acupuncture,[22] and tattoos[23] have also been implicated as risk factors.

PHYSICAL EXAM

Physical exam findings range from subtle back tenderness to severe neurologic deficits and complete paralysis. Spinal tenderness is elicited in up to 75% of patients, with equal rates of focal and diffuse back tenderness.[1, 4] Radicular symptoms are evident in 12% to 47% of patients, presenting as weakness identified in 26% to 60% and altered sensation in up to 67% of patients.[1, 4, 8, 11, 19] One study revealed that 71% of patients have an abnormal neurologic exam at presentation, including paresthesias (39%), motor weakness (39%), and loss of bladder and bowel control (27%).[3] Thus, a thorough neurologic exam is vital in SEA evaluation.

Heusner staged patients based on their clinical findings (Table 2). Because the evolution of symptoms can be variable and rapidly progressive[4, 24] to stage 3 or 4, documenting subtle abnormalities on initial exam and monitoring for changes are important. Many patients initially present in stage 1 or 2 but remain undiagnosed until progression to stage 3 or 4.[24]

| Stage | Symptoms |

|---|---|

| I | Back pain |

| II | Radiculopathy, neck pain, reflex changes |

| III | Paresthesia, weakness, bladder symptoms |

| IV | Paralysis |

DIAGNOSTIC WORKUP

Laboratory Testing

Routine tests should include a white blood cell count (WBC), erythrocyte sedimentation rate (ESR), and C‐reactive protein (CRP). ESR has been shown to be the most sensitive and specific marker of SEA (Table 3). In a study of 63 patients matched with 126 controls, ESR was greater than 20 in 98% of cases and only 21% of controls.[1] Another study of 55 patients found the sensitivity and specificity of ESR to be 100% and 67%, respectively.[25] White count is less specific, with leukocytosis present in approximately two‐thirds of patients.[1, 25] CRP level rises faster than ESR in the setting of inflammation and also returns to baseline faster. As such, CRP is a useful means of monitoring the response to treatment.[1, 19]

|

| 1. Patient assessment |

| Evaluate for symptom triad (back pain, fever, neurologic dysfunction) |

| Assess for common comorbidities (diabetes, IV drug abuse, spinal trauma, ESRD, immunosuppressant therapy, recent spine procedure, systemic or local infection) |

| 2. Laboratory evaluation |

| Essential: ESR (most sensitive and specific), CRP, WBCs, blood cultures, UA |

| : echocardiogram, TB evaluation |

| No role: lumbar puncture |

| 3. Imaging |

| Gold standard: MRI w/gadolinium (90% sensitive) |

| If MRI contraindicated: CT myelogram |

| 4. Obtain tissue sample |

| Gold standard: open surgical biopsy, debridement fixation. |

| If patient unstable, diagnosis indeterminate, or no neuro symptoms: IR‐guided biopsy before surgery |

| 5. Antibiotics (once tissue sample obtained) |

| Empiric vancomycin plus third‐generation cephalosporin or aminoglycoside |

| Vancomycin only acceptable as monotherapy in patient with early diagnosis and no neurologic symptoms |

| 6. Surgical management (only if not done as step 4) |

| Early surgical consult recommended. Pursue surgical intervention if patient is stable for OR and neuro symptoms progressing or abscess refractory to antibiotics. Best outcomes occur with combined antibiotics and surgical debridement. |

Blood cultures are important to obtain at initial evaluation. Bacteremia from the offending organism may be present in up to 60% of cases; 60% to 90% of cases of bacteremia are caused by MRSA.[26] Urine culture should also be obtained in all patients. Sputum cultures should not be routinely obtained, but it may be beneficial in select patients with history of chronic obstructive pulmonary disease, new cough, or abnormality on chest radiographs. Transthoracic or transesophageal echocardiogram may be recommended for bacteremic patients, those with new heart murmur, or those with a history of IV drug abuse.

In patients in whom tuberculosis may be a potential risk, a tuberculin test with purified protein derivate can help rule out this organism. However, false‐negative testing may occur in up to 15% of patients, particularly the immunocompromised.[27] Patients with false positive results or prior vaccination with bacille Calmette‐Gurin (BCG) can be tested with QuantiFERON gold testing. These time‐consuming and expensive tests should not delay treatment with an empiric agent if tuberculosis is suspected due to patient risk factors or exposure, though an infectious disease specialist should be consulted before initiating treatment for tuberculosis.

Lumbar puncture should not be performed routinely in cases of suspected SEA, primarily due to the risk of bacterial contamination of the cerebrospinal fluid (CSF). Additionally, the increased protein and inflammatory cells seen in the CSF are nonspecific markers of parameningeal inflammation.[15]

Imaging

When the diagnosis of SEA is suspected based on clinical findings, MRI with gadolinium should be obtained first, as it is 90% sensitive for diagnosing SEA (Figure 1).[3] In patients unable to undergo MRI (eg, pacemaker), a computed tomography (CT) myelogram should be obtained instead. The study should be performed on an emergent basis, and the entire spine should be imaged due to the risk of noncontiguous lesions. Patients with plain radiograph and/or CT scan findings of bone lysis suggestive of vertebral osteomyelitis should also be evaluated with MRI if able.

MRI with contrast can often differentiate SEA from malignancy and other space‐occupying lesions.[28] Plain radiography can rule out other causes of back pain, such as trauma and degenerative disc disease, but it cannot demonstrate SEA. One study found that x‐rays showed pathology in only 16.6% of patients with SEA.[29]

Patients with exam findings and laboratory studies concerning for SEA who present to a community hospital without MRI capability should be transferred to a tertiary care center for advanced imaging and potential emergent treatment.

Tissue Culture

Though MRI is essential to the workup of SEA, biopsy with cultures allows for a definitive diagnosis.[30] Cultures may be obtained in the operating room or interventional radiology (IR) suite via fine‐needle aspiration or core‐needle biopsy under CT guidance, if available.[30, 31] CT‐guided bone biopsy, which has a sensitivity of 81% and specificity of 100%, may also be performed when vertebral osteomyelitis is present.[32] Biopsy by IR should be considered before surgical intervention, when the patient has no evidence of progressive neurologic deficit, the diagnosis is unclear, or the patient is too high risk for surgical intervention. In very high‐risk surgical patients, IR aspiration may be curative. Lyu et al. describe a case of refractory SEA treated with percutaneous CT‐guided needle aspiration alone, though they note that surgical debridement is preferred when possible.[31]

NONSURGICAL VERSUS SURGICAL MANAGEMENT

SEA may be treated without surgery in carefully selected patients. Savage et al. studied 52 patients, and found that nonsurgical management was often effective in patients who were completely neurologically intact at initial presentation. In their study, only 3 patients needed to undergo surgery due to development of new neurologic symptoms.[33] However, other studies report neurologic symptoms in 71% of patients at initial diagnosis.[3]

Adogwa et al. reviewed surgical versus nonsurgical management in elderly patients (>50 years old) over 15 years.[10] Their study included 30 patients treated operatively and 52 who received antibiotics alone. The decision for surgical management was at the surgeons' discretion; however, most patients with grade 2 or 3 symptoms underwent surgery, and those with paraplegia or quadriplegia for >48 hours did not. The authors found no clinically significant difference in outcome between these 2 groups and cautioned against surgical intervention for elderly patients with multiple comorbidities. It is worth noting, however, that 7/30 (23%) patients treated with surgery versus 5/52 (10%) treated conservatively had improved neurological outcomes (P = 0.03).[10] Numerous other studies have also found that neurologic status at presentation is the most important predictor of surgical outcomes.[7, 15, 34]

Patel et al. performed a study analyzing risk factors for failure of medical management, and found that diabetes mellitus, CRP >115, WBC >12.5, and positive blood cultures were predictors of failure.[9] Patients with >3 of these risk factors required surgery 76.9% of the time compared to 40.2% with 2, 35.4% with 1, and 8.3% with none.[9] The authors also found that surgical patients experienced better mean improvement than patients who failed nonsurgical treatment and subsequently underwent surgical decompression.[9]

In a follow‐up study by Alton et al., 62 patients were treated with either nonsurgical or surgical management. Indications for surgery in their study included a neurologic deficit at initial presentation or the development of a new neurological deficit while undergoing treatment. Twenty‐four patients presented without deficits and underwent nonsurgical management, but only 6 (25%) were treated successfully, as defined by stable or improved neurological status following therapy. In contrast, none of the 38 patients who underwent therapy with both IV antibiotics and emergent surgical management within 24 hours experienced deterioration in the neurologic status. For the 18 patients who failed nonsurgical management, surgery was performed within an average of 7 days. This group experienced improvement following surgery, but their neurological status improved less than those who underwent early surgery.[35]

These recent studies indicate that medical management may be an option for patients who are diagnosed early and present without neurologic deficits, but surgery remains the mainstay of treatment for most patients. Additionally, patients whose operative management is delayed often do not recover function as well as those who undergo emergent surgical debridement.[35] A delay in diagnosis has also been shown to lead to an increase in lawsuits against providers: French et al. found more verdicts against the provider when diagnosis was delayed by >48 hours, irrespective of the degree of permanent neurologic dysfunction.[36]

TREATMENT AND FOLLOW‐UP

Unless the patient is septic and hemodynamically unstable, antibiotics should be withheld until a tissue sample is obtained for culture and speciation, either in the IR suite or in the operating room. Once cultures have been obtained, broad‐spectrum IV antibiotics should be started, usually vancomycin for gram‐positive coverage combined with a third‐generation cephalosporin or an aminoglycoside for gram‐negative coverage, and continued until organism sensitivities are determined and targeted antibiotics can be administered.[3] Antibiotic therapy should continue for 4 to 6 weeks, although 8 weeks or longer may be needed for patients with vertebral osteomyelitis or immunocompromise.[19] An infectious disease specialist should be involved to determine the duration of therapy and the timing of transition to oral antibiotics.

As noted earlier, biopsy by IR should be considered when a trial of nonsurgical management is planned for a patient without neurologic involvement, when the diagnosis remains unclear after imaging, or when the patient is too high risk for surgery. Otherwise, surgical management is standard and should involve extensive debridement, with fusion if the spinal column is substantially structurally compromised. Routine repeat MRI is not recommended after definitive treatment; however, it is useful when recurrence is suspected, as suggested by new fevers or a rising leukocyte count or inflammatory markers, especially CRP. Inflammatory markers should be obtained every 1 to 2 weeks to monitor resolution of the infection.

CONCLUSION

Spinal epidural abscess is a potentially devastating condition that can be difficult to diagnose. The triad of axial spine pain, fever, and new‐onset neurologic dysfunction is uncommon but highly concerning when present. Other factors that increase the likelihood of SEA include a history of diabetes, IV drug abuse, spinal trauma, end‐stage renal disease, immunosuppressant therapy, recent invasive spine procedures, and concurrent infection of the skin or urinary tract. In patients with suspected SEA, inflammatory laboratory studies should be obtained along with gadolinium‐enhanced MRI of the entire spinal axis. Once the diagnosis is established, spine surgery and infectious disease consultations are mandatory, and IR biopsy may be appropriate in selected cases. Rapid diagnosis and prompt initiation of treatment are critical to optimizing patient outcome.

Disclosures

Mark A. Palumbo, MD, receives research funding from Globus Medical and is a paid consultant for Stryker. Alan H. Daniels, MD, is a paid consultant for Stryker and Osseous. No funding was obtained in support of this work.

1. The clinical presentation and impact of diagnostic delays on emergency department patients with spinal epidural abscess. J Emerg Med. 2004;26:285–291.

2. Spinal epidural abscess. N Engl J Med. 1975;293:463–468.

3. Spinal epidural abscess: contemporary trends in etiology, evaluation, and management. Surg Neurol. 1999;52:189–196; discussion 197.

4. Spinal epidural abscess. N Engl J Med. 2006;355:2012–2020.

5. Mortality, complication risk, and total charges after the treatment of epidural abscess. Spine J. 2015;15:249–255.

6. Spinal epidural abscess. Curr Infect Dis Rep. 2014;16:436.

7. Spinal epidural abscess: a ten‐year perspective. Neurosurgery. 1990;27:177–184.

8. Spinal epidural abscess: a meta‐analysis of 915 patients. Neurosurg Rev. 2000;23:175–204; discussion 205.

9. Spinal epidural abscesses: risk factors, medical versus surgical management, a retrospective review of 128 cases. Spine J. 2014;14:326–330.

10. Spontaneous spinal epidural abscess in patients 50 years of age and older: a 15‐year institutional perspective and review of the literature: clinical article. J Neurosurg Spine. 2014;20:344–349.

11. Spinal epidural abscesses: clinical manifestations, prognostic factors, and outcomes. Neurosurgery. 2002;51:79–85; discussion 86–87.

12. Infectious agents in spinal epidural abscesses. Neurology. 1980;30:844–850.

13. Cervical epidural abscess after epidural steroid injection. Spine (Phila Pa 1976). 2004;29:E7–E9.

14. Spinal epidural abscesses in adults: review and report of iatrogenic cases. Scand J Infect Dis. 1990;22:249–257.

15. Bacterial spinal epidural abscess. Review of 43 cases and literature survey. Medicine (Baltimore). 1992;71:369–385.

16. Spinal epidural abscess: the importance of early diagnosis and treatment. J Neurol Neurosurg Psychiatry. 1998;65:209–212.

17. Epidural abscesses. Br J Anaesth. 2006;96:292–302.

18. Spinal epidural abscess. Med Clin North Am. 1985;69:375–384.

19. Spinal epidural abscess. J Emerg Med. 2010;39:384–390.

20. Spinal epidural abscess: clinical presentation, management and outcome [comment on Curry WT, Hoh BL, Hanjani SA, et al. Surg Neurol. 2005;63:364–371]. Surg Neurol. 2005;64:279.

21. Routine replacement of tunneled, cuffed, hemodialysis catheters eliminates paraspinal/vertebral infections in patients with catheter‐associated bacteremia. Am J Nephrol. 2003;23:202–207.

22. Paraplegia caused by spinal infection after acupuncture. Spinal Cord. 2006;44:258–259.

23. Spinal epidural abscess after tattooing. Clin Infect Dis. 1999;29:225–226.

24. Nontuberculous spinal epidural infections. N Engl J Med. 1948;239:845–854.

25. Prospective evaluation of a clinical decision guideline to diagnose spinal epidural abscess in patients who present to the emergency department with spine pain. J Neurosurg Spine. 2011;14:765–770.

26. Spinal epidural abscess: clinical presentation, management, and outcome. Surg Neurol. 2005;63:364–371; discussion 371.

27. Bone and joint tuberculosis. Eur Spine J. 2013;22:556–566.

28. Surgical management of spinal epidural abscess: selection of approach based on MRI appearance. J Clin Neurosci. 2004;11:130–133.

29. Infection as a cause of spinal cord compression: a review of 36 spinal epidural abscess cases. Acta Neurochir (Wien). 2000;142:17–23.

30. Spondyloarthropathy from long‐term hemodialysis. Radiology. 1988;167:761–764.

31. Spinal epidural abscess successfully treated with percutaneous, computed tomography‐guided, needle aspiration and parenteral antibiotic therapy: case report and review of the literature. Neurosurgery. 2002;51:509–512; discussion 512.

32. CT‐guided core biopsy of subchondral bone and intervertebral space in suspected spondylodiskitis. AJR Am J Roentgenol. 2006;186:977–980.

33. Spinal epidural abscess: early clinical outcome in patients treated medically. Clin Orthop. 2005;439:56–60.

34. Update on spinal epidural abscess: 35 cases and review of the literature. Rev Infect Dis. 1987;9:265–274.

35. Is there a difference in neurologic outcome in medical versus early operative management of cervical epidural abscesses? Spine J. 2015;15:10–17.

36. Medicolegal cases for spinal epidural hematoma and spinal epidural abscess. Orthopedics. 2013;36:48–53.

37. Spinal epidural abscess—experience with 46 patients and evaluation of prognostic factors. J Infect. 2002;45:76–81.


ACOG plans consensus conference on uniform guidelines for breast cancer screening

The Susan G. Komen Foundation estimates that 84% of breast cancers are found through mammography.1 Clearly, the value of mammography is proven. But controversy and confusion abound on how much mammography, and beginning at what age, is best for women.

Currently, the United States Preventive Services Task Force (USPSTF), the American Cancer Society (ACS), and the American College of Obstetricians and Gynecologists (ACOG) all have differing recommendations about mammography and about the importance of clinical breast examinations. These inconsistencies largely are due to different interpretations of the same data, not the data itself, and tend to center on how harm is defined and measured. Importantly, these differences can wreak havoc on our patients’ confidence in our counsel and decision making, and can complicate women’s access to screening. Under the Affordable Care Act, women are guaranteed coverage of annual mammograms, but new USPSTF recommendations, due out soon, may undermine that guarantee.