Heart rate variability may be risk factor for depression, not a consequence

While some investigations have suggested that depression may lead to later unfavorable effects on heart rate variability, authors of a newly published study say they have found stronger evidence that the opposite is true.

Lower heart rate variability was independently associated with later increases in depressive symptoms, according to results of the longitudinal, twin difference study.

When researchers looked at the opposite direction, they found earlier depressive symptoms were associated with lower heart rate variability at follow-up in the study; however, investigators said those associations were not as robust and were largely explainable by antidepressant use.

That meant reduced heart rate variability is more likely a risk factor for depression, rather than a consequence, according to Minxuan Huang, ScM, of the department of epidemiology at Emory University Rollins School of Public Health, Atlanta, and his coinvestigators.

“These findings point to a central role of the autonomic nervous system in the regulation of mood and depression vulnerability,” they wrote in a report on the study appearing in JAMA Psychiatry.

The published analysis included 146 male twins, or 73 pairs, who participated in the national Vietnam Era Twin Registry.

Previous studies have linked depression to heart rate variability, a noninvasive index of cardiac autonomic nervous system regulation. However, these studies are not consistent on whether depression affects heart rate variability or vice versa, and the studies have been limited in their ability to assess the relationship between those two variables over time, the investigators said.

By contrast, the current study evaluated depression and heart rate variability at two time points: a baseline assessment conducted during 2002-2006 and a 7-year follow-up visit.

Investigators found consistent associations between heart rate variability on 24-hour electrocardiogram monitoring at baseline and scores on the Beck Depression Inventory-II at the 7-year follow-up, with beta coefficients ranging from –0.14 to –0.29, the report showed.

By contrast, the associations were less consistent between BDI-II score at the baseline visit and heart rate variability at follow-up, the investigators said. “These associations were largely explained by antidepressant use, which when added to the model, weakened the associations.”

These findings may help guide future research aimed at identifying individuals at a higher risk of later depression, the authors said, noting that treatment studies also are warranted. “Future interventions modulating autonomic nervous system regulation may be useful for the prevention and treatment of depression.” The study was supported by the National Institutes of Health. The Department of Veterans Affairs has supported the Vietnam Era Twin Registry. The researchers had no conflicts of interest.

SOURCE: Huang M et al. JAMA Psychiatry. 2018 May 16. doi: 10.1001/jamapsychiatry.2018.0747.

FROM JAMA PSYCHIATRY

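Key clinical point: Reduced heart rate variability is more likely a risk factor for depression than a consequence of it.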

Major finding: There were consistent associations between baseline heart rate variability and later depression scores (beta coefficients ranged from –0.14 to –0.29).

Study details: A longitudinal twin difference study including 166 individuals in the Vietnam Era Twin Registry, including baseline assessments conducted during 2002-2006 plus a 7-year follow-up visit.

Disclosures: The study was supported by the National Institutes of Health. The Department of Veterans Affairs has supported the Vietnam Era Twin Registry. Study authors had no conflicts of interest.

Source: Huang M et al. JAMA Psychiatry. 2018 May 16. doi: 10.1001/jamapsychiatry.2018.0747.

ECT cost effective in treatment-resistant depression

Electroconvulsive therapy might be a cost-effective treatment option for patients with treatment-resistant depression, results of a mathematical modeling analysis suggest.

The health-economic value of electroconvulsive therapy is most likely maximized when the intervention is tried after two failed lines of pharmacotherapy/psychotherapy, authors of the analysis said in JAMA Psychiatry.

“Increasing use of ECT by offering it earlier in the course of treatment-resistant depression could greatly improve outcomes for this difficult-to-treat patient population,” wrote Eric L. Ross, a medical student at the University of Michigan, Ann Arbor.

In clinical practice, patients with uncontrolled depression might not be offered electroconvulsive therapy for months or years, despite evidence from multiple studies that it is significantly more effective than pharmacotherapy in that setting, Mr. Ross and his coauthors in the university’s department of psychiatry wrote in their report.

One barrier to use of electroconvulsive therapy might be its cost, which they said can run in excess of $10,000 for initial therapy and maintenance treatments, compared with several hundred dollars for an antidepressant prescription.

However, data are limited showing the efficacy of electroconvulsive therapy in relation to that higher price tag. Accordingly, the investigators used a decision analytic model to simulate the clinical and economic effects of seven different electroconvulsive therapy treatment strategies, and calculated the incremental cost-effectiveness ratio of each.

, depending on the treatment strategy, the investigators found.

It was 74%-78% likely that electroconvulsive therapy would be cost effective, based on commonly accepted cost-effectiveness thresholds, they added.

They found that third-line electroconvulsive therapy had an incremental cost-effectiveness ratio of $54,000 per quality-adjusted life-year. Based on that, investigators projected that the third-line strategy would be cost effective, considering a willingness-to-pay threshold of $100,000 per quality-adjusted life-year.
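For readers unfamiliar with the metric, an incremental cost-effectiveness ratio is the extra cost of a strategy divided by the extra quality-adjusted life-years it yields relative to a comparator. The following is a minimal sketch of that arithmetic, not the investigators' decision analytic model. The function names and all cost and QALY inputs are hypothetical, chosen only so the result matches the reported $54,000 figure.

```python
def icer(cost_new, cost_old, qaly_new, qaly_old):
    """Incremental cost-effectiveness ratio: extra dollars per extra QALY."""
    delta_qaly = qaly_new - qaly_old
    if delta_qaly <= 0:
        # The ratio is only interpretable when the new strategy adds QALYs.
        raise ValueError("new strategy must yield more QALYs than the comparator")
    return (cost_new - cost_old) / delta_qaly


def is_cost_effective(ratio, threshold=100_000):
    """Compare an ICER with a willingness-to-pay threshold in dollars per QALY."""
    return ratio <= threshold


# Hypothetical inputs (not from the study): $13,500 more cost for 0.25 more QALYs.
ratio = icer(cost_new=73_500, cost_old=60_000, qaly_new=3.25, qaly_old=3.0)
print(ratio)                     # 54000.0
print(is_cost_effective(ratio))  # True at the $100,000/QALY threshold
```

A strategy whose ratio falls below the willingness-to-pay threshold is conventionally described as cost effective, which is the comparison the investigators report for third-line treatment.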

Although offering electroconvulsive therapy after two lines of pharmacotherapy/psychotherapy maximized its health-economic value in this analysis, the treatment still would be cost effective in patients with three or more previous treatments, authors said.

Based on those findings, Mr. Ross and his coauthors said they would recommend that patients with major depressive disorder be offered ECT when two or more trials of pharmacotherapy or psychotherapy have failed. That aligns with other recent recommendations, including 2017 Florida best practice guidelines that classify ECT as a level 3 treatment option, they said.

Mr. Ross and his coauthors cited several limitations. One is that much of the input data used in the mathematical model is more than 10 years old. In addition, other novel interventions for treatment-resistant depression – such as repetitive transcranial magnetic stimulation – were not evaluated.

The study was supported by the Department of Veterans Affairs Health Services Research & Development Services. The study authors had no conflicts of interest to report.

SOURCE: Ross EL et al. JAMA Psychiatry. 2018 May 9. doi: 10.1001/jamapsychiatry.2018.0768.

FROM JAMA Psychiatry

Key clinical point: Electroconvulsive therapy (ECT) should be considered as a third-line treatment after two or more lines of pharmacotherapy and/or psychotherapy have failed.

Major finding: Third-line ECT had an incremental cost-effectiveness ratio of $54,000/quality-adjusted life-year.

Study details: A simulation of depression treatment using a decision analytic model taking into account the efficacy, cost, and quality of life impact of ECT based on meta-analyses, randomized trials, and observational studies.

Disclosures: The study was supported by the Department of Veterans Affairs Health Services Research & Development Services. Authors had no conflicts of interest to report.

Source: Ross EL et al. JAMA Psychiatry. 2018 May 9. doi: 10.1001/jamapsychiatry.2018.0768.

ADHD, asthma Rxs up

Use of prescription medication overall decreased in children and adolescents over the past 15 years, but certain medication classes saw increases over that time period, according to a comprehensive analysis of cross-sectional, nationally representative survey data.

Reported use of any prescription medication in the past 30 days decreased from 25% during 1999-2002 to 22% during 2011-2014 (P = .04), according to the analysis based on data from 38,277 children and adolescents aged 0-19 years in the National Health and Nutrition Examination Survey (NHANES).

However, the study showed increases over time in prescribing of medications for asthma, ADHD, and contraception, according to Craig M. Hales, MD, of the National Center for Health Statistics, Centers for Disease Control and Prevention, Hyattsville, Md., and his coinvestigators.

“Monitoring trends in use of prescription medications among children and adolescents provides insights on several important public health concerns, such as shifting disease burden, changes in access to health care and medicines, increases in the adoption of appropriate therapies, and decreases in use of inappropriate or ineffective treatments,” Dr. Hales and his coauthors said.

They found significant linear trends in 14 therapeutic classes or subclasses, including six decreases and eight increases, when looking at combined survey data for reported use of any prescription medication and reported use of two or more prescription medications in the prior 30 days.

Of note, antibiotic usage decreased significantly from 8% during 1999-2002 to 5% during 2011-2014, including decreases in amoxicillin, amoxicillin/clavulanate, and cephalosporins. Likewise, antihistamine use was down over time, from 4% to 2%, as was use of upper respiratory combination medications, which decreased from 2% to 0.5%.

Conversely, they found prevalence of ADHD medication usage increased significantly from 3% during 1999-2002 to 4% during 2011-2014, including significant increases for both amphetamines and centrally acting adrenergic agents.

Asthma medication also increased, from 4% to 6%, including significant increases in inhaled corticosteroids and montelukast. Likewise, a significant increase in proton pump inhibitors was reported from 0.2% to 0.7%, while contraceptive use in girls increased significantly in prevalence, from 1% to 2%.

Taken together, these findings suggest an overall decrease in medication prescribing among children and adolescents, despite significantly increased prevalence of prescribing for certain drug classes, the investigators said.

They noted that the study had limitations. For example, NHANES does not include data on most over-the-counter medications, and for the drugs it does include, there are no data on dosages, frequency of use, or specific formulations, they said.

Dr. Hales and his coauthors had no conflicts of interest.

SOURCE: Hales CM et al. JAMA. 2018;319(19):2009-20.

These thorough analyses of medication prescribing for children and adolescents are much needed, but are “frustrating” because the inherent limitations of the serial, cross-sectional study design preclude definitive conclusions, Gary L. Freed, MD, MPH, said in an editorial.

The study by Hales et al. shows an overall decrease in prescription medication use in children and adolescents based on data from the 1999-2014 National Health and Nutrition Examination Survey (NHANES). The study shows increased use of medications for asthma, ADHD, and contraception, and decreased use of antibiotics, antihistamines, and upper respiratory combination medications.

“Some of these trends likely signal potential improvements in the care of children, others may suggest little progress has been made, and yet others are difficult to interpret with certainty,” Dr. Freed wrote.

One finding that seems clear in the data, according to Dr. Freed, is a decrease in antibiotic use among children and adolescents, from 8% during 1999-2002 to 5% during 2011-2014. That likely reflects the success of efforts to decrease overuse of these agents in community settings.

On the other hand, the decreased use of antihistamines documented in this study may reflect the success of efforts to reduce overuse, or the fact that several prescription medications became approved for OTC use over the course of the study. NHANES does not include OTC drug data in its survey.

“It is unclear whether there was simply a substitution effect and the actual overall rate of utilization of these medications was unchanged,” Dr. Freed wrote.

Increased amphetamine use for the treatment of children aged 6-11 years with ADHD is “vexing” to see, but again, caution must be exercised in interpreting the results, he said, because they do not clearly demonstrate whether these agents are being overused or underused.

“The findings reported by Hales et al. will require additional studies, using different data sources, to provide clarity in the clinical and policy implications of recent trends in medication use among children,” Dr. Freed wrote.

Dr. Freed is a pediatrician with the Child Health Evaluation and Research Center, University of Michigan, Ann Arbor. These comments are derived from his editorial accompanying the study by Hales et al. (JAMA. 2018;319[19]:1988-9). Dr. Freed had no conflicts of interest.

These thorough analyses of medication prescribing for children and adolescents are much needed, but are “frustrating” because definitive conclusions cannot be drawn because of inherent limitations of the serial, cross-sectional study design, Gary L. Freed, MD, MPH, said in an editorial.

The study by Hales et al. shows an overall decrease in prescription medication use in children and adolescents based on data from the 1999-2014 National Health and Examination Survey (NHANES). The study shows increased use of medications for asthma, ADHD, and contraception, and decreased use of antibiotics, antihistamines, and upper respiratory combination medications.

“Some of these trends likely signal potential improvements in the care of children, others may suggest little progress has been made, and yet others are difficult to interpret with certainty,” Dr. Freed wrote.

One finding that seems clear in the data, according to Dr. Freed, is a decrease in antibiotic use among children and adolescents, from 8% to 5% from the 1999-2002 to 2011-2014 time period. That likely reflects the success of efforts to decrease overuse of these agents in community settings.

On the other hand, the decreased use of antihistamines documented in this study may reflect the success of efforts to reduce overuse, or the fact that several prescription medications became approved for OTC use over the course of the study. NHANES does not include OTC drug data in its survey.

“It is unclear whether there was simply a substitution effect and the actual overall rate of utilization of these medications was unchanged,” Dr. Freed wrote.

Increased amphetamine use for the treatment of children aged 6-11 years with ADHD is “vexing” to see, but again, caution must be exercised in interpreting the results, he said, because they do not clearly demonstrate whether these agents are being overused or underused.

“The findings reported by Hales et al. will require additional studies, using different data sources, to provide clarity in the clinical and policy implications of recent trends in medication use among children,” Dr. Freed wrote.

Dr. Freed is a pediatrician with the Child Health Evaluation and Research Center, University of Michigan, Ann Arbor. These comments are derived from his editorial accompanying the study by Hales et al. (JAMA. 2018;319[19]:1988-9). Dr. Freed had no conflicts of interest.

These thorough analyses of medication prescribing for children and adolescents are much needed, but are “frustrating” because definitive conclusions cannot be drawn because of inherent limitations of the serial, cross-sectional study design, Gary L. Freed, MD, MPH, said in an editorial.

The study by Hales et al. shows an overall decrease in prescription medication use in children and adolescents based on data from the 1999-2014 National Health and Examination Survey (NHANES). The study shows increased use of medications for asthma, ADHD, and contraception, and decreased use of antibiotics, antihistamines, and upper respiratory combination medications.

“Some of these trends likely signal potential improvements in the care of children, others may suggest little progress has been made, and yet others are difficult to interpret with certainty,” Dr. Freed wrote.

One finding that seems clear in the data, according to Dr. Freed, is a decrease in antibiotic use among children and adolescents, from 8% to 5% from the 1999-2002 to 2011-2014 time period. That likely reflects the success of efforts to decrease overuse of these agents in community settings.

On the other hand, the decreased use of antihistamines documented in this study may reflect the success of efforts to reduce overuse, or the fact that several prescription medications became approved for OTC use over the course of the study. NHANES does not include OTC drug data in its survey.

“It is unclear whether there was simply a substitution effect and the actual overall rate of utilization of these medications was unchanged,” Dr. Freed wrote.

Increased amphetamine use for the treatment of children aged 6-11 years with ADHD is “vexing” to see, but again, caution must be exercised in interpreting the results, he said, because they do not clearly demonstrate whether these agents are being overused or underused.

“The findings reported by Hales et al. will require additional studies, using different data sources, to provide clarity in the clinical and policy implications of recent trends in medication use among children,” Dr. Freed wrote.

Dr. Freed is a pediatrician with the Child Health Evaluation and Research Center, University of Michigan, Ann Arbor. These comments are derived from his editorial accompanying the study by Hales et al. (JAMA. 2018;319[19]:1988-9). Dr. Freed had no conflicts of interest.

Use of prescription medication overall decreased in children and adolescents over the past 15 years, but certain medication classes saw increases over that time period, according to a comprehensive analysis of cross-sectional, nationally representative survey data.

Reported use of any prescription medication in the past 30 days decreased from 25% during 1999-2002 to 22% during 2011-2014 (P = .04), according to the analysis based on data from 38,277 children and adolescents aged 0-19 years in the National Health and Nutrition Examination Survey (NHANES).

However, the study showed increases over time in prescribing of medications for asthma, ADHD, and contraception, according to Craig M. Hales, MD, of the National Center for Health Statistics, Centers for Disease Control and Prevention, Hyattsville, Md., and his coinvestigators.

“Monitoring trends in use of prescription medications among children and adolescents provides insights on several important public health concerns, such as shifting disease burden, changes in access to health care and medicines, increases in the adoption of appropriate therapies, and decreases in use of inappropriate or ineffective treatments,” Dr. Hales and his coauthors said.

They found significant linear trends in 14 therapeutic classes or subclasses, including six decreases and eight increases, when looking at combined survey data for reported use of any prescription medication and reported use of two or more prescription medications in the prior 30 days.

Of note, antibiotic usage decreased significantly from 8% during 1999-2002 to 5% during 2011-2014, including decreases in amoxicillin, amoxicillin/clavulanate, and cephalosporins. Likewise, antihistamine use was down over time, from 4% to 2%, as was use of upper respiratory combination medications, which decreased from 2% to 0.5%.

Conversely, they found prevalence of ADHD medication usage increased significantly from 3% during 1999-2002 to 4% during 2011-2014, including significant increases for both amphetamines and centrally acting adrenergic agents.

Asthma medication also increased, from 4% to 6%, including significant increases in inhaled corticosteroids and montelukast. Likewise, a significant increase in proton pump inhibitors was reported from 0.2% to 0.7%, while contraceptive use in girls increased significantly in prevalence, from 1% to 2%.

Taken together, these findings suggest an overall decrease in medication prescribing among children and adolescents, despite significantly increased prevalence of prescribing for certain drug classes, the investigators said.

They noted that the study had limitations. For example, NHANES does not include data on most over-the-counter medications, and for the drugs it does include, there are no data on dosages, frequency of use, or specific formulations, they said.

Dr. Hales and his coauthors had no conflicts of interest.

SOURCE: Hales CM et al. JAMA. 2018;319(19):2009-20.

FROM JAMA

Key clinical point: Nationally representative survey data demonstrate an overall decrease in use of any medication among children and adolescents, although use of certain medications increased.

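Major finding: Reported use of any prescription medication in the past 30 days decreased from 25% during 1999-2002 to 22% during 2011-2014 (P = .04).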

Study details: Analysis of survey data for U.S. children and adolescents aged 0-19 years in the 1999-2014 NHANES.

Disclosures: Dr. Hales and his coauthors had no conflicts of interest.

Source: Hales CM et al. JAMA. 2018;319(19):2009-20.

Fremanezumab may be an effective episodic migraine treatment

Patients with episodic migraines had significantly fewer headache days when treated with a monoclonal antibody targeting calcitonin gene–related peptide (CGRP), results of a recent randomized clinical trial show.

The treatment was generally well tolerated and also improved secondary efficacy outcomes; however, the investigators qualified the results, noting that the trial included only patients who had failed fewer than two previous classes of migraine preventive medication.

“Further research is needed to assess effectiveness against other preventive medications, and in patients in whom multiple preventive drug classes have failed,” wrote David W. Dodick, MD, of Mayo Clinic, Phoenix, Ariz., and his coauthors. Long-term safety and efficacy data also are needed, they added. Their report was published in JAMA.

Fremanezumab is a subcutaneously administered, fully humanized monoclonal antibody that binds to the CGRP ligand. A previous randomized phase 2b study showed that the treatment was effective in preventing migraine, with no serious treatment-related adverse events.

The current 12-week phase 3 trial (clinicaltrials.gov Identifier: NCT02629861) was designed to evaluate the efficacy and safety of fremanezumab in two different dosing regimens. A total of 875 patients (742 women; 85%) with episodic migraine were randomly assigned to fremanezumab monthly dosing (225 mg at baseline, 4 weeks, and 8 weeks), a single higher dose of fremanezumab (675 mg at baseline) intended to support a quarterly dosing regimen, or placebo.

Both dosing approaches significantly reduced the mean number of migraine days per month vs. placebo, Dr. Dodick and his coinvestigators reported.

In the monthly dosing group, the mean number of migraine days per month decreased from 8.9 to 4.9 days, compared with a decrease from 9.1 to 6.5 days with placebo, for a difference of 1.5 days vs. placebo at 12 weeks (P < .001). Similarly, the mean number of migraine days decreased from 9.2 to 5.3 days in the single higher dose group, with a difference of 1.3 days vs. placebo (P < .001).

Significantly more patients receiving fremanezumab had a 50% or greater reduction in mean number of migraine days per month, suggesting a clinical response to the CGRP monoclonal antibody, the investigators said.

The most common adverse events leading to discontinuation included erythema at the injection site in three patients, along with induration, diarrhea, anxiety, and depression occurring in two patients each, according to the report. There was one death in the study due to suicide 109 days after the patient received a single higher dose of the study drug. However, the investigators determined that the death was unrelated to treatment.

One limitation of the study, investigators said, is that it excluded patients with treatment-refractory migraine who had failed at least two previous preventive drug classes, and those who had continuous headache.

“Further studies are needed to define the full spectrum of efficacy and tolerability of fremanezumab, including in patients who are treatment refractory and who have a range of coexistent diseases,” they wrote.

Teva Pharmaceuticals supported the study. Dr. Dodick and his coauthors reported disclosures related to Teva, Amgen, Novartis, Pfizer, and Merck, among other entities.

SOURCE: Dodick DW et al. JAMA. 2018;319(19):1999-2008.

While this report on fremanezumab by Dr. Dodick and colleagues adds important evidence on the efficacy and safety of CGRP monoclonal antibodies, authors of a related editorial said several questions remain regarding this particular clinical trial and the drug class in general.

Of note, the study of fremanezumab excluded patients who failed two or more previous classes of medications. “This means the results of this trial may not necessarily apply to patients with severe, treatment-resistant migraine, who are the patients most likely to be prescribed and have access to these treatments in clinical practice,” Elizabeth W. Loder, MD, MPH, and Matthew S. Robbins, MD, wrote in their editorial (JAMA. 2018;319[19]:1985-7).

Patients who received monthly fremanezumab had fewer migraine days per month versus placebo, as did patients who received a single higher dose of the medication. However, in both instances, the differences were smaller than the 1.6-day difference that was specified in the sample size calculation, added Dr. Loder and Dr. Robbins.

Though long-term safety data are needed, fremanezumab seemed generally well tolerated over 12 weeks in this study. “An important apparent benefit of fremanezumab and the other 3 CGRP monoclonal antibodies in development is their low burden of common nuisance adverse events,” they wrote.

However, it is “sobering to consider” the three deaths documented in clinical trials of CGRP monoclonal antibodies, they said. That total includes one patient in this study who committed suicide 109 days after receiving a 675-mg dose of fremanezumab.

The death may not have been related to treatment, the editorial authors said, noting that depression and other affective disorders are often comorbid with migraine.

“The [Food and Drug Administration] undoubtedly will scrutinize the deaths and adverse events reported in the trials of fremanezumab and other CGRP monoclonal antibodies,” they wrote. “If the result is restrictive labeling, it could greatly limit the patient population for these drugs, which are in any case likely to prove costly and challenging for patients to access.”

Dr. Loder is in the division of headache, department of neurology, Brigham and Women’s Hospital, Harvard Medical School, Boston. Dr. Robbins is with Montefiore Headache Center, Albert Einstein College of Medicine, New York. These comments are derived from their editorial in JAMA. Dr. Loder reported receiving grants and other funds from companies developing CGRP antibodies. Dr. Robbins reported that he is principal investigator for a clinical trial sponsored by eNeura.

FROM JAMA

Key clinical point: The CGRP monoclonal antibody fremanezumab significantly reduced the mean number of migraine days in patients with episodic migraine who had not previously failed multiple medication classes.

Major finding: The mean number of migraine days at 12 weeks was 1.5 days lower with monthly dosing and 1.3 days lower with a single higher dose.

Study details: A randomized clinical trial of 875 patients with episodic migraine who received fremanezumab monthly dosing, a single higher dose of fremanezumab, or placebo.

Disclosures: Teva Pharmaceuticals supported the study. The study authors reported disclosures related to Teva, Amgen, Novartis, Pfizer, and Merck, among other entities.

Source: Dodick DW et al. JAMA. 2018;319(19):1999-2008.

Advanced adenoma on colonoscopy linked to increased colorectal cancer incidence

Advanced adenomas found on diagnostic colonoscopy were associated with increased risk of developing colorectal cancer, while nonadvanced adenomas were not, according to long-term follow-up results from a large screening study.

The findings come from a post hoc analysis of the Prostate, Lung, Colorectal, and Ovarian (PLCO) cancer screening trial that enrolled 154,900 individuals, of whom 15,935 underwent colonoscopy following an abnormal flexible sigmoidoscopy screening result.

With a median of 13 years of follow-up, the incidence of colorectal cancer was 20.0 per 10,000 person-years for patients who had advanced adenoma found on colonoscopy, according to a report on the study published in JAMA. By comparison, colorectal cancer incidence was 9.1 and 7.5 per 10,000 person-years for nonadvanced adenoma and no adenoma, respectively.

“By demonstrating that individuals diagnosed with an advanced adenoma are at increased long-term risk for subsequent incident CRC, these findings support periodic, ongoing surveillance colonoscopy in these patients,” wrote Benjamin Click, MD, of the division of gastroenterology, hepatology, and nutrition, University of Pittsburgh, and his coauthors.

Compared with patients who had no adenoma, those with advanced adenoma were significantly more likely to develop colorectal cancer (rate ratio, 2.7; 95% confidence interval, 1.9-3.7; P < .001). By contrast, there was no significant difference in risk of colorectal cancer for patients with nonadvanced adenoma and no adenoma (RR, 1.2; 95% CI, 0.8-1.7; P = .30).
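
As a rough check, the reported rate ratios can be approximated from the incidence rates quoted earlier in this article. The short Python sketch below is illustrative only: it assumes a crude, unadjusted division of the per-10,000 person-year rates, and the names `RATES_PER_10K_PERSON_YEARS` and `crude_rate_ratio` are hypothetical, not taken from the study. The published ratios and confidence intervals come from the investigators' own analysis.

```python
# Crude incidence rate ratios from the per-10,000 person-year rates quoted above.
# Unadjusted illustration only; names here are hypothetical, not from the study.

RATES_PER_10K_PERSON_YEARS = {
    "advanced adenoma": 20.0,
    "nonadvanced adenoma": 9.1,
    "no adenoma": 7.5,  # reference group
}


def crude_rate_ratio(exposed_rate, reference_rate):
    """Return the ratio of two incidence rates expressed in the same units."""
    if reference_rate <= 0:
        raise ValueError("reference rate must be positive")
    return exposed_rate / reference_rate


reference = RATES_PER_10K_PERSON_YEARS["no adenoma"]

# Compare each adenoma group with the no-adenoma reference, rounded to one decimal.
crude_ratios = {
    group: round(crude_rate_ratio(rate, reference), 1)
    for group, rate in RATES_PER_10K_PERSON_YEARS.items()
    if group != "no adenoma"
}

print(crude_ratios)
```

Under these assumptions, the crude ratios come out to about 2.7 for advanced adenoma and 1.2 for nonadvanced adenoma, in line with the rate ratios the investigators reported.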

Risk of death related to colorectal cancer was also significantly increased for patients with advanced adenoma versus no adenoma, and again, the investigators said, no such difference in mortality was found when nonadvanced adenoma was compared with no adenoma.

The PLCO screening study enrolled men and women aged 55-74 years beginning in 1993, with follow-up continuing until Dec. 31, 2013.

Small, nonadvanced adenomas are commonly found on colonoscopy, occurring in approximately 30% of patients, the investigators said. In the United States, patients with one to two nonadvanced adenomas are typically advised to return in 5-10 years, the researchers noted. However, evidence is lacking on who should return in 5 years, as opposed to 10 years.

“If appropriately powered prospective trials were to replicate these findings, demonstrating no significant difference in cancer incidence between participants with 1 to 2 nonadvanced adenoma(s) and no adenomas, colonoscopy use could be reduced by a large extent, as a surveillance examination at 5 years would not be needed,” the study authors said.

The National Cancer Institute Division of Cancer Prevention supported the study. One author reported receiving grant support from Medtronic.

SOURCE: Click B et al. JAMA. 2018;319(19):2021-31.

FROM JAMA

Key clinical point: The risk of developing colorectal cancer was significantly increased for individuals with an advanced adenoma at diagnostic colonoscopy.

Major finding: With a median follow-up of 12.9 years, the incidence of colorectal cancer was 20.0 per 10,000 person-years for advanced adenoma, compared with 9.1 and 7.5 per 10,000 person-years for nonadvanced adenoma and no adenoma, respectively.

Study details: A multicenter, prospective cohort study of the Prostate, Lung, Colorectal, and Ovarian (PLCO) Cancer randomized clinical trial of flexible sigmoidoscopy that enrolled 154,900 individuals.

Disclosures: The National Cancer Institute Division of Cancer Prevention supported the study. One author reported receiving grant support from Medtronic.

Source: Click B et al. JAMA. 2018;319(19):2021-31.

Is adjuvant chemo warranted in stage I ovarian clear cell carcinoma?

In patients with stage I ovarian clear cell carcinoma, the use of adjuvant chemotherapy was associated with superior overall survival (OS), according to results of a large, retrospective cohort study.

The finding, published in Gynecologic Oncology, provides further evidence that adjuvant chemotherapy may provide a survival advantage for patients with this relatively common epithelial ovarian cancer subtype.

However, not all data to date point toward a benefit of adjuvant chemotherapy. “Its utility has yet to be established, especially for patients with stage IA disease,” wrote Dimitrios Nasioudis, MD, and his coauthors in the department of obstetrics and gynecology, Hospital of the University of Pennsylvania, Philadelphia.

In one recent large population-based study, chemotherapy was not associated with superior OS in patients with stage I disease, whereas two smaller retrospective studies suggested that chemotherapy may improve progression-free survival in that setting, noted Dr. Nasioudis and his colleagues.

Their study included data on 2,325 patients in the National Cancer Data Base diagnosed with stage I ovarian clear cell carcinoma between 2004 and 2014. That is the largest cohort of patients with stage I ovarian clear cell carcinoma with adequate staging reported to date in the medical literature, the investigators noted.

The rate of OS at 5 years was 89.2% for patients receiving adjuvant chemotherapy, versus 82.6% for those who did not (P less than .001). Furthermore, adjuvant chemotherapy was associated with improved OS after the researchers controlled for medical comorbidities, age, race, disease substage, and hospital type (hazard ratio, 0.59; 95% confidence interval, 0.45-0.78).

When the researchers looked at disease substage, women with stage IA or IB disease had superior OS with chemotherapy versus no chemotherapy, while in women with stage IC disease, there was a trend toward better OS with chemotherapy that did not reach statistical significance.

“The administration of adjuvant chemotherapy was associated with a survival benefit, even for those with stage IA disease,” the researchers wrote.

Ovarian clear cell carcinoma is the third most common subtype of epithelial ovarian carcinoma, accounting for up to 25% of new diagnoses, they said. Current U.S. and European clinical practice guidelines recommend adjuvant chemotherapy for all women with stage I disease because of a high risk of relapse associated with this subtype.

Observation could be acceptable for patients with surgical stage IA disease, in light of excellent survival rates, the Gynecologic Cancer Intergroup has suggested.

While the present study suggests a survival benefit associated with chemotherapy in stage I ovarian clear cell carcinoma, the investigators had no information on morbidity, cost, or quality-of-life impacts associated with treatment, which limits the findings.

“International collaboration, such as the creation of ovarian clear cell carcinoma registry, is greatly needed to further elucidate the optimal management of those patients,” they wrote.

Dr. Nasioudis and his coauthors had no conflicts of interest to report.

SOURCE: Nasioudis D et al. Gynecol Oncol. 2018 May 8. doi: 10.1016/j.ygyno.2018.04.567.

FROM GYNECOLOGIC ONCOLOGY

Key clinical point: Adjuvant chemotherapy was associated with improved survival in patients with stage I ovarian clear cell carcinoma.

Major finding: Five-year overall survival was 89.2% for patients receiving adjuvant chemotherapy versus 82.6% for those who did not (P less than .001).

Study details: A retrospective cohort study of 2,325 patients with stage I ovarian clear cell carcinoma diagnosed between 2004 and 2014.

Disclosures: The authors had no conflicts of interest to report.

Source: Nasioudis D et al. Gynecol Oncol. 2018 May 8. doi: 10.1016/j.ygyno.2018.04.567.

Device-related thrombus associated with ischemic events

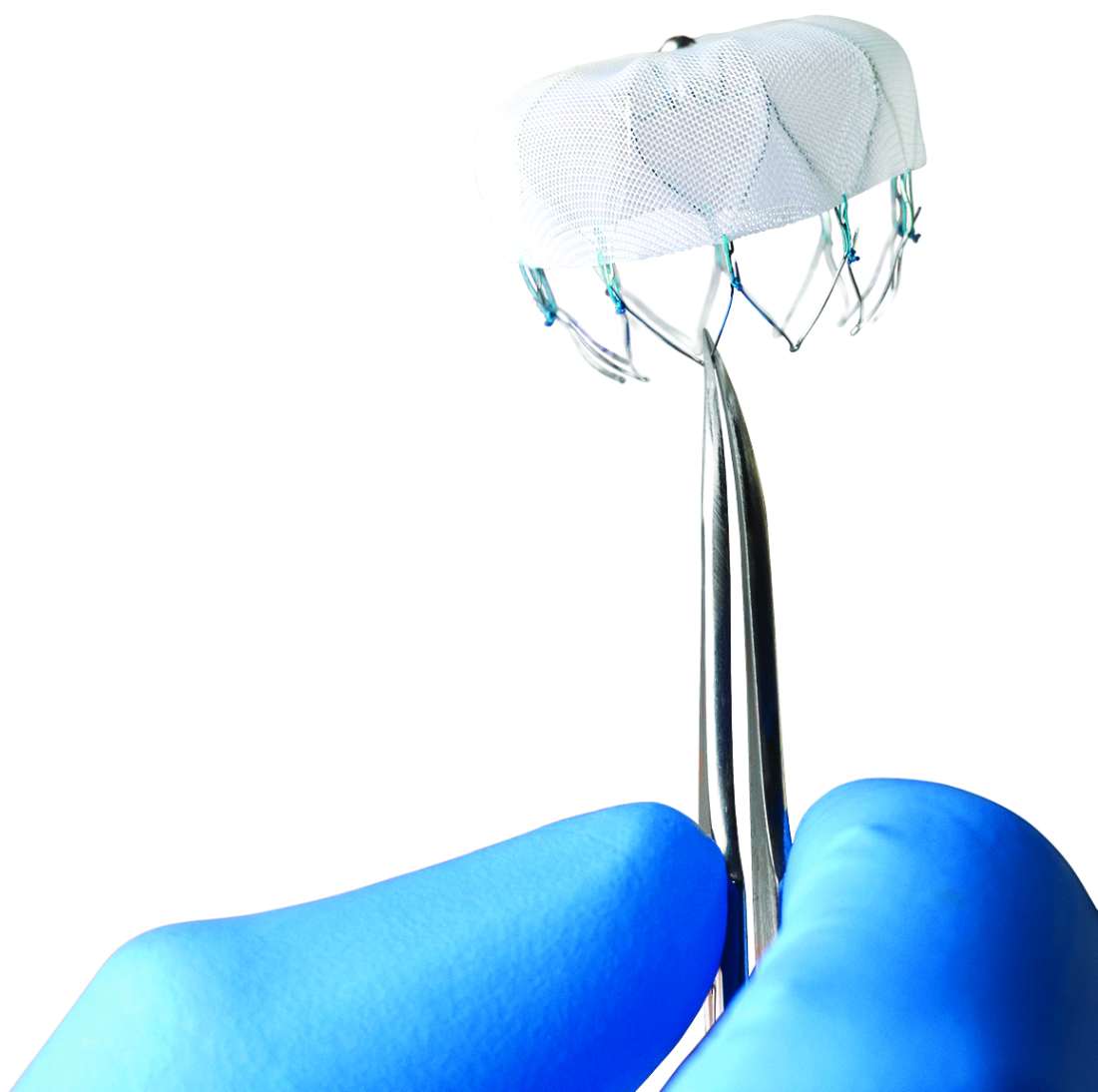

BOSTON – Device-related thrombus (DRT) does not occur often after left atrial appendage closure with the Watchman device. When it does, however, it is associated with a significantly higher rate of stroke and systemic embolism compared with that of patients with no DRT, according to a recent analysis presented at the annual scientific sessions of the Heart Rhythm Society.

Given the negative implications of DRT, a judicious surveillance strategy should be considered, especially when DRT risk factors are present, investigators said in a report on the study, which was published simultaneously in Circulation.

“Certainly, DRT is associated with an increased risk of stroke, and therapeutic anticoagulation should be resumed when discovered with rigorous transesophageal echocardiography follow-up to ensure resolution,” noted senior investigator Vivek Y. Reddy, MD.

Although DRT was linked with higher rates of all strokes, ischemic strokes, and hemorrhagic strokes, the complication was not associated with a higher rate of all-cause mortality compared with patients who never had a DRT. The results also suggested a causal link between DRT and subsequent stroke in about half the patients with DRT who had a stroke, because their strokes occurred within a month following DRT diagnosis.

Despite these findings, a majority – 74% – of patients with an identified DRT did not have a stroke, and 87% of the strokes that occurred in the patients who received the Watchman device occurred in the absence of a DRT, reported Dr. Reddy, who presented the findings at the meeting.

The most immediate implication of the findings is the strong case they make for rethinking the timing of planned follow-up transesophageal echocardiography (TEE) examinations of patients after they receive a Watchman device. The current, standard protocol schedules a TEE at 45 days after Watchman placement, when routine anticoagulation usually stops, and then a second TEE 12 months after placement. A better schedule might be to perform the first TEE 3-4 months after Watchman placement to give a potential DRT time to form once oral anticoagulant therapy stops, suggested Dr. Reddy, professor and director of the cardiac arrhythmia service at Mount Sinai Hospital and Health System in New York.