Commission Issues ‘Radical Overhaul’ of Obesity Diagnosis

“We propose a radical overhaul of the actual diagnosis of obesity to improve global healthcare and practices and policies. The specific aims were to facilitate individualized assessment and care of people living with obesity while preserving resources by reducing overdiagnosis and unnecessary or inadequate interventions,” Professor Louise Baur, chair of Child & Adolescent Health at the University of Sydney, Australia, said during a UK Science Media Centre (SMC) news briefing.

The report calls first for a diagnosis of obesity via confirmation of excess adiposity using measures such as waist circumference or waist-to-hip ratio in addition to BMI. Next, a clinical assessment of signs and symptoms of organ dysfunction due to obesity and/or functional limitations determines whether the individual has the disease “clinical obesity,” or “preclinical obesity,” a condition of health risk but not an illness itself.

Published on January 14, 2025, in The Lancet Diabetes & Endocrinology, the document also provides broad guidance on management for the two obesity conditions, emphasizing a personalized and stigma-free approach. The Lancet Commission on Obesity comprised 56 experts in relevant fields including endocrinology, surgery, nutrition, and public health, along with people living with obesity.

The report has been endorsed by more than 75 medical organizations, including the Association of British Clinical Diabetologists, the American Association of Clinical Endocrinologists, the American Diabetes Association, the American Heart Association, the Obesity Society, the World Obesity Federation, and obesity and endocrinology societies from countries in Europe, Latin America, Asia, and South Africa.

In recent years, many in the field have found fault with the current BMI-based definition of obesity (BMI ≥ 30 for people of European descent, with other cutoffs for specific ethnic groups), primarily because BMI alone doesn’t reflect a person’s fat vs lean mass, fat distribution, or overall health. The new definition aims to overcome these limitations, as well as settle the debate about whether obesity is a “disease.”

“We now have a clinical diagnosis for obesity, which has been lacking. ... The traditional classification based on BMI ... reflects simply whether or not there is excess adiposity, and sometimes not even precise in that regard, either ... It has never been a classification that was meant to diagnose a specific illness with its own clinical characteristics in the same way we diagnose any other illness,” Commission Chair Francesco Rubino, MD, professor and chair of Metabolic and Bariatric Surgery at King’s College London, England, said in an interview.

He added, “The fact that now we have a clinical diagnosis allows recognition of the nuance that obesity is generally a risk and for some can be an illness. There are some who have risk but don’t have the illness here and now. And it’s crucially important for clinical decision-making, but also for policies to have a distinction between those two things because the treatment strategy for one and the other are substantially different.”

Asked to comment, obesity specialist Michael A. Weintraub, MD, clinical assistant professor of endocrinology at New York University Langone Health, said in an interview, “I wholeheartedly agree with modifying the definition of obesity in this more accurate way. ... There has already been a lot of talk about the fallibility of BMI and that BMI over 30 does not equal obesity. ... So a major Commission article like this I think can really move those discussions even more into the forefront and start changing practice.”

However, Weintraub added, “I think there needs to be another step here of more practical guidance on how to actually implement this ... including how to measure waist circumference and to put it into a patient flow.”

Asked to comment, obesity expert Luca Busetto, MD, associate professor of internal medicine at the Department of Medicine of the University of Padova, Italy, said in an interview that he agrees with the general concept of moving beyond BMI in defining obesity. That view was expressed in a proposed “framework” from the European Association for the Study of Obesity (EASO), for which Busetto was the lead author.

Busetto also agrees with the emphasis on the need for a complete clinical evaluation of patients to define their health status. “The precise definition of the symptoms defining clinical obesity in adults and children is extremely important, emphasizing the fact that obesity is a severe and disabling disease by itself, even without or before the occurrence of obesity-related complications,” he said.

However, he takes issue with the Commission’s designation that “preclinical” obesity is not a disease. “The critical point of disagreement for me is the message that obesity is a disease only if it is clinical or only if it presents functional impairment or clinical symptoms. This remains, in my opinion, an oversimplification, not taking into account the fact that the pathophysiological mechanisms that lead to fat accumulation and ‘adipose tissue-based chronic disease’ usually start well before the occurrence of symptoms.”

Busetto pointed to examples such as type 2 diabetes and chronic kidney disease, both of which can be asymptomatic in their early phases yet are still considered diseases at those points. “I have no problem in accepting a distinction between preclinical and clinical stages of the disease, and I like the definition of clinical obesity, but why should this imply the fact that obesity is NOT a disease since its beginning?”

The Commission does state that preclinical obesity should be addressed, mostly with preventive approaches but in some cases with more intensive management. “This is highly relevant, but the risk of an undertreatment of obesity in its asymptomatic state remains in place. This could delay appropriate management for a progressive disease that certainly should not be treated only when presenting symptoms. It would be too late,” Busetto said.

And EASO framework coauthor Gijs Goossens, PhD, professor of cardiometabolic physiology of obesity at Maastricht University Medical Centre, the Netherlands, added a concern that those with excess adiposity but lower BMI might be missed entirely, noting: “Since abdominal fat accumulation better predicts chronic cardiometabolic diseases and can also be accompanied by clinical manifestations in individuals with overweight as a consequence of compromised adipose tissue function, the proposed model may lead to underdiagnosis or undertreatment in individuals with BMI 25-30 who have excess abdominal fat.”

Diagnosis and Management Beyond BMI

The Commission advises using BMI solely as a screening marker for potential obesity. Those with a BMI > 40 can be assumed to have excess body fat. For others with a BMI at or near the obesity threshold for a specific country or ethnic group, or for whom clinical judgment suggests possible clinical obesity, excess or abnormal adiposity must be confirmed by one of the following:

- At least one measure of body size in addition to BMI

- At least two measures of body size, regardless of BMI

- Direct body fat measurement, such as a dual-energy x-ray absorptiometry (DEXA) scan

Measurement of body size can be assessed in three ways:

- Waist circumference ≥ 102 cm for men and ≥ 88 cm for women

- Waist-to-hip ratio > 0.90 for men and > 0.85 for women

- Waist-to-height ratio > 0.50 for all.
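The confirmation rule and body-size cutoffs above can be expressed as a short check. The Python sketch below is illustrative only and is not taken from the Commission's report. The names (`Measurements`, `has_excess_adiposity`) are hypothetical; the women's waist-to-hip cutoff is taken as 0.85; BMI "at or near" the population threshold is simplified to "at or above" a caller-supplied `bmi_threshold` (30 by default, the cutoff for people of European descent); and because no DEXA cutoff is given here, a direct body-fat result is passed in as a yes/no flag.

```python
from dataclasses import dataclass


@dataclass
class Measurements:
    """Hypothetical record of one adult's measurements (field names are illustrative)."""
    sex: str                  # "male" or "female"
    weight_kg: float
    height_cm: float
    waist_cm: float
    hip_cm: float
    # Assumption: a direct body-fat measurement (e.g. DEXA) is summarized as a
    # yes/no result by the clinician, since no numeric cutoff is given here.
    direct_excess_fat: bool = False


def bmi(m: Measurements) -> float:
    """Body mass index: weight in kg divided by the square of height in metres."""
    height_m = m.height_cm / 100
    return m.weight_kg / height_m ** 2


def body_size_criteria_met(m: Measurements) -> int:
    """Count how many of the three body-size criteria are met."""
    male = m.sex == "male"
    waist = m.waist_cm >= (102 if male else 88)
    waist_to_hip = m.waist_cm / m.hip_cm > (0.90 if male else 0.85)
    waist_to_height = m.waist_cm / m.height_cm > 0.50  # same cutoff for all
    return sum([waist, waist_to_hip, waist_to_height])


def has_excess_adiposity(m: Measurements, bmi_threshold: float = 30.0) -> bool:
    """Illustrative version of the confirmation rule (hypothetical helper).

    bmi_threshold is population-specific; 30 is the cutoff for people of
    European descent. "At or near" the threshold is simplified to "at or above".
    """
    b = bmi(m)
    if b > 40:
        return True                       # excess body fat can be assumed
    n = body_size_criteria_met(m)
    return (
        (n >= 1 and b >= bmi_threshold)   # one body-size measure plus BMI
        or n >= 2                         # two body-size measures, regardless of BMI
        or m.direct_excess_fat            # direct body-fat measurement
    )
```

Because any one of the three routes is enough, the function returns True as soon as one of them is satisfied; a woman with a BMI under 30 who meets only the waist-to-hip criterion, for example, would not be confirmed unless a direct measurement showed excess fat.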

Weintraub noted, “Telemedicine is a useful tool used by many patients and providers but may also make it challenging to accurately assess someone’s body size. Having technology like an iPhone app to measure body size would circumvent this challenge but this type of tool has not yet been validated.”

A person without excess adiposity does not have obesity; a person with excess adiposity does. Further assessment then establishes whether the person has an illness, that is, clinical obesity, indicated by signs or symptoms of organ dysfunction and/or limitations of daily activities. If not, the person has “preclinical” obesity.
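The two-step logic in the paragraph above amounts to a simple decision. The Python sketch below is a hypothetical illustration, not an implementation from the report: `ObesityStatus` and `classify` are invented names, the excess-adiposity result is assumed to come from the confirmation step, and deciding whether a finding counts as obesity-related organ dysfunction remains a clinical judgment the code does not attempt.

```python
from enum import Enum
from typing import Sequence


class ObesityStatus(Enum):
    NO_OBESITY = "no obesity"
    PRECLINICAL = "preclinical obesity"
    CLINICAL = "clinical obesity"


def classify(excess_adiposity: bool,
             organ_dysfunction_signs: Sequence[str],
             limits_daily_activities: bool) -> ObesityStatus:
    """Two-step classification sketch (hypothetical helper).

    excess_adiposity: outcome of the confirmation step (BMI, body size, or direct measurement).
    organ_dysfunction_signs: obesity-related signs/symptoms a clinician has documented
        (illustrative free-text labels; judging what counts is left to the clinician).
    limits_daily_activities: whether obesity limits day-to-day activities.
    """
    if not excess_adiposity:
        return ObesityStatus.NO_OBESITY       # no excess adiposity, no obesity
    if organ_dysfunction_signs or limits_daily_activities:
        return ObesityStatus.CLINICAL         # an illness here and now
    return ObesityStatus.PRECLINICAL          # health risk, but not an illness
```

For example, `classify(True, ["breathlessness"], False)` returns `ObesityStatus.CLINICAL`, while `classify(True, [], False)` returns `ObesityStatus.PRECLINICAL`.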

The document provides a list of 18 obesity-related organ, tissue, and body system criteria for diagnosing “clinical” obesity in adults, including upper airways (eg, apneas/hypopneas), respiratory (breathlessness), cardiovascular (hypertension, heart failure), liver (fatty liver disease with hepatic fibrosis), reproductive (polycystic ovary syndrome, hypogonadism), and metabolism (hyperglycemia, hypertriglyceridemia, low high-density lipoprotein cholesterol). A list of 13 such criteria is also provided for children. “Limitations of day-to-day activities” are included on both lists.

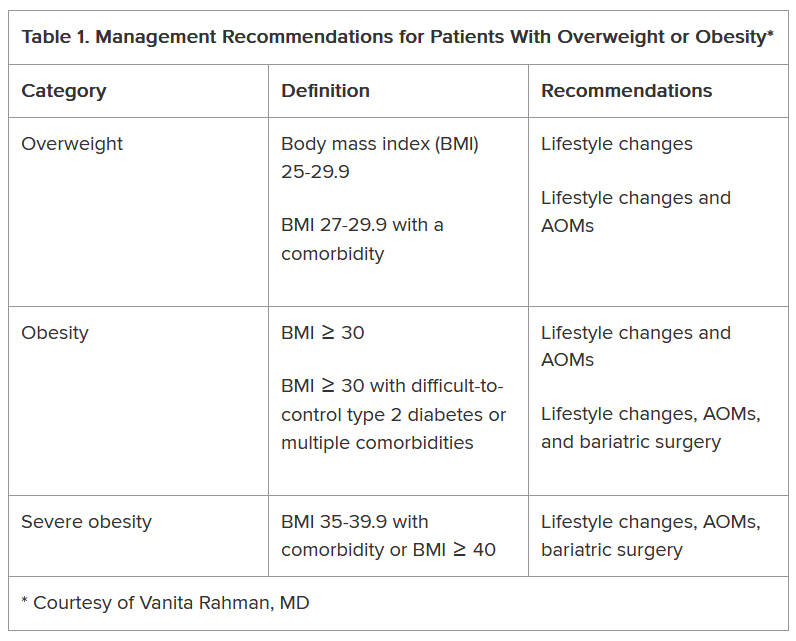

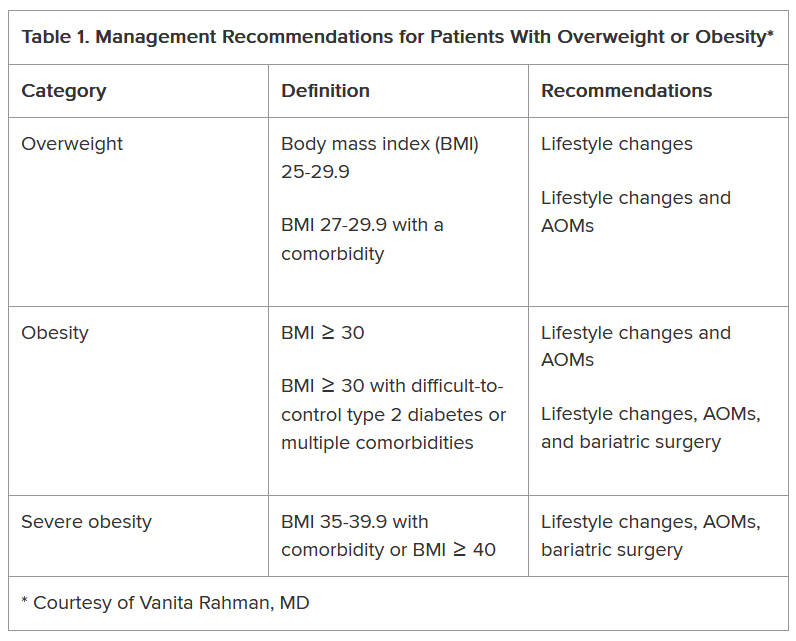

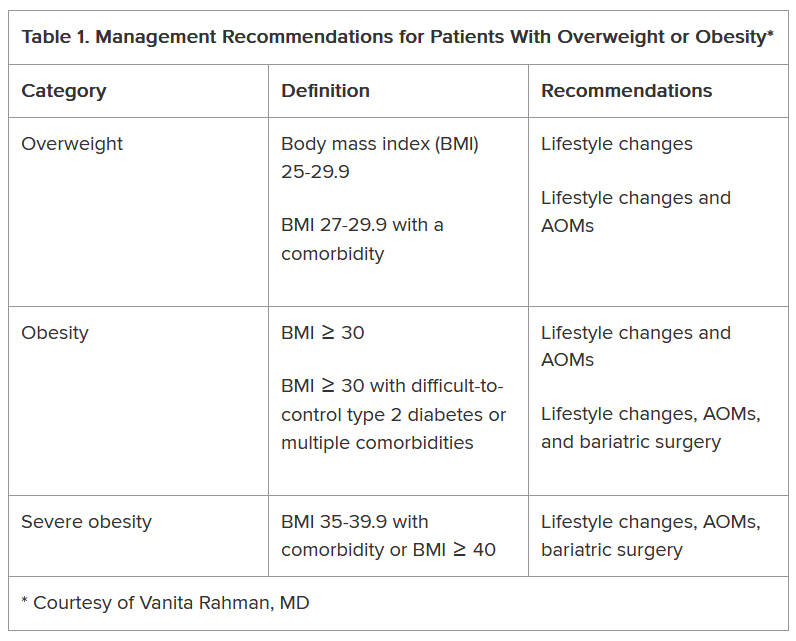

Management Differs by Designation

For preclinical obesity, management should focus on risk reduction and prevention of progression to clinical obesity or other obesity-related diseases. Such approaches include health counseling for weight loss or prevention of weight gain, monitoring over time, and active weight loss interventions in people at higher risk of developing clinical obesity and other obesity-related diseases.

Management for clinical obesity focuses on improving or reversing organ dysfunction. The choice of evidence-based treatment should be informed by individual risk-benefit assessments and decided via “active discussion” with the patient. Success is determined by improvement in signs and symptoms rather than by measures of weight loss.

In response to a reporter’s question at the SMC briefing about the implications for the use of weight-loss medications, Rubino noted that this wasn’t the focus of the report, but nonetheless said that this new obesity definition could help with their targeted use. “The strategy and intent by which you use the drugs is different in clinical and preclinical obesity. ... Pharmacological interventions could be used for patients with high-risk preclinical obesity, with the intent of reducing risk, but we ... would use the same medication at a different intensity, dose, and maybe in combination therapies.”

As for clinical obesity, “It could be more or less severe and could affect more than one organ, and so clinical obesity might require drugs, might require surgery, or may require, in some cases, a combination of both of them, to achieve the best possible outcomes. ... We want to make sure that the person is restoring health ... with whatever it takes.”

Rubino believes this new definition will convince the remaining clinicians who haven’t yet accepted the concept of obesity as a disease. “When they see clinical obesity, I think it will be much harder to say that a biological process that is capable of causing a dysfunction in the heart or the lungs is less of a disease than another biological process that causes similar dysfunction in the heart or the lungs. ... It’s going to be objective. Obesity is a spectrum of different situations. ... When it’s an illness, clinical obesity, it’s not a matter of if or when. It’s a matter of fact.”

There were no industrial grants or other funding for this initiative. King’s Health Partners hosted the initiative and provided logistical and personnel support to facilitate administrative work and the Delphi-like consensus-development process. Rubino declared receiving research grants from Ethicon (Johnson & Johnson), Novo Nordisk, and Medtronic; consulting fees from Morphic Medical; speaking honoraria from Medtronic, Ethicon, Novo Nordisk, Eli Lilly, and Amgen. Rubino has served (unpaid) as a member of the scientific advisory board for Keyron and a member of the data safety and monitoring board for GI Metabolic Solutions; is president of the Metabolic Health Institute (non-profit); and is the sole director of Metabolic Health International and London Metabolic and Bariatric Surgery (private practice). Baur declared serving on the scientific advisory board for Novo Nordisk (for the ACTION Teens study) and Eli Lilly and receiving speaker fees (paid to the institution) from Novo Nordisk.

A version of this article first appeared on Medscape.com.

“We propose a radical overhaul of the actual diagnosis of obesity to improve global healthcare and practices and policies. The specific aims were to facilitate individualized assessment and care of people living with obesity while preserving resources by reducing overdiagnosis and unnecessary or inadequate interventions,” Professor Louise Baur, chair of Child & Adolescent Health at the University of Sydney, Australia, said during a UK Science Media Centre (SMC) news briefing.

The report calls first for a diagnosis of obesity via confirmation of excess adiposity using measures such as waist circumference or waist-to-hip ratio in addition to BMI. Next, a clinical assessment of signs and symptoms of organ dysfunction due to obesity and/or functional limitations determines whether the individual has the disease “clinical obesity,” or “preclinical obesity,” a condition of health risk but not an illness itself.

Published on January 14, 2025, in The Lancet Diabetes & Endocrinology, the document also provides broad guidance on management for the two obesity conditions, emphasizing a personalized and stigma-free approach. The Lancet Commission on Obesity comprised 56 experts in relevant fields including endocrinology, surgery, nutrition, and public health, along with people living with obesity.

The report has been endorsed by more than 75 medical organizations, including the Association of British Clinical Diabetologists, the American Association of Clinical Endocrinologists, the American Diabetes Association, the American Heart Association, the Obesity Society, the World Obesity Federation, and obesity and endocrinology societies from countries in Europe, Latin America, Asia, and South Africa.

In recent years, many in the field have found fault with the current BMI-based definition of obesity (> 30 for people of European descent or other cutoffs for specific ethnic groups), primarily because BMI alone doesn’t reflect a person’s fat vs lean mass, fat distribution, or overall health. The new definition aims to overcome these limitations, as well as settle the debate about whether obesity is a “disease.”

“We now have a clinical diagnosis for obesity, which has been lacking. ... The traditional classification based on BMI ... reflects simply whether or not there is excess adiposity, and sometimes not even precise in that regard, either…It has never been a classification that was meant to diagnose a specific illness with its own clinical characteristics in the same way we diagnose any other illness,” Commission Chair Francesco Rubino, MD, professor and chair of Metabolic and Bariatric Surgery at King’s College London, England, said in an interview.

He added, “The fact that now we have a clinical diagnosis allows recognition of the nuance that obesity is generally a risk and for some can be an illness. There are some who have risk but don’t have the illness here and now. And it’s crucially important for clinical decision-making, but also for policies to have a distinction between those two things because the treatment strategy for one and the other are substantially different.”

Asked to comment, obesity specialist Michael A. Weintraub, MD, clinical assistant professor of endocrinology at New York University Langone Health, said in an interview, “I wholeheartedly agree with modifying the definition of obesity in this more accurate way. ... There has already been a lot of talk about the fallibility of BMI and that BMI over 30 does not equal obesity. ... So a major Commission article like this I think can really move those discussions even more into the forefront and start changing practice.”

However, Weintraub added, “I think there needs to be another step here of more practical guidance on how to actually implement this ... including how to measure waist circumference and to put it into a patient flow.”

Asked to comment, obesity expert Luca Busetto, MD, associate professor of internal medicine at the Department of Medicine of the University of Padova, Italy, said in an interview that he agrees with the general concept of moving beyond BMI in defining obesity. That view was expressed in a proposed “framework” from the European Association for the Study of Obesity (EASO), for which Busetto was the lead author.

Busetto also agrees with the emphasis on the need for a complete clinical evaluation of patients to define their health status. “The precise definition of the symptoms defining clinical obesity in adults and children is extremely important, emphasizing the fact that obesity is a severe and disabling disease by itself, even without or before the occurrence of obesity-related complications,” he said.

However, he takes issue with the Commission’s designation that “preclinical” obesity is not a disease. “The critical point of disagreement for me is the message that obesity is a disease only if it is clinical or only if it presents functional impairment or clinical symptoms. This remains, in my opinion, an oversimplification, not taking into account the fact that the pathophysiological mechanisms that lead to fat accumulation and ‘adipose tissue-based chronic disease’ usually start well before the occurrence of symptoms.”

Busetto pointed to examples such as type 2 diabetes and chronic kidney disease, both of which can be asymptomatic in their early phases yet are still considered diseases at those points. “I have no problem in accepting a distinction between preclinical and clinical stages of the disease, and I like the definition of clinical obesity, but why should this imply the fact that obesity is NOT a disease since its beginning?”

The Commission does state that preclinical obesity should be addressed, mostly with preventive approaches but in some cases with more intensive management. “This is highly relevant, but the risk of an undertreatment of obesity in its asymptomatic state remains in place. This could delay appropriate management for a progressive disease that certainly should not be treated only when presenting symptoms. It would be too late,” Busetto said.

And EASO framework coauthor Gijs Goossens, PhD, professor of cardiometabolic physiology of obesity at Maastricht University Medical Centre, the Netherlands, added a concern that those with excess adiposity but lower BMI might be missed entirely, noting “Since abdominal fat accumulation better predicts chronic cardiometabolic diseases and can also be accompanied by clinical manifestations in individuals with overweight as a consequence of compromised adipose tissue function, the proposed model may lead to underdiagnosis or undertreatment in individuals with BMI 25-30 who have excess abdominal fat.”

Diagnosis and Management Beyond BMI

The Commission advises the use of BMI solely as a marker to screen for potential obesity. Those with a BMI > 40 can be assumed to have excess body fat. For others with a BMI at or near the threshold for obesity in a specific country or ethnic group or for whom there is the clinical judgment of the potential for clinical obesity, confirmation of excess or abnormal adiposity is needed by one of the following:

- At least one measurement of body size and BMI

- At least two measures of body size, regardless of BMI

- Direct body fat measurement, such as a dual-energy x-ray absorptiometry (DEXA) scan

Measurement of body size can be assessed in three ways:

- Waist circumference ≥ 102 cm for men and ≥ 88 cm for women

- Waist-to-hip ratio > 0.90 for men and > 0.50 for women

- Waist-to-height ratio > 0.50 for all.

Weintraub noted, “Telemedicine is a useful tool used by many patients and providers but may also make it challenging to accurately assess someone’s body size. Having technology like an iPhone app to measure body size would circumvent this challenge but this type of tool has not yet been validated.”

If the person does not have excess adiposity, they don’t have obesity. Those with excess adiposity do have obesity. Further assessment is then needed to establish whether the person has an illness, that is, clinical obesity, indicated by signs/symptoms of organ dysfunction, and/or limitations of daily activities. If not, they have “preclinical” obesity.

The document provides a list of 18 obesity-related organ, tissue, and body system criteria for diagnosing “clinical” obesity in adults, including upper airways (eg, apneas/hypopneas), respiratory (breathlessness), cardiovascular (hypertension, heart failure), liver (fatty liver disease with hepatic fibrosis), reproductive (polycystic ovary syndrome, hypogonadism), and metabolism (hyperglycemia, hypertriglyceridemia, low high-density lipoprotein cholesterol). A list of 13 such criteria is also provided for children. “Limitations of day-to-day activities” are included on both lists.

Management Differs by Designation

For preclinical obesity, management should focus on risk reduction and prevention of progression to clinical obesity or other obesity-related diseases. Such approaches include health counseling for weight loss or prevention of weight gain, monitoring over time, and active weight loss interventions in people at higher risk of developing clinical obesity and other obesity-related diseases.

Management for clinical obesity focuses on improvements or reversal of the organ dysfunction. The type of evidence-based treatment and management should be informed by individual risk-benefit assessments and decided via “active discussion” with the patient. Success is determined by improvement in the signs and symptoms rather than measures of weight loss.

In response to a reporter’s question at the SMC briefing about the implications for the use of weight-loss medications, Rubino noted that this wasn’t the focus of the report, but nonetheless said that this new obesity definition could help with their targeted use. “The strategy and intent by which you use the drugs is different in clinical and preclinical obesity. ... Pharmacological interventions could be used for patients with high-risk preclinical obesity, with the intent of reducing risk, but we ... would use the same medication at a different intensity, dose, and maybe in combination therapies.”

As for clinical obesity, “It could be more or less severe and could affect more than one organ, and so clinical obesity might require drugs, might require surgery, or may require, in some cases, a combination of both of them, to achieve the best possible outcomes. ... We want to make sure that the person is restoring health ... with whatever it takes.”

Rubino believes this new definition will convince the remaining clinicians who haven’t yet accepted the concept of obesity as a disease. “When they see clinical obesity, I think it will be much harder to say that a biological process that is capable of causing a dysfunction in the heart or the lungs is less of a disease than another biological process that causes similar dysfunction in the heart of the lungs. ... It’s going to be objective. Obesity is a spectrum of different situations. ... When it’s an illness, clinical obesity, it’s not a matter of if or when. It’s a matter of fact.”

There were no industrial grants or other funding for this initiative. King’s Health Partners hosted the initiative and provided logistical and personnel support to facilitate administrative work and the Delphi-like consensus-development process. Rubino declared receiving research grants from Ethicon (Johnson & Johnson), Novo Nordisk, and Medtronic; consulting fees from Morphic Medical; speaking honoraria from Medtronic, Ethicon, Novo Nordisk, Eli Lilly, and Amgen. Rubino has served (unpaid) as a member of the scientific advisory board for Keyron and a member of the data safety and monitoring board for GI Metabolic Solutions; is president of the Metabolic Health Institute (non-profit); and is the sole director of Metabolic Health International and London Metabolic and Bariatric Surgery (private practice). Baur declared serving on the scientific advisory board for Novo Nordisk (for the ACTION Teens study) and Eli Lilly and receiving speaker fees (paid to the institution) from Novo Nordisk.

A version of this article first appeared on Medscape.com.

“We propose a radical overhaul of the actual diagnosis of obesity to improve global healthcare and practices and policies. The specific aims were to facilitate individualized assessment and care of people living with obesity while preserving resources by reducing overdiagnosis and unnecessary or inadequate interventions,” Professor Louise Baur, chair of Child & Adolescent Health at the University of Sydney, Australia, said during a UK Science Media Centre (SMC) news briefing.

The report calls first for a diagnosis of obesity via confirmation of excess adiposity using measures such as waist circumference or waist-to-hip ratio in addition to BMI. Next, a clinical assessment of signs and symptoms of organ dysfunction due to obesity and/or functional limitations determines whether the individual has the disease “clinical obesity,” or “preclinical obesity,” a condition of health risk but not an illness itself.

Published on January 14, 2025, in The Lancet Diabetes & Endocrinology, the document also provides broad guidance on management for the two obesity conditions, emphasizing a personalized and stigma-free approach. The Lancet Commission on Obesity comprised 56 experts in relevant fields including endocrinology, surgery, nutrition, and public health, along with people living with obesity.

The report has been endorsed by more than 75 medical organizations, including the Association of British Clinical Diabetologists, the American Association of Clinical Endocrinologists, the American Diabetes Association, the American Heart Association, the Obesity Society, the World Obesity Federation, and obesity and endocrinology societies from countries in Europe, Latin America, Asia, and South Africa.

In recent years, many in the field have found fault with the current BMI-based definition of obesity (> 30 for people of European descent or other cutoffs for specific ethnic groups), primarily because BMI alone doesn’t reflect a person’s fat vs lean mass, fat distribution, or overall health. The new definition aims to overcome these limitations, as well as settle the debate about whether obesity is a “disease.”

“We now have a clinical diagnosis for obesity, which has been lacking. ... The traditional classification based on BMI ... reflects simply whether or not there is excess adiposity, and sometimes not even precise in that regard, either…It has never been a classification that was meant to diagnose a specific illness with its own clinical characteristics in the same way we diagnose any other illness,” Commission Chair Francesco Rubino, MD, professor and chair of Metabolic and Bariatric Surgery at King’s College London, England, said in an interview.

He added, “The fact that now we have a clinical diagnosis allows recognition of the nuance that obesity is generally a risk and for some can be an illness. There are some who have risk but don’t have the illness here and now. And it’s crucially important for clinical decision-making, but also for policies to have a distinction between those two things because the treatment strategy for one and the other are substantially different.”

Asked to comment, obesity specialist Michael A. Weintraub, MD, clinical assistant professor of endocrinology at New York University Langone Health, said in an interview, “I wholeheartedly agree with modifying the definition of obesity in this more accurate way. ... There has already been a lot of talk about the fallibility of BMI and that BMI over 30 does not equal obesity. ... So a major Commission article like this I think can really move those discussions even more into the forefront and start changing practice.”

However, Weintraub added, “I think there needs to be another step here of more practical guidance on how to actually implement this ... including how to measure waist circumference and to put it into a patient flow.”

Asked to comment, obesity expert Luca Busetto, MD, associate professor of internal medicine at the Department of Medicine of the University of Padova, Italy, said in an interview that he agrees with the general concept of moving beyond BMI in defining obesity. That view was expressed in a proposed “framework” from the European Association for the Study of Obesity (EASO), for which Busetto was the lead author.

Busetto also agrees with the emphasis on the need for a complete clinical evaluation of patients to define their health status. “The precise definition of the symptoms defining clinical obesity in adults and children is extremely important, emphasizing the fact that obesity is a severe and disabling disease by itself, even without or before the occurrence of obesity-related complications,” he said.

However, he takes issue with the Commission’s designation that “preclinical” obesity is not a disease. “The critical point of disagreement for me is the message that obesity is a disease only if it is clinical or only if it presents functional impairment or clinical symptoms. This remains, in my opinion, an oversimplification, not taking into account the fact that the pathophysiological mechanisms that lead to fat accumulation and ‘adipose tissue-based chronic disease’ usually start well before the occurrence of symptoms.”

Busetto pointed to examples such as type 2 diabetes and chronic kidney disease, both of which can be asymptomatic in their early phases yet are still considered diseases at those points. “I have no problem in accepting a distinction between preclinical and clinical stages of the disease, and I like the definition of clinical obesity, but why should this imply the fact that obesity is NOT a disease since its beginning?”

The Commission does state that preclinical obesity should be addressed, mostly with preventive approaches but in some cases with more intensive management. “This is highly relevant, but the risk of an undertreatment of obesity in its asymptomatic state remains in place. This could delay appropriate management for a progressive disease that certainly should not be treated only when presenting symptoms. It would be too late,” Busetto said.

And EASO framework coauthor Gijs Goossens, PhD, professor of cardiometabolic physiology of obesity at Maastricht University Medical Centre, the Netherlands, added a concern that those with excess adiposity but lower BMI might be missed entirely, noting “Since abdominal fat accumulation better predicts chronic cardiometabolic diseases and can also be accompanied by clinical manifestations in individuals with overweight as a consequence of compromised adipose tissue function, the proposed model may lead to underdiagnosis or undertreatment in individuals with BMI 25-30 who have excess abdominal fat.”

Diagnosis and Management Beyond BMI

The Commission advises the use of BMI solely as a marker to screen for potential obesity. Those with a BMI > 40 can be assumed to have excess body fat. For others with a BMI at or near the threshold for obesity in a specific country or ethnic group or for whom there is the clinical judgment of the potential for clinical obesity, confirmation of excess or abnormal adiposity is needed by one of the following:

- At least one measurement of body size and BMI

- At least two measures of body size, regardless of BMI

- Direct body fat measurement, such as a dual-energy x-ray absorptiometry (DEXA) scan

Measurement of body size can be assessed in three ways:

- Waist circumference ≥ 102 cm for men and ≥ 88 cm for women

- Waist-to-hip ratio > 0.90 for men and > 0.85 for women

- Waist-to-height ratio > 0.50 for all

Weintraub noted, “Telemedicine is a useful tool used by many patients and providers but may also make it challenging to accurately assess someone’s body size. Having technology like an iPhone app to measure body size would circumvent this challenge but this type of tool has not yet been validated.”

If the person does not have excess adiposity, they don’t have obesity. Those with excess adiposity do have obesity. Further assessment is then needed to establish whether the person has an illness, that is, clinical obesity, indicated by signs/symptoms of organ dysfunction, and/or limitations of daily activities. If not, they have “preclinical” obesity.
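The stepwise flow above (screen with BMI, confirm excess adiposity, then look for organ dysfunction or activity limits) can be sketched in code. This is a simplified illustration, not a validated clinical tool, and it rests on assumptions the article does not spell out: the BMI cutoff for the "one body-size measure plus BMI" route defaults to 30 (a parameter, since cutoffs differ by country and ethnic group) and is read as BMI at or above that cutoff; a DEXA result is represented only as a yes/no flag because no numeric threshold is given here; the female waist-to-hip cutoff of 0.85 follows the commission report; and the 18 adult organ-dysfunction criteria are collapsed into a single boolean. The `Measurements` record and all function names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Measurements:
    """Hypothetical input record; field names are illustrative only."""
    sex: str                                # "male" or "female"
    weight_kg: float
    height_cm: float
    waist_cm: Optional[float] = None
    hip_cm: Optional[float] = None
    dexa_excess_fat: Optional[bool] = None  # assumed yes/no result of a direct fat scan


def bmi(m: Measurements) -> float:
    """Body mass index: weight in kg divided by height in metres squared."""
    height_m = m.height_cm / 100
    return m.weight_kg / height_m ** 2


def elevated_body_size_measures(m: Measurements) -> int:
    """Count how many of the three body-size criteria are met (0 to 3)."""
    if m.sex not in ("male", "female"):
        raise ValueError("sex must be 'male' or 'female'")
    if m.waist_cm is None:
        return 0  # every criterion needs a waist measurement
    male = m.sex == "male"
    count = 0
    # Waist circumference >= 102 cm (men) or >= 88 cm (women)
    if m.waist_cm >= (102 if male else 88):
        count += 1
    # Waist-to-hip ratio > 0.90 (men) or > 0.85 (women); skipped without a hip measurement
    if m.hip_cm is not None and m.waist_cm / m.hip_cm > (0.90 if male else 0.85):
        count += 1
    # Waist-to-height ratio > 0.50 for everyone
    if m.waist_cm / m.height_cm > 0.50:
        count += 1
    return count


def has_excess_adiposity(m: Measurements, bmi_cutoff: float = 30.0) -> bool:
    """Apply the confirmation routes for excess adiposity."""
    if bmi(m) > 40:
        return True  # excess body fat can be assumed above BMI 40
    if m.dexa_excess_fat:
        return True  # direct body fat measurement shows excess fat
    n = elevated_body_size_measures(m)
    if n >= 2:
        return True  # two body-size measures, regardless of BMI
    return n >= 1 and bmi(m) >= bmi_cutoff  # one body-size measure plus BMI


def classify(m: Measurements, organ_dysfunction: bool,
             limits_daily_activities: bool, bmi_cutoff: float = 30.0) -> str:
    """Return 'no obesity', 'preclinical obesity', or 'clinical obesity'."""
    if not has_excess_adiposity(m, bmi_cutoff):
        return "no obesity"
    if organ_dysfunction or limits_daily_activities:
        return "clinical obesity"
    return "preclinical obesity"
```

For example, a woman weighing 80 kg at 160 cm (BMI about 31) with a 92 cm waist and 110 cm hips meets two body-size criteria (waist circumference and waist-to-height ratio), so in this sketch she has excess adiposity and, with no organ dysfunction or activity limits, is classified as having preclinical obesity. A man with a BMI around 26 but a 95 cm waist and 100 cm hips also meets two criteria, which illustrates the "regardless of BMI" route.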

The document provides a list of 18 obesity-related organ, tissue, and body system criteria for diagnosing “clinical” obesity in adults, including upper airways (eg, apneas/hypopneas), respiratory (breathlessness), cardiovascular (hypertension, heart failure), liver (fatty liver disease with hepatic fibrosis), reproductive (polycystic ovary syndrome, hypogonadism), and metabolism (hyperglycemia, hypertriglyceridemia, low high-density lipoprotein cholesterol). A list of 13 such criteria is also provided for children. “Limitations of day-to-day activities” are included on both lists.

Management Differs by Designation

For preclinical obesity, management should focus on risk reduction and prevention of progression to clinical obesity or other obesity-related diseases. Such approaches include health counseling for weight loss or prevention of weight gain, monitoring over time, and active weight loss interventions in people at higher risk of developing clinical obesity and other obesity-related diseases.

Management for clinical obesity focuses on improvements or reversal of the organ dysfunction. The type of evidence-based treatment and management should be informed by individual risk-benefit assessments and decided via “active discussion” with the patient. Success is determined by improvement in the signs and symptoms rather than measures of weight loss.

In response to a reporter’s question at the SMC briefing about the implications for the use of weight-loss medications, Rubino noted that this wasn’t the focus of the report, but nonetheless said that this new obesity definition could help with their targeted use. “The strategy and intent by which you use the drugs is different in clinical and preclinical obesity. ... Pharmacological interventions could be used for patients with high-risk preclinical obesity, with the intent of reducing risk, but we ... would use the same medication at a different intensity, dose, and maybe in combination therapies.”

As for clinical obesity, “It could be more or less severe and could affect more than one organ, and so clinical obesity might require drugs, might require surgery, or may require, in some cases, a combination of both of them, to achieve the best possible outcomes. ... We want to make sure that the person is restoring health ... with whatever it takes.”

Rubino believes this new definition will convince the remaining clinicians who haven’t yet accepted the concept of obesity as a disease. “When they see clinical obesity, I think it will be much harder to say that a biological process that is capable of causing a dysfunction in the heart or the lungs is less of a disease than another biological process that causes similar dysfunction in the heart or the lungs. ... It’s going to be objective. Obesity is a spectrum of different situations. ... When it’s an illness, clinical obesity, it’s not a matter of if or when. It’s a matter of fact.”

There were no industrial grants or other funding for this initiative. King’s Health Partners hosted the initiative and provided logistical and personnel support to facilitate administrative work and the Delphi-like consensus-development process. Rubino declared receiving research grants from Ethicon (Johnson & Johnson), Novo Nordisk, and Medtronic; consulting fees from Morphic Medical; speaking honoraria from Medtronic, Ethicon, Novo Nordisk, Eli Lilly, and Amgen. Rubino has served (unpaid) as a member of the scientific advisory board for Keyron and a member of the data safety and monitoring board for GI Metabolic Solutions; is president of the Metabolic Health Institute (non-profit); and is the sole director of Metabolic Health International and London Metabolic and Bariatric Surgery (private practice). Baur declared serving on the scientific advisory board for Novo Nordisk (for the ACTION Teens study) and Eli Lilly and receiving speaker fees (paid to the institution) from Novo Nordisk.

A version of this article first appeared on Medscape.com.

FROM THE LANCET DIABETES & ENDOCRINOLOGY

Losing Your Mind Trying to Understand the BP-Dementia Link

You could be forgiven if you are confused about how blood pressure (BP) affects dementia. First, you read an article extolling the benefits of BP lowering, then a study about how stopping antihypertensives slows cognitive decline in nursing home residents. It’s enough to make you lose your mind.

The Brain Benefits of BP Lowering

It should be stated unequivocally that you should absolutely treat high BP. It may have once been acceptable to state, “The greatest danger to a man with high blood pressure lies in its discovery, because then some fool is certain to try and reduce it.” But those dark days are long behind us.

In these divided times, at least we can agree that we should treat high BP. The cardiovascular (CV) benefits, in and of themselves, justify the decision. But BP’s relationship with dementia is more complex. There are different types of dementia even though we tend to lump them all into one category. Vascular dementia is driven by the same pathophysiology and risk factors as cardiac disease. It’s intuitive that treating hypertension, diabetes, hypercholesterolemia, and smoking will decrease the risk for stroke and limit the damage to the brain that we see with repeated vascular insults. For Alzheimer’s disease, high BP and other CV risk factors seem to increase the risk even if the mechanism is not fully elucidated.

Estimates suggest that if we could lower the prevalence of hypertension by 25%, there would be 160,000 fewer cases of Alzheimer’s disease. But the data are not as robust as one might hope. A 2021 Cochrane review found that hypertension treatment slowed cognitive decline, but the quality of the evidence was low. Short duration of follow-up, dropouts, crossovers, and other problems with the data precluded any certainty. What’s more, hypertension in midlife is associated with cognitive decline and dementia, but its impact in those over age 70 is less clear. Later in life, or once cognitive impairment has already developed, it may be too late for BP lowering to have any impact.

Potential Harms of Lowering BP

All this needs to be weighed against the potential harms of treating hypertension. I will reiterate that hypertension should be treated and treated aggressively for the prevention of CV events. But overtreatment, especially in older patients, is associated with hypotension, falls, and syncope. Older patients are also at risk for polypharmacy and drug-drug interactions.

A Korean nationwide survey showed a U-shaped association between BP and Alzheimer’s disease risk in adults (mean age, 67 years), with both high and low BPs associated with a higher risk for Alzheimer’s disease. Not all studies agree, though. A post hoc analysis of SPRINT MIND did not find any negative impact of intensive BP lowering on cognitive outcomes or cerebral perfusion in older adults (mean age, 68 years). But it didn’t do much good either. Given the heterogeneity of the data, doubts remain about whether aggressive BP lowering might be detrimental in older patients with comorbidities and preexisting dementia. The obvious corollary, then, is whether deprescribing antihypertensive medications could be beneficial.

A recent publication in JAMA Internal Medicine attempted to address this very question. The cohort study used data from Veterans Affairs nursing home residents (mean age, 78 years) to emulate a randomized trial on deprescribing antihypertensives and cognitive decline. Many of the residents’ cognitive scores worsened over the course of follow-up; however, the decline was less pronounced in the deprescribing group (10% vs 12%). The same group did a similar analysis looking at CV outcomes and found no increased risk for heart attack or stroke with deprescribing BP medications. Taken together, these nursing home data suggest that deprescribing may help slow cognitive decline without the expected trade-off of increased CV events.

Deprescribing, Yes or No?

However, randomized data would obviously be preferable, and these are in short supply. One such trial, the DANTE study, found no benefit to deprescribing in terms of cognition in adults aged 75 years or older with mild cognitive impairment. The study follow-up was only 16 weeks, however, which is hardly enough time to demonstrate any effect, positive or negative. The most that can be said is that it didn’t cause many short-term adverse events.

Perhaps the best conclusion to draw from this somewhat underwhelming collection of data is that lowering high BP is important, but less important the closer we get to the end of life. Hypotension is obviously bad, and overly aggressive BP lowering is going to lead to negative outcomes in older adults because gravity is an unforgiving mistress.

Deprescribing antihypertensives in older adults is probably not going to cause major negative outcomes, but whether it will do much good in nonhypotensive patients is debatable. The bigger problem is the millions of people with undiagnosed or undertreated hypertension. We would probably have less dementia if we treated hypertension when it does the most good: as a primary-prevention strategy in midlife.

Dr. Labos is a cardiologist at Hôpital Notre-Dame, Montreal, Quebec, Canada. He disclosed no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

New Weight Loss Drugs May Fight Obesity-Related Cancer, Too

The latest glucagon-like peptide 1 (GLP-1) receptor agonists have been heralded for their potential to not only boost weight loss and glucose control but also improve cardiovascular, gastric, hepatic, and renal values.

Throughout 2024, research has also indicated GLP-1 drugs may reduce risks for obesity-related cancer.

In a US study of more than 1.6 million patients with type 2 diabetes, cancer researchers found that patients who took a GLP-1 drug had significant risk reductions for 10 of 13 obesity-associated cancers, as compared with patients who only took insulin.

They also saw a lower risk for stomach cancer, though the reduction wasn’t statistically significant, and no reduced risk for postmenopausal breast cancer or thyroid cancer.

The associations make sense, particularly because GLP-1 drugs have unexpected immune-modulating effects linked to obesity-associated cancers.

“The protective effects of GLP-1s against obesity-associated cancers likely stem from multiple mechanisms,” said lead author Lindsey Wang, a medical student and research scholar at Case Western Reserve University in Cleveland.

“These drugs promote substantial weight loss, reducing obesity-related cancer risks,” she said. “They also enhance insulin sensitivity and lower insulin levels, decreasing cancer cell growth signals.”

Additional GLP-1 Studies

The Case Western team also published a study in December 2023 that found people with type 2 diabetes who took GLP-1s had a 44% lower risk for colorectal cancer than those who took insulin and a 25% lower risk than those who took metformin. The research suggested even greater risk reductions among those with overweight or obesity, with GLP-1 users having a 50% lower risk than those who took insulin and a 42% lower risk than those who took metformin.

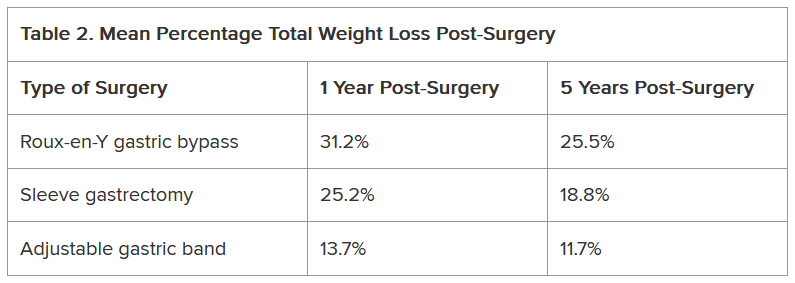

In another recent Case Western study, both bariatric surgery and GLP-1 drugs reduced the risk for obesity-related cancers. Over 10 years, compared with no treatment, bariatric surgery was associated with a 22% risk reduction and GLP-1 drugs with a 39% risk reduction.

Other studies worldwide have looked at the effects of GLP-1 drugs on various cancer cell lines. In a study using pancreatic cancer cell lines, the GLP-1 drug liraglutide suppressed cancer cell growth and led to cell death. Similarly, a study using breast cancer cells found that liraglutide reduced cancer cell viability and the cells’ ability to migrate.

As researchers identify additional links between GLP-1s and improvements across organ systems, the knock-on effects could lead to lower cancer risks as well. For example, studies presented at The Liver Meeting in San Diego in November pointed to GLP-1s reducing fatty liver disease, which can slow the progression to liver cancer.

“Separate from obesity, having higher levels of body fat is associated with an increased risk of several forms of cancer,” said Neil Iyengar, MD, an oncologist at Memorial Sloan Kettering Cancer Center in New York City. Iyengar researches the relationship between obesity and cancer.

“I foresee that this class of drugs will revolutionize obesity and the cancer burden that comes with it, if people can get access,” he said. “This really is an exciting development.”

Ongoing GLP-1 Research

On the other hand, cancer researchers have also expressed concerns about potential associations between GLP-1s and increased cancer risks. In the obesity-associated cancer study by Case Western researchers, patients with type 2 diabetes taking a GLP-1 drug appeared to have a slightly higher risk for kidney cancer than those taking metformin.

In addition, GLP-1 studies in animals have indicated that the drugs may increase the risks for medullary thyroid cancer and pancreatic cancer. However, the data on increased risks in humans remain inconclusive, and more recent studies refute these findings.

For instance, cancer researchers in India conducted a systematic review and meta-analysis of semaglutide and cancer risks, finding that 37 randomized controlled trials and 19 real-world studies didn’t find increased risks for any cancer, including pancreatic and thyroid cancers.

In another systematic review by Brazilian researchers, 50 trials found GLP-1s didn’t increase the risk for breast cancer or benign breast neoplasms.

In 2025, new retrospective studies should provide more nuanced data, especially as more patients, both with and without type 2 diabetes, take semaglutide, tirzepatide, and new GLP-1 drugs now in the research pipeline.

“The holy grail has always been getting a medication to treat obesity,” said Anne McTiernan, MD, PhD, an epidemiologist and obesity researcher at the Fred Hutchinson Cancer Center in Seattle.

“There have been trials focused on these medications’ effects on diabetes and cardiovascular disease treatment, but no trials have tested their effects on cancer risk,” she said. “Usually, many years of follow-up of large numbers of patients are needed to see cancer effects of a carcinogen or cancer-preventing intervention.”

Those clinical trials are likely coming soon, she said. Researchers will need to conduct prospective clinical trials to examine the direct relationship between GLP-1 drugs and cancer risks, as well as the underlying mechanisms linked to cancer cell growth, activation of immune cells, and anti-inflammatory properties.

Because GLP-1 medications aren’t intended to be taken forever, researchers will also need to consider the associations with long-term cancer risks. Even so, weight loss and other obesity-related improvements could contribute to overall lower cancer risks in the end.

“If taking these drugs for a limited amount of time can help people lose weight and get on an exercise plan, then that’s helping lower cancer risk long-term,” said Sonali Thosani, MD, associate professor of endocrine neoplasia and hormonal disorders at the University of Texas MD Anderson Cancer Center in Houston.

“But it all comes back to someone making lifestyle changes and sticking to them, even after they stop taking the drugs,” she said. “If they can do that, then you’ll probably see a net positive for long-term cancer risks and other long-term health risks.”

A version of this article appeared on Medscape.com.

The latest glucagon-like peptide 1 (GLP-1) receptor agonists have been heralded for their potential to not only boost weight loss and glucose control but also improve cardiovascular, gastric, hepatic, and renal values.

Throughout 2024, research has also indicated GLP-1 drugs may reduce risks for obesity-related cancer.

In a US study of more than 1.6 million patients with type 2 diabetes, cancer researchers found that patients who took a GLP-1 drug had significant risk reductions for 10 of 13 obesity-associated cancers, as compared with patients who only took insulin.

They also saw a declining risk for stomach cancer, though it wasn’t considered statistically significant, but not a reduced risk for postmenopausal breast cancer or thyroid cancer.

The associations make sense, particularly because GLP-1 drugs have unexpected effects on modulating immune functions linked to obesity-associated cancers.

“The protective effects of GLP-1s against obesity-associated cancers likely stem from multiple mechanisms,” said lead author Lindsey Wang, a medical student and research scholar at Case Western Reserve University in Cleveland.

“These drugs promote substantial weight loss, reducing obesity-related cancer risks,” she said. “They also enhance insulin sensitivity and lower insulin levels, decreasing cancer cell growth signals.”

Additional GLP-1 Studies

The Case Western team also published a study in December 2023 that found people with type 2 diabetes who took GLP-1s had a 44% lower risk for colorectal cancer than those who took insulin and a 25% lower risk than those who took metformin. The research suggested even greater risk reductions among those with overweight or obesity, with GLP-1 users having a 50% lower risk than those who took insulin and a 42% lower risk than those who took metformin.

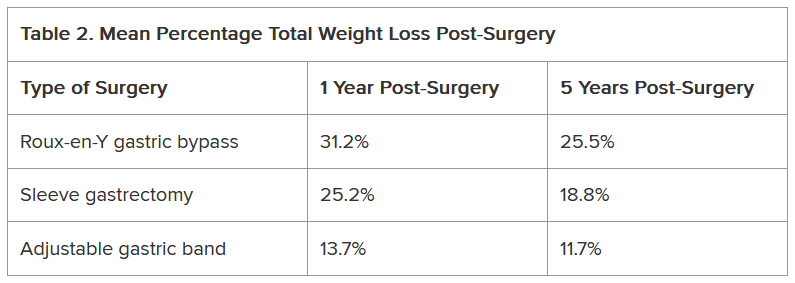

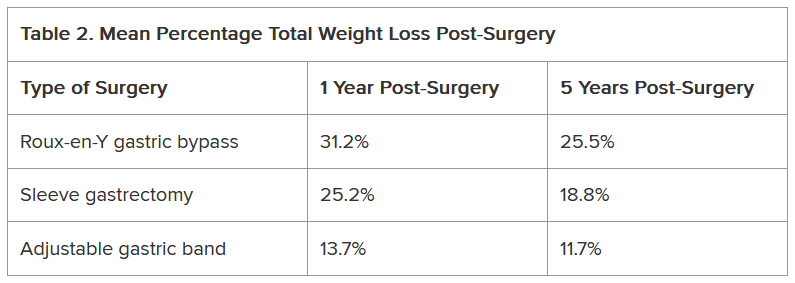

In another recent Case Western study, both bariatric surgery and GLP-1 drugs reduced the risk for obesity-related cancers. While those who had bariatric surgery had a 22% risk reduction over 10 years, as compared with those who received no treatment, those taking GLP-1 had a 39% risk reduction.

Other studies worldwide have looked at GLP-1 drugs and tumor effects among various cancer cell lines. In a study using pancreatic cancer cell lines, GLP-1 liraglutide suppressed cancer cell growth and led to cell death. Similarly, a study using breast cancer cells found liraglutide reduced cancer cell viability and the ability for cells to migrate.

As researchers identify additional links between GLP-1s and improvements across organ systems, the knock-on effects could lead to lower cancer risks as well. For example, studies presented at The Liver Meeting in San Diego in November pointed to GLP-1s reducing fatty liver disease, which can slow the progression to liver cancer.

“Separate from obesity, having higher levels of body fat is associated with an increased risk of several forms of cancer,” said Neil Iyengar, MD, an oncologist at Memorial Sloan Kettering Cancer Center in New York City. Iyengar researches the relationship between obesity and cancer.

“I foresee that this class of drugs will revolutionize obesity and the cancer burden that comes with it, if people can get access,” he said. “This really is an exciting development.”

Ongoing GLP-1 Research

On the other hand, cancer researchers have also expressed concerns about potential associations between GLP-1s and increased cancer risks. In the obesity-associated cancer study by Case Western researchers, patients with type 2 diabetes taking a GLP-1 drug appeared to have a slightly higher risk for kidney cancer than those taking metformin.

In addition, GLP-1 studies in animals have indicated that the drugs may increase the risks for medullary thyroid cancer and pancreatic cancer. However, the data on increased risks in humans remain inconclusive, and more recent studies refute these findings.

For instance, cancer researchers in India conducted a systematic review and meta-analysis of semaglutide and cancer risks, finding that 37 randomized controlled trials and 19 real-world studies didn’t find increased risks for any cancer, including pancreatic and thyroid cancers.

In another systematic review by Brazilian researchers, 50 trials found GLP-1s didn’t increase the risk for breast cancer or benign breast neoplasms.

In 2025, new retrospective studies will show more nuanced data, especially as more patients — both with and without type 2 diabetes — take semaglutide, tirzepatide, and new GLP-1 drugs in the research pipeline.

“The holy grail has always been getting a medication to treat obesity,” said Anne McTiernan, MD, PhD, an epidemiologist and obesity researcher at the Fred Hutchinson Cancer Center in Seattle.

“There have been trials focused on these medications’ effects on diabetes and cardiovascular disease treatment, but no trials have tested their effects on cancer risk,” she said. “Usually, many years of follow-up of large numbers of patients are needed to see cancer effects of a carcinogen or cancer-preventing intervention.”

Those clinical trials are likely coming soon, she said. Researchers will need to conduct prospective clinical trials to examine the direct relationship between GLP-1 drugs and cancer risks, as well as the underlying mechanisms linked to cancer cell growth, activation of immune cells, and anti-inflammatory properties.

Because GLP-1 medications aren’t intended to be taken forever, researchers will also need to consider the associations with long-term cancer risks. Even so, weight loss and other obesity-related improvements could contribute to overall lower cancer risks in the end.

“If taking these drugs for a limited amount of time can help people lose weight and get on an exercise plan, then that’s helping lower cancer risk long-term,” said Sonali Thosani, MD, associate professor of endocrine neoplasia and hormonal disorders at the University of Texas MD Anderson Cancer Center in Houston.

“But it all comes back to someone making lifestyle changes and sticking to them, even after they stop taking the drugs,” she said. “If they can do that, then you’ll probably see a net positive for long-term cancer risks and other long-term health risks.”

A version of this article appeared on Medscape.com.

Both High and Low HDL Levels Linked to Increased Risk for Age-Related Macular Degeneration

TOPLINE:

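Both high and low levels of high-density lipoprotein (HDL) were associated with an increased risk for age-related macular degeneration (AMD). The study also identified a potential novel single-nucleotide polymorphism linked to an elevated risk for the retinal condition.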

METHODOLOGY:

- Researchers conducted a cross-sectional retrospective analysis using data from the All of Us research program to assess the association between lipoprotein levels and the risk for AMD.

- They analyzed data from 2328 patients with AMD (mean age, 75.5 years; 46.7% women; 84.2% White individuals) and 5028 matched controls (mean age, 75.6 years; 52.5% women; 82.9% White individuals).

- Data were extracted for smoking status, history of hyperlipidemia, use of statins (categorized as hepatically and non-hepatically metabolized), and laboratory values for total triglyceride, low-density lipoprotein (LDL), and HDL levels.

- Data for single-nucleotide polymorphisms associated with the dysregulation of LDL and HDL metabolism were extracted using the PLINK toolkit.

TAKEAWAY:

- Both high and low HDL levels were associated with an increased risk for AMD (adjusted odds ratio [aOR], 1.28 for both; both P < .001), whereas low and high levels of triglyceride and LDL did not demonstrate a statistically significant association with the risk for AMD.

- A history of smoking and statin use showed significant associations with an increased risk for AMD (aOR, 1.30 and 1.36, respectively; both P < .001).

- Single-nucleotide polymorphisms in the genes associated with HDL metabolism, ABCA1 and LIPC, were negatively associated with the risk for AMD (aOR, 0.88; P = .04 and aOR, 0.86; P = .001, respectively).

- A single-nucleotide polymorphism associated with lipoprotein(a), or Lp(a), was identified as a novel variant linked to an increased risk for AMD (aOR, 1.37; P = .007).
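The odds ratios above can be read with a little arithmetic. As a general guide (our own notation, not taken from the study), write p1 for the probability of AMD in the group of interest and p0 for the probability in the reference group. An odds ratio compares the odds in the two groups, and an adjusted odds ratio (aOR) is the same quantity estimated after statistical adjustment for the other variables in the model:

```latex
\begin{aligned}
\mathrm{OR} &= \frac{p_1/(1-p_1)}{p_0/(1-p_0)}, \qquad
\Delta_{\text{odds}} = (\mathrm{OR}-1)\times 100\% \\
\mathrm{aOR} = 1.28 &\;\Rightarrow\; \Delta_{\text{odds}} = +28\% \\
\mathrm{aOR} = 0.86 &\;\Rightarrow\; \Delta_{\text{odds}} = -14\%
\end{aligned}
```

By the same rule, the aOR of 1.37 corresponds to 37% higher odds and the aOR of 0.88 to 12% lower odds. Odds ratios are not relative risks, and in a comparison of cases with matched controls such as this one, they cannot be converted into an absolute risk of developing AMD.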

IN PRACTICE:

“Despite conflicting evidence regarding the relationship with elevated HDL and AMD risk, this is to our knowledge the first time a U-shaped relationship with low and high HDL and AMD has been described. In fact, the presence of a U-shaped relationship may explain inconsistency in prior analyses comparing mean HDL levels in AMD and control populations,” the study authors wrote.
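The authors' point about comparing mean HDL levels can be illustrated with a toy calculation. The sketch below is hypothetical throughout: the counts are made up (not the study's data), each HDL category is represented by a single illustrative value, and it computes crude, unadjusted odds ratios rather than the adjusted estimates reported above. Because the synthetic cases are over-represented at both the low and the high end, cases and controls end up with the same mean HDL, yet both extremes show elevated odds relative to the middle category.

```python
# Hypothetical, made-up counts for illustration only; NOT data from the study.
# Each HDL category is represented by one illustrative value (mg/dL).
CATEGORY_VALUES = {"low": 35, "middle": 50, "high": 65}

CASES = {"low": 30, "middle": 40, "high": 30}     # synthetic AMD cases
CONTROLS = {"low": 20, "middle": 60, "high": 20}  # synthetic controls


def mean_hdl(counts: dict[str, int]) -> float:
    """Mean HDL of a group, using each category's representative value."""
    total = sum(counts.values())
    weighted = sum(CATEGORY_VALUES[cat] * n for cat, n in counts.items())
    return weighted / total


def odds_ratio(category: str, reference: str = "middle") -> float:
    """Crude (unadjusted) odds ratio of being a case in `category` vs `reference`."""
    odds_in_category = CASES[category] / CONTROLS[category]
    odds_in_reference = CASES[reference] / CONTROLS[reference]
    return odds_in_category / odds_in_reference


if __name__ == "__main__":
    print(f"Mean HDL, cases:    {mean_hdl(CASES):.1f}")
    print(f"Mean HDL, controls: {mean_hdl(CONTROLS):.1f}")
    print(f"OR, low vs middle:  {odds_ratio('low'):.2f}")
    print(f"OR, high vs middle: {odds_ratio('high'):.2f}")
```

With these synthetic counts, both groups have a mean HDL of 50.0, so a comparison of means shows no difference, while the low and high categories each have an odds ratio of 2.25 against the middle category. A U-shaped pattern like this is only visible when the extremes are examined separately.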

SOURCE:

The study was led by Jimmy S. Chen, MD, of the Viterbi Family Department of Ophthalmology and Shiley Eye Institute at the University of California, San Diego. It was published online on January 3, 2025, in Ophthalmology.

LIMITATIONS:

The study was limited by the retrospective collection and analysis of data. The use of billing codes for diagnosis extraction may have introduced documentation inaccuracies. No subgroup analysis by AMD severity was performed.

DISCLOSURES:

One of the authors was funded by grants from the National Eye Institute (NEI), Research to Prevent Blindness Career Development Award, Robert Machemer MD and International Retinal Research Foundation, and the UC San Diego Academic Senate. Another author reported receiving a grant from the National Heart, Lung, and Blood Institute, while a third author received funding from the National Institutes of Health (NIH), NEI, and Research to Prevent Blindness. The All of Us Research Program was supported by grants from the NIH and other sources. The authors reported no conflicts of interest.

This article was created using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article appeared on Medscape.com.