Autotransplant is linked to higher AML, MDS risk

Patients undergoing autologous hematopoietic cell transplantation for lymphoma or plasma cell myeloma have 10-100 times the risk of acute myeloid leukemia (AML) or myelodysplastic syndrome (MDS) seen in the general population, according to a retrospective cohort study.

The elevated risk also exceeds that of similar patients, most of whom did not undergo autotransplant.

Exposure to DNA-damaging drugs and ionizing radiation – both used in autotransplant – is known to increase the risk of these treatment-related myeloid neoplasms, according to Tomas Radivoyevitch, PhD, of the Cleveland Clinic Foundation and his colleagues. Concern about this complication has been growing as long-term survivorship after transplant improves.

The investigators analyzed data reported to the Center for International Blood and Marrow Transplant Research. Analyses were based on 9,028 patients undergoing autotransplant during 1995-2010 for Hodgkin lymphoma (916 patients), non-Hodgkin lymphoma (3,546 patients), or plasma cell myeloma (4,566 patients). Their median duration of follow-up was 90 months, 110 months, and 97 months, respectively.

Overall, 3.7% of the cohort developed AML or MDS after their transplant. More aggressive transplantation protocols increased the likelihood of this outcome: Risk was higher for patients with Hodgkin lymphoma who received conditioning with total body radiation versus chemotherapy alone (hazard ratio, 4.0); patients with non-Hodgkin lymphoma who received conditioning with total body radiation (HR, 1.7) or with busulfan and melphalan or cyclophosphamide (HR, 1.8) versus the BEAM regimen; patients with non-Hodgkin lymphoma or plasma cell myeloma who received three or more lines of chemotherapy versus just one line (HR, 1.9 and 1.8, respectively); and patients with non-Hodgkin lymphoma who underwent transplantation in 2005-2010 versus 1995-1999 (HR, 2.1).
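The cohort figures reported in the two paragraphs above can be cross-checked with simple arithmetic. The Python sketch below uses only numbers stated in the article: it confirms that the three disease groups add up to the reported 9,028 patients and turns the rounded 3.7% incidence into an approximate range of AML or MDS cases. The variable names are illustrative, and the case range is an inference from a rounded percentage, not a count reported by the authors.

```python
# Disease-group sizes reported for the CIBMTR autotransplant cohort (1995-2010).
cohort = {
    "Hodgkin lymphoma": 916,
    "non-Hodgkin lymphoma": 3546,
    "plasma cell myeloma": 4566,
}

total = sum(cohort.values())  # expected to match the reported 9,028

# The article reports 3.7% (rounded to one decimal), so any true value from
# 3.65% to 3.75% is consistent with it; the case count is only approximate.
approx_cases_low = 0.0365 * total
approx_cases_high = 0.0375 * total

for disease, n in cohort.items():
    print(f"{disease}: {n} patients ({100 * n / total:.1f}% of cohort)")
print(f"total: {total}")
print(f"AML/MDS cases consistent with 3.7%: about "
      f"{approx_cases_low:.0f}-{approx_cases_high:.0f}")
```

Run as written, it shows that plasma cell myeloma accounts for about half of the cohort and that 3.7% corresponds to roughly 330 to 340 patients.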

Patients in the Surveillance, Epidemiology, and End Results (SEER) database with the same lymphoma and plasma cell myeloma diagnoses, few of whom underwent autotransplant, had risks of AML and MDS that were 5-10 times higher than the background population level. By contrast, the autotransplant study cohort had an AML risk 10-50 times higher, and an MDS risk roughly 100 times higher, than the background level.

“These increases may be related to exposure to high doses of DNA-damaging drugs given for the autotransplant, but this hypothesis can only be tested in a prospective study,” Dr. Radivoyevitch and his coinvestigators wrote.

The reason for the greater elevation of MDS risk, compared with AML risk, is unknown. “One possible explanation is that many cases of MDS evolve to AML, and that earlier diagnosis from increased posttransplant surveillance resulted in a deficiency of AML,” they wrote. “A second is based on steeper MDS versus AML incidences versus age … and the possibility that transplantation recipient marrow ages (i.e., marrow biological ages) are perhaps decades older than calendar ages.”

The Center for International Blood and Marrow Transplant Research is supported by several U.S. government agencies and numerous pharmaceutical companies. The authors reported that they had no relevant conflicts of interest.

SOURCE: Radivoyevitch T et al. Leuk Res. 2018 Jul 19. pii: S0145-2126(18)30160-7.

FROM LEUKEMIA RESEARCH

Key clinical point:

Major finding: Patients undergoing autologous hematopoietic cell transplantation have risks for AML and MDS that are 10-100 times higher than those of the general population.

Study details: A retrospective cohort study of 9,028 patients undergoing hematopoietic cell autotransplant during 1995-2010 for Hodgkin lymphoma, non-Hodgkin lymphoma, or plasma cell myeloma.

Disclosures: The Center for International Blood and Marrow Transplant Research is supported by U.S. government agencies and numerous pharmaceutical companies. The authors reported that they have no relevant conflicts of interest.

Source: Radivoyevitch T et al. Leuk Res. 2018 Jul 19. pii: S0145-2126(18)30160-7.

Secondhand smoke drives ED visits for teens

Teens who were exposed to any type of tobacco smoke were more likely to visit an emergency department or urgent care center, and to have more such visits, compared with unexposed controls, based on data from more than 7,000 adolescents.

Approximately 35% of U.S. teens spent more than an hour exposed to secondhand smoke in a given week, wrote Ashley L. Merianos, PhD, of the University of Cincinnati and her colleagues.

In a study published in Pediatrics, the researchers conducted a secondary analysis of nonsmoking adolescents aged 12-17 years without an asthma diagnosis who took part in the PATH (Population Assessment of Tobacco and Health) study, a longitudinal cohort study of tobacco use behavior and related health outcomes among adolescents and adults in the United States. The data were collected between Oct. 3, 2014, and Oct. 30, 2015. The researchers examined three main measures of tobacco smoke exposure (TSE): living with a smoker, secondhand smoke exposure at home, and secondhand smoke exposure for an hour or more in the past 7 days.

Overall, teens who lived with a smoker, had secondhand smoke exposure at home, or had at least 1 hour of TSE had significantly more emergency department and/or urgent care visits (mean, 1.62-1.65 visits) than did unexposed peers (mean, 1.42-1.48 visits). Teens who both lived with a smoker and had at least 1 hour of TSE were significantly more likely to visit an ED or urgent care center.

In addition, teens in each exposure group (living with a smoker, secondhand smoke exposure at home, and at least 1 hour of TSE) were significantly more likely than unexposed peers to report shortness of breath, difficulty exercising, wheezing during and after exercise, and a dry cough at night (P less than .001).

The researchers also assessed other health indicators and found that teens with TSE were significantly less likely than unexposed peers to report very good or excellent health, and were approximately 1.5 times as likely to report missing school because of poor health.

The results were limited by several factors, including the use of self-reported TSE, parent reports of emergency or urgent care visits, and the restriction to variables available in the public-use PATH database, the researchers noted. They did, however, adjust for potential confounders such as household income, parent education, and health insurance status. “Because adolescents are high users of EDs and/or [urgent care] for primary care reasons, these venues are high-volume settings that should be used to offer interventions to adolescents with TSE and their families,” they said.

The researchers had no financial conflicts to disclose. The study was funded by the National Institutes of Health via the National Institute on Drug Abuse and the Eunice Kennedy Shriver National Institute of Child Health and Human Development.

SOURCE: Merianos AL et al. Pediatrics. 2018 Aug 6. doi: 10.1542/peds.2018-0266.

FROM PEDIATRICS

Researchers pinpoint antigen for autoimmune pancreatitis

Researchers have identified laminin 511 as a novel autoantigen in autoimmune pancreatitis (AIP). Antibodies against a truncated form of the protein were found in about half of patients with AIP but in fewer than 2% of controls, and mice immunized with the truncated antigen developed antibodies against it and pancreatic injury.

Laminin 511 plays a key role in cell–extracellular matrix (ECM) adhesion in pancreatic tissue. The results, published in Science Translational Medicine, could improve the biologic understanding of AIP, and antibodies against the antigen could potentially serve as a diagnostic marker for the disease.

Some autoantibodies are known to be associated with AIP, but few patients are seropositive for them.

The researchers had previously shown that injecting IgG from patients with AIP into neonatal mice led to pancreatic injury. The IgG bound to the basement membrane of the pancreatic acini, suggesting the presence of autoantibodies against an antigen in the ECM.

The researchers then screened known pancreatic ECM proteins against sera from patients with AIP, using Western blot analyses and immunosorbent column chromatography with human and mouse pancreas extracts and AIP patient IgG, but this approach did not identify the target antigen.

The team then performed an enzyme-linked immunosorbent assay using known pancreatic ECM proteins, including the laminin isoforms and fragments 511-FL, 521-FL, 511-E8, 521-E8, 111-E8, 211-E8, and 332-E8. FL denotes the full-length protein, while E8 designates a truncated fragment, produced by pancreatic elastase, that contains the integrin-binding site.

That experiment identified 511-E8 as a consistent autoantigen. In a survey of patients with AIP, 26 of 51 (51.0%) had autoantibodies against 511-E8, compared with just 2 of 122 controls (1.6%) (P less than .001). Further immunohistochemistry studies confirmed that patient IgG binds to laminin in pancreatic tissue.
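The seroprevalence figures above form a simple 2x2 table, which makes it easy to see what the antibody might offer as a diagnostic marker. The Python sketch below computes the sensitivity (share of AIP patients who are seropositive) and specificity (share of controls who are seronegative) implied by those counts, and runs a two-sided Fisher exact test. The article does not say which statistical test produced its P value; Fisher's exact test is an assumption chosen here because it suits a 2x2 table with small cell counts, so the printed P value should not be read as the study's own. The function names are hypothetical.

```python
from math import comb

# 2x2 table from the reported survey:
#                 anti-511-E8 positive   negative
# AIP patients            26                 25     (n = 51)
# controls                 2                120     (n = 122)
a, b = 26, 25
c, d = 2, 120

sensitivity = a / (a + b)  # share of AIP patients who are seropositive
specificity = d / (c + d)  # share of controls who are seronegative


def hypergeom_pmf(k, row1, col1, n):
    """P(k positives in row 1) with all table margins held fixed."""
    return comb(col1, k) * comb(n - col1, row1 - k) / comb(n, row1)


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher exact test: sum the probabilities of every table
    (with the same margins) that is no more likely than the observed one."""
    row1, col1, n = a + b, a + c, a + b + c + d
    p_obs = hypergeom_pmf(a, row1, col1, n)
    p_value = 0.0
    for k in range(max(0, row1 + col1 - n), min(row1, col1) + 1):
        p_k = hypergeom_pmf(k, row1, col1, n)
        if p_k <= p_obs * (1 + 1e-7):  # small tolerance for float ties
            p_value += p_k
    return p_value


p_value = fisher_exact_two_sided(a, b, c, d)
print(f"sensitivity: {sensitivity:.1%}, specificity: {specificity:.1%}")
print(f"two-sided Fisher exact P = {p_value:.2e}")
```

With these counts the antibody is highly specific (about 98% of controls are negative) but detects only about half of patients, which is consistent with the authors' cautious framing of it as a potential marker.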

When the researchers injected 511-E8, 511-FL, 521-FL, or ovalbumin into 8-week-old mice, with repeat injections after 28 and 56 days, only the mice that received 511-E8 showed evidence of pancreatic injury 28 days after the final immunization. The mice generated autoantibodies to 511-E8 but not ovalbumin.

The findings may have clinical significance. Patients with antibodies to laminin 511-E8 had a lower frequency of malignancies (0% vs. 32%; P = .0017) and allergic diseases (12% vs. 48%; P = .0043) than did patients without these antibodies.

The study was funded by the Japan Society for the Promotion of Science; the Japanese Ministry of Health, Labour, and Welfare; the Practical Research Project for Rare/Intractable Diseases Grant; the Agency for Medical Research and Development; and the Takeda Science Foundation. One of the authors has filed a patent related to the study results.

SOURCE: Shiokawa M et al. Sci Transl Med. 2018 Aug 8. doi: 10.1126/scitranslmed.aaq0997.

FROM SCIENCE TRANSLATIONAL MEDICINE

Key clinical point: Laminin 511-E8 is a newly identified autoantigen in autoimmune pancreatitis.

Major finding: Just over half of autoimmune pancreatitis patients had antibodies against the antigen, compared with 1.6% of controls.

Study details: A mouse and human study (n = 173).

Disclosures: The study was funded by the Japan Society for the Promotion of Science; the Japanese Ministry of Health, Labour, and Welfare; the Practical Research Project for Rare/Intractable Diseases Grant; the Agency for Medical Research and Development; and the Takeda Science Foundation. One of the authors has filed a patent related to the study results.

Source: Shiokawa M et al. Sci Transl Med. 2018 Aug 8. doi: 10.1126/scitranslmed.aaq0997.

RT linked with better survival in DCIS

Treatment with lumpectomy and radiotherapy was associated with a significant reduction in breast cancer mortality in patients with ductal carcinoma in situ (DCIS), compared with lumpectomy alone or mastectomy alone, investigators reported in JAMA Network Open.

Adjuvant radiation was associated with a 23% reduction in the risk of dying of breast cancer (adjusted hazard ratio, 0.77; 95% confidence interval, 0.67-0.88; P less than .001). The estimated 15-year cumulative breast cancer mortality was 2.33% for women treated with lumpectomy alone and 1.74% for women treated with lumpectomy and radiotherapy.
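The relative and absolute effects in the paragraph above tell different stories, and the arithmetic linking them is short. The Python sketch below reads 1 - HR as the relative reduction in the hazard of breast cancer death (the usual shorthand behind a "23% reduced risk") and subtracts the two 15-year cumulative mortality figures to get the absolute difference. It uses only the reported numbers; the variable names are illustrative.

```python
# Figures reported for lumpectomy + radiotherapy vs lumpectomy alone.
adjusted_hr = 0.77
ci_lower, ci_upper = 0.67, 0.88  # 95% confidence interval

# Reading (1 - HR) as the relative reduction in the hazard of breast cancer death.
relative_reduction = 1 - adjusted_hr

# 15-year cumulative breast cancer mortality, in percent.
mortality_lumpectomy_alone = 2.33
mortality_lumpectomy_rt = 1.74
absolute_difference = mortality_lumpectomy_alone - mortality_lumpectomy_rt

print(f"relative reduction: {relative_reduction:.0%}")
print(f"absolute difference at 15 years: {absolute_difference:.2f} percentage points")
print(f"CI excludes 1: {ci_upper < 1.0 or ci_lower > 1.0}")
```

A 23% relative reduction corresponds here to an absolute difference of under 1 percentage point at 15 years, which is why the authors describe the clinical benefit as small even though it is statistically significant.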

“Although the clinical benefit is small, it is intriguing that radiotherapy has this effect, which appears to be attributable to systemic activity rather than local control,” wrote Vasily Giannakeas, MPH, of the Women’s College Research Institute, Toronto, and colleagues.

Emerging evidence suggests that adding radiotherapy to breast-conserving surgery can lower the risk of local recurrence and reduce the risk of breast cancer mortality among women with DCIS. Because mortality associated with DCIS is low, however, the authors noted that deaths related to DCIS have been difficult to study, and the association of adjuvant radiotherapy with breast cancer survival in this population has not been clearly established.

To determine the extent to which radiotherapy is associated with reduced breast cancer mortality in patients treated for DCIS, and to identify the patient subgroups that might benefit most, the authors conducted a historical cohort study using the Surveillance, Epidemiology, and End Results database. They identified 140,366 women diagnosed with a first primary DCIS between 1998 and 2014 and made three separate comparisons using 1:1 propensity score matching: lumpectomy with radiotherapy versus lumpectomy alone, lumpectomy alone versus mastectomy, and lumpectomy with radiotherapy versus mastectomy.

A total of 35,070 women (25.0%) were treated with lumpectomy alone, 65,301 (46.5%) were treated with lumpectomy and radiotherapy, and 39,995 (28.5%) were treated with mastectomy.
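The treatment-group counts above should add up to the full cohort of 140,366 women, and the reported percentages should follow from them. The short Python check below, using only the article's figures and illustrative names, confirms both.

```python
# Treatment groups in the SEER DCIS cohort (1998-2014), as reported.
groups = {
    "lumpectomy alone": 35_070,
    "lumpectomy + radiotherapy": 65_301,
    "mastectomy": 39_995,
}

total = sum(groups.values())  # expected to match the reported 140,366
shares = {name: round(100 * n / total, 1) for name, n in groups.items()}

print(f"total: {total:,}")
for name, share in shares.items():
    print(f"{name}: {share}%")
```

The computed shares (25.0%, 46.5%, and 28.5%) match the figures in the text, so the group sizes and percentages are internally consistent.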

The overall 15-year cumulative breast cancer mortality for the entire cohort was 2.03%. The actuarial 15-year mortality in the mastectomy group (2.26%) was similar to that in women who had lumpectomy without radiotherapy (2.33%).

The adjusted hazard ratios for death from breast cancer were 0.77 (95% CI, 0.67-0.88) for lumpectomy and radiotherapy versus lumpectomy alone (29,465 propensity-matched pairs), 0.91 (95% CI, 0.78-1.05) for mastectomy versus lumpectomy alone (20,832 propensity-matched pairs), and 0.75 (95% CI, 0.65-0.87) for lumpectomy and radiotherapy versus mastectomy (29,865 propensity-matched pairs).

In subgroup analyses of the effect of radiotherapy on mortality, the HR was 0.59 (95% CI, 0.43-0.80) for patients younger than 50 years and 0.86 (95% CI, 0.73-1.01) for those aged 50 years and older. By estrogen receptor (ER) status, the HR was 0.67 (95% CI, 0.51-0.87) for ER-positive cancers, 0.50 (95% CI, 0.32-0.78) for ER-negative cancers, and 0.93 (95% CI, 0.77-1.13) for cancers of unknown ER status.

“How exactly radiotherapy affects survival is an important question that should be explored in future studies,” the authors concluded.

There was no outside funding source reported. Mr. Giannakeas is supported by the Canadian Institutes of Health Research Frederick Banting and Charles Best Doctoral Research Award.

SOURCE: Giannakeas V et al. JAMA Network Open. 2018 Aug 10. doi:10.1001/jamanetworkopen.2018.1100.

In an accompanying editorial, Mira Goldberg, MD, and Timothy J. Whelan, BM, BCh, of the department of oncology at McMaster University, Hamilton, Ont., noted that the primary goal of using adjuvant radiotherapy in patients with ductal carcinoma in situ (DCIS) is to reduce the risk of local recurrence of DCIS or of invasive breast cancer.

With widespread mammographic screening and improved technology that detects ever-smaller lesions, “there is increased concern about the overdiagnosis of DCIS,” they wrote. Results from recent studies generally suggest that patients with good prognostic factors and a low risk of local recurrence at 10 years (10%) are unlikely to gain any major benefit from radiotherapy.

They also pointed out growing interest in using molecular markers to identify patients who are at lower risk of recurrence and who therefore may not benefit from radiotherapy.

“The results of the study by Giannakeas and colleagues are reassuring,” the editorialists wrote, because the study demonstrated that the risk of breast cancer mortality in patients with DCIS is very low and that the potential absolute benefit of radiotherapy is also quite small. (The number of patients who needed to be treated to prevent one breast cancer death was 370.) These data continue to support omitting radiotherapy after lumpectomy for low-risk DCIS, particularly when “one considers the negative effects of treatment: the cost and inconvenience of 5-6 weeks of daily treatments, acute adverse effects such as breast pain and fatigue, and potential long-term toxic effects of cardiac disease and second cancers.”

The editorialists also highlighted the authors’ speculation that radiotherapy could have additional systemic effects, possibly through an elicited immune response or radiation scatter to distant tissues. Although this hypothesis is theoretically possible, they wrote, the results could also be explained by confounding factors, such as greater use of endocrine therapy among patients who received adjuvant radiotherapy.

Dr. Whelan has received research support from Genomic Health. This editorial accompanied the article by Giannakeas et al. (JAMA Network Open. 2018;1[4]:e181102). No other disclosures were reported.

FROM JAMA NETWORK OPEN

Key clinical point: Lumpectomy plus adjuvant radiotherapy was associated with lower breast cancer mortality than lumpectomy or mastectomy alone.

Major finding: The 15-year breast cancer mortality rate was 2.33% for lumpectomy alone, 1.74% for lumpectomy and radiation, and 2.26% for mastectomy.

Study details: A historical cohort study using Surveillance, Epidemiology, and End Results data that included 140,366 women diagnosed with first primary ductal carcinoma in situ.

Disclosures: There was no outside funding source reported. Mr. Giannakeas is supported by the Canadian Institutes of Health Research Frederick Banting and Charles Best Doctoral Research Award. No other disclosures were reported.

Source: Giannakeas V et al. JAMA Network Open. 2018 Aug 10. doi: 10.1001/jamanetworkopen.2018.1100.

Concurrent stimulant and opioid use ‘common’ in adult ADHD

A substantial number of adults with attention-deficit/hyperactivity disorder use stimulants and opioids concurrently, highlighting a need for research into the risks and benefits of long-term coadministration of these medications.

Researchers reported the results of a cross-sectional study using Medicaid Analytic eXtract data from 66,406 adults with ADHD across 29 states.

Overall, 32.7% used stimulants, and 5.4% had used both stimulants and opioids long term, defined as at least 30 consecutive days of use. Long-term opioid use was more common among adults who used stimulants, compared with those who did not use stimulants (16.5% vs. 13%), wrote Yu-Jung “Jenny” Wei, PhD, and her associates. The report was published in JAMA Network Open.

Most of the adults with long-term concurrent use of stimulants and opioids were taking short-acting opioids (81.8%) rather than long-acting opioids (20.6%), and nearly one-quarter (23.2%) had prescriptions for both long- and short-acting opioids.

The researchers noted a significant 12% increase in the prevalence of concurrent use of stimulants and opioids from 1999 to 2010.

“Our findings suggest that long-term concurrent use of stimulants and opioids has become an increasingly common practice among adult patients with ADHD,” wrote Dr. Wei, of the College of Pharmacy at the University of Florida, Gainesville, and her associates.

Long-term concurrent use also became more prevalent with age. Compared with adults in their 20s, those in their 30s had a 7% higher prevalence of long-term concurrent use, those aged 41-50 years had a 14% higher prevalence, and those aged 51-64 years had a 17% higher prevalence.

Adults with pain had a 10% higher prevalence of concurrent use, while those with schizophrenia appeared to have a 5% lower prevalence of concurrent use.

“Although the concurrent use of stimulants and opioids may initially have been prompted by ADHD symptoms and comorbid chronic pain, continued use of opioids alone or combined with central nervous system stimulants may result in drug dependence and other adverse effects (e.g., overdose) because of the high potential for abuse and misuse,” the authors wrote. “Identifying these high-risk patients allows for early intervention and may reduce the number of adverse events associated with the long-term use of these medications.”

Among the limitations cited is that only prescription medications filled and reimbursed by Medicaid were included in the analysis. “Considering that opioid prescription fills are commonly paid out of pocket, our reported prevalence of concurrent stimulant-opioid use may be too low,” they wrote.

The authors reported no conflicts of interest. One author was supported by an award from the National Institute on Aging.

SOURCE: Wei Y-J et al. JAMA Network Open. 2018 Aug 10. doi: 10.1001/jamanetworkopen.2018.1152.

FROM JAMA NETWORK OPEN

Key clinical point: Identifying high-risk patients “allows for early intervention and may reduce the number of adverse events associated with the long-term use of these medications.”

Major finding: About 5% of adults with ADHD are on both opioids and stimulants long term.

Study details: Cross-sectional study of 66,406 adults with ADHD.

Disclosures: The authors reported no conflicts of interest. One author was supported by an award from the National Institute on Aging.

Source: Wei Y-J et al. JAMA Network Open. 2018 Aug 10. doi: 10.1001/jamanetworkopen.2018.1152.

Treating sleep disorders in chronic opioid users

BALTIMORE – Given the prevalence of opioid use in the general population, sleep specialists need to be alert to the effects of opioid use on sleep and the link between chronic use and sleep disorders, a pulmonologist recommended at the annual meeting of the Associated Professional Sleep Societies.

Chronic opioid use has multiple effects on sleep that render continuous positive airway pressure (CPAP) all but ineffective, said Bernardo J. Selim, MD, of Mayo Clinic, Rochester, Minn. Characteristic signs of these effects include ataxic central sleep apnea (CSA) and sustained hypoxemia, for which CPAP is generally not effective. Obtaining arterial blood gas measurements in these patients is also important to rule out hypoventilation, he added.

In his review of opioid-induced sleep disorders, Dr. Selim cited a small “landmark” study of 24 chronic pain patients on opioids that found 46% had sleep-disordered breathing and that the risk rose with the morphine-equivalent dose they were taking (J Clin Sleep Med. 2014 Aug 15;10[8]:847-52).

A meta-analysis also found a dose-dependent relationship between opioid use and the severity of CSA, Dr. Selim noted (Anesth Analg. 2015 Jun;120[6]:1273-85). The prevalence of CSA in the study was 24%, and the investigators identified two risk factors for CSA severity: a morphine-equivalent dose exceeding 200 mg/day and a low or normal body mass index.

Dr. Selim noted that opioids reduce respiratory rate more than tidal volume and alter respiratory rhythm. “[Opioids] decrease hypercapnic but increase hypoxic ventilatory response, decrease the arousal index, decrease upper-airway muscle tone, and decrease and also act on chest and abdominal wall compliance.”

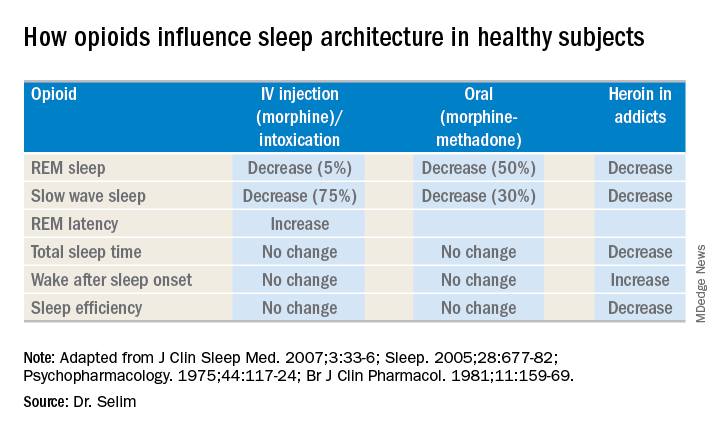

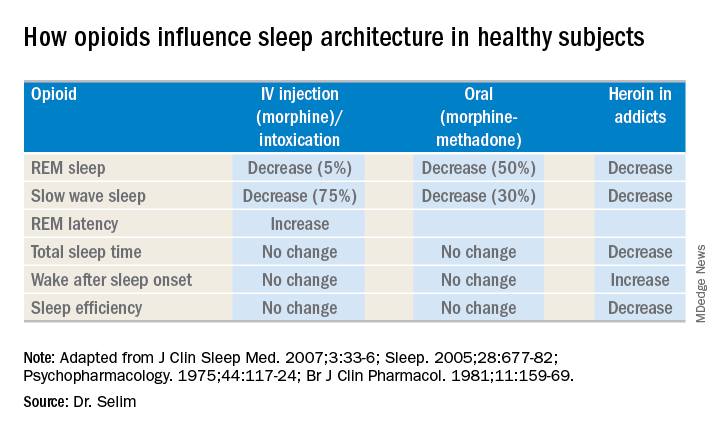

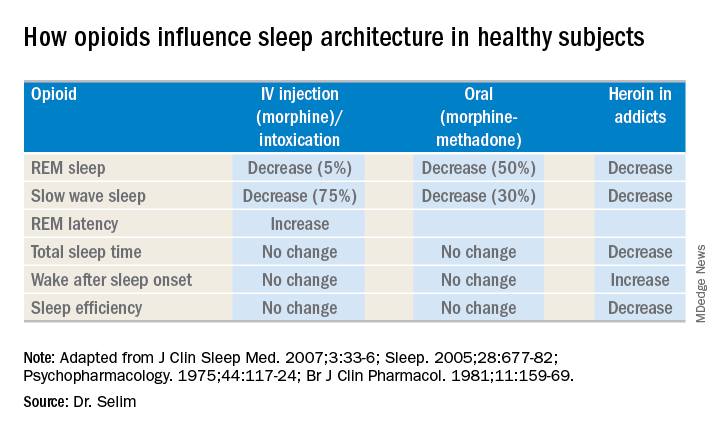

Different opioids and routes of administration can also influence sleep and breathing. For example, REM and slow-wave sleep decreased across all three categories studied: intravenous morphine, oral morphine or methadone, and heroin use.

Sleep specialists should be aware that all opioid receptor agonists, whether legal or illegal, have respiratory side effects, Dr. Selim said. “They can present in any way, in any form – CSA, obstructive sleep apnea [OSA], ataxic breathing or sustained hypoxemia. Most of the time [respiratory side effects] present as a combination of complex respiratory patterns.”

In one meta-analysis, CSA was significantly more prevalent in OSA patients on opioids than it was in nonusers, Dr. Selim said, with increased sleep apnea severity as well (J Clin Sleep Med. 2016 Apr 15;12[4]:617-25). Another study found that ataxic breathing was more frequent in non-REM sleep in chronic opioid users (odds ratio, 15.4; P = .017; J Clin Sleep Med. 2007 Aug 15;3[5]:455-61).

The key rule for treating sleep disorders in opioid-dependent patients is to switch to nonopioid analgesics, Dr. Selim said. Ampakines, a class of experimental drugs, have been shown to counteract opioid-induced respiratory depression without loss of the analgesic effect (Clin Pharmacol Ther. 2010 Feb;87[2]:204-11). “Ampakines modulate the action of the glutamate neurotransmitter, decreasing opiate-induced respiratory depression,” Dr. Selim said.

Adaptive servo-ventilation (ASV), an emerging technology, has been as effective in treating central and complex sleep apnea in chronic opioid users as it is in patients with congestive heart failure, Dr. Selim said (J Clin Sleep Med. 2016 May 15;12[5]:757-61). “ASV can be very effective in these patients; lower body mass index being a predictor for ASV success,” he said.

Dr. Selim reported having no financial relationships.

BALTIMORE – Given the prevalence of opioid use in the general population, sleep specialists need to be alert to the effects of opioid use on sleep and the link between chronic use and sleep disorders, a pulmonologist recommended at the annual meeting of the Associated Professional Sleep Societies.

Chronic opioid use has multiple effects on sleep that render continuous positive airway pressure (CPAP) all but ineffective, said Bernardo J. Selim, MD, of Mayo Clinic, Rochester, Minn. Characteristic signs of the effects of chronic opioid use on sleep include ataxic central sleep apnea (CSA) and sustained hypoxemia, for which CPAP is generally not effective. Obtaining arterial blood gas measures in these patients is also important to rule out a hypoventilative condition, he added.

In his review of opioid-induced sleep disorders, Dr. Selim cited a small “landmark” study of 24 chronic pain patients on opioids that found 46% had sleep disordered breathing and that the risk rose with the morphine equivalent dose they were taking (J Clin Sleep Med. 2014 Aug 15; 10[8]:847-52).

A meta-analysis also found a dose-dependent relationship with the severity of CSA in patients on opioids, Dr. Selim noted (Anesth Analg. 2015 Jun;120[6]:1273-85). The prevalence of CSA was 24% in the study, which defined two risk factors for CSA severity: a morphine equivalent dose exceeding 200 mg/day and a low or normal body mass index.

Dr. Selim noted that opioids reduce respiration rate more than tidal volume and cause changes to respiratory rhythm. “[Opioids] decrease hypercapnia but increase hypoxic ventilatory response, decrease the arousal index, decrease upper-airway muscle tone, and decrease and also act on chest and abdominal wall compliance.”

Further, different opioids and injection methods can influence breathing. For example, REM and slow-wave sleep decreased across all three categories – intravenous morphine, oral morphine or methadone, and heroin use.

Sleep specialists should be aware that all opioid receptor agonists, whether legal or illegal, have respiratory side effects, Dr. Selim said. “They can present in any way, in any form – CSA, obstructive sleep apnea [OSA], ataxic breathing or sustained hypoxemia. Most of the time [respiratory side effects] present as a combination of complex respiratory patterns.”

In one meta-analysis, CSA was significantly more prevalent in OSA patients on opioids than it was in nonusers, Dr. Selim said, with increased sleep apnea severity as well (J Clin Sleep Med. 2016 Apr 15;12[4]:617-25). Another study found that ataxic breathing was more frequent in non-REM sleep in chronic opioid users (odds ratio, 15.4; P = .017; J Clin Sleep Med. 2007 Aug 15;3[5]:455-61).

The key rule for treating sleep disorders in opioid-dependent patients is to change to nonopioid analgesics, Dr. Selim said. In that regard, ampakines are experimental drugs which have been shown to improve opioid-induced ventilation without loss of the analgesic effect (Clin Pharmacol Ther. 2010 Feb;87[2]:204-11). “Ampakines modulate the action of the glutamate neurotransmitter, decreasing opiate-induced respiratory depression,” Dr. Selim said. An emerging technology, adaptive servo-ventilation (ASV), has been as effective in the treatment of central and complex sleep apnea in chronic opioid users as it is in patients with congestive heart failure, Dr. Selim said (J Clin Sleep Med. 2016 May 15;12[5]:757-61). “ASV can be very effective in these patients; lower body mass index being a predictor for ASV success,” he said.

Dr. Selim reported having no financial relationships.

EXPERT ANALYSIS FROM SLEEP 2018

Climbing the therapeutic ladder in eczema-related itch

WASHINGTON – Currently available options for treating eczema-related itch include antihistamines and an oral antiemetic approved for preventing chemotherapy-related nausea and vomiting, Peter Lio, MD, said at a symposium presented by the Coalition United for Better Eczema Care (CUBE-C).

There are four basic areas of treatment, which Dr. Lio, a dermatologist at Northwestern University, Chicago, referred to as the “itch therapeutic ladder.” In a video interview at the meeting, he reviewed the treatments, starting with topical therapies, which include camphor and menthol, strontium-containing topicals, as well as “dilute bleach-type products” that seem to have some anti-inflammatory and anti-itch effects.

The next two rungs are oral medications: antihistamines, followed by “more intense” options that may carry more risks, such as the antidepressant mirtazapine and aprepitant, a neurokinin-1 receptor antagonist approved for the prevention of chemotherapy-induced and postoperative nausea and vomiting. Gabapentin and naltrexone can also be helpful for certain populations; all are used off-label, he pointed out.

Dr. Lio, formally trained in acupuncture, often uses alternative therapies as the fourth rung of the ladder. These include using a specific acupressure point, which he said “seems to give a little bit of relief.”

In the interview, he also discussed considerations in children with atopic dermatitis and exciting treatments in development, such as biologics that target “one of the master itch cytokines,” interleukin-31.

“Itch is such an important part of this disease because we know not only is it one of the key pieces that pushes the disease forward and keeps these cycles going, but also contributes a huge amount to the morbidity,” he said.

CUBE-C, established by the National Eczema Association (NEA), is a “network of cross-specialty leaders, patients and caregivers, constructing an educational curriculum based on standards of effective treatment and disease management,” according to the NEA.

The symposium was supported by an educational grant from Sanofi Genzyme, Regeneron Pharmaceuticals, and Pfizer. Dr. Lio reported serving as a speaker, consultant, and/or advisor for companies developing and marketing atopic dermatitis therapies and products.

Novel cEEG-based scoring system predicts inpatient seizure risk

LOS ANGELES – A novel scoring system based on six readily available seizure risk factors from a patient’s history and continuous electroencephalogram (cEEG) monitoring appears to accurately predict seizures in acutely ill hospitalized patients.

The final model of the system, dubbed the 2HELPS2B score, has an area under the curve (AUC) of 0.821, suggesting a “good overall fit,” Aaron Struck, MD, reported at the annual meeting of the American Academy of Neurology.

However, more relevant than the AUC and suggestive of high classification accuracy is the low calibration error of 2.7%, which shows that the actual incidence of seizures within a particular risk group is, on average, within 2.7% of predicted incidence, Dr. Struck of the University of Wisconsin, Madison, explained in an interview.
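To make the calibration figure concrete: one common way to compute a calibration error is to group patients by predicted risk, compare each group's predicted risk with the seizure rate actually observed in that group, and average the absolute gaps. The Python sketch below does that on made-up numbers. The article does not say exactly how the 2.7% figure was calculated (for example, whether groups were weighted by size), so the unweighted definition here is an assumption.

```python
from collections import defaultdict


def calibration_error(predicted, observed):
    """Mean absolute gap between predicted risk and observed event rate,
    averaged over groups that share the same predicted risk.

    Assumed definition (unweighted average over groups); the study's
    exact formula is not given in the article.
    """
    groups = defaultdict(list)
    for p, y in zip(predicted, observed):
        groups[p].append(y)  # y is 1 if a seizure occurred, else 0
    gaps = []
    for p, outcomes in groups.items():
        observed_rate = sum(outcomes) / len(outcomes)
        gaps.append(abs(p - observed_rate))
    return sum(gaps) / len(gaps)


# Hypothetical data: two risk groups of four patients each.
predicted = [0.25] * 4 + [0.75] * 4
observed = [1, 1, 0, 0] + [1, 1, 1, 1]
print(calibration_error(predicted, observed))  # 0.25
```

Here each group misses its observed rate by 25 percentage points, so the average gap is 0.25; a well-calibrated model, as described above, would keep this figure small.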

The use of cEEG has expanded, largely because of a high incidence of subclinical seizures in hospitalized patients with encephalopathy. EEG features believed to predict seizures include epileptiform discharges and periodic discharges, but the ways in which these variables may jointly affect seizure risk have not been studied, he said.

He and his colleagues used a prospective database to derive a dataset containing 24 clinical and electroencephalographic variables for 5,427 cEEG sessions of at least 24 hours each, and then used a machine-learning method known as RiskSLIM to create a scoring model that estimates seizure risk in patients undergoing cEEG.

The name of the scoring system – 2HELPS2B – represents the six variables included in the final model:

- 2H is for a frequency greater than 2.0 Hz for any periodic rhythmic pattern (1 point).

- E is for sporadic epileptiform discharges (1 point).

- L is for the presence of lateralized periodic discharges, lateralized rhythmic delta activity, or bilateral independent periodic discharges (1 point).

- P is for the presence of “plus” features, including superimposed, rhythmic, sharp, or fast activity (1 point).

- S is for prior seizure (1 point).

- 2B is for brief, potentially ictal, rhythmic discharges (2 points).
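The point values listed above amount to a lookup and a sum. In this Python sketch the feature keys (`freq_over_2hz`, `prior_seizure`, and so on) are hypothetical labels chosen for illustration, not an official schema; the points themselves come from the list. A patient's score is the sum of points for the features present, so it ranges from 0 to 7.

```python
# Points per 2HELPS2B feature, as listed in the article.
# The dictionary keys are hypothetical labels, not an official schema.
POINTS = {
    "freq_over_2hz": 1,              # 2H: frequency > 2.0 Hz in a periodic rhythmic pattern
    "epileptiform_discharges": 1,    # E: sporadic epileptiform discharges
    "lateralized_pattern": 1,        # L: LPDs, LRDA, or BIPDs
    "plus_features": 1,              # P: superimposed rhythmic, sharp, or fast activity
    "prior_seizure": 1,              # S: prior seizure
    "brief_rhythmic_discharges": 2,  # 2B: brief potentially ictal rhythmic discharges
}


def score_2helps2b(findings):
    """Sum the points for every feature present in `findings` (a set of keys)."""
    unknown = set(findings) - set(POINTS)
    if unknown:
        raise ValueError(f"unknown features: {sorted(unknown)}")
    return sum(POINTS[f] for f in findings)


print(score_2helps2b({"prior_seizure", "brief_rhythmic_discharges"}))  # 3
print(sum(POINTS.values()))  # 7, the maximum possible score
```

Rejecting unknown keys keeps a misspelled feature from silently scoring zero.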

The predicted seizure risk rose with score, such that the seizure risk was less than 5% for a score of 0, 12% for 1, 27% for 2, 50% for 3, 73% for 4, 88% for 5, and greater than 95% for 6-7, Dr. Struck said. “Really, anything over 2 points, you’re at substantial risk for having seizures.”
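RiskSLIM fits a sparse logistic regression whose coefficients are small integers, so each total score maps to a probability through a logistic curve. The article does not report the model's intercept. The value of -3 below is an inference, chosen because it reproduces every percentage quoted above (a score of 3, for instance, maps to exactly 50%). Treat this Python snippet as a check on the arithmetic, not as the published model.

```python
import math

# Assumed intercept: inferred from the quoted risks, not stated in the article.
INTERCEPT = -3


def seizure_risk(score):
    """Logistic mapping from an integer score (0-7) to predicted seizure probability."""
    return 1 / (1 + math.exp(-(INTERCEPT + score)))


for s in range(8):
    print(s, f"{seizure_risk(s):.1%}")
# 0 4.7%, 1 11.9%, 2 26.9%, 3 50.0%, 4 73.1%, 5 88.1%, 6 95.3%, 7 98.2%
```

Rounded to whole percentages, these values match the risks Dr. Struck cited for each score.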

Limitations of the study, which are being addressed in an ongoing, multicenter, prospective validation trial through the Critical Care EEG Monitoring Research Consortium, are mainly related to the constraints of the database; the duration of EEG needed to accurately calculate the 2HELPS2B score wasn’t defined, and cEEGs were of varying length.

“So in our validation study moving forward, these are two things we will address,” he said. “We also want to show that this is something that’s useful on a day-to-day basis – that it accurately gauges the degree of variability or potential severity of the ictal-interictal continuum pattern.”