Encephalopathy common, often lethal in hospitalized patients with COVID-19

Toxic metabolic encephalopathy (TME) is common and often lethal in hospitalized patients with COVID-19, new research shows. Results of a retrospective study show that of almost 4,500 patients with COVID-19, 12% were diagnosed with TME. Of these, 78% developed encephalopathy immediately prior to hospital admission. Septic encephalopathy, hypoxic-ischemic encephalopathy (HIE), and uremia were the most common causes, although multiple causes were present in close to 80% of patients. TME was also associated with a 24% higher risk of in-hospital death.

“We found that close to one in eight patients who were hospitalized with COVID-19 had TME that was not attributed to the effects of sedatives, and that this is incredibly common among these patients who are critically ill,” said lead author Jennifer A. Frontera, MD, New York University.

“The general principle of our findings is to be more aggressive in TME; and from a neurologist perspective, the way to do this is to eliminate the effects of sedation, which is a confounder,” she said.

The study was published online March 16 in Neurocritical Care.

Drilling down

“Many neurological complications of COVID-19 are sequelae of severe illness or secondary effects of multisystem organ failure, but our previous work identified TME as the most common neurological complication,” Dr. Frontera said.

Previous research investigating encephalopathy among patients with COVID-19 included patients who may have been sedated or have had a positive Confusion Assessment Method (CAM) result.

“A lot of the delirium literature is effectively heterogeneous because there are a number of patients who are on sedative medication that, if you could turn it off, these patients would return to normal. Some may have underlying neurological issues that can be addressed, but you can’t get to the bottom of this unless you turn off the sedation,” Dr. Frontera noted.

“We wanted to be specific and try to drill down to see what the underlying cause of the encephalopathy was,” she said.

The researchers retrospectively analyzed data on 4,491 patients (≥ 18 years old) with COVID-19 who were admitted to four New York City hospitals between March 1, 2020, and May 20, 2020. Of these, 559 (12%) with TME were compared with 3,932 patients without TME.
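The cohort figures above can be checked with a few lines of arithmetic. This is only an illustrative sketch using the counts reported in the article; the helper name `share` is hypothetical and not from the study.

```python
def share(part: int, total: int) -> float:
    """Return part/total expressed as a percentage."""
    return 100.0 * part / total


# Counts as reported in the article.
with_tme = 559
without_tme = 3932

# The two groups should add up to the full cohort of 4,491 patients.
cohort = with_tme + without_tme

print(f"cohort size: {cohort}")
print(f"share with TME: {share(with_tme, cohort):.1f}%")
```

The two groups sum to the reported 4,491 patients, and the TME share rounds to the 12% cited, which is also where the "close to one in eight" phrasing comes from.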

The researchers looked at index admissions and included patients who had:

- New changes in mental status or significant worsening of mental status (in patients with baseline abnormal mental status).

- Hyperglycemia or hypoglycemia with transient focal neurologic deficits that resolved with glucose correction.

- An adequate washout of sedating medications (when relevant) prior to mental status assessment.

Potential etiologies included electrolyte abnormalities, organ failure, hypertensive encephalopathy, sepsis or active infection, fever, nutritional deficiency, and environmental injury.

Foreign environment

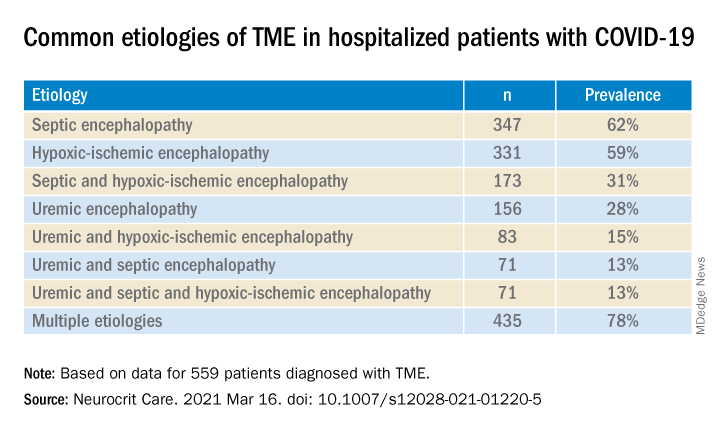

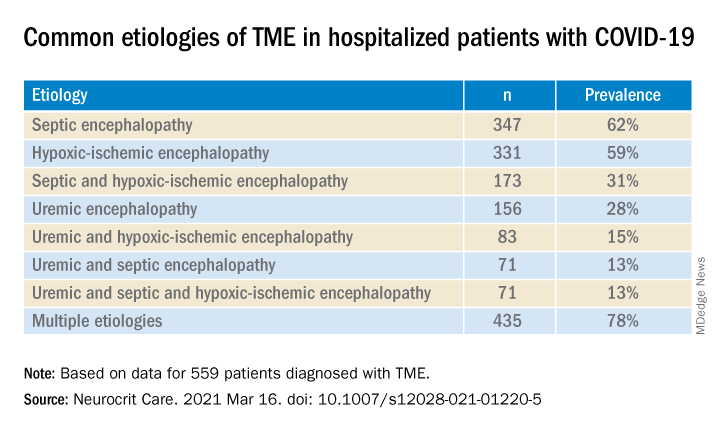

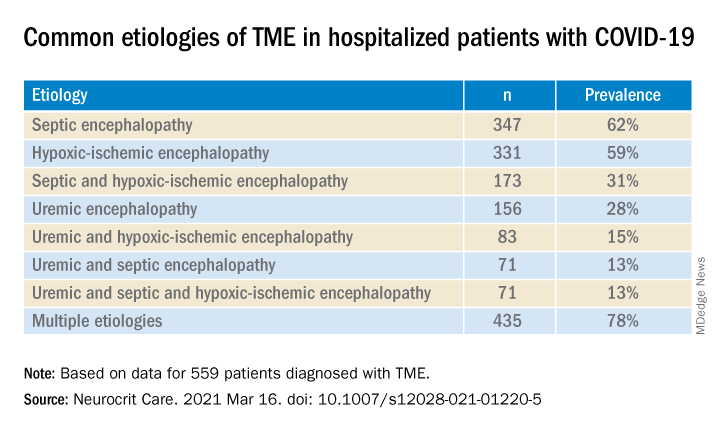

Most (78%) of the 559 patients diagnosed with TME had already developed encephalopathy immediately prior to hospital admission, the authors report. The most common etiologies of TME among hospitalized patients with COVID-19 were septic encephalopathy, HIE, and uremia.

Compared with patients without TME, those with TME (all P < .001):

- Were older (76 vs. 62 years).

- Had higher rates of dementia (27% vs. 3%).

- Had higher rates of psychiatric history (20% vs. 10%).

- Were more often intubated (37% vs. 20%).

- Had a longer length of hospital stay (7.9 vs. 6.0 days).

- Were less often discharged home (25% vs. 66%).

“It’s no surprise that older patients and people with dementia or psychiatric illness are predisposed to becoming encephalopathic,” said Dr. Frontera. “Being in a foreign environment, such as a hospital, or being sleep-deprived in the ICU is likely to make them more confused during their hospital stay.”

Delirium as a symptom

In-hospital mortality or discharge to hospice was considerably higher in the TME versus non-TME patients (44% vs. 18%, respectively).

When the researchers adjusted for confounders (age, sex, race, worst Sequential Organ Failure Assessment score during hospitalization, ventilator status, study week, hospital location, and ICU care level) and excluded patients receiving only comfort care, they found that TME was associated with a 24% increased risk of in-hospital death (30% in patients with TME vs. 16% in those without TME).

The highest mortality risk was associated with hypoxemia, with 42% of patients with HIE dying during hospitalization, compared with 16% of patients without HIE (adjusted hazard ratio 1.56; 95% confidence interval, 1.21-2.00; P = .001).

“Not all patients who are intubated require sedation, but there’s generally a lot of hesitation in reducing or stopping sedation in some patients,” Dr. Frontera observed.

She acknowledged there are “many extremely sick patients whom you can’t ventilate without sedation.”

Nevertheless, “delirium in and of itself does not cause death. It’s a symptom, not a disease, and we have to figure out what causes it. Delirium might not need to be sedated, and it’s more important to see what the causal problem is.”

Independent predictor of death

Commenting on the study, Panayiotis N. Varelas, MD, PhD, vice president of the Neurocritical Care Society, said the study “approached the TME issue better than previously, namely allowing time for sedatives to wear off to have a better sample of patients with this syndrome.”

Dr. Varelas, who is chairman of the department of neurology and professor of neurology at Albany (N.Y.) Medical College, emphasized that TME “is not benign and, in patients with COVID-19, it is an independent predictor of in-hospital mortality.”

“One should take all possible measures … to avoid desaturation and hypotensive episodes and also aggressively treat SAE [sepsis-associated encephalopathy] and uremic encephalopathy in hopes of improving the outcomes,” added Dr. Varelas, who was not involved with the study.

Also commenting on the study, Mitchell Elkind, MD, professor of neurology and epidemiology at Columbia University in New York, who was not associated with the research, said it “nicely distinguishes among the different causes of encephalopathy, including sepsis, hypoxia, and kidney failure … emphasizing just how sick these patients are.”

The study received no direct funding. Individual investigators were supported by grants from the National Institute on Aging and the National Institute of Neurological Disorders and Stroke. The investigators, Dr. Varelas, and Dr. Elkind have disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM NEUROCRITICAL CARE

Neurologic drug prices jump 50% in five years

Medicare payments for neurologic drugs rose by about 50% over 5 years, new research shows. Results of the retrospective study also showed that most of the increased costs for these agents were due to rising costs for neuroimmunology drugs, mainly for those used to treat multiple sclerosis (MS).

“The same brand name medication in 2017 cost approximately 50% more than in 2013,” said Adam de Havenon, MD, assistant professor of neurology, University of Utah, Salt Lake City.

“An analogy would be if you bought an iPhone 5 in 2013 for $500, and then in 2017, you were asked to pay $750 for the exact same iPhone 5,” Dr. de Havenon added.

The study findings were published online March 10 in the journal Neurology.

$26 billion in payments

Both neurologists and patients are concerned about the high cost of prescription drugs for neurologic diseases, and Medicare Part D data indicate that these drugs are the most expensive component of neurologic care, the researchers noted. In addition, out-of-pocket costs have increased significantly for patients with neurologic diseases such as Parkinson’s disease, epilepsy, and MS.

To understand trends in payments for neurologic drugs, Dr. de Havenon and colleagues analyzed Medicare Part D claims filed from 2013 to 2017. The payments include costs paid by Medicare, the patient, government subsidies, and other third-party payers.

In addition to examining more current Medicare Part D data than previous studies, the current analysis examined all medications prescribed by neurologists that consistently remained branded or generic during the 5-year study period, said Dr. de Havenon. This approach resulted in a large number of claims and a large total cost.

To calculate the percentage change in annual payment claims, the researchers used 2013 prices as a reference point. They identified drugs named in 2013 claims and classified them as generic, brand-name only, or brand-name with generic equivalent. Researchers also divided the drugs by neurologic subspecialty.

The analysis included 520 drugs, all of which were available in each year of the study period. Of these drugs, 322 were generic, 61 were brand-name only, and 137 were brand-name with a generic equivalent. There were 90.7 million total claims.

Results showed total payments amounted to $26.65 billion. Yearly total payments increased from $4.05 billion in 2013 to $6.09 billion in 2017, representing a 50.4% increase, even after adjusting for inflation. Total claims increased by 7.6% – from 17.1 million in 2013 to 18.4 million in 2017.
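The percentage changes quoted above follow from a simple relative-change calculation against the 2013 baseline. The sketch below uses only the rounded figures reported in the article; the helper name `pct_change` is hypothetical, and the inputs are taken as already inflation-adjusted, as the article states.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new, relative to old."""
    return 100.0 * (new - old) / old


# Yearly total payments, in billions of dollars (as reported).
payments_2013, payments_2017 = 4.05, 6.09

# Yearly total claims, in millions (as reported).
claims_2013, claims_2017 = 17.1, 18.4

print(f"payments: {pct_change(payments_2013, payments_2017):.1f}%")
print(f"claims:   {pct_change(claims_2013, claims_2017):.1f}%")
```

Because payments grew far faster than claims, most of the increase reflects higher payments per claim rather than more prescriptions.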

From 2013 to 2017, claim payments increased by 0.6% for generic drugs, 42.4% for brand-name only drugs, and 45% for brand-name drugs with generic equivalents. The proportion of claims increased from 81.9% to 88% for generic drugs and from 4.9% to 6.2% for brand-name only drugs.

However, the proportion of claims for brand-name drugs with generic equivalents decreased from 13.3% to 5.8%.

Treatment barrier

Neuroimmunologic drugs, most of which were prescribed for MS, had exceptional cost, the researchers noted. These drugs accounted for more than 50% of payments but only 4.3% of claims. Claim payment for these drugs increased by 46.9% during the study period, from $3,337 to $4,902.
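To put the neuroimmunologic figures in perspective, a drug class's share of payments divided by its share of claims gives its average payment per claim relative to the overall average. The following is a rough sketch built on the article's rounded numbers; the function names are hypothetical, and "more than 50%" is treated as exactly 50%, so the ratio is a lower bound.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new, relative to old."""
    return 100.0 * (new - old) / old


def relative_intensity(payment_share: float, claim_share: float) -> float:
    """Average payment per claim for a class, as a multiple of the overall average."""
    return payment_share / claim_share


# Reported payment per claim for neuroimmunologic drugs, in dollars.
change = pct_change(3337, 4902)

# "More than 50%" of payments from 4.3% of claims (lower bound on the ratio).
ratio = relative_intensity(50.0, 4.3)

# Overall average payment per claim across the study period:
# $26.65 billion in payments over 90.7 million claims.
overall_avg = 26.65e9 / 90.7e6

print(f"per-claim change: {change:.1f}%")
print(f"at least {ratio:.1f}x the overall average of about ${overall_avg:.0f} per claim")
```

On these assumptions, a neuroimmunologic claim cost at least roughly 11 to 12 times the average neurologic drug claim, which is consistent in magnitude with the per-claim payments of several thousand dollars reported above.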

When neuroimmunologic drugs were removed from the analysis, there was still a significant increase in claim payments for brand-name only drugs (50.4%) and brand-name drugs with generic equivalents (45.6%).

Although neuroimmunologic medicines, including monoclonal antibodies, are more expensive to produce, this factor alone does not explain their exceptional cost, said Dr. de Havenon. “The high cost of brand-name drugs in this speciality is likely because the market bears it,” he added. “In other words, MS is a disabling disease and the medications work, so historically the Centers for Medicare & Medicaid Services have been willing to tolerate the high cost of these primarily brand-name medications.”

Several countries have controlled drug costs by negotiating with pharmaceutical companies and through legislation, Dr. de Havenon noted.

“My intent with this article was to raise awareness on the topic, which I struggle with frequently as a clinician. I know I want my patients to have a medication, but the cost prevents it,” he said.

‘Unfettered’ price-setting

Commenting on the findings, Robert J. Fox, MD, vice chair for research at the Neurological Institute of the Cleveland Clinic, said the study “brings into clear light” what neurologists, particularly those who treat MS, have long suspected but did not really know. These neurologists “are typically distanced from the payment aspects of the medications they prescribe,” said Dr. Fox, who was not involved with the research.

Although a particular strength of the study was its comprehensiveness, the researchers excluded infusion claims – which account for a large portion of total patient care costs for many disorders, he noted.

Drugs for MS historically have been expensive, ostensibly because of their high cost of development. However, the large and continued price increases that occur long after these drugs have been approved remain unexplained, said Dr. Fox.

He noted that the study findings might not directly affect clinical practice because neurologists will continue prescribing medications they think are best for their patients. “Instead, I think this is a lesson to lawmakers about the massive error in the Medicare Modernization Act of 2003, where the federal government was prohibited from negotiating drug prices. If the seller is unfettered in setting a price, then no one should be surprised when the price rises,” Dr. Fox said.

Because many new drugs and new generic formulations for treating MS have become available during the past year, “repeating these types of economic studies for the period 2020-2025 will help us understand if generic competition – as well as new laws if they are passed – alter price,” he concluded.

The study was funded by the American Academy of Neurology, which publishes Neurology. Dr. de Havenon has received clinical research funding from AMAG Pharmaceuticals and Regeneron Pharmaceuticals. Dr. Fox receives consulting fees from many pharmaceutical companies involved in the development of therapies for MS.

A version of this article first appeared on Medscape.com.

, new research shows. Results of the retrospective study also showed that most of the increased costs for these agents were due to rising costs for neuroimmunology drugs, mainly for those used to treat multiple sclerosis (MS).

“The same brand name medication in 2017 cost approximately 50% more than in 2013,” said Adam de Havenon, MD, assistant professor of neurology, University of Utah, Salt Lake City.

“An analogy would be if you bought an iPhone 5 in 2013 for $500, and then in 2017, you were asked to pay $750 for the exact same iPhone 5,” Dr. de Havenon added.

The study findings were published online March 10 in the journal Neurology.

$26 billion in payments

Both neurologists and patients are concerned about the high cost of prescription drugs for neurologic diseases, and Medicare Part D data indicate that these drugs are the most expensive component of neurologic care, the researchers noted. In addition, out-of-pocket costs have increased significantly for patients with neurologic disease such as Parkinson’s disease, epilepsy, and MS.

To understand trends in payments for neurologic drugs, Dr. de Havenon and colleagues analyzed Medicare Part D claims filed from 2013 to 2017. The payments include costs paid by Medicare, the patient, government subsidies, and other third-party payers.

In addition to examining more current Medicare Part D data than previous studies, the current analysis examined all medications prescribed by neurologists that consistently remained branded or generic during the 5-year study period, said Dr. de Havenon. This approach resulted in a large number of claims and a large total cost.

To calculate the percentage change in annual payment claims, the researchers used 2013 prices as a reference point. They identified drugs named in 2013 claims and classified them as generic, brand-name only, or brand-name with generic equivalent. Researchers also divided the drugs by neurologic subspecialty.

The analysis included 520 drugs, all of which were available in each year of the study period. Of these drugs, 322 were generic, 61 were brand-name only, and 137 were brand-name with a generic equivalent. There were 90.7 million total claims.

Results showed total payments amounted to $26.65 billion. Yearly total payments increased from $4.05 billion in 2013 to $6.09 billion in 2017, representing a 50.4% increase, even after adjusting for inflation. Total claims increased by 7.6% – from 17.1 million in 2013 to 18.4 million in 2017.

From 2013 to 2017, claim payments increased by 0.6% for generic drugs, 42.4% for brand-name only drugs, and 45% for brand-name drugs with generic equivalents. The proportion of claims increased from 81.9% to 88% for generic drugs and from 4.9% to 6.2% for brand-name only drugs.

However, the proportion of claims for brand-name drugs with generic equivalents decreased from 13.3% to 5.8%.

Treatment barrier

Neuroimmunologic drugs, most of which were prescribed for MS, had exceptional cost, the researchers noted. These drugs accounted for more than 50% of payments but only 4.3% of claims. Claim payment for these drugs increased by 46.9% during the study period, from $3,337 to $4,902.

When neuroimmunologic drugs were removed from the analysis there was still significant increase in claim payments for brand-name only drugs (50.4%) and brand-name drugs with generic equivalents (45.6%).

Although neuroimmunologic medicines, including monoclonal antibodies, are more expensive to produce, this factor alone does not explain their exceptional cost, said Dr. de Havenon. “The high cost of brand-name drugs in this speciality is likely because the market bears it,” he added. “In other words, MS is a disabling disease and the medications work, so historically the Centers for Medicare & Medicaid Services have been willing to tolerate the high cost of these primarily brand-name medications.”

Several countries have controlled drug costs by negotiating with pharmaceutical companies and through legislation, Dr. de Havenon noted.

“My intent with this article was to raise awareness on the topic, which I struggle with frequently as a clinician. I know I want my patients to have a medication, but the cost prevents it,” he said.

‘Unfettered’ price-setting

Commenting on the findings, Robert J. Fox, MD, vice chair for research at the Neurological Institute of the Cleveland Clinic, said the study “brings into clear light” what neurologists, particularly those who treat MS, have long suspected but did not really know. These neurologists “are typically distanced from the payment aspects of the medications they prescribe,” said Dr. Fox, who was not involved with the research.

Although a particular strength of the study was its comprehensiveness, the researchers excluded infusion claims – which account for a large portion of total patient care costs for many disorders, he noted.

Drugs for MS historically have been expensive, ostensibly because of their high cost of development. In addition, the large and continued price increase that occurs long after these drugs have been approved remains unexplained, said Dr. Fox.

He noted that the study findings might not directly affect clinical practice because neurologists will continue prescribing medications they think are best for their patients. “Instead, I think this is a lesson to lawmakers about the massive error in the Medicare Modernization Act of 2003, where the federal government was prohibited from negotiating drug prices. If the seller is unfettered in setting a price, then no one should be surprised when the price rises,” Dr. Fox said.

Because many new drugs and new generic formulations for treating MS have become available during the past year, “repeating these types of economic studies for the period 2020-2025 will help us understand if generic competition – as well as new laws if they are passed – alter price,” he concluded.

The study was funded by the American Academy of Neurology, which publishes Neurology. Dr. de Havenon has received clinical research funding from AMAG Pharmaceuticals and Regeneron Pharmaceuticals. Dr. Fox receives consulting fees from many pharmaceutical companies involved in the development of therapies for MS.

A version of this article first appeared on Medscape.com.

FROM NEUROLOGY

Despite risks and warnings, CNS polypharmacy is prevalent among patients with dementia

CNS-active polypharmacy is common among community-dwelling older adults with dementia, new research suggests.

Investigators found that 14% of these individuals were receiving CNS-active polypharmacy, defined as combinations of multiple psychotropic and opioid medications taken for more than 30 days.

“For most patients, the risks of these medications, particularly in combination, are almost certainly greater than the potential benefits,” said Donovan Maust, MD, associate director of the geriatric psychiatry program, University of Michigan, Ann Arbor.

The study was published online March 9 in JAMA.

Serious risks

Memory impairment is the cardinal feature of dementia, but behavioral and psychological symptoms, which can include apathy, delusions, and agitation, are common during all stages of illness and cause significant caregiver distress, the researchers noted.

They noted that there is a dearth of high-quality evidence to support prescribing these medications in this patient population, yet “clinicians regularly prescribe psychotropic medications to community-dwelling persons with dementia in rates that far exceed use in the general older adult population.”

The Beers Criteria, from the American Geriatrics Society, advise against the practice of CNS polypharmacy because of the significant increase in risk for falls as well as impaired cognition, cardiac conduction abnormalities, respiratory suppression, and death when polypharmacy involves opioids.

They also noted that previous European studies of polypharmacy in patients with dementia did not include antiepileptic medications or opioids, so the true extent of CNS-active polypharmacy may be “significantly” underestimated.

To determine the prevalence of polypharmacy with CNS-active medications among community-dwelling older adults with dementia, the researchers analyzed data on prescription fills for nearly 1.2 million community-dwelling Medicare patients with dementia.

The primary outcome was the prevalence of CNS-active polypharmacy in 2018. The researchers defined CNS-active polypharmacy as exposure to three or more medications for more than 30 consecutive days from the following drug classes: antidepressants, antipsychotics, antiepileptics, benzodiazepines, nonbenzodiazepine benzodiazepine receptor agonist hypnotics (Z-drugs), and opioids.
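
To make the exposure definition concrete, here is a minimal sketch in Python. It is an illustration only and not the investigators' code. The function names, the (medication, first day, last day) input layout, and the sample fills are all hypothetical. It assumes each fill covers an inclusive range of days and reads "more than 30 consecutive days" as a run strictly longer than 30 days.

```python
from datetime import date, timedelta


def longest_polypharmacy_run(fills, min_meds=3):
    """Longest run of consecutive days with at least min_meds distinct medications.

    fills: iterable of (medication_name, first_day, last_day), days inclusive.
    The input layout is a hypothetical one chosen for this sketch.
    """
    # Map each covered calendar day to the set of medications on hand that day.
    meds_by_day = {}
    for med, start, end in fills:
        day = start
        while day <= end:
            meds_by_day.setdefault(day, set()).add(med)
            day += timedelta(days=1)

    longest = current = 0
    previous = None  # last qualifying day seen, if the run is still open
    for day in sorted(meds_by_day):
        if len(meds_by_day[day]) >= min_meds:
            if previous is not None and day - previous == timedelta(days=1):
                current += 1
            else:
                current = 1  # a gap or a non-qualifying day starts a new run
            previous = day
            longest = max(longest, current)
        else:
            previous = None
            current = 0
    return longest


def meets_definition(fills, min_meds=3, min_days=30):
    # Assumption: "more than 30 consecutive days" means strictly greater than 30.
    return longest_polypharmacy_run(fills, min_meds) > min_days


# Hypothetical fills for one patient (drug names taken from the article's examples).
sample_fills = [
    ("sertraline", date(2018, 1, 1), date(2018, 3, 31)),
    ("gabapentin", date(2018, 1, 15), date(2018, 3, 15)),
    ("quetiapine", date(2018, 2, 1), date(2018, 2, 20)),
]
```

In this sample, all three medications overlap only from February 1 through February 20, a 20-day run, so the patient would not meet the definition. Extending the quetiapine fill to March 10 would give a 38-day run and the definition would be met. Because claims record fills rather than doses actually taken, a count like this may overstate true exposure, as the researchers acknowledge in their limitations.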

They found that roughly one in seven (13.9%) patients met criteria for CNS-active polypharmacy. Of those receiving a CNS-active polypharmacy regimen, 57.8% had been doing so for longer than 180 days, and 6.8% had been doing so for a year. Nearly 30% of patients were exposed to five or more medications, and 5.2% were exposed to five or more medication classes.

Conservative approach warranted

Nearly all (92%) patients taking three or more CNS-active medications were taking an antidepressant, “consistent with their place as the psychotropic class most commonly prescribed both to older adults overall and those with dementia,” the investigators noted.

There is minimal high-quality evidence to support the efficacy of antidepressants for the treatment of depression for patients with dementia, they pointed out.

Nearly half (47%) of patients who were taking three or more CNS-active medications received at least one antipsychotic, most often quetiapine. Antipsychotics are not approved for people with dementia but are often prescribed off label for agitation, anxiety, and sleep problems, the researchers noted.

Nearly two thirds (62%) of patients with dementia who were taking three or more CNS drugs were taking an antiepileptic (most commonly, gabapentin); 41%, benzodiazepines; 32%, opioids; and 6%, Z-drugs.

The most common polypharmacy class combination included at least one antidepressant, one antiepileptic, and one antipsychotic. These accounted for 12.9% of polypharmacy days.

Despite limited high-quality evidence of efficacy, the prescribing of psychotropic medications and opioids is “pervasive” for adults with dementia in the United States, the investigators noted.

“Especially given that older adults with dementia might not be able to convey side effects they are experiencing, I think clinicians should be more conservative in how they are prescribing these medications and skeptical about the potential for benefit,” said Dr. Maust.

Regarding study limitations, the researchers noted that prescription medication claims may have led to an overestimation of the exposure to polypharmacy, insofar as the prescriptions may have been filled but not taken or were taken only on an as-needed basis.

In addition, the investigators were unable to determine the appropriateness of the particular combinations used or to examine the specific harms associated with CNS-active polypharmacy.

A major clinical challenge

Weighing in on the results, Howard Fillit, MD, founding executive director and chief science officer of the Alzheimer’s Drug Discovery Foundation, said the study is important because polypharmacy is one of the “geriatric giants, and the question is, what do you do about it?”

Dr. Fillit said it is important to conduct a careful medication review for all older patients, “making sure that the use of each drug is appropriate. The most important thing is to define what is the appropriate utilization of these kinds of drugs. That goes for both overutilization or misuse of these drugs and underutilization, where people are undertreated for symptoms that can’t be managed by behavioral management, for example,” Dr. Fillit said.

Dr. Fillit also said the finding that about 14% of dementia patients were receiving three or more of these drugs “may not be an outrageous number, because these patients, especially as they get into moderate and severe stages of disease, can be incredibly difficult to manage.

“Very often, dementia patients have depression, and up to 90% will have agitation and even psychosis during the course of dementia. And many of these patients need these types of drugs,” said Dr. Fillit.

Echoing the authors, Dr. Fillit said a key limitation of the study is not knowing whether the prescribing was appropriate or not.

The study was supported by a grant from the National Institute on Aging. Dr. Maust and Dr. Fillit have disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

FROM JAMA

Palliative care for patients with dementia: When to refer?

Palliative care for people with dementia is increasingly recognized as a way to improve quality of life and provide relief from the myriad physical and psychological symptoms of advancing neurodegenerative disease. But unlike in cancer, there are no standardized criteria for when patients with dementia should be referred to specialist palliative care.

A new literature review has found that referrals among patients with dementia are all over the map, with many occurring very late in the disease process, and that they do not reflect any consistent criteria based on patient needs.

For their research, published March 2 in the Journal of the American Geriatrics Society, Li Mo, MD, of the University of Texas MD Anderson Cancer Center in Houston, and colleagues looked at nearly 60 studies dating back to the early 1990s that contained information on referrals to palliative care for patients with dementia. While a palliative care approach can be provided by nonspecialists, all the included studies dealt at least in part with specialist care.

Standardized criteria are lacking

The investigators found advanced or late-stage dementia to be the most common reason cited for referral, with three quarters of the studies recommending palliative care for late-stage or advanced dementia, generally without qualifying what symptoms or needs were present. Patients received palliative care across a range of settings, including nursing homes, hospitals, and their own homes, though many articles did not include information on where patients received care.

A fifth of the articles suggested that medical complications of dementia, including falls, pneumonia, and ulcers, should trigger referrals to palliative care, while another fifth cited poor prognosis, defined variously as a life expectancy of between 6 months and 2 years. Poor nutrition status was identified in 10% of studies as meriting referral.

Only 20% of the studies identified patient needs – evidence of psychological distress or functional decline, for example – as criteria for referral, despite these being ubiquitous in dementia. The authors said they were surprised by this finding, which could possibly be explained, they wrote, by “the interest among geriatrician, neurologist, and primary care teams to provide good symptom management,” reflecting a de facto palliative care approach. “There is also significant stigma associated with a specialist palliative care referral,” the authors noted.

Curiously, the researchers noted, more than a quarter of the studies cited a new diagnosis of dementia as a trigger for referral, a finding that possibly reflected delayed diagnoses.

The findings revealed “heterogeneity in the literature in reasons for involving specialist palliative care, which may partly explain the variation in patterns of palliative care referral,” Dr. Mo and colleagues wrote, stressing that more standardized criteria are urgently needed to bring dementia in line with cancer in terms of providing timely palliative care.

Patients with advancing dementia have little chance to self-report symptoms, meaning that more attention to patient complaints earlier in the disease course, and greater sensitivity to patient distress, are required. By routinely screening symptoms, clinicians could use specific cutoffs “as triggers to initiate automatic timely palliative care referral,” the authors concluded, noting that more research was needed before these cutoffs, whether based on symptom intensity or other measures, could be calculated.

Dr. Mo and colleagues acknowledged as weaknesses of their study the fact that a third of the articles in the review were based on expert consensus, while others did not distinguish clearly between primary and specialist palliative care.

A starting point for further discussion

Asked to comment on the findings, Elizabeth Sampson, MD, a palliative care researcher at University College London, praised Dr. Mo and colleagues’ study as “starting to pull together the strands” of a systematic approach to referrals and access to palliative care in dementia.

“Sometimes you need a paper like this to kick off the discussion to say look, this is where we are,” Dr. Sampson said, noting that the focus on need-based criteria dovetailed with a “general feeling in the field that we need to really think about needs, and what palliative care needs might be. What the threshold for referral should be we don’t know yet. Should it be three unmet needs? Or five? We’re still a long way from knowing.”

Dr. Sampson’s group is leading a UK-government funded research effort that aims to develop cost-effective palliative care interventions in dementia, in part through a tool that uses caregiver reports to assess symptom burden and patient needs. The research program “is founded on a needs-based approach, which aims to look at people’s individual needs and responding to them in a proactive way,” she said.

One of the obstacles to timely palliative care in dementia, Dr. Sampson said, is weighing resource allocation against what can be wildly varying prognoses. “Hospices understand when someone has terminal cancer and [is] likely to die within a few weeks, but it’s not unheard of for someone in very advanced stages of dementia to live another year,” she said. “There are concerns that a rapid increase in people with dementia being moved to palliative care could overwhelm already limited hospice capacity. We would argue that the best approach is to get palliative care out to where people with dementia live, which is usually the care home.”

Dr. Mo and colleagues’ study received funding from the National Institutes of Health, and its authors disclosed no financial conflicts of interest. Dr. Sampson’s work is supported by the UK’s Economic and Social Research Council and National Institute for Health Research. She disclosed no conflicts of interest.

Palliative care for people with dementia is increasingly recognized as a way to improve quality of life and provide relief from the myriad physical and psychological symptoms of advancing neurodegenerative disease. But unlike in cancer,

A new literature review has found these referrals to be all over the map among patients with dementia – with many occurring very late in the disease process – and do not reflect any consistent criteria based on patient needs.

For their research, published March 2 in the Journal of the American Geriatrics Society, Li Mo, MD, of the University of Texas MD Anderson Cancer Center in Houston, and colleagues looked at nearly 60 studies dating back to the early 1990s that contained information on referrals to palliative care for patients with dementia. While a palliative care approach can be provided by nonspecialists, all the included studies dealt at least in part with specialist care.

Standardized criteria is lacking

The investigators found advanced or late-stage dementia to be the most common reason cited for referral, with three quarters of the studies recommending palliative care for late-stage or advanced dementia, generally without qualifying what symptoms or needs were present. Patients received palliative care across a range of settings, including nursing homes, hospitals, and their own homes, though many articles did not include information on where patients received care.

A fifth of the articles suggested that medical complications of dementia, including falls, pneumonia, and ulcers, should trigger referrals to palliative care, while another fifth cited poor prognosis, defined variously as having between 6 months and 2 years left to live. Poor nutrition status was identified in 10% of studies as meriting referral.

Only 20% of the studies identified patient needs – evidence of psychological distress or functional decline, for example – as criteria for referral, despite these being ubiquitous in dementia. The authors said they were surprised by this finding, which could possibly be explained, they wrote, by “the interest among geriatrician, neurologist, and primary care teams to provide good symptom management,” reflecting a de facto palliative care approach. “There is also significant stigma associated with a specialist palliative care referral,” the authors noted.

Curiously, the researchers noted, in more than a quarter of the studies a new diagnosis of dementia triggered referral, a finding that possibly reflected delayed diagnoses.

The findings revealed “heterogeneity in the literature in reasons for involving specialist palliative care, which may partly explain the variation in patterns of palliative care referral,” Dr. Mo and colleagues wrote, stressing that more standardized criteria are urgently needed to bring dementia in line with cancer in terms of providing timely palliative care.

Patients with advancing dementia have little chance to self-report symptoms, meaning that more attention to patient complaints earlier in the disease course, and greater sensitivity to patient distress, are required. By routinely screening symptoms, clinicians could use specific cutoffs “as triggers to initiate automatic timely palliative care referral,” the authors concluded, noting that more research was needed before these cutoffs, whether based on symptom intensity or other measures, could be calculated.

Dr. Mo and colleagues acknowledged as weaknesses of their study the fact that a third of the articles in the review were based on expert consensus, while others did not distinguish clearly between primary and specialist palliative care.

A starting point for further discussion

Asked to comment on the findings, Elizabeth Sampson, MD, a palliative care researcher at University College London, praised Dr. Mo and colleagues’ study as “starting to pull together the strands” of a systematic approach to referrals and access to palliative care in dementia.

“Sometimes you need a paper like this to kick off the discussion to say look, this is where we are,” Dr. Sampson said, noting that the focus on need-based criteria dovetailed with a “general feeling in the field that we need to really think about needs, and what palliative care needs might be. What the threshold for referral should be we don’t know yet. Should it be three unmet needs? Or five? We’re still a long way from knowing.”

Dr. Sampson’s group is leading a UK government-funded research effort that aims to develop cost-effective palliative care interventions in dementia, in part through a tool that uses caregiver reports to assess symptom burden and patient needs. The research program “is founded on a needs-based approach, which aims to look at people’s individual needs and responding to them in a proactive way,” she said.

One of the obstacles to timely palliative care in dementia, Dr. Sampson said, is weighing resource allocation against what can be wildly varying prognoses. “Hospices understand when someone has terminal cancer and [is] likely to die within a few weeks, but it’s not unheard of for someone in very advanced stages of dementia to live another year,” she said. “There are concerns that a rapid increase in people with dementia being moved to palliative care could overwhelm already limited hospice capacity. We would argue that the best approach is to get palliative care out to where people with dementia live, which is usually the care home.”

Dr. Mo and colleagues’ study received funding from the National Institutes of Health, and its authors disclosed no financial conflicts of interest. Dr. Sampson’s work is supported by the UK’s Economic and Social Research Council and National Institute for Health Research. She disclosed no conflicts of interest.

FROM THE JOURNAL OF THE AMERICAN GERIATRICS SOCIETY

Risdiplam study shows promise for spinal muscular atrophy

, according to results of part 1 of the FIREFISH study.

A boost in SMN expression has been linked to improvements in survival and motor function, which were also observed in exploratory efficacy outcomes in the 2-part, phase 2-3, open-label study.

“No surviving infant was receiving permanent ventilation at month 12, and 7 of the 21 infants were able to sit without support, which is not expected in patients with type 1 spinal muscular atrophy, according to historical experience,” reported the FIREFISH Working Group led by Giovanni Baranello, MD, PhD, from the Dubowitz Neuromuscular Centre, National Institute for Health Research Great Ormond Street Hospital Biomedical Research Centre, Great Ormond Street Institute of Child Health University College London, and Great Ormond Street Hospital Trust, London.

However, “it cannot be stated with confidence that there was clinical benefit of the agent because the exploratory clinical endpoints were analyzed post hoc and can only be qualitatively compared with historical cohorts,” they added.

The findings were published online Feb. 24 in the New England Journal of Medicine.

A phase 2-3 open-label study

The study enrolled 21 infants with type 1 SMA, between the ages of 1 and 7 months. The majority (n = 17) were treated for 1 year with high-dose risdiplam, reaching 0.2 mg/kg of body weight per day by the twelfth month. Four infants in a low-dose cohort were treated with 0.08 mg/kg by the twelfth month. The medication was administered once daily orally in infants who were able to swallow, or by feeding tube for those who could not.

The primary outcomes of this first part of the study were safety, pharmacokinetics, pharmacodynamics (including the blood SMN protein concentration), and selection of the risdiplam dose for part 2 of the study. Exploratory outcomes included event-free survival, defined as being alive without tracheostomy or the use of permanent ventilation for 16 or more hours per day, and the ability to sit without support for at least 5 seconds.

In terms of safety, the study recorded 24 serious adverse events. “The most common serious adverse events were infections of the respiratory tract, and four infants died of respiratory complications; these findings are consistent with the neuromuscular respiratory failure that characterizes spinal muscular atrophy,” the authors reported. “The risdiplam-associated retinal toxic effects that had been previously observed in monkeys were not observed in the current study,” they added.

Regarding SMN protein levels, a median level of 2.1 times the baseline level was observed within 4 weeks after the initiation of treatment in the high-dose cohort, they reported. At 12 months, the median values were 3.0 times and 1.9 times the baseline values in the low-dose and high-dose cohorts, respectively.

Looking at exploratory efficacy outcomes, 90% of infants survived without ventilatory support, and seven infants in the high-dose cohort were able to sit without support for at least 5 seconds. The higher dose of risdiplam (0.2 mg/kg per day) was selected for part 2 of the study.

The first oral treatment option