Managing food allergy in children: An evidence-based update

Food allergy is a complex condition that has become a growing concern for parents and an increasing public health problem in the United States. Food allergy affects social interactions, school attendance, and quality of life, especially when associated with comorbid atopic conditions such as asthma, atopic dermatitis, and allergic rhinitis.1,2 It is the leading cause of anaphylaxis in children, accounting for as many as 81% of cases.3 The societal costs of food allergy are substantial and are spread broadly across the health care system and families.2 (See “What is the cost of food allergy?”)

SIDEBAR

What is the cost of food allergy?

Direct costs of food allergy to the health care system include medications, laboratory tests, office visits to primary care physicians and specialists, emergency department visits, and hospitalizations. Indirect costs include family medical and nonmedical expenses, lost work productivity, and job opportunity costs. Overall, the cost of food allergy in the United States is $24.8 billion annually—averaging $4184 for each affected child. Parents bear much of this expense.2

What a food allergy is—and isn’t

The National Institute of Allergy and Infectious Diseases (NIAID) defines food allergy as “an adverse health effect arising from a specific immune response that occurs reproducibly on exposure to a given food.”4 An adverse reaction to food or a food component that lacks an identified immunologic pathophysiology is not considered food allergy but is classified as food intolerance.4

Food allergy is caused by either immunoglobulin E (IgE)-mediated or non-IgE-mediated immunologic dysfunction. IgE antibodies can trigger an intense inflammatory response to certain allergens. Non-IgE-mediated food allergies are less common and not well understood.

This article focuses only on the diagnosis and management of IgE-mediated food allergy.

The culprits

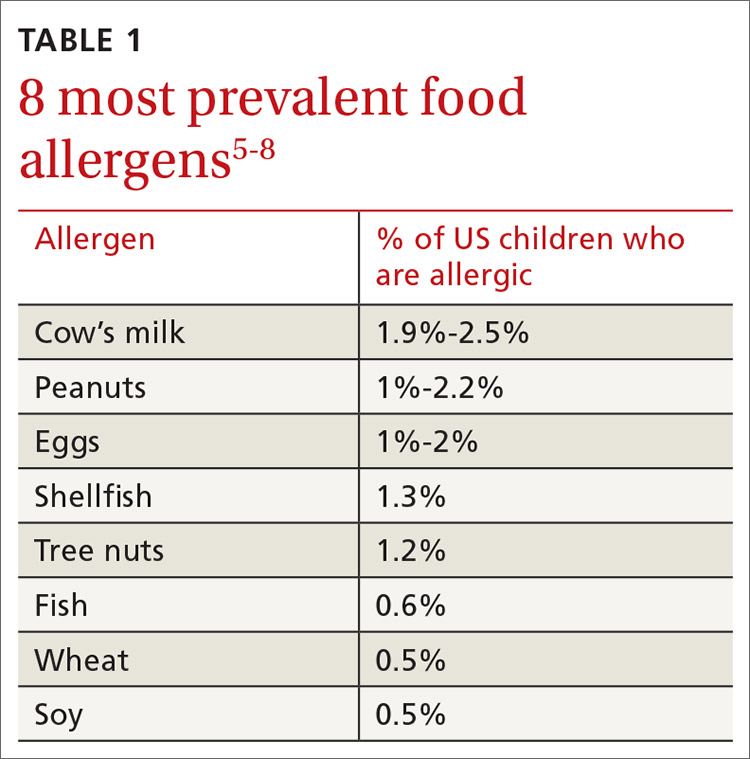

More than 170 foods have been reported to cause an IgE-mediated reaction. The 8 foods that most commonly cause allergic reactions in the United States (Table 1)5-8 account for > 50% of allergies to food.9 Studies vary in their methodology for estimating the prevalence of allergy to individual foods, but cow’s milk and peanuts appear to be the most common, each affecting as many as 2% to 2.5% of children.7,8 In general, allergies to cow’s milk and eggs are more prevalent in very young and preschool children, whereas allergies to peanuts, tree nuts, fish, and shellfish are more prevalent in older children.10 Packaged foods regulated by the US Food and Drug Administration must be labeled to declare whether they contain any of these 8 allergens as an ingredient.

How common is food allergy?

The Centers for Disease Control and Prevention (CDC) estimates that 4% to 6% of children in the United States have a food allergy.11,12 Almost 40% of food-allergic children have a history of severe food-induced reactions.13 Other developed countries cite similar estimates of overall prevalence.14,15

However, many estimates of the prevalence of food allergy are derived from self-reports, without objective data.9 Accurate evaluation of the prevalence of food allergy is challenging because of many factors, including differences in study methodology and the definition of allergy, geographic variation, racial and ethnic variations, and dietary exposure. Parents and children often confuse nonallergic food reactions, such as food intolerance, with food allergy. Precise determination of the prevalence and natural history of food allergy at the population level requires confirmatory oral food challenges of a representative sample of infants and young children with presumed food allergy.16

The CDC concludes that the prevalence of food allergy in children younger than 18 years increased by 18% from 1997 through 2007.17,18 The cause of this increase is unclear but likely multifactorial; hypotheses include an increase in associated atopic conditions, delayed introduction of allergenic foods, and living in an overly sterile environment with reduced exposure to microbes.19 A recent population-based study of food allergy among children in Olmsted County, Minnesota, found that the incidence of food allergy increased between 2002 and 2007, stabilized subsequently, and appears to be declining among children 1 to 4 years of age, following a peak in 2006-2007.19

What are the risk factors?

Proposed risk factors for food allergy include demographics, genetics, a history of atopic disease, and environmental factors. Food allergy might be more common in boys than in girls, and in African Americans and Asians than in Whites.12,16 A child is 7 times more likely to be allergic to peanuts if a parent or sibling has peanut allergy.20 Infants and children with eczema or asthma are more likely to develop food allergy; the severity of eczema correlates with risk.12,20 Improvements in hygiene in Western societies have decreased the spread of infection, but this has been accompanied by a rise in atopic disease. In countries where health standards are poor and exposure to pathogens is greater, the prevalence of allergy is low.21

Consistent with this observation, increased microbial exposure might help protect against atopy via a pathway in which T-helper cells prevent pro-allergic immune development and keep harmless environmental exposures from becoming allergens.22 Attendance at daycare and exposure to farm animals early in life reduce the likelihood of atopic disease.16,21 Having a dog in the home lessens the probability of egg allergy in infants.23 Food allergy is less common in younger siblings than in first-born children, possibly because of younger siblings’ greater exposure to infection and alterations in the gut microbiome.23,24

Diagnosis: Established by presentation, positive testing

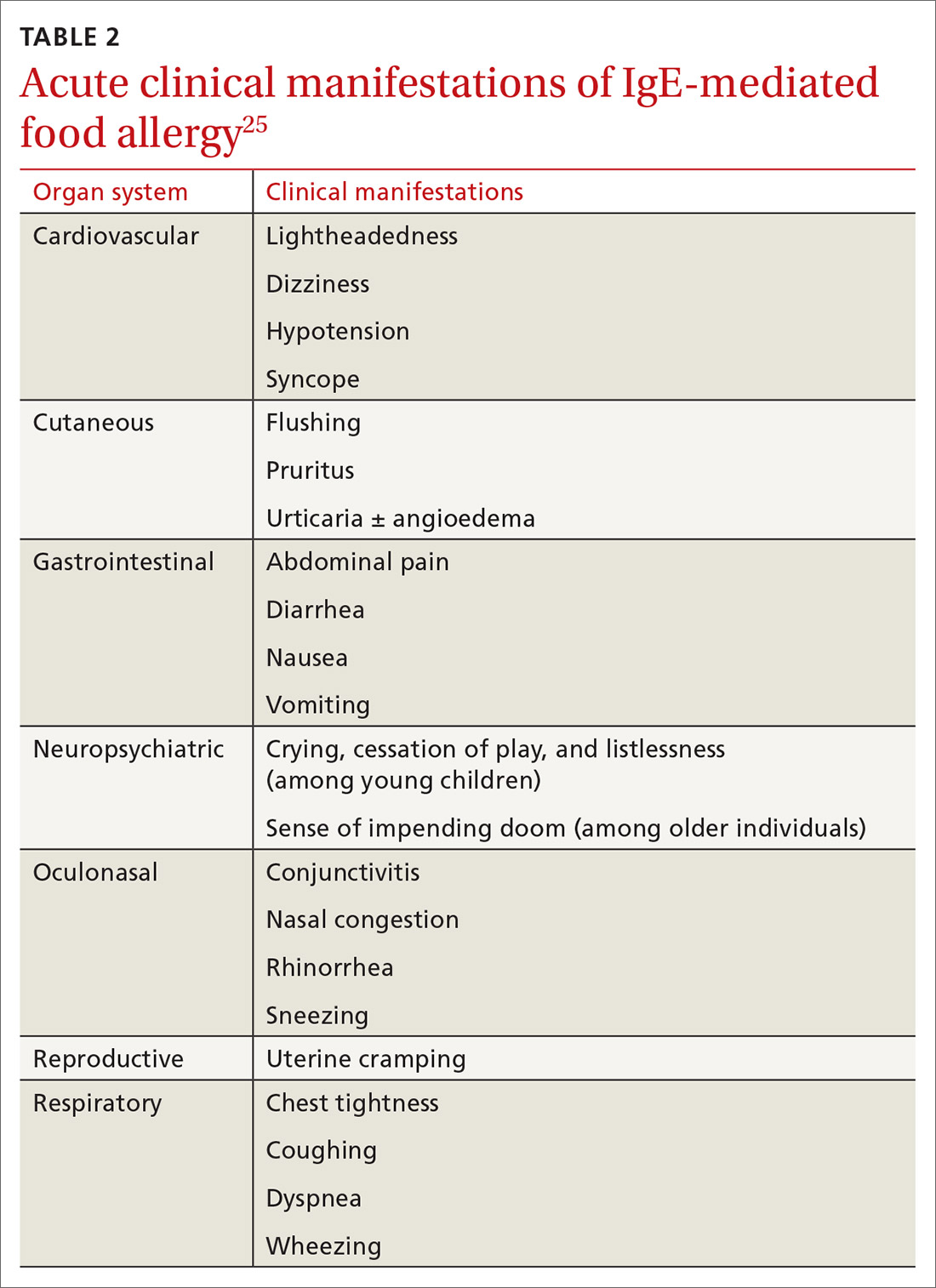

Onset of symptoms after exposure to a suspected food allergen almost always occurs within 2 hours and, typically, resolves within several hours. Symptoms should occur consistently after ingestion of the food allergen. Subsequent exposures can trigger more severe symptoms, depending on the amount, route, and duration of exposure to the allergen.25 Reactions typically follow ingestion or cutaneous exposures; inhalation rarely triggers a response.26 IgE-mediated release of histamine and other mediators from mast cells and basophils triggers reactions that typically involve one or more organ systems (Table 2).25

Cutaneous symptoms are the most common manifestations of food allergy, occurring in 70% to 80% of childhood reactions. Gastrointestinal symptoms occur in 40% to 50% of allergic reactions to food, and oral or respiratory symptoms in 25%. Cardiovascular symptoms develop in fewer than 10% of allergic reactions.26

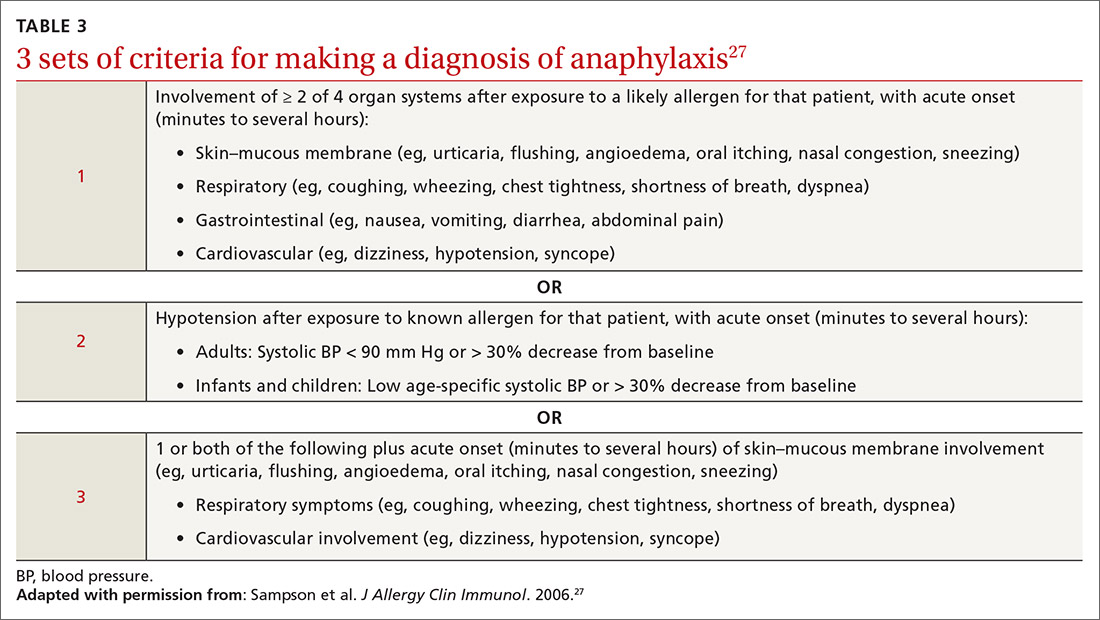

Anaphylaxis is a serious allergic reaction that develops rapidly and can cause death; diagnosis is based on specific criteria (Table 3).27 Data on rates of anaphylaxis due to food allergy are limited. The incidence of fatal food-allergic reactions is estimated at 1 in every 800,000 children annually.3

Clinical suspicion. Food allergy should be suspected in infants and children who present with anaphylaxis or other symptoms (Table 2)25 that occur within minutes to hours of ingesting food.4 Parental reports and self-reports alone are insufficient to diagnose food allergy. NIAID guidelines recommend that reported food allergies be confirmed, because multiple studies demonstrate that 50% to 90% of presumed food allergies are not true allergies.4 Health care providers must obtain a detailed medical history and pertinent family history, and perform a physical exam and allergy sensitization testing. Methods to help diagnose food allergies include skin-prick tests, allergen-specific serum IgE tests, and oral food challenges.4

General principles and utility of testing

Before ordering tests, it’s important to distinguish between food sensitization and food allergy and to inform the families of children with suspected food allergy about the limitations of skin-prick tests and serum IgE tests. A child with IgE antibodies specific to a food or with a positive skin-prick test, but without symptoms upon ingestion of the food, is merely sensitized; food allergy indicates the appearance of symptoms following exposure to a specific food, in addition to the detection of specific IgE antibodies or a positive skin-prick test to that same food.28
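The distinction drawn above is essentially a two-input rule applied to one food at a time. The Python sketch below encodes it; the function and category names are hypothetical, and the fourth category (symptoms without a positive test) is an assumption added only so that every combination of inputs returns a result. It illustrates the definition and is not a diagnostic tool.

```python
from enum import Enum


class Status(Enum):
    NOT_SENSITIZED = "no sensitization detected"
    SENSITIZED = "sensitized only"
    FOOD_ALLERGY = "IgE-mediated food allergy"
    # Assumption: outside the article's IgE-mediated definition; such cases
    # could reflect food intolerance or non-IgE-mediated allergy.
    NOT_CONFIRMED = "symptoms without detectable sensitization"


def classify(positive_test: bool, symptoms_on_ingestion: bool) -> Status:
    """Classify one food using the sensitization-vs-allergy distinction.

    positive_test: food-specific serum IgE detected or positive skin-prick test.
    symptoms_on_ingestion: symptoms after exposure to that same food.
    """
    if positive_test and symptoms_on_ingestion:
        return Status.FOOD_ALLERGY      # test result plus clinical reaction
    if positive_test:
        return Status.SENSITIZED        # IgE present, but food is tolerated
    if symptoms_on_ingestion:
        return Status.NOT_CONFIRMED
    return Status.NOT_SENSITIZED


# A child with a positive test who eats the food without symptoms:
print(classify(positive_test=True, symptoms_on_ingestion=False).value)
```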

Skin-prick testing. Skin-prick tests can be performed at any age. The procedure involves pricking or scratching the surface of the skin, usually the volar aspect of the forearm or the back, with a commercial extract. Testing should be performed by a physician or other provider who is properly trained in the technique and in interpreting results. The extract contains specific allergenic proteins that activate mast cells, resulting in a characteristic wheal-and-flare response that is typically measured 15 to 20 minutes after application. Some medications, such as H1- and H2-receptor blockers and tricyclic antidepressants, can interfere with results and need to be held for 3 to 5 days before testing.

A positive skin-prick test result is defined as a wheal ≥ 3 mm larger in diameter than the negative control. The larger the size of the wheal, the higher the likelihood of a reaction to the tested food.29 Patients who exhibit dermatographism might experience a wheal-and-flare response from the action of the skin-prick test, rather than from food-specific IgE antibodies. A negative skin-prick test has > 90% negative predictive value, so the test can rule out suspected food allergy.30 However, the skin-prick test alone cannot be used to diagnose food allergy because it has a high false-positive rate.
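The positivity criterion and the negative predictive value (NPV) can be written out directly. In this minimal sketch the 3-mm threshold comes from the text; the function names and the true-negative and false-negative counts in the example are hypothetical and chosen only to show the arithmetic.

```python
def skin_prick_positive(wheal_mm: float, negative_control_mm: float,
                        threshold_mm: float = 3.0) -> bool:
    """Positive if the wheal is at least threshold_mm larger than the negative control."""
    return wheal_mm - negative_control_mm >= threshold_mm


def negative_predictive_value(true_negatives: int, false_negatives: int) -> float:
    """NPV = TN / (TN + FN): share of negative tests in children who are truly not allergic."""
    total_negatives = true_negatives + false_negatives
    if total_negatives == 0:
        raise ValueError("no negative test results to evaluate")
    return true_negatives / total_negatives


# Hypothetical values for illustration only.
print(skin_prick_positive(wheal_mm=5.0, negative_control_mm=1.0))  # True
print(skin_prick_positive(wheal_mm=3.0, negative_control_mm=1.0))  # False
print(negative_predictive_value(true_negatives=95, false_negatives=5))  # 0.95
```

The sketch treats the wheal as a single diameter in millimeters; how that diameter is measured is a practice detail the text does not specify.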

Allergen-specific serum IgE testing. Measurement of food-specific serum IgE levels is routinely available and requires only a blood specimen. The test can be used in patients with skin disease, and results are not affected by concurrent medications. The presence of food-specific IgE indicates that the patient is sensitized to that allergen and might react upon exposure; children with a higher level of antibody are more likely to react.29

Food-specific serum IgE tests are sensitive but nonspecific for food allergy.31 Broad food-allergy test panels often yield false-positive results that can lead to unnecessary dietary elimination, resulting in years of inconvenience, nutrition problems, and needless health care expense.32

To avoid broad food allergy test panels, it is appropriate to order specific serum IgE tests only for foods ingested within the 2- to 3-hour window before the onset of symptoms. As with skin-prick testing, a positive allergen-specific serum IgE test alone cannot diagnose food allergy.
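Selecting only recently eaten foods amounts to a simple time-window filter. The sketch below assumes a hypothetical food log of (food, time eaten) pairs and uses a 3-hour window by default, the upper end of the 2- to 3-hour range in the text; the dates and foods are made up.

```python
from datetime import datetime, timedelta


def foods_to_test(food_log, symptom_onset, window_hours=3.0):
    """Return foods eaten within `window_hours` before symptom onset.

    food_log: iterable of (food_name, time_eaten) pairs (hypothetical format).
    Each food is listed once, in the order it first appears in the window.
    """
    window_start = symptom_onset - timedelta(hours=window_hours)
    selected = []
    for food, eaten_at in food_log:
        if window_start <= eaten_at <= symptom_onset and food not in selected:
            selected.append(food)
    return selected


onset = datetime(2020, 8, 19, 12, 30)
log = [
    ("scrambled egg", datetime(2020, 8, 19, 7, 45)),
    ("peanut butter cracker", datetime(2020, 8, 19, 10, 15)),
    ("cow's milk", datetime(2020, 8, 19, 11, 50)),
]
print(foods_to_test(log, onset))
print(foods_to_test(log, onset, window_hours=2.0))
```

In this example the egg, eaten almost 5 hours before symptoms began, is excluded, while the peanut butter cracker and the milk fall inside the 3-hour window; narrowing the window to 2 hours leaves only the milk.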

Oral food challenge. The double-blind, placebo-controlled oral food challenge is the gold standard for the diagnosis of food allergy. Because this test is time-consuming and technically difficult, single-blind or open food challenges are more common. Oral food challenges should be performed only by a physician or other provider who can identify and treat anaphylaxis.

The oral challenge starts with a very low dose of suspected food allergen, which is gradually increased every 15 to 30 minutes as vital signs are monitored carefully. Patients are observed for an allergic reaction for 1 hour after the final dose.
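The timing in this paragraph determines how long a challenge visit lasts. The sketch below computes dose times and the end of observation from a number of doses, which is a hypothetical input (the text does not say how many steps a challenge uses). Dose amounts are deliberately left out, because they are set by the supervising clinician, not by this calculation.

```python
def challenge_timeline(num_doses, interval_min=15, observation_min=60):
    """Return (dose_times, end_of_observation), in minutes after the first dose.

    interval_min: minutes between dose increases (15 to 30 in the text).
    observation_min: observation period after the final dose (1 hour in the text).
    """
    if num_doses < 1:
        raise ValueError("a challenge needs at least one dose")
    if not 15 <= interval_min <= 30:
        raise ValueError("interval outside the 15- to 30-minute range in the text")
    dose_times = [i * interval_min for i in range(num_doses)]
    return dose_times, dose_times[-1] + observation_min


# Hypothetical 6-step challenge with 15-minute intervals.
times, end = challenge_timeline(6)
print(times, end)
```

With 6 doses at 15-minute intervals, the last dose is given at 75 minutes and observation ends at 135 minutes; at 30-minute intervals the same challenge ends at 210 minutes.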

A retrospective study showed that, whereas 19% of patients reacted during an open food challenge, only 2% required epinephrine.33 Another study showed that 89% of children whose serum IgE testing was positive for specific foods were able to reintroduce those foods into the diet after a reassuring oral food challenge.34

Other diagnostic tests. The basophil activation assay, measurement of total serum IgE, atopy patch tests, and intradermal tests have been used, but are not recommended, for making the diagnosis of food allergy.4

How can food allergy be managed?

Medical options are few. No curative treatment exists for food allergy. However, it’s important to appropriately manage acute reactions and reduce the risk of subsequent reactions.1 Parents or other caregivers can give an H1 antihistamine, such as diphenhydramine, to infants and children with acute, non-life-threatening symptoms. More severe symptoms require rapid administration of epinephrine.1 Auto-injectable epinephrine should be prescribed so that parents and caregivers can use it as needed for emergency treatment of anaphylaxis.

Team approach. A multidisciplinary approach to managing food allergy—involving physicians, school nurses, dietitians, and teachers, and using educational materials—is ideal. This strategy expands knowledge about food allergies, enhances correct administration of epinephrine, and reduces allergic reactions.1

Avoidance of food allergens can be challenging. Parents and caregivers should be taught to interpret the list of ingredients on food packages. Self-recognition of allergic reactions reduces the likelihood of a subsequent severe allergic reaction.35

Importance of individualized care. Health care providers should develop personalized management plans for their patients.1 (A good place to start is with the “Food Allergy & Anaphylaxis Emergency Care Plan”a developed by Food Allergy Research & Education [FARE]). Keep in mind that children with multiple food allergies consume less calcium and protein, and tend to be shorter4; therefore, it’s wise to closely monitor growth in these children and consider referral to a dietitian who is familiar with food allergy.

Potential of immunotherapy. Current research focuses on immunotherapy to induce tolerance to food allergens and protect against life-threatening allergic reactions. The goal of immunotherapy is to lessen adverse reactions to allergenic food proteins; the strategy is to have patients repeatedly ingest small but gradually increasing doses of the food allergen over many months.36 Although immunotherapy has successfully allowed some patients to consume larger quantities of a food without having an allergic reaction, it is unknown whether immunotherapy provides permanent resolution of food allergy. In addition, immunotherapy often causes serious systemic and local reactions.1,36,37

Is prevention possible?

Maternal diet during pregnancy and lactation does not affect development of food allergy in infants.38,39 Breastfeeding might prevent development of atopic disease, but evidence is insufficient to determine whether breastfeeding reduces the likelihood of food allergy.39 In nonbreastfed infants at high risk of food allergy, extensively or partially hydrolyzed formula might help protect against food allergy, compared to standard cow’s milk formula.9,39 Feeding with soy formula rather than cow’s milk formula does not help prevent food allergy.39,40 Pregnant and breastfeeding women should not restrict their diet as a means of preventing food allergy.39

Diet in infancy. Over the years, physicians have debated the proper timing of the introduction of solid foods into the diet of infants. Traditional teaching advocated delaying introduction of potentially allergenic foods to reduce the risk of food allergy; however, this guideline was based on inconsistent evidence,41 and the strategy did not reduce the incidence of food allergy. The prevalence of food allergy is lower in developing countries where caregivers introduce foods to infants at an earlier age.20

A recent large clinical trial indicates that early introduction of peanut-containing foods can help prevent peanut allergy. The study randomized 4- to 11-month-old infants with severe eczema, egg allergy, or both to eat or avoid peanut products until 5 years of age. Infants assigned to eat peanuts were 81% less likely to develop peanut allergy than infants in the avoidance group; the absolute risk reduction was 14% (number needed to treat = 7).42 Another study showed a nonsignificant 20% lower relative risk of food allergy in breastfed infants who were fed potentially allergenic foods starting at 3 months of age, compared with infants who were exclusively breastfed.43
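The trial figures quoted above are related by the standard definitions of absolute risk reduction (ARR), relative risk reduction (RRR), and number needed to treat (NNT). Writing p_avoid and p_eat for the risk of peanut allergy in the avoidance and consumption groups, the two reported percentages together imply approximate group risks; because both inputs are rounded, the derived values are approximate.

```latex
\begin{aligned}
\mathrm{NNT} &= \frac{1}{\mathrm{ARR}} = \frac{1}{0.14} \approx 7.1 \\[4pt]
\mathrm{RRR} &= 1 - \frac{p_{\mathrm{eat}}}{p_{\mathrm{avoid}}} = 0.81
  \quad\Longrightarrow\quad p_{\mathrm{eat}} = 0.19\, p_{\mathrm{avoid}} \\[4pt]
\mathrm{ARR} &= p_{\mathrm{avoid}} - p_{\mathrm{eat}} = 0.81\, p_{\mathrm{avoid}} = 0.14
  \quad\Longrightarrow\quad p_{\mathrm{avoid}} \approx 0.17,\; p_{\mathrm{eat}} \approx 0.03
\end{aligned}
```

In other words, roughly 17 of every 100 high-risk infants who avoided peanut developed peanut allergy, versus roughly 3 of every 100 who ate it, so about 7 infants need to eat peanut products for 1 to be spared peanut allergy.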

Based on these data,42,43 NIAID instituted recommendations in 2017 aimed at preventing peanut allergy44:

- In healthy infants without known food allergy and those with mild or moderate eczema, caregivers can introduce peanut-containing foods at home along with other solid foods. Parents who are anxious about a possible allergic reaction can introduce peanut products in a physician’s office.

- Infants at high risk of peanut allergy (those with severe eczema, egg allergy, or both) should undergo peanut-specific IgE or skin-prick testing:

  - Negative test: Indicates a low risk of reaction to peanuts; the infant should start consuming peanut-containing foods at 4 to 6 months of age, at home or in a physician’s office, depending on the parents’ preference.

  - Positive test: Referral to an allergist is recommended.
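The decision path in these recommendations can be expressed as a small function. This is a hypothetical sketch: the function name, arguments, and returned wording are paraphrases of the bullets above, not official NIAID text, and it covers only the two risk groups the bullets describe.

```python
from typing import Optional


def peanut_introduction_advice(severe_eczema: bool, egg_allergy: bool,
                               test_positive: Optional[bool] = None) -> str:
    """Paraphrase the 2017 peanut-introduction bullets for one infant.

    test_positive: result of peanut-specific IgE or skin-prick testing,
    or None if the infant has not been tested yet.
    """
    high_risk = severe_eczema or egg_allergy
    if not high_risk:
        # Healthy infants, or infants with mild or moderate eczema.
        return ("introduce peanut-containing foods at home with other solid foods "
                "(or in a physician's office if parents prefer)")
    if test_positive is None:
        return "obtain peanut-specific IgE or skin-prick testing first"
    if test_positive:
        return "refer to an allergist"
    return ("start peanut-containing foods at 4 to 6 months of age, "
            "at home or in a physician's office")


print(peanut_introduction_advice(severe_eczema=True, egg_allergy=False))
print(peanut_introduction_advice(severe_eczema=True, egg_allergy=False,
                                 test_positive=False))
```

Keeping the untested high-risk case as a separate branch makes explicit that testing comes before any introduction advice for these infants.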

Do children outgrow food allergy?

Approximately 85% of children who have an allergy to milk, egg, soy, or wheat outgrow their allergy; however, only 15% to 20% who have an allergy to peanuts, tree nuts, fish, or shellfish eventually tolerate these foods. The time to resolution of food allergy varies with the food, and might not occur until adolescence.4 No test reliably predicts which children develop tolerance to any given food. A decrease in the food-specific serum IgE level or a decrease in the size of the wheal on skin-prick testing might portend the onset of tolerance to the food.4

CORRESPONDENCE

Catherine M. Bettcher, MD, FAAFP, Briarwood Family Medicine, 1801 Briarwood Circle, Building #10, Ann Arbor, MI 48108; cbettche@umich.edu.

1. Muraro A, Werfel T, Hoffmann-Sommergruber K, et al. EAACI food allergy and anaphylaxis guidelines: diagnosis and management of food allergy. Allergy. 2014;69:1008-1025.

2. Gupta R, Holdford D, Bilaver L, et al. The economic impact of childhood food allergy in the United States. JAMA Pediatr. 2013;167:1026-1031.

3. Cianferoni A, Muraro A. Food-induced anaphylaxis. Immunol Allergy Clin North Am. 2012;32:165-195.

4. Boyce JA, Assa’ad A, Burks WA, et al. Guidelines for the diagnosis and management of food allergy in the United States: report of the NIAID-sponsored expert panel. J Allergy Clin Immunol. 2010;126(6 suppl):S1-S58.

5. Vierk KA, Koehler KM, Fein SB, et al. Prevalence of self-reported food allergy in American adults and use of food labels. J Allergy Clin Immunol. 2007;119:1504-1510.

6. Allen KJ, Koplin JJ. The epidemiology of IgE-mediated food allergy and anaphylaxis. Immunol Allergy Clin North Am. 2012;32:35-50.

7. Iweala OI, Choudhary SK, Commins SP. Food allergy. Curr Gastroenterol Rep. 2018;20:17.

8. Gupta RS, Warren CM, Smith BM, et al. The public health impact of parent-reported childhood food allergies in the United States. Pediatrics. 2018;142:e20181235.

9. Chafen JJS, Newberry SJ, Riedl MA, et al. Diagnosing and managing common food allergies: a systematic review. JAMA. 2010;303:1848-1856.

10. Nwaru BI, Hickstein L, Panesar SS, et al. Prevalence of common food allergies in Europe: a systematic review and meta-analysis. Allergy. 2014;69:992-1007.

11. Branum AM, Lukacs SL. Food allergy among U.S. children: trends in prevalence and hospitalizations. NCHS Data Brief No. 10. National Center for Health Statistics. October 2008. www.cdc.gov/nchs/products/databriefs/db10.htm. Accessed August 19, 2020.

12. Liu AH, Jaramillo R, Sicherer SH, et al. National prevalence and risk factors for food allergy and relationship to asthma: results from the National Health and Nutrition Examination Survey 2005-2006. J Allergy Clin Immunol. 2010;126:798-806.e13.

13. Gupta RS, Springston EE, Warrier MR, et al. The prevalence, severity, and distribution of childhood food allergy in the United States. Pediatrics. 2011;128:e9-e17.

14. Soller L, Ben-Shoshan M, Harrington DW, et al. Overall prevalence of self-reported food allergy in Canada. J Allergy Clin Immunol. 2012;130:986-988.

15. Venter C, Pereira B, Voigt K, et al. Prevalence and cumulative incidence of food hypersensitivity in the first 3 years of life. Allergy. 2008;63:354-359.

16. Savage J, Johns CB. Food allergy: epidemiology and natural history. Immunol Allergy Clin North Am. 2015;35:45-59.

17. Branum AM, Lukacs SL. Food allergy among children in the United States. Pediatrics. 2009;124:1549-1555.

18. Jackson KD, Howie LD, Akinbami LJ. Trends in allergic conditions among children: United States, 1997-2011. NCHS Data Brief No. 121. National Center for Health Statistics. May 2013. www.cdc.gov/nchs/products/databriefs/db121.htm. Accessed August 19, 2020.

19. Willits EK, Park MA, Hartz MF, et al. Food allergy: a comprehensive population-based cohort study. Mayo Clin Proc. 2018;93:1423-1430.

20. Lack G. Epidemiologic risks for food allergy. J Allergy Clin Immunol. 2008;121:1331-1336.

21. Okada H, Kuhn C, Feillet H, et al. The ‘hygiene hypothesis’ for autoimmune and allergic diseases: an update. Clin Exp Immunol. 2010;160:1-9.

22. Liu AH. Hygiene theory and allergy and asthma prevention. Paediatr Perinat Epidemiol. 2007;21 Suppl 3:2-7.

23. Prince BT, Mandel MJ, Nadeau K, et al. Gut microbiome and the development of food allergy and allergic disease. Pediatr Clin North Am. 2015;62:1479-1492.

24. Kusunoki T, Mukaida K, Morimoto T, et al. Birth order effect on childhood food allergy. Pediatr Allergy Immunol. 2012;23:250-254.

25. Abrams EM, Sicherer SH. Diagnosis and management of food allergy. CMAJ. 2016;188:1087-1093.

26. Perry TT, Matsui EC, Conover-Walker MK, et al. Risk of oral food challenges. J Allergy Clin Immunol. 2004;114:1164-1168.

27. Sampson HA, A, Campbell RL, et al. Second symposium on the definition and management of anaphylaxis: summary report—Second National Institute of Allergy and Infectious Disease/Food Allergy and Anaphylaxis Network symposium. J Allergy Clin Immunol. 2006;117:391-397.

28. Sampson HA. Food allergy. Part 2: diagnosis and management. J Allergy Clin Immunol. 1999;103:981-989.

29. Lieberman JA, Sicherer SH. Diagnosis of food allergy: epicutaneous skin tests, in vitro tests, and oral food challenge. Curr Allergy Asthma Rep. 2011;11:58-64.

30. Sicherer SH, Sampson HA. Food allergy. J Allergy Clin Immunol. 2010;125(2 suppl 2):S116-S125.

31. Soares-Weiser K, Takwoingi Y, Panesar SS, et al. The diagnosis of food allergy: a systematic review and meta-analysis. Allergy. 2014;69:76-86.

32. Bird JA, Crain M, Varshney P. Food allergen panel testing often results in misdiagnosis of food allergy. J Pediatr. 2015;166:97-100.

33. Lieberman JA, Cox AL, Vitale M, et al. Outcomes of office-based, open food challenges in the management of food allergy. J Allergy Clin Immunol. 2011;128:1120-1122.

34. Fleischer DM, Bock SA, Spears GC, et al. Oral food challenges in children with a diagnosis of food allergy. J Pediatr. 2011;158:578-583.e1.

35. Ewan PW, Clark AT. Long-term prospective observational study of patients with peanut and nut allergy after participation in a management plan. Lancet. 2001;357:111-115.

36. Nurmatov U, Dhami S, Arasi S, et al. Allergen immunotherapy for IgE-mediated food allergy: a systematic review and meta-analysis. Allergy. 2017;72:1133-1147.

37. Sampson HA, Aceves S, Bock SA, et al. Food allergy: a practice parameter update—2014. J Allergy Clin Immunol. 2014;134:1016-1025.e43.

38. Kramer MS, Kakuma R. Maternal dietary antigen avoidance during pregnancy or lactation, or both, for preventing or treating atopic disease in the child. Cochrane Database Syst Rev. 2012;2012(9):CD000133.

39. de Silva D, Geromi M, Halken S, et al. Primary prevention of food allergy in children and adults: systematic review. Allergy. 2014;69:581-589.

40. Osborn DA, Sinn J. Soy formula for prevention of allergy and food intolerance in infants. Cochrane Database Syst Rev. 2004;(3):CD003741.

41. Filipiak B, Zutavern A, Koletzko S, et al; GINI-Group. Solid food introduction in relation to eczema: results from a four-year prospective birth cohort study. J Pediatr. 2007;151:352-358.

42. Du Toit G, Roberts G, Sayre PH, et al; LEAP Study Team. Randomized trial of peanut consumption in infants at risk for peanut allergy. N Engl J Med. 2015;372:803-813.

43. Perkin MR, Logan K, Tseng A, et al; EAT Study Team. Randomized trial of introduction of allergenic foods in breast-fed infants. N Engl J Med. 2016;374:1733-1743.

44. Togias A, Cooper SF, Acebal ML, et al. Addendum guidelines for the prevention of peanut allergy in the United States: report of the National Institute of Allergy and Infectious Diseases-sponsored expert panel. J Allergy Clin Immunol. 2017;139:29-44.

Food allergy is a complex condition that has become a growing concern for parents and an increasing public health problem in the United States. Food allergy affects social interactions, school attendance, and quality of life, especially when associated with comorbid atopic conditions such as asthma, atopic dermatitis, and allergic rhinitis.1,2 It is the major cause of anaphylaxis in children, accounting for as many as 81% of cases.3 Societal costs of food allergy are great and are spread broadly across the health care system and the family. (See “What is the cost of food allergy?”2.)

SIDEBAR

What is the cost of food allergy?

Direct costs of food allergy to the health care system include medications, laboratory tests, office visits to primary care physicians and specialists, emergency department visits, and hospitalizations. Indirect costs include family medical and nonmedical expenses, lost work productivity, and job opportunity costs. Overall, the cost of food allergy in the United States is $24.8 billion annually—averaging $4184 for each affected child. Parents bear much of this expense.2

What a food allergy is—and isn’t

The National Institute of Allergy and Infectious Diseases (NIAID) defines food allergy as “an adverse health effect arising from a specific immune response that occurs reproducibly on exposure to a given food.”4 An adverse reaction to food or a food component that lacks an identified immunologic pathophysiology is not considered food allergy but is classified as food intolerance.4

Food allergy is caused by either immunoglobulin E (IgE)-mediated or non-IgE-mediated immunologic dysfunction. IgE antibodies can trigger an intense inflammatory response to certain allergens. Non-IgE-mediated food allergies are less common and not well understood.

This article focuses only on the diagnosis and management of IgE-mediated food allergy.

The culprits

More than 170 foods have been reported to cause an IgE-mediated reaction. Table 15-8 lists the 8 foods that most commonly cause allergic reactions in the United States and that account for > 50% of allergies to food.9 Studies vary in their methodology for estimating the prevalence of allergy to individual foods, but cow’s milk and peanuts appear to be the most common, each affecting as many as 2% to 2.5% of children.7,8 In general, allergies to cow’s milk and to eggs are more prevalent in very young and preschool children, whereas allergies to peanuts, tree nuts, fish, and shellfish are more prevalent in older children.10 Labels on all packaged foods regulated by the US Food and Drug Administration must declare if the product contains even a trace of these 8 allergens.

How common is food allergy?

The Centers for Disease Control and Prevention (CDC) estimates that 4% to 6% of children in the United States have a food allergy.11,12 Almost 40% of food-allergic children have a history of severe food-induced reactions.13 Other developed countries cite similar estimates of overall prevalence.14,15

However, many estimates of the prevalence of food allergy are derived from self-reports, without objective data.9 Accurate evaluation of the prevalence of food allergy is challenging because of many factors, including differences in study methodology and the definition of allergy, geographic variation, racial and ethnic variations, and dietary exposure. Parents and children often confuse nonallergic food reactions, such as food intolerance, with food allergy. Precise determination of the prevalence and natural history of food allergy at the population level requires confirmatory oral food challenges of a representative sample of infants and young children with presumed food allergy.16

Continue to: The CDC concludes that the prevalence...

The CDC concludes that the prevalence of food allergy in children younger than 18 years increased by 18% from 1997 through 2007.17,18 The cause of this increase is unclear but likely multifactorial; hypotheses include an increase in associated atopic conditions, delayed introduction of allergenic foods, and living in an overly sterile environment with reduced exposure to microbes.19 A recent population-based study of food allergy among children in Olmsted County, Minnesota, found that the incidence of food allergy increased between 2002 and 2007, stabilized subsequently, and appears to be declining among children 1 to 4 years of age, following a peak in 2006-2007.19

What are the risk factors?

Proposed risk factors for food allergy include demographics, genetics, a history of atopic disease, and environmental factors. Food allergy might be more common in boys than in girls, and in African Americans and Asians than in Whites.12,16 A child is 7 times more likely to be allergic to peanuts if a parent or sibling has peanut allergy.20 Infants and children with eczema or asthma are more likely to develop food allergy; the severity of eczema correlates with risk.12,20 Improvements in hygiene in Western societies have decreased the spread of infection, but this has been accompanied by a rise in atopic disease. In countries where health standards are poor and exposure to pathogens is greater, the prevalence of allergy is low.21

Conversely, increased microbial exposure might help protect against atopy via a pathway in which T-helper cells prevent pro-allergic immune development and keep harmless environmental exposures from becoming allergens.22 Attendance at daycare and exposure to farm animals early in life reduces the likelihood of atopic disease.16,21 The presence of a dog in the home lessens the probability of egg allergy in infants.23 Food allergy is less common in younger siblings than in first-born children, possibly due to younger siblings’ increased exposure to infection and alterations in the gut microbiome.23,24

Diagnosis: Established by presentation, positive testing

Onset of symptoms after exposure to a suspected food allergen almost always occurs within 2 hours and, typically, resolves within several hours. Symptoms should occur consistently after ingestion of the food allergen. Subsequent exposures can trigger more severe symptoms, depending on the amount, route, and duration of exposure to the allergen.25 Reactions typically follow ingestion or cutaneous exposures; inhalation rarely triggers a response.26 IgE-mediated release of histamine and other mediators from mast cells and basophils triggers reactions that typically involve one or more organ systems (Table 2).25

Cutaneous symptoms are the most common manifestations of food allergy, occurring in 70% to 80% of childhood reactions. Gastrointestinal and oral or respiratory symptoms occur in, respectively, 40% to 50% and 25% of allergic reactions to food. Cardiovascular symptoms develop in fewer than 10% of allergic reactions.26

Continue to: Anaphylaxis

Anaphylaxis is a serious allergic reaction that develops rapidly and can cause death; diagnosis is based on specific criteria (Table 3).27 Data for rates of anaphylaxis due to food allergy are limited. The incidence of fatal reaction due to food allergy is estimated to be 1 in every 800,000 children annually.3

Clinical suspicion. Food allergy should be suspected in infants and children who present with anaphylaxis or other symptoms (Table 2)25 that occur within minutes to hours of ingesting food.4 Parental and self-reports alone are insufficient to diagnose food allergy: NIAID guidelines recommend that reported food allergies be confirmed, because multiple studies demonstrate that 50% to 90% of presumed food allergies are not true allergies.4 Health care providers must obtain a detailed medical history and pertinent family history, perform a physical exam, and order allergy sensitivity testing. Methods to help diagnose food allergy include skin-prick tests, allergen-specific serum IgE tests, and oral food challenges.4

General principles and utility of testing

Before ordering tests, it’s important to distinguish between food sensitization and food allergy, and to inform the families of children with suspected food allergy about the limitations of skin-prick and serum IgE tests. A child who has IgE antibodies specific to a food, or a positive skin-prick test to it, but no symptoms upon ingesting it is merely sensitized. Food allergy requires both: symptoms following exposure to a specific food, and detection of specific IgE antibodies or a positive skin-prick test to that same food.28
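The distinction above reduces to a simple rule: sensitization means a positive test, and allergy means a positive test plus reproducible symptoms to the same food. The minimal Python sketch below encodes that rule for illustration only; the `FoodFindings` record and the `classify` function are hypothetical names, not part of any clinical software, and the sketch assumes each finding has already been judged positive or negative.

```python
from dataclasses import dataclass


@dataclass
class FoodFindings:
    """Findings for one child and one food (hypothetical record type)."""
    food: str
    specific_ige_positive: bool   # food-specific serum IgE detected
    skin_prick_positive: bool     # positive skin-prick test to the same food
    symptoms_on_exposure: bool    # symptoms reproducibly follow exposure


def classify(findings: FoodFindings) -> str:
    """Apply the sensitization-versus-allergy distinction from the text."""
    sensitized = findings.specific_ige_positive or findings.skin_prick_positive
    if sensitized and findings.symptoms_on_exposure:
        return "food allergy"
    if sensitized:
        return "sensitized only"
    return "no IgE sensitization"


print(classify(FoodFindings("peanut", False, True, False)))  # sensitized only
```

A child with a positive peanut skin-prick test who eats peanut without symptoms is therefore classified as sensitized only. Symptoms without any evidence of sensitization fall outside the IgE-mediated definition this article uses.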

Skin-prick testing. Skin-prick tests can be performed at any age. The procedure involves pricking or scratching the surface of the skin, usually the volar aspect of the forearm or the back, with a commercial extract. Testing should be performed by a physician or other provider who is properly trained in the technique and in interpreting results. The extract contains specific allergenic proteins that activate mast cells, resulting in a characteristic wheal-and-flare response that is typically measured 15 to 20 minutes after application. Some medications, such as H1- and H2-receptor blockers and tricyclic antidepressants, can interfere with results and need to be held for 3 to 5 days before testing.

A positive skin-prick test result is defined as a wheal ≥ 3 mm larger in diameter than the negative control. The larger the wheal, the higher the likelihood of a reaction to the tested food.29 In patients with dermatographism, the mechanical trauma of the skin prick itself, rather than food-specific IgE antibodies, might produce a wheal-and-flare response. A negative skin-prick test has a negative predictive value > 90%, so a negative result can rule out suspected food allergy.30 However, the skin-prick test alone cannot be used to diagnose food allergy because it has a high false-positive rate.
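The positivity threshold is simply a difference between two diameters. A minimal Python sketch follows; the function name is hypothetical, and the default `threshold_mm=3.0` restates the definition above. A positive result indicates sensitization, not allergy.

```python
def skin_prick_positive(wheal_mm: float, negative_control_mm: float,
                        threshold_mm: float = 3.0) -> bool:
    """Return True if the food wheal is at least `threshold_mm` larger in
    diameter than the negative-control wheal (both measured in millimeters)."""
    if wheal_mm < 0 or negative_control_mm < 0:
        raise ValueError("wheal diameters cannot be negative")
    return wheal_mm - negative_control_mm >= threshold_mm


# A 6-mm food wheal against a 1-mm negative control exceeds the 3-mm margin.
print(skin_prick_positive(6.0, 1.0))   # True
# A 4-mm wheal against a 2-mm control does not.
print(skin_prick_positive(4.0, 2.0))   # False
```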


Allergen-specific serum IgE testing. Measurement of food-specific serum IgE levels is routinely available and requires only a blood specimen. The test can be used in patients with skin disease, and results are not affected by concurrent medications. The presence of food-specific IgE indicates that the patient is sensitized to that allergen and might react upon exposure; children with a higher level of antibody are more likely to react.29

Food-specific serum IgE tests are sensitive but nonspecific for food allergy.31 Broad food-allergy test panels often yield false-positive results that can lead to unnecessary dietary elimination, resulting in years of inconvenience, nutrition problems, and needless health care expense.32

To avoid broad food-allergy test panels, it is appropriate to order specific serum IgE tests only for foods ingested in the 2 to 3 hours before symptom onset. Like skin-prick testing, positive allergen-specific serum IgE tests alone cannot diagnose food allergy.
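Choosing which foods to test is a filtering step over the child's recent intake. The sketch below assumes a hypothetical food log of `(food, time eaten)` pairs and uses the upper end of the 2- to 3-hour window as its default; the data shape, the function name, and the example meals are all illustrative.

```python
from datetime import datetime, timedelta


def foods_to_test(food_log, symptom_onset, window_hours=3.0):
    """Return distinct foods eaten within `window_hours` before symptom onset.

    food_log: iterable of (food_name, time_eaten) pairs with datetime values.
    Order of first appearance in the log is preserved.
    """
    window_start = symptom_onset - timedelta(hours=window_hours)
    candidates = []
    for food, eaten_at in food_log:
        if window_start <= eaten_at <= symptom_onset and food not in candidates:
            candidates.append(food)
    return candidates


onset = datetime(2020, 5, 1, 13, 0)
log = [
    ("oatmeal", datetime(2020, 5, 1, 8, 0)),         # 5 hours before: excluded
    ("egg", datetime(2020, 5, 1, 11, 30)),
    ("peanut butter", datetime(2020, 5, 1, 12, 15)),
    ("egg", datetime(2020, 5, 1, 12, 40)),           # repeat: listed once
]
print(foods_to_test(log, onset))  # ['egg', 'peanut butter']
```

Keeping the window as a parameter makes it easy to apply the stricter 2-hour end of the range instead.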

Oral food challenge. The double-blind, placebo-controlled oral food challenge is the gold standard for the diagnosis of food allergy. Because this test is time-consuming and technically difficult, single-blind or open food challenges are more common. Oral food challenges should be performed only by a physician or other provider who can identify and treat anaphylaxis.

The oral challenge starts with a very low dose of suspected food allergen, which is gradually increased every 15 to 30 minutes as vital signs are monitored carefully. Patients are observed for an allergic reaction for 1 hour after the final dose.
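Because doses are escalated at fixed intervals and observation continues for 1 hour after the last dose, the planned length of a challenge follows directly from the number of doses. The sketch below computes that timeline; the number of doses is a hypothetical input (the article does not specify one), the function names are illustrative, and dose amounts are not modeled.

```python
from typing import List


def dose_times(n_doses: int, interval_min: int) -> List[int]:
    """Minutes after the first dose at which each escalating dose is given."""
    if n_doses < 1:
        raise ValueError("at least one dose is required")
    if not 15 <= interval_min <= 30:
        raise ValueError("interval outside the 15- to 30-minute range in the text")
    return [i * interval_min for i in range(n_doses)]


def planned_duration_min(n_doses: int, interval_min: int,
                         observation_min: int = 60) -> int:
    """Minutes from the first dose to the end of post-challenge observation,
    assuming the challenge is completed without a reaction."""
    return dose_times(n_doses, interval_min)[-1] + observation_min


# Hypothetical challenge: 6 doses given every 20 minutes.
print(dose_times(6, 20))            # [0, 20, 40, 60, 80, 100]
print(planned_duration_min(6, 20))  # 160
```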


A retrospective study showed that, whereas 19% of patients reacted during an open food challenge, only 2% required epinephrine.33 Another study showed that 89% of children whose serum IgE testing was positive for specific foods were able to reintroduce those foods into the diet after a reassuring oral food challenge.34

Other diagnostic tests. The basophil activation assay, measurement of total serum IgE, atopy patch tests, and intradermal tests have been used, but are not recommended, for making the diagnosis of food allergy.4

How can food allergy be managed?

Medical options are few. No approved treatment exists for food allergy. However, it’s important to appropriately manage acute reactions and reduce the risk of subsequent reactions.1 Parents or other caregivers can give an H1 antihistamine, such as diphenhydramine, to infants and children with acute non-life-threatening symptoms. More severe symptoms require rapid administration of epinephrine.1 Auto-injectable epinephrine should be prescribed for parents and caregivers to use as needed for emergency treatment of anaphylaxis.

Team approach. A multidisciplinary approach to managing food allergy—involving physicians, school nurses, dietitians, and teachers, and using educational materials—is ideal. This strategy expands knowledge about food allergies, enhances correct administration of epinephrine, and reduces allergic reactions.1

Avoidance of food allergens can be challenging. Parents and caregivers should be taught to interpret the list of ingredients on food packages. Self-recognition of allergic reactions reduces the likelihood of a subsequent severe allergic reaction.35


Importance of individualized care. Health care providers should develop personalized management plans for their patients.1 (A good place to start is with the “Food Allergy & Anaphylaxis Emergency Care Plan”a developed by Food Allergy Research & Education [FARE]). Keep in mind that children with multiple food allergies consume less calcium and protein, and tend to be shorter4; therefore, it’s wise to closely monitor growth in these children and consider referral to a dietitian who is familiar with food allergy.

Potential of immunotherapy. Current research focuses on immunotherapy to induce tolerance to food allergens and protect against life-threatening allergic reactions. The goal of immunotherapy is to lessen adverse reactions to allergenic food proteins; the strategy is to have patients repeatedly ingest small but gradually increasing doses of the food allergen over many months.36 Although immunotherapy has successfully allowed some patients to consume larger quantities of a food without having an allergic reaction, it is unknown whether immunotherapy provides permanent resolution of food allergy. In addition, immunotherapy often causes serious systemic and local reactions.1,36,37

Is prevention possible?

Maternal diet during pregnancy and lactation does not affect development of food allergy in infants.38,39 Breastfeeding might prevent development of atopic disease, but evidence is insufficient to determine whether breastfeeding reduces the likelihood of food allergy.39 In nonbreastfed infants at high risk of food allergy, extensively or partially hydrolyzed formula might help protect against food allergy, compared to standard cow’s milk formula.9,39 Feeding with soy formula rather than cow’s milk formula does not help prevent food allergy.39,40 Pregnant and breastfeeding women should not restrict their diet as a means of preventing food allergy.39

Diet in infancy. Over the years, physicians have debated the proper timing of the introduction of solid foods into the diet of infants. Traditional teaching advocated delaying introduction of potentially allergenic foods to reduce the risk of food allergy; however, this guideline was based on inconsistent evidence,41 and the strategy did not reduce the incidence of food allergy. The prevalence of food allergy is lower in developing countries where caregivers introduce foods to infants at an earlier age.20

A recent large clinical trial indicates that early introduction of peanut-containing foods can help prevent peanut allergy. The study randomized infants 4 to 11 months of age with severe eczema, egg allergy, or both to eat or avoid peanut products until 5 years of age. Infants assigned to eat peanuts were 81% less likely to develop peanut allergy than infants in the avoidance group. The absolute risk reduction was 14% (number needed to treat = 7).42 Another study showed a nonsignificant 20% lower relative risk of food allergy in breastfed infants who were fed potentially allergenic foods starting at 3 months of age, compared with infants who were exclusively breastfed.43
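The trial's summary figures are linked by standard definitions: absolute risk reduction (ARR) is the difference in risk between the avoidance and consumption groups, relative risk reduction (RRR) is ARR divided by the avoidance-group risk, and the number needed to treat (NNT) is the reciprocal of ARR. Working the reported 14% and 81% through these definitions (the group risks $p_{\text{avoid}}$ and $p_{\text{eat}}$ are back-calculated here, not quoted from the trial):

$$
\begin{aligned}
\text{NNT} &= \frac{1}{\text{ARR}} = \frac{1}{0.14} \approx 7.1 \\
p_{\text{avoid}} &= \frac{\text{ARR}}{\text{RRR}} = \frac{0.14}{0.81} \approx 0.17 \\
p_{\text{eat}} &= p_{\text{avoid}} - \text{ARR} \approx 0.17 - 0.14 = 0.03
\end{aligned}
$$

In other words, about 7 high-risk infants need to eat peanut products for 1 to avoid peanut allergy, and the two reported percentages together imply a peanut-allergy risk of roughly 17% with avoidance versus roughly 3% with early consumption.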


Based on these data,42,43 NIAID instituted recommendations in 2017 aimed at preventing peanut allergy44:

- In healthy infants without known food allergy and those with mild or moderate eczema, caregivers can introduce peanut-containing foods at home with other solid foods. Parents who are anxious about a possible allergic reaction can introduce peanut products in a physician’s office.

- Infants at high risk of peanut allergy (those with severe eczema, egg allergy, or both) should undergo peanut-specific IgE or skin-prick testing:

  - Negative test: low risk of a reaction to peanuts; the infant should start consuming peanut-containing foods at 4 to 6 months of age, at home or in a physician’s office, depending on the parents’ preference.

  - Positive test: referral to an allergist is recommended.
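The recommendations summarized above form a small decision tree. The sketch below restates them in Python only to make the branching explicit; the `EczemaSeverity` enum, the function name, and the returned strings are hypothetical. Infants with a known food allergy other than egg are not covered by this summary, so the sketch flags them rather than guessing.

```python
from enum import Enum
from typing import Optional


class EczemaSeverity(Enum):
    NONE = "none"
    MILD = "mild"
    MODERATE = "moderate"
    SEVERE = "severe"


def peanut_introduction_plan(eczema: EczemaSeverity,
                             egg_allergy: bool,
                             other_food_allergy: bool = False,
                             peanut_test_positive: Optional[bool] = None) -> str:
    """Map an infant's risk profile to the 2017 NIAID summary in the text.

    peanut_test_positive stays None until peanut-specific IgE or
    skin-prick testing has been done.
    """
    # High risk: severe eczema, egg allergy, or both.
    high_risk = eczema is EczemaSeverity.SEVERE or egg_allergy
    if high_risk:
        if peanut_test_positive is None:
            return "test first: peanut-specific IgE or skin-prick test"
        if peanut_test_positive:
            return "refer to an allergist"
        return "start peanut foods at 4 to 6 months (home or office)"
    if other_food_allergy:
        return "outside this summary"
    # No known food allergy, with no, mild, or moderate eczema.
    return "introduce peanut foods at home with other solids (office if parents prefer)"


print(peanut_introduction_plan(EczemaSeverity.SEVERE, False))
# test first: peanut-specific IgE or skin-prick test
```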

Do children outgrow food allergy?

Approximately 85% of children who have an allergy to milk, egg, soy, or wheat outgrow it; however, only 15% to 20% of those who have an allergy to peanuts, tree nuts, fish, or shellfish eventually tolerate these foods. The time to resolution varies with the food and might not occur until adolescence.4 No test reliably predicts which children will develop tolerance to a given food, although a decrease in the food-specific serum IgE level or in the size of the wheal on skin-prick testing might portend the onset of tolerance.4

CORRESPONDENCE

Catherine M. Bettcher, MD, FAAFP, Briarwood Family Medicine, 1801 Briarwood Circle, Building #10, Ann Arbor, MI 48108; cbettche@umich.edu.

Food allergy is a complex condition that has become a growing concern for parents and an increasing public health problem in the United States. Food allergy affects social interactions, school attendance, and quality of life, especially when associated with comorbid atopic conditions such as asthma, atopic dermatitis, and allergic rhinitis.1,2 It is the major cause of anaphylaxis in children, accounting for as many as 81% of cases.3 Societal costs of food allergy are great and are spread broadly across the health care system and the family. (See “What is the cost of food allergy?”2.)

SIDEBAR

What is the cost of food allergy?

Direct costs of food allergy to the health care system include medications, laboratory tests, office visits to primary care physicians and specialists, emergency department visits, and hospitalizations. Indirect costs include family medical and nonmedical expenses, lost work productivity, and job opportunity costs. Overall, the cost of food allergy in the United States is $24.8 billion annually—averaging $4184 for each affected child. Parents bear much of this expense.2

What a food allergy is—and isn’t

The National Institute of Allergy and Infectious Diseases (NIAID) defines food allergy as “an adverse health effect arising from a specific immune response that occurs reproducibly on exposure to a given food.”4 An adverse reaction to food or a food component that lacks an identified immunologic pathophysiology is not considered food allergy but is classified as food intolerance.4

Food allergy is caused by either immunoglobulin E (IgE)-mediated or non-IgE-mediated immunologic dysfunction. IgE antibodies can trigger an intense inflammatory response to certain allergens. Non-IgE-mediated food allergies are less common and not well understood.

This article focuses only on the diagnosis and management of IgE-mediated food allergy.

The culprits

More than 170 foods have been reported to cause an IgE-mediated reaction. Table 15-8 lists the 8 foods that most commonly cause allergic reactions in the United States and that account for > 50% of allergies to food.9 Studies vary in their methodology for estimating the prevalence of allergy to individual foods, but cow’s milk and peanuts appear to be the most common, each affecting as many as 2% to 2.5% of children.7,8 In general, allergies to cow’s milk and to eggs are more prevalent in very young and preschool children, whereas allergies to peanuts, tree nuts, fish, and shellfish are more prevalent in older children.10 Labels on all packaged foods regulated by the US Food and Drug Administration must declare if the product contains even a trace of these 8 allergens.

How common is food allergy?

The Centers for Disease Control and Prevention (CDC) estimates that 4% to 6% of children in the United States have a food allergy.11,12 Almost 40% of food-allergic children have a history of severe food-induced reactions.13 Other developed countries cite similar estimates of overall prevalence.14,15

However, many estimates of the prevalence of food allergy are derived from self-reports, without objective data.9 Accurate evaluation of the prevalence of food allergy is challenging because of many factors, including differences in study methodology and the definition of allergy, geographic variation, racial and ethnic variations, and dietary exposure. Parents and children often confuse nonallergic food reactions, such as food intolerance, with food allergy. Precise determination of the prevalence and natural history of food allergy at the population level requires confirmatory oral food challenges of a representative sample of infants and young children with presumed food allergy.16

Continue to: The CDC concludes that the prevalence...

The CDC concludes that the prevalence of food allergy in children younger than 18 years increased by 18% from 1997 through 2007.17,18 The cause of this increase is unclear but likely multifactorial; hypotheses include an increase in associated atopic conditions, delayed introduction of allergenic foods, and living in an overly sterile environment with reduced exposure to microbes.19 A recent population-based study of food allergy among children in Olmsted County, Minnesota, found that the incidence of food allergy increased between 2002 and 2007, stabilized subsequently, and appears to be declining among children 1 to 4 years of age, following a peak in 2006-2007.19

What are the risk factors?

Proposed risk factors for food allergy include demographics, genetics, a history of atopic disease, and environmental factors. Food allergy might be more common in boys than in girls, and in African Americans and Asians than in Whites.12,16 A child is 7 times more likely to be allergic to peanuts if a parent or sibling has peanut allergy.20 Infants and children with eczema or asthma are more likely to develop food allergy; the severity of eczema correlates with risk.12,20 Improvements in hygiene in Western societies have decreased the spread of infection, but this has been accompanied by a rise in atopic disease. In countries where health standards are poor and exposure to pathogens is greater, the prevalence of allergy is low.21

Conversely, increased microbial exposure might help protect against atopy via a pathway in which T-helper cells prevent pro-allergic immune development and keep harmless environmental exposures from becoming allergens.22 Attendance at daycare and exposure to farm animals early in life reduces the likelihood of atopic disease.16,21 The presence of a dog in the home lessens the probability of egg allergy in infants.23 Food allergy is less common in younger siblings than in first-born children, possibly due to younger siblings’ increased exposure to infection and alterations in the gut microbiome.23,24

Diagnosis: Established by presentation, positive testing

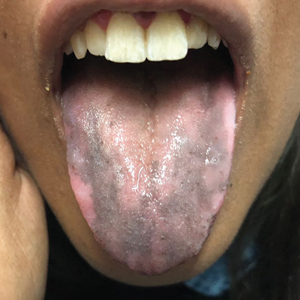

Onset of symptoms after exposure to a suspected food allergen almost always occurs within 2 hours and, typically, resolves within several hours. Symptoms should occur consistently after ingestion of the food allergen. Subsequent exposures can trigger more severe symptoms, depending on the amount, route, and duration of exposure to the allergen.25 Reactions typically follow ingestion or cutaneous exposures; inhalation rarely triggers a response.26 IgE-mediated release of histamine and other mediators from mast cells and basophils triggers reactions that typically involve one or more organ systems (Table 2).25

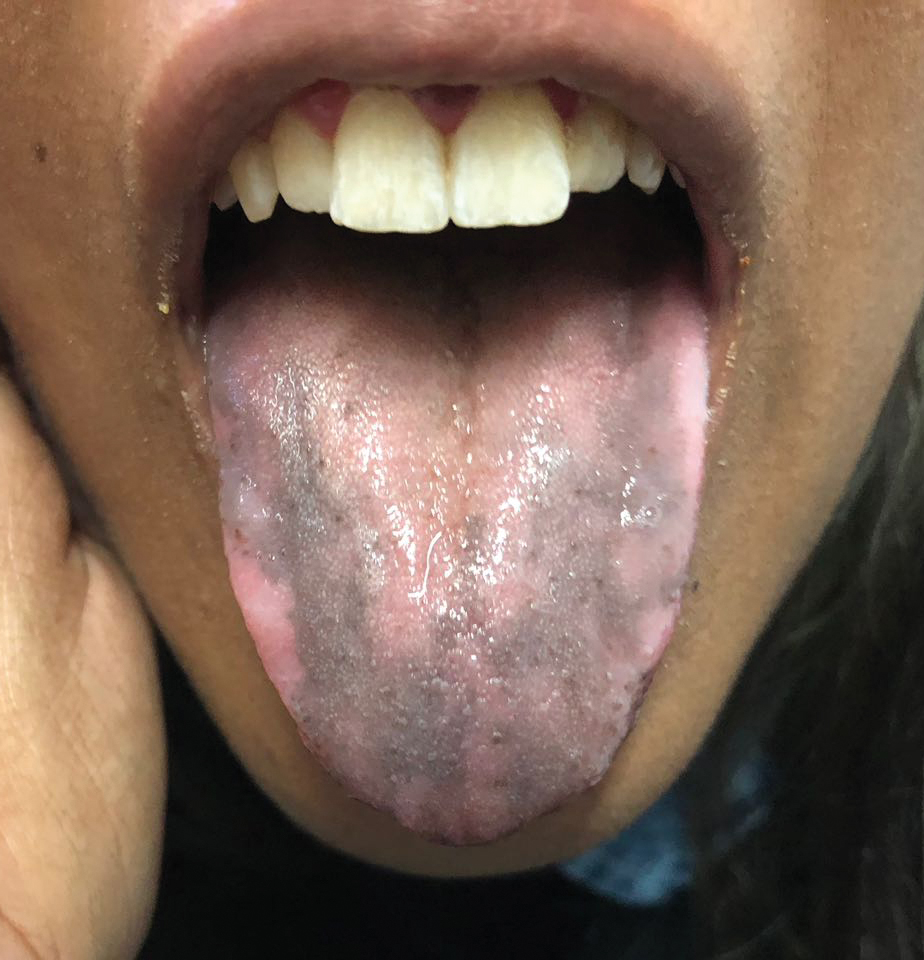

Cutaneous symptoms are the most common manifestations of food allergy, occurring in 70% to 80% of childhood reactions. Gastrointestinal and oral or respiratory symptoms occur in, respectively, 40% to 50% and 25% of allergic reactions to food. Cardiovascular symptoms develop in fewer than 10% of allergic reactions.26

Continue to: Anaphylaxis

Anaphylaxis is a serious allergic reaction that develops rapidly and can cause death; diagnosis is based on specific criteria (Table 3).27 Data for rates of anaphylaxis due to food allergy are limited. The incidence of fatal reaction due to food allergy is estimated to be 1 in every 800,000 children annually.3

Clinical suspicion. Food allergy should be suspected in infants and children who present with anaphylaxis or other symptoms (Table 225) that occur within minutes to hours of ingesting food.4 Parental and self-reports alone are insufficient to diagnose food allergy. NIAID guidelines recommend that patient reports of food allergy be confirmed, because multiple studies demonstrate that 50% to 90% of presumed food allergies are not true allergy.4 Health care providers must obtain a detailed medical history and pertinent family history, plus perform a physical exam and allergy sensitivity testing. Methods to help diagnose food allergies include skin-prick tests, allergen-specific serum IgE tests, and oral food challenges.4

General principles and utility of testing

Before ordering tests, it’s important to distinguish between food sensitization and food allergy and to inform the families of children with suspected food allergy about the limitations of skin-prick tests and serum IgE tests. A child with IgE antibodies specific to a food or with a positive skin-prick test, but without symptoms upon ingestion of the food, is merely sensitized; food allergy indicates the appearance of symptoms following exposure to a specific food, in addition to the detection of specific IgE antibodies or a positive skin-prick test to that same food.28

Skin-prick testing. Skin-prick tests can be performed at any age. The procedure involves pricking or scratching the surface of the skin, usually the volar aspect of the forearm or the back, with a commercial extract. Testing should be performed by a physician or other provider who is properly trained in the technique and in interpreting results. The extract contains specific allergenic proteins that activate mast cells, resulting in a characteristic wheal-and-flare response that is typically measured 15 to 20 minutes after application. Some medications, such as H1- and H2-receptor blockers and tricyclic antidepressants, can interfere with results and need to be held for 3 to 5 days before testing.

A positive skin-prick test result is defined as a wheal ≥ 3 mm larger in diameter than the negative control. The larger the size of the wheal, the higher the likelihood of a reaction to the tested food.29 Patients who exhibit dermatographism might experience a wheal-and-flare response from the action of the skin-prick test, rather than from food-specific IgE antibodies. A negative skin-prick test has > 90% negative predictive value, so the test can rule out suspected food allergy.30 However, the skin-prick test alone cannot be used to diagnose food allergy because it has a high false-positive rate.

Continue to: Allergen-specific serum IgE testing

Allergen-specific serum IgE testing. Measurement of food-specific serum IgE levels is routinely available and requires only a blood specimen. The test can be used in patients with skin disease, and results are not affected by concurrent medications. The presence of food-specific IgE indicates that the patient is sensitized to that allergen and might react upon exposure; children with a higher level of antibody are more likely to react.29

Food-specific serum IgE tests are sensitive but nonspecific for food allergy.31 Broad food-allergy test panels often yield false-positive results that can lead to unnecessary dietary elimination, resulting in years of inconvenience, nutrition problems, and needless health care expense.32

It is appropriate to order tests of specific serum IgE to foods ingested within the 2 to 3–hour window before onset of symptoms to avoid broad food allergy test panels. Like skin-prick testing, positive allergen-specific serum IgE tests alone cannot diagnose food allergy.

Oral food challenge. The double-blind, placebo-controlled oral food challenge is the gold standard for the diagnosis of food allergy. Because this test is time-consuming and technically difficult, single-blind or open food challenges are more common. Oral food challenges should be performed only by a physician or other provider who can identify and treat anaphylaxis.

The oral challenge starts with a very low dose of suspected food allergen, which is gradually increased every 15 to 30 minutes as vital signs are monitored carefully. Patients are observed for an allergic reaction for 1 hour after the final dose.

Continue to: A retrospective study...

A retrospective study showed that, whereas 19% of patients reacted during an open food challenge, only 2% required epinephrine.33 Another study showed that 89% of children whose serum IgE testing was positive for specific foods were able to reintroduce those foods into the diet after a reassuring oral food challenge.34

Other diagnostic tests. The basophil activation assay, measurement of total serum IgE, atopy patch tests, and intradermal tests have been used, but are not recommended, for making the diagnosis of food allergy.4

How can food allergy be managed?

Medical options are few. No approved treatment exists for food allergy. However, it’s important to appropriately manage acute reactions and reduce the risk of subsequent reactions.1 Parents or other caregivers can give an H1 antihistamine, such as diphenhydramine, to infants and children with acute non-life-threatening symptoms. More severe symptoms require rapid administration of epinephrine.1 Auto-injectable epinephrine should be prescribed for parents and caregivers to use as needed for emergency treatment of anaphylaxis.

Team approach. A multidisciplinary approach to managing food allergy—involving physicians, school nurses, dietitians, and teachers, and using educational materials—is ideal. This strategy expands knowledge about food allergies, enhances correct administration of epinephrine, and reduces allergic reactions.1

Avoidance of food allergens can be challenging. Parents and caregivers should be taught to interpret the list of ingredients on food packages. Self-recognition of allergic reactions reduces the likelihood of a subsequent severe allergic reaction.35

Continue to: Importance of individualized care

Importance of individualized care. Health care providers should develop personalized management plans for their patients.1 (A good place to start is with the “Food Allergy & Anaphylaxis Emergency Care Plan”a developed by Food Allergy Research & Education [FARE]). Keep in mind that children with multiple food allergies consume less calcium and protein, and tend to be shorter4; therefore, it’s wise to closely monitor growth in these children and consider referral to a dietitian who is familiar with food allergy.

Potential of immunotherapy. Current research focuses on immunotherapy to induce tolerance to food allergens and protect against life-threatening allergic reactions. The goal of immunotherapy is to lessen adverse reactions to allergenic food proteins; the strategy is to have patients repeatedly ingest small but gradually increasing doses of the food allergen over many months.36 Although immunotherapy has successfully allowed some patients to consume larger quantities of a food without having an allergic reaction, it is unknown whether immunotherapy provides permanent resolution of food allergy. In addition, immunotherapy often causes serious systemic and local reactions.1,36,37

Is prevention possible?

Maternal diet during pregnancy and lactation does not affect development of food allergy in infants.38,39 Breastfeeding might prevent development of atopic disease, but evidence is insufficient to determine whether breastfeeding reduces the likelihood of food allergy.39 In nonbreastfed infants at high risk of food allergy, extensively or partially hydrolyzed formula might help protect against food allergy, compared to standard cow’s milk formula.9,39 Feeding with soy formula rather than cow’s milk formula does not help prevent food allergy.39,40 Pregnant and breastfeeding women should not restrict their diet as a means of preventing food allergy.39

Diet in infancy. Over the years, physicians have debated the proper timing of the introduction of solid foods into the diet of infants. Traditional teaching advocated delaying introduction of potentially allergenic foods to reduce the risk of food allergy; however, this guideline was based on inconsistent evidence,41 and the strategy did not reduce the incidence of food allergy. The prevalence of food allergy is lower in developing countries where caregivers introduce foods to infants at an earlier age.20

A recent large clinical trial indicates that early introduction of peanut-containing foods can help prevent peanut allergy. The study randomized 4- to 11-month-old infants with severe eczema, egg allergy, or both, to eat or avoid peanut products until 5 years of age. Infants assigned to eat peanuts were 81% less likely to develop peanut allergy than infants in the avoidance group. Absolute risk reduction was 14% (number need to treat = 7).42 Another study showed a nonsignificant (20%) lower relative risk of food allergy in breastfed infants who were fed potentially allergenic foods starting at 3 months of age, compared to being exclusively breastfed.43

Continue to: Based on these data...

Based on these data,42,43 NIAID instituted recommendations in 2017 aimed at preventing peanut allergy44:

- In healthy infants without known food allergy and those with mild or moderate eczema, caregivers can introduce peanut-containing foods at home with other solid foods.Parents who are anxious about a possible allergic reaction can introduce peanut products in a physician’s office.

- Infants at high risk of peanut allergy (those with severe eczema or egg allergy, or both) should undergo peanut-specific IgE or skin-prick testing:

- Negative test: indicates low risk of a reaction to peanuts; the infant should start consuming peanut-containing foods at 4 to 6 months of age, at home or in a physician’s office, depending on the parents’ preference

- Positive test: Referral to an allergist is recommended.

Do children outgrow food allergy?

Approximately 85% of children who have an allergy to milk, egg, soy, or wheat outgrow their allergy; however, only 15% to 20% who have an allergy to peanuts, tree nuts, fish, or shellfish eventually tolerate these foods. The time to resolution of food allergy varies with the food, and might not occur until adolescence.4 No test reliably predicts which children develop tolerance to any given food. A decrease in the food-specific serum IgE level or a decrease in the size of the wheal on skin-prick testing might portend the onset of tolerance to the food.4

CORRESPONDENCE

Catherine M. Bettcher, MD, FAAFP, Briarwood Family Medicine, 1801 Briarwood Circle, Building #10, Ann Arbor, MI 48108; cbettche@umich.edu.

1. Muraro A, Werfel T, Hoffmann-Sommergruber K, et al. EAACI food allergy and anaphylaxis guidelines: diagnosis and management of food allergy. Allergy. 2014;69:1008-1025.

2. Gupta R, Holdford D, Bilaver L, et al. The economic impact of childhood food allergy in the United States. JAMA Pediatr. 2013;167:1026-1031.

3. Cianferoni A, Muraro A. Food-induced anaphylaxis. Immunol Allergy Clin North Am. 2012;32:165-195.

4. Boyce JA, Assa’ad A, Burks WA, et al. Guidelines for the diagnosis and management of food allergy in the United States: report of the NIAID-sponsored expert panel. J Allergy Clin Immunol. 2010;126(6 suppl):S1-S58.

5. Vierk KA, Koehler KM, Fein SB, et al. Prevalence of self-reported food allergy in American adults and use of food labels. J Allergy Clin Immunol. 2007;119:1504-1510.

6. Allen KJ, Koplin JJ. The epidemiology of IgE-mediated food allergy and anaphylaxis. Immunol Allergy Clin North Am. 2012;32:35-50.

7. Iweala OI, Choudhary SK, Commins SP. Food allergy. Curr Gastroenterol Rep. 2018;20:17.

8. Gupta RS, Warren CM, Smith BM, et al. The public health impact of parent-reported childhood food allergies in the United States. Pediatrics. 2018;142:e20181235.

9. Chafen JJS, Newberry SJ, Riedl MA, et al. Diagnosing and managing common food allergies: a systematic review. JAMA. 2010;303:1848-1856.

10. Nwaru BI, Hickstein L, Panesar SS, et al. Prevalence of common food allergies in Europe: a systematic review and meta-analysis. Allergy. 2014;69:992-1007.

11. Branum AM, Lukacs SL. Food allergy among U.S. children: trends in prevalence and hospitalizations. NCHS Data Brief No. 10. National Center for Health Statistics. October 2008. www.cdc.gov/nchs/products/databriefs/db10.htm. Accessed August 19, 2020.

12. Liu AH, Jaramillo R, Sicherer SH, et al. National prevalence and risk factors for food allergy and relationship to asthma: results from the National Health and Nutrition Examination Survey 2005-2006. J Allergy Clin Immunol. 2010;126:798-806.e13.

13. Gupta RS, Springston EE, Warrier MR, et al. The prevalence, severity, and distribution of childhood food allergy in the United States. Pediatrics. 2011;128:e9-e17.

14. Soller L, Ben-Shoshan M, Harrington DW, et al. Overall prevalence of self-reported food allergy in Canada. J Allergy Clin Immunol. 2012;130:986-988.

15. Venter C, Pereira B, Voigt K, et al. Prevalence and cumulative incidence of food hypersensitivity in the first 3 years of life. Allergy. 2008;63:354-359.

16. Savage J, Johns CB. Food allergy: epidemiology and natural history. Immunol Allergy Clin North Am. 2015;35:45-59.

17. Branum AM, Lukacs SL. Food allergy among children in the United States. Pediatrics. 2009;124:1549-1555.

18. Jackson KD, Howie LD, Akinbami LJ. Trends in allergic conditions among children: United States, 1997-2011. NCHS Data Brief No. 121. National Center for Health Statistics. May 2013. www.cdc.gov/nchs/products/databriefs/db121.htm. Accessed August 19, 2020.

19. Willits EK, Park MA, Hartz MF, et al. Food allergy: a comprehensive population-based cohort study. Mayo Clin Proc. 2018;93:1423-1430.

20. Lack G. Epidemiologic risks for food allergy. J Allergy Clin Immunol. 2008;121:1331-1336.

21. Okada H, Kuhn C, Feillet H, et al. The ‘hygiene hypothesis’ for autoimmune and allergic diseases: an update. Clin Exp Immunol. 2010;160:1-9.

22. Liu AH. Hygiene theory and allergy and asthma prevention. Paediatr Perinat Epidemiol. 2007;21(suppl 3):2-7.

23. Prince BT, Mandel MJ, Nadeau K, et al. Gut microbiome and the development of food allergy and allergic disease. Pediatr Clin North Am. 2015;62:1479-1492.

24. Kusunoki T, Mukaida K, Morimoto T, et al. Birth order effect on childhood food allergy. Pediatr Allergy Immunol. 2012;23:250-254.

25. Abrams EM, Sicherer SH. Diagnosis and management of food allergy. CMAJ. 2016;188:1087-1093.

26. Perry TT, Matsui EC, Conover-Walker MK, et al. Risk of oral food challenges. J Allergy Clin Immunol. 2004;114:1164-1168.

27. Sampson HA, Muñoz-Furlong A, Campbell RL, et al. Second symposium on the definition and management of anaphylaxis: summary report—Second National Institute of Allergy and Infectious Disease/Food Allergy and Anaphylaxis Network symposium. J Allergy Clin Immunol. 2006;117:391-397.

28. Sampson HA. Food allergy. Part 2: diagnosis and management. J Allergy Clin Immunol. 1999;103:981-989.

29. Lieberman JA, Sicherer SH. Diagnosis of food allergy: epicutaneous skin tests, in vitro tests, and oral food challenge. Curr Allergy Asthma Rep. 2011;11:58-64.

30. Sicherer SH, Sampson HA. Food allergy. J Allergy Clin Immunol. 2010;125(2 suppl 2):S116-S125.

31. Soares-Weiser K, Takwoingi Y, Panesar SS, et al. The diagnosis of food allergy: a systematic review and meta-analysis. Allergy. 2014;69:76-86.

32. Bird JA, Crain M, Varshney P. Food allergen panel testing often results in misdiagnosis of food allergy. J Pediatr. 2015;166:97-100.

33. Lieberman JA, Cox AL, Vitale M, et al. Outcomes of office-based, open food challenges in the management of food allergy. J Allergy Clin Immunol. 2011;128:1120-1122.

34. Fleischer DM, Bock SA, Spears GC, et al. Oral food challenges in children with a diagnosis of food allergy. J Pediatr. 2011;158:578-583.e1.

35. Ewan PW, Clark AT. Long-term prospective observational study of patients with peanut and nut allergy after participation in a management plan. Lancet. 2001;357:111-115.

36. Nurmatov U, Dhami S, Arasi S, et al. Allergen immunotherapy for IgE-mediated food allergy: a systematic review and meta-analysis. Allergy. 2017;72:1133-1147.

37. Sampson HA, Aceves S, Bock SA, et al. Food allergy: a practice parameter update—2014. J Allergy Clin Immunol. 2014;134:1016-1025.e43.

38. Kramer MS, Kakuma R. Maternal dietary antigen avoidance during pregnancy or lactation, or both, for preventing or treating atopic disease in the child. Cochrane Database Syst Rev. 2012;2012(9):CD000133.

39. de Silva D, Geromi M, Halken S, et al. Primary prevention of food allergy in children and adults: systematic review. Allergy. 2014;69:581-589.

40. Osborn DA, Sinn J. Soy formula for prevention of allergy and food intolerance in infants. Cochrane Database Syst Rev. 2004;(3):CD003741.

41. Filipiak B, Zutavern A, Koletzko S, et al; GINI-Group. Solid food introduction in relation to eczema: results from a four-year prospective birth cohort study. J Pediatr. 2007;151:352-358.

42. Du Toit G, Roberts G, Sayre PH, et al; LEAP Study Team. Randomized trial of peanut consumption in infants at risk for peanut allergy. N Engl J Med. 2015;372:803-813.

43. Perkin MR, Logan K, Tseng A, et al; EAT Study Team. Randomized trial of introduction of allergenic foods in breast-fed infants. N Engl J Med. 2016;374:1733-1743.

44. Togias A, Cooper SF, Acebal ML, et al. Addendum guidelines for the prevention of peanut allergy in the United States: report of the National Institute of Allergy and Infectious Diseases-sponsored expert panel. J Allergy Clin Immunol. 2017;139:29-44.

PRACTICE RECOMMENDATIONS

› Diagnose food allergy based on a convincing clinical history paired with positive diagnostic testing. (SOR: A)

› Use a multidisciplinary approach to improve caregiver and patient understanding of food allergy and to reduce allergic reactions. (SOR: B)

› Recommend early introduction of peanut products to infants to reduce the likelihood of peanut allergy. (SOR: A)

Strength of recommendation (SOR)

A: Good-quality patient-oriented evidence

B: Inconsistent or limited-quality patient-oriented evidence

C: Consensus, usual practice, opinion, disease-oriented evidence, case series

An Atypical Long-Term Thiamine Treatment Regimen for Wernicke Encephalopathy

Wernicke-Korsakoff syndrome is a cluster of symptoms attributed to vitamin B1 (thiamine) deficiency, manifesting as a combined presentation of alcohol-induced Wernicke encephalopathy (WE) and Korsakoff syndrome (KS).1 While there is consensus on the characteristic presentation and symptoms of WE, agreement on the exact definition of KS is lacking. The classic triad of WE consists of ataxia, ophthalmoplegia, and confusion; however, reports now suggest that most patients exhibit only 1 or 2 elements of the triad. KS is often regarded as a condition of chronic thiamine deficiency that manifests as memory impairment alongside cognitive and behavioral decline, with no clear consensus on the sequence in which symptoms appear. WE is typically thought to progress to KS if left untreated.

From a mental health perspective, WE presents with delirium and confusion, whereas KS manifests with irreversible dementia and cognitive deterioration. Although it is commonly taught that KS-induced memory loss is permanent because of neuronal damage (classically to the mammillary bodies, although other structures have also been implicated), more recent research suggests otherwise.2 A review published in 2018, for example, gathered several case reports and case series describing significant improvement in memory and cognition attributed to behavioral and pharmacologic interventions, indicating that this area deserves further study.3 About 20% of patients diagnosed with WE at autopsy had exhibited none of the classic triad symptoms before death.4 Hence, these conditions are thought to be significantly underdiagnosed and misdiagnosed.

Although consensus on the appropriate treatment regimen for WE is lacking, a common protocol consists of high-dose parenteral thiamine for 4 to 7 days.5 This is usually followed by daily oral thiamine repletion until the patient either achieves complete abstinence from alcohol (ideally) or reduces consumption. The goal is to replete thiamine stores and then maintain at least the minimum required body levels. In this case report, we describe the use of a long-term, unconventional intramuscular (IM) thiamine repletion regimen to maintain a patient’s mental status, highlighting gaps in our understanding of the mechanisms at play in WE and its treatment.

Case Presentation

A 65-year-old male patient with a more than 3-decade history of daily hard liquor intake, multiple psychiatric hospitalizations for WE, and a prior suicide attempt presented to the emergency department (ED) with increased frequency of falls, poor oral intake, confabulation, and diminished verbal communication. Chart review revealed memory impairment; diagnoses of schizoaffective disorder and WE, with confusion that had responded to thiamine administration; and a history of hypertension, hyperlipidemia, osteoarthritis, and urinary retention secondary to benign prostatic hyperplasia (BPH).