What Is the Mechanism of Alemtuzumab-Induced Autoimmunity?

SAN DIEGO—The monoclonal antibody alemtuzumab can be an effective treatment for people living with multiple sclerosis (MS), but the agent is also associated with an increased risk for developing other autoimmune diseases, leaving clinicians with a conundrum.

Alemtuzumab is an efficacious treatment in MS that can slow the rate of brain atrophy over the long term, said Alasdair Coles, MD, Professor of Neuroimmunology at the University of Cambridge, United Kingdom, at the ACTRIMS 2018 Forum. “But one or two years after each cycle of alemtuzumab, patients are at high risk of autoimmune diseases. This is the not-too-worrying thyroid disease, but there are some troubling and potentially serious complications at lower frequency.”

Autoimmune thyroid disease can affect as many as 40% of patients treated with alemtuzumab, but immune thrombocytopenia (3%) and autoimmune renal disease (0.1%) are also reported. About one in 10 people treated with the monoclonal antibody for MS can also develop de novo asymptomatic autoantibodies.

“People ask, ‘Why doesn’t MS come back as part of this generic mechanism?’ I don’t know the answer to that question,” said Dr. Coles.

In the United States, alemtuzumab is indicated for treatment of relapsing-remitting MS in adults who have failed to respond adequately to two or more previous therapies. In contrast, “this has become a first-line treatment in the UK,” said Dr. Coles. “Unfortunately, we can offer no proven treatment to prevent this autoimmunity.”

Considering Proposed Mechanisms

Dr. Coles and other researchers are investigating the cellular mechanism underlying the paradoxical autoimmunity associated with alemtuzumab. Some have suggested that faulty immune B cells could be the culprit, but “there is no difference in B cell reconstitution between those who do and do not get autoimmunity,” said Dr. Coles. “So, we do not think that autoimmunity after alemtuzumab is primarily a B cell problem.” Other investigators have suggested that the mechanism is the depletion of a key immune regulatory cell associated with alemtuzumab. One such example is depletion in T cells as part of an autoimmune cascade that involves CD52-high expressing cells and sialic acid-binding immunoglobulin-like lectin 10 (SIGLEC-10). “We do not believe this,” said Dr. Coles. “We cannot replicate the finding of reduced CD52 high cells in type 1 diabetes or MS, nor the binding of SIGLEC-10 to CD52.”

Along with his colleague Joanne Jones, MD, PhD, also at the University of Cambridge, Dr. Coles and his team instead propose that autoimmunity after alemtuzumab therapy is associated with a homeostatic proliferation of T cells in the context of a defective thymus. “We see thymic function reduced after alemtuzumab for a few months. We do not know if alemtuzumab is having a direct impact on the thymus or if it is an indirect effect through a cytokine storm at the time of administering alemtuzumab.”

In addition, in contrast with B cells, both CD4-positive and CD8-positive T cells are clonally restricted after alemtuzumab treatment, said Dr. Coles. “These are the only changes that distinguish patients who do and do not develop autoimmunity,” he said. “Those who develop autoimmunity have reduced clonality and impaired thymic function, compared with those who do not.”

The theory is that the reconstitution of T cells after alemtuzumab comes preferentially from expansion of peripheral T cells, rather than from naïve T cells produced by the thymus. This expansion leads to a higher representation of autoreactive T cells and, in turn, to B-cell- and antibody-mediated autoimmunity.

The Bigger Picture

The autoimmune phenomenon is not unique to alemtuzumab or MS. “This turns out to be one of a family of clinical situations where the reconstitution of the depleted lymphocyte repertoire leads to autoimmunity,” Dr. Coles said. A similar effect was seen years ago when very lymphopenic patients with HIV were given antiviral therapy. About 10% of treated patients had this effect. Furthermore, about 10% of patients who undergo bone marrow transplant may experience similar autoimmune concerns.

“What we do think is true is that we have tapped into a classical expression of autoimmunity,” Dr. Coles said. “Alemtuzumab is a fantastic opportunity to study the mechanisms underlying lymphopenia-associated autoimmunity.”

A Tantalizing Prospect

“It is a tantalizing prospect that susceptible individuals might be identified in the future prior to treatment,” Dr. Coles said. “We looked at IL-21. We showed that after treatment, and perhaps more interestingly, before treatment with alemtuzumab, serum levels of IL-21 are greater in those who subsequently develop autoimmune disease. This [finding] suggests [that] some individuals are prone to developing autoimmune disease and could be identified potentially prior to treatment with alemtuzumab.”

More research is needed, including the development of more sensitive IL-21 assays for use in this population, Dr. Coles said. “Please do not attempt to predict the risk of autoimmunity after alemtuzumab using the current commercial assays. This is a source of some frustration for me.” A potential route of lymphocyte repertoire reconstitution after alemtuzumab is thymic reconstitution, which could lead to a more diverse immune repertoire, Dr. Coles said. “If we can direct reconstitution through the thymic reconstitution, we should be able to prevent autoimmunity.”

Dr. Coles receives honoraria for travel and speaking from Sanofi Genzyme, which markets alemtuzumab.

—Damian McNamara

European Commission expands denosumab indication

The European Commission has expanded the indication for denosumab, making it available for the prevention of skeletal-related events in adults with multiple myeloma and other advanced malignancies involving bone.

The European approval is based on the monoclonal antibody’s strong performance in a phase 3, international trial looking specifically at prevention of skeletal-related events in multiple myeloma patients.

During the trial, the drug demonstrated noninferiority to zoledronic acid in delaying the time to first skeletal-related event (hazard ratio, 0.98, 95% confidence interval: 0.85-1.14), according to Amgen, which markets denosumab. The median time to first skeletal-related event was 22.8 months for denosumab versus 24.0 months for zoledronic acid.

The denosumab indication was expanded to include prevention of skeletal-related events by the Food and Drug Administration in the United States in January 2018.

Disseminated Vesicles and Necrotic Papules

The Diagnosis: Lues Maligna

Laboratory evaluation demonstrated a total CD4 count of 26 cells/μL (reference range, 443-1471 cells/μL) with a viral load of 1,770,111 copies/mL (reference range, 0 copies/mL), as well as a positive rapid plasma reagin (RPR) test with a titer of 1:8 (reference range, nonreactive). A reactive treponemal antibody test confirmed a true positive RPR test result. Viral culture as well as direct fluorescent antibody testing of an active vesicle for varicella-zoster virus and herpes simplex virus (HSV) were negative. Serum immunoglobulin titers for varicella-zoster virus demonstrated low IgM with a positive IgG, indicating immunity without recent infection. Blood and lesional skin tissue cultures were negative for additional infectious etiologies including bacterial and fungal elements. A lumbar puncture was not performed.

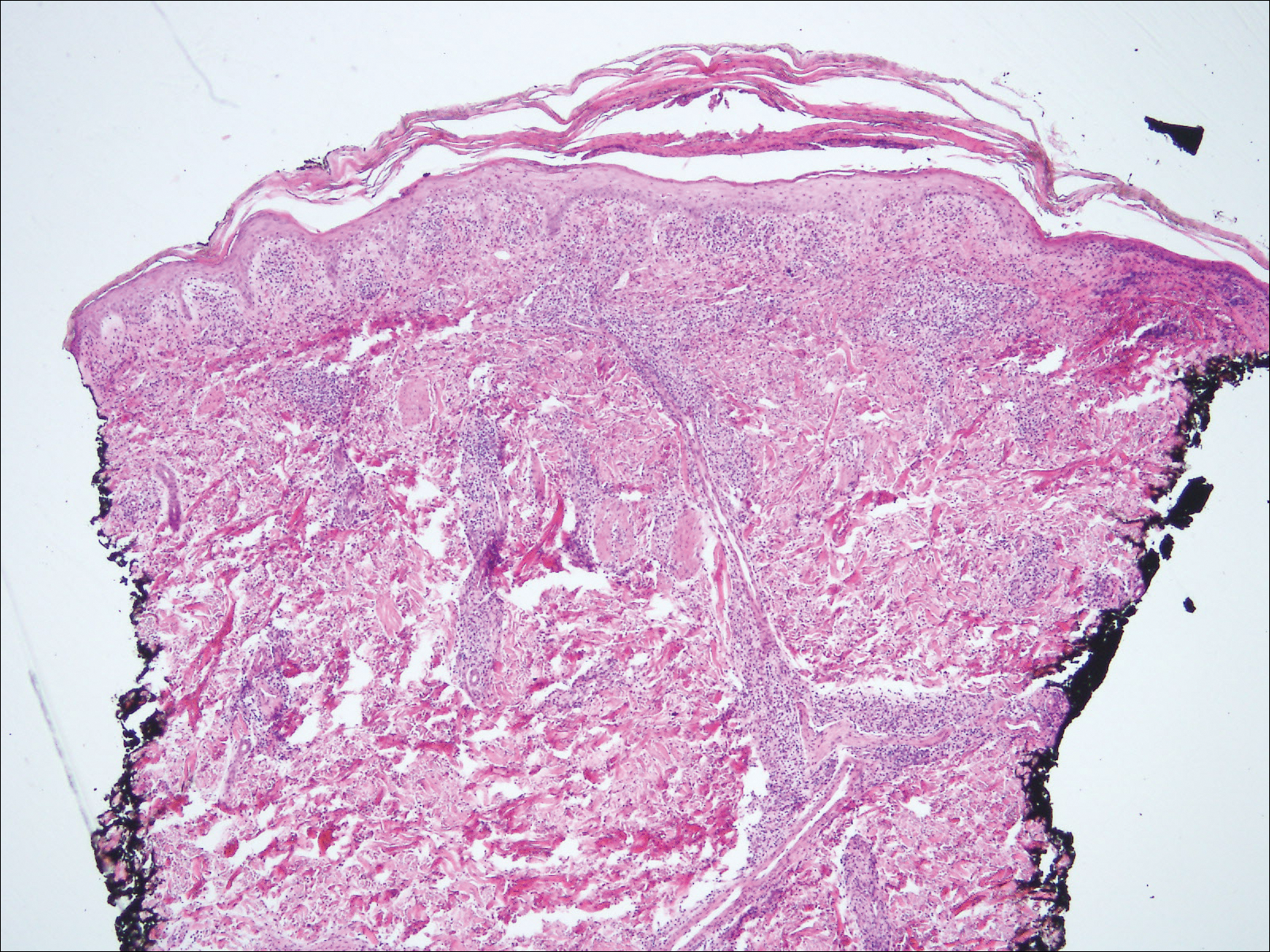

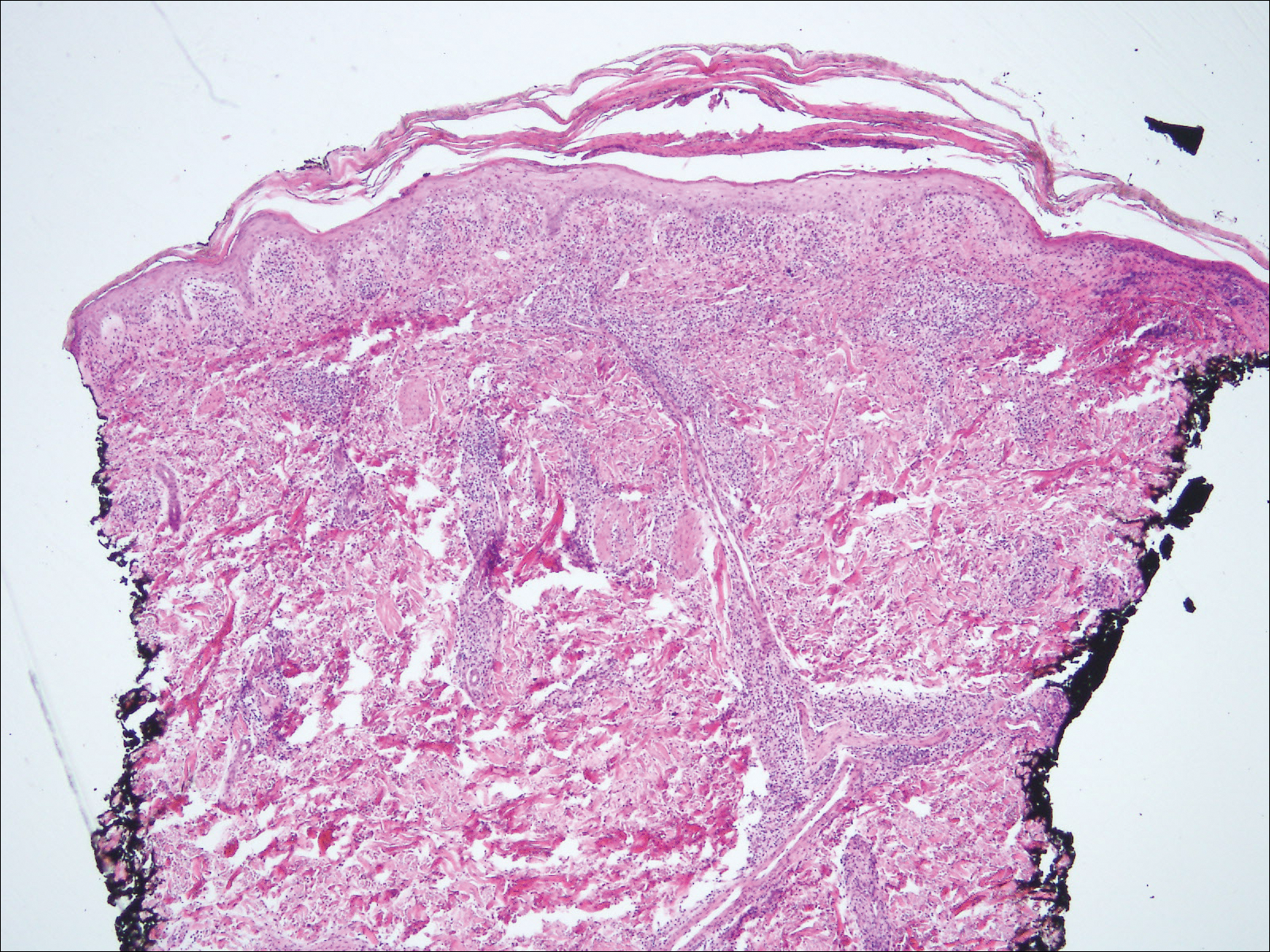

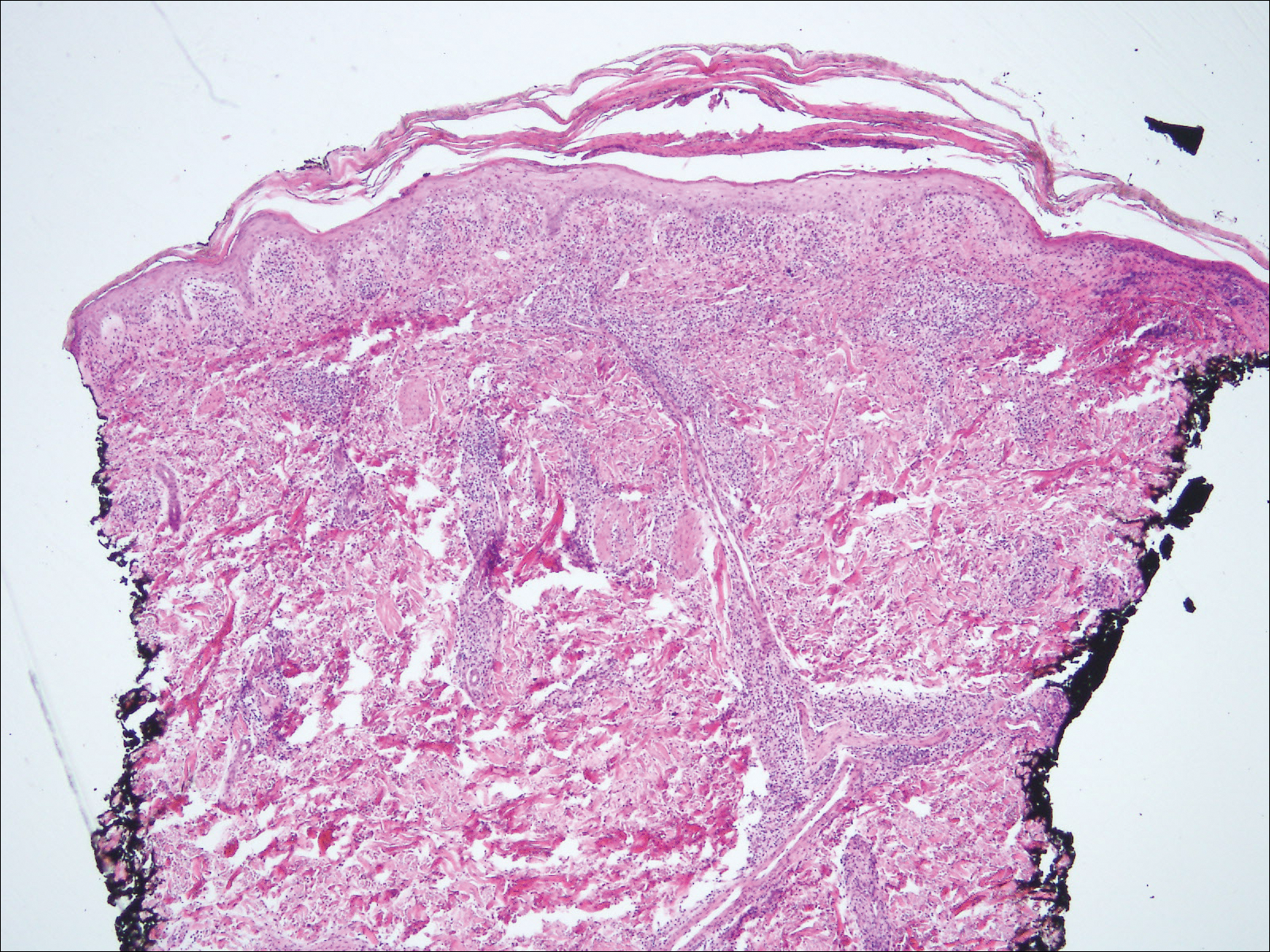

Biopsy of a papulonodule on the left arm demonstrated a lichenoid lymphohistiocytic infiltrate with superficial and deep inflammation (Figure 1). Neutrophils also were noted within a follicle with ballooning and acantholysis within the follicular epithelium. Additional staining for Mycobacterium, HSV-1, HSV-2, and Treponema was negative. In the clinical setting, this histologic pattern was most consistent with secondary syphilis. Pityriasis lichenoides et varioliformis acuta also was included in the histopathologic differential diagnosis by a dermatopathologist (M.C.).

Based on the clinical, microbiologic, and histopathologic findings, a diagnosis of lues maligna (cutaneous secondary syphilis) with a vesiculonecrotic presentation was made. The patient's low RPR titer was attributed to profound immunosuppression, and syphilis infection was confirmed with treponemal antibody testing. Histopathologic examination was consistent with lues maligna and did not demonstrate evidence of any other infectious etiologies.

Following 7 days of intravenous penicillin, the patient demonstrated dramatic improvement of all skin lesions and was discharged on once-weekly intramuscular penicillin for 4 weeks. This rapid response to appropriate antibiotic therapy was in accordance with the diagnosis.

After the diagnosis of lues maligna was made, the patient disclosed a sexual encounter with a male partner 6 weeks prior to the current presentation, after which he developed a self-resolving genital ulcer suspicious for a primary chancre.

Increasing rates of syphilis transmission have been attributed to males aged 15 to 44 years who have sexual encounters with other males.1 Although syphilis commonly is known as the great mimicker, syphilology texts state that lesions are not associated with syphilis if vesicles are part of the cutaneous eruption in an adult.2 However, rare reports of secondary syphilis presenting as vesicles, pustules, bullae, and pityriasis lichenoides et varioliformis acuta-like eruptions also have been documented.2-4

Initial screening for suspected syphilis involves sensitive, but not specific, nontreponemal RPR testing reported in the form of a titer. Nontreponemal titers in human immunodeficiency virus-positive individuals can be unusually high or low, fluctuate rapidly, and/or be unresponsive to antibiotic therapy.1

Lues maligna is a rare form of malignant secondary syphilis that most commonly presents in human immunodeficiency virus-positive hosts.5 Although lues maligna often presents with ulceronodular lesions, 2 cases presenting with vesiculonecrotic lesions also have been reported.6 Patients often experience systemic symptoms including fever, fatigue, and joint pain. Rapid plasma reagin titers can range from 1:8 to 1:128 in affected individuals.6 Diagnosis is dependent on serologic and histologic confirmation while ruling out viral, fungal, and bacterial etiologies. Characteristic red-brown lesions of secondary syphilis involving the palms and soles (Figure 2) also aid in diagnosis.1 Additionally, identification of the Jarisch-Herxheimer reaction following treatment and rapid response to antibiotic therapy are helpful diagnostic findings.6,7 While histopathologic examination of lues maligna typically does not reveal evidence of spirochetes, it also is important to rule out other infectious etiologies.7

Our case emphasizes the importance of early recognition and treatment of the variable clinical, laboratory, and histologic presentations of lues maligna.

1. Syphilis fact sheet. Centers for Disease Control and Prevention website. https://www.cdc.gov/std/syphilis/stdfact-syphilis.htm. Updated June 13, 2017. Accessed March 22, 2018.

2. Lawrence P, Saxe N. Bullous secondary syphilis. Clin Exp Dermatol. 1992;17:44-46.

3. Pastuszczak M, Woźniak W, Jaworek AK, et al. Pityriasis lichenoides-like secondary syphilis and neurosyphilis in a HIV-infected patient. Postepy Dermatol Alergol. 2013;30:127-130.

4. Schnirring-Judge M, Gustaferro C, Terol C. Vesiculobullous syphilis: a case involving an unusual cutaneous manifestation of secondary syphilis [published online November 24, 2010]. J Foot Ankle Surg. 2011;50:96-101.

5. Pföhler C, Koerner R, von Müller L, et al. Lues maligna in a patient with unknown HIV infection. BMJ Case Rep. 2011. pii: bcr0520114221. doi: 10.1136/bcr.05.2011.4221.

6. Don PC, Rubinstein R, Christie S. Malignant syphilis (lues maligna) and concurrent infection with HIV. Int J Dermatol. 1995;34:403-407.

7. Tucker JD, Shah S, Jarell AD, et al. Lues maligna in early HIV infection case report and review of the literature. Sex Transm Dis. 2009;36:512-514.

The Diagnosis: Lues Maligna

Laboratory evaluation demonstrated a total CD4 count of 26 cells/μL (reference range, 443-1471 cells/μL) with a viral load of 1,770,111 copies/mL (reference range, 0 copies/mL), as well as a positive rapid plasma reagin (RPR) test with a titer of 1:8 (reference range, nonreactive). A reactive treponemal antibody test confirmed a true positive RPR test result. Viral culture as well as direct fluorescence antibodies for varicella-zoster virus and an active vesicle of herpes simplex virus (HSV) were negative. Serum immunoglobulin titers for varicella-zoster virus demonstrated low IgM with a positive IgG demonstrating immunity without recent infection. Blood and lesional skin tissue cultures were negative for additional infectious etiologies including bacterial and fungal elements. A lumbar puncture was not performed.

Biopsy of a papulonodule on the left arm demonstrated a lichenoid lymphohistiocytic infiltrate with superficial and deep inflammation (Figure 1). Neutrophils also were noted within a follicle with ballooning and acantholysis within the follicular epithelium. Additional staining for Mycobacterium, HSV-1, HSV-2, and Treponema were negative. In the clinical setting, this histologic pattern was most consistent with secondary syphilis. Pityriasis lichenoides et varioliformis acuta also was included in the histopathologic differential diagnosis by a dermatopathologist (M.C.).

Based on the clinical, microbiologic, and histopathologic findings, a diagnosis of lues maligna (cutaneous secondary syphilis) with a vesiculonecrotic presentation was made. The patient's low RPR titer was attributed to profound immunosuppression, while a confirmation of syphilis infection was made with treponemal antibody testing. Histopathologic examination was consistent with lues maligna and did not demonstrate evidence of any other infectious etiologies.

Following 7 days of intravenous penicillin, the patient demonstrated dramatic improvement of all skin lesions and was discharged receiving once-weekly intramuscular penicillin for 4 weeks. In accordance with the diagnosis, the patient demonstrated rapid improvement of the lesions following appropriate antibiotic therapy.

After the diagnosis of lues maligna was made, the patient disclosed a sexual encounter with a male partner 6 weeks prior to the current presentation, after which he developed a self-resolving genital ulcer suspicious for a primary chancre.

Increasing rates of syphilis transmission have been attributed to males aged 15 to 44 years who have sexual encounters with other males.1 Although syphilis commonly is known as the great mimicker, syphilology texts state that lesions are not associated with syphilis if vesicles are part of the cutaneous eruption in an adult.2 However, rare reports of secondary syphilis presenting as vesicles, pustules, bullae, and pityriasis lichenoides et varioliformis acuta-like eruptions also have been documented.2-4

Initial screening for suspected syphilis involves sensitive, but not specific, nontreponemal RPR testing reported in the form of a titer. Nontreponemal titers in human immunodeficiency virus-positive individuals can be unusually high or low, fluctuate rapidly, and/or be unresponsive to antibiotic therapy.1

Lues maligna is a rare form of malignant secondary syphilis that most commonly presents in human immunodeficiency virus-positive hosts.5 Although lues maligna often presents with ulceronodular lesions, 2 cases presenting with vesiculonecrotic lesions also have been reported.6 Patients often experience systemic symptoms including fever, fatigue, and joint pain. Rapid plasma reagin titers can range from 1:8 to 1:128 in affected individuals.6 Diagnosis is dependent on serologic and histologic confirmation while ruling out viral, fungal, and bacterial etiologies. Characteristic red-brown lesions of secondary syphilis involving the palms and soles (Figure 2) alsoaid in diagnosis.1 Additionally, identification of the Jarisch-Herxheimer reaction following treatment and rapid response to antibiotic therapy are helpful diagnostic findings.6,7 While histopathologic examination of lues maligna typically does not reveal evidence of spirochetes, it also is important to rule out other infectious etiologies.7

Our case emphasizes the importance of early recognition and treatment of the variable clinical, laboratory, and histologic presentations of lues maligna.

The Diagnosis: Lues Maligna

Laboratory evaluation demonstrated a total CD4 count of 26 cells/μL (reference range, 443-1471 cells/μL) with a viral load of 1,770,111 copies/mL (reference range, 0 copies/mL), as well as a positive rapid plasma reagin (RPR) test with a titer of 1:8 (reference range, nonreactive). A reactive treponemal antibody test confirmed a true positive RPR test result. Viral culture as well as direct fluorescence antibodies for varicella-zoster virus and an active vesicle of herpes simplex virus (HSV) were negative. Serum immunoglobulin titers for varicella-zoster virus demonstrated low IgM with a positive IgG demonstrating immunity without recent infection. Blood and lesional skin tissue cultures were negative for additional infectious etiologies including bacterial and fungal elements. A lumbar puncture was not performed.

Biopsy of a papulonodule on the left arm demonstrated a lichenoid lymphohistiocytic infiltrate with superficial and deep inflammation (Figure 1). Neutrophils also were noted within a follicle with ballooning and acantholysis within the follicular epithelium. Additional staining for Mycobacterium, HSV-1, HSV-2, and Treponema were negative. In the clinical setting, this histologic pattern was most consistent with secondary syphilis. Pityriasis lichenoides et varioliformis acuta also was included in the histopathologic differential diagnosis by a dermatopathologist (M.C.).

Based on the clinical, microbiologic, and histopathologic findings, a diagnosis of lues maligna (cutaneous secondary syphilis) with a vesiculonecrotic presentation was made. The patient's low RPR titer was attributed to profound immunosuppression, while a confirmation of syphilis infection was made with treponemal antibody testing. Histopathologic examination was consistent with lues maligna and did not demonstrate evidence of any other infectious etiologies.

Following 7 days of intravenous penicillin, the patient demonstrated dramatic improvement of all skin lesions and was discharged receiving once-weekly intramuscular penicillin for 4 weeks. In accordance with the diagnosis, the patient demonstrated rapid improvement of the lesions following appropriate antibiotic therapy.

After the diagnosis of lues maligna was made, the patient disclosed a sexual encounter with a male partner 6 weeks prior to the current presentation, after which he developed a self-resolving genital ulcer suspicious for a primary chancre.

Increasing rates of syphilis transmission have been attributed to males aged 15 to 44 years who have sexual encounters with other males.1 Although syphilis commonly is known as the great mimicker, syphilology texts state that lesions are not associated with syphilis if vesicles are part of the cutaneous eruption in an adult.2 However, rare reports of secondary syphilis presenting as vesicles, pustules, bullae, and pityriasis lichenoides et varioliformis acuta-like eruptions also have been documented.2-4

Initial screening for suspected syphilis involves sensitive, but not specific, nontreponemal RPR testing reported in the form of a titer. Nontreponemal titers in human immunodeficiency virus-positive individuals can be unusually high or low, fluctuate rapidly, and/or be unresponsive to antibiotic therapy.1

Lues maligna is a rare form of malignant secondary syphilis that most commonly presents in human immunodeficiency virus-positive hosts.5 Although lues maligna often presents with ulceronodular lesions, 2 cases presenting with vesiculonecrotic lesions also have been reported.6 Patients often experience systemic symptoms including fever, fatigue, and joint pain. Rapid plasma reagin titers can range from 1:8 to 1:128 in affected individuals.6 Diagnosis is dependent on serologic and histologic confirmation while ruling out viral, fungal, and bacterial etiologies. Characteristic red-brown lesions of secondary syphilis involving the palms and soles (Figure 2) also aid in diagnosis.1 Additionally, identification of the Jarisch-Herxheimer reaction following treatment and rapid response to antibiotic therapy are helpful diagnostic findings.6,7 While histopathologic examination of lues maligna typically does not reveal evidence of spirochetes, it also is important to rule out other infectious etiologies.7

Our case emphasizes the importance of early recognition and treatment of the variable clinical, laboratory, and histologic presentations of lues maligna.

1. Syphilis fact sheet. Centers for Disease Control and Prevention website. https://www.cdc.gov/std/syphilis/stdfact-syphilis.htm. Updated June 13, 2017. Accessed March 22, 2018.

2. Lawrence P, Saxe N. Bullous secondary syphilis. Clin Exp Dermatol. 1992;17:44-46.

3. Pastuszczak M, Woźniak W, Jaworek AK, et al. Pityriasis lichenoides-like secondary syphilis and neurosyphilis in a HIV-infected patient. Postepy Dermatol Alergol. 2013;30:127-130.

4. Schnirring-Judge M, Gustaferro C, Terol C. Vesiculobullous syphilis: a case involving an unusual cutaneous manifestation of secondary syphilis [published online November 24, 2010]. J Foot Ankle Surg. 2011;50:96-101.

5. Pföhler C, Koerner R, von Müller L, et al. Lues maligna in a patient with unknown HIV infection. BMJ Case Rep. 2011. pii: bcr0520114221. doi: 10.1136/bcr.05.2011.4221.

6. Don PC, Rubinstein R, Christie S. Malignant syphilis (lues maligna) and concurrent infection with HIV. Int J Dermatol. 1995;34:403-407.

7. Tucker JD, Shah S, Jarell AD, et al. Lues maligna in early HIV infection case report and review of the literature. Sex Transm Dis. 2009;36:512-514.

A 30-year-old man who had contracted human immunodeficiency virus from a male sexual partner 4 years prior presented to the emergency department with fevers, chills, night sweats, and rhinorrhea of 2 weeks' duration. He reported that he had been off highly active antiretroviral therapy for 2 years. Physical examination revealed numerous erythematous, papulonecrotic, crusted lesions on the face, neck, chest, back, arms, and legs that had developed over the past 4 days. Fluid-filled vesicles also were noted on the arms and legs, while erythematous, indurated nodules with overlying scaling were noted on the bilateral palms and soles. The patient reported that he had been vaccinated for varicella-zoster virus as a child without primary infection.

Patients With MS May Not Receive Appropriate Medicines From Primary Care Doctors

SAN DIEGO—Patients with multiple sclerosis (MS) who are treated by primary care physicians are significantly less likely to receive disease-modifying therapies (DMTs) than patients treated by neurologists, even though they have more symptoms, according to a study reported at the ACTRIMS 2018 Forum.

Approximately 85% of patients treated by neurologists at MS centers receive DMTs, compared with 51% of those treated at primary care offices.

In addition, patients treated at primary care practices reported more symptoms across several domains. “This [finding] suggests there is a critical need for neurologists, especially MS specialists, to reach out and collaborate with these primary care providers and provide education about how to manage MS and improve both the treatment and the outcomes,” said lead study author Michael T. Halpern, MD, PhD, Associate Professor of Public Health at Temple University in Philadelphia.

Dr. Halpern and colleagues analyzed data from the Sonya Slifka Longitudinal MS Study. They focused on patients with MS who received care at MS centers (376 patients, all treated by neurologists), neurology practices (552 patients), and primary care practices (55 patients).

In the three groups, most of the patients were female (from 77% to 82%). Compared with patients treated at MS centers, those who were treated at primary care practices were more likely to be white (98% vs 82%), to have less than a college education (69% vs 42%), and to have Medicaid or veteran coverage, or be uninsured (22% vs 11%).

The rate of patients receiving DMTs was 84% at MS centers and 79% at neurology practices. About 51% of patients treated by primary care doctors received DMTs, even though they reported more symptoms in areas such as vision, walking, bowel, speech, and numbness, compared with patients in the other groups.

The study does not indicate why the patients with MS who are treated by primary care physicians are not receiving appropriate therapies, and it is not known whether the absence of treatment makes their conditions worse.

Nevertheless, it has been well documented that DMTs can reduce disease progression and relapses, Dr. Halpern said. “Individuals with MS who are not being appropriately treated are more likely to experience symptoms, relapses, and faster [accumulation of] disability.”

Primary care doctors may not be providing appropriate treatment because they lack the training and expertise to properly prescribe MS medications, said Dr. Halpern. Whatever the explanation, MS subspecialists and primary care doctors clearly need to collaborate more, he said.

—Randy Dotinga

Posttransplant cyclophosphamide helped reduce GVHD rates

SALT LAKE CITY – The combination of mycophenolate mofetil, tacrolimus, and posttransplant cyclophosphamide outperformed a contemporary control regimen for graft-versus-host disease (GVHD) prophylaxis, improving GVHD-free, relapse-free survival in a multicenter trial.

The trial’s primary aim was to compare the rate of post–hematopoietic stem cell transplant GVHD-free, relapse-free survival (GRFS) in each of the three study arms with the rate in a tacrolimus/methotrexate group that served as a “contemporary control,” Javier Bolaños-Meade, MD, said during a late-breaking abstract session of the combined annual meetings of the Center for International Blood & Marrow Transplant Research and the American Society for Blood and Marrow Transplantation.

The mycophenolate mofetil/tacrolimus/posttransplant cyclophosphamide group had a hazard ratio of 0.72 for reaching the primary endpoint – GRFS (95% confidence interval, 0.55-0.94; P = .04), compared with patients receiving the control regimen. In the study, GRFS was defined as the amount of time elapsed between transplant and any of: grade III-IV acute GVHD, chronic GVHD severe enough to require systemic therapy, disease relapse or progression, or death. Grade III-IV acute GVHD and GVHD-free survival were superior with mycophenolate mofetil/tacrolimus/posttransplant cyclophosphamide, compared with the control (P = .006 and .01, respectively).

The phase 2 trial enrolled adults aged 18-75 years who had a malignant disease and a matched donor, and were slated to receive reduced intensity conditioning. The study randomized patients 1:1:1 to one of three experimental regimens; a further 224 patients received the control tacrolimus/methotrexate regimen. In the experimental arms, 92 patients received mycophenolate mofetil/tacrolimus/posttransplant cyclophosphamide; 89 patients received tacrolimus/methotrexate/maraviroc, and 92 patients received tacrolimus/methotrexate/bortezomib.
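As a quick arithmetic check on the arm sizes reported above, the following minimal Python sketch tallies enrollment. Only the patient counts come from the article; the arm labels and variable names are illustrative assumptions, not official trial terminology.

```python
# Patient counts per arm as reported in the article. The dictionary keys
# are illustrative labels chosen for this sketch, not official arm names.
arm_sizes = {
    "MMF/tacrolimus/posttransplant cyclophosphamide": 92,
    "tacrolimus/methotrexate/maraviroc": 89,
    "tacrolimus/methotrexate/bortezomib": 92,
    "tacrolimus/methotrexate (control)": 224,
}

# Sum the three experimental arms, then all four groups together.
experimental_total = sum(
    n for arm, n in arm_sizes.items() if "(control)" not in arm
)
overall_total = sum(arm_sizes.values())

print(experimental_total)  # 273
print(overall_total)       # 497
```

The overall total of 497 agrees with the patient count given in the study details for this trial.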

“According to predetermined parameters for success, tacrolimus/mycophenolate mofetil/cyclophosphamide was superior to control in GRFS, severe acute GVHD, chronic GVHD requiring immunosuppression, and GVHD-free survival, without a negative impact on treatment-related mortality, relapse/progression, overall survival or disease-free survival,” Dr. Bolaños-Meade said.

Patients could be included in the study if they had acute leukemia, chronic myelogenous leukemia, or myelodysplastic syndrome; patients with these diagnoses could have no circulating blasts and had to have less than 10% blasts in bone marrow. Patients with chronic lymphocytic leukemia and lymphoma with sensitive disease at the time of transplant were also eligible. All patients received peripheral blood stem cells, and underwent reduced intensity conditioning.

Permissible conditioning regimens included fludarabine/busulfan dosed at 8 mg/kg or less, fludarabine/cyclophosphamide with or without total body irradiation (TBI), fludarabine/TBI at 200 cGy, or fludarabine/melphalan dosed at less than 150 mg/m2 of body surface area. Alemtuzumab and anti-thymocyte globulin were not permitted.

Patients had to have a cardiac ejection fraction greater than 40%, estimated creatinine clearance greater than 40 mL/min, bilirubin less than two times the upper limit of normal, and ALT/AST less than 2.5 times the upper limit of normal. Inclusion criteria also required adequate pulmonary function, defined as hemoglobin-corrected diffusing capacity for carbon monoxide of at least 40% and forced expiratory volume in one second of 50% or greater.

Patients’ donors had to be either siblings, or 7/8 or 8/8 human leukocyte antigen-matched unrelated donors.

The patients receiving tacrolimus/methotrexate who served as controls were also collected prospectively, from centers that were not participating in the three-arm clinical trial. These patients also received reduced intensity conditioning and a peripheral blood stem cell transplant. This arm of the study was run through the Center for International Blood & Marrow Transplant Research. “I want to stress that the entry criteria were the same as for the intervention arms of the study,” Dr. Bolaños-Meade said.

Using a baseline rate of 23% for the GRFS endpoint, Dr. Bolaños-Meade and his collaborators established the size of the intervention and control arms so that the study would have 86%-88% power to detect a 20% improvement in the rate of GRFS over the contemporary control GVHD prophylaxis.

Across all study arms, patients were a median of 64 years old and most (58%-67%) were men. A little more than half of the patients had a Karnofsky Performance Status of 90%-100%. The Hematopoietic Cell Transplantation–Comorbidity Index was 3 or greater in about 40% of patients in the intervention arms, and in 62% of those in the control arm.

The phase 2 study was not designed to compare each experimental arm against the others, but only to compare each experimental arm to the control, said Dr. Bolaños-Meade, of the department of oncology at Johns Hopkins University, Baltimore.

“The comparisons that were made in this study ... have a limited power to really show superiority,” he said, adding that the National Clinical Trials Network is beginning a phase 3 trial that directly compares posttransplant cyclophosphamide to tacrolimus/methotrexate.

Dr. Bolaños-Meade reported serving on the data safety monitoring board of Incyte.

SOURCE: Bolaños-Meade J et al. 2018 BMT Tandem Meetings, Abstract LBA1.

REPORTING FROM THE 2018 BMT TANDEM MEETINGS

Key clinical point:

Major finding: The hazard ratio for GVHD-free and relapse-free survival was 0.72 for those receiving cyclophosphamide, compared with controls (P = .04).

Study details: Randomized, controlled trial of 497 patients receiving one of three intervention arm posttransplant regimens for GVHD prophylaxis, or a control regimen of tacrolimus and methotrexate.

Disclosures: Dr. Bolaños-Meade reported serving on the data safety monitoring board of Incyte.

Source: Bolaños-Meade J et al. 2018 BMT Tandem Meetings, Abstract LBA1.

Troubleshooting Gait and Voice Problems After DBS for Parkinson’s Disease

LAS VEGAS—In patients with Parkinson’s disease, deep brain stimulation (DBS) can improve gait significantly and reduce vocal tremor. Some patients may fail to improve following implantation, however, and others who do improve may later worsen. In such cases, neurologists can address problems with gait and voice tremor using various steps to optimize DBS treatment, according to two lectures delivered at the 21st Annual Meeting of the North American Neuromodulation Society.

Refractory Gait Impairment

Gait impairment and freezing of gait may persist for years in some patients, despite DBS treatment at the traditional frequency of 130 Hz. Studies by Moreau and colleagues indicate that stimulation at 60 Hz improves these outcomes in previously refractory patients, said Helen M. Brontë-Stewart, MD, MSE, the John E. Cahill Family Professor and Director of the Stanford Movement Disorders Center at Stanford University School of Medicine in California. Research by Ricchi et al shows that low-frequency DBS also reduces gait impairment and freezing of gait in the early stages after implantation.

The factors that predict which patients will benefit from low-frequency DBS of the subthalamic nucleus (STN) are increased age, severe axial phenotype at five years after surgery, and lower preoperative levodopa responsiveness. But low-frequency DBS may not be adequate to improve other motor signs such as tremor, said Dr. Brontë-Stewart. Improvements on low-frequency DBS also may not last long.

The literature about which part of the STN should be stimulated for more effective treatment contains mixed results. Several investigations, including a 2011 study by McNeely et al, showed that high-frequency DBS is most efficacious when applied to the dorsolateral margin of STN. Other studies, including one performed by Dr. Brontë-Stewart and colleagues, indicate that stimulating the ventral area of the STN is more effective. Khoo et al found that 60-Hz stimulation was superior to 130-Hz stimulation for axial motor signs in Parkinson’s disease. “Clearly, we do not have consensus,” said Dr. Brontë-Stewart.

Postsurgical Gait Worsening

If a patient’s gait worsens shortly after DBS surgery, one possible explanation is that the leads were misplaced. Gait also could worsen if high-frequency DBS is applied outside the STN, especially in the anterior, medial, and dorsal regions, said Dr. Brontë-Stewart. If a patient’s gait and akinesia worsen with high-frequency STN DBS, but his or her tremor and rigidity improve, the cause may be diffusion of the stimulatory field into the pallido-fugal fibers before decussation of the pallido-pedunculopontine nucleus (PPN) pathway.

“The combination of STN DBS and medication may lead to lower-extremity dyskinesias,” which may account for gait worsening in some patients, said Dr. Brontë-Stewart. “It is important to look at these patients off medication. It may show that the dyskinesias are interfering with the gait studies, and whether the medication is affecting their cognition, which may also worsen gait.”

Patients’ gait and balance may worsen years after implantation. For example, stimulation-resistant axial symptoms may emerge after five years of DBS even if treatment remains effective for appendicular symptoms. This outcome may follow progression of the disease into nondopaminergic networks. Another possible cause is increased voltage, which enlarges the field of stimulation so that it involves pallido-fugal pathways, said Dr. Brontë-Stewart.

For patients with delayed worsening, Dr. Brontë-Stewart advises that “if you reprogram DBS, focusing on gait symmetry, you can improve gait, including freezing of gait. Many of us program DBS for appendicular symptoms, and we fail to do this for gait…. Perhaps use bipolar or interleaving programming to restrict field extension.”

Preoperative improvement in Unified Parkinson’s Disease Rating Scale Part III scores in response to levodopa treatment is the best predictor of the effect of DBS on gait and freezing of gait. Improvement in freezing of gait following STN DBS has, in turn, been related to reduced medication dosing and lack of worsening of cognition, concluded Dr. Brontë-Stewart.

An Initial Approach to Vocal Tremor

The literature suggests that in patients with Parkinson’s disease, STN DBS often results in deterioration of speech that may not improve when the stimulation is stopped. Predictors of vocal problems include presurgical dysarthria, duration and severity of presurgical disease progression, and contact placement around the left STN.

“There is no large evidence base upon which to work when you are trying to … deal with someone who comes to you with speech problems,” said Bryan T. Klassen, MD, Assistant Professor of Neurology at the Mayo Clinic in Rochester, Minnesota. Addressing potential speech problems before implantation “should be a major part of any DBS protocol,” he added. A neurologist should document a patient’s pre-existing speech issues carefully. At Mayo Clinic, all patients scheduled to undergo implantation visit a speech pathologist first, and the examination is recorded.

In addition, patients need to understand that vocal tremor may be a symptom of Parkinson’s disease and may not result from DBS. On the other hand, neurologists also should inform patients that inserting the leads may cause dysarthria even before the battery for the device is implanted. Patients ultimately may have to choose between optimal tremor control and optimal speech, said Dr. Klassen.

Disease-Related Vocal Abnormalities

When a patient presents with speech problems, the neurologist must determine whether they result from the disease or from stimulation. Symptoms that have responded insufficiently to DBS are likely related to the disease, as are symptoms consistent with disease progression, such as gradually progressive dysarthria. These symptoms may respond to more aggressive stimulation. A patient with worsening hypokinetic dysarthria, however, may not improve, and could worsen, with more aggressive stimulation.

No clear criteria can help a neurologist determine whether to consider abnormal speech nonresponsive to stimulation. This determination relies on clinical judgment and should be communicated clearly to the patient, said Dr. Klassen. At that point, the neurologist and patient may consider speech therapy.

Stimulation-Related Vocal Abnormalities

DBS implantation itself sometimes causes dysarthria that may improve over the course of weeks or months. Implantation also may worsen pre-existing dysarthria. “That [side effect] does not necessarily have to limit what or how you are stimulating for tremor control,” said Dr. Klassen. If the symptom results from stimulation, it will improve when stimulation is stopped. It may take as little as a few seconds or as long as several weeks for vocal abnormalities to improve, but tremor worsens while stimulation is turned off.

A neurologist should locate the source of any stimulation-dependent vocal abnormality so that he or she can focus the stimulation field away from that source. Although the left lead tends to be implicated in vocal abnormalities more often than the right lead, the neurologist needs to determine each lead’s contribution empirically by turning the leads off individually. “Depending on the washout [period], that may take more time than you would like,” said Dr. Klassen.

A review of the initial monopolar thresholds can indicate which regions along the electrode tend to affect speech the most. Postoperative imaging may help in this determination. If the patient has a prolonged washout period, the neurologist can give him or her “homework,” said Dr. Klassen. To do this, the neurologist sets the DBS device to run several programs and asks the patient to record his or her experiences in a notebook.

Optimizing the Stimulation Settings

Vocal abnormalities that arise after surgery may indicate that the stimulation parameters need to be modified. First, neurologists must choose the optimal lead location along the electrode. Eccentric steering or multiple-source current steering may reduce vocal tremor by better defining the distribution of current.

To reduce the volume of tissue activated, the neurologist can decrease the pulse width, reduce the amplitude, or switch to a bipolar configuration. If a particular setting causes side effects, reducing the voltage may increase tolerability, albeit at the expense of efficacy. Switching from a high frequency to a low frequency also may reduce vocal tremor. If it is impossible to control limb tremor and vocal abnormalities optimally with a single setting, the patient may choose the setting that provides the most acceptable overall control.

Another option is to allow the patient to switch as necessary between a program optimized for tremor control and one optimized for speech. A patient may also choose to turn stimulation on and off as needed. Finally, adjunctive speech therapy can reduce vocal tremor, said Dr. Klassen.

—Erik Greb
