
Tackling the Readmissions Problem

Virtually every hospital system in the country deals with the challenge of readmissions, especially 30-day readmissions, and it’s only getting worse. “With the changes in healthcare and length of stay becoming shorter, patients are being discharged sicker than they used to be,” says Kevin Tolliver, MD, FACP, of Sidney & Lois Eskenazi Hospital Outpatient Care Center. “At our large public hospital system in Indianapolis, we designed an Internal Medicine Transitional Care Practice with the goal of decreasing readmission rates.”

Since October 2015, patients without a primary care doctor and those with a high LACE index score (a measure of readmission risk) have been referred to the new Transitional Care clinic. The first step: While still hospitalized, they meet briefly with Dr. Tolliver, who tells them, “‘You’re a candidate for this hospital follow-up clinic; this is why we think you would benefit.’ Patients, universally, are very thankful and eager to come.” Their follow-up appointment is scheduled before they are discharged.

At that appointment, the goal is to head off anything that would put them at risk for readmission. “We have a pharmacy, social workers, substance abuse counselors, diabetes educators—it’s one-stop shopping to address their needs,” Dr. Tolliver says. “Once we ensure that they’re not at risk for readmission, we help them get back to their primary care doctor or help them get one.”

Data from the clinic’s first four months show that patients who met with Dr. Tolliver before leaving the hospital were 50% more likely to keep their follow-up visit. “That’s significant, particularly for us, because we take care of an indigent population; the no-show rate is usually our biggest challenge,” he says. Patients who were seen in the clinic had a 30-day readmission rate of about 13.9%, compared with 21.8% among those who qualified but were not seen.

“That has all kinds of positive consequences: less frustration for providers and patients and huge financial implications for the hospital system as well,” Dr. Tolliver says. “That there are these new models of post-discharge clinics out there and that there’s data suggesting that they work, particularly for a high-risk group of people, I think is worth knowing.”

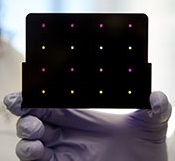

A new paper-based test for the Zika virus

based test for Zika virus.

Purple dots indicate samples

infected with Zika, and yellow

dots indicate Zika-free samples.

Photo courtesy of the Wyss

Institute at Harvard University

A new paper-based test can diagnose Zika virus infection within a few hours, according to research published in Cell.

The test is based on technology previously developed to detect the Ebola virus.

In October 2014, researchers demonstrated that they could create synthetic gene networks and embed them on small discs of paper.

These gene networks can be programmed to detect a particular genetic sequence; when that sequence is present, the paper changes color.

Upon learning about the Zika outbreak, the researchers decided to try adapting this technology to diagnose Zika.

“In a small number of weeks, we developed and validated a relatively rapid, inexpensive Zika diagnostic platform,” said study author James Collins, PhD, of the Massachusetts Institute of Technology in Cambridge.

Dr Collins and his colleagues developed sensors, embedded in the paper discs, that can detect 24 different RNA sequences found in the Zika viral genome. When the target RNA sequence is present, it initiates a series of interactions that turns the paper from yellow to purple.

This color change can be seen with the naked eye, but the researchers also developed an electronic reader that makes it easier to quantify the change, especially in cases where the sensor is detecting more than one RNA sequence.

All of the cellular components necessary for this process—including proteins, nucleic acids, and ribosomes—can be extracted from living cells and freeze-dried onto paper.

These paper discs can be stored at room temperature, making it easy to ship them to any location. Once rehydrated, all of the components function just as they would inside a living cell.

The researchers also incorporated a step that boosts the amount of viral RNA in the blood sample before it is exposed to the sensor, using a technique called nucleic acid sequence-based amplification (NASBA). This amplification step, which takes 1 to 2 hours, increases the test’s sensitivity 1 million-fold.

The team tested this diagnostic platform using synthesized RNA sequences corresponding to the Zika genome, which were then added to human blood serum.

They found the test could detect very low viral RNA concentrations in those samples and could also distinguish Zika from dengue.

The researchers then tested samples taken from monkeys infected with the Zika virus. (Samples from humans affected by the current Zika outbreak were too difficult to obtain.)

The team found that, in these samples, the test could detect viral RNA concentrations as low as 2 or 3 parts per quadrillion.

The researchers believe this approach could also be adapted to other viruses that may emerge in the future. Dr Collins hopes to team up with other scientists to further develop the technology for diagnosing Zika.

“Here, we’ve done a nice proof-of-principle demonstration, but more work and additional testing would be needed to ensure safety and efficacy before actual deployment,” he said. “We’re not far off.”

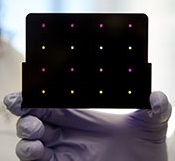

based test for Zika virus.

Purple dots indicate samples

infected with Zika, and yellow

dots indicate Zika-free samples.

Photo courtesy of the Wyss

Institute at Harvard University

A new paper-based test can diagnose Zika virus infection within a few hours, according to research published in Cell.

The test is based on technology previously developed to detect the Ebola virus.

In October 2014, researchers demonstrated that they could create synthetic gene networks and embed them on small discs of paper.

These gene networks can be programmed to detect a particular genetic sequence, which causes the paper to change color.

Upon learning about the Zika outbreak, the researchers decided to try adapting this technology to diagnose Zika.

“In a small number of weeks, we developed and validated a relatively rapid, inexpensive Zika diagnostic platform,” said study author James Collins, PhD, of the Massachusetts Institute of Technology in Cambridge.

Dr Collins and his colleagues developed sensors, embedded in the paper discs, that can detect 24 different RNA sequences found in the Zika viral genome. When the target RNA sequence is present, it initiates a series of interactions that turns the paper from yellow to purple.

This color change can be seen with the naked eye, but the researchers also developed an electronic reader that makes it easier to quantify the change, especially in cases where the sensor is detecting more than one RNA sequence.

All of the cellular components necessary for this process—including proteins, nucleic acids, and ribosomes—can be extracted from living cells and freeze-dried onto paper.

These paper discs can be stored at room temperature, making it easy to ship them to any location. Once rehydrated, all of the components function just as they would inside a living cell.

The researchers also incorporated a step that boosts the amount of viral RNA in the blood sample before exposing it to the sensor, using a system called nucleic acid sequence based amplification (NASBA). This amplification step, which takes 1 to 2 hours, increases the test’s sensitivity 1 million-fold.

The team tested this diagnostic platform using synthesized RNA sequences corresponding to the Zika genome, which were then added to human blood serum.

They found the test could detect very low viral RNA concentrations in those samples and could also distinguish Zika from dengue.

The researchers then tested samples taken from monkeys infected with the Zika virus. (Samples from humans affected by the current Zika outbreak were too difficult to obtain.)

The team found that, in these samples, the test could detect viral RNA concentrations as low as 2 or 3 parts per quadrillion.

The researchers believe this approach could also be adapted to other viruses that may emerge in the future. Dr Collins hopes to team up with other scientists to further develop the technology for diagnosing Zika.

“Here, we’ve done a nice proof-of-principle demonstration, but more work and additional testing would be needed to ensure safety and efficacy before actual deployment,” he said. “We’re not far off.” ![]()

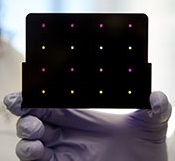

based test for Zika virus.

Purple dots indicate samples

infected with Zika, and yellow

dots indicate Zika-free samples.

Photo courtesy of the Wyss

Institute at Harvard University

A new paper-based test can diagnose Zika virus infection within a few hours, according to research published in Cell.

The test is based on technology previously developed to detect the Ebola virus.

In October 2014, researchers demonstrated that they could create synthetic gene networks and embed them on small discs of paper.

These gene networks can be programmed to detect a particular genetic sequence, which causes the paper to change color.

Upon learning about the Zika outbreak, the researchers decided to try adapting this technology to diagnose Zika.

“In a small number of weeks, we developed and validated a relatively rapid, inexpensive Zika diagnostic platform,” said study author James Collins, PhD, of the Massachusetts Institute of Technology in Cambridge.

Dr Collins and his colleagues developed sensors, embedded in the paper discs, that can detect 24 different RNA sequences found in the Zika viral genome. When the target RNA sequence is present, it initiates a series of interactions that turns the paper from yellow to purple.

This color change can be seen with the naked eye, but the researchers also developed an electronic reader that makes it easier to quantify the change, especially in cases where the sensor is detecting more than one RNA sequence.

All of the cellular components necessary for this process—including proteins, nucleic acids, and ribosomes—can be extracted from living cells and freeze-dried onto paper.

These paper discs can be stored at room temperature, making it easy to ship them to any location. Once rehydrated, all of the components function just as they would inside a living cell.

The researchers also incorporated a step that boosts the amount of viral RNA in the blood sample before exposing it to the sensor, using a system called nucleic acid sequence based amplification (NASBA). This amplification step, which takes 1 to 2 hours, increases the test’s sensitivity 1 million-fold.

The team tested this diagnostic platform using synthesized RNA sequences corresponding to the Zika genome, which were then added to human blood serum.

They found the test could detect very low viral RNA concentrations in those samples and could also distinguish Zika from dengue.

The researchers then tested samples taken from monkeys infected with the Zika virus. (Samples from humans affected by the current Zika outbreak were too difficult to obtain.)

The team found that, in these samples, the test could detect viral RNA concentrations as low as 2 or 3 parts per quadrillion.

The researchers believe this approach could also be adapted to other viruses that may emerge in the future. Dr Collins hopes to team up with other scientists to further develop the technology for diagnosing Zika.

“Here, we’ve done a nice proof-of-principle demonstration, but more work and additional testing would be needed to ensure safety and efficacy before actual deployment,” he said. “We’re not far off.” ![]()

NCI: Use of dug wells in New England linked with risk of bladder cancer

Increased risk of bladder cancer in New England may be partly due to drinking water from private wells, particularly dug wells established during the first half of the 20th century, according to researchers from the National Cancer Institute. Bladder cancer mortality rates have been elevated in northern New England for at least 5 decades. Incidence rates in Maine, New Hampshire, and Vermont are about 20% higher than in the United States overall, researchers said in a press release.

To explore reasons for the elevated risk, NCI investigators and colleagues in New England compared well water consumption, smoking, occupation, ancestry, use of wood-burning stoves, and consumption of various foods for 1,213 people in New England who were newly diagnosed with bladder cancer and 1,418 people without bladder cancer who were matched to them by geographic area.

The amount of arsenic ingested through drinking water was estimated from current arsenic levels and historical information. Increasing cumulative exposure was associated with an increasing risk of bladder cancer. Among people who used private wells, those who drank the most water had twice the risk of those who drank the least. The highest risk was seen among those who drank water from dug wells established before 1960, when the use of arsenic-based pesticides was common.

“Arsenic is an established cause of bladder cancer, largely based on observations from earlier studies in highly exposed populations,” said Debra Silverman, Sc.D., chief of the Occupational and Environmental Epidemiology Branch of the NCI, Rockville, Md., and senior author on the study. “However, emerging evidence suggests that low to moderate levels of exposure may also increase risk,” she said in the press release.

“Although smoking and employment in high-risk occupations both showed their expected associations with bladder cancer risk in this population, they were similar to those found in other populations,” Dr. Silverman said. “This suggests that neither risk factor explains the excess occurrence of bladder cancer in northern New England.”

These study results indicate that historical consumption of water from private wells, particularly dug wells in an era when arsenic-based pesticides were widely used, may have contributed to the excess bladder cancer rate among New England residents, Dr. Silverman and colleagues concluded.

The study was published in the Journal of the National Cancer Institute (2016;108[9]:djw099).

lnikolaides@frontlinemedcom.com

On Twitter @NikolaidesLaura

Amaal J. Starling, MD

The video associated with this article is no longer available on this site. Please view all of our videos on the MDedge YouTube channel

Brodalumab effective for rare, severe types of psoriasis

The investigational interleukin-17 inhibitor brodalumab was safe and effective in a small phase III Japanese study of adults with two rare and severe types of psoriasis, generalized pustular psoriasis (GPP) and psoriatic erythroderma (PsE). The results were published in the British Journal of Dermatology.

The 52-week open-label study evaluated the safety and efficacy of brodalumab in 30 Japanese adults (mean age 48 years) with GPP (12 patients) and PsE (18 patients). Brodalumab, a human monoclonal antibody against human IL-17RA that blocks the biologic activities of IL-17, was administered by subcutaneous injection. Efficacy was assessed via Clinical Global Impression of Improvement (CGI) scores, the primary endpoint (Br J Dermatol. 2016 April 23. doi: 10.1111/bjd.14702).

A high proportion of patients with either disease achieved “improved” or “remission” CGI scores at weeks 2, 12, and 52, reported Dr. Kenshi Yamasaki, of the department of dermatology at Tohoku University, Miyagi, Japan, and his associates.

At week 52, almost 92% of those with GPP and 100% of those with PsE had achieved “improved” or “remission” scores. The most common adverse event was nasopharyngitis, which occurred in one-third of patients. Infection-related adverse events were grade 1 or 2, no adverse events were fatal, and none of the five serious adverse events was considered attributable to treatment, they added. Although anti-brodalumab neutralizing antibodies were not detected, one patient tested positive for anti-brodalumab binding antibodies.

Noting that treatment with brodalumab has been associated with significant improvements in patients with plaque psoriasis and psoriatic arthritis in phase II and III studies, “results from this study confirm that brodalumab can improve patient symptoms not long after treatment is initiated,” in patients with GPP and PsE, the authors concluded. While acknowledging the study limitations, including the open-label design and a small sample size, they added, “IL-17RA blocking will be a promising therapeutic target in patients with GPP and PsE.”

The safety profile and the low incidence of anti-brodalumab antibodies indicate that brodalumab is suitable for long-term use, they said.

The study was funded by Kyowa Hakko Kirin. All authors disclosed ties to pharmaceutical companies, including the funding source; one author is an employee of the company.

FROM THE BRITISH JOURNAL OF DERMATOLOGY

Key clinical point: The interleukin-17 inhibitor brodalumab significantly improved symptoms of generalized pustular psoriasis and psoriatic erythroderma in a small, open-label Japanese study.

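Major finding: At week 52, almost 92% of brodalumab-treated patients with generalized pustular psoriasis (GPP) and 100% of those with psoriatic erythroderma (PsE) had achieved “improved” or “remission” Clinical Global Impression of Improvement scores, and none of the serious adverse events was attributed to treatment.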

Data sources: The phase III open-label multicenter study evaluated the safety and efficacy of brodalumab in 30 adults with GPP or PsE over 52 weeks.

Disclosures: Funding was provided by Kyowa Hakko Kirin. All authors disclosed ties to industry sources, including the funding source.

Reassurance on cardiovascular safety of Breo Ellipta for COPD

CHICAGO – Using an inhaled long-acting beta agonist and corticosteroid to treat patients with moderate chronic obstructive pulmonary disease who have or are at high risk for cardiovascular disease had no impact on the cardiovascular event rate in the landmark SUMMIT trial, Dr. David E. Newby reported at the annual meeting of the American College of Cardiology.

SUMMIT (the Study to Understand Mortality and Morbidity) was a randomized, double-blind, placebo-controlled, 43-country, four-arm clinical trial in which 16,485 patients with moderate COPD were assigned to once-daily inhaled fluticasone furoate/vilanterol 100/25 mcg by dry powder inhaler (Breo Ellipta), either drug alone, or placebo for an average of 2 years. Two-thirds of participants had overt cardiovascular disease; the rest were at high risk, defined as age greater than 60 years plus two or more cardiovascular risk factors.

This was the first large prospective clinical trial to investigate the impact of respiratory therapy on survival in patients with two commonly comorbid conditions. The incidence of major adverse cardiovascular events – a prespecified secondary endpoint – was of major interest because some other long-acting beta agonists have been linked to increased cardiovascular risk, explained Dr. Newby of the University of Edinburgh.

The primary endpoint in SUMMIT was all-cause mortality. The 12.2% relative risk reduction in the fluticasone furoate/vilanterol group, compared with placebo, didn’t achieve statistical significance. Nor did the 7.4% reduction in the corticosteroid/LABA group in the secondary composite endpoint of cardiovascular death, MI, stroke, unstable angina, or TIA; the absence of any increase in cardiovascular events is reassuring from a safety standpoint, he noted.

A positive result was seen for another secondary endpoint: the rate of lung function decline as measured by forced expiratory volume in 1 second. The rate of decline was 8 mL/year less with fluticasone furoate/vilanterol, compared with placebo.

The Breo Ellipta group also had significantly lower rates of moderate or severe COPD exacerbations and of hospitalization for exacerbations, as well as better quality of life as measured by the St. George’s Respiratory Questionnaire – COPD total score at 12 months.

The SUMMIT trial was sponsored by GlaxoSmithKline. The presenter is a consultant to GSK and eight other pharmaceutical companies.

AT ACC 16

Key clinical point: Once-daily inhaled fluticasone furoate/vilanterol 100/25 mcg to treat moderate COPD in patients with comorbid cardiovascular disease did not increase their risk of cardiovascular events.

Major finding: The major adverse cardiovascular event rate in patients on once-daily inhaled fluticasone furoate/vilanterol 100/25 mcg was 7.4% less than with placebo, a nonsignificant difference.

Data source: This was a randomized, double-blind, placebo-controlled, 2-year clinical trial including 16,485 patients with moderate COPD and overt cardiovascular disease or at high risk for it.

Disclosures: The SUMMIT trial was sponsored by GlaxoSmithKline. The presenter is a consultant to GSK and eight other pharmaceutical companies.

What’s Less Noticeable: A Straight Scar or a Zigzag Scar?

One of the determinants of a successful surgical outcome is the patient’s perception of the scar’s cosmesis. Z-plasty is an accepted means of breaking a scar up into smaller geometric segments, and in some instances it is used in scar revision to elongate a scar that may be pulling. However, a study published online in JAMA Facial Plastic Surgery on April 7 notes a lack of studies measuring how the general population perceives these scars after surgery.

Ratnarathorn et al designed a prospective Internet-based survey with a goal of 580 responses to give a power of 90%, and distributed it to a diverse sample of the US population. Using editing software, they superimposed a mature linear scar and a mature zigzag scar onto standardized headshots of 4 individuals (2 males, 2 females). For each individual, 1 image of the linear scar and 1 image of the zigzag scar were superimposed onto each of 3 anatomical areas: the forehead (flat surface), cheek (convex surface), and temple (concave surface). This yielded 24 images for the respondents to assess.

Of 3575 surveys distributed, 876 were returned (a 24.5% response rate), and 810 of the 876 respondents (92.5%) completed the survey (46.1% male, 53.9% female). Respondents were asked to rate the scars on a scale of 1 to 10 (1=normal skin; 10=worst scar imaginable).

Ratings were significantly lower (better) for the linear scars than for the zigzag scars in all 3 anatomic areas and across both male and female groups, with a mean score of 2.9 versus 4.5 (P<.001). In a multivariable regression model, respondent age, sex, educational level, and income had no statistically significant effect on scar ratings.

What’s the issue?

This study highlights some interesting points. In an academic practice, we often find ourselves teaching residents a variety of skin closure techniques for defects left by skin cancer excisions. It is both challenging and fun to design complex closures; however, we must keep in mind what is in the best interest of the patient. One point I try to emphasize is that there are no true straight lines on the face. When scars from procedures appear as geometric shapes on the face, our eyes tend to be drawn to them, so it often is best to use curvilinear lines wherever possible. The findings of Ratnarathorn et al highlight exactly that point. More studies of this nature are needed to assess what both physicians and patients perceive as a successful outcome.

As you follow your patients over the long term, have you found yourself creating more or fewer zigzag scars?

Why we should strive for a vaginal hysterectomy rate of 40%

One of the great honors of my professional career was being nominated to the presidency of the Society of Gynecologic Surgeons and being given the opportunity to deliver the presidential address at the Society’s 42nd annual scientific meeting in Palm Springs, Calif.

One of the core principles of the SGS mission statement is supporting excellence in gynecologic surgery and, to that end, the main focus of my term was to address the decline in vaginal hysterectomy rates. What follows is an excerpt from my speech explaining the rationale for vaginal hysterectomy (VH) and steps the SGS is taking to reverse the decline.

Unfortunately, today’s practice environment has seen declining use of vaginal hysterectomy, with concomitant increases in endoscopic hysterectomy, mostly with robotic assistance. As president of a society previously known as the Vaginal Surgeons Society, I have, not surprisingly, been accused of being “anti-robot.”

Nothing could be further from the truth.

When we talk about the surgical treatment of patients with endometrial and cervical cancer, I do not need a randomized clinical trial to know that avoiding a laparotomy incision is probably a good thing for these patients. There are benefits to using robotic techniques in this subpopulation: the approach is cost effective because of reduced morbidity, and straight-stick laparoscopy is difficult to perform in these patients, so it has not been as widely performed or published. I believe that robotic hysterectomy for these disorders should be the standard of care. In this regard, I am pro-robot (Gynecol Oncol. 2015;138[2]:457-71).

On the other hand, I also don’t need a randomized trial (even though randomized trials exist) to know that if you have a choice to make, or not make, extra incisions during surgery, it’s better to not make the extra incisions.

It’s certainly not rocket science to know that a Zeppelin or Heaney clamp is orders of magnitude cheaper than the equipment required to perform an endoscopic hysterectomy. The clamp costs $22.25, plus $3.19 per case to process (Am J Obstet Gynecol. 2016;214[4]:S461-2).

Level I evidence demonstrates that, compared with other minimally invasive hysterectomy techniques, vaginal hysterectomy is cheaper, convalescence is the same or shorter, and complication rates are lower (Cochrane Database Syst Rev. 2015 Aug. 12;8:CD003677).

Moreover, if you don’t place a port, you can’t get a port site complication (these complications are rare, but potentially serious when they occur). You can’t perforate the common iliac vein. You can’t put a Veress needle through the small bowel. You can’t get a Richter’s hernia. And finally, while cuff dehiscence can occur after vaginal hysterectomy, I’ve never seen it, whereas it is a real issue with the endoscopic approaches (Cochrane Database Syst Rev. 2012 Feb. 15;2:CD006583).

This isn’t just my opinion. Every major surgical society has recommended vaginal hysterectomy when technically feasible.

Of course, “technical feasibility” is the kicker, and it’s important to ask what this means.

First, we have to look at what I call the hysterectomy continuum. At one end are young, sexually active women with uterovaginal procidentia, for whom an endoscopic approach for sacral colpopexy might be considered. Then there are patients who are vaginally parous, have a mobile uterus less than 12 weeks in size, and have a basic gynecologic condition such as dysfunctional uterine bleeding, cervical intraepithelial neoplasia, or painful menses (about 40%-50% of patients when I reviewed internal North Valley Permanente Group data in 2012); these patients are certainly excellent candidates for vaginal hysterectomy. At the other end are patients with a 30-week-size fibroid uterus, three prior C-sections, and known stage 4 endometriosis, for whom an open or robotic approach would be justified.

Second, we have to address the contradictory data in the literature regarding vaginal hysterectomy rates. On one hand, data from large case series and randomized, controlled trials demonstrate that it’s feasible to perform a high percentage of hysterectomies vaginally (Obstet Gynecol. 2004;103[6]:1321-5 and Arch Gynecol Obstet. 2014;290[3]:485-91). On the other hand, 40 years of population data show the opposite (Obstet Gynecol. 2009;114[5]:1041-8).

In the pre-endoscopic era, 80% of hysterectomies were performed via the abdominal route and 20% via the vaginal route. During the laparoscopic era, 64%, 22%, and 14% of hysterectomies were performed via the abdominal, vaginal, and laparoscopic routes, respectively. In the current robotic era, the figures are 32%, 16%, 28%, and 25% for the abdominal, vaginal, laparoscopic, and robotic routes, respectively.

During this 40-year time frame, despite data and recommendations that support vaginal hysterectomy, there has never been an obvious incentive to perform this procedure. To my knowledge, no one has ever been paid more to do a vaginal hysterectomy, or been prominently featured on a hospital’s website for his or her ability to perform an “incision-less” hysterectomy (Am J Obstet Gynecol. 2012;207[3]:174.e1-174.e7). Why weren’t we, and why aren’t we, outraged about this? I have always been under the impression that cheaper and safer is better!

The first explanation I hear for this, mostly from robotic surgeons and robotic surgery device sales representatives, is that the decline in the proportion of vaginal hysterectomies is irrelevant because it took the robot to meaningfully reduce open hysterectomy rates. The other argument I hear, mostly from laparoscopic surgeons, is that vaginal hysterectomy rates have not changed because most gynecologists cannot and will never be able to perform the procedure. So, what is the point of even discussing solutions?

I disagree with the laparoscopic and robotic surgeons. We should be outraged and do something to effect change. Vaginal hysterectomy offers better value (for surgeons who aren’t thinking about value right now, I suggest that you start; value-based reimbursement is coming soon), and we know that a high percentage of hysterectomies can feasibly be performed vaginally in general gynecologic populations. Surgeons who perform vaginal hysterectomy are not magicians or better surgeons, just differently trained. We have to recognize that many, or even most, patients are candidates for vaginal hysterectomy.

Finally, when we look at robotics for benign disease, we spend more money than with other minimally invasive hysterectomy techniques but don’t get better outcomes (J Minim Invasive Gynecol. 2010;17[6]:730-8 and Eur J Obstet Gynecol Reprod Biol. 150[1]:92-6). Yet surgeons currently use robotics for 25% or more of benign hysterectomies.

What are we thinking and how can we afford to continue this?

We need to counsel our patients (and ourselves) that a total hysterectomy requires an incision in the vagina, and there can be a need for additional abdominal incisions of varying size and number. Fully informed consent must include a discussion of all types of hysterectomy including both patient and surgeon factors associated with the recommended route. Ultimately, the route of hysterectomy should be based on the patient and not the surgeon (Obstet Gynecol. 2014;124[3]:585-8).

It is easy to say, and the evidence supports, that we should do more vaginal hysterectomies. It is also easy to note that the rate of vaginal hysterectomy has been stable to declining over the last 4 decades and that there are significant issues with residency training in gynecologic surgery (serious issues, but beyond the scope of this editorial).

So, what are we at SGS doing to support increased rates of vaginal hysterectomy? Every December we sponsor a postgraduate course on vaginal hysterectomy techniques. This is an excellent learning opportunity. (Visit www.sgsonline.org for more information regarding dates and costs.) We’re starting partnerships with the American College of Obstetricians and Gynecologists (ACOG), the Foundation for Exxcellence in Women’s Health, and others to begin a “train the trainer” program to teach junior faculty how to do and teach vaginal hysterectomy. We’ve developed CREOG (Council on Resident Education in Obstetrics and Gynecology) modules to educate residents about the procedure, and we are in the process of communicating with residency and fellowship program directors about what else we can do to assist them with vaginal hysterectomy teaching. Other goals are to work with ACOG to develop quality metrics for hysterectomy and to develop physician-focused alternative payment models that recognize the value of vaginal hysterectomy.

I believe that in this country we should train for, incentivize, and insist upon a vaginal hysterectomy rate of at least 40% (this admittedly arbitrary percentage is based upon the majority of vaginally parous women with uteri less than 12 weeks in size, plus a minority of the more difficult patients, undergoing vaginal hysterectomy). And before you say “it’s never been 40%,” please consider the famous quotation by Dr. William Mayo: “The best interest of the patient is the only interest to be considered.” Clearly, the best interest of the patient, if she is a candidate, is to have a vaginal hysterectomy. Our mission at SGS is to facilitate surgical education to make more patients candidates for vaginal hysterectomy so that we can achieve the 40% goal.

Dr. Walter is director of urogynecology and pelvic pain at The Permanente Medical Group, Roseville, Calif. He is also the immediate past president of the Society of Gynecologic Surgeons. He reported having no financial disclosures.

One of the great honors of my professional career was being nominated to the presidency of the Society of Gynecologic Surgeons and being given the opportunity to deliver the presidential address at the Society’s 42nd annual scientific meeting in Palm Springs, Calif.

One of the core principles of the SGS mission statement is supporting excellence in gynecologic surgery and, to that end, the main focus of my term was to address the decline in vaginal hysterectomy rates. What follows is an excerpt from my speech explaining the rationale for vaginal hysterectomy (VH) and steps the SGS is taking to reverse the decline.

Unfortunately, what is happening in today’s practice environment is declining use of vaginal hysterectomy, with concomitant increases in endoscopic hysterectomy, mostly with the use of robotic assistance. Being the president of a society previously known as the Vaginal Surgeons Society, it would not be surprising to hear that I have been accused of being “anti-robot.”

Nothing could be further from the truth.

When we talk about the surgical treatment of patients with endometrial and cervical cancer, I do not need a randomized clinical trial to know that not making a laparotomy incision is probably a good thing when you’re treating these patients. There are benefits to using robotic techniques in this subpopulation; it is cost effective due to the reduced morbidity and straight stick laparoscopy for these patients is difficult to perform; therefore it’s not been as widely published or performed. I believe that robotic hysterectomy for these disorders should be the standard of care. In this regard, I am pro robot (Gynecologic Oncol. 2015;138[2]:457-71).

On the other hand, I also don’t need a randomized trial (even though randomized trials exist) to know that if you have a choice to make, or not make, extra incisions during surgery, it’s better to not make the extra incisions.

It’s certainly not rocket science to know that a Zeppelin or Heaney clamp is orders of magnitude cheaper than equipment required to perform an endoscopic hysterectomy – $22.25 USD for instrument and $3.19 USD to process per case (Am J Obstet Gynecol. 2016;214[4]:S461-2]).

Level I evidence demonstrates that when compared to other minimally invasive hysterectomy techniques, vaginal hysterectomy is cheaper, the convalescence is stable or reduced, and the complication rates are lower (Cochrane Database Syst Rev. 2015 Aug. 12;8:CD003677).

Moreover, if you don’t place a port, you can’t get a port site complication (these complications are rare, but potentially serious when they occur). You can’t perforate the common iliac vein. You can’t put a Veress needle through the small bowel. You can’t get a Richter’s hernia. And finally, while you can get cuff dehiscence with vaginal hysterectomy, I’ve never seen it, and this is a real issue with the endoscopic approaches (Cochrane Database Syst Rev. 2012 Feb. 15;2:CD006583 ).

This isn’t just my opinion. Every major surgical society has recommended vaginal hysterectomy when technically feasible.

Of course, “technical feasibility” is the kicker and it’s important to ask what this means.

First, we have to look at what I call the hysterectomy continuum. There are the young, sexually-active women with uterovaginal procidentia where an endoscopic approach for sacral colpopexy might be considered. Then you have patients who are vaginally parous, have a mobile uterus less than 12 weeks in size, and have a basic gynecologic condition such as dysfunctional uterine bleeding, cervical intraepithelial neoplasia, or painful menses (this is about 40%-50% of patients when I reviewed internal North Valley Permanente Group data in 2012); these patients are certainly excellent candidates for vaginal hysterectomy. Then there are patients with 30-week-size fibroid uterus, three prior C-sections, and known stage 4 endometriosis (where an open or robotic approach would be justified).

Second, we have to address the contradictory data presented in the literature regarding vaginal hysterectomy rates. On one hand, we have data from large case series and randomized, controlled trials which demonstrate that it’s feasible to perform a high percentage of vaginal hysterectomies (Obstet Gynecol. 2004;103[6]:1321-5and Arch Gynecol Obstet. 2014;290[3]:485-91). On the other hand, 40 years of population data show the opposite (Obstet Gynecol. 2009;114[5]:1041-8).

In the pre-endoscopic era, 80% and 20% of hysterectomies were performed via the abdominal and vaginal routes, respectively. During the laparoscopic era, 64%, 22%, and 14% of hysterectomies were performed via the abdominal, vaginal, and laparoscopic routes, respectively. And during the current robotic era, it is now 32%, 16%, 28%, and 25% performed via the abdominal, vaginal, laparoscopic, and robotic routes, respectively.

During this 40-year time frame, despite data and recommendations that support vaginal hysterectomy, there has never been an obvious incentive to perform this procedure (e.g. to my knowledge, no one has ever been paid more to do a vaginal hysterectomy, or been prominently featured on a hospital’s website regarding his or her ability to perform an “incision-less” hysterectomy (Am J Obstet Gynecol. 2012;207[3]:174.e1-174.e7). Why weren’t and why aren’t we outraged about this? I have always been under the impression that cheaper and safer is better!

The first thing I hear to explain this – mostly from robotic surgeons and from the robotic surgery device sales representatives – is that the decline in the proportion of vaginal hysterectomies is irrelevant in that it has taken the robot to meaningfully reduce open hysterectomy rates. The other argument I hear – mostly from the laparoscopic surgeons – is that vaginal hysterectomy rates have not changed because most gynecologists cannot and will never be able to perform the procedure. So, what is the point of even discussing solutions?

I disagree with the laparoscopic and robotic surgeons. We should be outraged and do something to effect change. Vaginal hysterectomy offers better value (surgeons who aren’t thinking about value right now should start; value-based reimbursement is coming soon), and we know that a high percentage of vaginal hysterectomies are feasible in general gynecologic populations. Surgeons who perform vaginal hysterectomy are not magicians or better surgeons, just differently trained. We have to recognize that many, or even most, patients are candidates for vaginal hysterectomy.

Finally, when we look at robotics for benign disease, we spend more money than on other minimally invasive hysterectomy techniques, but we don’t get better outcomes (J Minim Invasive Gynecol. 2010;17[6]:730-8 and Eur J Obstet Gynecol Reprod Biol. 150[1]:92-6). Yet surgeons currently use robotics for 25% or more of benign hysterectomies.

What are we thinking and how can we afford to continue this?

We need to counsel our patients (and ourselves) that a total hysterectomy requires an incision in the vagina, and there can be a need for additional abdominal incisions of varying size and number. Fully informed consent must include a discussion of all types of hysterectomy including both patient and surgeon factors associated with the recommended route. Ultimately, the route of hysterectomy should be based on the patient and not the surgeon (Obstet Gynecol. 2014;124[3]:585-8).

It is easy to say, and the evidence supports, that we should do more vaginal hysterectomies. It is also easy to note that the rate of vaginal hysterectomy has been stable to declining over the last four decades and that there are significant issues with residency training in gynecologic surgery (serious issues, but beyond the scope of this editorial).

So, what are we at SGS doing to support increased rates of vaginal hysterectomy? Every December we sponsor a postgraduate course on vaginal hysterectomy techniques. This is an excellent learning opportunity. (Visit www.sgsonline.org for more information regarding dates and costs.) We’re starting partnerships with the American College of Obstetricians and Gynecologists (ACOG), the Foundation for Exxcellence in Women’s Health, and others to begin a “train the trainer” program that teaches junior faculty how to perform and teach vaginal hysterectomy. We’ve developed CREOG (Council on Resident Education in Obstetrics and Gynecology) modules to educate residents about the procedure, and we are communicating with residency and fellowship program directors about what else we can do to assist them with vaginal hysterectomy teaching. Other goals are to work with ACOG to develop quality metrics for hysterectomy and to develop physician-focused alternative payment models that recognize the value of vaginal hysterectomy.

I believe that in this country we should train for, incentivize, and insist upon a vaginal hysterectomy rate of at least 40% (this admittedly arbitrary percentage assumes that the majority of vaginally parous women with uteri less than 12 weeks in size, and a minority of the more difficult patients, undergo vaginal hysterectomy). And before you say “it’s never been 40%,” please consider the famous quotation by Dr. William Mayo: “The best interest of the patient is the only interest to be considered.” Clearly, the best interest of the patient, if she is a candidate, is to have a vaginal hysterectomy. Our mission at SGS is to facilitate surgical education to make more patients candidates for vaginal hysterectomy so that we can achieve the 40% goal.

Dr. Walter is director of urogynecology and pelvic pain at The Permanente Medical Group, Roseville, Calif. He is also the immediate past president of the Society of Gynecologic Surgeons. He reported having no financial disclosures.

USDA to release more funds for antibiotic resistance research

The U.S. Department of Agriculture has made $6 million available through its Agriculture and Food Research Initiative to fund research on antimicrobial resistance.

“The research projects funded through this announcement will help us succeed in our efforts to preserve the effectiveness of antibiotics and protect public health,” said U.S. Agriculture Secretary Tom Vilsack in a statement.