Liposomal Irinotecan May Be Effective in Later Lines for Pancreatic Cancer

“The selection of later lines of chemotherapy regimens should be based on the differential safety profile, patient status, the cost of treatment, and health‐related quality of life,” reported lead author Amol Gupta, MD, from the Sidney Kimmel Comprehensive Cancer Center at the Johns Hopkins Hospital in Baltimore.

The findings, published in Cancer on July 10, 2024, are “noteworthy with respect to calling attention to this important situation and the role of a new drug in the PDAC pharmacologic armamentarium,” Vincent J. Picozzi, MD, from the Division of Hematology‐Oncology at Virginia Mason Medical Center in Seattle, wrote in an accompanying editorial.

Treatment of pancreatic ductal adenocarcinoma (PDAC) remains a challenge, the authors noted, because most cases are diagnosed at a locally advanced or metastatic stage, when available treatments offer only modest benefit and carry significant toxicity.

The introduction of first‐line FOLFIRINOX (fluorouracil [5‐FU], leucovorin [LV], irinotecan [IRI], and oxaliplatin) and of gemcitabine plus nanoparticle albumin‐bound (nab)‐paclitaxel “has improved the survival of patients with advanced PDAC and increased the number of patients eligible for subsequent therapies” beyond the first line, according to the authors. Even so, survival with FOLFIRINOX and gemcitabine‐based therapy remains poor, with median overall survival (OS) of 6 and 7.6 months, respectively, they noted.

Timing Liposomal Irinotecan

The liposomal formulation of IRI (nal-IRI) offers improved pharmacokinetics and reduced toxicity, but its use in later lines of therapy is sometimes limited by concern that prior exposure to conventional IRI in FOLFIRINOX could produce cross-resistance and blunt the benefit of nal-IRI, the authors explained.

To examine this question, the researchers analyzed eight retrospective chart reviews published through April 2023 that included a total of 1368 patients treated with nal-IRI for locally advanced or metastatic PDAC. Average patient age across studies ranged from 57.8 to 65 years, and the proportion of male patients ranged from 45.7% to 68.6%. Study sample sizes ranged from 29 to 675 patients, with follow-up ranging from 6 to 12.9 months.

Across studies, between 84.5% and 100% of patients had received two or more prior lines of therapy, and prior IRI exposure ranged from 20.9% to 100%. In total, 499 patients had prior IRI exposure, mostly in the first-line setting. Where a reason was reported, most patients had discontinued IRI because of disease progression.

Across all patients, pooled median progression-free survival (PFS) and OS were 2.02 months and 4.26 months, respectively. Outcomes were comparable between patients with and without prior IRI exposure for both PFS (hazard ratio [HR], 1.17; 95% CI, 0.94-1.47; P = .17) and OS (HR, 1.16; 95% CI, 0.95-1.42; P = .16). This held true regardless of whether patients had progressed on conventional IRI: PFS and OS in patients who discontinued conventional IRI because of progressive disease were comparable with those in patients who did not have progressive disease (HR, 1.50 and 1.70, respectively).
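The "comparable outcomes" conclusion rests on each 95% CI including 1.0, the null value for a hazard ratio. The short Python sketch below makes that check explicit using only the pooled figures reported above; the function name `ci_excludes_null` is illustrative, and a CI check like this is a simplification, not a reanalysis of the pooled data.

```python
def ci_excludes_null(lower: float, upper: float, null: float = 1.0) -> bool:
    """Return True if a confidence interval excludes the null value.

    For ratio measures such as hazard ratios the null is 1.0; an interval
    that contains 1.0 is consistent with no difference between groups.
    """
    return not (lower <= null <= upper)


# Prior IRI exposure vs no prior exposure: (HR, lower 95% CI, upper 95% CI).
pooled = {
    "PFS": (1.17, 0.94, 1.47),
    "OS": (1.16, 0.95, 1.42),
}

for endpoint, (hr, lo, hi) in pooled.items():
    verdict = "excludes" if ci_excludes_null(lo, hi) else "includes"
    print(f"{endpoint}: HR {hr:.2f} (95% CI {lo:.2f}-{hi:.2f}) {verdict} 1.0")
```

Both intervals include 1.0, which matches the nonsignificant P values of .17 and .16.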

The authors reported substantial variation in predictors of nal-IRI outcomes across the included studies. One study reported numerically, but not statistically significantly, better PFS and OS with longer IRI exposure and a higher cumulative dose of prior IRI. Two studies suggested worse PFS and OS when nal-IRI was given in later treatment lines, although this was not reflected in adjusted HRs. Treatment sequence was a significant predictor of outcome, as were surgery, metastatic disease, bone and liver metastases, serum albumin < 40 g/L, a neutrophil‐to-lymphocyte ratio > 5, and an elevated baseline carbohydrate antigen 19‐9 level.

“There are several reasons to consider why nal‐IRI may be effective after standard IRI has failed to be so,” wrote Dr. Picozzi, pointing to differences in pharmacokinetics or an improved tumor tissue/normal tissue exposure ratio as possible explanations. “However, as the authors point out, the results from this pooled, retrospective review could also simply be the result of methodological bias (eg, selection bias) or patient selection,” he added.

“Perhaps the best way of considering the results of the analysis (remembering that the median PFS was essentially the same as the first restaging visit) is that nal‐IRI may be just another in a list of suboptimal treatment options in this situation,” he wrote. “If its inherent activity is very low in third‐line treatment (like all other agents to date), the use and response to prior standard IRI may be largely irrelevant.”

Consequently, he agrees with the authors that prior IRI exposure should not influence the choice of third-line and later treatment for advanced PDAC.

First-Line Liposomal Irinotecan Approved

In February 2024, the US Food and Drug Administration (FDA) approved nal-IRI (Onivyde) as part of a new first-line regimen for metastatic PDAC. The regimen, NALIRIFOX, substitutes nal-IRI for the conventional IRI in FOLFIRINOX, raising the per-cycle cost more than 15-fold, from around $500 to $7800.
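The "more than 15-fold" figure follows directly from the two approximate per-cycle costs quoted above. This minimal Python sketch just divides them; the costs are the article's rounded figures, and actual costs will vary with payer, dosing, and pricing.

```python
# Approximate per-cycle costs quoted in the article (US dollars).
conventional_iri_cost = 500
nal_iri_cost = 7800

# Fold increase = new cost / old cost.
fold_increase = nal_iri_cost / conventional_iri_cost
print(f"nal-IRI costs about {fold_increase:.1f} times as much per cycle")
```

With these inputs the ratio is 15.6, consistent with "more than 15-fold."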

The approval was based on the NAPOLI-3 study, which did not compare NALIRIFOX with FOLFIRINOX but instead compared it with nab‐paclitaxel plus gemcitabine. NALIRIFOX was associated with longer OS (HR, 0.83; 95% CI, 0.70-0.99; P = .04) and PFS (HR, 0.69; 95% CI, 0.58-0.83; P < .001).
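A hazard ratio translates into a relative change in the hazard as (HR − 1) × 100%, so an HR of 0.83 corresponds to roughly a 17% lower hazard of death and an HR of 0.69 to roughly a 31% lower hazard of progression or death, assuming proportional hazards. The Python sketch below performs that conversion; `hr_to_percent_change` is an illustrative helper, and the result is a relative effect, not an absolute gain in months of survival.

```python
def hr_to_percent_change(hr: float) -> float:
    """Percent change in hazard implied by a hazard ratio (negative = lower)."""
    return (hr - 1.0) * 100.0


# NAPOLI-3 hazard ratios for NALIRIFOX vs nab-paclitaxel plus gemcitabine.
napoli3 = {"OS": 0.83, "PFS": 0.69}

for endpoint, hr in napoli3.items():
    print(f"{endpoint}: HR {hr:.2f} -> {hr_to_percent_change(hr):+.0f}% hazard")
```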

The absence of a head-to-head comparison with FOLFIRINOX has left some oncologists debating whether NALIRIFOX should become a new standard first-line treatment or whether its cost outweighs its potential benefits.

One study author reported grants/contracts from AbbVie, Bristol Myers Squibb, Curegenix, Medivir, Merck, and Nouscom; personal/consulting fees from Bayer, Catenion, G1 Therapeutics, Janssen Pharmaceuticals, Merck, Merus, Nouscom, Regeneron, Sirtex Medical Inc., Tango Therapeutics, and Tavotek Biotherapeutics; and support for other professional activities from Bristol Myers Squibb and Merck outside the submitted work. Another study author reported personal/consulting fees from Astellas Pharma, AstraZeneca, IDEAYA Biosciences, Merck, Merus, Moderna, RenovoRx, Seattle Genetics, and TriSalus Life Sciences outside the submitted work. The remaining authors and Dr. Picozzi disclosed no conflicts of interest.

A version of this article first appeared on Medscape.com.

FROM CANCER

Elinzanetant Reduces Menopausal Symptoms

TOPLINE:

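Elinzanetant reduced the frequency of moderate to severe vasomotor symptoms in postmenopausal individuals compared with placebo. The drug also improved sleep disturbances and menopause-related quality of life, with a favorable safety profile.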

METHODOLOGY:

- Researchers conducted two randomized, double-blind, placebo-controlled phase 3 trials (OASIS 1 and 2) across 77 sites in the United States, Europe, Canada, and Israel.

- A total of 796 postmenopausal participants aged 40-65 years experiencing moderate to severe vasomotor symptoms were included.

- Participants received either 120 mg of elinzanetant or placebo once daily for 12 weeks, after which all participants received elinzanetant for an additional 14 weeks.

- The primary outcomes were changes in the frequency and severity of vasomotor symptoms from baseline to weeks 4 and 12, recorded in an electronic hot flash daily diary.

- Secondary outcomes included changes in sleep disturbances and menopause-related quality of life, assessed using the Patient-Reported Outcomes Measurement Information System Sleep Disturbance–Short Form 8b (PROMIS SD SF 8b) and Menopause-Specific Quality of Life (MENQOL) questionnaires.

TAKEAWAY:

- Elinzanetant significantly reduced the frequency of vasomotor symptoms by week 4 (OASIS 1: −3.3 [95% CI, −4.5 to −2.1]; OASIS 2: −3.0 [95% CI, −4.4 to −1.7]; P < .001).

- By week 12, elinzanetant further reduced vasomotor symptom frequency (OASIS 1: −3.2 [95% CI, −4.8 to −1.6]; OASIS 2: −3.2 [95% CI, −4.6 to −1.9]; P < .001).

- Elinzanetant improved sleep disturbances, with significant reductions in PROMIS SD SF 8b total T scores at week 12 (OASIS 1: −5.6 [95% CI, −7.2 to −4.0]; OASIS 2: −4.3 [95% CI, −5.8 to −2.9]; P < .001).

- Menopause-related quality of life also improved significantly with elinzanetant, as indicated by reductions in MENQOL total scores at week 12 (OASIS 1: −0.4 [95% CI, −0.6 to −0.2]; OASIS 2: −0.3 [95% CI, −0.5 to −0.1]; P = .0059).
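For estimates on a difference scale, such as the week 12 values above, the null value is 0, and a 95% CI lying entirely below 0 indicates a significant reduction. The Python sketch below checks this for each week 12 estimate, assuming (as the takeaways imply) that each figure is a between-group difference in which negative values favor elinzanetant; lower diary counts, PROMIS T scores, and MENQOL scores all indicate fewer symptoms. The helper name `ci_below_zero` is illustrative.

```python
# Week 12 estimates as reported: (estimate, lower 95% CI, upper 95% CI).
week12 = {
    "VMS frequency, OASIS 1": (-3.2, -4.8, -1.6),
    "VMS frequency, OASIS 2": (-3.2, -4.6, -1.9),
    "PROMIS SD SF 8b, OASIS 1": (-5.6, -7.2, -4.0),
    "PROMIS SD SF 8b, OASIS 2": (-4.3, -5.8, -2.9),
    "MENQOL, OASIS 1": (-0.4, -0.6, -0.2),
    "MENQOL, OASIS 2": (-0.3, -0.5, -0.1),
}


def ci_below_zero(lower: float, upper: float) -> bool:
    """True if the whole interval lies below 0 (a significant reduction)."""
    return lower <= upper < 0.0


all_favor = all(ci_below_zero(lo, hi) for _, lo, hi in week12.values())
print(all_favor)
```

Every upper bound is negative, so the check returns True for all six estimates.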

IN PRACTICE:

“These results have clinically relevant implications because vasomotor symptoms often pose significant impacts on menopausal individuals’ overall health, everyday activities, sleep, quality of life, and work productivity,” wrote the study authors.

SOURCE:

The studies were led by JoAnn V. Pinkerton, MD, MSCP, University of Virginia Health in Charlottesville, and James A. Simon, MD, MSCP, George Washington University in Washington, DC. The results were published online in JAMA.

LIMITATIONS:

The OASIS 1 and 2 trials included only postmenopausal individuals, which may limit the generalizability of the findings to other populations. The trials relied on patient-reported outcomes, which are subject to individual perception and may introduce bias. The placebo response observed was consistent with that seen in other vasomotor symptom studies, which could affect interpretation of the results. Further research is needed to assess the long-term safety and efficacy of elinzanetant beyond the 26-week treatment period.

DISCLOSURES:

Dr. Pinkerton received grants from Bayer Pharmaceuticals to the University of Virginia and consulting fees from Bayer Pharmaceuticals. Dr. Simon reported grants from Bayer Healthcare, AbbVie, Daré Bioscience, Mylan, and Myovant/Sumitomo and personal fees from Astellas Pharma, Ascend Therapeutics, California Institute of Integral Studies, Femasys, Khyra, Madorra, Mayne Pharma, Pfizer, Pharmavite, Scynexis Inc, Vella Bioscience, and Bayer. Additional disclosures are noted in the original article.

This article was created using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article first appeared on Medscape.com.

Untreated Hypertension Tied to Alzheimer’s Disease Risk

TOPLINE:

Older adults with untreated hypertension have a 36% increased risk for Alzheimer’s disease (AD) compared with those without hypertension and a 42% increased risk for AD compared with those with treated hypertension.

METHODOLOGY:

- In this meta-analysis, researchers analyzed the data of 31,250 participants aged 60 years or older (mean age, 72.1 years; 41% men) from 14 community-based studies across 14 countries.

- Mean follow-up was 4.2 years; blood pressure measurements, hypertension diagnoses, and antihypertensive medication use were recorded.

- Overall, 35.9% had no history of hypertension or antihypertensive medication use, 50.7% had a history of hypertension with antihypertensive medication use, and 9.4% had a history of hypertension without antihypertensive medication use.

- The main outcomes were AD and non-AD dementia.

TAKEAWAY:

- In total, 1415 participants developed AD, and 681 developed non-AD dementia.

- Participants with untreated hypertension had a 36% increased risk for AD compared with healthy controls (hazard ratio [HR], 1.36; P = .041) and a 42% increased risk for AD (HR, 1.42; P = .013) compared with those with treated hypertension.

- Compared with healthy controls, patients with treated hypertension did not show an elevated risk for AD (HR, 0.961; P = .6644).

- Patients with both treated (HR, 1.285; P = .027) and untreated (HR, 1.693; P = .003) hypertension had an increased risk for non-AD dementia compared with healthy controls. Patients with treated and untreated hypertension had a similar risk for non-AD dementia.

IN PRACTICE:

“These results suggest that treating high blood pressure as a person ages continues to be a crucial factor in reducing their risk of Alzheimer’s disease,” lead author Matthew J. Lennon, MD, PhD, said in a press release.

SOURCE:

This study was led by Matthew J. Lennon, MD, PhD, School of Clinical Medicine, UNSW Sydney, Sydney, Australia. It was published online in Neurology.

LIMITATIONS:

Varied definitions for hypertension across different locations might have led to discrepancies in diagnosis. Additionally, the study did not account for potential confounders such as stroke, transient ischemic attack, and heart disease, which may act as mediators rather than covariates. Furthermore, the study did not report mortality data, which may have affected the interpretation of dementia risk.

DISCLOSURES:

This research was supported by the National Institute on Aging of the National Institutes of Health. Some authors reported ties with several institutions and pharmaceutical companies outside this work. Full disclosures are available in the original article.

This article was created using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article first appeared on Medscape.com.

Veterans Found Relief From Chronic Pain Through Telehealth Mindfulness

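TOPLINE:

Telehealth mindfulness-based interventions, delivered either in groups or as self-paced programs, improved pain-related function and several secondary outcomes compared with usual care in veterans with moderate to severe chronic pain.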

METHODOLOGY:

- Researchers conducted a randomized clinical trial of 811 veterans who had moderate to severe chronic pain and were recruited from three Veterans Affairs facilities in the United States.

- Participants were divided into three groups: group mindfulness-based intervention (MBI; n = 270), self-paced MBI (n = 271), and usual care (n = 270). The interventions lasted 8 weeks.

- The primary outcome was pain-related function, measured at 10 weeks, 6 months, and 1 year with a scale assessing how much pain interferes with areas such as mood, walking, work, relationships, and sleep.

- Secondary outcomes included pain intensity, anxiety, fatigue, sleep disturbance, participation in social roles and activities, depression, and posttraumatic stress disorder (PTSD).

TAKEAWAY:

- Pain-related function improved significantly in both MBI groups versus the usual care group, with a mean difference of −0.4 (95% CI, −0.7 to −0.2) for group MBI and −0.7 (95% CI, −1.0 to −0.4) for self-paced MBI (P < .001).

- Compared with the usual care group, both MBI groups had significantly improved secondary outcomes, including pain intensity, depression, and PTSD.

- The probability of achieving a 30% improvement in pain-related function was higher than with usual care for group MBI at 10 weeks and 6 months and for self-paced MBI at all three timepoints.

- No significant differences were found between the MBI groups for primary and secondary outcomes.

IN PRACTICE:

“The viability and similarity of both these approaches for delivering MBIs increase patient options for meeting their individual needs and could help accelerate and improve the implementation of nonpharmacological pain treatment in health care systems,” the study authors wrote.

SOURCE:

The study was led by Diana J. Burgess, PhD, of the Center for Care Delivery and Outcomes Research, VA Health Systems Research in Minneapolis, Minnesota, and published online in JAMA Internal Medicine.

LIMITATIONS:

The trial was not designed to compare less resource-intensive MBIs with more intensive mindfulness-based stress reduction programs or in-person MBIs. The study did not address cost-effectiveness or control for time, attention, and other contextual factors. The high nonresponse rate (81%) to initial recruitment may have affected the generalizability of the findings.

DISCLOSURES:

The study was supported by the Pain Management Collaboratory–Pragmatic Clinical Trials Demonstration. Various authors reported grants from the National Center for Complementary and Integrative Health and the National Institute of Nursing Research.

This article was created using several editorial tools, including AI, as part of the process. Human editors reviewed this content before publication. A version of this article first appeared on Medscape.com.

The Next Frontier of Antibiotic Discovery: Inside Your Gut

Scientists at Stanford University and the University of Pennsylvania have discovered a new antibiotic candidate in a surprising place: the human gut.

In mice, the antibiotic — a peptide known as prevotellin-2 — showed antimicrobial potency on par with polymyxin B, an antibiotic medication used to treat multidrug-resistant infections. Meanwhile, the peptide mainly left commensal, or beneficial, bacteria alone. The study, published in Cell, also identified several other potent antibiotic peptides with the potential to combat antimicrobial-resistant infections.

The research is part of a larger quest to find new antibiotics that can fight drug-resistant infections, a critical public health threat with more than 2.8 million cases and 35,000 deaths annually in the United States. That quest is urgent, said study author César de la Fuente, PhD, professor of bioengineering at the University of Pennsylvania, Philadelphia.

“The main pillars that have enabled us to almost double our lifespan in the last 100 years or so have been antibiotics, vaccines, and clean water,” said Dr. de la Fuente. “Imagine taking out one of those. I think it would be pretty dramatic.” (Dr. De la Fuente’s lab has become known for finding antibiotic candidates in unusual places, like ancient genetic information of Neanderthals and woolly mammoths.)

The first widely used antibiotic, penicillin, was discovered in 1928, when a physician studying Staphylococcus bacteria returned to his lab after summer break to find mold growing in one of his petri dishes. But many other antibiotics, like streptomycin, tetracycline, and erythromycin, were discovered in soil bacteria, which produce variations of these substances to compete with other microorganisms.

By looking in the gut microbiome, the researchers hoped to identify peptides that the trillions of microbes use against each other in the fight for limited resources — ideally, peptides that wouldn’t broadly kill off the entire microbiome.

Kill the Bad, Spare the Good

Many traditional antibiotics are small molecules that spread throughout the body, so they can wipe out the good bacteria in your body. And because each targets a specific bacterial function, bad bacteria can become resistant to them.

Peptide antibiotics, on the other hand, don’t diffuse into the whole body. If taken orally, they stay in the gut; if taken intravenously, they generally stay in the blood. And because they kill bacteria by targeting the membrane, they’re also less prone to bacterial resistance.

The microbiome is like a big reservoir of pathogens, said Ami Bhatt, MD, PhD, a hematologist at Stanford University in California and one of the study’s authors. Because many antibiotics kill healthy gut bacteria, “what you have left over,” Dr. Bhatt said, “is this big open niche that gets filled up with multidrug-resistant organisms like E coli [Escherichia coli] or vancomycin-resistant Enterococcus.”

Dr. Bhatt has seen cancer patients undergo successful treatment only to die of a multidrug-resistant infection, because current antibiotics fail against those pathogens. “That’s like winning the battle to lose the war.”

By investigating the microbiome, “we wanted to see if we could identify antimicrobial peptides that might spare key members of our regular microbiome, so that we wouldn’t totally disrupt the microbiome the way we do when we use broad-spectrum, small molecule–based antibiotics,” Dr. Bhatt said.

The researchers used artificial intelligence to sift through 400,000 proteins to predict, based on known antibiotics, which peptide sequences might have antimicrobial properties. From the results, they chose 78 peptides to synthesize and test.

“The application of computational approaches combined with experimental validation is very powerful and exciting,” said Jennifer Geddes-McAlister, PhD, professor of cell biology at the University of Guelph in Ontario, Canada, who was not involved in the study. “The study is robust in its approach to microbiome sampling.”

The Long Journey from Lab to Clinic

More than half of the peptides the team tested effectively inhibited the growth of harmful bacteria, and prevotellin-2 (derived from the bacterium Prevotella copri) stood out as the most powerful.

“The study validates experimental data from the lab using animal models, which moves discoveries closer to the clinic,” said Dr. Geddes-McAlister. “Further testing with clinical trials is needed, but the potential for clinical application is promising.”

Unfortunately, that’s not likely to happen anytime soon, said Dr. de la Fuente. “There is not enough economic incentive” for companies to develop new antibiotics. Ten years is his most hopeful guess for when we might see prevotellin-2, or a similar antibiotic, complete clinical trials.

A version of this article first appeared on Medscape.com.

FROM CELL

Severe COVID-19 Tied to Increased Risk for Mental Illness

New research adds to a growing body of evidence suggesting that COVID-19 infection can be hard on mental health.

The study found higher rates of mental illness after COVID-19, particularly in those with severe COVID-19 who had not been vaccinated.

Importantly, vaccination appeared to mitigate the adverse effects of COVID-19 on mental health, the investigators found.

“Our results highlight the importance of COVID-19 vaccination in the general population and particularly among those with mental illnesses, who may be at higher risk of both SARS-CoV-2 infection and adverse outcomes following COVID-19,” first author Venexia Walker, PhD, with the University of Bristol, United Kingdom, said in a news release.

The study was published online on August 21 in JAMA Psychiatry.

Novel Data

“Before this study, a number of papers had looked at associations of COVID diagnosis with mental ill health, and broadly speaking, they had reported associations of different magnitudes,” study author Jonathan A. C. Sterne, PhD, with University of Bristol, noted in a journal podcast.

“Some studies were restricted to patients who were hospitalized with COVID-19 and some not, and the duration of follow-up varied. And importantly, the nature of COVID-19 changed profoundly as vaccination became available and there was little data on the impact of vaccination on associations of COVID-19 with subsequent mental ill health,” Dr. Sterne said.

The UK study was conducted in three cohorts: a prevaccination cohort of about 18.6 million people followed before a vaccine was available, a cohort of about 14 million adults who were vaccinated, and a cohort of about 3.2 million people who were unvaccinated.

The researchers compared rates of various mental illnesses after COVID-19 with rates before or without COVID-19 and by vaccination status.

Across all cohorts, rates of most mental illnesses examined were “markedly elevated” during the first month following a COVID-19 diagnosis compared with rates before or without COVID-19.

For example, the adjusted hazard ratios for depression (the most common illness) and serious mental illness in the month after COVID-19 were 1.93 and 1.49, respectively, in the prevaccination cohort and 1.79 and 1.45, respectively, in the unvaccinated cohort, compared with 1.16 and 0.91, respectively, in the vaccinated cohort.

This elevation in the rate of mental illnesses was mainly seen after severe COVID-19 that led to hospitalization and remained higher for up to a year following severe COVID-19 in unvaccinated adults.

For severe COVID-19 with hospitalization, the adjusted hazard ratio for depression in the month following admission was 16.3 in the prevaccine cohort, 15.6 in the unvaccinated cohort, and 12.9 in the vaccinated cohort.

The adjusted hazard ratios for serious mental illness in the month after COVID hospitalization were 9.71 in the prevaccine cohort, 8.75 in the unvaccinated cohort, and 6.52 in the vaccinated cohort.

“Incidences of other mental illnesses were broadly similar to those of depression and serious mental illness, both overall and for COVID-19 with and without hospitalization,” the authors report in their paper.

Consistent with prior research, subgroup analyses found the association of COVID-19 and mental illness was stronger among older adults and men, with no marked differences by ethnic group.

“We should be concerned about continuing consequences in people who experienced severe COVID-19 early in the pandemic, and they may include a continuing higher incidence of mental ill health, such as depression and serious mental illness,” Dr. Sterne said in the podcast.

In terms of ongoing booster vaccinations, “people who are advised that they are under vaccinated or recommended for further COVID-19 vaccination, should take those invitations seriously, because by preventing severe COVID-19, which is what vaccination does, you can prevent consequences such as mental illness,” Dr. Sterne added.

The study was supported by the COVID-19 Longitudinal Health and Wellbeing National Core Study, which is funded by the Medical Research Council and National Institute for Health and Care Research. The authors had no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

A Step-by-Step Guide for Diagnosing Cushing Syndrome

“Moon face” is a term that’s become popular on social media, used to describe people with unusually round faces who are purported to have high levels of cortisol. But the term isn’t new. It was coined in the 1930s by neurosurgeon Harvey Cushing, MD, who identified patients with a constellation of clinical characteristics, including rapidly developing facial adiposity, in a condition that came to bear his name. Elevated cortisol is indeed a hallmark feature of Cushing syndrome (CS), but cortisol can be elevated for other reasons, and CS has other manifestations.

Today, the term “moon face” has been replaced with “round face,” which is considered more encompassing and culturally sensitive, said Maria Fleseriu, MD, professor of medicine and neurological surgery and director of the Pituitary Center at Oregon Health and Science University in Portland, Oregon.

Facial roundness can lead clinicians to suspect CS. But because a round face is associated with several other conditions, it’s important to be familiar with how it presents in CS, as well as how to diagnose and treat CS.

Pathophysiology of CS

Dr. Fleseriu defined CS as “prolonged nonphysiologic increase in cortisol, due either to exogenous use of steroids (oral, topical, or inhaled) or to excess endogenous cortisol production.” She added that it’s important “to always exclude exogenous causes before conducting a further workup to determine the type and cause of cortisol excess.”

Most cases of endogenous CS are caused by a pituitary tumor (Cushing disease), Dr. Fleseriu said. Other causes of CS are ectopic (caused by neuroendocrine tumors) or adrenal. CS primarily affects females and typically has an onset between ages 20 and 50 years, depending on the CS type.

Diagnosis of CS is “substantially delayed for most patients, due to metabolic syndrome phenotypic overlap and lack of a single pathognomonic symptom,” according to Dr. Fleseriu.

An accurate diagnosis should be based on signs and symptoms, biochemical screening, other laboratory testing, and diagnostic imaging.

Look for Clinical Signs and Symptoms of CS

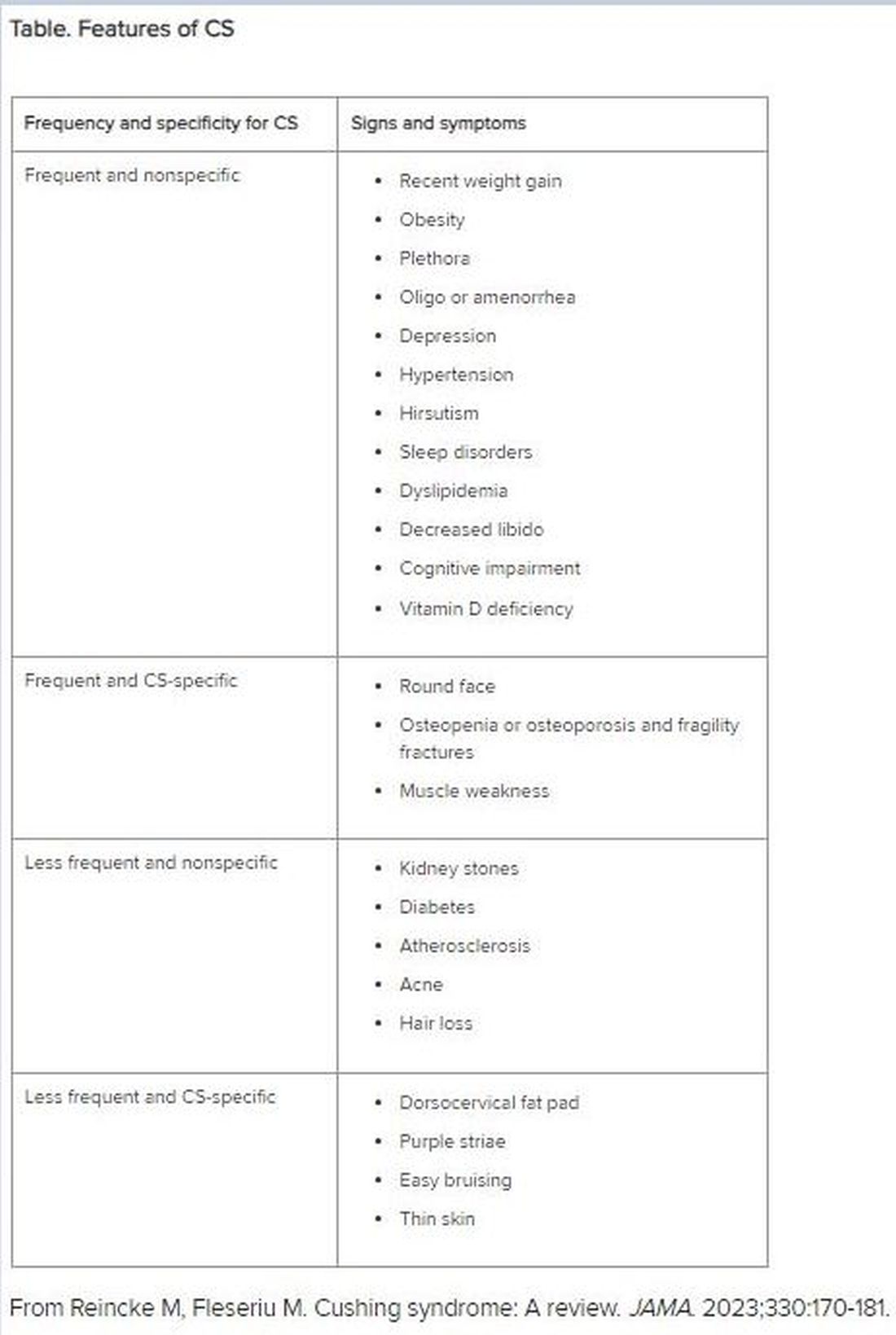

“CS mostly presents as a combination of two or more features,” Dr. Fleseriu stated. These include increased fat pads (in the face, neck, and trunk), skin changes, signs of protein catabolism, growth retardation and body weight increase in children, and metabolic dysregulations (Table).

“Biochemical screening should be performed in patients with a combination of symptoms, and therefore an increased pretest probability for CS,” Dr. Fleseriu advised.

A CS diagnosis requires not only biochemical confirmation of hypercortisolemia but also determination of the underlying cause of the excess endogenous cortisol production. This is a key step, as the management of CS is specific to its etiology.