
Patch Test–Directed Dietary Avoidance in the Management of Irritable Bowel Syndrome

Irritable bowel syndrome (IBS) is one of the most common disorders managed by primary care physicians and gastroenterologists.1 It is characterized by abdominal pain coinciding with altered stool form and/or frequency as defined by the Rome IV diagnostic criteria.2 Symptoms range from mild to debilitating and may markedly impair quality of life and work productivity.1

The cause of IBS is poorly understood. Proposed pathophysiologic factors include impaired mucosal function, microbial imbalance, visceral hypersensitivity, psychologic dysfunction, genetic factors, neurotransmitter imbalance, postinfectious gastroenteritis, inflammation, and food intolerance, any or all of which may lead to the development and maintenance of IBS symptoms.3 More recent observations of inflammation in the intestinal lining4,5 and proinflammatory peripherally circulating cytokines6 challenge its traditional classification as a functional disorder.

The cause of this inflammation is of intense interest, with speculation that the bacterial microbiota, bile acids, prior infectious gastroenteritis or inflammatory bowel disease, and/or foods may contribute. Although approximately 50% of individuals with IBS report that foods aggravate their symptoms,7 studies investigating type I antibody–mediated immediate hypersensitivity have largely failed to demonstrate a substantial link, prompting many authorities to regard these associations as food “intolerances” rather than true allergies. Based on this body of literature, a large 2010 consensus report on all aspects of food allergies advises against food allergy testing for IBS.8

In contrast, by utilizing type IV food allergen skin patch testing, 2 proof-of-concept studies9,10 investigated a different allergic mechanism in IBS, namely cell-mediated delayed-type hypersensitivity. Because many foods and food additives are known to cause allergic contact dermatitis,11 it was hypothesized that these foods may elicit a similar delayed-type hypersensitivity response in the intestinal lining in previously sensitized individuals. By following a patch test–guided food avoidance diet, a large subpopulation of patients with IBS experienced partial or complete IBS symptom relief.9,10 Our study further investigates a role for food-related delayed-type hypersensitivities in the pathogenesis of IBS.

Methods

Patient Selection

This study was conducted in a secondary care community-based setting. All patients were self-referred over an 18-month period ending in October 2019, had physician-diagnosed IBS and/or met the Rome IV criteria for IBS, and presented expressly for food patch testing on a fee-for-service basis. Subtype of IBS was determined on presentation by the self-reported historically predominant symptom. Duration of IBS symptoms was self-reported and was rounded to the nearest year for purposes of data collection.

Exclusion criteria included pregnancy, known allergy to adhesive tape or any of the food allergens used in the study, severe skin rash, symptoms that had a known cause other than IBS, or active treatment with systemic immunosuppressive medications.

Patch Testing

Skin patch testing was initiated using an extensive panel of 117 type IV food allergens (eTable)11 identified in the literature,12 most of which utilized standard compounded formulations13 or were available from reputable patch test manufacturers (Brial Allergen GmbH; Chemotechnique Diagnostics). This panel was not approved by the US Food and Drug Administration. The freeze-dried vegetable formulations were taken from the 2018 report.9 Standard skin patch test procedure protocols12 were used, affixing the patches to the upper aspect of the back.

Following patch test application on day 1, two follow-up visits occurred on day 3 and either day 4 or day 5. On day 3, patches were removed, and the initial results were read by a board-certified dermatologist according to a standard grading system.14 Interpretation of patch tests included no reaction, questionable reaction consisting of macular erythema, weak reaction consisting of erythema and slight edema, or strong reaction consisting of erythema and marked edema. On day 4 or day 5, the final patch test reading was performed, and patients were informed of their results. Patients were advised to avoid ingestion of all foods that elicited a questionable or positive patch test response for at least 3 months, and information about the foods and their avoidance also was distributed and reviewed.
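The reading-to-advice rule described above can be sketched in a few lines of Python. This is an illustrative sketch only, not the study's software: the `Reading` enum, the `foods_to_avoid` function, and the example patient are hypothetical, and a single final reading per allergen is assumed.

```python
from enum import Enum


class Reading(Enum):
    """Patch test interpretations as described in the text."""
    NEGATIVE = "no reaction"
    QUESTIONABLE = "macular erythema"
    WEAK = "erythema and slight edema"
    STRONG = "erythema and marked edema"


def foods_to_avoid(final_readings):
    """Return allergens with a questionable or positive (weak/strong) reading."""
    return sorted(
        allergen
        for allergen, reading in final_readings.items()
        if reading is not Reading.NEGATIVE
    )


# Hypothetical patient, NOT study data; allergen names are taken from the text.
example_patient = {
    "carmine": Reading.WEAK,
    "cinnamon bark oil": Reading.QUESTIONABLE,
    "sodium bisulfite": Reading.NEGATIVE,
    "nickel sulfate hexahydrate": Reading.STRONG,
}

print(foods_to_avoid(example_patient))
```

Treating questionable reactions the same as positive ones mirrors the avoidance advice given to patients in this study.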

Food Avoidance Questionnaire

Patients with questionable or positive patch tests at 72 or 96 hours were advised of their eligibility to participate in an institutional review board–approved food avoidance questionnaire study investigating the utility of patch test–guided food avoidance on IBS symptoms. The questionnaire assessed the following: (1) baseline average abdominal pain prior to patch test–guided avoidance diet (0=no symptoms; 10=very severe); (2) average abdominal pain since initiation of patch test–guided avoidance diet (0=no symptoms; 10=very severe); (3) degree of improvement in overall IBS symptoms by the end of the food avoidance period (0=no improvement; 10=great improvement); and (4) compliance with the avoidance diet for the duration of the avoidance period (completely, partially, not at all, or not sure).

Questionnaires and informed consent were mailed to patients via the US Postal Service 3 months after completing the patch testing. The questionnaire and consent were to be completed and returned after dietary avoidance of the identified allergens for at least 3 months. Patients were not compensated for participation in the study.

Statistical Analysis

Statistical analysis of data collected from study questionnaires was performed with Microsoft Excel. Mean abdominal pain and mean global improvement scores were reported along with 1 SD of the mean. For comparison of mean abdominal pain and improvement in global IBS symptoms from baseline to after 3 months of identified allergen avoidance, a Mann-Whitney U test was performed, with P<.05 being considered statistically significant.
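For readers who wish to reproduce this type of analysis outside a spreadsheet, the following is a minimal sketch in Python using only the standard library. The analysis in this study was performed in Microsoft Excel; the function names and the example scores below are hypothetical and are not study data. The sketch computes the mean (SD) and the Mann-Whitney U statistic with tied values given average ranks; it does not compute the P value.

```python
from statistics import mean, stdev


def mean_sd(scores):
    """Return (mean, sample SD), each rounded to 1 decimal place."""
    return round(mean(scores), 1), round(stdev(scores), 1)


def average_ranks(values):
    """Rank values from 1 to n; tied values share the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return the smaller of the two Mann-Whitney U statistics."""
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[: len(x)])
    u_x = rank_sum_x - len(x) * (len(x) + 1) / 2
    u_y = len(x) * len(y) - u_x
    return min(u_x, u_y)


# Hypothetical 0-10 abdominal pain scores, NOT study data.
baseline = [7, 8, 6, 9, 5]
after_avoidance = [3, 4, 2, 5, 1]

print(mean_sd(baseline), mean_sd(after_avoidance))
print(mann_whitney_u(baseline, after_avoidance))
```

A small U relative to the product of the two sample sizes indicates little overlap between the two sets of scores; the corresponding P value would be obtained from exact tables or a normal approximation.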

Results

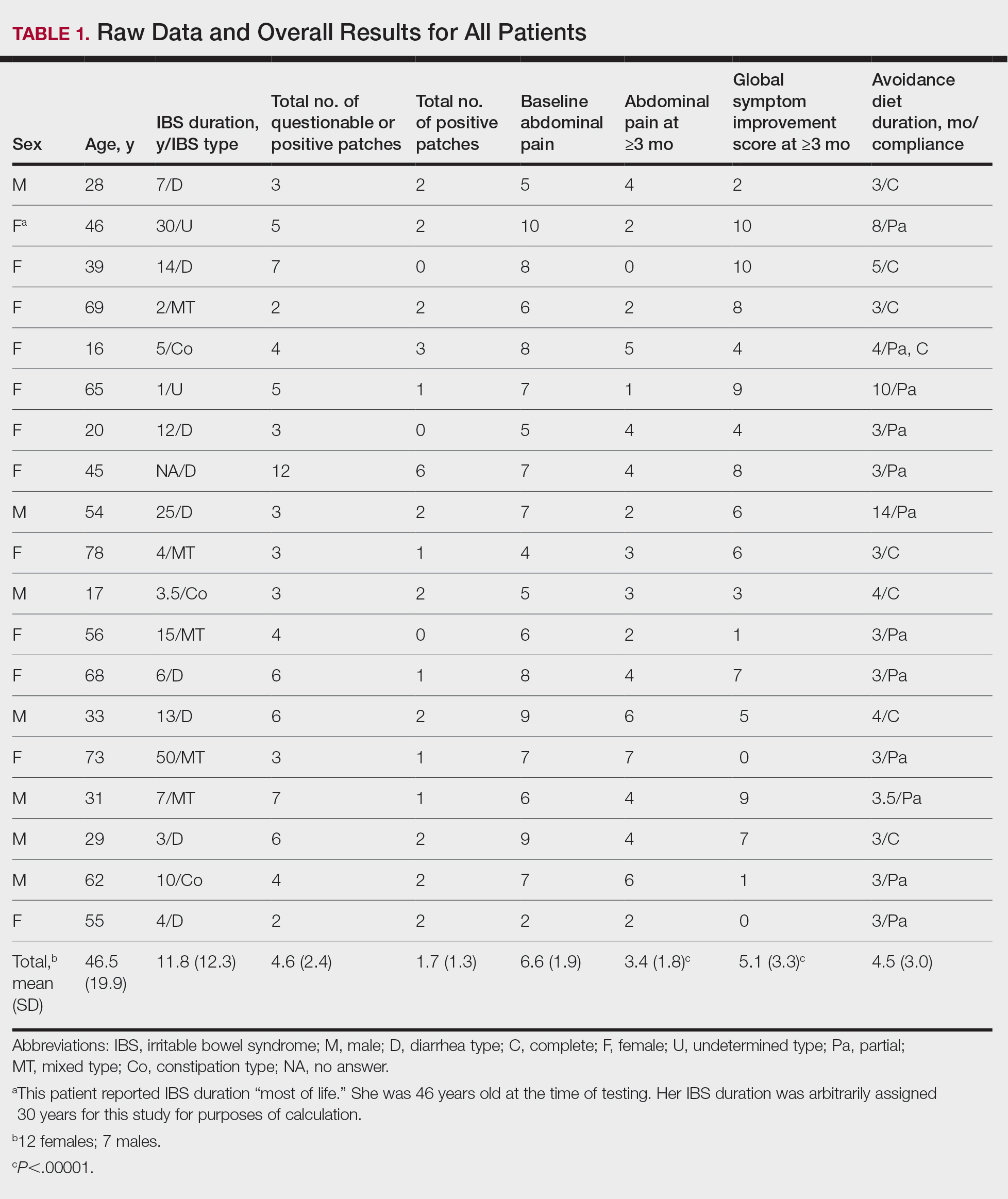

Thirty-seven consecutive patients underwent the testing and were eligible for the study. Nineteen patients were included in the study by virtue of completing and returning their posttest food avoidance questionnaire and informed consent. Eighteen patients were White and 1 was Asian. Subcategories of IBS were diarrhea predominant (9 [47.4%]), constipation predominant (3 [15.8%]), mixed type (5 [26.3%]), and undetermined type (2 [10.5%]). Questionnaire answers were reported after a mean (SD) duration of patch test–directed food avoidance of 4.5 (3.0) months (Table 1).
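The subtype percentages above follow directly from the reported counts, as the short Python check below illustrates. The `pct` helper and the variable names are illustrative; the counts are those reported in this paragraph, and the questionnaire return rate is derived from the 37 eligible and 19 included patients.

```python
def pct(count, total):
    """Percentage of total, rounded to 1 decimal place."""
    return round(100 * count / total, 1)


# Counts reported in the Results paragraph above.
subtype_counts = {
    "diarrhea predominant": 9,
    "constipation predominant": 3,
    "mixed type": 5,
    "undetermined type": 2,
}
included = sum(subtype_counts.values())  # 19 patients returned the questionnaire
eligible = 37

subtype_pct = {name: pct(n, included) for name, n in subtype_counts.items()}
return_rate = pct(included, eligible)  # derived here, not stated in the text

print(subtype_pct)
print(return_rate)
```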

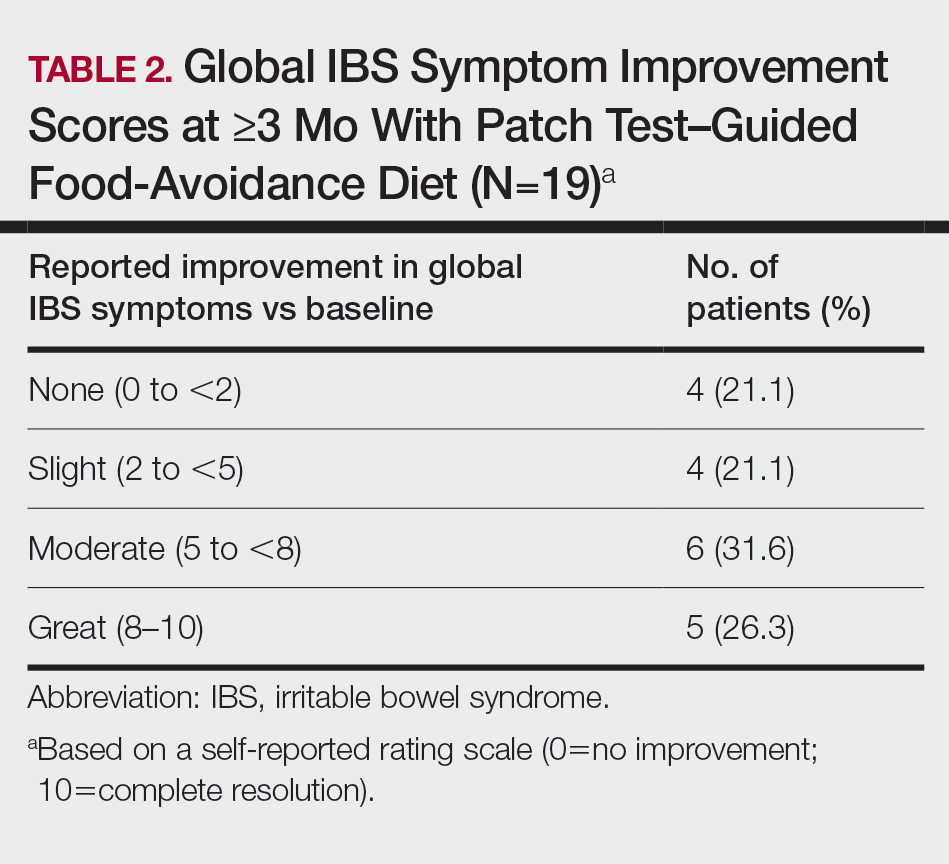

Overall Improvement

Fifteen (78.9%) patients reported slight to great improvement in their global IBS symptoms, and 4 (21.1%) reported no improvement (Table 2), with a mean (SD) improvement score of 5.1 (3.3) (P<.00001).

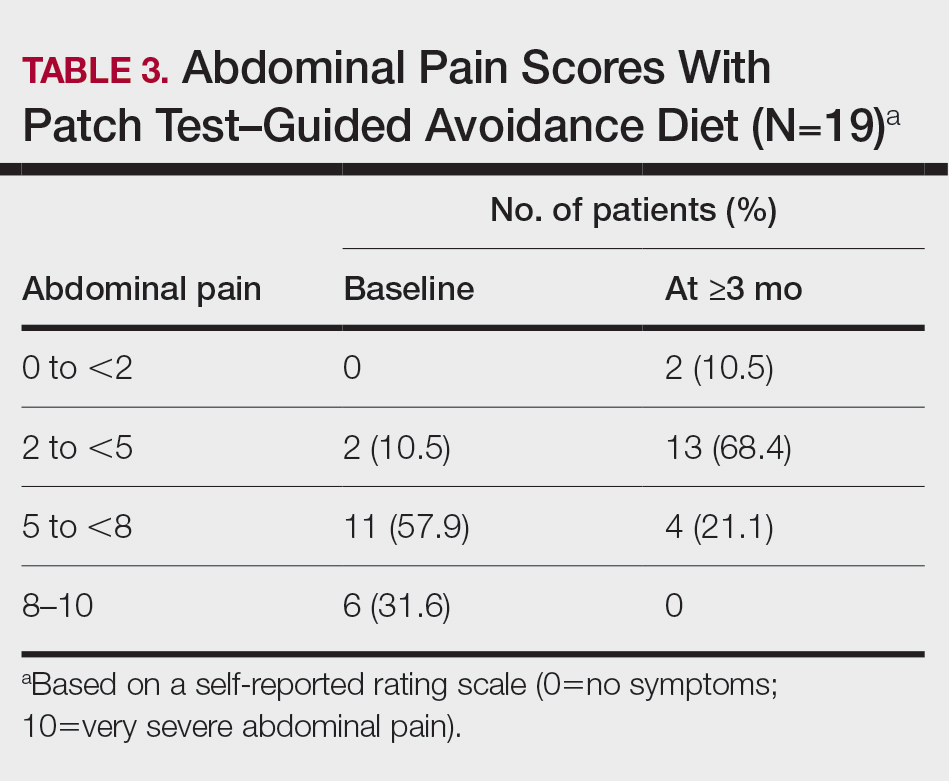

Abdominal Pain

All 19 patients reported mild to marked abdominal pain at baseline. The mean (SD) baseline pain score was 6.6 (1.9). The mean (SD) pain score was 3.4 (1.8) (P<.00001) after a mean (SD) duration of patch test–guided dietary avoidance of 4.5 (3.0) months (Table 3).

Comment

Despite intense research interest and a growing number of new medications for IBS approved by the US Food and Drug Administration, there remains a large void in the search for cost-effective and efficacious approaches for IBS evaluation and treatment. In addition to major disturbances in quality of life,14,15 the cost to society in direct medical expenses and indirect costs associated with loss of productivity and work absenteeism is considerable; estimates are $21 billion or more annually.16

Food Hypersensitivities Triggering IBS

This study further evaluated a role for skin patch testing to identify delayed-type (type IV) food hypersensitivities that trigger IBS symptoms and differed from the prior investigations9,10 in that the symptoms used to define IBS were updated from the Rome III17 to the newer Rome IV2 criteria. The data presented here show moderate to great improvement in global IBS symptoms in 58% (11/19) of patients, which is in line with a 2018 report of 40 study participants for whom follow-up at 3 or more months was available,9 providing additional support for a role for type IV food allergies in causing the same gastrointestinal tract symptoms that define IBS. The distinction between food-related studies, including this one, that implicate food allergies9,10 and prior studies that did not support a role for food allergies in IBS pathogenesis8 can be accounted for by the type of allergy investigated. Conclusions that IBS flares after food ingestion were attributable to intolerance rather than true allergy were based on results investigating only the humoral arm and failed to consider the cell-mediated arm of the immune system. As such, foods that appear to trigger IBS symptoms on an allergic basis in our study are recognized in the literature12 as type IV allergens that elicit cell-mediated immunologic responses rather than more widely recognized type I allergens, such as peanuts and shellfish, that elicit immediate-type hypersensitivity responses. Although any type IV food allergen(s) could be responsible, a pattern emerged in this study and the study published in 2018.9 Namely, some foods stood out as more frequently inducing patch test reactions, with the 3 most common being carmine, cinnamon bark oil, and sodium bisulfite (eTable). The sample size is relatively small, but the results raise the question of whether these foods are the most likely to trigger IBS symptoms in the general population. 
If so, is it the result of a higher innate sensitizing potential and/or a higher frequency of exposure in commonly eaten foods? Larger randomized clinical trials are needed.

Immune Response and IBS

There is mounting evidence that the immune system may play a role in the pathophysiology of IBS.18 Both lymphocyte infiltration of the myenteric plexus and an increase in intestinal mucosal T lymphocytes have been observed, and it is generally accepted that the mucosal immune system seems to be activated, at least in a subset of patients with IBS.19 Irritable bowel syndrome associations with quiescent inflammatory bowel disease or postinfectious gastroenteritis provide 2 potential causes for the inflammation, but most IBS patients have had neither.20 The mucosal lining of the intestine and immune system have vast exposure to intraluminal allergens in transit, and it is hypothesized that the same delayed-type hypersensitivity response elicited in the skin by patch testing is elicited in the intestine, resulting in the inflammation that triggers IBS symptoms.10 The results here add to the growing body of evidence that ingestion of type IV food allergens by previously sensitized individuals could, in fact, be the primary source of the inflammation observed in a large subpopulation of individuals who carry a diagnosis of IBS.

Food Allergens in Patch Testing

Many of the food allergens used in this study are commonly found in various nonfood products that may contact the skin. For example, many flavorings are used as fragrances, and many preservatives, binders, thickeners, emulsifiers, and stabilizers serve the same role in moisturizers, cosmetics, and topical medications. Likewise, nickel sulfate hexahydrate, ubiquitous in foods that arise from the earth, often is found in metal in jewelry, clothing components, and cell phones. All are potential sensitizers. Thus, the question may arise whether the causal relationship between the food allergens identified by patch testing and IBS symptoms might be more of a systemic effect akin to systemic contact dermatitis as sometimes follows ingestion of an allergen to which an individual has been topically sensitized, rather than the proposed localized immunologic response in the intestinal lining. We were unaware of patient history of allergic contact dermatitis to any of the patch test allergens in this study, but the dermatologist author here (M.S.) has unpublished experience with 2 other patients with IBS who have benefited from low-nickel diets after having had positive patch tests to nickel sulfate hexahydrate and who, in retrospect, did report a history of earring dermatitis. Future investigations using pre– and post–food challenge histologic assessments of the intestinal mucosa in patients who benefit from patch test–guided food avoidance diets should help to better define the mechanism.

Because IBS has not been traditionally associated with structural or biochemical abnormalities detectable with current routine diagnostic tools, it has long been viewed as a functional disorder. The findings published more recently,9,10 in addition to this study’s results, would negate this functional classification in the subset of patients with IBS symptoms who experience sustained relief of their symptoms by patch test–directed food avoidance. The underlying delayed-type hypersensitivity pathogenesis of the IBS-like symptoms in these individuals would mandate an organic classification, aptly named allergic contact enteritis.10

Follow-up Data

The mean (SD) follow-up duration for this study and the 2018 report9 was 4.5 (3.0) months and 7.6 (3.9) months, respectively. The placebo effect is a concern for disorders such as IBS in which primarily subjective outcome measures are available,21 and in a retrospective analysis of 25 randomized, placebo-controlled IBS clinical trials, Spiller22 concluded the optimum length of such trials to be more than 3 months, which these studies exceed. Although neither study was blinded or placebo controlled, the length of follow-up in the 2018 report9 and here enhances the validity of the results.

Limitation

The retrospective manner in which the self-assessments were reported in this study introduces the potential for recall bias, a variable that could affect results. The presence and direction of bias by any given individual cannot be known, making it difficult to determine any effect it may have had. Further investigation should include daily assessments and refine the primary study end points to include both abdominal pain and the defecation considerations that define IBS.

Conclusion

Food patch testing has the potential to offer a safe, cost-effective approach to the evaluation and management of IBS symptoms. Randomized clinical trials are needed to further investigate the validity of the proof-of-concept results to date. For patients who benefit from a patch test–guided avoidance diet, invasive and costly endoscopic, radiologic, and laboratory testing and pharmacologic management could be averted. Symptomatic relief could be attained simply by avoiding the implicated foods, essentially doing more by doing less.

- Enck P, Aziz Q, Barbara G, et al. Irritable bowel syndrome. Nat Rev Dis Primers. 2016;2:1-24.

- Lacy BE, Patel NK. Rome criteria and a diagnostic approach to irritable bowel syndrome. J Clin Med. 2017;6:99.

- Barbara G, De Giorgio R, Stanghellini V, et al. New pathophysiological mechanisms in irritable bowel syndrome. Aliment Pharmacol Ther. 2004;20(suppl 2):1-9.

- Chadwick VS, Chen W, Shu D, et al. Activation of the mucosal immune system in irritable bowel syndrome. Gastroenterology. 2002;122:1778-1783.

- Tornblom H, Lindberg G, Nyberg B, et al. Full-thickness biopsy of the jejunum reveals inflammation and enteric neuropathy in irritable bowel syndrome. Gastroenterology. 2002;123:1972-1979.

- O’Mahony L, McCarthy J, Kelly P, et al. Lactobacillus and bifidobacterium in irritable bowel syndrome: symptom responses and relationship to cytokine profiles. Gastroenterology. 2005;128:541-551.

- Ragnarsson G, Bodemar G. Pain is temporally related to eating but not to defecation in the irritable bowel syndrome (IBS): patients’ description of diarrhea, constipation and symptom variation during a prospective 6-week study. Eur J Gastroenterol Hepatol. 1998;10:415-421.

- Boyce JA, Assa’ad A, Burks AW, et al. Guidelines for the diagnosis and management of food allergy in the United States: report of the NIAID-sponsored expert panel. J Allergy Clin Immunol. 2010;126(6 suppl):S1-S58.

- Shin GH, Smith MS, Toro B, et al. Utility of food patch testing in the evaluation and management of irritable bowel syndrome. Skin. 2018;2:1-15.

- Stierstorfer MB, Sha CT. Food patch testing for irritable bowel syndrome. J Am Acad Dermatol. 2013;68:377-384.

- Marks JG, Belsito DV, DeLeo MD, et al. North American Contact Dermatitis Group patch test results for the detection of delayed-type hypersensitivity to topical allergens. J Am Acad Dermatol. 1998;38:911-918.

- Rietschel RL, Fowler JF Jr. Fisher’s Contact Dermatitis. BC Decker; 2008.

- DeGroot AC. Patch Testing. acdegroot Publishing; 2008.

- Gralnek IM, Hays RD, Kilbourne A, et al. The impact of irritable bowel syndrome on health-related quality of life. Gastroenterology. 2000;119:654-660.

- Halder SL, Locke GR, Talley NJ, et al. Impact of functional gastrointestinal disorders on health-related quality of life: a population-based case–control study. Aliment Pharmacol Ther. 2004;19:233-242.

- International Foundation for Gastrointestinal Disorders. About IBS: statistics. Accessed July 20, 2021. https://www.aboutibs.org/facts-about-ibs/statistics.html

- Rome Foundation. Guidelines—Rome III diagnostic criteria for functional gastrointestinal disorders. J Gastrointestin Liver Dis. 2006;15:307-312.

- Collins SM. Is the irritable gut an inflamed gut? Scand J Gastroenterol. 1992;192(suppl):102-105.

- Park MI, Camilleri M. Is there a role of food allergy in irritable bowel syndrome and functional dyspepsia? a systematic review. Neurogastroenterol Motil. 2006;18:595-607.

- Grover M, Herfarth H, Drossman DA. The functional-organic dichotomy: postinfectious irritable bowel syndrome and inflammatory bowel disease–irritable bowel syndrome. Clin Gastroenterol Hepatol. 2009;7:48-53.

- Hróbjartsson A, Gøtzsche PC. Is the placebo powerless? an analysis of clinical trials comparing placebo with no treatment. N Engl J Med. 2001;344:1594-1602.

- Spiller RC. Problems and challenges in the design of irritable bowel syndrome clinical trials: experience from published trials. Am J Med. 1999;107:91S-97S.

Irritable bowel syndrome (IBS) is one of the most common disorders managed by primary care physicians and gastroenterologists.1 Characterized by abdominal pain coinciding with altered stool form and/or frequency as defined by the Rome IV diagnostic criteria,2 symptoms range from mild to debilitating and may remarkably impair quality of life and work productivity.1

The cause of IBS is poorly understood. Proposed pathophysiologic factors include impaired mucosal function, microbial imbalance, visceral hypersensitivity, psychologic dysfunction, genetic factors, neurotransmitter imbalance, postinfectious gastroenteritis, inflammation, and food intolerance, any or all of which may lead to the development and maintenance of IBS symptoms.3 More recent observations of inflammation in the intestinal lining4,5 and proinflammatory peripherally circulating cytokines6 challenge its traditional classification as a functional disorder.

The cause of this inflammation is of intense interest, with speculation that the bacterial microbiota, bile acids, association with postinfectious gastroenteritis and inflammatory bowel disease cases, and/or foods may contribute. Although approximately 50% of individuals with IBS report that foods aggravate their symptoms,7 studies investigating type I antibody–mediated immediate hypersensitivity have largely failed to demonstrate a substantial link, prompting many authorities to regard these associations as food “intolerances” rather than true allergies. Based on this body of literature, a large 2010 consensus report on all aspects of food allergies advises against food allergy testing for IBS.8

In contrast, by utilizing type IV food allergen skin patch testing, 2 proof-of-concept studies9,10 investigated a different allergic mechanism in IBS, namely cell-mediated delayed-type hypersensitivity. Because many foods and food additives are known to cause allergic contact dermatitis,11 it was hypothesized that these foods may elicit a similar delayed-type hypersensitivity response in the intestinal lining in previously sensitized individuals. By following a patch test–guided food avoidance diet, a large subpopulation of patients with IBS experienced partial or complete IBS symptom relief.9,10 Our study further investigates a role for food-related delayed-type hypersensitivities in the pathogenesis of IBS.

Methods

Patient Selection

This study was conducted in a secondary care community-based setting. All patients were self-referred over an 18-month period ending in October 2019, had physician-diagnosed IBS, and/or met the Rome IV criteria for IBS and presented expressly for the food patch testing on a fee-for-service basis. Subtype of IBS was determined on presentation by the self-reported historically predominant symptom. Duration of IBS symptoms was self-reported and was rounded to the nearest year for purposes of data collection.

Exclusion criteria included pregnancy, known allergy to adhesive tape or any of the food allergens used in the study, severe skin rash, symptoms that had a known cause other than IBS, or active treatment with systemic immunosuppressive medications.

Patch Testing

Skin patch testing was initiated using an extensive panel of 117 type IV food allergens (eTable)11 identified in the literature,12 most of which utilized standard compounded formulations13 or were available from reputable patch test manufacturers (Brial Allergen GmbH; Chemotechnique Diagnostics). This panel was not approved by the US Food and Drug Administration. The freeze-dried vegetable formulations were taken from the 2018 report.9 Standard skin patch test procedure protocols12 were used, affixing the patches to the upper aspect of the back.

Following patch test application on day 1, two follow-up visits occurred on day 3 and either day 4 or day 5. On day 3, patches were removed, and the initial results were read by a board-certified dermatologist according to a standard grading system.14 Interpretation of patch tests included no reaction, questionable reaction consisting of macular erythema, weak reaction consisting of erythema and slight edema, or strong reaction consisting of erythema and marked edema. On day 4 or day 5, the final patch test reading was performed, and patients were informed of their results. Patients were advised to avoid ingestion of all foods that elicited a questionable or positive patch test response for at least 3 months, and information about the foods and their avoidance also was distributed and reviewed.

Food Avoidance Questionnaire

Patients with questionable or positive patch tests at 72 or 96 hours were advised of their eligibility to participate in an institutional review board–approved food avoidance questionnaire study investigating the utility of patch test–guided food avoidance on IBS symptoms. The questionnaire assessed the following: (1) baseline average abdominal pain prior to patch test–guided avoidance diet (0=no symptoms; 10=very severe); (2) average abdominal pain since initiation of patch test–guided avoidance diet (0=no symptoms; 10=very severe); (3) degree of improvement in overall IBS symptoms by the end of the food avoidance period (0=no improvement; 10=great improvement); (4) compliance with the avoidance diet for the duration of the avoidance period (completely, partially, not at all, or not sure).

Questionnaires and informed consent were mailed to patients via the US Postal Service 3 months after completing the patch testing. The questionnaire and consent were to be completed and returned after dietary avoidance of the identified allergens for at least 3 months. Patients were not compensated for participation in the study.

Statistical Analysis

Statistical analysis of data collected from study questionnaires was performed with Microsoft Excel. Mean abdominal pain and mean global improvement scores were reported along with 1 SD of the mean. For comparison of mean abdominal pain and improvement in global IBS symptoms from baseline to after 3 months of identified allergen avoidance, a Mann-Whitney U test was performed, with P<.05 being considered statistically significant.

Results

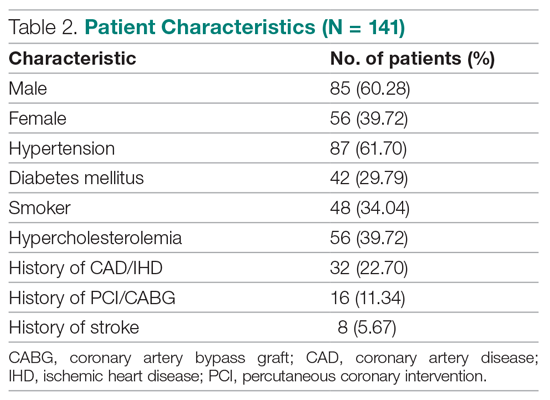

Thirty-seven consecutive patients underwent the testing and were eligible for the study. Nineteen patients were included in the study by virtue of completing and returning their posttest food avoidance questionnaire and informed consent. Eighteen patients were White and 1 was Asian. Subcategories of IBS were diarrhea predominant (9 [47.4%]), constipation predominant (3 [15.8%]), mixed type (5 [26.3%]), and undetermined type (2 [10.5%]). Questionnaire answers were reported after a mean (SD) duration of patch test–directed food avoidance of 4.5 (3.0) months (Table 1).

Overall Improvement

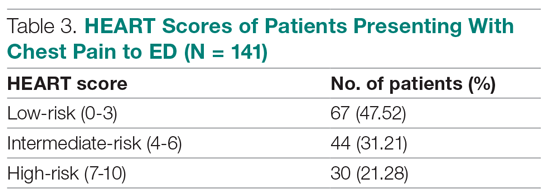

Fifteen (78.9%) patients reported at least slight to great improvement in their global IBS symptoms, and 4 (21.1%) reported no improvement (Table 2), with a mean (SD) improvement score of 5.1 (3.3)(P<.00001).

Abdominal Pain

All 19 patients reported mild to marked abdominal pain at baseline. The mean (SD) baseline pain score was 6.6 (1.9). The mean (SD) pain score was 3.4 (1.8)(P<.00001) after an average patch test–guided dietary avoidance of 4.5 (3.0) months (Table 3).

Comment

Despite intense research interest and a growing number of new medications for IBS approved by the US Food and Drug Administration, there remains a large void in the search for cost-effective and efficacious approaches for IBS evaluation and treatment. In addition to major disturbances in quality of life,14,15 the cost to society in direct medical expenses and indirect costs associated with loss of productivity and work absenteeism is considerable; estimates range from $21 billion or more annually.16

Food Hypersensitivities Triggering IBS

This study further evaluated a role for skin patch testing to identify delayed-type (type IV) food hypersensitivities that trigger IBS symptoms and differed from the prior investigations9,10 in that the symptoms used to define IBS were updated from the Rome III17 to the newer Rome IV2 criteria. The data presented here show moderate to great improvement in global IBS symptoms in 58% (11/19) of patients, which is in line with a 2018 report of 40 study participants for whom follow-up at 3 or more months was available,9 providing additional support for a role for type IV food allergies in causing the same gastrointestinal tract symptoms that define IBS. The distinction between food-related studies, including this one, that implicate food allergies9,10 and prior studies that did not support a role for food allergies in IBS pathogenesis8 can be accounted for by the type of allergy investigated. Conclusions that IBS flares after food ingestion were attributable to intolerance rather than true allergy were based on results investigating only the humoral arm and failed to consider the cell-mediated arm of the immune system. As such, foods that appear to trigger IBS symptoms on an allergic basis in our study are recognized in the literature12 as type IV allergens that elicit cell-mediated immunologic responses rather than more widely recognized type I allergens, such as peanuts and shellfish, that elicit immediate-type hypersensitivity responses. Although any type IV food allergen(s) could be responsible, a pattern emerged in this study and the study published in 2018.9 Namely, some foods stood out as more frequently inducing patch test reactions, with the 3 most common being carmine, cinnamon bark oil, and sodium bisulfite (eTable). The sample size is relatively small, but the results raise the question of whether these foods are the most likely to trigger IBS symptoms in the general population. 
If so, is it the result of a higher innate sensitizing potential and/or a higher frequency of exposure in commonly eaten foods? Larger randomized clinical trials are needed.

Immune Response and IBS

There is mounting evidence that the immune system may play a role in the pathophysiology of IBS.18 Both lymphocyte infiltration of the myenteric plexus and an increase in intestinal mucosal T lymphocytes have been observed, and it is generally accepted that the mucosal immune system seems to be activated, at least in a subset of patients with IBS.19 Irritable bowel syndrome associations with quiescent inflammatory bowel disease or postinfectious gastroenteritis provide 2 potential causes for the inflammation, but most IBS patients have had neither.20 The mucosal lining of the intestine and immune system have vast exposure to intraluminal allergens in transit, and it is hypothesized that the same delayed-type hypersensitivity response elicited in the skin by patch testing is elicited in the intestine, resulting in the inflammation that triggers IBS symptoms.10 The results here add to the growing body of evidence that ingestion of type IV food allergens by previously sensitized individuals could, in fact, be the primary source of the inflammation observed in a large subpopulation of individuals who carry a diagnosis of IBS.

Food Allergens in Patch Testing

Many of the food allergens used in this study are commonly found in various nonfood products that may contact the skin. For example, many flavorings are used as fragrances, and many preservatives, binders, thickeners, emulsifiers, and stabilizers serve the same role in moisturizers, cosmetics, and topical medications. Likewise, nickel sulfate hexahydrate, ubiquitous in foods that arise from the earth, often is found in metal in jewelry, clothing components, and cell phones. All are potential sensitizers. Thus, the question may arise whether the causal relationship between the food allergens identified by patch testing and IBS symptoms might be more of a systemic effect akin to systemic contact dermatitis as sometimes follows ingestion of an allergen to which an individual has been topically sensitized, rather than the proposed localized immunologic response in the intestinal lining. We were unaware of patient history of allergic contact dermatitis to any of the patch test allergens in this study, but the dermatologist author here (M.S.) has unpublished experience with 2 other patients with IBS who have benefited from low-nickel diets after having had positive patch tests to nickel sulfate hexahydrate and who, in retrospect, did report a history of earring dermatitis. Future investigations using pre– and post–food challenge histologic assessments of the intestinal mucosa in patients who benefit from patch test–guided food avoidance diets should help to better define the mechanism.

Because IBS has not been traditionally associated with structural or biochemical abnormalities detectable with current routine diagnostic tools, it has long been viewed as a functional disorder. The findings published more recently,9,10 in addition to this study’s results, would negate this functional classification in the subset of patients with IBS symptoms who experience sustained relief of their symptoms by patch test–directed food avoidance. The underlying delayed-type hypersensitivity pathogenesis of the IBS-like symptoms in these individuals would mandate an organic classification, aptly named allergic contact enteritis.10

Follow-up Data

The mean (SD) follow-up duration for this study and the 2018 report9 was 4.5 (3.0) months and 7.6 (3.9) months, respectively. The placebo effect is a concern for disorders such as IBS in which primarily subjective outcome measures are available,21 and in a retrospective analysis of 25 randomized, placebo-controlled IBS clinical trials, Spiller22 concluded the optimum length of such trials to be more than 3 months, which these studies exceed. Although not blinded or placebo controlled, the length of follow-up in the 2018 report9 and here enhances the validity of the results.

Limitation

The retrospective manner in which the self-assessments were reported in this study introduces the potential for recall bias, a variable that could affect results. The presence and direction of bias by any given individual cannot be known, making it difficult to determine any effect it may have had. Further investigation should include daily assessments and refine the primary study end points to include both abdominal pain and the defecation considerations that define IBS.

Conclusion

Food patch testing has the potential to offer a safe, cost-effective approach to the evaluation and management of IBS symptoms. Randomized clinical trials are needed to further investigate the validity of the proof-of-concept results to date. For patients who benefit from a patch test–guided avoidance diet, invasive and costly endoscopic, radiologic, and laboratory testing and pharmacologic management could be averted. Symptomatic relief could be attained simply by avoiding the implicated foods, essentially doing more by doing less.

Methods

Patient Selection

This study was conducted in a secondary care community-based setting. All patients were self-referred over an 18-month period ending in October 2019, had physician-diagnosed IBS and/or met the Rome IV criteria for IBS, and presented expressly for food patch testing on a fee-for-service basis. Subtype of IBS was determined on presentation by the self-reported historically predominant symptom. Duration of IBS symptoms was self-reported and was rounded to the nearest year for purposes of data collection.

Exclusion criteria included pregnancy, known allergy to adhesive tape or any of the food allergens used in the study, severe skin rash, symptoms that had a known cause other than IBS, or active treatment with systemic immunosuppressive medications.

Patch Testing

Skin patch testing was initiated using an extensive panel of 117 type IV food allergens (eTable)11 identified in the literature,12 most of which utilized standard compounded formulations13 or were available from reputable patch test manufacturers (Brial Allergen GmbH; Chemotechnique Diagnostics). This panel was not approved by the US Food and Drug Administration. The freeze-dried vegetable formulations were taken from the 2018 report.9 Standard skin patch test procedure protocols12 were used, affixing the patches to the upper aspect of the back.

Following patch test application on day 1, two follow-up visits occurred on day 3 and either day 4 or day 5. On day 3, patches were removed, and the initial results were read by a board-certified dermatologist according to a standard grading system.14 Interpretation of patch tests included no reaction, questionable reaction consisting of macular erythema, weak reaction consisting of erythema and slight edema, or strong reaction consisting of erythema and marked edema. On day 4 or day 5, the final patch test reading was performed, and patients were informed of their results. Patients were advised to avoid ingestion of all foods that elicited a questionable or positive patch test response for at least 3 months, and information about the foods and their avoidance also was distributed and reviewed.

Food Avoidance Questionnaire

Patients with questionable or positive patch tests at 72 or 96 hours were advised of their eligibility to participate in an institutional review board–approved food avoidance questionnaire study investigating the utility of patch test–guided food avoidance on IBS symptoms. The questionnaire assessed the following: (1) baseline average abdominal pain prior to patch test–guided avoidance diet (0=no symptoms; 10=very severe); (2) average abdominal pain since initiation of patch test–guided avoidance diet (0=no symptoms; 10=very severe); (3) degree of improvement in overall IBS symptoms by the end of the food avoidance period (0=no improvement; 10=great improvement); and (4) compliance with the avoidance diet for the duration of the avoidance period (completely, partially, not at all, or not sure).

Questionnaires and informed consent were mailed to patients via the US Postal Service 3 months after completing the patch testing. The questionnaire and consent were to be completed and returned after dietary avoidance of the identified allergens for at least 3 months. Patients were not compensated for participation in the study.

Statistical Analysis

Statistical analysis of data collected from study questionnaires was performed with Microsoft Excel. Mean abdominal pain and mean global improvement scores were reported along with 1 SD of the mean. For comparison of mean abdominal pain and improvement in global IBS symptoms from baseline to after 3 months of identified allergen avoidance, a Mann-Whitney U test was performed, with P<.05 being considered statistically significant.
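The rank-based comparison described above can be illustrated with a minimal Python sketch. This is not the authors' Excel workflow: the function name, the normal approximation (with tie correction and no continuity correction), and the example scores are all assumptions made for illustration, and the scores are invented 0-10 values, not study data.

```python
from math import erfc, sqrt


def mann_whitney_u(x, y):
    """Return (U, two-sided P) for two samples.

    Uses average ranks for ties and a normal approximation with a tie
    correction and no continuity correction (an assumption; the source
    does not say how its P values were computed).
    """
    n1, n2 = len(x), len(y)
    values = sorted(list(x) + list(y))

    # Assign each distinct value the mean of the ranks it occupies.
    rank_of = {}
    tie_term = 0.0
    i = 0
    while i < len(values):
        j = i
        while j < len(values) and values[j] == values[i]:
            j += 1
        t = j - i                              # size of this tie group
        rank_of[values[i]] = (i + 1 + j) / 2   # mean of ranks i+1 .. j
        tie_term += t**3 - t
        i = j

    rank_sum_x = sum(rank_of[v] for v in x)
    u_x = rank_sum_x - n1 * (n1 + 1) / 2
    u = min(u_x, n1 * n2 - u_x)

    n = n1 + n2
    mean_u = n1 * n2 / 2
    var_u = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var_u == 0:          # every observation identical
        return u, 1.0
    z = (u - mean_u) / sqrt(var_u)
    return u, erfc(abs(z) / sqrt(2))


# Hypothetical pain scores (0-10), invented purely for illustration.
baseline_scores = [7, 8, 6, 9, 5, 7]
followup_scores = [3, 4, 2, 5, 3, 4]
u_stat, p_value = mann_whitney_u(baseline_scores, followup_scores)
print(f"U = {u_stat}, P = {p_value:.4f}")
```

With samples as small as those in this sketch, an exact test would usually be preferred over the normal approximation.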

Results

Thirty-seven consecutive patients underwent the testing and were eligible for the study. Nineteen patients were included in the study by virtue of completing and returning their posttest food avoidance questionnaire and informed consent. Eighteen patients were White and 1 was Asian. Subcategories of IBS were diarrhea predominant (9 [47.4%]), constipation predominant (3 [15.8%]), mixed type (5 [26.3%]), and undetermined type (2 [10.5%]). Questionnaire answers were reported after a mean (SD) duration of patch test–directed food avoidance of 4.5 (3.0) months (Table 1).

Overall Improvement

Fifteen (78.9%) patients reported slight to great improvement in their global IBS symptoms, and 4 (21.1%) reported no improvement (Table 2), with a mean (SD) improvement score of 5.1 (3.3) (P<.00001).

Abdominal Pain

All 19 patients reported mild to marked abdominal pain at baseline. The mean (SD) baseline pain score was 6.6 (1.9). After a mean (SD) duration of patch test–guided dietary avoidance of 4.5 (3.0) months, the mean (SD) pain score was 3.4 (1.8) (P<.00001) (Table 3).

Comment

Despite intense research interest and a growing number of new medications for IBS approved by the US Food and Drug Administration, there remains a large void in the search for cost-effective and efficacious approaches for IBS evaluation and treatment. In addition to major disturbances in quality of life,14,15 the cost to society in direct medical expenses and indirect costs associated with loss of productivity and work absenteeism is considerable; estimates are $21 billion or more annually.16

Food Hypersensitivities Triggering IBS

This study further evaluated a role for skin patch testing to identify delayed-type (type IV) food hypersensitivities that trigger IBS symptoms and differed from the prior investigations9,10 in that the symptoms used to define IBS were updated from the Rome III17 to the newer Rome IV2 criteria. The data presented here show moderate to great improvement in global IBS symptoms in 58% (11/19) of patients, which is in line with a 2018 report of 40 study participants for whom follow-up at 3 or more months was available,9 providing additional support for a role for type IV food allergies in causing the same gastrointestinal tract symptoms that define IBS. The distinction between food-related studies, including this one, that implicate food allergies9,10 and prior studies that did not support a role for food allergies in IBS pathogenesis8 can be accounted for by the type of allergy investigated. Conclusions that IBS flares after food ingestion were attributable to intolerance rather than true allergy were based on results investigating only the humoral arm and failed to consider the cell-mediated arm of the immune system. As such, foods that appear to trigger IBS symptoms on an allergic basis in our study are recognized in the literature12 as type IV allergens that elicit cell-mediated immunologic responses rather than more widely recognized type I allergens, such as peanuts and shellfish, that elicit immediate-type hypersensitivity responses. Although any type IV food allergen(s) could be responsible, a pattern emerged in this study and the study published in 2018.9 Namely, some foods stood out as more frequently inducing patch test reactions, with the 3 most common being carmine, cinnamon bark oil, and sodium bisulfite (eTable). The sample size is relatively small, but the results raise the question of whether these foods are the most likely to trigger IBS symptoms in the general population. If so, is it the result of a higher innate sensitizing potential and/or a higher frequency of exposure in commonly eaten foods? Larger randomized clinical trials are needed.

Immune Response and IBS

There is mounting evidence that the immune system may play a role in the pathophysiology of IBS.18 Both lymphocyte infiltration of the myenteric plexus and an increase in intestinal mucosal T lymphocytes have been observed, and it is generally accepted that the mucosal immune system seems to be activated, at least in a subset of patients with IBS.19 Irritable bowel syndrome associations with quiescent inflammatory bowel disease or postinfectious gastroenteritis provide 2 potential causes for the inflammation, but most IBS patients have had neither.20 The mucosal lining of the intestine and immune system have vast exposure to intraluminal allergens in transit, and it is hypothesized that the same delayed-type hypersensitivity response elicited in the skin by patch testing is elicited in the intestine, resulting in the inflammation that triggers IBS symptoms.10 The results here add to the growing body of evidence that ingestion of type IV food allergens by previously sensitized individuals could, in fact, be the primary source of the inflammation observed in a large subpopulation of individuals who carry a diagnosis of IBS.

- Enck P, Aziz Q, Barbara G, et al. Irritable bowel syndrome. Nat Rev Dis Primers. 2016;2:1-24.

- Lacy BE, Patel NK. Rome criteria and a diagnostic approach to irritable bowel syndrome. J Clin Med. 2017;6:99.

- Barbara G, De Giorgio R, Stanghellini V, et al. New pathophysiological mechanisms in irritable bowel syndrome. Aliment Pharmacol Ther. 2004;20(suppl 2):1-9.

- Chadwick VS, Chen W, Shu D, et al. Activation of the mucosal immune system in irritable bowel syndrome. Gastroenterology. 2002;122:1778-1783.

- Tornblom H, Lindberg G, Nyberg B, et al. Full-thickness biopsy of the jejunum reveals inflammation and enteric neuropathy in irritable bowel syndrome. Gastroenterology. 2002;123:1972-1979.

- O’Mahony L, McCarthy J, Kelly P, et al. Lactobacillus and bifidobacterium in irritable bowel syndrome: symptom responses and relationship to cytokine profiles. Gastroenterology. 2005;128:541-551.

- Ragnarsson G, Bodemar G. Pain is temporally related to eating but not to defecation in the irritable bowel syndrome (IBS): patients’ description of diarrhea, constipation and symptom variation during a prospective 6-week study. Eur J Gastroenterol Hepatol. 1998;10:415-421.

- Boyce JA, Assa’ad A, Burks AW, et al. Guidelines for the diagnosis and management of food allergy in the United States: report of the NIAID-sponsored expert panel. J Allergy Clin Immunol. 2010;126(6 suppl):S1-S58.

- Shin GH, Smith MS, Toro B, et al. Utility of food patch testing in the evaluation and management of irritable bowel syndrome. Skin. 2018;2:1-15.

- Stierstorfer MB, Sha CT. Food patch testing for irritable bowel syndrome. J Am Acad Dermatol. 2013;68:377-384.

- Marks JG, Belsito DV, DeLeo MD, et al. North American Contact Dermatitis Group patch test results for the detection of delayed-type hypersensitivity to topical allergens. J Am Acad Dermatol. 1998;38:911-918.

- Rietschel RL, Fowler JF Jr. Fisher’s Contact Dermatitis. BC Decker; 2008.

- DeGroot AC. Patch Testing. acdegroot Publishing; 2008.

- Gralnek IM, Hays RD, Kilbourne A, et al. The impact of irritable bowel syndrome on health-related quality of life. Gastroenterology. 2000;119:654-660.

- Halder SL, Lock GR, Talley NJ, et al. Impact of functional gastrointestinal disorders on health-related quality of life: a population-based case–control study. Aliment Pharmacol Ther. 2004;19:233-242.

- International Foundation for Gastrointestinal Disorders. About IBS: statistics. Accessed July 20, 2021. https://www.aboutibs.org/facts-about-ibs/statistics.html

- Rome Foundation. Guidelines—Rome III diagnostic criteria for functional gastrointestinal disorders. J Gastrointestin Liver Dis. 2006;15:307-312.

- Collins SM. Is the irritable gut an inflamed gut? Scand J Gastroenterol. 1992;192(suppl):102-105.

- Park MI, Camilleri M. Is there a role of food allergy in irritable bowel syndrome and functional dyspepsia? a systematic review. Neurogastroenterol Motil. 2006;18:595-607.

- Grover M, Herfarth H, Drossman DA. The functional-organic dichotomy: postinfectious irritable bowel syndrome and inflammatory bowel disease–irritable bowel syndrome. Clin Gastroenterol Hepatol. 2009;7:48-53.

- Hrobjartsson A, Gotzsche PC. Is the placebo powerless? an analysis of clinical trials comparing placebo with no treatment. N Engl J Med. 2001;344:1594-1602.

- Spiller RC. Problems and challenges in the design of irritable bowel syndrome clinical trials: experience from published trials. Am J Med. 1999;107:91S-97S.

Practice Points

- Recent observations of inflammation in irritable bowel syndrome (IBS) challenge its traditional classification as a functional disorder.

- Delayed-type hypersensitivities to type IV food allergens, detectable by skin patch testing, are one plausible cause of intestinal inflammation.

- Patch test–directed food avoidance improves IBS symptoms in some patients and offers a new approach to the evaluation and management of this condition.

- Dermatologists and other health care practitioners with expertise in patch testing are uniquely positioned to utilize these skills to help patients with IBS.

Comparison of Renal Function Between Tenofovir Disoproxil Fumarate and Other Nucleos(t)ide Reverse Transcriptase Inhibitors in Patients With Hepatitis B Virus Infection

Infection with hepatitis B virus (HBV) is associated with risk of potentially lethal, chronic infection and is a major public health problem. Infection from HBV has the potential to lead to liver failure, cirrhosis, and cancer.1,2 Chronic HBV infection exists in as many as 2.2 million Americans, and in 2015 alone, HBV was estimated to be associated with 887,000 deaths worldwide.1,3 Suppression of viral load is the basis of treatment, necessitating long-term use of medication.4 Nucleoside reverse transcriptase inhibitors (entecavir, lamivudine, telbivudine) and nucleotide reverse transcriptase inhibitors (adefovir, tenofovir) have improved the efficacy and tolerability of chronic HBV treatment compared with interferon-based agents.4-7 However, concerns remain regarding long-term risk of nephrotoxicity, in particular with tenofovir disoproxil fumarate (TDF), which could limit the safe and effective options for certain populations.5,6,8 A newer formulation, tenofovir alafenamide fumarate (TAF), has reduced the kidney risks, but expense remains a limiting factor for this agent.9

Nucleos(t)ide reverse transcriptase inhibitors (NRTIs) have demonstrated efficacy in reducing HBV viral load and other markers of improvement in chronic HBV, but entecavir and tenofovir have tended to demonstrate greater efficacy in clinical trials.5-7 Several studies have suggested potential benefits of tenofovir-based treatment over other NRTIs, including greater viral load suppression compared with adefovir, efficacy in patients with previous failure of lamivudine or adefovir, and long-term efficacy in chronic HBV infection.10-12 A 2019 systematic review suggests TDF and TAF are more effective than other NRTIs for achieving viral load suppression.13 Other NRTIs are not without their own risks, including mitochondrial dysfunction, mostly with lamivudine and telbivudine.4

Despite these data, guidelines have varied in their treatment recommendations in the context of chronic kidney disease, partly due to variations in the evidence regarding nephrotoxicity.7,14 Cohort studies and case reports have suggested an association between TDF and acute kidney injury in patients with HIV infection as well as long-term reductions in kidney function.15,16 In one study, 58% of patients treated with TDF did not return to baseline kidney function after an event of acute kidney injury.17 However, few data are available on whether this association exists for chronic HBV treatment in the absence of HIV infection. One retrospective analysis comparing TDF and entecavir in chronic HBV without HIV showed greater incidence of creatinine clearance < 60 mL/min with TDF but greater incidence of serum creatinine (SCr) ≥ 2.5 mg/dL in the entecavir group, making it difficult to reach a clear conclusion on risks.18 Other studies have either suffered from small cohorts with TDF or included patients with HIV coinfection.19,20 Although a retrospective comparison of TDF and entecavir, randomly matched 1:2 to account for differences between groups, showed lower estimated glomerular filtration rate (eGFR) in the TDF group, more data are needed.21 Entecavir remains an option for many patients, but for those who have failed nucleosides, few options remain.

With the advantages available from TDF and the continued expense of TAF, more data regarding the risks of nephrotoxicity with TDF would be beneficial. The objective of this study was to compare treatment with TDF and other NRTIs in chronic HBV monoinfection to distinguish any differences in kidney function changes over time. With hopes of gathering enough data to distinguish between groups, information was gathered from across the Veterans Health Administration (VHA) system.

Methods

A nationwide, multicenter, retrospective cohort study of veterans with HBV infection was conducted to compare the effects of various NRTIs on renal function. Patients were identified through the US Department of Veterans Affairs Corporate Data Warehouse (CDW), using data from July 1, 2005, to July 31, 2015. Patients were included if they had a positive HBV surface antigen (HBsAg) test or a newly prescribed NRTI. Multiple drug episodes could be included for each patient; that is, if a previously included patient had another instance of a newly prescribed NRTI, that episode also was included in the analysis. Exclusion criteria were age < 18 years, NRTI prescription for ≤ 1 month, and concurrent HIV infection. All patients with positive HBsAg were captured to increase the sensitivity of patient identification; however, they were included in the analysis only if they received an NRTI concurrent with the laboratory test results used for the primary endpoint (ie, SCr).

The way data are retrieved from the CDW bears some explanation. Queries take the form of questions such as “for X population, at this point in time, was the patient on Y drug and what was the SCr value?” Inclusion and exclusion criteria must therefore be specified first to define the population, after which specific data points can be retrieved according to the specifications made. For this reason, it is not possible to determine, for example, whether a given patient continued TDF for the duration of the study; drug exposure is known only at the defined time points (described below) at which data were retrieved.

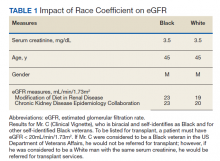

For the patients included, information was retrieved from the first receipt of the NRTI prescription to 36 months after initiation. Baseline characteristics included age, sex, race, and ethnicity, and were defined at the time of NRTI initiation. Values for SCr were compared at baseline and at 3, 6, 12, 24, and 36 months after prescription of the NRTI. Each laboratory result was assigned to the nearest comparison date. Values for eGFR were determined by the Modification of Diet in Renal Disease (MDRD) equation. Values for eGFR are available in the CDW, whereas there is no direct means to calculate creatinine clearance with the available data, so eGFR was used for this study.
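For readers unfamiliar with the MDRD calculation, the following is a minimal Python sketch of the commonly cited 4-variable form. It is an illustration only: the study took eGFR values directly from the CDW, and the text does not say which version of the equation the CDW applies. The coefficient 175 assumes an IDMS-traceable creatinine assay (the original equation used 186), and the function name and arguments are hypothetical.

```python
def egfr_mdrd(scr_mg_dl: float, age_years: float, female: bool, black: bool) -> float:
    """Estimate GFR (mL/min/1.73 m2) with the 4-variable MDRD equation.

    Assumes the IDMS-traceable coefficient (175); this is an assumption,
    not something stated in the article.
    """
    if scr_mg_dl <= 0 or age_years <= 0:
        raise ValueError("serum creatinine and age must be positive")
    egfr = 175.0 * scr_mg_dl ** -1.154 * age_years ** -0.203
    if female:
        egfr *= 0.742   # sex adjustment factor
    if black:
        egfr *= 1.212   # race adjustment factor in the original equation
    return egfr


# Hypothetical patient, not drawn from the study data.
print(round(egfr_mdrd(scr_mg_dl=1.0, age_years=62, female=False, black=False), 1))
```

Because creatinine enters with a negative exponent, a rise in SCr at a fixed age always lowers the estimate, which is why serial SCr values are enough to track eGFR over time.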

The primary endpoint was a change in eGFR in patients taking TDF after adjustment for time with the full cohort. Secondary analyses included the overall effect of time for the full cohort and change in renal function for each NRTI group. Mean and standard deviation for eGFR were determined for each NRTI group using the available data points. Analyses of the primary and secondary endpoints were completed using a linear mixed model with terms for time, to account for fixed effects, and specific NRTI used to account for random effects. A 2-sided α of .05 was used to determine statistical significance.
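One way to write down a model of the kind described above is shown here as a hedged sketch, not the authors' exact specification. It assumes time enters as a fixed effect and the NRTI received enters as a random intercept; all symbols are introduced here for illustration.

```latex
\[
\mathrm{eGFR}_{ij} = \beta_0 + \beta_1\, t_{ij} + u_{g(i)} + \varepsilon_{ij},
\qquad
u_{g} \sim \mathcal{N}(0, \sigma_u^2),
\quad
\varepsilon_{ij} \sim \mathcal{N}(0, \sigma^2)
\]
```

Here $i$ indexes drug episodes, $j$ indexes measurement time points, $t_{ij}$ is time since NRTI initiation, $g(i)$ is the NRTI received in episode $i$, $\beta_1$ is the fixed effect of time, and $u_{g}$ is the drug-specific deviation after accounting for time.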

Results

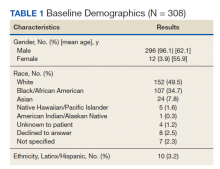

A total of 413 drug episodes from 308 subjects met inclusion criteria for the study. Of these subjects, 229 were still living at the time of query. Most study participants were male (96%); the mean age was 62.1 years for males and 55.9 years for females. In total, 49.5% were White and 39.7% were Black veterans (Table 1).

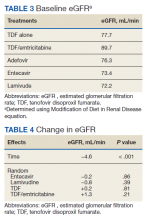

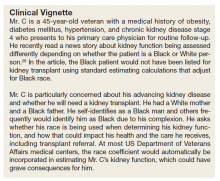

The NRTIs received by patients during the study period included TDF, TDF/emtricitabine, adefovir, entecavir, and lamivudine. No patients were on telbivudine. Formulations including TAF had not been approved by the US Food and Drug Administration (FDA) by the end of the study period, and as such were not found in the study.13 A plurality of participants received entecavir (94 of 223 at baseline), followed by TDF (n = 38) (Table 2). Of note, only 8 participants received TDF/emtricitabine at baseline. Differences were found between the groups in the number of SCr data points available at 36 months vs baseline. The TDF group had the greatest reduction in data points available, with 38 laboratory values at baseline vs 15 at 36 months (39.5% of baseline). From the available data, it is not possible to determine whether these represent medication discontinuations, missing values, loss to follow-up, or some other cause. Baseline eGFR was highest in the 2 TDF groups, with TDF alone at 77.7 mL/min (1.4-5.5 mL/min higher than the nontenofovir groups) and TDF/emtricitabine at 89.7 mL/min (13.4-17.5 mL/min higher than nontenofovir groups) (Table 3).
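The share of baseline data points still available at 36 months can be checked with a few lines of Python. This is a minimal sketch using only the two TDF counts quoted above; the function name is illustrative.

```python
def percent_retained(baseline_n: int, followup_n: int) -> float:
    """Return follow-up data points as a percentage of baseline data points."""
    if baseline_n <= 0:
        raise ValueError("baseline count must be positive")
    return 100.0 * followup_n / baseline_n


# TDF group: 38 SCr values at baseline, 15 at 36 months (counts from the text).
tdf_retained = percent_retained(38, 15)
print(f"{tdf_retained:.1f}% of baseline")  # prints "39.5% of baseline"
```

The same function can be applied to the other NRTI groups once their counts are read from Table 2.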

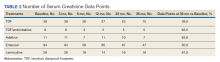

Table 4 contains data for the primary and secondary analyses, examining change in eGFR. The fixed-effects analysis revealed a significant negative association between eGFR and time of −4.6 mL/min (P < .001) for all the NRTI groups combined. After accounting for this effect of time, there was no statistically significant correlation between use of TDF and change in eGFR (+0.2 mL/min, P = .81). For the TDF/emtricitabine group, a positive but statistically nonsignificant change was found (+1.3 mL/min, P = .21), but numbers were small and may have been insufficient to detect a difference. Similarly, no statistically significant change in eGFR was found after the fixed effects for either entecavir (−0.2 mL/min, P = .86) or lamivudine (−0.8 mL/min, P = .39). Although the adefovir group was included in the full analysis for fixed effects, random effects data were not obtained for this group because the heterogeneity and small quantity of the data produced an unclear result.

Discussion

This study demonstrated a decline in eGFR over time in a similar fashion for all NRTIs used in patients treated for HBV monoinfection, but no greater decline in renal function was found with use of TDF vs other NRTIs. A statistically significant decline in eGFR of −4.55 mL/min over the 36-month time frame of the study was demonstrated for the full cohort, but no statistically significant change in eGFR was found for any individual NRTI after accounting for the fixed effect of time. If TDF is not associated with additional risk of nephrotoxicity compared with other NRTIs, this could have important implications for treatment when considering the evidence that tenofovir-based treatment seems to be more effective than other medications for suppressing viral load.13

This result runs contrary to data in patients given NRTIs for HIV infection as well as a more recent cohort study in chronic HBV infection, which showed a statistically significant difference in kidney dysfunction between TDF and entecavir (−15.73 vs −5.96 mL/min/m2, P < .001).5-7,21 A possible mechanism for the differences in response between patients with HIV and patients with HBV has not been elucidated, but the inherent risk of developing chronic kidney disease from HIV disease may play a role.22 The possibility remains that all NRTIs cause a degree of kidney impairment in patients treated for chronic HBV infection, as evidenced by the statistically significant fixed effect for time in the present study. The cause of this effect is unknown but may be independently related to HBV infection or may be specific to NRTI therapy. No control group of patients not receiving NRTI therapy was included in this study, so conclusions cannot be drawn regarding whether all NRTIs are associated with decline in renal function in chronic HBV infection.

Limitations

Although this study did not detect a difference in change in eGFR between TDF and other NRTI treatments, it is possible that the length of data collection was not adequate to account for possible kidney injury from TDF. A study assessing renal tubular dysfunction in patients receiving adefovir or TDF showed a mean onset of dysfunction of 49 months.15 It is possible that participants in this study would go on to develop renal dysfunction in the future. This potential also was observed in a more recent retrospective cohort study in chronic HBV infection, which showed the greatest degree of decline in kidney function between 36 and 48 months (−11.87 to −15.73 mL/min/m2 for the TDF group).21

The retrospective design created additional limitations. We attempted to account for some by using a matched cohort for the entecavir group, and there was no statistically significant difference between the groups in baseline characteristics. In HIV patients, a 10-year follow-up study continued to show decline in eGFR throughout the study, though the greatest degree of reduction occurred in the first year of the study.10 The higher baseline eGFR of the TDF recipients, 77.7 mL/min for the TDF alone group and 89.7 mL/min for the TDF/emtricitabine group vs 72.2 to 76.3 mL/min in the other NRTI groups, suggests high potential for selection bias. Some health care providers were likely to avoid TDF in patients with lower eGFR due to the data suggesting nephrotoxicity in other populations. Another limitation is that the reason for the missing laboratory values could not be determined. The TDF group had the greatest disparity in SCr data availability at baseline vs 36 months, with 39.5% of baseline values available at 36 months for TDF alone compared with 50.0% to 63.6% in the other groups. Other treatment received outside the VHA system also could have influenced results.

Conclusions

This retrospective, multicenter, cohort study did not find a difference between TDF and other NRTIs for changes in renal function over time in patients with HBV infection without HIV. There was a fixed effect for time, ie, all NRTI groups showed some decline in renal function over time (−4.6 mL/min), but the effects were similar across groups. The results appear contrary to studies with comorbid HIV showing a decline in renal function with TDF, but present studies in HBV monotherapy have mixed results.

Further studies are needed to validate these results, as this and previous studies have several limitations. If these results are confirmed, a possible mechanism for these differences between patients with and without HIV should be examined. In addition, a study looking specifically at incidence of acute kidney injury rather than overall decline in renal function would add important data. If the results of this study are confirmed, there could be clinical implications for the choice of agent in the treatment of HBV monoinfection. This would add to the overall armamentarium of medications available for chronic HBV infection and could create cost savings in certain situations if providers feel more comfortable continuing to use TDF instead of switching to the more expensive TAF.

Acknowledgments

Funding for this study was provided by the Veterans Health Administration.

1. Chartier M, Maier MM, Morgan TR, et al. Achieving excellence in hepatitis B virus care for veterans in the Veterans Health Administration. Fed Pract. 2018;35(suppl 2):S49-S53.

2. Chayanupatkul M, Omino R, Mittal S, et al. Hepatocellular carcinoma in the absence of cirrhosis in patients with chronic hepatitis B virus infection. J Hepatol. 2017;66(2):355-362. doi:10.1016/j.jhep.2016.09.013

3. World Health Organization. Global hepatitis report, 2017. Published April 19, 2017. Accessed July 15, 2021. https://www.who.int/publications/i/item/global-hepatitis-report-2017

4. Kayaaslan B, Guner R. Adverse effects of oral antiviral therapy in chronic hepatitis B. World J Hepatol. 2017;9(5):227-241. doi:10.4254/wjh.v9.i5.227

5. Lampertico P, Chan HL, Janssen HL, Strasser SI, Schindler R, Berg T. Review article: long-term safety of nucleoside and nucleotide analogues in HBV-monoinfected patients. Aliment Pharmacol Ther. 2016;44(1):16-34. doi:10.1111/apt.13659

6. Pipili C, Cholongitas E, Papatheodoridis G. Review article: nucleos(t)ide analogues in patients with chronic hepatitis B virus infection and chronic kidney disease. Aliment Pharmacol Ther. 2014;39(1):35-46. doi:10.1111/apt.12538

7. Terrault NA, Bzowej NH, Chang KM, et al. AASLD guidelines for treatment of chronic hepatitis B. Hepatology. 2016;63(1):261-283. doi:10.1002/hep.28156

8. Gupta SK. Tenofovir-associated Fanconi syndrome: review of the FDA adverse event reporting system. AIDS Patient Care STDS. 2008;22(2):99-103. doi:10.1089/apc.2007.0052

9. Canadian Agency for Drugs and Technologies in Health. Pharmacoeconomic review report: tenofovir alafenamide (Vemlidy): (Gilead Sciences Canada, Inc.): indication: treatment of chronic hepatitis B in adults with compensated liver disease. Published April 2018. Accessed July 15, 2021. https://www.ncbi.nlm.nih.gov/books/NBK532825/

10. Marcellin P, Heathcote EJ, Buti M, et al. Tenofovir disoproxil fumarate versus adefovir dipivoxil for chronic hepatitis B. N Engl J Med. 2008;359(23):2442-2455. doi:10.1056/NEJMoa0802878

11. van Bömmel F, de Man RA, Wedemeyer H, et al. Long-term efficacy of tenofovir monotherapy for hepatitis B virus-monoinfected patients after failure of nucleoside/nucleotide analogues. Hepatology. 2010;51(1):73-80. doi:10.1002/hep.23246

12. Gordon SC, Krastev Z, Horban A, et al. Efficacy of tenofovir disoproxil fumarate at 240 weeks in patients with chronic hepatitis B with high baseline viral load. Hepatology. 2013;58(2):505-513. doi:10.1002/hep.26277

13. Wong WWL, Pechivanoglou P, Wong J, et al. Antiviral treatment for treatment-naïve chronic hepatitis B: systematic review and network meta-analysis of randomized controlled trials. Syst Rev. 2019;8(1):207. Published 2019 Aug 19. doi:10.1186/s13643-019-1126-1

14. Han Y, Zeng A, Liao H, Liu Y, Chen Y, Ding H. The efficacy and safety comparison between tenofovir and entecavir in treatment of chronic hepatitis B and HBV related cirrhosis: A systematic review and meta-analysis. Int Immunopharmacol. 2017;42:168-175. doi:10.1016/j.intimp.2016.11.022

15. Laprise C, Baril JG, Dufresne S, Trottier H. Association between tenofovir exposure and reduced kidney function in a cohort of HIV-positive patients: results from 10 years of follow-up. Clin Infect Dis. 2013;56(4):567-575. doi:10.1093/cid/cis937

16. Hall AM, Hendry BM, Nitsch D, Connolly JO. Tenofovir-associated kidney toxicity in HIV-infected patients: a review of the evidence. Am J Kidney Dis. 2011;57(5):773-780. doi:10.1053/j.ajkd.2011.01.022

17. Veiga TM, Prazeres AB, Silva D, et al. Tenofovir nephrotoxicity is an important cause of acute kidney injury in HIV infected inpatients. Abstract FR-PO481 presented at: American Society of Nephrology Kidney Week 2015; November 6, 2015; San Diego, CA.

18. Tan LK, Gilleece Y, Mandalia S, et al. Reduced glomerular filtration rate but sustained virologic response in HIV/hepatitis B co-infected individuals on long-term tenofovir. J Viral Hepat. 2009;16(7):471-478. doi:10.1111/j.1365-2893.2009.01084.x

19. Gish RG, Clark MD, Kane SD, Shaw RE, Mangahas MF, Baqai S. Similar risk of renal events among patients treated with tenofovir or entecavir for chronic hepatitis B. Clin Gastroenterol Hepatol. 2012;10(8):941-e68. doi:10.1016/j.cgh.2012.04.008

20. Gara N, Zhao X, Collins MT, et al. Renal tubular dysfunction during long-term adefovir or tenofovir therapy in chronic hepatitis B. Aliment Pharmacol Ther. 2012;35(11):1317-1325. doi:10.1111/j.1365-2036.2012.05093.x

21. Tsai HJ, Chuang YW, Lee SW, Wu CY, Yeh HZ, Lee TY. Using the chronic kidney disease guidelines to evaluate the renal safety of tenofovir disoproxil fumarate in hepatitis B patients. Aliment Pharmacol Ther. 2018;47(12):1673-1681. doi:10.1111/apt.14682

22. Szczech LA, Gupta SK, Habash R, et al. The clinical epidemiology and course of the spectrum of renal diseases associated with HIV infection. Kidney Int. 2004;66(3):1145-1152. doi:10.1111/j.1523-1755.2004.00865.x

Infection with hepatitis B virus (HBV) is associated with risk of potentially lethal, chronic infection and is a major public health problem. Infection from HBV has the potential to lead to liver failure, cirrhosis, and cancer.1,2 Chronic HBV infection exists in as many as 2.2 million Americans, and in 2015 alone, HBV was estimated to be associated with 887,000 deaths worldwide.1,3 Suppression of viral load is the basis of treatment, necessitating long-term use of medication.4 Nucleoside reverse transcriptase inhibitors (entecavir, lamivudine, telbivudine) and nucleotide reverse transcriptase inhibitors (adefovir, tenofovir) have improved the efficacy and tolerability of chronic HBV treatment compared with interferon-based agents.4-7 However, concerns remain regarding long-term risk of nephrotoxicity, in particular with tenofovir disoproxil fumarate (TDF), which could limit the safe and effective options for certain populations.5,6,8 A newer formulation, tenofovir alafenamide fumarate (TAF), has reduced the kidney risks, but expense remains a limiting factor for this agent.9

Nucleos(t)ide reverse transcriptase inhibitors (NRTIs) have demonstrated efficacy in reducing HBV viral load and other markers of improvement in chronic HBV, but entecavir and tenofovir have tended to demonstrate greater efficacy in clinical trials.5-7 Several studies have suggested potential benefits of tenofovir-based treatment over other NRTIs, including greater viral load suppression compared with adefovir, efficacy in patients with previous failure of lamivudine or adefovir, and long-term efficacy in chronic HBV infection.10-12 A 2019 systematic review suggests TDF and TAF are more effective than other NRTIs for achieving viral load suppression.13 Other NRTIs are not without their own risks, including mitochondrial dysfunction, mostly with lamivudine and telbivudine.4

Despite these data, guidelines have varied in their treatment recommendations in the context of chronic kidney disease, partly due to variations in the evidence regarding nephrotoxicity.7,14 Cohort studies and case reports have suggested an association between TDF and acute kidney injury in patients with HIV infection as well as long-term reductions in kidney function.15,16 In one study, 58% of patients treated with TDF did not return to baseline kidney function after an event of acute kidney injury.17 However, few data are available on whether this association exists for chronic HBV treatment in the absence of HIV infection. One retrospective analysis comparing TDF and entecavir in chronic HBV without HIV showed greater incidence of creatinine clearance < 60 mL/min with TDF but greater incidence of serum creatinine (SCr) ≥ 2.5 mg/dL in the entecavir group, making it difficult to reach a clear conclusion on risks.18 Other studies have either suffered from small cohorts with TDF or included patients with HIV coinfection.19,20 Although a retrospective comparison of TDF and entecavir, randomly matched 1:2 to account for differences between groups, showed lower estimated glomerular filtration rate (eGFR) in the TDF group, more data are needed.21 Entecavir remains an option for many patients, but for those who have failed nucleosides, few options remain.

With the advantages available from TDF and the continued expense of TAF, more data regarding the risks of nephrotoxicity with TDF would be beneficial. The objective of this study was to compare treatment with TDF and other NRTIs in chronic HBV monoinfection to distinguish any differences in kidney function changes over time. With hopes of gathering enough data to distinguish between groups, information was gathered from across the Veterans Health Administration (VHA) system.

Methods