New NAS report seeks to modernize STI paradigm

Approximately 68 million cases of sexually transmitted infections are reported in the United States each year, yet antiquated approaches to STI prevention, in addition to health care inequities and lack of funding, have substantially prevented providers and officials from curbing the spread. In response to rising case numbers, the National Academies of Sciences, Engineering, and Medicine released a report this week with recommendations to modernize the nation’s STI surveillance and monitoring systems, increase the capabilities of the STI workforce, and address structural barriers to STI prevention and access to care.

Given the rising rates of STIs and the urgent, unmet need for prevention and treatment, the Centers for Disease Control and Prevention, through the National Association of County and City Health Officials, commissioned the National Academies to develop actionable recommendations to control STIs. The new report marks a milestone on the long road toward public willingness to discuss STDs, which a 1997 Institute of Medicine report described as a “hidden epidemic” that had been largely neglected in public discourse.

Jeffrey Crowley, MPH, committee member and an author of the new National Academies report, said in an interview that, despite the increased openness to discuss STIs in today’s society, STD rates since the late 1990s have gotten much worse. Lack of appropriate governmental funding for research and drug development, structural inequities, and persisting stigmatization are key drivers for rising rates, explained Mr. Crowley.

Addressing structural barriers to STI prevention

Playing a prominent role in the National Academies report are issues of structural and institutional barriers to STI prevention and care. In the report, the authors argued that a policy-based approach should seek to promote sexual health and eliminate structural racism and inequities to drive improvements in STI management.

“We think it’s these structural factors that are central to all the inequities that play out,” said Mr. Crowley, “and they either don’t get any attention or, if they do get attention, people don’t really speak concretely enough about how we address them.”

The concrete steps, as outlined in the report, begin with addressing factors that involve the health care industry at large. Automatic STI screening as part of routine visits, alerts in electronic health records that remind clinicians to screen patients, and reminders to test patients can be initial low-cost actions health care systems can take to improve STI testing, particularly in marginalized communities. Mr. Crowley noted that greater evidence is needed to support further steps to address structural factors that contribute to barriers in STI screening and treatment access.

Given the complexities inherent in structural barriers to STI care, the report calls for a whole-of-government response, in partnership with affected communities, to normalize discussions involving sexual well-being. “We have to ask ourselves how we can build healthier communities and how can we integrate sexual health into that dialogue in a way that improves our response to STI prevention and control,” Mr. Crowley explained.

Harnessing AI and dating apps

The report also addresses the power of artificial intelligence to predict STI rates and to discover trends in risk factors, both of which may improve STI surveillance and assist in the development of tailored interventions. The report calls for policy that will enable companies and the government to capitalize on AI to evaluate large collections of data in EHRs, insurance claims databases, social media, search engines, and even dating apps.

In particular, dating apps could be an avenue through which the public and private sectors could improve STI prevention, diagnosis, and treatment. “People want to focus on this idea of whether these apps increase transmission risk,” said Mr. Crowley. “But we would say that this is asking the wrong question, because these technologies are not going away.” He noted that private and public enterprises could work together to leverage these technologies to increase awareness of prevention and testing.

Unifying the STI/HIV and COVID-19 workforce

The report also recommends that the nation unify the STI/HIV workforce with the COVID-19 workforce. Given the high levels of expertise in these professional working groups, the report suggests unification could potentially address both the current crisis and possible future disease outbreaks. Combining COVID-19 response teams with underresourced STI/HIV programs may also improve the delivery of STI testing, considering that STI testing programs have had to compete for resources during the pandemic.

Addressing stigma

The National Academies report also addresses the ongoing issue of stigma, which results from “blaming” individuals and the choices they make so as to create shame, embarrassment, and discrimination. Because of stigma, sexually active people may be unwilling to seek recommended screening, which can lead to delays in diagnosis and treatment and can increase the risk for negative health outcomes.

“As a nation, we’ve almost focused too intently on individual-level factors in a way that’s driven stigma and really hasn’t been helpful for combating the problem,” said Mr. Crowley. He added that, rather than focusing solely on individual-level choices, the nation should work to reframe sexual health as a key aspect of overall physical, mental, and emotional well-being. Doing so could create more opportunities to address structural barriers to STI prevention and ensure that more prevention and screening services are available in stigma-free environments.

“I know what we’re recommending is ambitious, but it’s not too big to be achieved, and we’re not saying tomorrow we’re going to transform the world,” Mr. Crowley concluded. “It’s a puzzle with many pieces, but the long-term impact is really all of these pieces fitting together so that, over time, we can reduce the burden STIs have on the population.”

Implications for real-world change

H. Hunter Handsfield, MD, professor emeritus of medicine for the Center for AIDS and STD at the University of Washington, Seattle, said in an interview that this report essentially is a response to evolving societal changes, new and emerging means of social engagement, and increased focus on racial/ethnic disparities. “These features have all come to the forefront of health care and general policy discussions in recent years,” said Dr. Handsfield, who was not part of the committee that developed the NAS report.

Greater scrutiny of public health infrastructure and its relationship with health disparities in the United States makes the publication of these new recommendations especially appropriate during this era of enhanced focus on social justice. Although the report features the tone and quality needed to bolster bipartisan support, said Dr. Handsfield, it’s hard to predict whether such support will come to fruition in today’s political environment.

In terms of the effects the recommendations may have on STI rates, Dr. Handsfield noted that cherry-picking elements from the report to direct policy may result in its having only a trivial impact. “The report is really an appropriate and necessary response, and almost all the recommendations made can be helpful,” he said, “but for true effectiveness, all the elements need to be implemented to drive policy and funding.”

A version of this article first appeared on Medscape.com.

The significance of mismatch repair deficiency in endometrial cancer

Women with Lynch syndrome are known to carry an approximately 60% lifetime risk of endometrial cancer. These cancers result from inherited deleterious mutations in genes that code for mismatch repair proteins. However, mismatch repair deficiency (MMR-d) is not exclusively found in the tumors of patients with Lynch syndrome, and much is being learned about this group of endometrial cancers, their behavior, and their vulnerability to targeted therapies.

During the processes of DNA replication, recombination, or chemical and physical damage, mismatches in base pairs frequently occur. Mismatch repair proteins function to identify and repair such errors, and the loss of their function causes the accumulation of insertions or deletions of short, repetitive sequences of DNA. This phenomenon can be measured using polymerase chain reaction (PCR) screening of known microsatellites to look for the accumulation of errors, a phenotype called microsatellite instability (MSI). The accumulation of errors in DNA sequences is thought to lead to mutations in cancer-related genes.

The four predominant mismatch repair genes are MLH1, MSH2, MSH6, and PMS2. These genes may lose function through a germline/inherited mechanism, such as Lynch syndrome, or the loss can be sporadically acquired. Approximately 20%-30% of endometrial cancers exhibit MMR-d, with acquired, sporadic losses in function accounting for the majority of cases and only approximately 10% resulting from Lynch syndrome. Mutations in MSH2 are the dominant genotype of Lynch syndrome, whereas loss of function in MLH1 is the most frequent aberration in sporadic cases of MMR-d endometrial cancer.1

Endometrial cancers can be tested for MMR-d by performing immunohistochemistry to look for loss of expression of the four principal MMR proteins. If there is loss of expression of MLH1, additional triage testing can be performed to determine whether this loss is caused by the epigenetic phenomenon of promoter hypermethylation. When present, hypermethylation excludes Lynch syndrome and suggests a sporadic origin of the disease. If there is loss of expression of the MMR proteins (including loss of MLH1 with subsequent negative testing for promoter methylation), the patient should receive genetic testing for the presence of a germline mutation indicating Lynch syndrome. As an adjunct or alternative to immunohistochemistry, PCR studies or next-generation sequencing can be used to detect microsatellite instability by identifying the expansion or reduction of repetitive DNA sequences in the tumor, compared with normal tissue.2
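The triage sequence described above can be sketched as a small decision function. This is only an illustrative sketch of the logic as stated in the text, not a clinical tool or a published algorithm; the names `IhcResult`, `triage`, and the returned labels are hypothetical and were chosen for this example.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional

# The four mismatch repair proteins assessed by immunohistochemistry (IHC).
MMR_PROTEINS = frozenset({"MLH1", "MSH2", "MSH6", "PMS2"})

# Hypothetical outcome labels for this sketch.
INTACT = "MMR intact on IHC"
NEEDS_METHYLATION_TEST = "test MLH1 promoter methylation"
SPORADIC = "sporadic MMR-d (MLH1 promoter hypermethylation)"
GERMLINE_TESTING = "MMR-d: offer germline testing for Lynch syndrome"


@dataclass(frozen=True)
class IhcResult:
    # Proteins showing loss of expression on IHC.
    lost: FrozenSet[str]
    # None means the methylation assay has not been performed yet.
    mlh1_promoter_hypermethylated: Optional[bool] = None


def triage(result: IhcResult) -> str:
    """Return the next step suggested by the triage logic in the text."""
    unknown = result.lost - MMR_PROTEINS
    if unknown:
        raise ValueError(f"unrecognized protein(s): {sorted(unknown)}")
    if not result.lost:
        return INTACT
    if "MLH1" in result.lost:
        if result.mlh1_promoter_hypermethylated is None:
            return NEEDS_METHYLATION_TEST
        if result.mlh1_promoter_hypermethylated:
            # Per the text, hypermethylation points to a sporadic origin.
            return SPORADIC
    # Loss without an epigenetic explanation: evaluate for a germline cause.
    return GERMLINE_TESTING


print(triage(IhcResult(lost=frozenset({"MLH1"}))))
print(triage(IhcResult(lost=frozenset({"MSH6"}))))
```

The function keeps the methylation step separate from the germline referral so that an MLH1 loss is never sent for germline testing before the epigenetic explanation has been checked, which mirrors the order given in the paragraph above.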

It is of the highest importance to identify endometrial cancers caused by Lynch syndrome because this enables providers to offer cascade testing of relatives, and to intensify screening or preventative measures for the many other cancers (such as colon, upper gastrointestinal, breast, and urothelial) for which these patients are at risk. Therefore, routine screening for MMR-d tumors is recommended in all cases of endometrial cancer, not simply those of a young age at diagnosis or for whom a strong family history exists.3 Using family history factors, primary tumor site, and age as a trigger for Lynch syndrome screening, as in the Bethesda Guidelines, is associated with an 82% sensitivity in identifying Lynch syndrome. In a meta-analysis including testing results from 1,159 women with endometrial cancer, 43% of patients who were diagnosed with Lynch syndrome via molecular analysis would have been missed by clinical screening using Bethesda Guidelines.2

Discovering cases of Lynch syndrome is not the only benefit of routine testing for MMR-d in endometrial cancers. There is also significant value in characterizing sporadic mismatch repair–deficient tumors because this provides prognostic information and guides therapy. Tumors with a microsatellite-high phenotype/MMR-d were identified as one of the four distinct molecular subgroups of endometrial cancer by The Cancer Genome Atlas.4 Patients with this molecular profile exhibited “intermediate” prognostic outcomes, performing better than those with “serous-like” cancers with p53 mutations, yet worse than patients in the POLE ultramutated group, who rarely experience recurrences or death, even in the setting of unfavorable histology.

Beyond prognostication, the molecular profile of endometrial cancers also influences their responsiveness to therapeutics, highlighting the importance of splitting, not lumping, endometrial cancers into relevant molecular subgroups when designing research and practicing clinical medicine. The PORTEC-3 trial studied 410 women with high-risk endometrial cancer, and randomized participants to receive either adjuvant radiation alone, or radiation with chemotherapy.5 There were no differences in progression-free survival between the two therapeutic strategies when analyzed in aggregate. However, when analyzed by Cancer Genome Atlas molecular subgroup, there was a clear benefit from chemotherapy for patients with p53 mutations. For patients with MMR-d tumors, no such benefit was observed. Patients assigned to this molecular subgroup did no better with the addition of platinum and taxane chemotherapy than with radiation alone. Unfortunately, for patients with MMR-d tumors, recurrence rates remained high, suggesting that we can and need to discover more effective therapies for these tumors than what is available with conventional radiation or platinum and taxane chemotherapy.

Targeted therapy may be the solution to this problem. Through microsatellite instability, MMR-d tumors accumulate somatic mutations, which result in neoantigens and an immunogenic environment. This state up-regulates checkpoint inhibitor proteins, which serve as an actionable target for anti-PD-1 antibodies, such as the drug pembrolizumab, which has been shown to be highly active against MMR-d endometrial cancer. In the landmark KEYNOTE-158 trial, patients with advanced, recurrent solid tumors that exhibited MMR-d were treated with pembrolizumab.6 This included 49 patients with endometrial cancer, among whom there was a 57% response rate. Subsequently, pembrolizumab was granted Food and Drug Administration approval for use in advanced, recurrent MMR-d/MSI-high endometrial cancer. Trials are currently enrolling patients to explore the utility of this drug in the up-front setting in both early- and late-stage disease, with the hope that this targeted therapy can do what conventional cytotoxic chemotherapy has failed to do.

Therefore, given the clinical significance of mismatch repair deficiency, all patients with endometrial cancer should be investigated for loss of expression in these proteins, and if present, considered for the possibility of Lynch syndrome. While most will not have an inherited cause, this information regarding their tumor biology remains critically important in both prognostication and decision-making surrounding other therapies and their eligibility for promising clinical trials.

Dr. Rossi is assistant professor in the division of gynecologic oncology at the University of North Carolina at Chapel Hill. She has no conflicts of interest to declare. Email her at obnews@mdedge.com.

References

1. Simpkins SB et al. Hum Mol Genet. 1999;8:661-6.

2. Kahn R et al. Cancer. 2019 Sep 15;125(18):3172-83.

3. SGO Clinical Practice Statement: Screening for Lynch Syndrome in Endometrial Cancer. https://www.sgo.org/clinical-practice/guidelines/screening-for-lynch-syndrome-in-endometrial-cancer/

4. Kandoth C et al. Nature. 2013;497(7447):67-73.

5. Leon-Castillo A et al. J Clin Oncol. 2020 Oct 10;38(29):3388-97.

6. Marabelle A et al. J Clin Oncol. 2020 Jan 1;38(1):1-10.

Women with Lynch syndrome are known to carry an approximately 60% lifetime risk of endometrial cancer. These cancers result from inherited deleterious mutations in genes that code for mismatch repair proteins. However, mismatch repair deficiency (MMR-d) is not exclusively found in the tumors of patients with Lynch syndrome, and much is being learned about this group of endometrial cancers, their behavior, and their vulnerability to targeted therapies.

During the processes of DNA replication, recombination, or chemical and physical damage, mismatches in base pairs frequently occurs. Mismatch repair proteins function to identify and repair such errors, and the loss of their function causes the accumulation of the insertions or deletions of short, repetitive sequences of DNA. This phenomenon can be measured using polymerase chain reaction (PCR) screening of known microsatellites to look for the accumulation of errors, a phenotype which is called microsatellite instability (MSI). The accumulation of errors in DNA sequences is thought to lead to mutations in cancer-related genes.

The four predominant mismatch repair genes include MLH1, MSH2, MSH 6, and PMS2. These genes may possess loss of function through a germline/inherited mechanism, such as Lynch syndrome, or can be sporadically acquired. Approximately 20%-30% of endometrial cancers exhibit MMR-d with acquired, sporadic losses in function being the majority of cases and only approximately 10% a result of Lynch syndrome. Mutations in PMS2 are the dominant genotype of Lynch syndrome, whereas loss of function in MLH1 is most frequent aberration in sporadic cases of MMR-d endometrial cancer.1

Endometrial cancers can be tested for MMR-d by performing immunohistochemistry to look for loss of expression of the four most common MMR proteins. If there is loss of expression of MLH1, additional triage testing can be performed to determine whether this loss is caused by the epigenetic phenomenon of promoter hypermethylation. When present, hypermethylation excludes Lynch syndrome and suggests a sporadic origin of the disease. If there is loss of expression of the MMR proteins (including loss of MLH1 with subsequent negative testing for promoter methylation), the patient should receive genetic testing for the presence of a germline mutation indicating Lynch syndrome. As an adjunct or alternative to immunohistochemistry, PCR studies or next-generation sequencing can be used to measure microsatellite instability in a process that identifies the expansion or reduction of repetitive DNA sequences in the tumor, compared with normal tissue.2
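The triage sequence described above can be sketched as a small decision function. This is an illustrative sketch only, not a clinical tool: the function name `triage_mmr_ihc`, its parameters, and the returned labels are hypothetical, and the logic encodes nothing beyond the steps stated in the paragraph (immunohistochemistry first, methylation testing when MLH1 is lost, germline testing otherwise).

```python
# Illustrative sketch of the triage steps described in the text.
# All names and return labels are hypothetical, chosen for this example.

MMR_PROTEINS = ("MLH1", "MSH2", "MSH6", "PMS2")


def triage_mmr_ihc(lost_proteins, mlh1_hypermethylated=None):
    """Return the next step suggested by immunohistochemistry (IHC) results.

    lost_proteins: names of MMR proteins showing loss of expression on IHC.
    mlh1_hypermethylated: True/False once methylation testing has been done,
        or None if it has not been performed yet.
    """
    lost = set(lost_proteins)
    unknown = lost - set(MMR_PROTEINS)
    if unknown:
        raise ValueError(f"unrecognized protein(s): {sorted(unknown)}")

    if not lost:
        # All four proteins are expressed: no MMR deficiency seen on IHC.
        return "no_loss_of_expression"

    if "MLH1" in lost:
        if mlh1_hypermethylated is None:
            # MLH1 loss triggers the additional methylation triage test.
            return "test_mlh1_promoter_methylation"
        if mlh1_hypermethylated:
            # Per the text, hypermethylation suggests a sporadic origin.
            return "sporadic_mmr_deficiency"

    # Loss of any MMR protein, including MLH1 loss with negative
    # methylation testing, leads to germline testing.
    return "germline_testing_for_lynch_syndrome"


if __name__ == "__main__":
    print(triage_mmr_ihc(["MLH1", "PMS2"]))
    print(triage_mmr_ihc(["MLH1", "PMS2"], mlh1_hypermethylated=True))
    print(triage_mmr_ihc(["MSH6"]))
```

The function returns a next step rather than a diagnosis, which mirrors the staged nature of the workup: each result determines only which test, if any, follows.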

It is of the highest importance to identify endometrial cancers caused by Lynch syndrome because this enables providers to offer cascade testing of relatives and to intensify screening or preventive measures for the many other cancers (such as colon, upper gastrointestinal, breast, and urothelial) for which these patients are at risk. Therefore, routine screening for MMR-d tumors is recommended in all cases of endometrial cancer, not simply in patients who are young at diagnosis or who have a strong family history.3 Using family history factors, primary tumor site, and age as a trigger for Lynch syndrome screening, as in the Bethesda Guidelines, is associated with an 82% sensitivity in identifying Lynch syndrome. In a meta-analysis including testing results from 1,159 women with endometrial cancer, 43% of patients who were diagnosed with Lynch syndrome via molecular analysis would have been missed by clinical screening using the Bethesda Guidelines.2

Discovering cases of Lynch syndrome is not the only benefit of routine testing for MMR-d in endometrial cancers. There is also significant value in characterizing sporadic mismatch repair–deficient tumors because this provides prognostic information and guides therapy. Tumors with a microsatellite-high phenotype/MMR-d were identified as one of the four distinct molecular subgroups of endometrial cancer by the Cancer Genome Atlas.4 Patients with this molecular profile exhibited “intermediate” prognostic outcomes, performing better than those with “serous-like” cancers with p53 mutations, yet worse than patients in the POLE ultramutated group, who rarely experience recurrence or death, even in the setting of unfavorable histology.

Beyond prognostication, the molecular profile of endometrial cancers also influences their responsiveness to therapeutics, highlighting the importance of splitting, not lumping, endometrial cancers into relevant molecular subgroups when designing research and practicing clinical medicine. The PORTEC-3 trial studied 410 women with high-risk endometrial cancer and randomized participants to receive either adjuvant radiation alone or radiation with chemotherapy.5 There were no differences in progression-free survival between the two therapeutic strategies when analyzed in aggregate. However, when analyzed by Cancer Genome Atlas molecular subgroup, there was a clear benefit from chemotherapy for patients with p53 mutations. For patients with MMR-d tumors, no such benefit was observed: patients in this molecular subgroup did no better with the addition of platinum and taxane chemotherapy than with radiation alone. Unfortunately, recurrence rates for patients with MMR-d tumors remained high, suggesting that we can and need to discover more effective therapies for these tumors than conventional radiation or platinum and taxane chemotherapy.

Targeted therapy may be the solution to this problem. Through microsatellite instability, MMR-d tumors accumulate somatic mutations that result in neoantigens and an immunogenic environment. This state up-regulates immune checkpoint proteins, which serve as an actionable target for anti-PD-1 antibodies such as pembrolizumab, a drug that has been shown to be highly active against MMR-d endometrial cancer. In the landmark KEYNOTE-158 trial, patients with advanced, recurrent solid tumors that exhibited MMR-d were treated with pembrolizumab.6 This included 49 patients with endometrial cancer, among whom there was a 79% response rate. Subsequently, pembrolizumab was granted Food and Drug Administration approval for use in advanced, recurrent MMR-d/MSI-high endometrial cancer. Trials are currently enrolling patients to explore the utility of this drug in the up-front setting in both early- and late-stage disease, with the hope that this targeted therapy can do what conventional cytotoxic chemotherapy has failed to do.

Therefore, given the clinical significance of mismatch repair deficiency, all patients with endometrial cancer should be investigated for loss of expression in these proteins, and if present, considered for the possibility of Lynch syndrome. While most will not have an inherited cause, this information regarding their tumor biology remains critically important in both prognostication and decision-making surrounding other therapies and their eligibility for promising clinical trials.

Dr. Rossi is assistant professor in the division of gynecologic oncology at the University of North Carolina at Chapel Hill. She has no conflicts of interest to declare. Email her at obnews@mdedge.com.

References

1. Simpkins SB et al. Hum Mol Genet. 1999;8:661-6.

2. Kahn R et al. Cancer. 2019 Sep 15;125(18):3172-83.

3. SGO Clinical Practice Statement: Screening for Lynch Syndrome in Endometrial Cancer. https://www.sgo.org/clinical-practice/guidelines/screening-for-lynch-syndrome-in-endometrial-cancer/

4. Kandoth et al. Nature. 2013;497(7447):67-73.

5. Leon-Castillo A et al. J Clin Oncol. 2020 Oct 10;38(29):3388-97.

6. Marabelle A et al. J Clin Oncol. 2020 Jan 1;38(1):1-10.

Lenvatinib Plus Pembrolizumab Improves Outcomes in Previously Untreated Advanced Clear Cell Renal Cell Carcinoma

Study Overview

Objective. To evaluate the efficacy and safety of lenvatinib in combination with everolimus or pembrolizumab compared with sunitinib alone for the treatment of newly diagnosed advanced clear cell renal cell carcinoma (ccRCC).

Design. Global, multicenter, randomized, open-label, phase 3 trial.

Intervention. Patients were randomized in a 1:1:1 ratio to receive treatment with 1 of 3 regimens: lenvatinib 20 mg daily plus pembrolizumab 200 mg on day 1 of each 21-day cycle; lenvatinib 18 mg daily plus everolimus 5 mg once daily for each 21-day cycle; or sunitinib 50 mg daily for 4 weeks followed by 2 weeks off. Patients were stratified according to geographic region and Memorial Sloan Kettering Cancer Center (MSKCC) prognostic risk group.

Setting and participants. A total of 1417 patients were screened, and 1069 patients underwent randomization between October 2016 and July 2019: 355 patients were randomized to the lenvatinib plus pembrolizumab group, 357 to the lenvatinib plus everolimus group, and 357 to the sunitinib alone group. Eligible patients had a diagnosis of previously untreated advanced renal cell carcinoma with a clear-cell component. All patients were required to have a Karnofsky performance status of at least 70, adequate renal function, and controlled blood pressure with or without antihypertensive medications.

Main outcome measures. The primary endpoint was progression-free survival (PFS) as assessed by an independent review committee using RECIST, version 1.1. Imaging was performed at the time of screening and every 8 weeks thereafter. Secondary endpoints were safety, overall survival (OS), objective response rate, and investigator-assessed PFS. Duration of response was also assessed. Safety and adverse events were assessed during the treatment period and for up to 30 days after the last dose of the trial drug.

Main results. The baseline characteristics were well balanced between the treatment groups. More than 70% of enrolled participants were male. Approximately 60% of participants were MSKCC intermediate risk, 27% were favorable risk, and 9% were poor risk. Patients with a PD-L1 combined positive score of 1% or more represented 30% of the population. The remainder had a PD-L1 combined positive score of <1% (30%) or such data were not available (38%). Liver metastases were present in 17% of patients at baseline in each group, and 70% of patients had a prior nephrectomy. The data cutoff occurred in August 2020 for PFS and the median follow-up for OS was 26.6 months. Around 40% of the participants in the lenvatinib plus pembrolizumab group, 18.8% in the sunitinib group, and 31% in the lenvatinib plus everolimus group were still receiving trial treatment at data cutoff. The leading cause for discontinuing therapy was disease progression. Approximately 50% of patients in the lenvatinib/everolimus group and sunitinib group received subsequent checkpoint inhibitor therapy after progression.

The median PFS in the lenvatinib plus pembrolizumab group was significantly longer than in the sunitinib group, 23.9 months vs 9.2 months (hazard ratio [HR], 0.39; 95% CI, 0.32-0.49; P < 0.001). The median PFS was also significantly longer in the lenvatinib plus everolimus group than in the sunitinib group, 14.7 months vs 9.2 months (HR, 0.65; 95% CI, 0.53-0.80; P < 0.001). The PFS benefit favored the lenvatinib combination groups over sunitinib in all subgroups, including the MSKCC prognostic risk groups. The median OS was not reached with any treatment, with 79% of patients in the lenvatinib plus pembrolizumab group, 66% of patients in the lenvatinib plus everolimus group, and 70% in the sunitinib group still alive at 24 months. Survival was significantly longer in the lenvatinib plus pembrolizumab group than in the sunitinib group (HR, 0.66; 95% CI, 0.49-0.88; P = 0.005). OS favored lenvatinib plus pembrolizumab over sunitinib in both the PD-L1–positive and PD-L1–negative groups. The median duration of response in the lenvatinib plus pembrolizumab group was 25.8 months, compared with 16.6 months and 14.6 months in the lenvatinib plus everolimus and sunitinib groups, respectively. Complete response rates were higher in the lenvatinib plus pembrolizumab group (16%) than in the lenvatinib plus everolimus (9.8%) or sunitinib (4.2%) groups. The median time to response was around 1.9 months in all 3 groups.

The most frequent adverse events seen in all groups were diarrhea, hypertension, fatigue, and nausea. Hypothyroidism was seen more frequently in the lenvatinib plus pembrolizumab group (47%). Grade 3 or higher adverse events were seen in approximately 80% of patients in all groups, the most common being hypertension in all 3 groups. The median time to discontinuation of treatment because of side effects was 8.97 months in the lenvatinib plus pembrolizumab group, 5.49 months in the lenvatinib plus everolimus group, and 4.57 months in the sunitinib group. In the lenvatinib plus pembrolizumab group, 15 patients had grade 5 adverse events; 11 participants had fatal events not related to disease progression. In the lenvatinib plus everolimus group, there were 22 patients with grade 5 events, with 10 fatal events not related to disease progression. In the sunitinib group, 11 patients had grade 5 events, and only 2 fatal events were not linked to disease progression.

Conclusion. The combination of lenvatinib plus pembrolizumab significantly prolongs PFS and OS compared with sunitinib in patients with previously untreated and advanced ccRCC. The median OS has not yet been reached.

Commentary

The results of the current phase 3 CLEAR trial highlight the efficacy and safety of lenvatinib plus pembrolizumab as a first-line treatment in advanced ccRCC. This trial adds to the rapidly growing body of literature supporting the notion that the combination of anti-PD-1-based therapy with either CTLA-4 antibodies or VEGF receptor tyrosine kinase inhibitors (TKIs) improves outcomes in previously untreated patients with advanced ccRCC. Previously presented data from KEYNOTE-426 (pembrolizumab plus axitinib), CheckMate 214 (nivolumab plus ipilimumab), and JAVELIN Renal 101 (avelumab plus axitinib) have also shown improved outcomes with combination therapy in the frontline setting.1-4 While the landscape of therapeutic options in the frontline setting continues to grow, there remains a lack of clarity as to how to tailor therapeutic decisions for specific patient populations. The exception is nivolumab plus ipilimumab, which is currently indicated for IMDC intermediate- or poor-risk patients.

The combination of VEGFR TKI therapy and PD-1 antibodies provides rapid disease control, with a median time to response in the current study of 1.9 months and, generally speaking, a low risk of progression in the first 6 months of therapy. While cross-trial comparisons are always problematic, the PFS reported in this study and others with VEGFR TKI and PD-1 antibody combinations is quite impressive and surpasses that noted in CheckMate 214.3 While the median OS has not yet been reached, the long duration of PFS and the complete response rate of 16% in this study certainly make this an attractive frontline option for newly diagnosed patients with advanced ccRCC. Longer follow-up is needed to confirm the survival benefit noted.

Applications for Clinical Practice

The current data support the use of VEGFR TKI and anti-PD-1 therapy in the frontline setting. How to choose between such combination regimens and combination immunotherapy remains unclear, and further biomarker-based assessments are needed to help guide therapeutic decisions for our patients.

1. Motzer R, Alekseev B, Rha SY, et al. Lenvatinib plus pembrolizumab or everolimus for advanced renal cell carcinoma [published online ahead of print, 2021 Feb 13]. N Engl J Med. 2021. doi:10.1056/NEJMoa2035716

2. Rini BI, Plimack ER, Stus V, et al. Pembrolizumab plus axitinib versus sunitinib for advanced renal-cell carcinoma. N Engl J Med. 2019;380(12):1116-1127.

3. Motzer RJ, Tannir NM, McDermott DF, et al. Nivolumab plus ipilimumab versus sunitinib in advanced renal-cell carcinoma. N Engl J Med. 2018;378(14):1277-1290.

4. Motzer RJ, Penkov K, Haanen J, et al. Avelumab plus axitinib versus sunitinib for advanced renal-cell carcinoma. N Engl J Med. 2019;380(12):1103-1115.


Conclusion. The combination of lenvatinib plus pembrolizumab significantly prolongs PFS and OS compared with sunitinib in patients with previously untreated and advanced ccRCC. The median OS has not yet been reached.

Commentary

The results of the current phase 3 CLEAR trial highlight the efficacy and safety of lenvatinib plus pembrolizumab as a first-line treatment in advanced ccRCC. This trial adds to the rapidly growing body of literature supporting the notion that the combination of anti-PD-1 based therapy with either CTLA-4 antibodies or VEGF receptor tyrosine kinase inhibitors (TKI) improves outcomes in previously untreated patients with advanced ccRCC. Previously presented data from Keynote-426 (pembrolizumab plus axitinib), Checkmate-214 (nivolumab plus ipilimumab), and Javelin Renal 101 (avelumab plus axitinib) have also shown improved outcomes with combination therapy in the frontline setting.1-4 While the landscape of therapeutic options in the frontline setting continues to grow, there remains a lack of clarity as to how to tailor therapeutic decisions for specific patient populations. The exception is nivolumab plus ipilimumab, which is currently indicated for IMDC intermediate- or poor-risk patients.

The combination of VEGFR TKI therapy and PD-1 antibodies provides rapid disease control, with a median time to response in the current study of 1.9 months, and, generally speaking, a low risk of progression in the first 6 months of therapy. While cross-trial comparisons are always problematic, the PFS reported in this study and others with VEGFR TKI and PD-1 antibody combinations is quite impressive and surpasses that noted in Checkmate 214.3 While the median OS has not yet been reached, the long duration of PFS and complete response rate of 16% in this study certainly make this an attractive frontline option for newly diagnosed patients with advanced ccRCC. Longer follow-up is needed to confirm the survival benefit noted.

Applications for Clinical Practice

The current data support the use of VEGFR TKI and anti-PD-1 therapy in the frontline setting. How to choose between such combination regimens or combination immunotherapy remains unclear, and further biomarker-based assessments are needed to help guide therapeutic decisions for our patients.

1. Motzer R, Alekseev B, Rha SY, et al. Lenvatinib plus pembrolizumab or everolimus for advanced renal cell carcinoma [published online ahead of print, 2021 Feb 13]. N Engl J Med. 2021. doi:10.1056/NEJMoa2035716

2. Rini BI, Plimack ER, Stus V, et al. Pembrolizumab plus axitinib versus sunitinib for advanced renal-cell carcinoma. N Engl J Med. 2019;380(12):1116-1127.

3. Motzer RJ, Tannir NM, McDermott DF, et al. Nivolumab plus ipilimumab versus sunitinib in advanced renal-cell carcinoma. N Engl J Med. 2018;378(14):1277-1290.

4. Motzer RJ, Penkov K, Haanen J, et al. Avelumab plus axitinib versus sunitinib for advanced renal-cell carcinoma. N Engl J Med. 2019;380(12):1103-1115.

Use of Fecal Immunochemical Testing in Acute Patient Care in a Safety Net Hospital System

From Baylor College of Medicine, Houston, TX (Drs. Spezia-Lindner, Montealegre, Muldrew, and Suarez) and Harris Health System, Houston, TX (Shanna L. Harris, Maria Daheri, and Drs. Muldrew and Suarez).

Abstract

Objective: To characterize and analyze the prevalence, indications for, and outcomes of fecal immunochemical testing (FIT) in acute patient care within a safety net health care system’s emergency departments (EDs) and inpatient settings.

Design: Retrospective cohort study derived from administrative data.

Setting: A large, urban, safety net health care delivery system in Texas. The data gathered were from the health care system’s 2 primary hospitals and their associated EDs. This health care system utilizes FIT exclusively for fecal occult blood testing.

Participants: Adults ≥18 years who underwent FIT in the ED or inpatient setting between August 2016 and March 2017. Chart review abstractions were performed on a sample (n = 382) from the larger subset.

Measurements: Primary data points included total FITs performed in acute patient care during the study period, basic demographic data, FIT indications, FIT result, receipt of invasive diagnostic follow-up, and result of invasive diagnostic follow-up. Multivariable log-binomial regression was used to calculate risk ratios (RRs) to assess the association between FIT result and receipt of diagnostic follow-up. Chi-square analysis was used to compare the proportion of abnormal findings on diagnostic follow-up by FIT result.

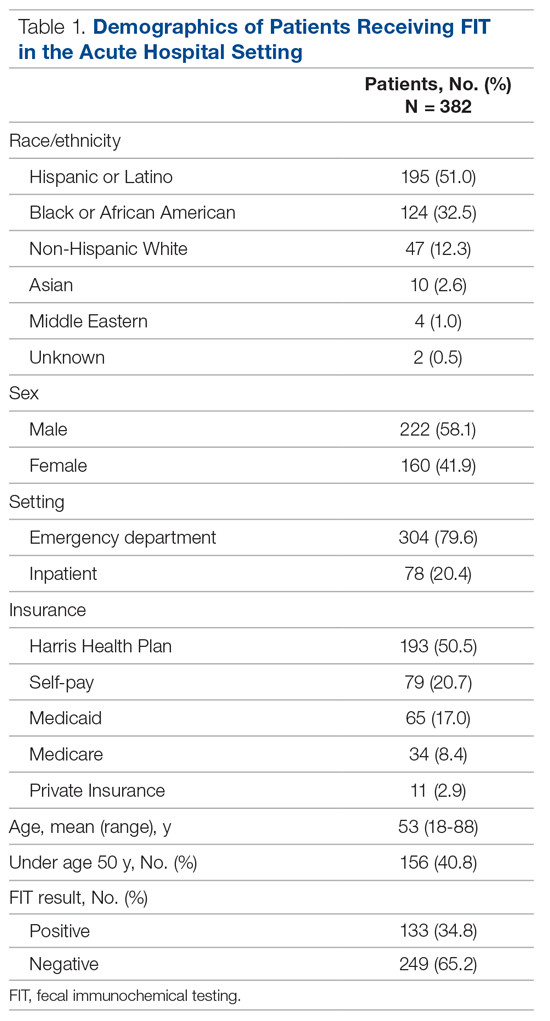

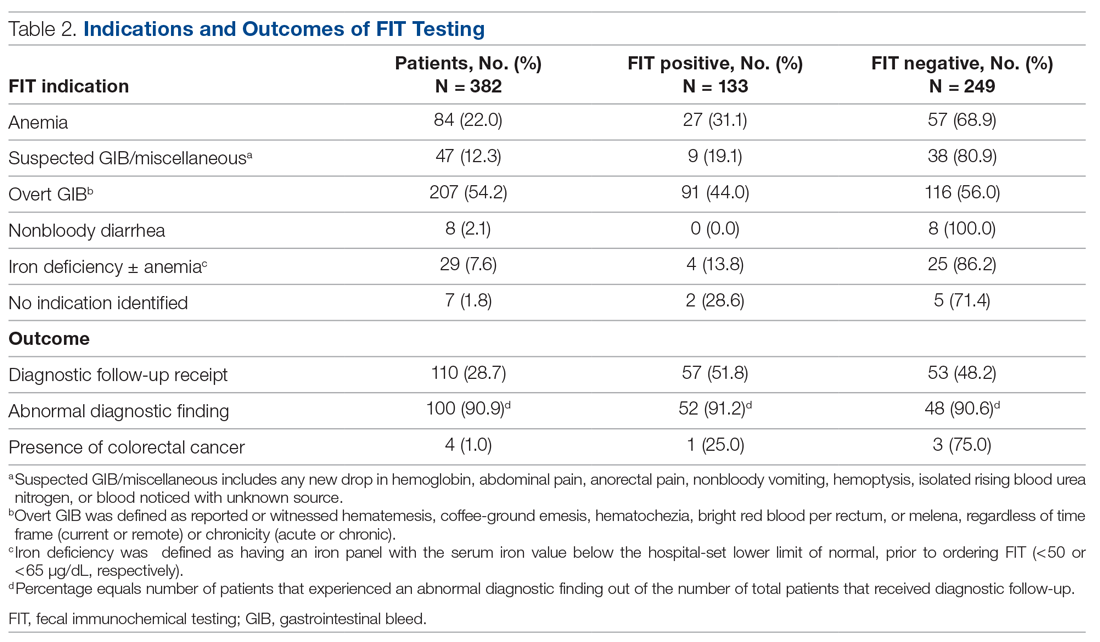

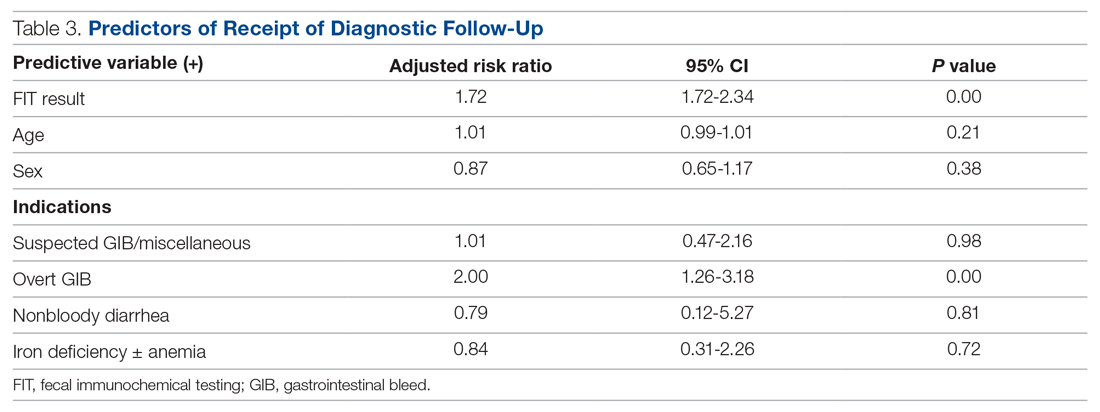

Results: During the 8-month study period, 2718 FITs were performed in the ED and inpatient setting, comprising 5.7% of system-wide FITs. Of the 382 patients included in the chart review who underwent acute care FIT, a majority had their test performed in the ED (304, 79.6%), and 133 (34.8%) had a positive result. The most common indication for FIT was evidence of overt gastrointestinal (GI) bleed (207, 54.2%), followed by anemia (84, 22.0%). While a positive FIT result was significantly associated with obtaining a diagnostic exam in multivariable analysis (RR, 1.72; P < 0.001), having signs of overt GI bleeding was a stronger predictor of diagnostic follow-up (RR, 2.00; P = 0.003). Of patients who underwent FIT and received diagnostic follow-up (n = 110), 48.2% were FIT negative. These patients were just as likely to have an abnormal finding as FIT-positive patients (90.6% vs 91.2%; P = 0.86). Of the 382 patients in the study, 4 (1.0%) were subsequently diagnosed with colorectal cancer (CRC). Of those 4 patients, 1 (25%) was FIT positive.

Conclusion: FIT is being utilized in acute patient care outside of its established indication for CRC screening in asymptomatic, average-risk adults. Our study demonstrates that FIT is not useful in acute patient care.

Keywords: FOBT; FIT; fecal immunochemical testing; inpatient.

Colorectal cancer (CRC) is the second leading cause of cancer-related mortality in the United States. It is estimated that in 2020, 147,950 individuals will be diagnosed with invasive CRC and 53,200 will die from it.1 While the overall incidence has been declining for decades, it is rising in young adults.2–4 Screening using direct visualization procedures (colonoscopy and sigmoidoscopy) and stool-based tests has been demonstrated to improve detection of precancerous and early cancerous lesions, thereby reducing CRC mortality.5 However, screening rates in the United States are suboptimal, with only 68.8% of adults aged 50 to 75 years screened according to guidelines in 2018.6

Stool-based testing is a well-established and validated screening measure for CRC in asymptomatic individuals at average risk. Its widespread use in this population has been shown to cost-effectively screen for CRC among adults 50 years of age and older.5,7 Presently, the 2 most commonly used stool-based assays in the US health care system are guaiac-based tests (guaiac fecal occult blood test [gFOBT], Hemoccult) and

Despite the exclusive validation of FOBTs for use in CRC screening, studies have demonstrated that they are commonly used for a multitude of additional indications in emergency department (ED) and inpatient settings, most aimed at detecting or confirming GI blood loss. This may lead to inappropriate patient management, including the receipt of unnecessary follow-up procedures, which can incur significant costs to the patient and the health system.13-19 These costs may be particularly burdensome in safety net health systems (ie, those that offer access to care regardless of the patient’s ability to pay), which serve a large proportion of socioeconomically disadvantaged individuals in the United States.20,21 To our knowledge, no published study to date has specifically investigated the role of FIT in acute patient management.

This study characterizes the use of FIT in acute patient care within a large, urban, safety net health care system. Through a retrospective review of administrative data and patient charts, we evaluated FIT use prevalence, indications, and patient outcomes in the ED and inpatient settings.

Methods

Setting

This study was conducted in a large, urban, county-based integrated delivery system in Houston, Texas, that provides health care services to one of the largest uninsured and underinsured populations in the country.22 The health system includes 2 main hospitals and more than 20 ambulatory care clinics. Within its ambulatory care clinics, the health system implements a population-based screening strategy using stool-based testing. All adults aged 50 years or older who are due for FIT are identified through the health-maintenance module of the electronic medical record (EMR) and offered a take-home FIT. The health system utilizes FIT exclusively (OC-Light S FIT, Polymedco, Cortlandt Manor, NY); no guaiac-based assays are available.

Design and Data Collection

We began by using administrative records to determine the proportion of FITs conducted health system-wide that were ordered and completed in the acute care setting over the study period (August 2016-March 2017). Specifically, we used aggregate quality metric reports, which quantify the number of FITs conducted at each health system clinic and hospital each month, to calculate the proportion of FITs done in the ED and inpatient hospital setting.
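The proportion described above can be computed from monthly aggregate reports. The following is a minimal sketch, not the authors' actual procedure: the layout of the quality metric reports is not described in the text, so the `MonthlyReport` fields, the setting labels, and the counts shown are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MonthlyReport:
    """One row of a hypothetical aggregate report (field names are assumptions)."""
    month: str      # e.g. "2016-08"
    site: str       # clinic or hospital unit label
    setting: str    # "ambulatory", "ed", or "inpatient"
    fit_count: int  # FITs completed at this site in this month


# Settings counted as acute care in this sketch.
ACUTE_SETTINGS = {"ed", "inpatient"}


def acute_care_share(reports):
    """Return the fraction of all FITs that were done in the ED or inpatient setting."""
    total = sum(r.fit_count for r in reports)
    if total == 0:
        raise ValueError("no FITs recorded in the supplied reports")
    acute = sum(r.fit_count for r in reports if r.setting in ACUTE_SETTINGS)
    return acute / total


# Illustrative counts only; these are not study data.
example_reports = [
    MonthlyReport("2016-08", "Clinic A", "ambulatory", 900),
    MonthlyReport("2016-08", "Hospital 1 ED", "ed", 60),
    MonthlyReport("2016-08", "Hospital 1 wards", "inpatient", 40),
]
example_share = acute_care_share(example_reports)
```

Summing counts before dividing, instead of averaging monthly percentages, keeps months with more testing from being underweighted.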

We then conducted a retrospective cohort study of 382 adult patients who received FIT in the EDs and inpatient wards in both of the health system’s hospitals over the study period. All data were collected by retrospective chart review in Epic (Madison, WI) EMRs. Sampling was performed by selecting the medical record numbers corresponding to the first 50 completed FITs chronologically each month over the 8-month period, with a total of 400 charts reviewed.
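The sampling rule described above (the first 50 completed FITs in chronological order for each month) could be sketched as follows. This is an illustration under stated assumptions: the input is taken to be a list of `(mrn, completed_on)` pairs, the function name `first_n_per_month` is hypothetical, and same-day ties are broken by medical record number, which the text does not specify.

```python
from collections import defaultdict
from datetime import date


def first_n_per_month(fits, n=50):
    """Select the medical record numbers of the first n completed FITs in each month.

    fits: iterable of (mrn, completed_on) pairs, where completed_on is a datetime.date.
    Returns MRNs ordered by month, then by completion date within the month.
    """
    by_month = defaultdict(list)
    for mrn, completed_on in fits:
        key = (completed_on.year, completed_on.month)
        by_month[key].append((completed_on, mrn))

    sample = []
    for key in sorted(by_month):
        # Sort by date; ties on the same date fall back to MRN order (an assumption).
        chronological = sorted(by_month[key])
        sample.extend(mrn for _, mrn in chronological[:n])
    return sample


# Hypothetical input: 60 completed FITs in each of the 8 study months.
study_months = [(2016, 8), (2016, 9), (2016, 10), (2016, 11),
                (2016, 12), (2017, 1), (2017, 2), (2017, 3)]
example_fits = [
    (f"MRN-{year}-{month:02d}-{i:03d}", date(year, month, (i % 28) + 1))
    for year, month in study_months
    for i in range(60)
]
example_sample = first_n_per_month(example_fits, n=50)
```

With 8 months and 50 charts per month, this rule yields the 400 charts mentioned above. The sketch does not handle repeat tests for the same patient or any exclusion criteria.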

Data collected included basic patient demographics, location of FIT ordering (ED vs inpatient), primary service ordering FIT, FIT indication, FIT result, and receipt and results of invasive diagnostic follow-up. Demographics collected included age, biological sex, race (self-selected), and insurance coverage.