Cardiorenal syndrome

To the Editor: I read with interest the thoughtful review of cardiorenal syndrome by Drs. Thind, Loehrke, and Wilt1 and the accompanying editorial by Dr. Grodin.2 These articles certainly add to our growing knowledge of the syndrome and the importance of treating volume overload in these complex patients.

Indeed, we and others have stressed the primary importance of renal dysfunction in patients with volume overload and acute decompensated heart failure.3,4 We have learned that even small rises in serum creatinine predict poor outcomes in these patients. And even if the serum creatinine level comes back down during hospitalization, acute kidney injury (AKI) is still associated with risk.5

Nevertheless, clinicians remain frustrated with the practical management of patients with volume overload and worsening AKI. When faced with a rising serum creatinine level in a patient being treated for decompensated heart failure with signs or symptoms of volume overload, I suggest the following:

Perform careful bedside and chart review searching for evidence of AKI related to causes other than cardiorenal syndrome. Ask whether the rise in serum creatinine could be caused by new obstruction (eg, urinary retention, upper urinary tract obstruction), a nephrotoxin (eg, nonsteroidal anti-inflammatory drugs), a primary tubulointerstitial or glomerular process (eg, drug-induced acute interstitial nephritis, acute glomerulonephritis), acute tubular necrosis, or a new hemodynamic event threatening renal perfusion (eg, hypotension, a new arrhythmia). It is often best to arrive at a diagnosis of AKI due to cardiorenal dysfunction by exclusion, much like the working definitions of hepatorenal syndrome.6 This requires review of the urine sediment (looking for evidence of granular casts of acute tubular necrosis, or evidence of glomerulonephritis or interstitial nephritis), electronic medical record, vital signs, telemetry, and perhaps renal ultrasonography.

In the absence of frank evidence of “overdiuresis,” such as worsening hypernatremia, dropping blood pressure, clinical hypoperfusion, or contraction alkalosis, avoid the temptation to suspend diuretics. Instead, an increase in diuretic dose or the addition of a distal diuretic (eg, metolazone) may be needed to address persistent renal venous congestion as the cause of the AKI.3 In this situation, monitor electrolytes, volume status, and renal function closely while diuretic treatment is augmented. In many such cases, the serum creatinine level may actually start to decrease once a more robust diuresis is achieved. In these patients, it may also be prudent to temporarily suspend antagonists of the renin-angiotensin-aldosterone system, although this remains controversial.

Management of such patients should be done collaboratively with cardiologists well versed in the treatment of cardiorenal syndrome. The worsening renal function in these patients may reflect important changes in cardiac rhythm or function (eg, low cardiac output state, new or worsening valvular disease, ongoing myocardial ischemia, cardiac tamponade, uncontrolled bradycardia or tachyarrhythmia). Interventions aimed at reversing such perturbations could be the most important steps in improving cardiorenal function and reversing AKI.

- Thind GS, Loehrke M, Wilt JL. Acute cardiorenal syndrome: mechanisms and clinical implications. Cleve Clin J Med 2018; 85(3):231–239. doi:10.3949/ccjm.85a.17019

- Grodin JL. Hemodynamically, the kidney is at the heart of cardiorenal syndrome. Cleve Clin J Med 2018; 85(3):240–242. doi:10.3949/ccjm.85a.17126

- Freda BJ, Slawsky M, Mallidi J, Braden GL. Decongestive treatment of acute decompensated heart failure: cardiorenal implications of ultrafiltration and diuretics. Am J Kidney Dis 2011; 58(6):1005–1017. doi:10.1053/j.ajkd.2011.07.023

- Tang WH, Kitai T. Intrarenal blood flow: a window into the congestive kidney failure phenotype of heart failure? JACC Heart Fail 2016; 4(8):683–686. doi:10.1016/j.jchf.2016.05.009

- Freda BJ, Knee AB, Braden GL, Visintainer PF, Thakar CV. Effect of transient and sustained acute kidney injury on readmissions in acute decompensated heart failure. Am J Cardiol 2017; 119(11):1809–1814. doi:10.1016/j.amjcard.2017.02.044

- Bucsics T, Krones E. Renal dysfunction in cirrhosis: acute kidney injury and the hepatorenal syndrome. Gastroenterol Rep (Oxf) 2017; 5(2):127–137. doi:10.1093/gastro/gox009

In reply: Cardiorenal syndrome

In Reply: We thank Dr. Freda for his remarks and observations. Certainly, the clinical importance of this entity and the challenge it poses to clinicians cannot be overemphasized. We concur with the overall message and reply to his specific comments:

We completely agree that clinical data-gathering is of paramount importance. This includes careful history-taking, physical examination, electronic medical record review, laboratory data review, and imaging. As discussed in our article, urine electrolytes will reveal a prerenal state in acute cardiorenal syndrome, and other causes of prerenal acute kidney injury (AKI) should be ruled out. The role of point-of-care ultrasonography (eg, to measure the size and respirophasic variation of the inferior vena cava) as a vital diagnostic tool has been well described, and we endorse it.1 Moreover, trends are as important as snapshot values. This is especially pertinent when care of the patient is being transferred to a new service (eg, from the hospitalist service to the critical care service). In such cases, careful review of diuretic dosage, renal function trend, intake and output, and weight trend would help in the diagnosis.

Inadequate diuretic therapy is perhaps one of the most common errors made in the management of patients with acute cardiorenal syndrome. As mentioned in our article, diuretics should be correctly dosed based on the patient’s renal function. It is a common misconception that diuretics are nephrotoxic: in reality, there is no direct renal toxicity from the drug itself. Certainly, overdiuresis may lead to AKI, but this is not a valid concern in patients with acute cardiorenal syndrome, who are fluid-overloaded by definition.

Another challenging clinical scenario is when a patient is diagnosed with acute cardiorenal syndrome but renal function worsens with diuretic therapy. In our experience, this is a paradoxical situation and often stems from misinterpretation of clinical data. The most common example is diuretic underdosage leading to inadequate diuretic response. Renal function will continue to decline in these patients, as renal congestion has not yet been relieved. This reiterates the importance of paying close attention to urine output and intake-output data. When the diuretic regimen is strengthened and a robust diuretic response is achieved, renal function should improve as systemic congestion diminishes.

Acute cardiorenal syndrome stems from hemodynamic derangements, and a multidisciplinary approach may certainly lead to better outcomes. Although we described the general theme of hemodynamic disturbances, patients with acute cardiorenal syndrome may have certain unique and complex hemodynamic “phenotypes” that we did not discuss because of the limited scope of the paper. One such phenotype worth mentioning is decompensated right heart failure, as seen in patients with severe pulmonary hypertension. Acute cardiorenal syndrome due to renal congestion is often seen in these patients, but they also have certain other unique characteristics such as ventricular interdependence.2 Giving intravenous fluids to these patients will not only worsen renal function but can also cause a catastrophic reduction in cardiac output and blood pressure due to worsening interventricular septal bowing. Certain treatments (eg, pulmonary vasodilators) are unique to this patient population, and these patients should therefore be managed by experienced clinicians.

- Blehar DJ, Dickman E, Gaspari R. Identification of congestive heart failure via respiratory variation of inferior vena cava diameter. Am J Emerg Med 2009; 27(1):71–75. doi:10.1016/j.ajem.2008.01.002

- Piazza G, Goldhaber SZ. The acutely decompensated right ventricle: pathways for diagnosis and management. Chest 2005; 128(3):1836–1852. doi:10.1378/chest.128.3.1836

Patient-Centered, Payer-Centered, or Both? The 30-Day Readmission Metric

There is little doubt that preventing 30-day readmissions to the hospital results in lower costs for payers. However, reducing costs alone does not make this metric a measure of “high value” care.1 Rather, it is the improvement in the effectiveness of the discharge process that occurs alongside lower costs that makes readmission reduction efforts “high value” – or a “win-win” for patients and payers.

However, the article by Nuckols and colleagues in this month’s issue of the Journal of Hospital Medicine (JHM) suggests that it might not be that simple and adds nuance to the ongoing discussion about the 30-day readmission metric.2 The study used data collected by the federal government to examine changes between 2009-2010 and 2013-2014. It looked not only at 30-day readmission rates but also at emergency department (ED) and observation unit visits. What they found is important. In general, 30-day readmissions fell for patients covered by Medicare and private insurance. Despite this, observation unit and ED visits increased across all payer types, including Medicare and private insurance. These increases produced statistically significantly higher overall “revisit” rates for the uninsured and those insured by Medicaid. For those with private insurance, they offset any improvement in “revisit” rates that came from fewer 30-day readmissions. Those insured by Medicare represented about 300,000 of the 420,000 visits analyzed. This group still had a statistically lower “revisit” rate, but it was only marginally lower (25.0% in 2013-2014 versus 25.3% in 2009-2010).2
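The Medicare comparison can be made concrete with simple arithmetic on the reported figures. The first line is only a simplified sketch of a composite revisit rate. Its symbols are introduced here for illustration, and it assumes each index stay contributes at most one 30-day return; the study's exact counting rules may differ.

```latex
\begin{aligned}
R &= \frac{n_{\text{inpatient}} + n_{\text{observation}} + n_{\text{ED}}}{N_{\text{index}}}
  && \text{(hypothetical notation)}\\
\Delta R_{\text{Medicare}} &= 25.0\% - 25.3\% = -0.3 \text{ percentage points}\\
\frac{0.3}{25.3} &\approx 0.012 && \text{(about a 1.2\% relative reduction)}\\
\frac{300{,}000}{420{,}000} &\approx 0.71 && \text{(Medicare share of the visits analyzed)}
\end{aligned}
```

A drop of 0.3 percentage points corresponds to roughly a 1% relative change. That is why "marginally lower" is an apt description, even though the Medicare group dominated the sample.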

The generalizability of the study by Nuckols and colleagues was limited in two ways. It examined only index admissions for acute myocardial infarction (AMI), heart failure (HF), and pneumonia. It also used data from only Georgia, Nebraska, South Carolina, and Tennessee, the four states where observation and ED visit data were available in the federal database.2 In addition, the study did not examine hospital-level revisit data. It therefore could not determine whether hospitals with greater reductions in readmission rates had greater increases in observation or ED visits, as one might predict. Despite these limitations, the rigor of the study was noteworthy. The authors used matching techniques to ensure that the populations examined in the two time periods were comparable. Unlike previous research,3,4 they also used a comprehensive definition of a hospital “revisit” (including both observation and ED visits). They measured “revisit” rates across several payer types, rather than focusing exclusively on those covered by fee-for-service Medicare, as in past studies.4,5

What the study by Nuckols and colleagues suggests is that even though patients may be readmitted less, they may be coming back to the ED or getting admitted to the observation unit more, resulting in overall “revisit” rates that are marginally lower for Medicare patients, but often the same or even higher for other payer groups, particularly disadvantaged payer groups who are uninsured or insured by Medicaid.2 Although the authors do not assert causality for these trends, it is worth noting that the much-discussed Hospital Readmission Reduction Program (or “readmission penalty”) applies only to Medicare patients aged more than 65 years. It is likely that this program influenced the differences identified between payer groups in this article.

Beyond the policy implications of these findings, the experience of patients cared for in these different settings is of paramount importance. Unfortunately, there are limited data comparing patient perceptions, preferences, or outcomes resulting from readmission to an inpatient service versus an observation unit or ED visit within 30 days of discharge. However, there is reason to believe that costs could be higher for some patients treated in the ED or an observation unit as compared to those in the inpatient setting,6 and that care continuity and quality may be different across these settings. In a recent white paper on observation care published by the Society of Hospital Medicine (SHM) Public Policy Committee,7 the SHM reported the results of a 2017 survey of its members about observation care. The results were concerning. An overwhelming majority of respondents (87%) believed that the rules for observation are unclear for patients, and 68% of respondents believed that policy changes mandating informing patients of their observation status have created conflict between the provider and the patient.7 As shared by one respondent, “the observation issue can severely damage the therapeutic bond with patient/family, who may conclude that the hospitalist has more interest in saving someone money at the expense of patient care.”7 Thus, there is significant concern about the nature of observation stays and the experience for patients and providers. We should take care to better understand these experiences given that readmission reduction efforts may funnel more patients into observation care.

As a next step, we recommend further examination of how “revisit” rates have changed over time for patients with any discharge diagnosis, and not just those with pneumonia, AMI, or HF.8 Such examinations should be stratified by payer to identify differential impacts on those with lower socioeconomic status. Analyses should also examine changes in “revisit” types at the hospital level to better understand if hospitals with reductions in readmission rates are simply shifting revisits to the observation unit or ED. It is possible that inpatient readmissions for any given hospital are decreasing without concomitant increases in observation visits, as there are forces independent of the readmission penalty, such as the Recovery Audit Contractor program, that are driving hospitals to more frequently code patients as observation visits rather than inpatient admissions.9 Thus, readmissions could decrease and observation unit visits could increase independent of one another. We also recommend further research to examine differences in care quality, clinical outcomes, and costs for those readmitted to the hospital within 30 days of discharge versus those cared for in observation units or the ED. The challenge of such studies will be to identify and examine comparable populations of patients across these three settings. Examining patient perceptions and preferences across these settings is also critical. Finally, when assessing interventions to reduce inpatient readmissions, we need to consider “revisits” as a whole, not simply readmissions.10 Otherwise, we may simply be promoting the use of interventions that shift inpatient readmissions to observation unit or ED revisits, and there is little that is patient-centered or high value about that.9
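To illustrate how readmission and "revisit" rates can diverge, the sketch below computes both rates for a single hypothetical payer group in two periods. All counts are invented for illustration, as are the `PeriodCounts` and `summarize` names. The sketch assumes each index stay is classified by the setting of its first 30-day return, which is not necessarily how Nuckols and colleagues counted revisits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PeriodCounts:
    """Hypothetical counts for one payer group in one time period.

    Each return field is the number of index stays whose FIRST return
    within 30 days was to that setting (an illustrative assumption).
    """
    index_stays: int
    first_return_inpatient: int
    first_return_observation: int
    first_return_ed: int

    def __post_init__(self) -> None:
        returns = (self.first_return_inpatient + self.first_return_observation
                   + self.first_return_ed)
        if self.index_stays <= 0 or returns > self.index_stays:
            raise ValueError("counts are inconsistent with the number of index stays")

    def readmission_rate(self) -> float:
        # Traditional metric: only inpatient readmissions count.
        return self.first_return_inpatient / self.index_stays

    def revisit_rate(self) -> float:
        # Composite metric: any return to inpatient, observation, or ED care.
        returns = (self.first_return_inpatient + self.first_return_observation
                   + self.first_return_ed)
        return returns / self.index_stays


def summarize(before: PeriodCounts, after: PeriodCounts) -> dict[str, float]:
    """Change (after minus before) in percentage points for each metric."""
    return {
        "readmission_change_pp": 100 * (after.readmission_rate() - before.readmission_rate()),
        "revisit_change_pp": 100 * (after.revisit_rate() - before.revisit_rate()),
    }


if __name__ == "__main__":
    # Invented numbers: readmissions fall, observation and ED returns rise.
    before = PeriodCounts(index_stays=1000, first_return_inpatient=200,
                          first_return_observation=50, first_return_ed=60)
    after = PeriodCounts(index_stays=1000, first_return_inpatient=150,
                         first_return_observation=90, first_return_ed=70)
    print({k: round(v, 1) for k, v in summarize(before, after).items()})
    # prints {'readmission_change_pp': -5.0, 'revisit_change_pp': 0.0}
```

In this made-up case the readmission metric improves by 5 points while the total burden of returns is unchanged. This is the kind of shift that hospital-level, payer-stratified revisit analyses would be needed to detect.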

Disclosures

The authors have nothing to disclose.

1. Smith M, Saunders R, Stuckhardt L, McGinnis JM, eds. Best care at lower cost: the path to continuously learning health care in America. Washington, DC: National Academies Press; 2013.

2. Nuckols TK, Fingar KR, Barrett ML, et al. Returns to emergency department, observation, or inpatient care within 30 days after hospitalization in 4 states, 2009 and 2010 versus 2013 and 2014. J Hosp Med. 2018;13(5):296-303.

3. Fingar KR, Washington R. Trends in Hospital Readmissions for Four High-Volume Conditions, 2009–2013. Statistical Brief No. 196. https://www.hcup-us.ahrq.gov/reports/statbriefs/sb196-Readmissions-Trends-High-Volume-Conditions.pdf. Accessed March 5, 2018.

4. Zuckerman RB, Sheingold SH, Orav EJ, Ruhter J, Epstein AM. Readmissions, observation, and the Hospital Readmissions Reduction Program. N Engl J Med. 2016;374(16):1543-1551. DOI: 10.1056/NEJMsa1513024.

5. Gerhardt G, Yemane A, Apostle K, Oelschlaeger A, Rollins E, Brennan N. Evaluating whether changes in utilization of hospital outpatient services contributed to lower Medicare readmission rate. Medicare Medicaid Res Rev. 2014;4(1). DOI: 10.5600/mmrr2014-004-01-b03.

6. Kangovi S, Cafardi SG, Smith RA, Kulkarni R, Grande D. Patient financial responsibility for observation care. J Hosp Med. 2015;10(11):718-723. DOI: 10.1002/jhm.2436.

7. The Hospital Observation Care Problem: Perspectives and Solutions from the Society of Hospital Medicine. Society of Hospital Medicine Public Policy Committee. https://www.hospitalmedicine.org/globalassets/policy-and-advocacy/advocacy-pdf/shms-observation-white-paper-2017. Accessed February 12, 2018.

8. Rosen AK, Chen Q, Shwartz M, et al. Does use of a hospital-wide readmission measure versus condition-specific readmission measures make a difference for hospital profiling and payment penalties? Med Care. 2016;54(2):155-161. DOI: 10.1097/MLR.0000000000000455.

9. Baugh CW, Schuur JD. Observation care-high-value care or a cost-shifting loophole? N Engl J Med. 2013;369(4):302-305. DOI: 10.1056/NEJMp1304493.

10. Cassel CK, Conway PH, Delbanco SF, Jha AK, Saunders RS, Lee TH. Getting more performance from performance measurement. N Engl J Med. 2014;371(23):2145-2147. DOI: 10.1056/NEJMp1408345.

© 2018 Society of Hospital Medicine

Improving Teamwork and Patient Outcomes with Daily Structured Interdisciplinary Bedside Rounds: A Multimethod Evaluation

Over the last decade, evidence has emerged of the importance of the frontline patient care team in improving the quality and safety of patient care.1-3 Improving collaboration and workflow is thought to increase the reliability of care delivery.1 One promising method to improve collaboration is the interdisciplinary ward round (IDR), whereby medical, nursing, and allied health staff attend ward rounds together. IDRs have been shown to reduce the average cost and length of hospital stay,4,5 although a recent systematic review found inconsistent improvements across studies.6 Using the term “interdisciplinary,” however, does not necessarily imply the inclusion of all disciplines necessary for patient care: a survey with respondents from Australia, the United States, and Canada found that only 65% of rounds labeled “interdisciplinary” included a physician.7 Conducting interdisciplinary rounds is a considerable challenge in today’s busy clinical environment, because health professionals who are spread across multiple locations within the hospital, and who have competing responsibilities and priorities, must come together at the same time and for a set period each day.

While IDRs are not new, structured IDRs involve the purposeful inclusion of all disciplinary groups relevant to a patient’s care, alongside a checklist tool to aid comprehensive but concise daily assessment of progress and treatment planning. Novel structured IDR interventions, including the Structured Interdisciplinary Bedside Round (SIBR) model, have recently been tested in various US settings, with improvements in teamwork, hospital performance, and patient outcomes.8-12

As part of the intervention, the hospital established an Acute Medical Unit (AMU) based on the Accountable Care Unit model.13 The aim of this study was to assess the impact of this new structure, together with SIBR and the associated practice changes, on interprofessional working and on a set of key patient and hospital outcome measures.

METHODS

Description of the Intervention

The AMU brought together 2 existing medical wards, a general medical ward and a 48-hour turnaround Medical Assessment Unit (MAU), into 1 geographical location with 26 beds. Before the merger, the MAU and the general medical ward had separate and distinct cultures and workflows. The MAU was staffed with experienced nurses; nurses worked within a patient allocation model, the workload was shared, and relationships were collegial. In contrast, the medical ward was more typical of the rest of the hospital: nurses had a heavy workload, managed a large group of longer-term complex patients, and used a team-based nursing model of care in which senior nurses supervised junior staff. Because of the seniority of the MAU staff, it was decided that they should be in charge of the combined AMU and that the patient allocation model of care would be used to facilitate SIBR.

Consultants, junior doctors, nurses, and allied health professionals (including a pharmacist, physiotherapist, occupational therapist, and social worker) were geographically aligned to the new ward, allowing them to participate as a team in daily structured ward rounds. Rounds are scheduled at the same time each day to enable family participation. The ward round is coordinated by a registrar or intern, with input from the patient, family, nursing staff, pharmacy, allied health, and other doctors (intern, registrar, and consultant) based on the unit. The patient load is distributed between 2 rounds: 1 scheduled for 10

Data Collection Strategy

The study was set in an AMU in a large tertiary care hospital in regional Australia and used a convergent parallel multimethod approach14 to evaluate the implementation and effect of SIBR in the AMU. The study population consisted of 32 clinicians employed at the study hospital: (1) the leadership team involved in the development and implementation of the intervention and (2) members of clinical staff who were part of the AMU team.

Qualitative Data

Qualitative measures consisted of semistructured interviews. We utilized multiple strategies to recruit interviewees, including a snowball technique, criterion sampling,15 and emergent sampling, so that we could seek the views of both the leadership team responsible for the implementation and “frontline” clinical staff whose daily work was directly affected by it. Everyone who was initially recruited agreed to be interviewed, and additional frontline staff asked to be interviewed once they realized that we were asking about how staff experienced the changes in practice.

The research team developed a semistructured interview guide based on an understanding of the merger of the 2 units and of the changes in rounding practice (provided in Appendix 1). The questions were pilot tested on a separate unit and revised. Questions were structured into 5 topic areas: planning and implementation of the AMU/SIBR model, changes in work practices because of the new model, team functioning, job satisfaction, and perceived impact of the new model on patients and families. All interviews were audio-recorded and transcribed verbatim for analysis.

Quantitative Data

Quantitative data were collected on patient outcome measures (length of stay [LOS], discharge date and time, mode of separation [including death], primary diagnostic category, total hospital stay cost, and “clinical response calls”) and on patient demographic data (age, gender, and Patient Clinical Complexity Level [PCCL]). The PCCL is a standard measure used in Australian public inpatient facilities and is calculated for each episode of care.16 It measures the cumulative effect of a patient’s complications and/or comorbidities and takes an integer value from 0 (no clinical complexity effect) to 4 (catastrophic clinical complexity effect).

Data on LOS, diagnosis (Australian Refined Diagnosis Related Groups [AR-DRG], version 7), discharge date, and mode of separation (including death) were obtained from the New South Wales Ministry of Health’s Health Information Exchange for patients discharged from 1 year before the intervention through 1 year after its implementation. The total hospital stay cost for these patients was obtained from the local Health Service Organizational Performance Management unit. Inclusion criteria were inpatients aged over 15 years experiencing acute episodes of care; patients with a primary diagnostic category of mental diseases and disorders were excluded. LOS was calculated based on ward stay. AMU data were compared with data from the remaining hospital wards (the control group). Data on “clinical response calls” per month per ward were also obtained for the 12 months before the intervention and the first 12 months of the intervention.

Analysis

Qualitative Analysis

Qualitative data analysis consisted of a hybrid form of textual analysis, combining inductive and deductive logics.17,18 Initially, 3 researchers (J.P., J.J., and R.C.W.) independently coded the interview data inductively to identify themes. Discrepancies were resolved through discussion until consensus was reached. Then, to further facilitate analysis, the researchers deductively imposed a matrix categorization consisting of 4 a priori categories: context/conditions, practices/processes, professional interactions, and consequences.19,20 Additional a priori categories were used to sort the themes further in terms of experiences before, during, and after implementation of the intervention. To compare these time periods, we examined which themes related to implementation and whether those themes remained relevant to sustaining the changes.

Quantitative Analysis

Distribution of continuous data was examined by using the one-sample Kolmogorov-Smirnov test. We compared pre-SIBR (baseline) measures using the Student t test for normally distributed data, the Mann-Whitney U z test for non-normally distributed data (denoted as M-W U z), and χ2 tests for categorical data. Changes in monthly “clinical response calls” between the AMU and the control wards over time were explored by using analysis of variance (ANOVA). Changes in LOS and cost of stay from the year before the intervention to the first year of the intervention were analyzed by using generalized linear models, an extension of linear regression. Factors, or independent variables, included in the models were time period (before or during intervention), ward (AMU or control), an interaction term (time by ward), patient age, gender, primary diagnosis (major diagnostic categories of the AR-DRG version 7.0), and acuity (PCCL). Estimated marginal means for cost of stay were produced for the 12-month period before the intervention and for the first 12 months of the intervention. All statistical analyses were performed by using IBM SPSS version 21 (IBM Corp., Armonk, New York), with statistical significance set at P < .05.
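Two computational ideas in this analysis, the normality check and the time-by-ward interaction term, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' SPSS procedure. `ks_statistic_normal` computes the one-sample Kolmogorov-Smirnov D statistic against a normal distribution whose mean and standard deviation are estimated from the sample. `interaction_contrast` shows what the interaction term measures: in a model containing only time, ward, and their interaction, fitted with an identity link, the interaction coefficient equals the difference-in-differences of the 4 group means. The study's models also adjust for age, gender, diagnosis, and PCCL, and the link function is not reported, so the adjusted estimate will generally differ. Both function names and the length-of-stay values are hypothetical.

```python
from statistics import NormalDist, mean, stdev


def ks_statistic_normal(sample):
    """One-sample Kolmogorov-Smirnov D statistic against a normal distribution.

    The reference mean and SD are estimated from the sample itself, so the
    standard KS critical values are conservative here (the Lilliefors
    correction addresses this). Hypothetical helper for illustration only.
    """
    xs = sorted(sample)
    n = len(xs)
    ref = NormalDist(mean(xs), stdev(xs))
    d = 0.0
    for i, x in enumerate(xs, start=1):
        f = ref.cdf(x)
        # Largest gap between the empirical CDF (just after and just before x)
        # and the fitted normal CDF.
        d = max(d, i / n - f, f - (i - 1) / n)
    return d


def interaction_contrast(amu_before, amu_during, control_before, control_during):
    """Difference-in-differences of group means (hypothetical helper).

    Equals the time-by-ward interaction coefficient of an unadjusted,
    identity-link model containing time, ward, and time x ward.
    """
    amu_change = mean(amu_during) - mean(amu_before)
    control_change = mean(control_during) - mean(control_before)
    return amu_change - control_change


# Hypothetical length-of-stay values (days), not study data.
amu_before = [4.0, 5.0, 6.0]
amu_during = [3.0, 4.0, 5.0]
control_before = [5.0, 6.0, 7.0]
control_during = [5.0, 6.0, 7.0]

print(round(ks_statistic_normal(amu_before), 4))  # prints 0.1747
print(interaction_contrast(amu_before, amu_during, control_before, control_during))  # prints -1.0
```

In this toy example, mean LOS on the AMU falls by 1 day while the control wards are unchanged, so the contrast is -1.0 day. A negative value indicates a larger reduction on the AMU than on the control wards.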

RESULTS

Qualitative Evaluation of the Intervention

Participants

Three researchers (R.C.W., J.P., and J.J.) conducted in-person, semistructured interviews with 32 clinicians (9 male, 23 female) over a 3-day period. Interviews lasted from 19 to 68 minutes. Participants consisted of 8 doctors, 18 nurses, 5 allied health professionals, and an administrator. Ten of the participants were involved in the leadership group that drove the planning and implementation of SIBR and the AMU.

Themes

Context and Conditions of Work

Practices and Processes

Participants perceived postintervention work processes to be more efficient. A primary example was near-universal approval of the time saved by no longer “chasing” other professionals, who were now predictably available on the ward. Participants thought that this predictable availability, and the associated improvements in communication, resulted in more timely decision-making.

SIBR imposed a fixed workflow on all staff, who felt they had less flexibility to work autonomously (doctors) or according to patients’ needs (nurses). More junior staff expressed anxiety about delays in completing discharge-related administrative tasks because the round did not finish until midday. Allied health professionals who had commitments in other areas of the hospital often faced a dilemma about how to prioritize SIBR attendance over activities on other wards. This was managed differently depending on the specific allied health profession and the individuals within that profession.

Professional Interactions

In terms of interprofessional dynamics on the AMU, the implementation of SIBR resulted in a shift in power between the doctors and the nurses. In the old ward, doctors largely controlled the timing of medical rounding processes. In the new AMU, doctors had to relinquish some control over the timing of their own workflow to comply with the requirements of SIBR. There was also evidence that this change loosened traditional hierarchical patterns of communication and created a more level playing field, as nonmedical professionals felt more empowered to voice their thoughts during and outside of rounds.

The rounds provided much greater visibility of the “big picture” and of each profession’s role within it, which allowed each clinician to adjust their work to fit in with and take account of others. The process was not instantaneous, and trust developed over a period of weeks. Better communication meant fewer misunderstandings and a lighter workload.

The participation of allied health professionals in the round enhanced clinicians’ interprofessional skills and knowledge. The more inclusive approach fostered greater trust between clinical disciplines and increased confidence among nursing, allied health, and administrative staff.

In contrast to the positive impacts of the new model of care on communication and relationships within the AMU, interdepartmental relationships were seen to have suffered. The processes and practices of the new AMU were different from those in other hospital departments, which left the unit somewhat isolated and made it harder to interact with other areas of the hospital. For example, the trade-offs that allied health professionals made to participate in SIBR often came at the expense of other units or departments.

Consequences

All interviewees lauded the benefits of the SIBR intervention for patients. Patients were perceived to be better informed and more respected, and they benefited from greater perceived timeliness of treatment and discharge, easier access to doctors, better continuity of treatment and outcomes, improved nurse knowledge of their circumstances, and fewer gaps in their care. Clinicians spoke directly to the patient during SIBR, rather than consulting with professional colleagues over the patient’s head. Some staff felt that doctors were now thinking of patients as “people” rather than “a set of symptoms.” Nurses discovered that informed patients are easier to manage.

Staff members were prepared to compromise on their own needs in the interests of the patient. The emphasis on the patient during rounds resulted in improved advocacy behaviors of clinicians. The nurses became more empowered and able to show greater initiative. Families appeared to find it much easier to access the doctors and obtain information about the patient, resulting in less distress and a greater sense of control and trust in the process.

Quantitative Evaluation of the Intervention

Hospital Outcomes

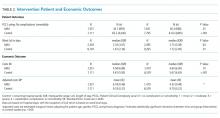

Patient demographics did not change over time within either the AMU or the control wards. However, there were significant changes in Patient Clinical Complexity Level (PCCL) ratings for both the AMU (44.7% to 40.3%; P < .05) and the control wards (65.2% to 61.6%; P < .001). There was no statistically significant change in median length of stay (LOS) on the AMU between the pre-SIBR period (2.16 days; IQR, 3.07) and the SIBR period (2.15 days; IQR, 3.28), whereas median LOS increased in the control wards (pre-SIBR, 1.67 days; IQR, 2.34; during SIBR, 1.73 days; IQR, 2.40; Mann-Whitney U test, z = -2.46; P = .014). Mortality rates were stable over time for both the AMU (pre-SIBR, 2.6% [95% confidence interval {CI}, 1.9-3.5]; during SIBR, 2.8% [95% CI, 2.1-3.7]) and the control wards (pre-SIBR, 1.3% [95% CI, 1.0-1.5]; during SIBR, 1.2% [95% CI, 1.0-1.4]).
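The control-ward LOS comparison is reported as a Mann-Whitney U test summarized by a z statistic. As a minimal sketch, assuming (the paper does not say) that the reported P value is two-sided and based on the usual large-sample normal approximation, P can be recovered from z with the Python standard library alone; the function name `two_sided_p_from_z` is hypothetical.

```python
import math


def two_sided_p_from_z(z: float) -> float:
    """Two-sided P value for a standard-normal test statistic.

    Assumes the normal approximation: P = 2 * (1 - Phi(|z|)),
    which equals erfc(|z| / sqrt(2)).
    """
    return math.erfc(abs(z) / math.sqrt(2))


# z statistic reported for the change in control-ward median LOS
z = -2.46
p = two_sided_p_from_z(z)
print(f"z = {z}, two-sided P = {p:.2g}")  # prints: z = -2.46, two-sided P = 0.014
```

The result agrees with the reported P = .014, which is consistent with (but does not confirm) a two-sided normal approximation.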

The total number of “clinical response calls” or “flags” per month dropped significantly from pre-SIBR to during SIBR in the AMU, from a mean of 63.1 (standard deviation [SD], 15.1) to 31.5 (10.8), but remained relatively stable in the control wards (pre-SIBR, 72.5 [17.6]; during SIBR, 74.0 [28.3]); this difference between the wards in change over time was statistically significant (F(1,44) = 9.03; P = .004). There was no change in monthly “red flags” or “rapid response calls” over time (AMU, 10.5 [3.6] to 9.1 [4.7]; control, 40.3 [11.7] to 41.8 [10.8]). The change in total “clinical response calls” in the AMU was attributable to a decline in “yellow flags,” or “calls for clinical review” (from 52.6 [13.5] to 22.4 [9.2]). The average monthly number of “yellow flags” remained stable in the control wards (pre-SIBR, 32.2 [11.6]; during SIBR, 32.3 [22.4]). The AMU and the control wards differed significantly in how the monthly number of “calls for clinical review” changed from pre-SIBR to during SIBR (F(1,44) = 12.18; P = .001).
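The attribution of the AMU decline to yellow flags can be checked with simple arithmetic on the reported monthly means. This worked example assumes, as the paragraph implies but does not state outright, that total clinical response calls equal yellow flags plus red flags.

$$
\begin{aligned}
\text{AMU, pre-SIBR:}\quad & 52.6 + 10.5 = 63.1\\
\text{AMU, during SIBR:}\quad & 22.4 + 9.1 = 31.5\\
\Delta_{\text{AMU}}:\quad & \underbrace{(22.4 - 52.6)}_{\text{yellow}} + \underbrace{(9.1 - 10.5)}_{\text{red}} = -30.2 - 1.4 = -31.6\\
\text{Control, pre-SIBR:}\quad & 32.2 + 40.3 = 72.5\\
\text{Control, during SIBR:}\quad & 32.3 + 41.8 = 74.1 \approx 74.0\\
\Delta_{\text{control}}:\quad & 74.0 - 72.5 = +1.5
\end{aligned}
$$

Under this assumption, the AMU total roughly halved (31.6/63.1 ≈ 50%), and yellow flags account for about 96% of that drop (30.2/31.6); the 0.1 discrepancy in the control total is consistent with rounding of the reported means.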

The 2 main outcome measures, LOS and costs, were analyzed to determine whether changes over time differed between the AMU and the control wards after accounting for age, gender, and PCCL. There was no statistically significant difference between the AMU and the control wards in the change in LOS over time (Wald χ² = 1.05; degrees of freedom [df] = 1; P = .31). There was a statistically significant interaction for cost of stay, indicating that the ward types differed in how costs changed over time, with a drop in cost observed in the AMU and an increase observed in the control wards (Wald χ² = 6.34; df = 1; P = .012).
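A Wald χ² statistic with 1 degree of freedom is the square of a standard normal statistic, so its upper-tail P value is erfc(√(χ²/2)). The short sketch below uses that identity to check the two reported interaction tests; it assumes nothing about the underlying regression model beyond df = 1, and the function name `chi2_1df_p` is hypothetical.

```python
import math


def chi2_1df_p(stat: float) -> float:
    """Upper-tail P value for a chi-square statistic with 1 df.

    With df = 1, X = Z**2 for a standard normal Z, so
    P(X > stat) = P(|Z| > sqrt(stat)) = erfc(sqrt(stat / 2)).
    """
    if stat < 0:
        raise ValueError("a chi-square statistic cannot be negative")
    return math.erfc(math.sqrt(stat / 2.0))


# Wald statistics reported for the ward-by-period interaction terms
for label, wald in [("LOS interaction", 1.05), ("cost interaction", 6.34)]:
    print(f"{label}: Wald chi2 = {wald}, P = {chi2_1df_p(wald):.2g}")
```

Printed to 2 significant figures, the results are P = 0.31 and P = 0.012, matching the values reported above.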

DISCUSSION

We report on the implementation of an AMU model of care, including the reorganization of a nursing unit, implementation of IDR, and geographical localization. Our mixed-methods design allowed a more comprehensive assessment of this system redesign, encompassing both provider perceptions and clinical outcomes.

The 2 very different cultures of the old wards that were combined into the AMU, as well as the fact that the teams had not previously worked together, made the merger of the 2 wards difficult. Historically, the 2 teams had worked in very different ways, and this created barriers to implementation. The SIBR also demanded new ways of working closely with other disciplines, which disrupted older clinical cultures and relationships. While organizational culture is often discussed, and even measured, the full impact of cultural factors when making workplace changes is frequently underestimated.21 The development of a new culture takes time, and it can lag organizational structural changes by months or even years.22 As our interviewees expressed, often emotionally, there was a sense of loss during the merger of the 2 units. While this is a potential consequence of any large organizational change, it could be addressed during the planning stages, prior to implementation, by acknowledging and perhaps honoring what is being left behind. It is safe to assume that future units implementing the rounding intervention will not fully realize commensurate levels of culture change until well after the structural and process changes are finalized, and only then if explicit effort is made to engender cultural change.

Overall, however, the interviewees perceived that the SIBR intervention led to improved teamwork and team functioning. These improvements were thought to benefit task performance and patient safety. Our findings are consistent with previous research reporting that interdisciplinary patient care interventions in front-line caregiving teams are associated with greater staff empowerment and commitment.23,24 The perception of a more equal nurse-physician relationship resulted in improved job satisfaction, better interprofessional relationships, and perceived improvements in patient care. A flatter power gradient across professions and increased interdisciplinary teamwork have been shown to be associated with improved patient outcomes.25,26

Changes to clinician workflow can significantly impact the introduction of new models of care. A mandated time each day for structured rounds meant less flexibility in workflow for clinicians and made greater demands on their time management and communication skills. Furthermore, the need for human resource negotiations with nurse representatives was an unexpected component of successfully introducing the changes to workflow. Once the benefits of saved time and better communication became evident, changes to workflow were generally accepted. These challenges can be managed if stakeholders are engaged and supportive of the changes.13

Finally, our findings emphasize the importance of combining qualitative and quantitative data when evaluating an intervention. In this case, the qualitative outcomes that include “intangible” positive effects, such as cultural change and improved staff understanding of one another’s roles, might encourage us to continue with the SIBR intervention, which would allow more time to see if the trend of reduced LOS identified in the statistical analysis would translate to a significant effect over time.

We are unable to identify which aspects of the intervention led to the greatest impact on our outcomes. A recent study found that interdisciplinary rounds had no impact on patients’ perceptions of shared decision-making or care satisfaction.27 Although our findings indicated many potential benefits for patients, we were not able to interview patients or their carers to confirm these findings. In addition, we do not have any patient-centered outcomes, which would be important to consider in future work. Although our data on clinical response calls might be seen as a proxy for adverse events, we do not have data on adverse events or errors, and these are important to consider in future work. Finally, our findings are based on data from a single institution.

CONCLUSIONS

While there were some criticisms, participants expressed overwhelmingly positive reactions to the SIBR. The biggest reported benefit was perceived improved communication and understanding between and within the clinical professions, and between clinicians and patients. Improved communication was perceived to have fostered improved teamwork and team functioning, with most respondents feeling that they were a valued part of the new team. Improved teamwork was thought to contribute to improved task performance and led interviewees to perceive a higher level of patient safety. This research highlights the need for multimethod evaluations that address contextual factors as well as clinical outcomes.

Acknowledgments

The authors would like to acknowledge the clinicians and staff members who participated in this study. We would also like to acknowledge the support from the NSW Clinical Excellence Commission, in particular, Dr. Peter Kennedy, Mr. Wilson Yeung, Ms. Tracy Clarke, and Mr. Allan Zhang, and also from Ms. Karen Storey and Mr. Steve Shea of the Organisational Performance Management team at the Orange Health Service.

Disclosures

None of the authors had conflicts of interest in relation to the conduct or reporting of this study, with the exception that the lead author’s institution, the Australian Institute of Health Innovation, received a small grant from the New South Wales Clinical Excellence Commission to conduct the work. Ethics approval for the research was granted by the Greater Western Area Health Service Human Research Ethics Committee (HREC/13/GWAHS/22). All interviewees consented to participate in the study. For patient data, consent was not obtained, but presented data are anonymized. The full dataset is available from the corresponding author with restrictions. This research was funded by the NSW Clinical Excellence Commission, who also encouraged submission of the article for publication. The funding source did not have any role in conduct or reporting of the study. R.C.W., J.P., and J.J. conceptualized and conducted the qualitative component of the study, including method, data collection, data analysis, and writing of the manuscript. G.L., C.H., and H.D. conceptualized the quantitative component of the study, including method, data collection, data analysis, and writing of the manuscript. G.S. contributed to conceptualization of the study, and significantly contributed to the revision of the manuscript. All authors, external and internal, had full access to all of the data (including statistical reports and tables) in the study and can take responsibility for the integrity of the data and the accuracy of the data analysis. As the lead author, R.C.W. affirms that the manuscript is an honest, accurate, and transparent account of the study being reported, that no important aspects of the study have been omitted, and that any discrepancies from the study as planned have been explained.

1. Johnson JK, Batalden PB. Educating health professionals to improve care within the clinical microsystem. In: McLaughlin and Kaluzny’s Continuous Quality Improvement in Health Care. Burlington: Jones & Bartlett Learning; 2013.

2. Mohr JJ, Batalden P, Barach PB. Integrating patient safety into the clinical microsystem. Qual Saf Health Care. 2004;13:ii34-ii38.

3. Sanchez JA, Barach PR. High reliability organizations and surgical microsystems: re-engineering surgical care. Surg Clin North Am. 2012;92:1-14.

4. Curley C, McEachern JE, Speroff T. A firm trial of interdisciplinary rounds on the inpatient medical wards: an intervention designed using continuous quality improvement. Med Care. 1998;36:AS4-AS12.

5. O’Mahony S, Mazur E, Charney P, Wang Y, Fine J. Use of multidisciplinary rounds to simultaneously improve quality outcomes, enhance resident education, and shorten length of stay. J Gen Intern Med. 2007;22:1073-1079.

6. Pannick S, Beveridge I, Wachter RM, Sevdalis N. Improving the quality and safety of care on the medical ward: a review and synthesis of the evidence base. Eur J Intern Med. 2014;25:874-887.

7. Halm MA, Gagner S, Goering M, Sabo J, Smith M, Zaccagnini M. Interdisciplinary rounds: impact on patients, families, and staff. Clin Nurse Spec. 2003;17:133-142.

8. Stein J, Murphy D, Payne C, et al. A remedy for fragmented hospital care. Harvard Business Review. 2013.

9. O’Leary KJ, Buck R, Fligiel HM, et al. Structured interdisciplinary rounds in a medical teaching unit: improving patient safety. Arch Intern Med. 2010;171:678-684.

10. O’Leary KJ, Haviley C, Slade ME, Shah HM, Lee J, Williams MV. Improving teamwork: impact of structured interdisciplinary rounds on a hospitalist unit. J Hosp Med. 2011;6:88-93.

11. O’Leary KJ, Ritter CD, Wheeler H, Szekendi MK, Brinton TS, Williams MV. Teamwork on inpatient medical units: assessing attitudes and barriers. Qual Saf Health Care. 2011;19:117-121.

12. O’Leary KJ, Creden AJ, Slade ME, et al. Implementation of unit-based interventions to improve teamwork and patient safety on a medical service. Am J Med Qual. 2014;30:409-416.

13. Stein J, Payne C, Methvin A, et al. Reorganizing a hospital ward as an accountable care unit. J Hosp Med. 2015;10:36-40.

14. Creswell JW. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches. Thousand Oaks: SAGE Publications; 2013.

15. Palinkas LA, Horwitz SM, Green CA, Wisdom JP, Duan N, Hoagwood K. Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Adm Policy Ment Health. 2015;42:533-544.

16. Australian Consortium for Classification Development (ACCD). Review of the AR-DRG Classification Case Complexity Process: Final Report; 2014. http://ihpa.gov.au/internet/ihpa/publishing.nsf/Content/admitted-acute. Accessed September 21, 2015.

17. Lofland J, Lofland LH. Analyzing Social Settings. Belmont: Wadsworth Publishing Company; 2006.

18. Miles MB, Huberman AM, Saldaña J. Qualitative Data Analysis: A Methods Sourcebook. Los Angeles: SAGE Publications; 2014.