New guideline for in-hospital care of diabetes says use CGMs

Goal-directed glycemic management – which may include new technologies for glucose monitoring – for non–critically ill hospitalized patients who have diabetes or newly recognized hyperglycemia can improve outcomes, according to a new practice guideline from the Endocrine Society.

Even though roughly 35% of hospitalized patients have diabetes or newly discovered hyperglycemia, there is “wide variability in glycemic management in clinical practice,” writing panel chair Mary Korytkowski, MD, from the University of Pittsburgh, said at the annual meeting of the Endocrine Society. “These patients get admitted to every patient service in the hospital, meaning that every clinical service will encounter this group of patients, and their glycemic management can have a major effect on their outcomes. Both short term and long term.”

This guideline provides strategies “to achieve previously recommended glycemic goals while also reducing the risk for hypoglycemia, and this includes inpatient use of insulin pump therapy or continuous glucose monitoring [CGM] devices, among others,” she said.

It also includes “recommendations for preoperative glycemic goals as well as when the use of correctional insulin – well known as sliding scale insulin – may be appropriate” and when it is not.

The document, which replaces a 2012 guideline, was published online in the Journal of Clinical Endocrinology & Metabolism.

A multidisciplinary panel developed the document over the last 3 years to answer 10 clinical practice questions related to management of non–critically ill hospitalized patients with diabetes or newly discovered hyperglycemia.

Use of CGM devices in hospital

The first recommendation is: “In adults with insulin-treated diabetes hospitalized for noncritical illness who are at high risk of hypoglycemia, we suggest the use of real-time [CGM] with confirmatory bedside point-of-care blood glucose monitoring for adjustments in insulin dosing rather than point-of-care blood glucose testing alone in hospital settings where resources and training are available.” (Conditional recommendation. Low certainty of evidence).

“We were actually very careful in terms of looking at the data” for use of CGMs, Dr. Korytkowski said in an interview.

Although CGMs are approved by the Food and Drug Administration in the outpatient setting, and that’s becoming the standard of care there, they are not yet approved for in-hospital use.

However, the FDA granted an emergency allowance for use of CGMs in hospitals during the COVID-19 pandemic.

That was “when everyone was scrambling for what to do,” Dr. Korytkowski noted. “There was a shortage of personal protective equipment and a real interest in trying to limit the amount of exposure of healthcare personnel in some of these really critically ill patients for whom intravenous insulin therapy was used to control their glucose level.”

On March 1, the FDA granted Breakthrough Devices Designation for Dexcom CGM use in the hospital setting.

The new guideline suggests that CGM be used to detect trends in glycemic management, with insulin dosing decisions based on point-of-care glucose measurements (the standard of care).

Implementing CGM for glycemic management in hospitals, Dr. Korytkowski said, would require “extensive staff and nursing education to have people with expertise available to provide support to nursing personnel who are both placing these devices, changing these devices, looking at trends, and then knowing when to remove them for certain procedures such as MRI or radiologic procedures.”

“We know that not all hospitals may be readily available to use these devices,” she said. “It is an area of active research. But the use of these devices during the pandemic, in both critical care and non–critical care setting has really provided us with a lot of information that was used to formulate this suggestion in the guideline.”

The document addresses the following areas: CGM, continuous subcutaneous insulin infusion pump therapy, inpatient diabetes education, prespecified preoperative glycemic targets, use of neutral protamine Hagedorn insulin for glucocorticoid or enteral nutrition-associated hyperglycemia, noninsulin therapies, preoperative carbohydrate-containing oral fluids, carbohydrate counting for prandial (mealtime) insulin dosing, and correctional and scheduled (basal or basal bolus) insulin therapies.

Nine key recommendations

Dr. Korytkowski identified nine key recommendations:

- CGM systems can help guide glycemic management with reduced risk for hypoglycemia.

- Patients experiencing glucocorticoid- or enteral nutrition–associated hyperglycemia require scheduled insulin therapy to address anticipated glucose excursions.

- Selected patients using insulin pump therapy prior to a hospital admission can continue to use these devices in the hospital if they have the mental and physical capacity to do so with knowledgeable hospital personnel.

- Diabetes self-management education provided to hospitalized patients can promote improved glycemic control following discharge with reductions in the risk for hospital readmission. “We know that is recommended for patients in the outpatient setting but often they do not get this,” she said. “We were able to observe that this can also impact long-term outcomes.”

- Patients with diabetes scheduled for elective surgery may have improved postoperative outcomes when preoperative hemoglobin A1c is 8% or less and preoperative blood glucose is less than 180 mg/dL. “This recommendation answers the question: ‘Where should glycemic goals be for people who are undergoing surgery?’ ”

- Providing preoperative carbohydrate-containing beverages to patients with known diabetes is not recommended.

- Patients with newly recognized hyperglycemia or well-managed diabetes on noninsulin therapy may be treated with correctional insulin alone as initial therapy at hospital admission.

- Some noninsulin diabetes therapies can be used in combination with correctional insulin for patients with type 2 diabetes who have mild hyperglycemia.

- Correctional insulin – “otherwise known as sliding-scale insulin” – can be used as initial therapy for patients with newly recognized hyperglycemia or type 2 diabetes treated with noninsulin therapy prior to hospital admission.

- Scheduled insulin therapy is preferred for patients experiencing persistent blood glucose values greater than 180 mg/dL and is recommended for patients using insulin therapy prior to admission.

The guideline writers’ hopes

“We hope that this guideline will resolve debates” about appropriate preoperative glycemic management and when sliding-scale insulin can be used and should not be used, said Dr. Korytkowski.

The authors also hope that “it will stimulate research funding for this very important aspect of diabetes care, and that hospitals will recognize the importance of having access to knowledgeable diabetes care and education specialists who can provide staff education regarding inpatient glycemic management, provide oversight for patients using insulin pump therapy or CGM devices, and empower hospital nurses to provide diabetes [self-management] education prior to patient discharge.”

Claire Pegg, the patient representative on the panel, hopes “that this guideline serves as the beginning of a conversation that will allow inpatient caregivers to provide individualized care to patients – some of whom may be self-sufficient with their glycemic management and others who need additional assistance.”

Development of the guideline was funded by the Endocrine Society. Dr. Korytkowski has reported no relevant financial disclosures.

A version of this article first appeared on Medscape.com.

FROM ENDO 2022

FDA: Urgent device correction, recall for Philips ventilator

The U.S. Food and Drug Administration has announced a Class I recall for Philips Respironics V60 and V60 Plus ventilators, citing a power failure leading to potential oxygen deprivation. Class I recalls, the most severe, are reserved for devices that may cause serious injury or death, as noted in the FDA’s announcement. As of April 14, one death and four injuries have been associated with this device failure.

These ventilators are commonly used in hospitals or under medical supervision for patients who have difficulty regulating breathing on their own. Normally, if oxygen flow is interrupted, the device sounds alarms, alerting supervisors. The failure comes when a power fluctuation causes the device to randomly shut down, which forces the alarm system to reboot. This internal disruption is the reason for the recall.

When the device shuts down out of the blue, it may or may not sound the requisite alarm that would allow providers to intervene. If the device does not sound the alarm, patients may lose oxygen for an extended period, without a provider even knowing.

Philips was notified of these problems and began the recall process on March 10. Currently, it is estimated that 56,671 devices have been distributed throughout the United States. The FDA and Philips Respironics advise that if providers are already using these ventilators, they may continue to do so in accordance with an extra set of instructions.

First, customers should connect the device to an external alarm or nurse call system. Second, they should use an external oxygen monitor and a pulse oximeter to keep track of air flow. Finally, if one is available, there should be a backup ventilator on the premises. That way, if there is an interruption in oxygen flow, someone will be alerted and can quickly intervene.

If there is a problem, the patient should be removed from the Philips ventilator and immediately placed on an alternate device. The FDA instructs customers who have experienced problems to report them to its MedWatch database.

A version of this article first appeared on Medscape.com.

Hospital medicine gains popularity among newly minted physicians

In a new study, published in Annals of Internal Medicine, researchers from the American Board of Internal Medicine (ABIM) reviewed certification data from 67,902 general internists, accounting for 80% of all general internists certified in the United States from 1990 to 2017.

The researchers also used data from Medicare fee-for-service claims from 2008-2018 to measure and categorize practice setting types. The claims were from patients aged 65 years or older with at least 20 evaluation and management visits each year. Practice settings were categorized as hospitalist, outpatient, or mixed.

“ABIM is always working to understand the real-life experience of physicians, and this project grew out of that sort of analysis,” lead author Bradley M. Gray, PhD, a health services researcher at ABIM in Philadelphia, said in an interview. “We wanted to better understand practice setting, because that relates to the kinds of questions that we ask on our certifying exams. When we did this, we noticed a trend toward hospital medicine.”

Overall, the percentages of general internists in hospitalist practice and outpatient-only practice increased during the study period, from 25% to 40% and from 23% to 38%, respectively. By contrast, the percentage of general internists in a mixed-practice setting decreased from 52% to 23%, a 56% decline. Most of the physicians who left the mixed practice setting switched to outpatient-only practices.

Among the internists certified in 2017, 71% practiced as hospitalists, compared with 8% practicing as outpatient-only physicians. Most physicians remained in their original choice of practice setting. For physicians certified in 1999 and 2012, 86% and 85%, respectively, of those who chose hospitalist medicine remained in the hospital setting 5 years later, as did 95% of outpatient physicians, but only 57% of mixed-practice physicians.

The shift to outpatient practice among senior physicians offset the potential decline in outpatient primary care resulting from the increased choice of hospitalist medicine by new internists, the researchers noted.

The study findings were limited by several factors, including the reliance on Medicare fee-for-service claims, the researchers noted.

“We were surprised by both the dramatic shift toward hospital medicine by new physicians and the shift to outpatient only (an extreme category) for more senior physicians,” Dr. Gray said in an interview.

The shift toward outpatient practice among older physicians may be driven by convenience, said Dr. Gray. “I suspect that it is more efficient to specialize in terms of practice setting. Only seeing patients in the outpatient setting means that you don’t have to travel to the hospital, which can be time consuming.

“Also, with fewer new physicians going into primary care, older physicians need to focus on outpatient visits. This could be problematic in the future as more senior physicians retire and are replaced by new physicians who focus on hospital care,” which could lead to more shortages in primary care physicians, he explained.

The trend toward hospital medicine as a career has been going on since before the pandemic, said Dr. Gray. “I don’t think the pandemic will ultimately impact this trend. That said, at least in the short run, there may have been a decreased demand for primary care, but that is just my speculation. As more data flow in we will be able to answer this question more directly.”

Next steps for research included digging deeper into the data to understand the nature of conditions facing hospitalists, Dr. Gray said.

Implications for primary care

“This study provides an updated snapshot of the popularity of hospital medicine,” said Bradley A. Sharpe, MD, of the division of hospital medicine at the University of California, San Francisco. “It is also important to conduct this study now as health systems think about the challenge of providing high-quality primary care with a rapidly decreasing number of internists choosing to practice outpatient medicine.” Dr. Sharpe was not involved in the study.

“The most surprising finding to me was not the increase in general internists focusing on hospital medicine, but the amount of the increase; it is remarkable that nearly three quarters of general internists are choosing to practice as hospitalists,” Dr. Sharpe noted.

“I think there are a number of key factors at play,” he said. “First, as hospital medicine as a field is now more than 25 years old, hospitals and health systems have evolved to create hospital medicine jobs that are interesting, engaging, rewarding (financially and otherwise), doable, and sustainable. Second, being an outpatient internist is incredibly challenging; multiple studies have shown that it is essentially impossible to complete the evidence-based preventive care for a panel of patients on top of everything else. We know burnout rates are often higher among primary care and family medicine providers. On top of that, the expansion of electronic health records and patient access has led to a massive increase in messages to providers; this has been shown to be associated with burnout.”

The potential impact of the pandemic on physicians’ choices and the trend toward hospital medicine is an interesting question, Dr. Sharpe said. The current study showed only trends through 2017.

“To be honest, I think it is difficult to predict,” he said. “Hospitalists shouldered much of the burden of COVID care nationally and burnout rates are high. One could imagine the extra work (as well as concern for personal safety) could lead to fewer providers choosing hospital medicine.

“At the same time, the pandemic has driven many of us to reflect on life and our values and what is important and, through that lens, providers might choose hospital medicine as a more sustainable, doable, rewarding, and enjoyable career choice,” Dr. Sharpe emphasized.

“Additional research could explore the drivers of this clear trend toward hospital medicine. Determining what is motivating this trend could help hospitals and health systems ensure they have the right workforce for the future and, in particular, how to create outpatient positions that are attractive and rewarding,” he said.

The study received no outside funding. The researchers and Dr. Sharpe disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.

Where Does the Hospital Belong? Perspectives on Hospital at Home in the 21st Century

From Medically Home Group, Boston, MA.

Brick-and-mortar hospitals in the United States have historically been considered the dominant setting for providing care to patients. The coordination and delivery of care has previously been bound to physical hospitals largely because multidisciplinary services were only accessible in a single location. While the fundamental make-up of these services remains unchanged, these services are now available in alternate settings. They include access to a patient care team, supplies, diagnostics, pharmacy, and advanced therapeutic interventions. Presently, the physical environment is becoming increasingly irrelevant as the core of what makes the traditional hospital (the professional staff, collaborative work processes, and the dynamics of the space) has been translated into a modern, digitally integrated environment. The elements necessary to providing safe, effective care in a physical hospital setting are now available in a patient’s home.

Impetus for the Model

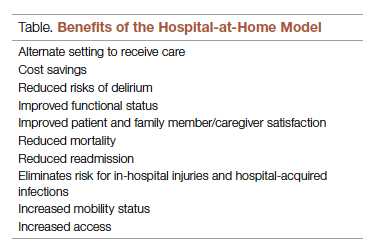

As hospitals reconsider how and where they deliver patient care because of limited resources, the hospital-at-home model has gained significant momentum and interest. This model transforms a home into a hospital. The inpatient acute care episode is entirely substituted with an intensive at-home hospital admission enabled by technology, multidisciplinary teams, and ancillary services. Furthermore, patients requiring post-acute support can be transitioned to their next phase of care seamlessly. Given the nationwide nursing shortage, aging population, challenges uncovered by the COVID-19 pandemic, rising hospital costs, nurse/provider burnout related to challenging work environments, and capacity constraints, a shift toward the combination of virtual and in-home care is imperative. The hospital-at-home model has been associated with superior patient outcomes, including reduced risks of delirium, improved functional status, improved patient and family member satisfaction, reduced mortality, reduced readmissions, and significantly lower costs.1 COVID-19 alone has unmasked major facility-based deficiencies and limitations of our health care system. While the pandemic is not the impetus for the hospital-at-home model, the extended stress of this event has created a unique opportunity to reimagine and transform our health care delivery system so that it is less fragmented and more flexible.

Nursing in the Model

Nursing is central to the hospital-at-home model. Virtual nurses provide meticulous care plan oversight, assessment, and documentation across in-home service providers, to ensure holistic, safe, transparent, and continuous progression toward care plan milestones. The virtual nurse monitors patients using in-home technology that is set up at the time of admission. Connecting with patients to verify social and medical needs, the virtual nurse advocates for their patients and uses these technologies to provide care and deploy on-demand, hands-on services to the patient. Service providers such as paramedics, infusion nurses, or home health nurses may be deployed to provide services in the patient’s home. By bringing in supplies, therapeutics, and interdisciplinary team members, the capabilities of a brick-and-mortar hospital are replicated in the home. All actions that occur wherever the patient is receiving care are overseen by professional nursing staff; in short, virtual nurses are the equivalent of bedside nurses in brick-and-mortar health care facilities.

Potential Benefits

There are many benefits to the hospital-at-home model (Table). This health care model can be particularly helpful for patients who require frequent admission to acute care facilities, and is well suited for patients with a range of conditions, including those with COVID-19, pneumonia, cellulitis, or congestive heart failure. This care model helps eliminate some of the stressors for patients who have chronic illnesses or other conditions that require frequent hospital admissions. Patients can independently recover at home and can also be surrounded by their loved ones and pets while recovering. This care approach additionally eliminates the risk of hospital-acquired infections and injuries. The hospital-at-home model allows for increased mobility,2 as patients are familiar with their surroundings, resulting in reduced onset of delirium. Additionally, patients with improved mobility performance are less likely to experience negative health outcomes.3 There is less chance of sleep disruption as the patient is sleeping in their own bed—no unfamiliar roommate, no call bells or health care personnel frequently coming into the room. The in-home technology set up for remote patient monitoring is designed with the user in mind. Ease of use empowers the patient to collaborate with their care team on their own terms and center the priorities of themselves and their families.

Positive Outcomes

The hospital-at-home model is associated with positive outcomes. The authors of a systematic review identified 10 randomized controlled trials of hospital-at-home programs (with a total of 1372 patients), but were able to obtain data for only 5 of these trials (with a total of 844 patients).4 They found a 38% reduction in 6-month mortality for patients who received hospital care at home, as well as significantly higher patient satisfaction across a range of medical conditions, including patients with cellulitis and community-acquired pneumonia, as well as elderly patients with multiple medical conditions. The authors concluded that hospital care at home was less expensive than admission to an acute care hospital.4 Similarly, a meta-analysis done by Caplan et al5 that included 61 randomized controlled trials concluded that hospital at home is associated with reductions in mortality, readmission rates, and cost, and increases in patient and caregiver satisfaction. Levine et al2 found reduced costs and utilization with home hospitalization compared to in-hospital care, as well as improved patient mobility status.

The home is the ideal place to empower patients and caregivers to engage in self-management.2 Receiving hospital care at home eliminates the need for dealing with transportation arrangements, traffic, road tolls, and time/scheduling constraints, or finding care for a dependent family member, some of the many stressors that may be experienced by patients who require frequent trips to the hospital. For patients who may not be clinically suitable candidates for hospital at home, such as those requiring critical care intervention and support, the brick-and-mortar hospital is still the appropriate site of care. The hospital-at-home model helps prevent bed shortages in brick-and-mortar hospital settings by allowing hospital care at home for patients who meet preset criteria. These patients can be hospitalized in alternative locations such as their own homes or the residence of a friend. This helps increase health system capacity as well as resiliency.

In addition to expanding safe and appropriate treatment spaces, the hospital-at-home model helps increase access to care for patients during nonstandard hours, including weekends, holidays, or when the waiting time in the emergency room is painfully long. Furthermore, providing care in the home gives the clinical team valuable insight into the patient’s daily life and routine. Performing medication reconciliation with the medicine cabinet in sight and dietary education in a patient’s kitchen are powerful touch points.2 For example, a patient with congestive heart failure who must undergo diuresis is much more likely to meet their care goals when their home diet is aligned with the treatment goal. By being able to see exactly what is in a patient’s pantry and fridge, the care team can create a much more tailored approach to sodium intake and fluid management. Providers can create and execute true patient-centric care as they gain direct insight into the patient’s lifestyle, which is clearly valuable when creating care plans for complex chronic health issues.

Challenges to Implementation and Scaling

Although there are clear benefits to hospital at home, how to best implement and scale this model presents a challenge. In addition to educating patients and families about this model of care, health care systems must expand their hospital-at-home programs and provide education about this model to clinical staff and trainees, and insurers must create reimbursement paradigms. Enrolling patients who meet eligibility criteria is the easiest hurdle, as hospital-at-home programs function best when they enroll and service as many patients as possible, including underserved populations.

Upfront Costs and Cost Savings

While there are upfront costs to set up technology and coordinate services, hospital at home also provides significant total cost savings when compared to coordination associated with brick-and-mortar admission. Hospital care accounts for about one-third of total medical expenditures and is a leading cause of debt.2 Eliminating fixed hospital costs such as facility, overhead, and equipment costs through adoption of the hospital-at-home model can lead to a reduction in expenditures. It has been found that fewer laboratory and diagnostic tests are ordered for hospital-at-home patients when compared to similar patients in brick-and-mortar hospital settings, with comparable or better clinical patient outcomes.6 Furthermore, it is estimated that there are cost savings of 19% to 30% when compared to traditional inpatient care.6 Without legislative action, upon the end of the current COVID-19 public health emergency, the Centers for Medicare & Medicaid Services’ Acute Hospital Care at Home waiver will terminate. This could slow down scaling of the model. However, over the past 2 years there has been enough buy-in from major health systems and patients to continue the momentum of the model’s growth. When setting up a hospital-at-home program, it would be wise to consider a few factors: where in the hospital or health system entity structure the hospital-at-home program will reside, which existing resources can be leveraged within the hospital or health system, and what the state or federal regulatory requirements are for such a program. This type of program continues to fill gaps within the US health care system, meeting the needs of widely overlooked populations and increasing access to essential ancillary services.

Conclusion

It is time to consider our bias toward hospital-first options when managing the care needs of our patients. Health care providers have the option to advocate for holistic care, better experience, and better outcomes. Home-based options are safe, equitable, and patient-centric. Increased costs, consumerism, and technology have pushed us to think about alternative approaches to patient care delivery, and the pandemic created a unique opportunity to see just how far the health care system could stretch itself with capacity constraints, insufficient resources, and staff shortages. In light of new possibilities, it is time to reimagine and transform our health care delivery system so that it is unified, seamless, cohesive, and flexible.

Corresponding author: Payal Sharma, DNP, MSN, RN, FNP-BC, CBN; psharma@medicallyhome.com.

Disclosures: None reported.

1. Cai S, Laurel PA, Makineni R, Marks ML. Evaluation of a hospital-in-home program implemented among veterans. Am J Manag Care. 2017;23(8):482-487.

2. Levine DM, Ouchi K, Blanchfield B, et al. Hospital-level care at home for acutely ill adults: a pilot randomized controlled trial. J Gen Intern Med. 2018;33(5):729-736. doi:10.1007/s11606-018-4307-z

3. Shuman V, Coyle PC, Perera S, et al. Association between improved mobility and distal health outcomes. J Gerontol A Biol Sci Med Sci. 2020;75(12):2412-2417. doi:10.1093/gerona/glaa086

4. Shepperd S, Doll H, Angus RM, et al. Avoiding hospital admission through provision of hospital care at home: a systematic review and meta-analysis of individual patient data. CMAJ. 2009;180(2):175-182. doi:10.1503/cmaj.081491

5. Caplan GA, Sulaiman NS, Mangin DA, et al. A meta-analysis of “hospital in the home”. Med J Aust. 2012;197(9):512-519. doi:10.5694/mja12.10480

6. Hospital at Home. Johns Hopkins Medicine. Healthcare Solutions. Accessed May 20, 2022. https://www.johnshopkinssolutions.com/solution/hospital-at-home/


The home is the ideal place to empower patients and caregivers to engage in self-management.2 Receiving hospital care at home eliminates the need for dealing with transportation arrangements, traffic, road tolls, and time/scheduling constraints, or finding care for a dependent family member, some of the many stressors that may be experienced by patients who require frequent trips to the hospital. For patients who may not be clinically suitable candidates for hospital at home, such as those requiring critical care intervention and support, the brick-and-mortar hospital is still the appropriate site of care. The hospital-at-home model helps prevent bed shortages in brick-and-mortar hospital settings by allowing hospital care at home for patients who meet preset criteria. These patients can be hospitalized in alternative locations such as their own homes or the residence of a friend. This helps increase health system capacity as well as resiliency.

In addition to expanding safe and appropriate treatment spaces, the hospital-at-home model helps increase access to care for patients during nonstandard hours, including weekends, holidays, or when the waiting time in the emergency room is painfully long. Furthermore, providing care in the home gives the clinical team valuable insight into the patient’s daily life and routine. Performing medication reconciliation with the medicine cabinet in sight and dietary education in a patient’s kitchen are powerful touch points.2 For example, a patient with congestive heart failure who must undergo diuresis is much more likely to meet their care goals when their home diet is aligned with the treatment goal. By being able to see exactly what is in a patient’s pantry and fridge, the care team can create a much more tailored approach to sodium intake and fluid management. Providers can create and execute true patient-centric care as they gain direct insight into the patient’s lifestyle, which is clearly valuable when creating care plans for complex chronic health issues.

Challenges to Implementation and Scaling

Although there are clear benefits to hospital at home, how to best implement and scale this model presents a challenge. In addition to educating patients and families about this model of care, health care systems must expand their hospital-at-home programs and provide education about this model to clinical staff and trainees, and insurers must create reimbursement paradigms. Enrolling patients who meet eligibility criteria is the easiest hurdle, as hospital-at-home programs function best when they enroll and serve as many patients as possible, including underserved populations.

Upfront Costs and Cost Savings

While there are upfront costs to set up technology and coordinate services, hospital at home also provides significant total cost savings when compared to coordination associated with brick-and-mortar admission. Hospital care accounts for about one-third of total medical expenditures and is a leading cause of debt.2 Eliminating fixed hospital costs such as facility, overhead, and equipment costs through adoption of the hospital-at-home model can lead to a reduction in expenditures. It has been found that fewer laboratory and diagnostic tests are ordered for hospital-at-home patients when compared to similar patients in brick-and-mortar hospital settings, with comparable or better clinical patient outcomes.6 Furthermore, it is estimated that there are cost savings of 19% to 30% when compared to traditional inpatient care.6 Without legislative action, upon the end of the current COVID-19 public health emergency, the Centers for Medicare & Medicaid Services’ Acute Hospital Care at Home waiver will terminate. This could slow down scaling of the model. However, over the past 2 years there has been enough buy-in from major health systems and patients to continue the momentum of the model’s growth. When setting up a hospital-at-home program, it would be wise to consider a few factors: where in the hospital or health system entity structure the hospital-at-home program will reside, which existing resources can be leveraged within the hospital or health system, and which state or federal regulatory requirements apply to such a program. This type of program continues to fill gaps within the US health care system, meeting the needs of widely overlooked populations and increasing access to essential ancillary services.

Conclusion

It is time to consider our bias toward hospital-first options when managing the care needs of our patients. Health care providers have the option to advocate for holistic care, better experience, and better outcomes. Home-based options are safe, equitable, and patient-centric. Increased costs, consumerism, and technology have pushed us to think about alternative approaches to patient care delivery, and the pandemic created a unique opportunity to see just how far the health care system could stretch itself with capacity constraints, insufficient resources, and staff shortages. In light of new possibilities, it is time to reimagine and transform our health care delivery system so that it is unified, seamless, cohesive, and flexible.

Corresponding author: Payal Sharma, DNP, MSN, RN, FNP-BC, CBN; psharma@medicallyhome.com.

Disclosures: None reported.

From Medically Home Group, Boston, MA.

Brick-and-mortar hospitals in the United States have historically been considered the dominant setting for providing care to patients. The coordination and delivery of care has previously been bound to physical hospitals largely because multidisciplinary services were only accessible in an individual location. While the fundamental make-up of these services remains unchanged, these services are now available in alternate settings. Some of these services include access to a patient care team, supplies, diagnostics, pharmacy, and advanced therapeutic interventions. Presently, the physical environment is becoming increasingly irrelevant, as the core elements of the traditional hospital (the professional staff, collaborative work processes, and the dynamics of the space) have all been translated into a modern, digitally integrated environment. The elements necessary for providing safe, effective care in a physical hospital setting are now available in a patient’s home.
