Not so fast food

As long as I can remember, children have been notoriously wasteful when dining in school cafeterias. Even those children who bring their own food often return home in the afternoon with their lunches half eaten. Not surprisingly, the food tossed out is often the healthier portion of the meal. Schools have tried a variety of strategies to curb this wastage, including using volunteer student monitors to police and encourage ecologically based recycling.

The authors of a recent study published in JAMA Network Open observed that when elementary and middle-school students were allowed a 20-minute seated lunch period, they consumed more food and there was significantly less waste of fruits and vegetables compared with when the students’ lunch period was limited to 10 minutes. Interestingly, there was no difference in beverage and entrée consumption when the lunch period was doubled.

The authors postulate that younger children may not have acquired the dexterity to feed themselves optimally in the shorter lunch period. I’m not sure I buy that argument. It may be simply that the children ate and drank their favorites first and needed a bit more time to allow their little guts to move things along. But, regardless of the explanation, the investigators’ observations deserve further study.

When I was in high school, our lunch period was a full hour, which allowed me to make the half-mile walk home and back to eat a home-prepared meal. The noon hour was when school clubs and committees met, and there was a full schedule of diversions to fill out the hour. I don’t recall the seated portion of the lunch period having any time restriction.

By the time my own children were in middle school, lunch periods lasted no longer than 20 minutes. I was not surprised to learn from this recent study that in some schools the seated lunch period has been shortened to 10 minutes. In some cases the truncated lunch periods are a response to space and time limitations. I fear that occasionally educators and administrators have found it so difficult to keep young children engaged, accustomed as those children are to watching television while they eat, that the periods have been shortened to minimize the chaos.

Here in Maine, the governor has just announced plans to offer free breakfast and lunch to every student in response to a federal initiative. If we intend to make nutrition a cornerstone of the educational process, this study from the University of Illinois at Urbana-Champaign suggests that we must do more than simply provide the food at no cost. We must somehow carve out more time in the day for the children to eat a healthy diet.

But where is this time going to come from? Many school systems have already cannibalized physical education to the point that most children are not getting a healthy amount of exercise. It is unfortunate that we have come to expect public school systems to solve all of our societal ills and compensate for less-than-healthy home environments. But that is the reality. If we think nutrition and physical activity are important components of our children’s education, then we must make the time necessary to provide them.

Will this mean longer school days? And will those longer days cost money? You bet they will, but that may be the price we have to pay for healthier, better-educated children.

Dr. Wilkoff practiced primary care pediatrics in Brunswick, Maine, for nearly 40 years. He has authored several books on behavioral pediatrics, including “How to Say No to Your Toddler.” Other than a Littmann stethoscope he accepted as a first-year medical student in 1966, Dr. Wilkoff reports having nothing to disclose. Email him at pdnews@mdedge.com.

Children and COVID: New cases soar to near-record level

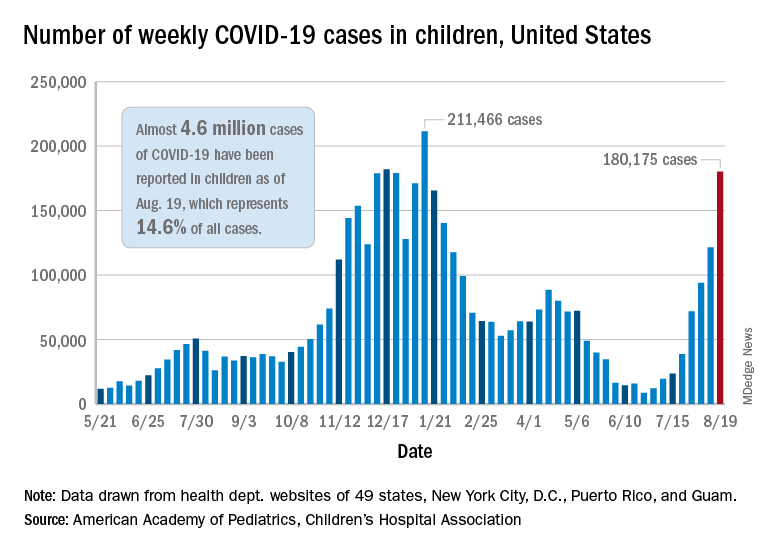

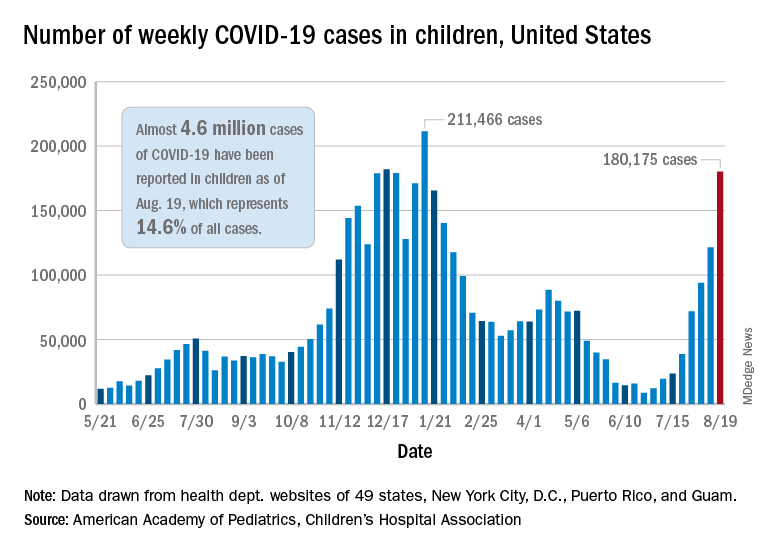

Weekly cases of COVID-19 in children jumped by nearly 50% in the United States, posting the highest count since hitting a pandemic high back in mid-January, a new report shows.

The latest weekly figure represents a 48% increase over the previous week and an increase of over 2,000% in the 8 weeks since the national count dropped to a low of 8,500 cases for the week of June 18-24, the American Academy of Pediatrics and the Children’s Hospital Association said in their weekly COVID report.
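The two percentages above can be sanity-checked with a little arithmetic. The sketch below uses only the 8,500 figure and the two percentages quoted from the report; the function and variable names are illustrative, and the derived counts are lower bounds implied by "over 2,000%," not figures taken from the AAP/CHA report itself.

```python
def apply_percent_increase(base, percent):
    """Return the value reached when `base` grows by `percent` percent."""
    return base * (1 + percent / 100)


low_week = 8_500         # cases for the week of June 18-24 (quoted above)
eight_week_rise = 2_000  # "over 2,000%", so the result is a lower bound
weekly_rise = 48         # increase over the previous week (quoted above)

# Lower bound on the latest weekly count implied by the 8-week increase.
implied_latest = apply_percent_increase(low_week, eight_week_rise)

# Undo the 48% week-over-week rise to estimate the prior week's count.
implied_previous = implied_latest / (1 + weekly_rise / 100)

print(f"Latest week: more than {implied_latest:,.0f} cases")
print(f"Previous week: at least about {implied_previous:,.0f} cases")
```

Note that a 2,000% increase means the count grew to 21 times its starting value, not 20 times, which is why the function adds 1 to the fractional change.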

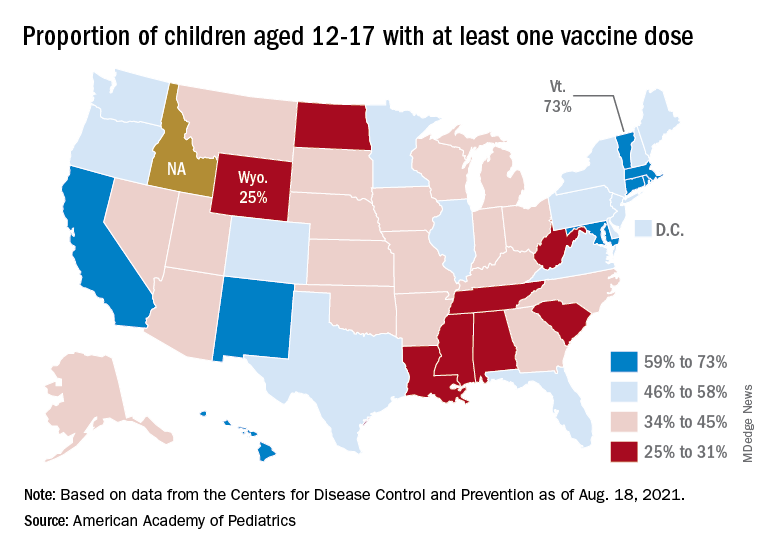

Vaccinations, in the meantime, appear to be headed in the opposite direction. Vaccine initiations were down for the second consecutive week, falling by 18% among 12- to 15-year-olds and by 15% in those aged 16-17 years, according to data from the Centers for Disease Control and Prevention.

Nationally, about 47% of children aged 12-15 and 56% of those aged 16-17 have received at least one dose of COVID vaccine as of Aug. 23, with 34% and 44%, respectively, reaching full vaccination. The total number of children with at least one dose is 11.6 million, including a relatively small number (about 200,000) of children under age 12 years, the CDC said on its COVID Data Tracker.

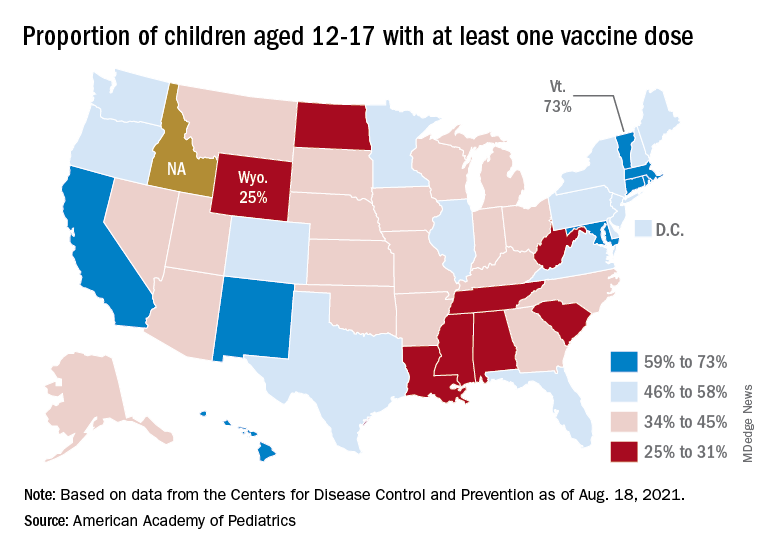

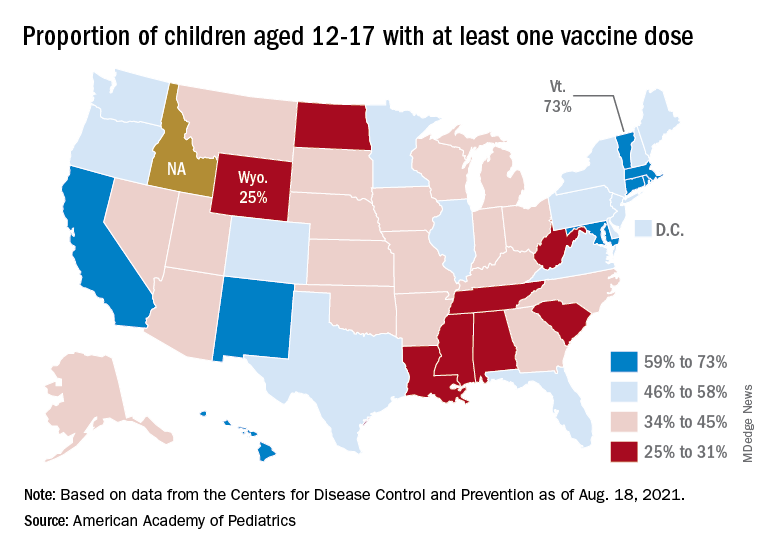

At the state level, vaccination is a source of considerable disparity. In Vermont, 73% of children aged 12-17 had received at least one dose by Aug. 18, and 63% were fully vaccinated. In Wyoming, however, just 25% of children had received at least one dose (17% are fully vaccinated), while Alabama has a lowest-in-the-nation full vaccination rate of 14%, based on a separate AAP analysis of CDC data.

There are seven states in which over 60% of 12- to 17-year-olds have at least started the vaccine regimen and five states where less than 30% have received at least one dose, the AAP noted.

Back on the incidence side of the pandemic, Mississippi and Hawaii had the largest increases in new cases over the past 2 weeks, followed by Florida and West Virginia. Cumulative figures show that California has had the most cases overall in children (550,337), Vermont has the highest proportion of all cases in children (22.9%), and Rhode Island has the highest rate of cases per 100,000 (10,636), the AAP and CHA said in the joint report based on data from 49 states, the District of Columbia, New York City, Puerto Rico, and Guam.

Add up all those jurisdictions, and it works out to 4.6 million children infected with SARS-CoV-2 as of Aug. 19, with children representing 14.6% of all cases since the start of the pandemic. There have been over 18,000 hospitalizations so far, which is just 2.3% of the total for all ages in the 23 states (and New York City) that are reporting such data on their health department websites, the AAP and CHA said.

The number of COVID-related deaths in children is now 402 after the largest 1-week increase (24) since late May of 2020, when the AAP/CHA coverage began. Mortality data by age are available from 44 states, New York City, Puerto Rico, and Guam.

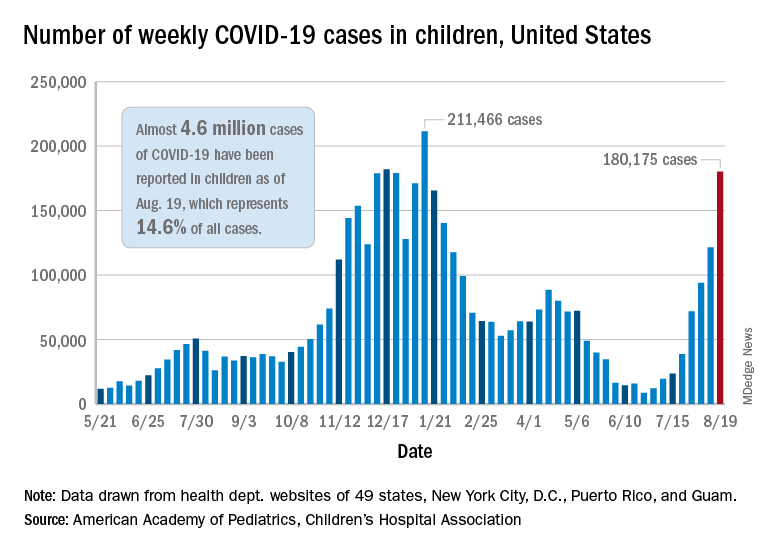

U.S. kidney transplants grow in number and success

During 2016-2019, U.S. centers performed kidney transplants in nearly 77,000 patients, a jump of almost 25% compared with 4-year averages of about 62,000 patients throughout 2004-2015. That works out to about 15,000 more patients receiving donor kidneys, Sundaram Hariharan, MD, and associates reported in the New England Journal of Medicine in a review of all U.S. renal transplantations performed during 1996-2019.
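As a quick check on the figures in this paragraph, the sketch below recomputes the increase from the rounded counts quoted above (about 62,000 and nearly 77,000). The function name is illustrative, and because the inputs are rounded, the result is only approximate.

```python
def percent_change(old, new):
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


earlier_four_year_avg = 62_000  # approximate 4-year average, 2004-2015
recent_four_years = 77_000      # approximate total, 2016-2019

extra_patients = recent_four_years - earlier_four_year_avg
jump = percent_change(earlier_four_year_avg, recent_four_years)

print(f"{extra_patients:,} more patients, a {jump:.1f}% increase")
# prints "15,000 more patients, a 24.2% increase"
```

The result, a little over 24%, is consistent with the "almost 25%" jump described in the text.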

Coupled with the volume uptick during this 24-year period were new lows in graft losses and patient deaths. By 2018, mortality during the first year following transplantation was about 1% among patients who had received a kidney from a living donor and about 3% when the organ came from a deceased donor, nearly half the rate of 2 decades earlier, in 1996. Rates of first-year graft loss during 2017 were also about half of what they had been in 1996, occurring in about 2% of patients who received a living-donor organ and in about 6% of those who got a kidney from a deceased donor.

“Twenty years ago, kidney transplantation was the preferred option compared with dialysis, and even more so now,” summed up Dr. Hariharan, a senior transplant nephrologist and professor of medicine and surgery at the University of Pittsburgh Medical Center and first author of the report. Kidney transplantation survival at U.S. centers “improved steadily over the past 24 years, despite patient variables becoming worse,” he said in an interview.

Kidney recipients are older, more obese, and have more prevalent diabetes

During the period studied, kidney transplant recipients became on average older and more obese, and had a higher prevalence of diabetes; the age of organ donors grew as well. The prevalence of diabetes among patients who received a kidney from a deceased donor increased from 24% during 1996-1999 to 36% during 2016-2019, while diabetes prevalence among recipients of an organ from a living donor rose from 25% in 1996-1999 to 29% during 2016-2019.

The improved graft and patient survival numbers “are very encouraging trends,” said Michelle A. Josephson, MD, professor and medical director of kidney transplantation at the University of Chicago, who was not involved with the report. “We have been hearing for a number of years that short-term graft survival had improved, but I’m thrilled to learn that long-term survival has also improved.”

The report documented 10-year patient survival of 67% among those who received grafts during 2008-2011, up from 61% during 1996-1999, and a 10-year overall graft survival rate of 54% in the 2008-2011 cohort, an improvement from the 42% rate in patients who received their organs in 1996-1999. Dr. Hariharan characterized these changes as “modest.”

These improvements in long-term graft and patient survival are “meaningful, and particularly notable that outcomes improved despite increased complexity of the transplant population,” said Krista L. Lentine, MD, PhD, professor and medical director of living donation at Saint Louis University. But “despite these improvements, long-term graft survival remains limited,” she cautioned, especially because of risks for substantial complications from chronic immunosuppressive treatment including infection, cancer, glucose intolerance, and dyslipidemia.

The analysis reported by Dr. Hariharan and his associates used data collected by the Scientific Registry of Transplant Recipients, run under contract with the U.S. Department of Health and Human Services, which has tracked all patients who have had kidney transplants at U.S. centers since the late 1980s, said Dr. Hariharan. The database included just over 362,000 total transplants during the 24-year period studied; 36% of all transplants involved organs from living donors, and the remaining patients received kidneys from deceased donors.

Living donations still stagnant; deceased-donor kidneys rise

The data showed that the number of transplants from living donors was stagnant for 2 decades, with 22,525 patients transplanted during 2000-2003 and 23,746 transplanted during 2016-2019, and very similar numbers during the intervening years. The recent spurt in transplants during 2016-2019 compared with the preceding decade depended almost entirely on kidneys from deceased donors. Deceased-donor transplants had risen slowly and steadily during 1996-2015, from about 30,000 during 1996-1999 to about 41,000 during 2012-2015. They then increased more dramatically, by about 12,000 additional transplants during the most recent period, for a total of more than 53,000 transplants from deceased donors during 2016-2019.
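The contrast between the two donor types is easier to see when the quoted counts are put side by side. The sketch below uses only numbers stated in this paragraph; the deceased-donor figures are the rounded values given in the text ("about 41,000" and "more than 53,000"), so the results are approximate, and the variable names are illustrative.

```python
def percent_change(old, new):
    """Percentage change from `old` to `new`."""
    return (new - old) / old * 100


# Living-donor transplants (exact counts quoted in the text).
living_2000_2003 = 22_525
living_2016_2019 = 23_746

# Deceased-donor transplants (rounded counts quoted in the text).
deceased_2012_2015 = 41_000
deceased_2016_2019 = 53_000

living_growth = percent_change(living_2000_2003, living_2016_2019)
deceased_growth = percent_change(deceased_2012_2015, deceased_2016_2019)

# Combined 2016-2019 volume, to compare with the overall total reported
# for the same period.
combined_2016_2019 = living_2016_2019 + deceased_2016_2019

print(f"Living donors: {living_growth:.1f}% growth over about 16 years")
print(f"Deceased donors: {deceased_growth:.1f}% growth over one 4-year step")
print(f"Combined 2016-2019 transplants: about {combined_2016_2019:,}")
```

On these figures, living-donor volume grew by roughly 5% across the whole span, while deceased-donor volume grew by nearly 30% between consecutive 4-year periods. The combined total of about 76,746 lines up with the "nearly 77,000" patients reported for 2016-2019.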

“I strongly recommend organs from living donors” when feasible, said Dr. Hariharan. “At some centers, a high proportion of transplants use living donors, but not at other centers,” he said.

It’s unknown why transplants using organs from deceased donors have shown this growth, but Dr. Hariharan suggested a multifactorial explanation. Those factors include growth in the number of patients with end-stage renal disease who require dialysis, increased numbers of patients listed for kidney transplant, new approaches that allow the use of organs from older donors and from those infected with pathogens such as hepatitis C virus or HIV, greater numbers of people and families agreeing to donate organs, and possibly the opioid crisis, which may have led to increased organ donation. The number of U.S. centers performing kidney transplants rose from fewer than 200 about a quarter of a century ago to about 250 today, he added.

‘Immuno Bill’ guarantees Medicare coverage for immunosuppression

Dr. Hariharan voiced optimism that graft and patient survival rates will continue to improve going forward. One factor will likely be the passage in late 2020 of the “Immuno Bill” by the U.S. Congress, which among other things mandated ongoing coverage starting in 2023 for immunosuppressive drugs for all Medicare beneficiaries with a kidney transplant. Until then, Medicare provides coverage for only 36 months, a time limit that has resulted in nearly 400 kidney recipients annually losing coverage of their immunosuppression medications.

Dr. Hariharan and coauthors called the existing potential for discontinuation of immunosuppressive drugs an “unnecessary impediment to long-term survival for which patients and society paid a heavy price.”

“Kidney transplantation, especially from living donors, offers patients with kidney failure the best chance for long-term survival and improved quality of life, with lower cost to the health care system,” Dr. Lentine said in an interview. Despite the many positive trends detailed in the report from Dr. Hariharan and coauthors, “the vast majority of the more than 700,000 people in the United States with kidney failure will not have an opportunity to receive a transplant due to limitations in organ supply.” And many patients who receive a kidney transplant eventually must resume dialysis because of “limited long-term graft survival resulting from allograft nephropathy, recurrent native disease, medication nonadherence, or other causes.” Plus many potentially transplantable organs go unused.

Dr. Lentine cited a position statement issued in July 2021 by the National Kidney Foundation that made several recommendations on how to improve access to kidney transplants and improve outcomes. “Expanding opportunities for safe living donation, eliminating racial disparities in living-donor access, improving wait-list access and transport readiness, maximizing use of deceased-donor organs, and extending graft longevity are critical priorities,” said Dr. Lentine, lead author on the statement.

“For many or even most patients with kidney failure, transplantation is the optimal form of renal replacement. The better recent outcomes and evolving management strategies make transplantation an even more attractive option,” said Dr. Josephson. Improved outcomes among U.S. transplant patients also highlight the “importance of increasing access to kidney transplantation” for all people with kidney failure who could benefit from this treatment, she added.

Dr. Hariharan and Dr. Lentine had no relevant disclosures. Dr. Josephson has been a consultant to UCB and has an ownership interest in Seagen.

During 2016-2019, U.S. centers performed kidney transplants in nearly 77,000 patients, a jump of almost 25% compared with 4-year averages of about 62,000 patients throughout 2004-2015. That works out to about 15,000 more patients receiving donor kidneys, Sundaram Hariharan, MD, and associates reported in the New England Journal of Medicine in a review of all U.S. renal transplantations performed during 1996-2019.

Coupled with the volume uptick during this 24-year period were new lows in graft losses and patient deaths. By 2018, mortality during the first year following transplantation occurred at about a 1% rate among patients who had received a kidney from a living donor, and at about a 3% rate when the organ came from a deceased donor, nearly half the rate of 2 decades earlier, in 1996. Rates of first-year graft loss during 2017 were also about half of what they had been in 1996, occurring in about 2% of patients who received a living donor organ and in about 6% of those who got a kidney from a deceased donor during 2017.

“Twenty years ago, kidney transplantation was the preferred option compared with dialysis, and even more so now,” summed up Dr. Hariharan, a senior transplant nephrologist and professor of medicine and surgery at the University of Pittsburgh Medical Center and first author of the report. Kidney transplantation survival at U.S. centers “improved steadily over the past 24 years, despite patient variables becoming worse,” he said in an interview.

Kidney recipients are older, more obese, and have more prevalent diabetes

During the period studied, kidney transplant recipients became on average older and more obese, and had a higher prevalence of diabetes; the age of organ donors grew as well. The prevalence of diabetes among patients who received a kidney from a deceased donor increased from 24% during 1996-1999 to 36% during 2016-2019, while diabetes prevalence among recipients of an organ from a living donor rose from 25% in 1996-1999 to 29% during 2016-2019.

The improved graft and patient survival numbers “are very encouraging trends,” said Michelle A. Josephson, MD, professor and medical director of kidney transplantation at the University of Chicago, who was not involved with the report. “We have been hearing for a number of years that short-term graft survival had improved, but I’m thrilled to learn that long-term survival has also improved.”

The report documented 10-year survival of graft recipients during 2008-2011 of 67%, up from 61% during 1996-1999, and a 10-year overall graft survival rate of 54% in the 2008-2011 cohort, an improvement from the 42% rate in patients who received their organs in 1996-1999, changes Dr. Hariharan characterized as “modest.”

These improvements in long-term graft and patient survival are “meaningful, and particularly notable that outcomes improved despite increased complexity of the transplant population,” said Krista L. Lentine, MD, PhD, professor and medical director of living donation at Saint Louis University. But “despite these improvements, long-term graft survival remains limited,” she cautioned, especially because of risks for substantial complications from chronic immunosuppressive treatment including infection, cancer, glucose intolerance, and dyslipidemia.

The analysis reported by Dr. Hariharan and his associates used data collected by the Scientific Registry of Transplant Patients, run under contract with the U.S. Department of Health and Human Services, which has tracked all patients who have had kidney transplants at U.S. centers since the late 1980s, said Dr. Hariharan. The database included just over 362,000 total transplants during the 24-year period studied, with 36% of all transplants involving organs from living donors with the remaining patients receiving kidneys from deceased donors.

Living donations still stagnant; deceased-donor kidneys rise

The data showed that the number of transplants from living donors was stagnant for 2 decades, with 22,525 patients transplanted during 2000-2003 and 23,746 transplanted during 2016-2019, and very similar numbers during the intervening years. The recent spurt in transplants during 2016-2019 compared with the preceding decade depended almost entirely on kidneys from deceased donors. Deceased-donor transplants had risen slowly and steadily during 1996-2015, from about 30,000 during 1996-1999 to about 41,000 during 2012-2015, and then jumped by about 12,000 during the most recent period, for a total of more than 53,000 transplants from deceased donors during 2016-2019.

“I strongly recommend organs from living donors” when feasible, said Dr. Hariharan. “At some centers, a high proportion of transplants use living donors, but not at other centers,” he said.

It’s unknown why transplants using organs from deceased donors have shown this growth, but Dr. Hariharan suggested a multifactorial explanation. Those factors include growth in the number of patients with end-stage renal disease who require dialysis, increased numbers of patients listed for kidney transplant, new approaches that allow the use of organs from older donors and from donors infected with pathogens such as hepatitis C virus or HIV, greater numbers of people and families agreeing to donate organs, and possibly the opioid crisis, which may have led to increased organ donation. The number of U.S. centers performing kidney transplants rose from fewer than 200 about a quarter of a century ago to about 250 today, he added.

‘Immuno Bill’ guarantees Medicare coverage for immunosuppression

Dr. Hariharan voiced optimism that graft and patient survival rates will continue to improve going forward. One factor will likely be the passage in late 2020 of the “Immuno Bill” by the U.S. Congress, which among other things mandated ongoing coverage starting in 2023 for immunosuppressive drugs for all Medicare beneficiaries with a kidney transplant. Until then, Medicare provides coverage for only 36 months, a time limit that has resulted in nearly 400 kidney recipients annually losing coverage of their immunosuppression medications.

Dr. Hariharan and coauthors called the existing potential for discontinuation of immunosuppressive drugs an “unnecessary impediment to long-term survival for which patients and society paid a heavy price.”

“Kidney transplantation, especially from living donors, offers patients with kidney failure the best chance for long-term survival and improved quality of life, with lower cost to the health care system,” Dr. Lentine said in an interview. Despite the many positive trends detailed in the report from Dr. Hariharan and coauthors, “the vast majority of the more than 700,000 people in the United States with kidney failure will not have an opportunity to receive a transplant due to limitations in organ supply.” And many patients who receive a kidney transplant eventually must resume dialysis because of “limited long-term graft survival resulting from allograft nephropathy, recurrent native disease, medication nonadherence, or other causes.” In addition, many potentially transplantable organs go unused.

Dr. Lentine cited a position statement issued in July 2021 by the National Kidney Foundation that made several recommendations on how to improve access to kidney transplants and improve outcomes. “Expanding opportunities for safe living donation, eliminating racial disparities in living-donor access, improving wait-list access and transplant readiness, maximizing use of deceased-donor organs, and extending graft longevity are critical priorities,” said Dr. Lentine, lead author on the statement.

“For many or even most patients with kidney failure transplantation is the optimal form of renal replacement. The better recent outcomes and evolving management strategies make transplantation an even more attractive option,” said Dr. Josephson. Improved outcomes among U.S. transplant patients also highlight the “importance of increasing access to kidney transplantation” for all people with kidney failure who could benefit from this treatment, she added.

Dr. Hariharan and Dr. Lentine had no relevant disclosures. Dr. Josephson has been a consultant to UCB and has an ownership interest in Seagen.

FROM THE NEW ENGLAND JOURNAL OF MEDICINE

Prevalence of youth-onset diabetes climbing, type 2 disease more so in racial/ethnic minorities

The prevalence of youth-onset diabetes in the United States rose significantly from 2001 to 2017, with rates of type 2 diabetes climbing disproportionately among racial/ethnic minorities, according to investigators.

In individuals aged 19 years or younger, prevalence rates of type 1 and type 2 diabetes increased 45.1% and 95.3%, respectively, reported lead author Jean M. Lawrence, ScD, MPH, MSSA, program director of the division of diabetes, endocrinology, and metabolic diseases at the National Institute of Diabetes and Digestive and Kidney Diseases, National Institutes of Health, Bethesda, Md., and colleagues.

“Elucidating differences in diabetes prevalence trends by diabetes type and demographic characteristics is essential to describe the burden of disease and to estimate current and future resource needs,” Dr. Lawrence and colleagues wrote in JAMA.

The retrospective analysis was a part of the ongoing SEARCH study, which includes data from individuals in six areas across the United States: Colorado, California, Ohio, South Carolina, Washington state, and Arizona/New Mexico (Indian Health Services). In the present report, three prevalence years were evaluated: 2001, 2009, and 2017. For each year, approximately 3.5 million youths were included. Findings were reported in terms of diabetes type, race/ethnicity, age at diagnosis, and sex.

Absolute prevalence of type 1 diabetes per 1,000 youths increased from 1.48 in 2001, to 1.93 in 2009, and finally 2.15 in 2017. Across the 16-year period, this represents an absolute increase of 0.67 (95% confidence interval, 0.64-0.70), and a relative increase of 45.1% (95% CI, 40.0%-50.4%). In absolute terms, prevalence increased most among non-Hispanic White (0.93 per 1,000) and non-Hispanic Black (0.89 per 1,000) youths.

While type 2 diabetes was comparatively less common than type 1 diabetes, absolute prevalence per 1,000 youths increased to a greater degree, rising from 0.34 in 2001 to 0.46 in 2009 and to 0.67 in 2017. This amounts to a relative increase across the period of 95.3% (95% CI, 77.0%-115.4%). Absolute increases were disproportionate among racial/ethnic minorities, particularly Black and Hispanic youths, who had absolute increases per 1,000 youths of 0.85 (95% CI, 0.74-0.97) and 0.57 (95% CI, 0.51-0.64), respectively, compared with 0.05 (95% CI, 0.03-0.07) for White youths.

“Increases [among Black and Hispanic youths] were not linear,” the investigators noted. “Hispanic youths had a significantly greater increase in the first interval compared with the second interval, while Black youths had no significant increase in the first interval and a significant increase in the second interval.”

Dr. Lawrence and colleagues offered several possible factors driving these trends in type 2 diabetes.

“Changes in anthropometric risk factors appear to play a significant role,” they wrote, noting that “Black and Mexican American teenagers experienced the greatest increase in prevalence of obesity/severe obesity from 1999 to 2018, which may contribute to race and ethnicity differences. Other contributing factors may include increases in exposure to maternal obesity and diabetes (gestational and type 2 diabetes) and exposure to environmental chemicals.”

According to Megan Kelsey, MD, associate professor of pediatric endocrinology, director of lifestyle medicine endocrinology, and medical director of the bariatric surgery center at Children’s Hospital Colorado, Aurora, the increased rates of type 2 diabetes reported by the study are alarming, yet they pale in comparison with what’s been happening since the pandemic began.

“Individual institutions have reported anywhere between a 50% – which is basically what we’re seeing at our hospital – to a 300% increase in new diagnoses [of type 2 diabetes] in a single-year time period,” Dr. Kelsey said in an interview. “So what is reported [in the present study] doesn’t even get at what’s been going on over the past year and a half.”

Dr. Kelsey offered some speculative drivers of this recent surge in cases, including stress, weight gain caused by sedentary behavior and more access to food, and the possibility that SARS-CoV-2 may infect pancreatic islet beta cells, thereby interfering with insulin production.

Type 2 diabetes is particularly concerning among young people, Dr. Kelsey noted, as it is more challenging to manage than adult-onset disease.

Young patients “also develop complications much sooner than you’d expect,” she added. “So we really need to understand why these rates are increasing, how we can identify kids at risk, and how we can better prevent it, so we aren’t stuck with a disease that’s really difficult to treat.”

To this end, the NIH recently opened applications for investigators to participate in a prospective longitudinal study of youth-onset type 2 diabetes. Young people at risk of diabetes will be followed through puberty, a period of increased risk, according to Dr. Kelsey.

“The goal will be to take kids who don’t yet have [type 2] diabetes, but are at risk, and try to better understand, as some of them progress to developing diabetes, what is going on,” Dr. Kelsey said. “What are other factors that we can use to better predict who’s going to develop diabetes? And can we use the information from this [upcoming] study to understand how to better prevent it? Because nothing that has been tried so far has worked.”

The study was supported by the Centers for Disease Control and Prevention, NIDDK, and others. The investigators and Dr. Kelsey reported no conflicts of interest.

FROM JAMA

Prevalence of high-risk HPV types dwindled since vaccine approval

Young women who received the quadrivalent human papillomavirus (HPV) vaccine had fewer and fewer infections with high-risk HPV strains covered by the vaccine year after year, but the incidence of high-risk strains that were not covered by the vaccine increased over the same 12-year period, researchers report in a study published August 23 in JAMA Network Open.

“One of the unique contributions that this study provides is the evaluation of a real-world example of the HPV infection rates following immunization in a population of adolescent girls and young adult women at a single health center in a large U.S. city, reflecting strong evidence of vaccine effectiveness,” write Nicolas F. Schlecht, PhD, a professor of oncology at Roswell Park Comprehensive Cancer Center, Buffalo, and his colleagues. “Previous surveillance studies from the U.S. have involved older women and populations with relatively low vaccine coverage.”

In addition to supporting the value of continuing to vaccinate teens against HPV, the findings underscore the importance of continuing to screen women for cervical cancer, Dr. Schlecht said in an interview.

“HPV has not and is not going away,” he said. “We need to keep on our toes with screening and other measures to continue to prevent the development of cervix cancer,” including monitoring different high-risk HPV types and keeping a close eye on cervical precancer rates, particularly CIN3 and cervix cancer, he said. “The vaccines are definitely a good thing. Just getting rid of HPV16 is an amazing accomplishment.”

Kevin Ault, MD, a professor of ob/gyn and academic specialist director of clinical and translational research at the University of Kansas, Kansas City, told this news organization that other studies have had similar findings, but this one is larger with longer follow-up.

“The take-home message is that vaccines work, and this is especially true for the HPV vaccine,” said Dr. Ault, who was not involved in the research. “The vaccine prevents HPV infections and the consequences of these infections, such as cervical cancer. The results are consistent with other studies in different settings, so they are likely generalizable.”

The researchers collected data from October 2007, shortly after the vaccine was approved, through September 2019 on sexually active adolescent girls and young women aged 13 to 21 years who had received the HPV vaccine and had agreed to follow-up assessments every 6 months until they turned 26. Each follow-up included the collection of samples of cervical and anal cells for polymerase chain reaction testing for the presence of HPV types.

More than half of the 1,453 participants were Hispanic (58.8%), and about half were Black (50.4%), including 15% who were both Hispanic and Black. The average age of the participants was 18 years. They were tracked for a median of 2.4 years. Nearly half the participants (48%) received the HPV vaccine prior to sexual debut.

For the longitudinal study, the researchers adjusted for participants’ age, the year they received the vaccine, and the years since they were vaccinated. They also tracked breakthrough infections for the four types of HPV covered by the vaccine in participants who received the vaccine before sexual debut.

“We evaluated whether infection rates for HPV have changed since the administration of the vaccine by assessing longitudinally the probability of HPV detection over time among vaccinated participants while adjusting for changes in cohort characteristics over time,” the researchers write. In their statistical analysis, they made adjustments for the number of vaccine doses participants received before their first study visit, age at sexual debut, age at first vaccine dose, number of sexual partners in the preceding 6 months, consistency of condom use during sex, history of a positive chlamydia test, and, for anal HPV analyses, whether the participants had had anal sex in the previous 6 months.

The average age at first intercourse remained steady at 15 years throughout the study, but the average age of vaccination dropped from 18 years in 2008 to 12 years in 2019 (P < .001). More than half the participants (64%) had had at least three lifetime sexual partners at baseline.

After adjustment for age, the researchers found that the incidence of the four HPV types covered by the vaccine – HPV-6, HPV-11, HPV-16, and HPV-18 – declined year over year, from 9.1% in 2008-2010 to 4.7% in 2017-2019. The effect was even greater among those vaccinated prior to sexual debut; for those patients, the incidence of the four vaccine types dropped from 8.8% to 1.7% over the course of the study. Declines over time also occurred for anal types HPV-31 (adjusted odds ratio [aOR] = 0.76) and HPV-45 (aOR = 0.77). Those vaccinated prior to any sexual intercourse had 19% lower odds of infection per year with a vaccine-covered HPV type.

“We were really excited to see that the types targeted by the vaccines were considerably lower over time in our population,” Dr. Schlecht told this news organization. “This is an important observation, since most of these types are the most worrisome for cervical cancer.”

The researchers were surprised, however, to see overall HPV prevalence increase over time, particularly with the high-risk HPV types that were not covered by the quadrivalent vaccine.

Prevalence of cervical high-risk types not in the vaccine increased from 25.1% in 2008-2010 to 30.5% in 2017-2019. Odds of detection of high-risk HPV types not covered by the vaccine increased 8% each year, particularly for HPV-56 and HPV-68; anal HPV types increased 11% each year. Neither age nor recent number of sexual partners affected the findings.

“The underlying mechanisms for the observed increased detection of specific non-vaccine HPV types over time are not yet clear.”

“We hope this doesn’t translate into some increase in cervical neoplasia that is unanticipated,” Dr. Schlecht said. He noted that the attributable risks for cancer associated with nonvaccine high-risk HPV types remain low. “Theoretical concerns are one thing; actual data is what drives the show,” he said.

The research was funded by the National Institutes of Health and the Icahn School of Medicine at Mount Sinai, New York. Dr. Schlecht has served on advisory boards for Merck, GlaxoSmithKline (GSK), and PDS Biotechnology. One author previously served on a GSK advisory board, and another worked with Merck on an early vaccine trial. Dr. Ault has disclosed no relevant financial relationships.

A version of this article first appeared on Medscape.com.
