
Prodrug infusion beats oral Parkinson’s disease therapy for motor symptoms

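Continuous subcutaneous infusion of foslevodopa/foscarbidopa controlled motor symptoms in patients with advanced Parkinson’s disease better than oral levodopa/carbidopa did, according to a new study. The beneficial effects of these phosphate prodrugs of levodopa and carbidopa were most noticeable in the early morning, results of the phase 1B study showed.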

As Parkinson’s disease progresses and dosing of oral levodopa/carbidopa (LD/CD) increases, its therapeutic window narrows, resulting in troublesome dyskinesia at peak drug levels and tremors and rigidity when levels fall.

“Foslevodopa/foscarbidopa shows lower ‘off’ time than oral levodopa/carbidopa, and this was statistically significant. Also, foslevodopa/foscarbidopa (fosL/fosC) showed more ‘on’ time without dyskinesia, compared with oral levodopa/carbidopa. This was also statistically significant,” lead author Sven Stodtmann, PhD, of AbbVie GmbH, Ludwigshafen, Germany, reported in his recorded presentation at the Movement Disorders Society’s 23rd International Congress of Parkinson’s Disease and Movement Disorders (Virtual) 2020.

Continuous infusion versus oral therapy

The analysis included 20 patients, and all data from these individuals were collected between 4:30 a.m. and 9:30 p.m.

Participants were 12 men and 8 women with advanced, idiopathic, levodopa-responsive Parkinson’s disease that was inadequately controlled on their current stable therapy, with at least 2.5 hours of off time per day. The study was open to patients aged 30-80 years; mean age was 61.3 ± 10.5 years (range, 35-77 years).

In this single-arm, open-label study, they received subcutaneous infusions of personalized therapeutic doses of fosL/fosC 24 hours/day for 28 days after a 10- to 30-day screening period during which they recorded LD/CD doses in a diary and had motor symptoms monitored using a wearable device.

Following the screening period, fosL/fosC doses were titrated over up to 5 days, followed by weekly study visits, for a total of 28 days on fosL/fosC. Titration aimed to maximize functional on time and minimize the number of off episodes while avoiding troublesome dyskinesia.

Continuous infusion of fosL/fosC outperformed oral LD/CD on every motor outcome reported, although not every difference reached statistical significance.

“The off time is much lower in the morning for people on foslevodopa/foscarbidopa [compared with oral LD/CD] because this is a 24-hour infusion product,” Dr. Stodtmann explained.

With fosL/fosC, the effect held throughout the day with little fluctuation: the proportion of time spent off never exceeded about 25% between 4:30 a.m. and 9 p.m. With oral LD/CD, off time was highest in the early morning and peaked at about 50% in a 3- to 4-hour cycle over the course of the day.

With fosL/fosC, on time without dyskinesia ranged from about 60% to 80% during the day, and the difference from oral LD/CD was greatest in the early morning hours.

“On time with nontroublesome dyskinesia was lower for foscarbidopa/foslevodopa, compared to oral levodopa/carbidopa, but this was not statistically significant,” Dr. Stodtmann said. On time with troublesome dyskinesia followed the same pattern; again, the difference was not statistically significant.

Looking at the data another way, the investigators calculated odds ratios for motor states with fosL/fosC, compared with oral LD/CD. Use of fosL/fosC was associated with 59% lower odds of being in the off state during the day (odds ratio, 0.4; 95% confidence interval, 0.2-0.7; P < .01). Similarly, the odds of being in the on state without dyskinesia were substantially higher with fosL/fosC (OR, 2.75; 95% CI, 1.08-6.99; P < .05).
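The percentage quoted for the off state follows from the odds ratio by simple arithmetic, shown below using only the published values. The value 0.41 is an assumption: it is the unrounded odds ratio that a 59% reduction would imply, not a figure reported in the presentation.

$$
\begin{aligned}
\text{relative change in odds} &= 1 - \mathrm{OR} \\
1 - 0.41 &= 0.59 \quad (\text{59\% lower odds of being off}) \\
1 - 0.40 &= 0.60 \quad (\text{60\% if the rounded OR of 0.4 is used}) \\
\mathrm{OR} = 2.75 &\;\Rightarrow\; \text{odds of on time without dyskinesia} \approx 2.75 \times \text{those with oral LD/CD}
\end{aligned}
$$

Because an odds ratio compares odds rather than probabilities, it lies further from 1 than the corresponding risk ratio when the outcome is common, as off time (up to about 50% with oral LD/CD) was here.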

Encouraging, but more data needed

Indu Subramanian, MD, of the department of neurology at the University of California, Los Angeles, and director of the Parkinson’s Disease Research, Education, and Clinical Center at the West Los Angeles Veterans Affairs Hospital, commented that the field has been waiting to see data on fosL/fosC.

“It seems like it’s pretty reasonable in terms of what the goals were, which is to improve stability of Parkinson’s symptoms, to improve off time and give on time without troublesome dyskinesia,” she said. “So I think those [goals] have been met.”

Dr. Subramanian, who was not involved with the research, said she would have liked to have seen results concerning safety of this drug formulation, which the presentation lacked, “because historically, there have been issues with nodule formation and skin breakdown, things like that, due to the stability of the product in the subcutaneous form. … So, always to my understanding, there has been this search for things that are tolerated in the subcutaneous delivery.”

If this formulation proves safe and tolerable, Dr. Subramanian sees a potential role for it in some patients with advanced Parkinson’s disease.

“Certainly a subcutaneous formulation will be better than something that requires … deep brain surgery or even a pump insertion like Duopa [carbidopa/levodopa enteral suspension, AbbVie] or something like that,” she said. “I think [it] would be beneficial over something with the gut because the gut historically has been a problem to rely on in advanced Parkinson’s patients due to slower transit times, and the gut itself is affected with Parkinson’s disease.”

Dr. Stodtmann and all coauthors are employees of AbbVie, which was the sponsor of the study and was responsible for all aspects of it. Dr. Subramanian has given talks for Acadia Pharmaceuticals and Acorda Therapeutics in the past.

A version of this article originally appeared on Medscape.com.

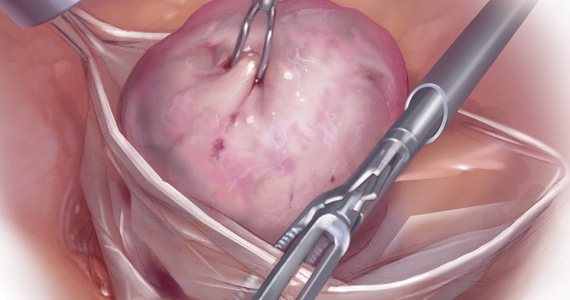

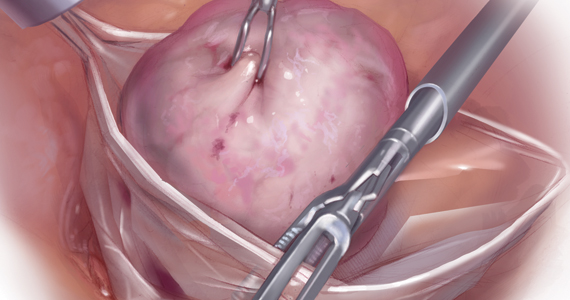

Laparoscopic specimen retrieval bags in gyn surgery: Expert guidance on selection

The use of minimally invasive gynecologic surgery (MIGS) has grown rapidly over the past 20 years. MIGS, which includes vaginal hysterectomy and laparoscopic hysterectomy, is safe and has fewer complications and a more rapid recovery period than open abdominal surgery.1,2 In 2005, the role of MIGS expanded further when the US Food and Drug Administration (FDA) approved robot-assisted surgery for gynecologic procedures.3 As knowledge of and experience with the safe performance of MIGS have grown, MIGS procedure rates have risen sharply and continue to climb. Between 2007 and 2010, laparoscopic hysterectomy rates rose from 23.5% to 30.5%, while robot-assisted laparoscopic hysterectomy rates increased from 0.5% to 9.5%; together, these 2 routes accounted for 40% of all hysterectomies.4 Because of the benefits of minimally invasive surgery over open abdominal surgery, patients and physicians increasingly prefer minimally invasive procedures.1,5

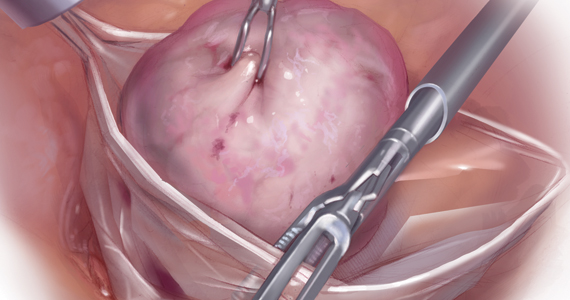

Because incisions are small in minimally invasive surgery, surgeons have been challenged with removing large specimens through incisions that are much smaller than the presenting pathology. One approach is to use a specimen retrieval bag for specimen extraction. Once the dissection is completed, the specimen is placed within the retrieval bag for removal, thus minimizing exposure of the specimen and its contents to the abdominopelvic cavity and incision.

The use of specimen retrieval devices has been advocated to prevent infection, avoid spillage into the peritoneal cavity, and minimize the risk of port-site metastases in cases of potentially cancerous specimens. Devices include affordable and readily available products, such as nonpowdered gloves, and commercially produced bags.6

While the use of specimen containment systems for tissue extraction has been well described in gynecology, the available systems vary widely in construction, size, durability, and shape, potentially leading to confusion and suboptimal bag selection during surgery.7 In this article, we review the most common laparoscopic bags available in the United States, provide an overview of bag characteristics, offer practice guidelines for bag selection, and review bag terminology to highlight important concepts for bag selection.

Controversy spurs change

In April 2014, the FDA warned against the use of power morcellation for specimen removal during minimally invasive surgery, citing a prevalence of 1 in 352 unsuspected uterine sarcomas and 1 in 498 unsuspected uterine leiomyosarcomas among women undergoing hysterectomy or myomectomy for presumed benign leiomyoma.8 Since then, the risk of occult uterine sarcoma, including leiomyosarcoma, in women undergoing surgery for benign gynecologic indications has been found to be much lower than the FDA estimated.

Nonetheless, the warning highlighted the clinical importance of contained specimen removal, and specimen retrieval bags moved to the forefront. Open power morcellation is no longer commonly practiced, and national societies such as the American Association of Gynecologic Laparoscopists (AAGL), the Society of Gynecologic Oncology (SGO), and the American College of Obstetricians and Gynecologists (ACOG) recommend that containment systems be used for safer specimen retrieval during gynecologic surgery.9-11 After the specimen is placed inside the containment system (typically a specimen bag), the surgeon may deliver the bag through a vaginal colpotomy or through a slightly extended laparoscopic incision and remove bulky specimens using cold-cutting extraction techniques.12-15

Know the pathology’s characteristics

In most cases, based on imaging studies and physical examination, surgeons have a good idea of what to expect before proceeding with surgery. The 2 most common characteristics used for surgical planning are the specimen size (dimensions) and the tissue type (solid, cystic, soft tissue, or mixed). The mass can range from less than 1 cm to larger than a 20-week-size fibroid uterus. Assessing the specimen in 3 dimensions is important. Tissue type also is a consideration, as soft and squishy masses, such as ovarian cysts, are easier to deflate and manipulate within the bag than solid or calcified tumors, such as a large fibroid uterus or a large dermoid with solid components.

Specimen shape also is a critical determinant for bag selection. Most specimen retrieval bags are tapered to varying degrees, and some have an irregular shape. Long tubular structures composed of soft tissue, such as fallopian tubes, fit easily into most bags regardless of bag shape or extent of taper, whereas the round shape of a bulky myoma may render certain bags ineffective even if the bag’s entrance accommodates the myoma’s greatest diameter. Often, a round mass will not fully fit into a bag because of a poor fit between the mass’s shape and the bag’s shape and taper. (We discuss the concept of a poor “fit” below.) Knowing the pathology before starting a procedure can help optimize bag selection, streamline operative flow, and reduce waste.

Overview of laparoscopic bag characteristics and clinical applications

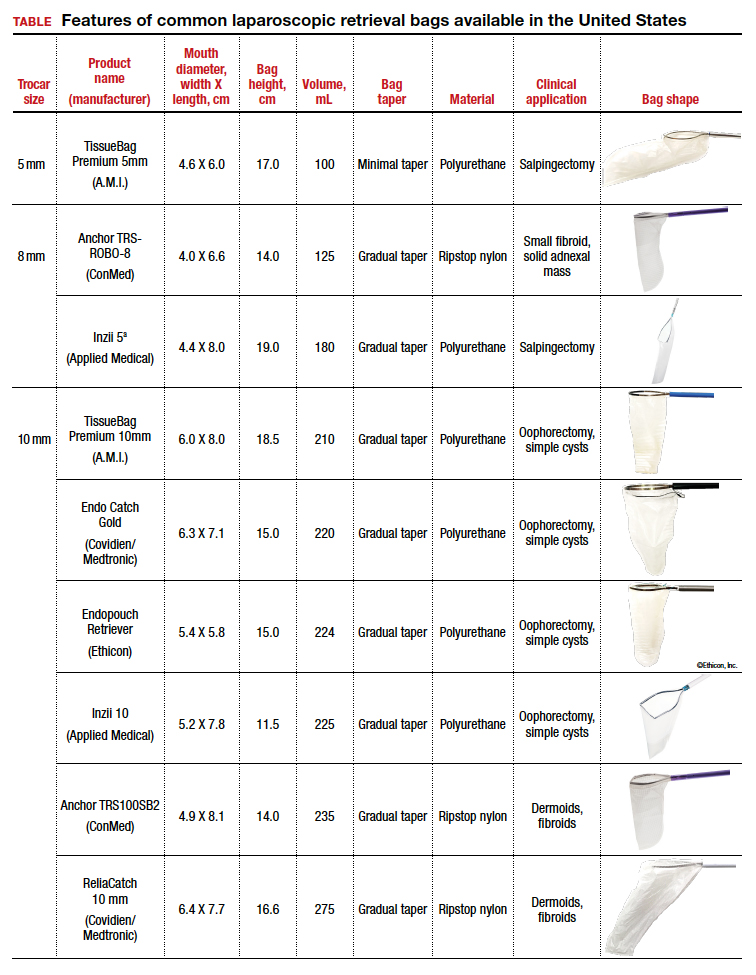

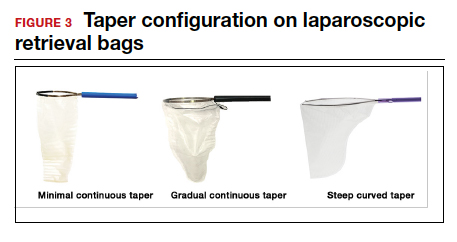

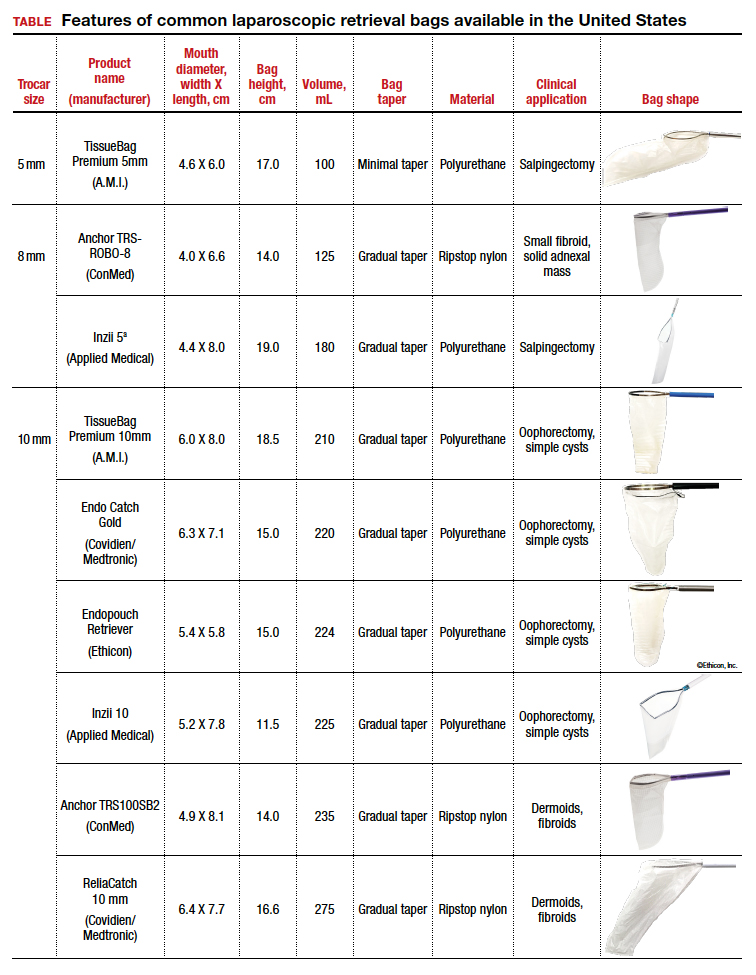

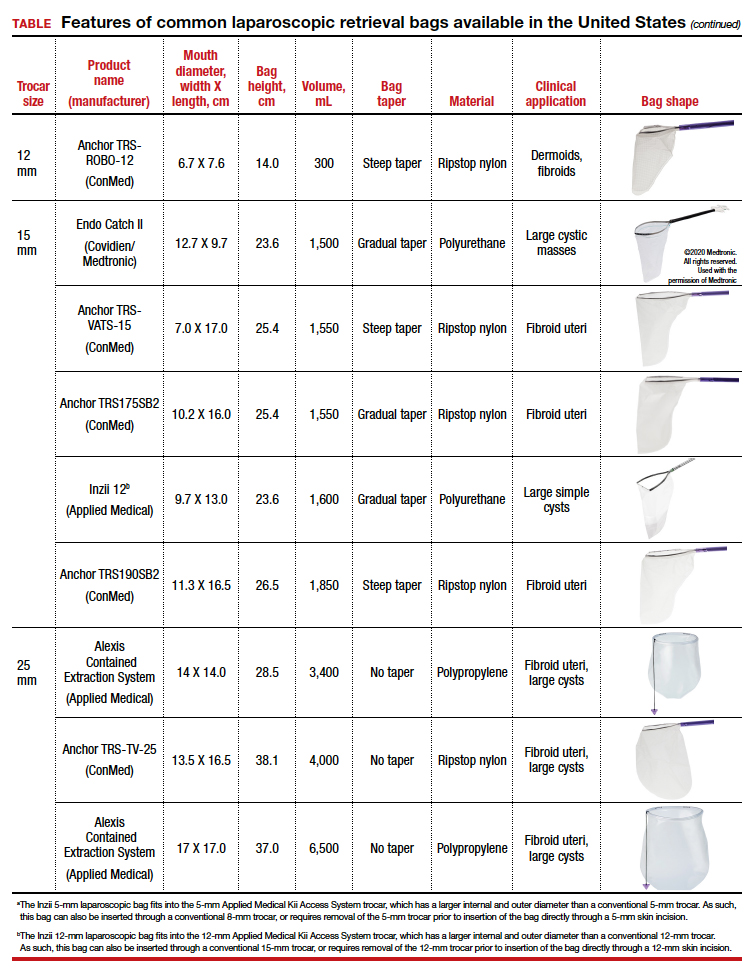

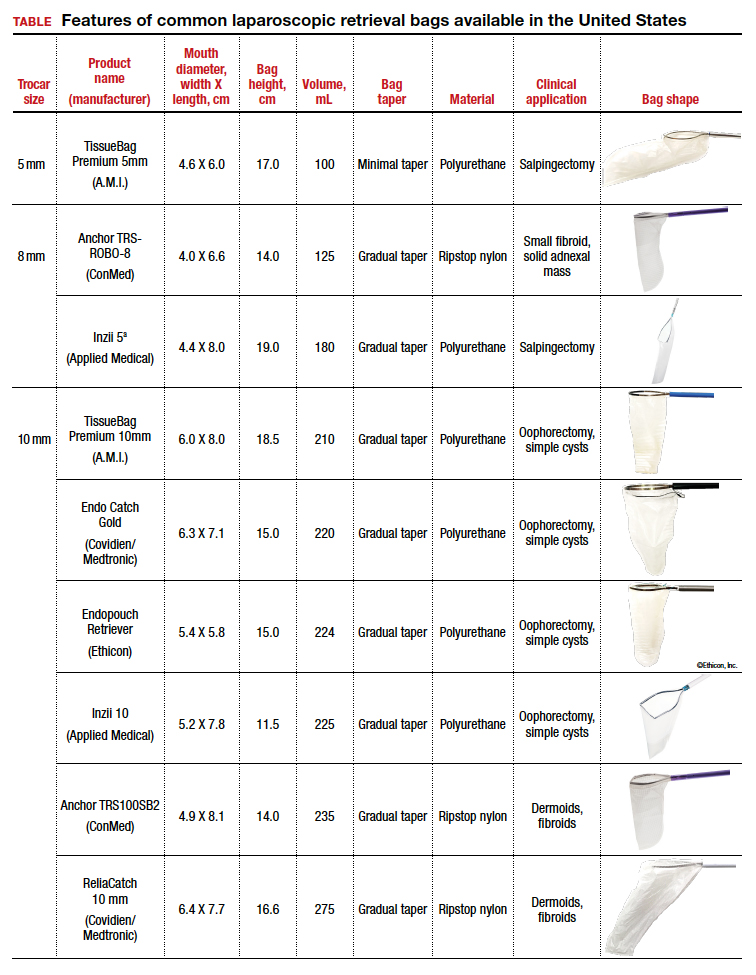

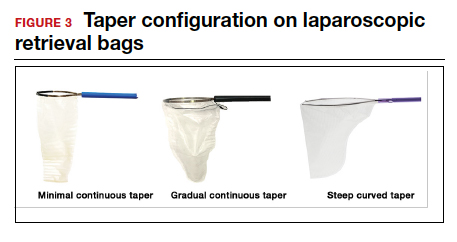

The TABLE lists the most common laparoscopic bags available for purchase in the United States. Details include the trocar size, manufacturer, product name, mouth diameter, volume, bag shape, construction material, and best clinical application.

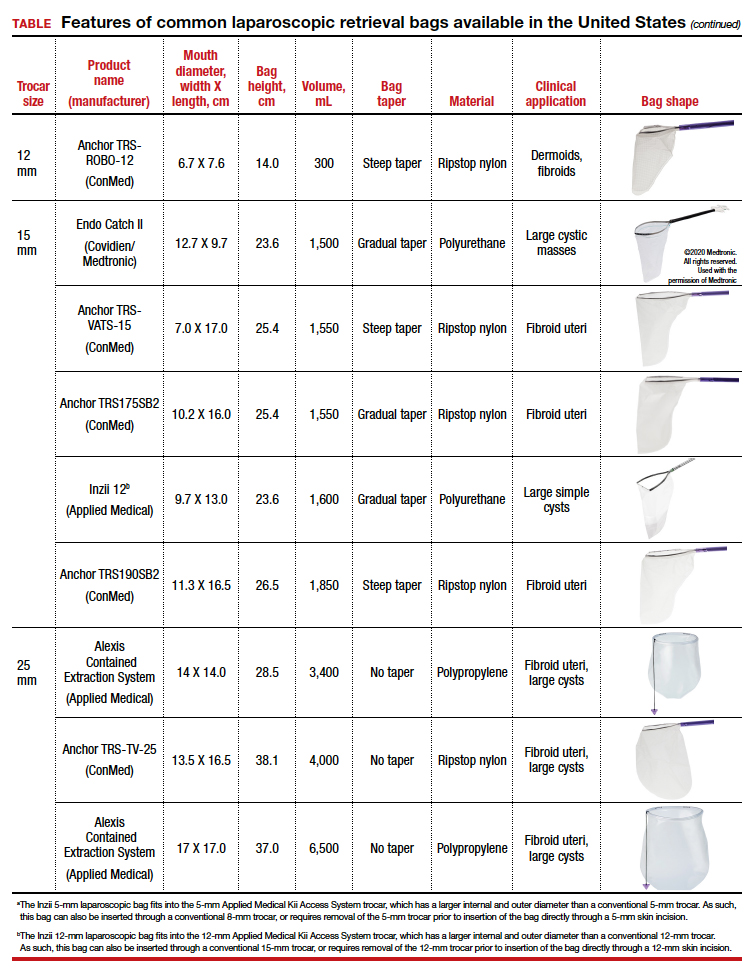

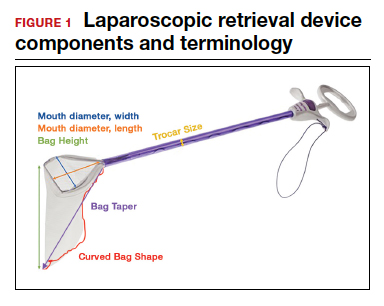

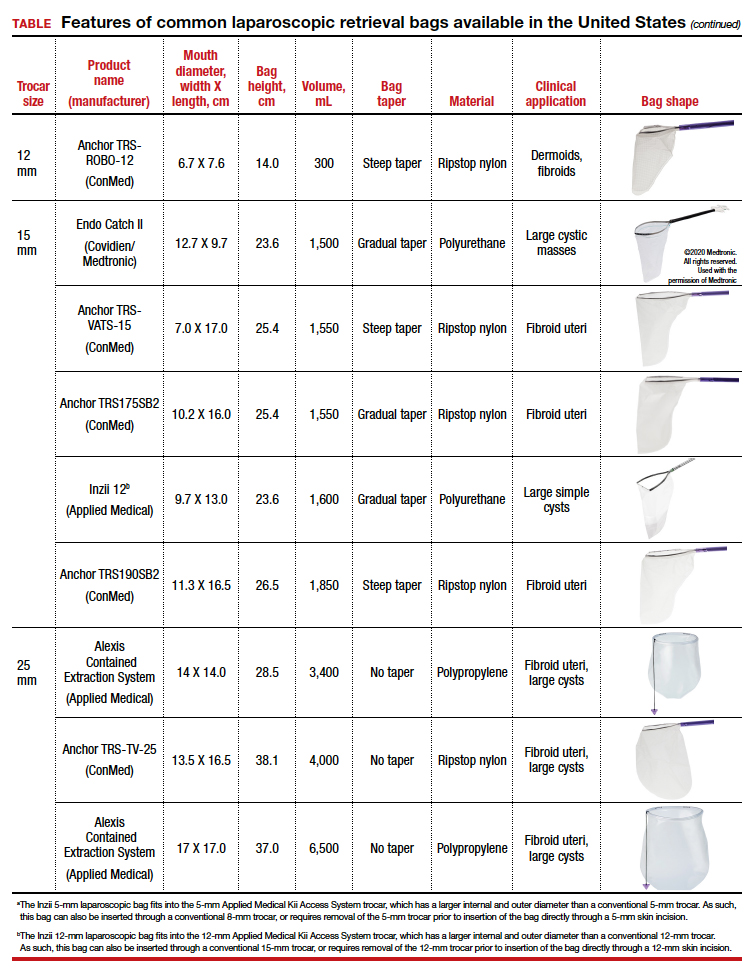

The following are terms used to refer to the components of a laparoscopic retrieval bag:

- Mouth diameter: diameter at the entrance of a fully opened bag (FIGURE 1)

- Bag volume: the total volume a bag can accommodate when completely full

- Bag rim: characteristics of the rim of the bag when opened (that is, rigid vs soft rim, complete vs partial rim mechanism to hold the bag open) (FIGURE 2)

- Bag shape: the shape of the bag when it is fully opened (square shaped vs cone shaped vs curved bag shape) (FIGURE 2)

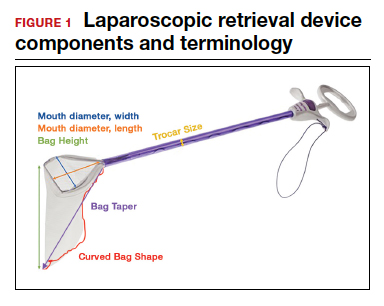

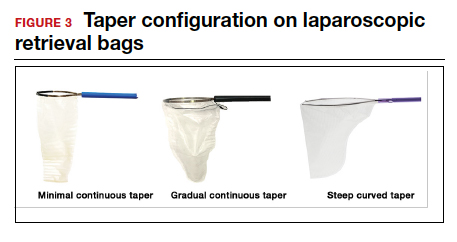

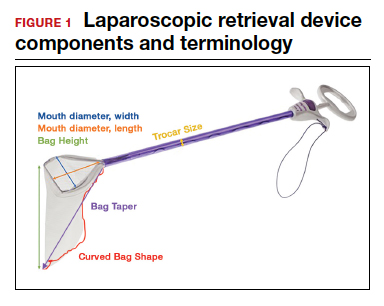

- Bag taper (severity and type): extent the bag is tapered from the rim of the bag’s entrance to the base of the bag; categorized by taper severity (minimal, gradual, or steep taper) and type (continuous taper or curved taper) (FIGURE 3)

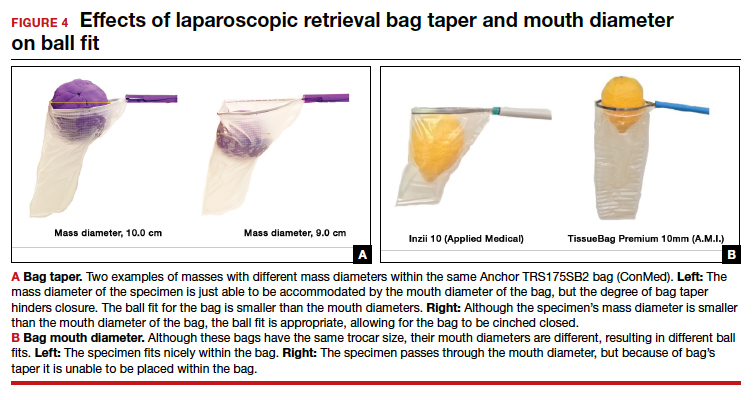

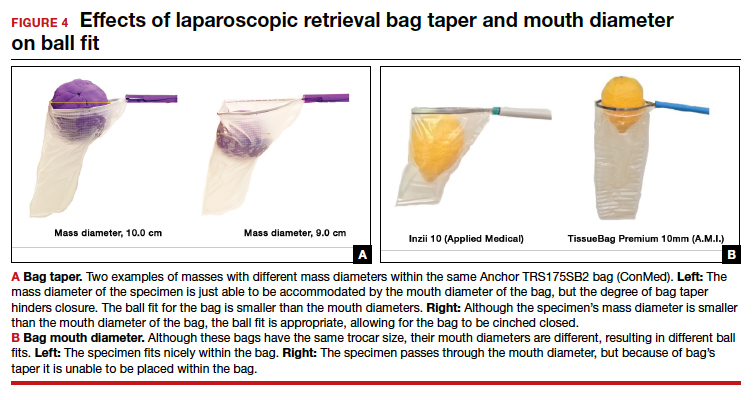

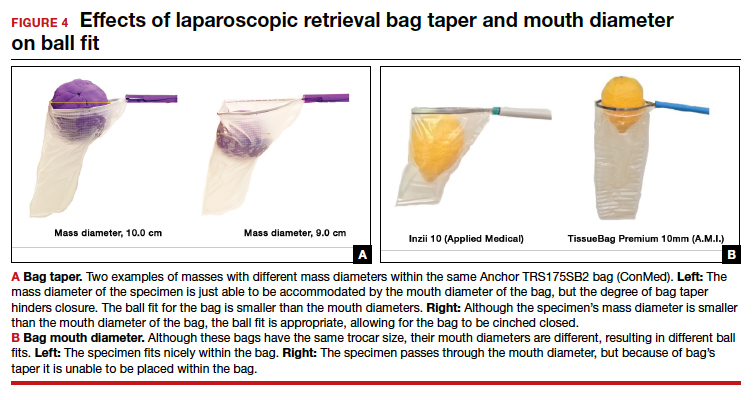

- Ball fit: the maximum spherical specimen size that completely fits into a bag and allows it to cinch closed (FIGURE 4)

- Bag strength: durability of a bag when placed on tension during specimen extraction (weak, moderate, or extremely durable).

Mouth diameter

Bag manufacturers often differentiate bag sizes by volume in milliliters. Bag volume, however, offers little clinical value to surgeons, as pelvic mass dimensions are usually measured in centimeters on imaging. A more useful characteristic for bag selection is the diameter of the rim of the fully opened bag, the so-called bag mouth diameter. For a specimen to fit, its 2 cross-sectional dimensions as it enters the bag must be smaller than the corresponding dimensions of the bag entrance.

Notably, the number often attached to a specimen bag, as in the 10-mm Endo Catch bag (Covidien/Medtronic), describes the width of the bag’s shaft before it is opened rather than the mouth diameter of the opened bag. The number corresponds to the trocar size needed for bag insertion, not to the specimen size the bag can hold. Therefore, a 10-mm Endo Catch bag cannot fit a 10-cm mass; rather, it requires a trocar of 10 mm or greater for insertion. Fully opened, the mouth diameters of the 10-mm Endo Catch bag are roughly 6 cm × 7 cm, which allows for delivery of a 6-cm mass.

Because 2 bags that use the same trocar size for insertion may have vastly different dimensions, the surgeon must know the bag mouth diameters when selecting a bag to remove the presenting pathology. For example, the Inzii 12 (Applied Medical) laparoscopic bag has mouth diameters of 9.7 cm × 13.0 cm, whereas the Anchor TRSROBO-12 (ConMed) has mouth diameters of 6.7 cm × 7.6 cm (TABLE). Although both bags can be inserted through a 12-mm trocar, they cannot accommodate the same size mass.
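To make the distinction between trocar size and mouth diameter concrete, the sketch below checks whether a specimen clears a bag’s mouth, using the 3 bags and mouth diameters cited above. It is a simplified, hypothetical model (`Bag` and `passes_mouth` are illustrative names, not part of any product or library): the mouth is treated as a rigid rectangle of its 2 diameters, the specimen as rigid and entering along its longest axis, and taper and ball fit are ignored, so passing this check is necessary but not sufficient.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bag:
    """Hypothetical record for an opened retrieval bag (dimensions from the text)."""
    name: str
    trocar_mm: int                  # minimum trocar size for insertion, NOT specimen size
    mouth_cm: tuple[float, float]   # the 2 mouth diameters of the fully opened bag


def passes_mouth(specimen_cm: tuple[float, float, float], bag: Bag) -> bool:
    """Return True if the specimen's cross-section fits through the bag mouth.

    Assumes a rigid specimen entering along its longest axis, so only its 2
    smallest dimensions must clear the mouth. A specimen exactly at the limit
    (like the 6-cm mass in the Endo Catch example) is counted as fitting.
    Bag taper and ball fit are deliberately ignored.
    """
    smallest, middle, _longest = sorted(specimen_cm)
    mouth_small, mouth_large = sorted(bag.mouth_cm)
    return smallest <= mouth_small and middle <= mouth_large


BAGS = [
    Bag("Endo Catch 10 mm", 10, (6.0, 7.0)),
    Bag("Inzii 12", 12, (9.7, 13.0)),
    Bag("Anchor TRSROBO-12", 12, (6.7, 7.6)),
]

if __name__ == "__main__":
    myoma_cm = (8.0, 8.0, 8.0)  # hypothetical 8-cm round myoma
    for bag in BAGS:
        verdict = "passes" if passes_mouth(myoma_cm, bag) else "does not pass"
        print(f"{bag.name} ({bag.trocar_mm}-mm trocar): {verdict}")
```

With the hypothetical 8-cm myoma, only the Inzii 12 passes, even though the Anchor TRSROBO-12 is inserted through the same 12-mm trocar; likewise, a 10-cm mass fails the check for the 10-mm Endo Catch bag.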

Shape and taper

Laparoscopic bags come in various shapes (curved, cone, or square shaped), with varying levels of bag taper (steep, gradual, or no taper) (FIGURES 2 and 3). While taper has little impact on long and skinny specimens, taper may hinder successful bagging of bulky or spherical specimens.

The degree of taper varies from bag to bag, independent of mouth diameter or trocar size. For round masses, the steeper the taper, the smaller the mass that can comfortably fit within the bag. This concept is connected to the idea of “ball fit,” explained below.

In addition, bag shape may affect what mass size can fit into the bag. An irregularly shaped curved bag or a bag with a steep taper may be well suited for removal of multiple specimens of varying sizes or soft masses that are malleable enough to conform to the bag’s shape (such as a ruptured ovarian cyst). Alternatively, a square-shaped bag or a bag with minimal taper would better accommodate a round mass.

Ball fit

When thinking about large circular masses, such as myomas or ovarian cysts, one must consider the ball fit. This refers to the maximum spherical size of the specimen that fits completely within a bag while allowing the bag to cinch closed. Generally, this is an estimation that factors in the bag shape, extent of the bag taper, bag mouth diameter, and specimen shape and tissue type. At times, although a mass can fit through the bag’s mouth diameter, a steep taper may prevent the mass from being fully bagged and limit closure of the bag (FIGURE 4).

Curved bags like the Anchor TRSVATS-15 (ConMed), which have a very narrow bottom, are prone to a limited ball fit, and thus the bag mouth diameter will not correlate with the largest mass size that can be fitted within the bag. Therefore, if using a steeply tapered bag for removal of large round masses, do not rely on the bag’s mouth diameter for bag selection. The surgeon must visualize the ball fit within the bag, taking into account the specimen size and shape, bag shape, and bag taper. In these scenarios, using the diameter of the midportion of the opened bag may better reflect the mass size that can fit into that bag.

Bag strength

Bag strength depends on the material used for bag construction. Most laparoscopic bags in the United States are made of 1 of 3 materials: polyurethane, polypropylene, or ripstop nylon.

Polyurethane and polypropylene are synthetic plastic polymers; in bag form they are stretchy and may tear under extreme force. They are best used for bagging fluid-filled cysts or soft, pliable masses that will not require the extensive bag or tissue handling needed, for example, to extract a large leiomyoma. Polyurethane and polypropylene bags are more susceptible to puncture by sharp laparoscopic instruments or scalpels, and care must be taken to avoid accidentally cutting the bag during tissue extraction.

Alternatively, bags made of ripstop nylon are favored for their strength. Ripstop nylon is a synthetic fabric woven in a crosshatch pattern that makes it resistant to tearing and ripping. It was originally developed during World War II as a replacement for silk in parachutes. Modern applications include sails, kites, and high-quality camping equipment. This material has a favorable strength-to-weight ratio, and if it does tear, the tear is less likely to extend. For surgical applications, these bags are best used for specimens that will require a lot of bag manipulation and tissue extraction. However, ripstop fabric takes up more space in the incision than polyurethane or polypropylene does, leaving the surgeon less room for tissue extraction. Thus, in exchange for greater bag strength, the surgeon may need to extend the incision slightly, and a small self-retracting wound retractor may be needed to maintain visibility for safe tissue extraction.

Trocar selection is important

While considering bag selection, the surgeon also must consider trocar selection to allow for laparoscopic insertion of the bag. Trocar size for bag selection refers to the minimum trocar diameter needed to insert the laparoscopic bag. Most bags are designed to fit into a laparoscopic trocar or into the skin incision that previously housed the trocar. Trocar size does not directly correlate with bag mouth diameter; for example, a 10-mm laparoscopic bag that can be inserted through a 10- or 12-mm trocar size cannot fit a 10-cm mass (see the mouth diameter section above).

A tip to maximize operating room (OR) efficiency: if you know that a bag requiring a 12-mm trocar will be used, start with a 12-mm trocar rather than starting with a 5-mm trocar and upsizing the port site incision later. This saves time and offers intraoperative flexibility, allowing for the use of larger instruments and quicker insufflation.

Furthermore, if the specimen has a solid component and tissue extraction is anticipated, consider starting off with a large trocar, one that is larger than the bag’s trocar size since the incision likely will be extended. For example, even if a myoma will fit within a 10-mm laparoscopic bag made of ripstop nylon, using a 15-mm trocar rather than a 10-mm trocar may be considered since the skin and fascial incisions will need to be extended to allow for cold-cut tissue extraction. Starting with the larger 15-mm trocar may offer surgical advantages, such as direct needle delivery of larger needles for myometrial closure after myomectomy or direct removal of smaller myomas through the trocar to avoid bagging multiple specimens.

Putting it all together

To optimize efficiency in the OR for specimen removal, we recommend streamlining OR flow and reducing waste by first considering the specimen size, tissue type, bag shape, and trocar selection. Choose a bag by taking into account the bag mouth diameter and the amount of taper you will need to obtain an appropriate ball fit. If the tissue type is soft and pliable, consider a polyurethane or polypropylene bag and the smallest bag size possible, even if it has a narrow bag shape and taper.

However, if the tissue type is solid, the shape is round, and the mass is large (requiring extensive tissue extraction for removal), consider a bag made of ripstop nylon and factor in the bag shape as well as the bag taper. Using a bag without a steep taper may allow a better fit.

After choosing a laparoscopic bag, select the appropriate trocars necessary for completion of the surgery. Consider starting off with a larger trocar rather than spending the time to upsize a trocar if you plan to use a large bag or intend to extend the trocar incision for a contained tissue extraction. These tips will help optimize efficiency, reduce equipment wastage, and prevent intra-abdominal spillage.
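The recommendations in this section can be read as a simple checklist. The sketch below is a hypothetical encoding of that qualitative advice, not a validated decision tool: `Tissue`, `Specimen`, `BagPlan`, and `plan_bag` are illustrative names, the rules mirror only what the text states, and mouth diameter and ball fit are assumed to be checked separately.

```python
from dataclasses import dataclass
from enum import Enum


class Tissue(Enum):
    SOFT = "soft/pliable"  # e.g., an ovarian cyst that can be deflated
    SOLID = "solid"        # e.g., a large myoma or a dermoid with solid parts


@dataclass(frozen=True)
class Specimen:
    tissue: Tissue
    is_round: bool
    needs_extraction: bool  # contained cold-cut tissue extraction anticipated


@dataclass(frozen=True)
class BagPlan:
    material: str
    shape_and_taper: str
    trocar: str


def plan_bag(spec: Specimen, bag_trocar_mm: int) -> BagPlan:
    """Hypothetical checklist encoding the qualitative advice in the text.

    Mouth diameter and ball fit are NOT evaluated here and must be checked
    separately; this is an illustration, not a clinical decision tool.
    """
    if spec.needs_extraction:
        # Heavy bag handling during extraction: favor tear resistance.
        material = "ripstop nylon"
        shape = ("avoid a steep taper; prefer minimal taper or square shape"
                 if spec.is_round else "factor in bag shape and taper")
    elif spec.tissue is Tissue.SOFT:
        # Pliable tissue conforms to the bag, so the smallest bag is acceptable.
        material = "polyurethane or polypropylene"
        shape = "smallest bag that fits, even if narrow or tapered"
    else:
        # The text gives no specific material rule for this case.
        material = "not specified; decide by mouth diameter and ball fit"
        shape = "prefer minimal taper" if spec.is_round else "factor in bag shape and taper"

    if spec.needs_extraction:
        # The incision will be extended anyway, so start above the bag's size.
        trocar = f"start with a trocar larger than {bag_trocar_mm} mm"
    else:
        # Avoid spending OR time upsizing a smaller port later.
        trocar = f"start with a {bag_trocar_mm}-mm trocar rather than upsizing"
    return BagPlan(material, shape, trocar)


if __name__ == "__main__":
    myoma = Specimen(Tissue.SOLID, is_round=True, needs_extraction=True)
    print(plan_bag(myoma, bag_trocar_mm=10))
```

For a round, solid myoma that will be cut within a bag inserted through a 10-mm trocar, the checklist points to ripstop nylon, a bag without a steep taper, and a larger starting trocar, in line with the 15-mm example given in the trocar discussion.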

Keep in mind that all procedures, including specimen removal using containment systems, carry inherent risks. For example, bulkiness of the bag or specimen may hinder visualization of the mass within the bag and of vital structures. There is also a risk of bag compromise and leakage, whether from manipulation of the bag or from puncture during specimen extraction. Lastly, although removing a specimen within a containment system minimizes spillage, and reports of in-bag cold-knife tissue extraction in women with histologically proven endometrial cancer have suggested that it is safe, laparoscopic bags have not been proven to prevent the dissemination of malignant tissue fragments.16,17

Overall, the inherent risks of specimen extraction during minimally invasive surgery are far outweighed by the well-established advantages of laparoscopic surgery over laparotomy: lower risks of surgical complications such as bleeding and infection, shorter hospital stays, and quicker recovery.

In summary, for the best bag selection, knowing the characteristics of the pathology is as important as knowing the features of the specimen retrieval systems available at your institution. Understanding both the pathology and the available equipment allows the surgeon to make the best surgical decisions for the case. ●

1. Desai VB, Wright JD, Lin H, et al. Laparoscopic hysterectomy route, resource use, and outcomes: change after power morcellation warning. Obstet Gynecol. 2019;134:227-238.

2. American College of Obstetricians and Gynecologists. ACOG committee opinion No. 444: choosing the route of hysterectomy for benign disease. Obstet Gynecol. 2009;114:1156-1158.

3. Liu H, Lu D, Wang L, et al. Robotic surgery for benign gynecological disease. Cochrane Database Syst Rev. 2012;2:CD008978.

4. Wright JD, Herzog TJ, Tsui J, et al. Nationwide trends in the performance of inpatient hysterectomy in the United States. Obstet Gynecol. 2013;122(2 pt 1):233-241.

5. Turner LC, Shepherd JP, Wang L, et al. Hysterectomy surgery trends: a more accurate depiction of the last decade? Am J Obstet Gynecol. 2013;208:277.e1-7.

6. Holme JB, Mortensen FV. A powder-free surgical glove bag for retraction of the gallbladder during laparoscopic cholecystectomy. Surg Laparosc Endosc Percutan Tech. 2005;15:209-211.

7. Siedhoff MT, Cohen SL. Tissue extraction techniques for leiomyomas and uteri during minimally invasive surgery. Obstet Gynecol. 2017;130:1251-1260.

8. US Food and Drug Administration. Laparoscopic uterine power morcellation in hysterectomy and myomectomy: FDA safety communication. April 17, 2014. https://wayback.archive-it.org/7993/20170722215731/https:/www.fda.gov/MedicalDevices/Safety/AlertsandNotices/ucm393576.htm. Accessed September 22, 2020.

9. AAGL. AAGL practice report: morcellation during uterine tissue extraction. J Minim Invasive Gynecol. 2014;21:517-530.

10. American College of Obstetricians and Gynecologists. ACOG committee opinion No. 770: uterine morcellation for presumed leiomyomas. Obstet Gynecol. 2019;133:e238-e248.

11. Society of Gynecologic Oncology website. SGO position statement: morcellation. December 1, 2013. https://www.sgo.org/newsroom/position-statements-2/morcellation/. Accessed September 22, 2020.

12. Advincula AP, Truong MD. ExCITE: minimally invasive tissue extraction made simple with simulation. OBG Manag. 2015;27(12):40-45.

13. Solima E, Scagnelli G, Austoni V, et al. Vaginal uterine morcellation within a specimen containment system: a study of bag integrity. J Minim Invasive Gynecol. 2015;22:1244-1246.

14. Ghezzi F, Casarin J, De Francesco G, et al. Transvaginal contained tissue extraction after laparoscopic myomectomy: a cohort study. BJOG. 2018;125:367-373.

15. Dotson S, Landa A, Ehrisman J, et al. Safety and feasibility of contained uterine morcellation in women undergoing laparoscopic hysterectomy. Gynecol Oncol Res Pract. 2018;5:8.

16. Favero G, Miglino G, Köhler C, et al. Vaginal morcellation inside protective pouch: a safe strategy for uterine extration in cases of bulky endometrial cancers: operative and oncological safety of the method. J Minim Invasive Gynecol. 2015;22:938-943.

17. Montella F, Riboni F, Cosma S, et al. A safe method of vaginal longitudinal morcellation of bulky uterus with endometrial cancer in a bag at laparoscopy. Surg Endosc. 2014;28:1949-1953.

The use of minimally invasive gynecologic surgery (MIGS) has grown rapidly over the past 20 years. MIGS, which includes vaginal hysterectomy and laparoscopic hysterectomy, is safe and has fewer complications and a more rapid recovery period than open abdominal surgery.1,2 In 2005, the role of MIGS expanded further when the US Food and Drug Administration (FDA) approved robot-assisted surgery for gynecologic procedures.3 As knowledge of and experience with the safe performance of MIGS have grown, MIGS procedure rates have skyrocketed and continue to climb. Between 2007 and 2010, laparoscopic hysterectomy rates rose from 23.5% to 30.5%, while robot-assisted laparoscopic hysterectomy rates increased from 0.5% to 9.5%; together, these approaches represented 40% of all hysterectomies.4 Because of the benefits of minimally invasive surgery over open abdominal surgery, patient and physician preference for minimally invasive procedures has grown significantly.1,5

Because incisions in minimally invasive surgery are small, surgeons face the challenge of removing large specimens through incisions much smaller than the presenting pathology. One approach is to use a specimen retrieval bag. Once the dissection is completed, the specimen is placed within the retrieval bag for removal, thus minimizing exposure of the specimen and its contents to the abdominopelvic cavity and incision.

The use of specimen retrieval devices has been advocated to prevent infection, avoid spillage into the peritoneal cavity, and minimize the risk of port-site metastases when specimens are potentially cancerous. Devices range from affordable, readily available products, such as powder-free gloves, to commercially produced bags.6

While the use of specimen containment systems for tissue extraction has been well described in gynecology, the available systems vary widely in construction, size, durability, and shape, potentially leading to confusion and suboptimal bag selection during surgery.7 In this article, we review the most common laparoscopic bags available in the United States, provide an overview of bag characteristics, offer practice guidelines for bag selection, and review bag terminology to highlight important concepts for bag selection.

Controversy spurs change

In April 2014, the FDA warned against the use of power morcellation for specimen removal during minimally invasive surgery, citing a prevalence of 1 in 352 unsuspected uterine sarcomas and 1 in 498 unsuspected uterine leiomyosarcomas among women undergoing hysterectomy or myomectomy for presumed benign leiomyoma.8 Since then, the risk of occult uterine sarcomas, including leiomyosarcoma, in women undergoing surgery for benign gynecologic indications has been determined to be much lower.
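For scale, the prevalences quoted in the FDA warning convert to percentages as follows (plain arithmetic on the figures above; no newer estimate is implied):

```latex
\frac{1}{352} \approx 0.0028 = 0.28\%, \qquad \frac{1}{498} \approx 0.0020 = 0.20\%
```

That is, roughly 2.8 unsuspected uterine sarcomas and 2.0 unsuspected leiomyosarcomas per 1,000 women in the FDA's analysis; the second figure is smaller because leiomyosarcomas are a subset of uterine sarcomas.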

Nonetheless, the warning clearly highlighted the clinical importance of contained specimen removal and brought specimen retrieval bags to the forefront. Open power morcellation is no longer commonly practiced, and national societies such as the American Association of Gynecologic Laparoscopists (AAGL), the Society of Gynecologic Oncology (SGO), and the American College of Obstetricians and Gynecologists (ACOG) recommend that containment systems be used for safer specimen retrieval during gynecologic surgery.9-11 After the specimen is placed inside the containment system (typically a specimen bag), the surgeon may deliver the bag through a vaginal colpotomy or through a slightly extended laparoscopic incision and remove bulky specimens using cold-cutting extraction techniques.12-15


Know the pathology’s characteristics

In most cases, based on imaging studies and physical examination, surgeons have a good idea of what to expect before proceeding with surgery. The 2 most common characteristics used for surgical planning are the specimen size (dimensions) and the tissue type (solid, cystic, soft tissue, or mixed). The mass size can range from less than 1 cm to larger than a 20-week-size fibroid uterus. Assessing the specimen in all 3 dimensions is important. Tissue type also is a consideration, as soft and squishy masses, such as ovarian cysts, are easier to deflate and manipulate within the bag than solid or calcified tumors, such as a large fibroid uterus or a large dermoid with solid components.

Specimen shape also is a critical determinant for bag selection. Most specimen retrieval bags are tapered to varying degrees, and some have an irregular shape. Long tubular structures, such as fallopian tubes that are composed of soft tissue, fit easily into most bags regardless of bag shape or extent of bag taper, whereas the round shape of a bulky myoma may render certain bags ineffective even if the bag’s entrance accommodates the greatest diameter of the myoma. Often, a round mass will not fully fit into a bag because there is a poor fit between the mass’s shape and the bag’s shape and taper. (We discuss the concept of a poor “fit” below.) Knowing the pathology before starting a procedure can help optimize bag selection, streamline operative flow, and reduce waste.

Overview of laparoscopic bag characteristics and clinical applications

The TABLE lists the most common laparoscopic bags available for purchase in the United States. Details include the trocar size, manufacturer, product name, mouth diameter, volume, bag shape, construction material, and best clinical application.

The following are terms used to refer to the components of a laparoscopic retrieval bag:

- Mouth diameter: diameter at the entrance of a fully opened bag (FIGURE 1)

- Bag volume: the total volume a bag can accommodate when completely full

- Bag rim: characteristics of the rim of the bag when opened (that is, rigid vs soft rim, complete vs partial rim mechanism to hold the bag open) (FIGURE 2)

- Bag shape: the shape of the bag when it is fully opened (square shaped vs cone shaped vs curved bag shape) (FIGURE 2)

- Bag taper (severity and type): extent the bag is tapered from the rim of the bag’s entrance to the base of the bag; categorized by taper severity (minimal, gradual, or steep taper) and type (continuous taper or curved taper) (FIGURE 3)

- Ball fit: the maximum spherical specimen size that completely fits into a bag and allows it to cinch closed (FIGURE 4)

- Bag strength: durability of a bag when placed on tension during specimen extraction (weak, moderate, or extremely durable).
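One way to keep these terms straight, for example when building an institutional inventory like the TABLE, is a simple record type. The sketch below is a hypothetical Python schema (`RetrievalBag` and its enum values are illustrative names, not a vendor format); fields the article does not supply for a given bag are left as `None`.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Shape(Enum):
    SQUARE = "square"
    CONE = "cone"
    CURVED = "curved"


class TaperSeverity(Enum):
    MINIMAL = "minimal"
    GRADUAL = "gradual"
    STEEP = "steep"


class TaperType(Enum):
    CONTINUOUS = "continuous"
    CURVED = "curved"


class Strength(Enum):
    WEAK = "weak"
    MODERATE = "moderate"
    EXTREMELY_DURABLE = "extremely durable"


@dataclass(frozen=True)
class RetrievalBag:
    name: str
    trocar_mm: int                               # minimum trocar diameter for insertion
    mouth_cm: Tuple[float, float]                # diameters of the fully opened mouth
    volume_ml: Optional[float] = None            # manufacturer's volume; limited clinical value
    rigid_rim: Optional[bool] = None             # rigid (True) vs soft (False) rim
    complete_rim: Optional[bool] = None          # complete vs partial rim mechanism
    shape: Optional[Shape] = None
    taper_severity: Optional[TaperSeverity] = None
    taper_type: Optional[TaperType] = None
    ball_fit_cm: Optional[float] = None          # largest sphere that fits and still cinches
    strength: Optional[Strength] = None


# Only the figures given in the text are filled in; the rest stay unknown.
inzii_12 = RetrievalBag("Inzii 12", trocar_mm=12, mouth_cm=(9.7, 13.0))
print(inzii_12.trocar_mm, inzii_12.mouth_cm)  # 12 (9.7, 13.0)
```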


Mouth diameter

Bag manufacturers often differentiate bag sizes by volume in milliliters. Bag volume, however, offers little clinical value to surgeons, because pelvic mass dimensions are usually measured in centimeters on imaging. A more useful characteristic for bag selection is the diameter of the rim of the fully opened bag, the so-called bag mouth diameter. For a specimen to fit, its cross-sectional dimensions as it passes through the opening (for an elongated mass, its 2 shorter dimensions) must be smaller than the corresponding dimensions of the bag entrance.
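The mouth-diameter rule can be sketched as a quick check. The model is a deliberate simplification and an assumption, not the article's method: the specimen is treated as a rigid box with 3 measured dimensions that enters the bag longest axis first, so its 2 shorter dimensions are compared against the 2 mouth diameters (smaller with smaller, larger with larger). Compressible tissue, orientation tricks, and the mouth's actual outline are ignored, and `fits_through_mouth` is a hypothetical helper.

```python
def fits_through_mouth(specimen_cm, mouth_cm):
    """True if the specimen's cross-section is smaller than the opened bag's mouth.

    Assumes a rigid, box-like specimen introduced longest axis first, so only
    its 2 shorter dimensions must clear the mouth diameters.
    """
    if len(specimen_cm) != 3 or len(mouth_cm) != 2:
        raise ValueError("expected 3 specimen dimensions and 2 mouth diameters")
    shortest, middle, _longest = sorted(specimen_cm)
    mouth_small, mouth_large = sorted(mouth_cm)
    # Strictly smaller, as the text states; pair smaller with smaller.
    return shortest < mouth_small and middle < mouth_large


# A mouth of about 6 cm x 7 cm, the figure quoted for the 10-mm Endo Catch bag:
print(fits_through_mouth((5.5, 6.5, 9.0), (6.0, 7.0)))   # True
print(fits_through_mouth((10, 10, 10), (6.0, 7.0)))      # False
```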

Notably, the number often attached to a specimen bag, as in the 10-mm Endo Catch bag (Covidien/Medtronic), describes the width of the bag’s shaft before it is opened, not the mouth diameter of the opened bag. The number corresponds to the trocar size needed for bag insertion rather than to the specimen size the bag can hold. Therefore, a 10-mm Endo Catch bag cannot fit a 10-cm mass; rather, it requires a trocar of 10 mm or greater for insertion. Fully opened, the 10-mm Endo Catch bag has mouth diameters of roughly 6 cm × 7 cm, which allows delivery of a mass of about 6 cm.

Because 2 bags inserted through the same trocar size may have vastly different dimensions, the surgeon must know the bag mouth diameters when selecting a bag to remove the presenting pathology. For example, the Inzii 12 (Applied Medical) laparoscopic bag has mouth diameters of 9.7 cm × 13.0 cm, whereas the Anchor TRSROBO-12 (ConMed) has mouth diameters of 6.7 cm × 7.6 cm (TABLE). Although both bags can be inserted through a 12-mm trocar, they cannot accommodate the same size mass.
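To show why the trocar number says little about capacity, the sketch below filters the 3 bags named in this section by trocar size and mouth diameter. The mouth figures are those quoted in the text (the Endo Catch values are approximate), the helper `candidate_bags` is hypothetical, and the same simplified box-through-mouth assumption applies: passing this check does not guarantee an adequate ball fit.

```python
# Mouth diameters quoted in the text (Endo Catch values are approximate).
BAGS = {
    "Endo Catch 10 mm": {"trocar_mm": 10, "mouth_cm": (6.0, 7.0)},
    "Inzii 12": {"trocar_mm": 12, "mouth_cm": (9.7, 13.0)},
    "Anchor TRSROBO-12": {"trocar_mm": 12, "mouth_cm": (6.7, 7.6)},
}


def candidate_bags(specimen_cm, trocar_mm, bags=BAGS):
    """Bags that pass through `trocar_mm` AND whose mouth admits the specimen."""
    shortest, middle, _ = sorted(specimen_cm)
    matches = []
    for name, bag in bags.items():
        mouth_small, mouth_large = sorted(bag["mouth_cm"])
        inserts = bag["trocar_mm"] <= trocar_mm
        admits = shortest < mouth_small and middle < mouth_large
        if inserts and admits:
            matches.append(name)
    return matches


# An 8-cm round mass with a 12-mm port: both 12-mm bags insert, only one admits it.
print(candidate_bags((8, 8, 8), trocar_mm=12))  # ['Inzii 12']
```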

Shape and taper

Laparoscopic bags come in various shapes (curved, cone, or square shaped), with varying levels of bag taper (steep, gradual, or minimal taper) (FIGURES 2 and 3). While taper has little impact on long, thin specimens, it may hinder successful bagging of bulky or spherical specimens.

The degree of taper varies from bag to bag, independent of mouth diameter or trocar size. For round masses, the steeper the taper, the smaller the mass that can comfortably fit within the bag. This concept underlies the idea of “ball fit,” explained below.

In addition, bag shape may affect what mass size can fit into the bag. An irregularly shaped curved bag or a bag with a steep taper may be well suited for removal of multiple specimens of varying sizes or soft masses that are malleable enough to conform to the bag’s shape (such as a ruptured ovarian cyst). Alternatively, a square-shaped bag or a bag with minimal taper would better accommodate a round mass.

Ball fit

When dealing with large round masses, such as myomas or ovarian cysts, one must consider the ball fit: the maximum spherical specimen size that fits completely within a bag while still allowing the bag to cinch closed. Ball fit is generally an estimate that factors in the bag shape, extent of taper, and mouth diameter, as well as the specimen shape and tissue type. At times, although a mass passes through the bag’s mouth, a steep taper may prevent it from being fully bagged and limit closure of the bag (FIGURE 4).

Curved bags such as the Anchor TRSVATS-15 (ConMed), which have a very narrow bottom, are prone to a limited ball fit, so their mouth diameter does not reflect the largest mass that can fit within the bag. Therefore, when using a steeply tapered bag to remove large round masses, do not rely on the bag’s mouth diameter for bag selection. Instead, visualize the ball fit within the bag, taking into account the specimen size and shape, bag shape, and bag taper. In these scenarios, the diameter of the midportion of the opened bag may better reflect the mass size the bag can hold.
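The midportion rule of thumb can be illustrated with a hypothetical geometric model that treats the opened bag as having a straight, linear taper from mouth to base. Real curved bags taper nonlinearly, so this is only a sketch of why a steeper taper shrinks the usable diameter; the function names and the bag dimensions in the example are invented.

```python
def diameter_at_depth(mouth_cm: float, base_cm: float, depth_cm: float, z_cm: float) -> float:
    """Bag diameter at distance z below the rim, assuming a straight (linear) taper."""
    if not 0 <= z_cm <= depth_cm:
        raise ValueError("z must lie between the rim (0) and the base (depth)")
    return mouth_cm + (base_cm - mouth_cm) * (z_cm / depth_cm)


def estimated_ball_fit_cm(mouth_cm: float, base_cm: float, depth_cm: float) -> float:
    """Rough ball-fit estimate: the diameter at the bag's midportion.

    For a bag without taper this equals the mouth diameter; the steeper the
    taper, the further the estimate falls below the mouth diameter.
    """
    return diameter_at_depth(mouth_cm, base_cm, depth_cm, depth_cm / 2)


# Two hypothetical bags with the same 10-cm mouth and 20-cm depth:
print(estimated_ball_fit_cm(10, 8, 20))  # gradual taper: 9.0
print(estimated_ball_fit_cm(10, 2, 20))  # steep taper: 6.0
```

Both example bags would pass a mouth-diameter check for a 9-cm mass, but under this model only the gradually tapered one is likely to hold it and still cinch closed.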

Bag strength

Bag strength depends on the material used for bag construction. Most laparoscopic bags in the United States are made of 1 of 3 materials: polyurethane, polypropylene, or ripstop nylon.

Polyurethane and polypropylene are synthetic plastic polymers; in bag form they are stretchy and may tear under extreme force. They are best used for bagging fluid-filled cysts or soft, pliable masses that will not require extensive bag or tissue handling (unlike, for example, extraction of large leiomyomas). Polyurethane and polypropylene bags are more susceptible to puncture by sharp laparoscopic instruments or scalpels, and care must be taken to avoid accidentally cutting the bag during tissue extraction.

Alternatively, bags made of ripstop nylon are favored for their strength. Ripstop nylon is a synthetic fabric woven in a crosshatch pattern that makes it resistant to tearing and ripping. It was originally developed during World War II as a replacement for silk in parachutes. Modern applications include sails, kites, and high-quality camping equipment. The material has a favorable strength-to-weight ratio, and if it does tear, the tear is less likely to extend. In surgery, these bags are best used for specimens that will require substantial bag manipulation and tissue extraction. However, ripstop fabric takes up more space in the incision than polyurethane or polypropylene, leaving the surgeon less room for tissue extraction. Thus, as a tradeoff for bag strength, the surgeon using a ripstop nylon bag may need to extend the incision slightly, and a small self-retracting wound retractor may be necessary to maintain visibility for safe tissue extraction.


Trocar selection is important

While considering bag selection, the surgeon also must consider trocar selection to allow laparoscopic insertion of the bag. In this context, trocar size refers to the minimum trocar diameter needed to insert the laparoscopic bag. Most bags are designed to fit through a laparoscopic trocar or through the skin incision that previously housed the trocar. Trocar size does not directly correlate with bag mouth diameter; for example, a 10-mm laparoscopic bag that can be inserted through a 10- or 12-mm trocar cannot fit a 10-cm mass (see the mouth diameter section above).

A tip to maximize operating room (OR) efficiency is to start off with a larger trocar, such as a 12-mm trocar, if it is known that a laparoscopic bag with a 12-mm trocar size will be used, rather than starting with a 5-mm trocar and upsizing the port site incision. This saves time and offers intraoperative flexibility, allowing for the use of larger instruments and quicker insufflation.

Furthermore, if the specimen has a solid component and tissue extraction is anticipated, consider starting off with a large trocar, one that is larger than the bag’s trocar size since the incision likely will be extended. For example, even if a myoma will fit within a 10-mm laparoscopic bag made of ripstop nylon, using a 15-mm trocar rather than a 10-mm trocar may be considered since the skin and fascial incisions will need to be extended to allow for cold-cut tissue extraction. Starting with the larger 15-mm trocar may offer surgical advantages, such as direct needle delivery of larger needles for myometrial closure after myomectomy or direct removal of smaller myomas through the trocar to avoid bagging multiple specimens.

Putting it all together

To optimize efficiency in the OR for specimen removal, we recommend streamlining OR flow and reducing waste by first considering the specimen size, tissue type, bag shape, and trocar selection. Choose a bag by taking into account the bag mouth diameter and the amount of taper you will need to obtain an appropriate ball fit. If the tissue type is soft and pliable, consider a polyurethane or polypropylene bag and the smallest bag size possible, even if it has a narrow bag shape and taper.

However, if the tissue type is solid, the shape is round, and the mass is large (requiring extensive tissue extraction for removal), consider a bag made of ripstop nylon and factor in the bag shape as well as the bag taper. Using a bag without a steep taper may allow a better fit.

After choosing a laparoscopic bag, select the appropriate trocars necessary for completion of the surgery. Consider starting off with a larger trocar rather than spending the time to upsize a trocar if you plan to use a large bag or intend to extend the trocar incision for a contained tissue extraction. These tips will help optimize efficiency, reduce equipment wastage, and prevent intra-abdominal spillage.

Keep in mind that all procedures, including specimen removal using containment systems, have inherent risks. For example, visualization of the mass within the bag and visualization of vital structures may be hindered by bulkiness of the bag or specimen. There is also a risk of bag compromise and leakage, whether through manipulation of the bag or puncture during specimen extraction. Lastly, even though removing a specimen within a containment system minimizes spillage and reports of in-bag cold-knife tissue extraction in women with histologically proven endometrial cancer have suggested that it is safe, laparoscopic bags have not been proven to prevent the dissemination of malignant tissue fragments.16,17

Overall, the inherent risks of specimen extraction during minimally invasive surgery are far outweighed by the well-established advantages of laparoscopic surgery, which carries lower risks of surgical complications such as bleeding and infection, shorter hospital stay, and quicker recovery time compared to laparotomy. There is no doubt minimally invasive surgery offers many benefits.

In summary, for best bag selection, it is equally important to know the characteristics of the pathology as it is to know the features of the specimen retrieval systems available at your institution. Understanding both the pathology and the equipment available will allow the surgeon to make the best surgical decisions for the case. ●

The use of minimally invasive gynecologic surgery (MIGS) has grown rapidly over the past 20 years. MIGS, which includes vaginal hysterectomy and laparoscopic hysterectomy, is safe and has fewer complications and a more rapid recovery period than open abdominal surgery.1,2 In 2005, the role of MIGS was expanded further when the US Food and Drug Administration (FDA) approved robot-assisted surgery for the performance of gynecologic procedures.3 As knowledge and experience in the safe performance of MIGS progresses, the rates for MIGS procedures have skyrocketed and continue to grow. Between 2007 and 2010, laparoscopic hysterectomy rates rose from 23.5% to 30.5%, while robot-assisted laparoscopic hysterectomy rates increased from 0.5% to 9.5%, representing 40% of all hysterectomies.4 Due to the benefits of minimally invasive surgery over open abdominal surgery, patient and physician preference for minimally invasive procedures has grown significantly in popularity.1,5

Because incisions are small in minimally invasive surgery, surgeons have been challenged with removing large specimens through incisions that are much smaller than the presenting pathology. One approach is to use a specimen retrieval bag for specimen extraction. Once the dissection is completed, the specimen is placed within the retrieval bag for removal, thus minimizing exposure of the specimen and its contents to the abdominopelvic cavity and incision.

The use of specimen retrieval devices has been advocated to prevent infection, avoid spillage into the peritoneal cavity, and minimize the risk of port-site metastases in cases of potentially cancerous specimens. Devices include affordable and readily available products, such as nonpowdered gloves, and commercially produced bags.6

While the use of specimen containment systems for tissue extraction has been well described in gynecology, the available systems vary widely in construction, size, durability, and shape, potentially leading to confusion and suboptimal bag selection during surgery.7 In this article, we review the most common laparoscopic bags available in the United States, provide an overview of bag characteristics, offer practice guidelines for bag selection, and review bag terminology to highlight important concepts for bag selection.

Controversy spurs change

In April 2014, the FDA warned against the use of power morcellation for specimen removal during minimally invasive surgery, citing a prevalence of 1 in 352 unsuspected uterine sarcomas and 1 in 498 unsuspected uterine leiomyosarcomas among women undergoing hysterectomy or myomectomy for presumed benign leiomyoma.8 Since then, the risk of occult uterine sarcomas, including leiomyosarcoma, in women undergoing surgery for benign gynecologic indications has been determined to be much lower.

Nonetheless, the clinical importance of contained specimen removal was clearly highlighted and the role of specimen retrieval bags soared to the forefront. Open power morcellation is no longer commonly practiced, and national societies such as the American Association of Gynecologic Laparoscopists (AAGL), the Society of Gynecologic Oncology (SGO), and the American College of Obstetricians and Gynecologists (ACOG) recommend that containment systems be used for safer specimen retrieval during gynecologic surgery.9-11 After the specimen is placed inside the containment system (typically a specimen bag), the surgeon may deliver the bag through a vaginal colpotomy or through a slightly extended laparoscopic incision to remove bulky specimens using cold-cutting extraction techniques.12-15

Continue to: Know the pathology’s characteristics...

Know the pathology’s characteristics

In most cases, based on imaging studies and physical examination, surgeons have a good idea of what to expect before proceeding with surgery. The 2 most common characteristics used for surgical planning are the specimen size (dimensions) and the tissue type (solid, cystic, soft tissue, or mixed). The mass size can range from less than 1 cm to larger than a 20-week sized fibroid uterus. Assessing the specimen in 3 dimensions is important. Tissue type also is a consideration, as soft and squishy masses, such as ovarian cysts, are easier to deflate and manipulate within the bag compared with solid or calcified tumors, such as a large fibroid uterus or a large dermoid with solid components.

Specimen shape also is a critical determinant for bag selection. Most specimen retrieval bags are tapered to varying degrees, and some have an irregular shape. Long tubular structures, such as fallopian tubes that are composed of soft tissue, fit easily into most bags regardless of bag shape or extent of bag taper, whereas the round shape of a bulky myoma may render certain bags ineffective even if the bag’s entrance accommodates the greatest diameter of the myoma. Often, a round mass will not fully fit into a bag because there is a poor fit between the mass’s shape and the bag’s shape and taper. (We discuss the concept of a poor “fit” below.) Knowing the pathology before starting a procedure can help optimize bag selection, streamline operative flow, and reduce waste.

Overview of laparoscopic bag characteristics and clinical applications

The TABLE lists the most common laparoscopic bags available for purchase in the United States. Details include the trocar size, manufacturer, product name, mouth diameter, volume, bag shape, construction material, and best clinical application.

The following are terms used to refer to the components of a laparoscopic retrieval bag:

- Mouth diameter: diameter at the entrance of a fully opened bag (FIGURE 1)

- Bag volume: the total volume a bag can accommodate when completely full

- Bag rim: characteristics of the rim of the bag when opened (that is, rigid vs soft rim, complete vs partial rim mechanism to hold the bag open) (FIGURE 2)

- Bag shape: the shape of the bag when it is fully opened (square shaped vs cone shaped vs curved bag shape) (FIGURE 2)

- Bag taper (severity and type): extent the bag is tapered from the rim of the bag’s entrance to the base of the bag; categorized by taper severity (minimal, gradual, or steep taper) and type (continuous taper or curved taper) (FIGURE 3)

- Ball fit: the maximum spherical specimen size that completely fits into a bag and allows it to cinch closed (FIGURE 4)

- Bag strength: durability of a bag when placed on tension during specimen extraction (weak, moderate, or extremely durable).

Continue to: Mouth diameter...

Mouth diameter

Bag manufacturers often differentiate bag sizes by indicating “volume” in milliliters. Bag volume, however, offers little clinical value to surgeons, as pelvic mass dimensions are usually measured in centimeters on imaging. Rather, an important characteristic for bag selection is the diameter of the rim of the bag when it is fully opened—the so-called bag mouth diameter. For a specimen to fit, the 2 dimensions of the specimen must be smaller than the dimensions of the bag entrance.

Notably, the number often linked to the specimen bag—as, for example, in the 10-mm Endo Catch bag (Covidien/Medtronic)— describes the width of the shaft of the bag before it is opened rather than the mouth diameter of the opened bag. The number actually correlates with the trocar size necessary for bag insertion rather than with the specimen size that can fit into the bag. Therefore, a 10-mm Endo Catch bag cannot fit a 10-cm mass, but rather requires a trocar size of 10 mm or greater for insertion of the bag. Fully opened, the mouth diameters of the 10-mm Endo Catch bag are roughly 6 cm x 7 cm, which allows for delivery of a 6-cm mass.

Because 2 bags that use the same trocar size for insertion may have vastly differing bag dimensions, the surgeon must know the bag mouth diameters when selecting a bag to remove the presenting pathology. For example, the Inzii 12 (Applied Medical) laparoscopic bag has mouth diameters of 9.7 cm × 13.0 cm, whereas the Anchor TRSROBO-12 (ConMed) has mouth diameters of 6.7 cm × 7.6 cm (TABLE). Although both bags can be inserted through a 12-mm trocar, both bags cannot fit the same size mass for removal.

Shape and taper

Laparoscopic bags come in various shapes (curved, cone, or square shaped), with varying levels of bag taper (steep, gradual, or no taper) (FIGURES 2 and 3). While taper has little impact on long and skinny specimens, taper may hinder successful bagging of bulky or spherical specimens.

Each bag has different grades of taper regardless of mouth diameter or trocar size. For round masses, the steeper the taper, the smaller the mass that can comfortably fit within the bag. This concept is connected to the idea of “ball fit,” explained below.

In addition, bag shape may affect what mass size can fit into the bag. An irregularly shaped curved bag or a bag with a steep taper may be well suited for removal of multiple specimens of varying sizes or soft masses that are malleable enough to conform to the bag’s shape (such as a ruptured ovarian cyst). Alternatively, a square-shaped bag or a bag with minimal taper would better accommodate a round mass.

Ball fit

When thinking about large circular masses, such as myomas or ovarian cysts, one must consider the ball fit. This refers to the maximum spherical size of the specimen that fits completely within a bag while allowing the bag to cinch closed. Generally, this is an estimation that factors in the bag shape, extent of the bag taper, bag mouth diameter, and specimen shape and tissue type. At times, although a mass can fit through the bag’s mouth diameter, a steep taper may prevent the mass from being fully bagged and limit closure of the bag (FIGURE 4).

Curved bags like the Anchor TRSVATS-15 (ConMed), which have a very narrow bottom, are prone to a limited ball fit, and thus the bag mouth diameter will not correlate with the largest mass size that can be fitted within the bag. Therefore, if using a steeply tapered bag for removal of large round masses, do not rely on the bag’s mouth diameter for bag selection. The surgeon must visualize the ball fit within the bag, taking into account the specimen size and shape, bag shape, and bag taper. In these scenarios, using the diameter of the midportion of the opened bag may better reflect the mass size that can fit into that bag.

Bag strength

Bag strength depends on the material used for bag construction. Most laparoscopic bags in the United States are made of 3 different materials: polyurethane, polypropylene, and ripstop nylon.

Polyurethane and polypropylene are synthetic plastic polymers; in bag form they are stretchy and, under extreme force, may tear. They are best used for bagging fluid-filled cysts or soft pliable masses that will not require extensive bag or tissue handling, such as extraction of large leiomyomas. Polyurethane and polypropylene bags are more susceptible to puncture with sharp laparoscopic instruments or scalpels, and care must be taken to avoid accidentally cutting the bag during tissue extraction.

By contrast, bags made of ripstop nylon are favored for their strength. Ripstop nylon is a synthetic fabric woven in a reinforced crosshatch pattern that makes it resistant to tearing and ripping. It was originally developed during World War II as a replacement for silk in parachutes, and modern applications include sails, kites, and high-quality camping equipment. The material has a favorable strength-to-weight ratio, and if it does tear, the tear is less likely to extend. In surgery, these bags are best used for specimens that will require extensive bag manipulation and tissue extraction. However, ripstop fabric takes up more space in the incision than polyurethane or polypropylene, leaving the surgeon less room for tissue extraction. Thus, as a tradeoff for bag strength, the surgeon may need to extend the incision slightly when using a ripstop nylon bag, and a small self-retaining wound retractor may be needed to provide adequate visibility for safe tissue extraction.


Trocar selection is important

While considering bag selection, the surgeon also must consider trocar selection to allow laparoscopic insertion of the bag. In the context of bag selection, trocar size refers to the minimum trocar diameter needed to insert the laparoscopic bag. Most bags are designed to fit through a laparoscopic trocar or through the skin incision that previously housed the trocar. Trocar size does not directly correlate with bag mouth diameter; for example, a 10-mm laparoscopic bag that can be inserted through a 10- or 12-mm trocar cannot fit a 10-cm mass (see the mouth diameter section above).

A tip to maximize operating room (OR) efficiency: if you know that a laparoscopic bag requiring a 12-mm trocar will be used, start with a 12-mm trocar rather than placing a 5-mm trocar and later upsizing the port site incision. This saves time and offers intraoperative flexibility, allowing for the use of larger instruments and quicker insufflation.

Furthermore, if the specimen has a solid component and tissue extraction is anticipated, consider starting with a trocar that is larger than the bag's trocar size, since the incision likely will be extended anyway. For example, even if a myoma will fit within a 10-mm ripstop nylon bag, a 15-mm trocar may be preferable to a 10-mm trocar because the skin and fascial incisions will need to be extended for cold-cut tissue extraction. Starting with the larger 15-mm trocar may offer surgical advantages, such as direct delivery of larger needles for myometrial closure after myomectomy or direct removal of smaller myomas through the trocar to avoid bagging multiple specimens.

Putting it all together

To optimize efficiency in the OR for specimen removal, we recommend streamlining OR flow and reducing waste by first considering the specimen size, tissue type, bag shape, and trocar selection. Choose a bag by taking into account the bag mouth diameter and the amount of taper you will need to obtain an appropriate ball fit. If the tissue type is soft and pliable, consider a polyurethane or polypropylene bag and the smallest bag size possible, even if it has a narrow bag shape and taper.

However, if the tissue type is solid, the shape is round, and the mass is large (requiring extensive tissue extraction for removal), consider a bag made of ripstop nylon and factor in the bag shape as well as the bag taper. Using a bag without a steep taper may allow a better fit.

After choosing a laparoscopic bag, select the trocars needed to complete the surgery. If you plan to use a large bag or intend to extend the trocar incision for contained tissue extraction, consider starting with a larger trocar rather than spending time upsizing one later. These tips will help optimize efficiency, reduce equipment waste, and minimize intra-abdominal spillage.

Keep in mind that all procedures, including specimen removal using containment systems, have inherent risks. For example, bulkiness of the bag or specimen may hinder visualization of the mass within the bag and of vital structures. There is also a risk of bag compromise and leakage, whether from manipulation of the bag or puncture during specimen extraction. Lastly, removing a specimen within a containment system minimizes spillage, and reports of in-bag cold-knife tissue extraction in women with histologically proven endometrial cancer have suggested that it is safe; nevertheless, laparoscopic bags have not been proven to prevent the dissemination of malignant tissue fragments.16,17

Overall, the inherent risks of specimen extraction during minimally invasive surgery are far outweighed by the well-established advantages of laparoscopic surgery, which, compared with laparotomy, carries lower risks of surgical complications such as bleeding and infection and offers a shorter hospital stay and quicker recovery.

In summary, for the best bag selection, knowing the characteristics of the pathology is as important as knowing the features of the specimen retrieval systems available at your institution. Understanding both the pathology and the available equipment will allow the surgeon to make the best surgical decisions for the case. ●

- Desai VB, Wright JD, Lin H, et al. Laparoscopic hysterectomy route, resource use, and outcomes: change after power morcellation warning. Obstet Gynecol. 2019;134:227-238.

- American College of Obstetricians and Gynecologists. ACOG committee opinion No. 444: choosing the route of hysterectomy for benign disease. Obstet Gynecol. 2009;114:1156-1158.

- Liu H, Lu D, Wang L, et al. Robotic surgery for benign gynecological disease. Cochrane Database Syst Rev. 2012;2:CD008978.

- Wright JD, Herzog TJ, Tsui J, et al. Nationwide trends in the performance of inpatient hysterectomy in the United States. Obstet Gynecol. 2013;122(2 pt 1):233-241.

- Turner LC, Shepherd JP, Wang L, et al. Hysterectomy surgery trends: a more accurate depiction of the last decade? Am J Obstet Gynecol. 2013;208:277.e1-7.

- Holme JB, Mortensen FV. A powder-free surgical glove bag for retraction of the gallbladder during laparoscopic cholecystectomy. Surg Laparosc Endosc Percutan Tech. 2005;15:209-211.

- Siedhoff MT, Cohen SL. Tissue extraction techniques for leiomyomas and uteri during minimally invasive surgery. Obstet Gynecol. 2017;130:1251-1260.

- US Food and Drug Administration. Laparoscopic uterine power morcellation in hysterectomy and myomectomy: FDA safety communication. April 17, 2014. https://wayback.archive-it.org/7993/20170722215731/https:/www.fda.gov/MedicalDevices/Safety/AlertsandNotices/ucm393576.htm. Accessed September 22, 2020.

- AAGL. AAGL practice report: morcellation during uterine tissue extraction. J Minim Invasive Gynecol. 2014;21:517-530.

- American College of Obstetricians and Gynecologists. ACOG committee opinion No. 770: uterine morcellation for presumed leiomyomas. Obstet Gynecol. 2019;133:e238-e248.

- Society of Gynecologic Oncology website. SGO position statement: morcellation. December 1, 2013. https://www.sgo.org/newsroom/position-statements-2/morcellation/. Accessed September 22, 2020.

- Advincula AP, Truong MD. ExCITE: minimally invasive tissue extraction made simple with simulation. OBG Manag. 2015;27(12):40-45.

- Solima E, Scagnelli G, Austoni V, et al. Vaginal uterine morcellation within a specimen containment system: a study of bag integrity. J Minim Invasive Gynecol. 2015;22:1244-1246.

- Ghezzi F, Casarin J, De Francesco G, et al. Transvaginal contained tissue extraction after laparoscopic myomectomy: a cohort study. BJOG. 2018;125:367-373.

- Dotson S, Landa A, Ehrisman J, et al. Safety and feasibility of contained uterine morcellation in women undergoing laparoscopic hysterectomy. Gynecol Oncol Res Pract. 2018;5:8.

- Favero G, Miglino G, Köhler C, et al. Vaginal morcellation inside protective pouch: a safe strategy for uterine extration in cases of bulky endometrial cancers: operative and oncological safety of the method. J Minim Invasive Gynecol. 2015;22:938-943.

- Montella F, Riboni F, Cosma S, et al. A safe method of vaginal longitudinal morcellation of bulky uterus with endometrial cancer in a bag at laparoscopy. Surg Endosc. 2014;28:1949-1953.

HIT-6 may help track meaningful change in chronic migraine

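Changes in the six-item Headache Impact Test (HIT-6) may help clinicians track clinically meaningful change in patients with chronic migraine, recent research suggests.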

Using data from the phase 3 PROMISE-2 study, which evaluated intravenous eptinezumab at doses of 100 mg or 300 mg, or placebo, every 12 weeks in 1,072 participants for the prevention of chronic migraine, Carrie R. Houts, PhD, director of psychometrics at the Vector Psychometric Group, in Chapel Hill, N.C., and colleagues found that their responder value, a 6-point improvement in the HIT-6 total score, was consistent with other studies. However, they pointed out that little research has examined what item-specific HIT-6 scores mean for individuals with chronic migraine. The HIT-6 items ask how often individuals with headaches experience severe pain, have headaches that limit their daily activities, wish they could lie down, feel too tired to do daily activities, feel "fed up or irritated" because of headaches, and feel that headaches limit their concentration on work or daily activities.

“The item-specific responder definitions give clinicians and researchers the ability to evaluate and track the impact of headache on specific item-level areas of patients’ lives. These responder definitions provide practical and easily interpreted results that can be used to evaluate treatment benefits over time and to improve clinician-patients communication focus on improvements in key aspects of functioning in individuals with chronic migraine,” Dr. Houts and colleagues wrote in their study, published in the October issue of Headache.

The 6-point value for the total score and the 1- to 2-category improvement values for item-specific scores, they suggested, could serve as benchmarks to help other clinicians and researchers detect meaningful change in individual patients with chronic migraine. Although the HIT-6 user guide highlights a 5-point change in the total score as clinically meaningful, the guide's authors do not provide evidence for why the 5-point value signifies clinically meaningful change, they said.
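To make the difference between the 5- and 6-point thresholds concrete, here is a minimal illustrative sketch, not a validated clinical tool, of how a total-score responder rule might be applied. It assumes the standard HIT-6 response weights (never = 6, rarely = 8, sometimes = 10, very often = 11, always = 13, giving totals from 36 to 78, where higher means greater headache impact) and treats a drop of at least the chosen number of points from baseline as a response. The function names and example responses are hypothetical.

```python
# Illustrative sketch only: hypothetical helper names and example data.
# Assumes the standard HIT-6 response weights; higher totals = greater impact.
HIT6_WEIGHTS = {
    "never": 6,
    "rarely": 8,
    "sometimes": 10,
    "very often": 11,
    "always": 13,
}


def hit6_total(responses: list[str]) -> int:
    """Sum the weights of the six HIT-6 item responses (range 36-78)."""
    if len(responses) != 6:
        raise ValueError("HIT-6 has exactly 6 items")
    return sum(HIT6_WEIGHTS[r.lower()] for r in responses)


def is_total_score_responder(baseline: int, follow_up: int, threshold: int = 6) -> bool:
    """True if the total score decreased by at least `threshold` points."""
    return baseline - follow_up >= threshold


# Hypothetical patient: baseline vs week-12 responses.
baseline = hit6_total(["always", "very often", "always", "sometimes", "very often", "always"])
week12 = hit6_total(["sometimes", "sometimes", "very often", "rarely", "sometimes", "very often"])
print(baseline, week12, is_total_score_responder(baseline, week12))  # 71 60 True

# A 5-point drop counts under a 5-point rule but not under a 6-point rule.
print(is_total_score_responder(71, 66), is_total_score_responder(71, 66, threshold=5))  # False True
```

Making the threshold a parameter lets the same patient data be compared under the user guide's 5-point value and the 6-point value proposed by Dr. Houts and colleagues.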

Determining thresholds of clinically meaningful change

In their study, Dr. Houts and colleagues used distribution-based methods to estimate responder values for the HIT-6 total score, while item-specific HIT-6 analyses were evaluated against the Patient Global Impression of Change (PGIC), reduction in monthly migraine days (MMDs), and the EuroQol 5-dimension 5-level (EQ-5D-5L) visual analog scale (VAS). The researchers also used HIT-6 values from a literature review and from analyses in PROMISE-2 to calculate "a final chronic migraine-specific responder definition value" for change between baseline and week 12. Participants in PROMISE-2 were mostly women (88.2%) and white (91.0%), with a mean age of 40.5 years.