Joint symposium addresses exocrine pancreatic insufficiency

Based on discussions during PancreasFest 2021, , according to a recent report in Gastro Hep Advances

Due to its complex and individualized nature, EPI requires multidisciplinary approaches to therapy, as well as better pancreas function tests and biomarkers for diagnosis and treatment, wrote researchers who were led by David C. Whitcomb, MD, PhD, AGAF, emeritus professor of medicine in the division of gastroenterology, hepatology and nutrition at the University of Pittsburgh.

“This condition remains challenging even to define, and serious limitations in diagnostic testing and therapeutic options lead to clinical confusion and frequently less than optimal patient management,” the authors wrote.

EPI is clinically defined as inadequate delivery of pancreatic digestive enzymes to meet nutritional needs, which is typically based on a physician’s assessment of a patient’s maldigestion. However, there’s not a universally accepted definition or a precise threshold of reduced pancreatic digestive enzymes that indicates “pancreatic insufficiency” in an individual patient.

Current guidelines also don’t clearly outline the role of pancreatic function tests, the effects of different metabolic needs and nutrition intake, the timing of pancreatic enzyme replacement therapy (PERT), or the best practices for monitoring or titrating multiple therapies.

In response, Dr. Whitcomb and colleagues proposed a new mechanistic definition of EPI, including the disorder’s physiologic effects and impact on health. First, they said, EPI is a disorder caused by failure of the pancreas to deliver a minimum or threshold level of specific pancreatic digestive enzymes to the intestine in concert with ingested nutrients, followed by enzymatic digestion of individual meals over time to meet certain nutritional and metabolic needs. In addition, the disorder is characterized by variable deficiencies in micronutrients and macronutrients, especially essential fats and fat-soluble vitamins, as well as gastrointestinal symptoms of nutrient maldigestion.

The threshold for EPI should consider the nutritional needs of the patient, dietary intake, residual exocrine pancreas function, and the absorptive capacity of the intestine based on anatomy, mucosal function, motility, inflammation, the microbiome, and physiological adaptation, the authors wrote.

Due to challenges in diagnosing EPI and its common chronic symptoms such as abdominal pain, bloating, and diarrhea, several conditions may mimic EPI, be present concomitantly with EPI, or hinder PERT response. These include celiac disease, small intestinal bacterial overgrowth, disaccharidase deficiencies, inflammatory bowel disease (IBD), bile acid diarrhea, giardiasis, diabetes mellitus, and functional conditions such as irritable bowel syndrome. These conditions should be considered to address underlying pathology and PERT diagnostic challenges.

Although there is consensus that exocrine pancreatic function testing (PFT) is important to diagnose EPI, no optimal test exists, and pancreatic function is only one aspect of digestion and absorption that should be considered. PFT may be needed to make an objective EPI diagnosis related to acute pancreatitis, pancreatic cancer, pancreatic resection, gastric resection, cystic fibrosis, or IBD. Direct or indirect PFTs may be used, and the choice typically differs by center.

“The medical community still awaits a clinically useful pancreas function test that is easy to perform, well tolerated by patients, and allows personalized dosing of PERT,” the authors wrote.

After diagnosis, a general assessment should include information about symptoms, nutritional status, medications, diet, and lifestyle. This information can be used for a multifaceted treatment approach, with a focus on lifestyle changes, concomitant disease treatment, optimized diet, dietary supplements, and PERT administration.

PERT remains a mainstay of EPI treatment and has shown improvements in steatorrhea, postprandial bloating and pain, nutrition, and unexplained weight loss. The Food and Drug Administration has approved several formulations in different strengths. The typical starting dose is based on age and weight, which is derived from guidelines for EPI treatment in patients with cystic fibrosis. However, the recommendations don’t consider many of the variables discussed above and simply provide an estimate for the average subject with severe EPI, so the dose should be titrated as needed based on age, weight, symptoms, and the holistic management plan.

For optimal results, regular follow-up is necessary to monitor compliance and treatment response. A reduction in symptoms can serve as a reliable indicator of effective EPI management, particularly weight stabilization, improved steatorrhea and diarrhea, and reduced postprandial bloating, pain, and flatulence. Physicians may provide patients with tracking tools to record their PERT compliance, symptom frequency, and lifestyle changes.

For patients with persistent concerns, PERT can be increased as needed. Although many PERT formulations are enteric coated, a proton pump inhibitor or H2 receptor antagonist may improve their effectiveness. If EPI symptoms persist despite increased doses, other causes of malabsorption should be considered, such as the concomitant conditions mentioned above.

“As EPI escalates, a lower fat diet may become necessary to alleviate distressing gastrointestinal symptoms,” the authors wrote. “A close working relationship between the treating provider and the [registered dietician] is crucial so that barriers to optimum nutrient assimilation can be identified, communicated, and overcome. Frequent monitoring of the nutritional state with therapy is also imperative.”

PancreasFest 2021 received no specific funding for this event. The authors declared grant support, adviser roles, and speaking honoraria from several pharmaceutical and medical device companies and health care foundations, including the National Pancreas Foundation.

Recognition of recent advances and unaddressed gaps can clarify key issues around exocrine pancreatic insufficiency (EPI).

The loss of pancreatic digestive enzymes and bicarbonate is caused by exocrine pancreatic and proximal small intestine disease. EPI’s clinical impact has been expanded by reports that 30% of subjects can develop EPI after a bout of acute pancreatitis. Diagnosing and treating EPI challenges clinicians and investigators.

The contribution on EPI by Whitcomb and colleagues provides state-of-the-art content relating to diagnosing EPI, assessing its metabolic impact, enzyme replacement, nutritional considerations, and how to assess the effectiveness of therapy.

Though the diagnosis and treatment of EPI have been examined for over 50 years, a consensus for either is still needed. Luminal tube tests and endoscopic collections of pancreatic secretion are the most accurate ways to assess EPI, but they are invasive, limited in availability, and time-consuming. Indirect assays of intestinal activities of pancreatic enzymes by the hydrolysis of substrates or stool excretion are frequently used to diagnose EPI. However, they need to be more sensitive and specific to meet clinical and investigative needs.

Indeed, all tests of exocrine secretion are surrogates of unclear value for the critical endpoint of EPI, its nutritional impact. An unmet need is the development of nutritional standards for assessing EPI and measures for the adequacy of pancreatic enzyme replacement therapy. In this context, a patient’s diet, and other factors, such as the intestinal microbiome, can affect pancreatic digestive enzyme activity and must be considered in designing the best EPI treatments. The summary concludes with a thoughtful and valuable road map for moving forward.

Fred Sanford Gorelick, MD, is the Henry J. and Joan W. Binder Professor of Medicine (Digestive Diseases) and of Cell Biology at Yale School of Medicine, New Haven, Conn. He also serves as director of the Yale School of Medicine NIH T32-funded research track in gastroenterology and as deputy director of the Yale School of Medicine MD-PhD program.

Potential conflicts: Dr. Gorelick serves as chair of the NIH NIDDK DSMB for the Stent vs. Indomethacin for Preventing Post-ERCP Pancreatitis (SVI) study. He also holds grants for research on mechanisms of acute pancreatitis from the U.S. Department of Veterans Affairs and the Department of Defense.

FROM GASTRO HEP ADVANCES

Study of environmental impact of GI endoscopy finds room for improvement

CHICAGO – according to Madhav Desai, MD, MPH, assistant professor of medicine at the University of Minnesota, Minneapolis. About 20% of the waste, most of which went to landfills, was potentially recyclable, he said in a presentation given at the annual Digestive Disease Week® meeting.

Gastrointestinal endoscopies are critical for the screening, diagnosis, and treatment of a variety of gastrointestinal conditions. But like other medical procedures, endoscopies are a source of environmental waste, including plastic, sharps, personal protective equipment (PPE), and cleaning supplies, and also energy waste.

“This all goes back to the damage that mankind is inflicting on the environment in general, with the health care sector as one of the top contributors to plastic waste generation, landfills and water wastage,” Dr. Desai said. “Endoscopies, with their numerous benefits, substantially increase waste generation through landfill waste and liquid consumption and waste through the cleaning of endoscopes. We have a responsibility to look into this topic.”

To prospectively assess total waste generation from their institution, Dr. Desai, who was with the Kansas City (Mo.) Veterans Administration Medical Center when the research was conducted, and colleagues collected data on the items used in 450 consecutive procedures from May to June 2022. The data included procedure type, accessory use, intravenous tubing, numbers of biopsy jars, linens, PPE, and more, beginning at the point of patient entry to the endoscopy unit until discharge. They also collected data on waste generation related to reprocessing after each procedure and daily energy use (including endoscopy equipment, lights, and computers). With an eye toward finding opportunities to improve and maximize waste recycling, they stratified waste into three categories: biohazardous, nonbiohazardous, and potentially recyclable.

“We found that the total waste generated during the time period was 1,398.6 kg, with more than half of it, 61.6%, going directly to landfill,” Dr. Desai said in an interview. “That’s an amount that an average family in the U.S. would use for 2 months. That’s a huge amount.”

Most waste goes to landfill

Exactly one-third was biohazard waste and 5.1% was sharps, they found. A single procedure, on average, sent 2.19 kg of waste to landfill. Extrapolated to 1 year, the waste total amounts to 9,189 kg (equivalent to just over 10 U.S. tons) and per 100 procedures to 219 kg (about 483 pounds).
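The unit conversions in these figures can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the study: the function and variable names are hypothetical, and the only inputs are the reported 2.19 kg per procedure and 9,189 kg per year, plus the standard definitions of the pound and the U.S. (short) ton.

```python
# Illustrative check of the reported landfill figures; all names are hypothetical.
KG_PER_POUND = 0.45359237   # exact definition of the avoirdupois pound
POUNDS_PER_US_TON = 2000    # U.S. (short) ton


def kg_to_pounds(kg: float) -> float:
    """Convert kilograms to pounds."""
    return kg / KG_PER_POUND


def kg_to_us_tons(kg: float) -> float:
    """Convert kilograms to U.S. (short) tons."""
    return kg_to_pounds(kg) / POUNDS_PER_US_TON


# Figures as reported in the article.
landfill_kg_per_procedure = 2.19
annual_landfill_kg = 9189

# Scale the per-procedure figure to 100 procedures.
per_100_kg = landfill_kg_per_procedure * 100

print(f"{per_100_kg:.0f} kg per 100 procedures is about {kg_to_pounds(per_100_kg):.0f} lb")
print(f"{annual_landfill_kg} kg per year is about {kg_to_us_tons(annual_landfill_kg):.2f} U.S. tons")
```

Under these definitions, 219 kg works out to roughly 483 pounds and 9,189 kg to slightly more than 10 U.S. tons, in line with the figures quoted above.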

They estimated 20% of the landfill waste was potentially recyclable (such as plastic CO2 tubing, O2 connector, syringes, etc.), which could reduce the total landfill burden by 8.6 kg per day or 2,580 kg per year (or 61 kg per 100 procedures). Reprocessing endoscopes generated 194 gallons of liquid waste (735.26 kg) per day or 1,385 gallons per 100 procedures.

Turning to energy consumption, Dr. Desai reported that daily use in the endoscopy unit was 277.1 kW-hours (equivalent to 8.2 gallons of gasoline), adding up to about 1,980 kW-hours per 100 procedures. “That 100-procedure amount is the equivalent of the energy used for an average fuel efficiency car to travel 1,200 miles, the distance from Seattle to San Diego,” he said.
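The gasoline comparison can be reproduced in the same way. This sketch rests on an assumption that does not come from the presentation: it uses the U.S. Environmental Protection Agency's gasoline-gallon-equivalent of 33.7 kilowatt-hours per gallon, and the conversion the researchers actually used was not reported. The names are hypothetical, and the fuel economy it derives is implied by the quoted figures rather than stated by Dr. Desai.

```python
# Illustrative check of the energy comparison; all names are hypothetical.
# Assumption (not from the article): EPA gasoline-gallon-equivalent, 33.7 kWh per gallon.
GASOLINE_KWH_PER_GALLON = 33.7


def kwh_to_gasoline_gallons(kwh: float) -> float:
    """Express an amount of energy as gallons of gasoline."""
    return kwh / GASOLINE_KWH_PER_GALLON


# Figures as reported in the article.
daily_kwh = 277.1          # daily energy use of the endoscopy unit
per_100_kwh = 1980         # energy use per 100 procedures
trip_miles = 1200          # Seattle to San Diego, as quoted

daily_gallons = kwh_to_gasoline_gallons(daily_kwh)
per_100_gallons = kwh_to_gasoline_gallons(per_100_kwh)

# Fuel economy implied by the quoted 1,200-mile comparison.
implied_mpg = trip_miles / per_100_gallons

print(f"Daily use is about {daily_gallons:.1f} gallons of gasoline")
print(f"Per 100 procedures: about {per_100_gallons:.0f} gallons, "
      f"or {implied_mpg:.0f} miles per gallon over {trip_miles} miles")
```

With that assumed conversion factor, the daily figure comes to about 8.2 gallons, matching the article, and the 1,200-mile comparison implies a car that gets roughly 20 miles per gallon.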

“One next step,” Dr. Desai said, “is getting help from GI societies to come together and have endoscopy units track their own performance. You need benchmarks so that you can determine how good an endoscopist you are with respect to waste.”

He commented further: “We all owe it to the environment. And, we have all witnessed what Mother Nature can do to you.”

Working on the potentially recyclable materials that account for 20% of the total waste would be a simple initial step to reduce waste going to landfills, Dr. Desai and colleagues concluded in the meeting abstract. “These data could serve as an actionable model for health systems to reduce total waste generation and move toward environmentally sustainable endoscopy units,” they wrote.

The authors reported no disclosures.

DDW is sponsored by the American Association for the Study of Liver Diseases, the American Gastroenterological Association, the American Society for Gastrointestinal Endoscopy, and The Society for Surgery of the Alimentary Tract.

CHICAGO – according to Madhav Desai, MD, MPH, assistant professor of medicine at the University of Minnesota, Minneapolis. About 20% of the waste, most of which went to landfills, was potentially recyclable, he said in a presentation given at the annual Digestive Disease Week® meeting.

Gastrointestinal endoscopies are critical for the screening, diagnosis, and treatment of a variety of gastrointestinal conditions. But like other medical procedures, endoscopies are a source of environmental waste, including plastic, sharps, personal protective equipment (PPE), and cleaning supplies, and also energy waste.

“This all goes back to the damage that mankind is inflicting on the environment in general, with the health care sector as one of the top contributors to plastic waste generation, landfills and water wastage,” Dr. Desai said. “Endoscopies, with their numerous benefits, substantially increase waste generation through landfill waste and liquid consumption and waste through the cleaning of endoscopes. We have a responsibility to look into this topic.”

To prospectively assess total waste generation from their institution, Dr. Desai, who was with the Kansas City (Mo.) Veterans Administration Medical Center, when the research was conducted, collected data on the items used in 450 consecutive procedures from May to June 2022. The data included procedure type, accessory use, intravenous tubing, numbers of biopsy jars, linens, PPE, and more, beginning at the point of patient entry to the endoscopy unit until discharge. They also collected data on waste generation related to reprocessing after each procedure and daily energy use (including endoscopy equipment, lights, and computers). With an eye toward finding opportunities to improve and maximize waste recycling, they stratified waste into the three categories of biohazardous, nonbiohazardous, or potentially recyclable.

“We found that the total waste generated during the time period was 1,398.6 kg, with more than half of it, 61.6%, going directly to landfill,” Dr. Desai said in an interview. “That’s an amount that an average family in the U.S. would use for 2 months. That’s a huge amount.”

Most waste consists of sharps

About one-third was biohazard waste and 5.1% was sharps, they found. A single procedure, on average, sent 2.19 kg of waste to landfill. Extrapolated to 1 year, the waste total amounts to 9,189 kg (equivalent to just over 10 U.S. tons) and per 100 procedures to 219 kg (about 483 pounds).

They estimated 20% of the landfill waste was potentially recyclable (such as plastic CO2 tubing, O2 connector, syringes, etc.), which could reduce the total landfill burden by 8.6 kg per day or 2,580 kg per year (or 61 kg per 100 procedures). Reprocessing endoscopes generated 194 gallons of liquid waste (735.26 kg) per day or 1,385 gallons per 100 procedures.
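As a rough check on the unit conversions quoted above, the following Python sketch converts the reported kilogram figures to pounds and U.S. (short) tons. The conversion factors are standard values rather than figures from the study, the function names are illustrative, and the "operating days" line is only an inference from the two reported recycling numbers.

```python
# Standard conversion factors (not taken from the study).
KG_PER_POUND = 0.45359237   # definition of the avoirdupois pound
POUNDS_PER_US_TON = 2000    # U.S. (short) ton


def kg_to_pounds(kg: float) -> float:
    """Convert kilograms to pounds."""
    return kg / KG_PER_POUND


def kg_to_us_tons(kg: float) -> float:
    """Convert kilograms to U.S. (short) tons."""
    return kg_to_pounds(kg) / POUNDS_PER_US_TON


# Figures reported in the presentation.
landfill_per_100_procedures_kg = 219
landfill_per_year_kg = 9189
recyclable_per_day_kg = 8.6
recyclable_per_year_kg = 2580

pounds_per_100 = round(kg_to_pounds(landfill_per_100_procedures_kg))
tons_per_year = round(kg_to_us_tons(landfill_per_year_kg), 1)

# Inferred, not reported: the daily and yearly recycling figures
# together imply roughly this many operating days per year.
implied_operating_days = round(recyclable_per_year_kg / recyclable_per_day_kg)

print(pounds_per_100)          # 483
print(tons_per_year)           # 10.1
print(implied_operating_days)  # 300
```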

Turning to energy consumption, Dr. Desai reported that daily use in the endoscopy unit was 277.1 kW-hours (equivalent to 8.2 gallons of gasoline), adding up to about 1,980 kW-hours per 100 procedures. “That 100-procedure amount is the equivalent of the energy used for an average fuel efficiency car to travel 1,200 miles, the distance from Seattle to San Diego,” he said.
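The gasoline comparison can be reproduced with a short sketch. It assumes the U.S. EPA gasoline gallon equivalent of 33.7 kW-hours per gallon; the presentation does not say which factor was used, so that value is an assumption. The per-100-procedure figure is treated here as kilowatt-hours, and the procedures-per-day value is an inference from the two reported energy numbers, not a reported result.

```python
# Assumed conversion factor: EPA gasoline gallon equivalent.
KWH_PER_GALLON_GASOLINE = 33.7

# Figures reported in the presentation (energy in kW-hours).
daily_energy_kwh = 277.1
energy_per_100_procedures_kwh = 1980

gallons_per_day = daily_energy_kwh / KWH_PER_GALLON_GASOLINE

# Inferred, not reported: energy per procedure and the daily
# caseload that would make the two reported figures agree.
energy_per_procedure_kwh = energy_per_100_procedures_kwh / 100
implied_procedures_per_day = daily_energy_kwh / energy_per_procedure_kwh

print(round(gallons_per_day, 1))          # 8.2
print(round(implied_procedures_per_day))  # 14
```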

“One next step,” Dr. Desai said, “is getting help from GI societies to come together and have endoscopy units track their own performance. You need benchmarks so that you can determine how good an endoscopist you are with respect to waste.”

He commented further: “We all owe it to the environment. And, we have all witnessed what Mother Nature can do to you.”

Working on the potentially recyclable materials that account for 20% of the total waste would be a simple initial step to reduce waste going to landfills, Dr. Desai and colleagues concluded in the meeting abstract. “These data could serve as an actionable model for health systems to reduce total waste generation and move toward environmentally sustainable endoscopy units,” they wrote.

The authors reported no disclosures.

DDW is sponsored by the American Association for the Study of Liver Diseases, the American Gastroenterological Association, the American Society for Gastrointestinal Endoscopy, and The Society for Surgery of the Alimentary Tract.

AT DDW 2023

The 30th-birthday gift that could save a life

This transcript has been edited for clarity.

Welcome to Impact Factor, your weekly dose of commentary on a new medical study. I’m Dr. F. Perry Wilson of the Yale School of Medicine.

Milestone birthdays are always memorable – those ages when your life seems to fundamentally change somehow. Age 16: A license to drive. Age 18: You can vote to determine your own future and serve in the military. At 21, 3 years after adulthood, you are finally allowed to drink alcohol, for some reason. And then ... nothing much happens. At least until you turn 65 and become eligible for Medicare.

But imagine a future when turning 30 might be the biggest milestone birthday of all. Imagine a future when, at 30, you get your genome sequenced and doctors tell you what needs to be done to save your life.

That future may not be far off, as a new study shows us that

Getting your genome sequenced is a double-edged sword. Of course, there is the potential for substantial benefit; finding certain mutations allows for definitive therapy before it’s too late. That said, there are genetic diseases without a cure and without a treatment. Knowing about that destiny may do more harm than good.

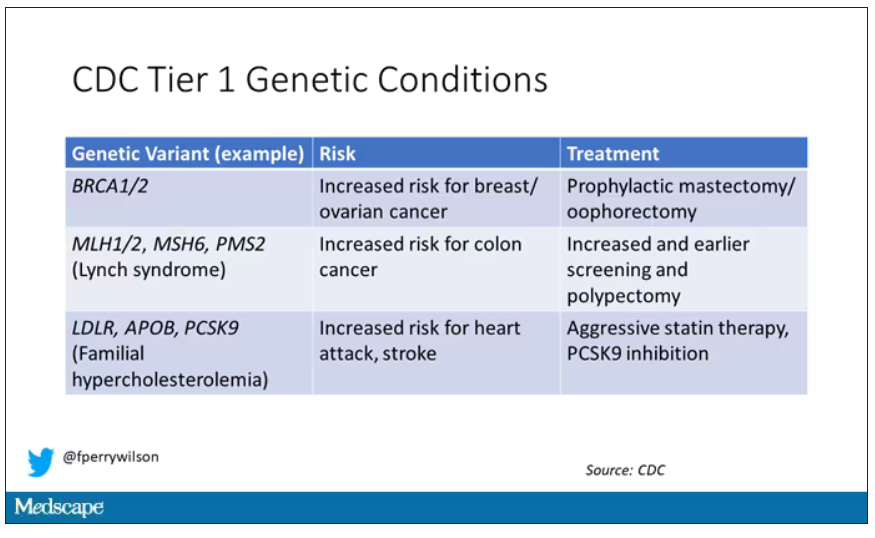

Three conditions are described by the CDC as “Tier 1” conditions, genetic syndromes with a significant impact on life expectancy that also have definitive, effective therapies.

These include mutations like BRCA1/2, associated with a high risk for breast and ovarian cancer; mutations associated with Lynch syndrome, which confer an elevated risk for colon cancer; and mutations associated with familial hypercholesterolemia, which confer elevated risk for cardiovascular events.

In each of these cases, there is clear evidence that early intervention can save lives. Individuals at high risk for breast and ovarian cancer can get prophylactic mastectomy and salpingo-oophorectomy. Those with Lynch syndrome can get more frequent screening for colon cancer and polypectomy, and those with familial hypercholesterolemia can get aggressive lipid-lowering therapy.

I think most of us would probably want to know if we had one of these conditions. Most of us would use that information to take concrete steps to decrease our risk. But just because a rational person would choose to do something doesn’t mean it’s feasible. After all, we’re talking about tests and treatments that have significant costs.

In a recent issue of Annals of Internal Medicine, Josh Peterson and David Veenstra present a detailed accounting of the cost and benefit of a hypothetical nationwide, universal screening program for Tier 1 conditions. And in the end, it may actually be worth it.

Cost-benefit analyses work by comparing two independent policy choices: the status quo – in this case, a world in which some people get tested for these conditions, but generally only if they are at high risk based on strong family history; and an alternative policy – in this case, universal screening for these conditions starting at some age.

After that, it’s time to play the assumption game. Using the best available data, the authors estimated the percentage of the population that will have each condition, the percentage of those individuals who will definitively act on the information, and how effective those actions would be if taken.

The authors provide an example. First, they assume that the prevalence of mutations leading to a high risk for breast and ovarian cancer is around 0.7%, and that up to 40% of people who learn that they have one of these mutations would undergo prophylactic mastectomy, which would reduce the risk for breast cancer by around 94%. (I ran these numbers past my wife, a breast surgical oncologist, who agreed that they seem reasonable.)

Assumptions in place, it’s time to consider costs. The cost of the screening test itself: The authors use $250 as their average per-person cost. But we also have the cost of treatment – around $22,000 per person for a bilateral prophylactic mastectomy; the cost of statin therapy for those with familial hypercholesterolemia; or the cost of all of those colonoscopies for those with Lynch syndrome.
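To make the scale of these assumptions concrete, here is a minimal Python sketch that applies the quoted BRCA figures to a hypothetical cohort of 100,000 screened people. It is a back-of-envelope illustration only, not the authors' model: it ignores sex, age, discounting, downstream savings, and the other two conditions, and it uses the 40% uptake figure as an upper bound. The variable names are illustrative.

```python
# Hypothetical cohort size, chosen for illustration.
cohort = 100_000

# Assumptions quoted in the commentary.
brca_prevalence = 0.007      # about 0.7% carry a high-risk mutation
mastectomy_uptake = 0.40     # up to 40% choose prophylactic mastectomy
risk_reduction = 0.94        # breast cancer risk reduced by about 94%
test_cost = 250              # dollars per person screened
mastectomy_cost = 22_000     # dollars per bilateral prophylactic mastectomy

carriers = round(cohort * brca_prevalence)
surgeries = round(carriers * mastectomy_uptake)

screening_cost = cohort * test_cost
surgery_cost = surgeries * mastectomy_cost

print(carriers)        # 700
print(surgeries)       # 280
print(screening_cost)  # 25000000
print(surgery_cost)    # 6160000
```

Even under these simplified assumptions, the cost of testing everyone is several times the cost of the surgeries that follow, which is consistent with the test price being the most influential input.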

Finally, we assess quality of life. Obviously, living longer is generally considered better than living shorter, but marginal increases in life expectancy at the cost of quality of life might not be a rational choice.

You then churn these assumptions through a computer and see what comes out. How many dollars does it take to save one quality-adjusted life-year (QALY)? I’ll tell you right now that $50,000 per QALY used to be the unofficial standard for a “cost-effective” intervention in the United States. Researchers have more recently used $100,000 as that threshold.

Let’s look at some hard numbers.

If you screened 100,000 people at age 30 years, 1,500 would get news that something in their genetics was, more or less, a ticking time bomb. Some would choose to get definitive treatment and the authors estimate that the strategy would prevent 85 cases of cancer. You’d prevent nine heart attacks and five strokes by lowering cholesterol levels among those with familial hypercholesterolemia. Obviously, these aren’t huge numbers, but of course most people don’t have these hereditary risk factors. For your average 30-year-old, the genetic screening test will be completely uneventful, but for those 1,500 it will be life-changing, and potentially life-saving.

But is it worth it? The authors estimate that, at the midpoint of all their assumptions, the cost of this program would be $68,000 per QALY saved.

Of course, that depends on all those assumptions we talked about. Interestingly, the single factor that changes the cost-effectiveness the most in this analysis is the cost of the genetic test itself, which I guess makes sense, considering we’d be talking about testing a huge segment of the population. If the test cost $100 instead of $250, the cost per QALY would be $39,700 – well within the range that most policymakers would support. And given the rate at which the cost of genetic testing is decreasing, and the obvious economies of scale here, I think $100 per test is totally feasible.

The future will bring other changes as well. Right now, there are only three hereditary conditions designated as Tier 1 by the CDC. If conditions are added, that might also swing the calculation more heavily toward benefit.

This will represent a stark change from how we think about genetic testing currently, focusing on those whose pretest probability of an abnormal result is high due to family history or other risk factors. But for the 20-year-olds out there, I wouldn’t be surprised if your 30th birthday is a bit more significant than you have been anticipating.

Dr. Wilson is an associate professor of medicine and director of Yale’s Clinical and Translational Research Accelerator in New Haven, Conn. He disclosed no relevant conflicts of interest.

A version of this article first appeared on Medscape.com.

Veterans Will Benefit if the VA Includes Telehealth in its Access Standards

The VA MISSION Act of 2018 expanded options for veterans to receive government-paid health care from private sector community health care practitioners. The act tasked the US Department of Veterans Affairs (VA) to develop rules that determine eligibility for outside care based on appointment wait times or distance to the nearest VA facility. As a part of those standards, VA opted not to include the availability of VA telehealth in its wait time calculations—a decision that we believe was a gross misjudgment with far-reaching consequences for veterans. Excluding telehealth from the guidelines has unnecessarily restricted veterans’ access to high-quality health care and has squandered large sums of taxpayer dollars.

The VA has reviewed its initial MISSION Act eligibility standards and proposed a correction that recognizes telehealth as a valid means of providing health care to veterans who prefer that option.1 Telehealth may not have been an essential component of health care before the COVID-19 pandemic, but now it is clear that the best action VA can take is to swiftly enact its recommended change, stipulating that both VA telehealth and in-person health care constitute access to treatment. If implemented, this correction would save taxpayers an astronomical sum: according to a VA report to Congress, about $1.1 billion in fiscal year 2021 alone.2 The cost savings from this proposed correction is reason enough to implement it. But just as importantly, increased use of VA telehealth also means higher quality, quicker, and more convenient care for veterans.

The VA is the recognized world leader in providing telehealth that is effective, timely, and veteran centric. Veterans across the country have access to telehealth services in more than 30 specialties.3 To ensure accessibility, the VA has established partnerships with major mobile broadband carriers so that veterans can receive telehealth at home without additional charges.4 The VA project Accessing Telehealth through Local Area Stations (ATLAS) brings VA telehealth to areas where existing internet infrastructure may not be adequate to support video telehealth. ATLAS is a collaboration with private organizations, including Veterans of Foreign Wars, The American Legion, and Walmart.4 The agency also provides tablets to veterans who might not have access to telehealth, fostering higher access and patient satisfaction.4

The VA can initiate telehealth care rapidly. The “Anywhere to Anywhere” VA Health Care initiative and telecare hubs eliminate geographic constraints, allowing clinicians to provide team-based services across county and state lines to veterans’ homes and communities.

VA’s telehealth effort maximizes convenience for veterans. It reduces travel time, travel expenses, depletion of sick leave, and the need for childcare. Veterans with posttraumatic stress disorder or military sexual trauma who are triggered by traffic and waiting rooms, those with mobility issues, or those facing the stigma of mental health treatment often prefer to receive care in the familiarity of their home. Nonetheless, any veteran who desires an in-person appointment would continue to have that option under the proposed VA rule change.

VA telehealth is often used for mental health care, using the same evidence-based psychotherapies that VA has championed and that are superior to those available in the private sector.5,6 This advantage is largely due to VA’s rigorous training, consultation, case review, care delivery, measurement standards, and integrated care model. In a recent survey of veterans engaged in mental health care, 80% reported that VA virtual care via video and/or telephone is as helpful as or more helpful than in-person services.7 And yet, because of existing regulations, VA telemental health (TMH) does not qualify as access, resulting in hundreds of thousands of TMH visits being outsourced yearly to community practitioners that could be quickly and beneficially furnished by VA clinicians.

Telehealth has been shown to be as clinically effective as in-person care. A recent review of 38 meta-analyses covering telehealth in 10 medical disciplines found that, for all disciplines, telehealth was as effective as, if not more effective than, conventional care.8 And because patients are less likely to miss telehealth appointments than in-person appointments, continuity of care is better maintained and health care outcomes are improved.

Telehealth is health care. The VA must end the double standard that has handicapped it from including telehealth availability in determinations of eligibility for community care. The VA has voiced its intention to seek stakeholder input before implementing its proposed correction. The change is long overdue. It will save the VA a billion dollars annually while ensuring that veterans have quicker access to better treatment.

1 McDonough D. Statement of the honorable Denis McDonough Secretary of Veterans Affairs Department of Veterans Affairs (VA) before the Committee on Veterans’ Affairs United States Senate on veterans access to care. 117th Cong, 2nd Sess. September 21, 2022. Accessed May 8, 2023. https://www.veterans.senate.gov/2022/9/ensuring-veterans-timely-access-to-care-in-va-and-the-community/63b521ff-d308-449a-b3a3-918f4badb805

2 US Department of Veterans Affairs. Congressionally mandated report: access to care standards. September 2022.

3 US Department of Veterans Affairs. VA Secretary Press Conference, Thursday March 2, 2023. Accessed May 8, 2023. https://www.youtube.com/watch?v=WnkNl2whPoQ

4 US Department of Veterans Affairs. VA Telehealth: bridging the digital divide. Accessed May 8, 2023. https://telehealth.va.gov/digital-divide

5 RAND Corporation. Improving the Quality of Mental Health Care for Veterans: Lessons from RAND Research. Santa Monica, CA: RAND Corporation, 2019. https://www.rand.org/pubs/research_briefs/RB10087.html

6 Lemle R. Choice program expansion jeopardizes high-quality VHA mental health services. Fed Pract. 2018;35(3):18-24. https://www.mdedge.com/fedprac/article/159219/mental-health/choice-program-expansion-jeopardizes-high-quality-vha-mental

7 Campbell TM. Overview of the state of mental health care services in the VHA health care system. Presentation to the National Academies’ improving access to high-quality mental health care for veterans: a workshop. April 20, 2023. Accessed May 8, 2023. https://www.nationalacademies.org/documents/embed/link/LF2255DA3DD1C41C0A42D3BEF0989ACAECE3053A6A9B/file/D2C4B73BA6FFCAA81E6C4FC7C57020A5BA54376245AD?noSaveAs=1

8 Snoswell CL, Chelberg G, De Guzman KR, et al. The clinical effectiveness of telehealth: A systematic review of meta-analyses from 2010 to 2019. J Telemed Telecare. 2021;1357633X211022907. doi:10.1177/1357633X211022907
