Noduloplaque on the Forehead

The Diagnosis: Giant Apocrine Hidrocystoma

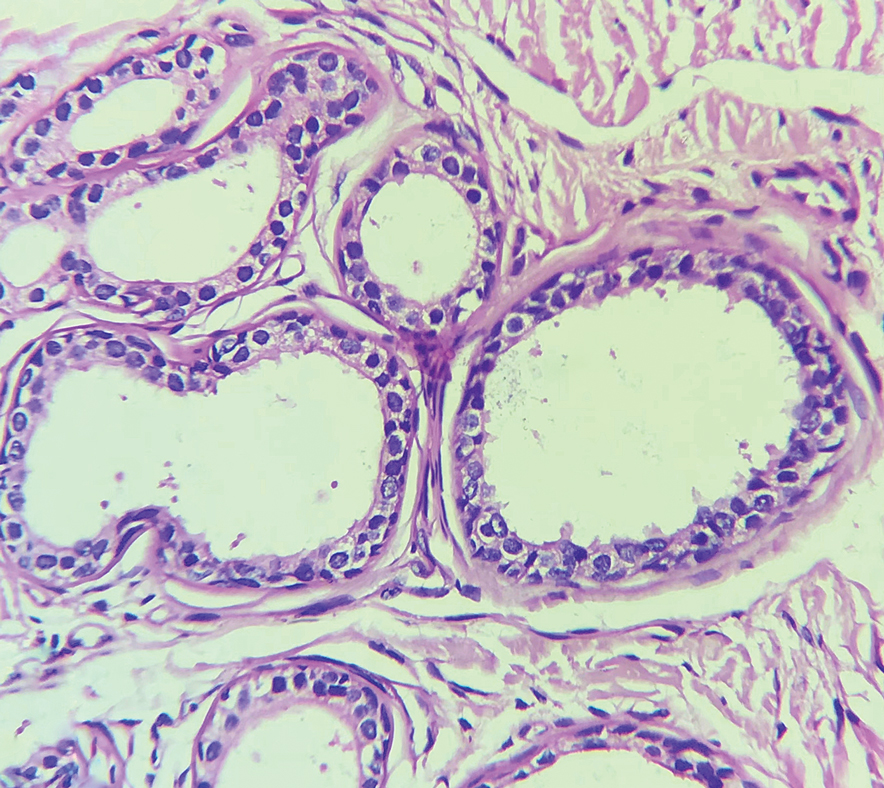

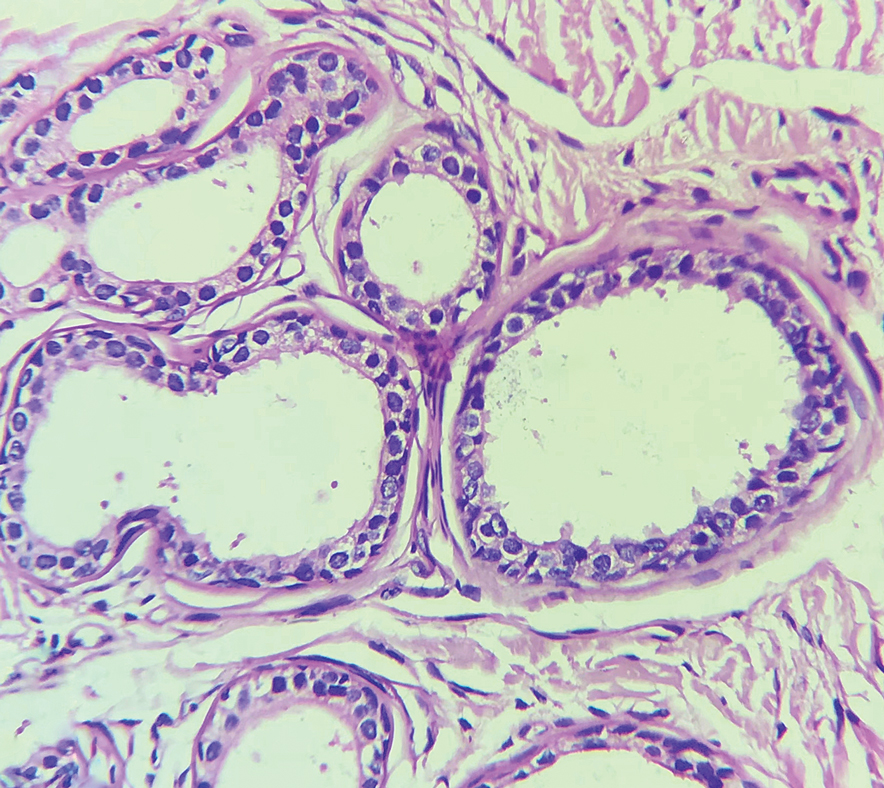

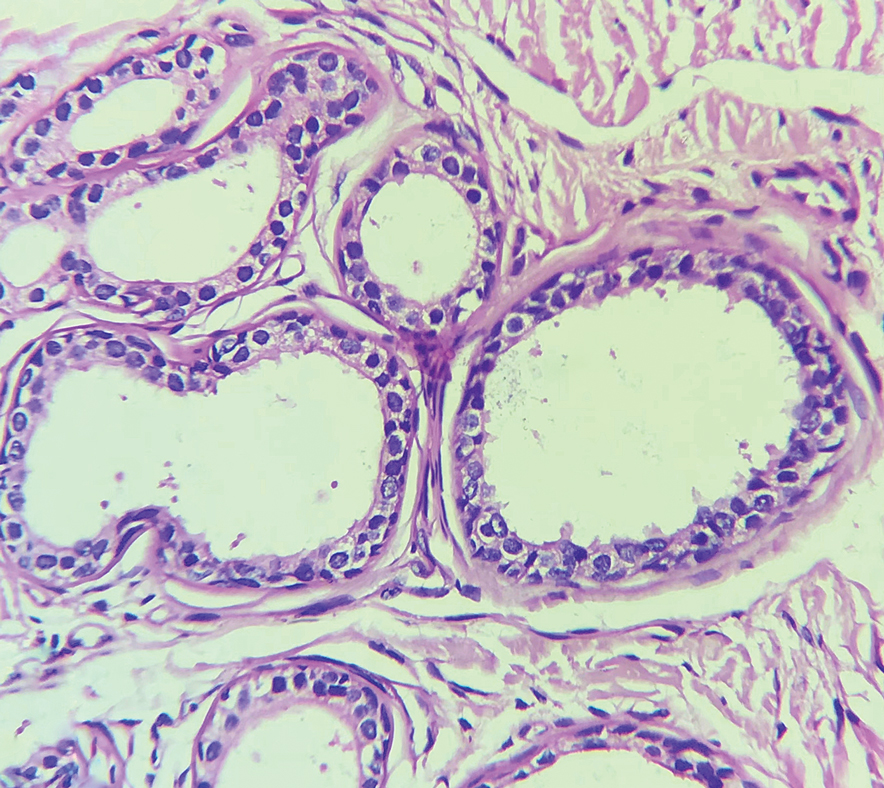

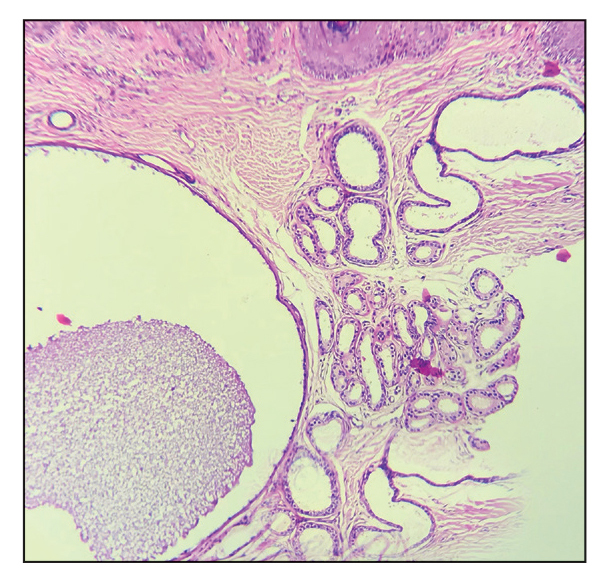

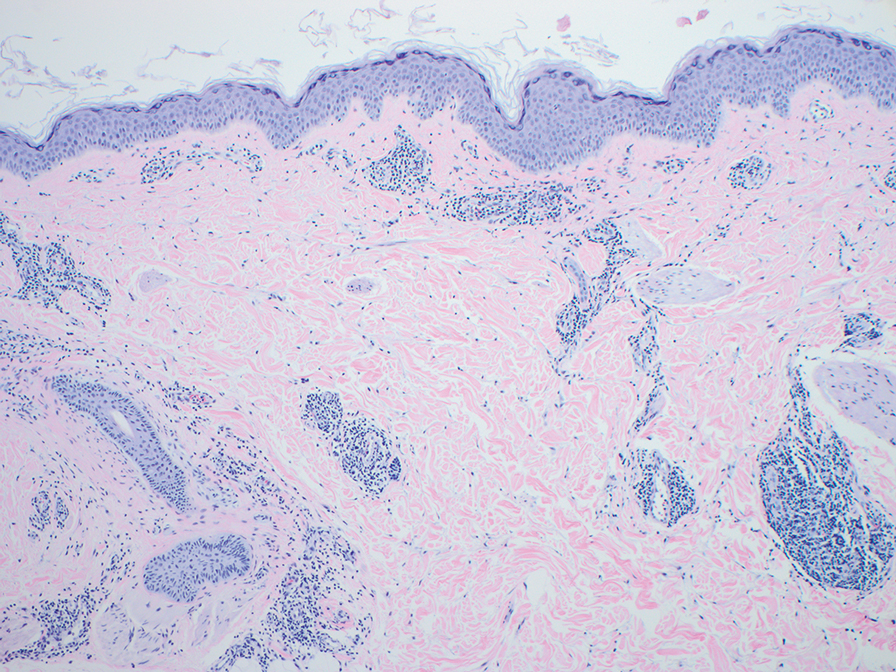

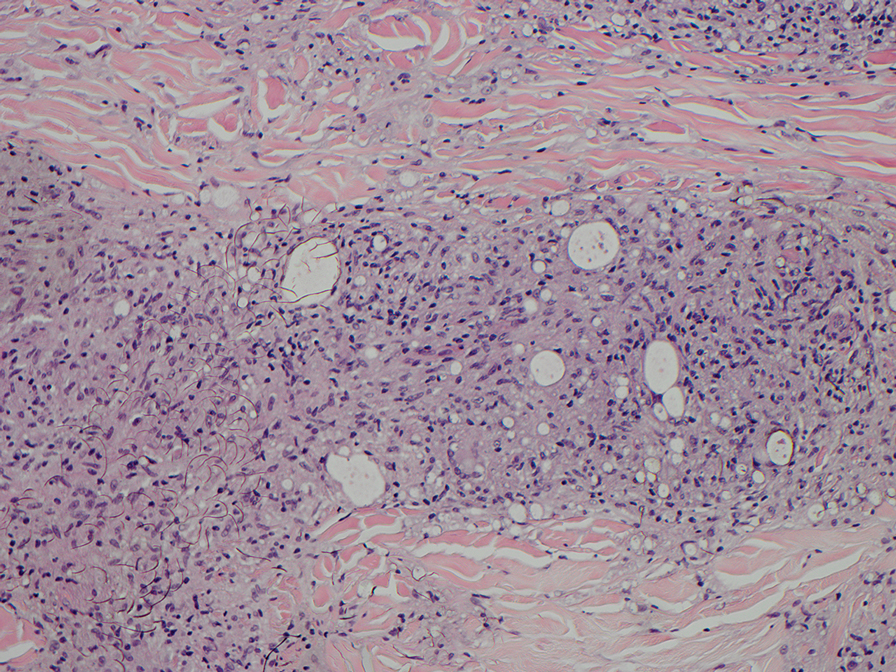

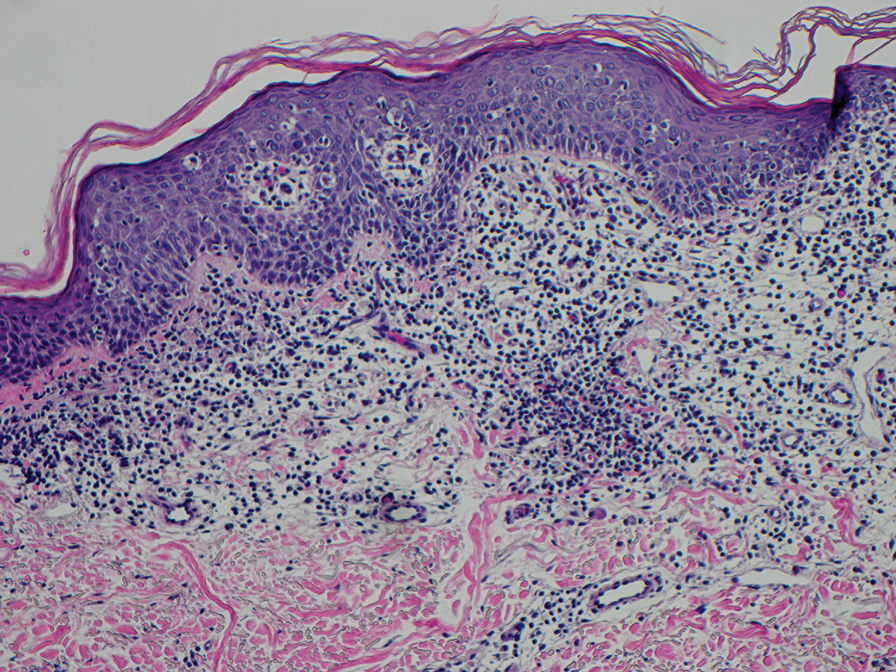

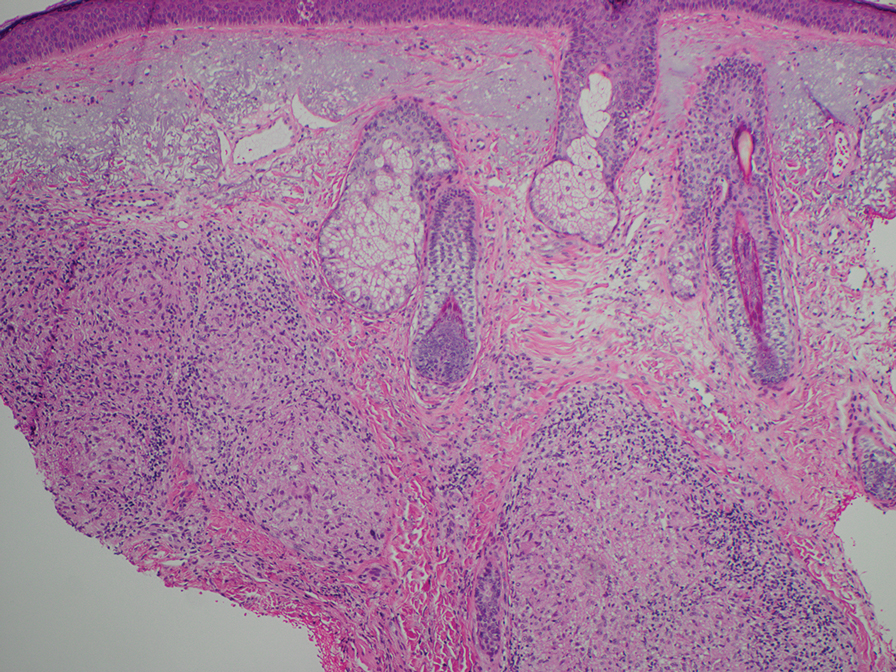

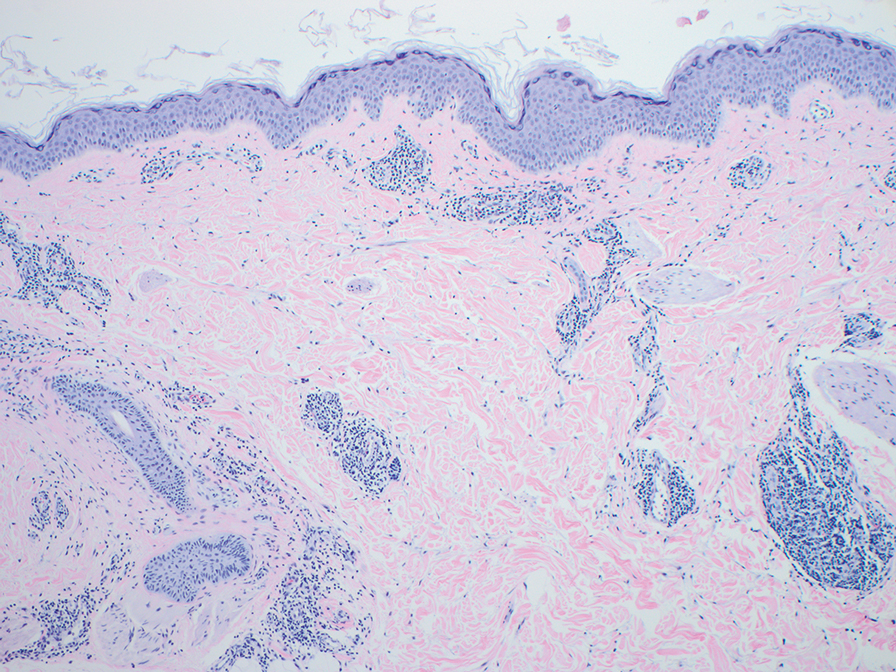

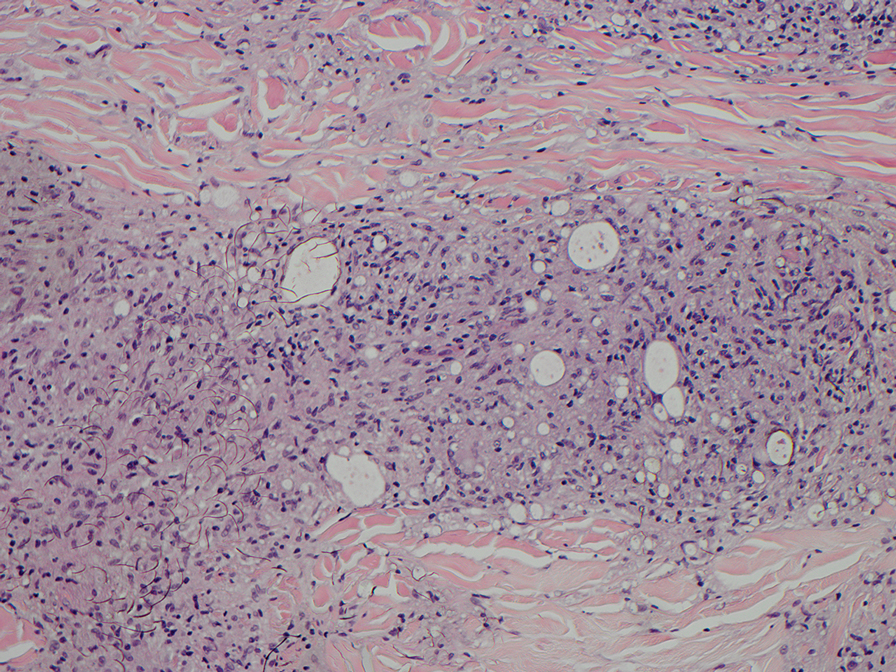

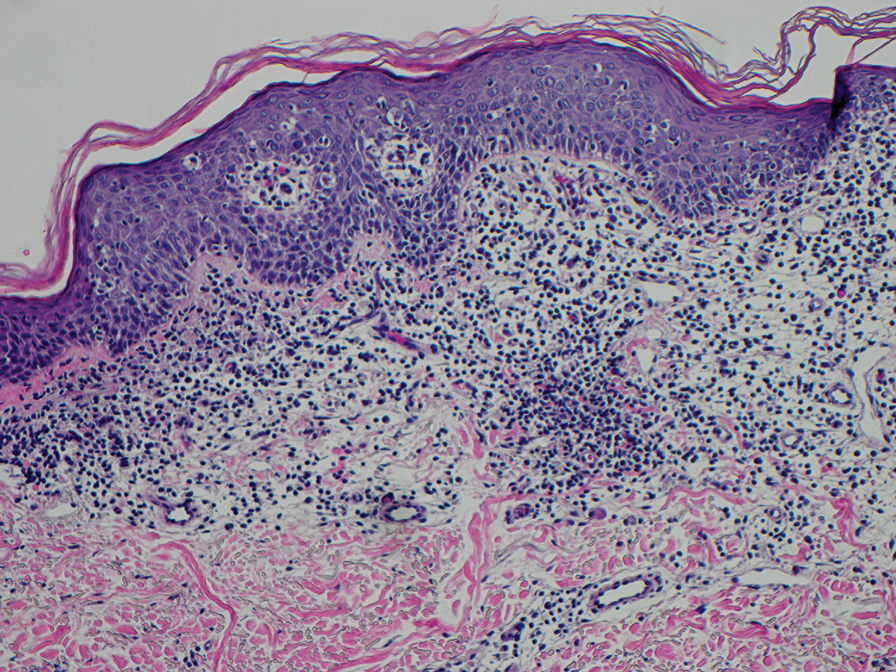

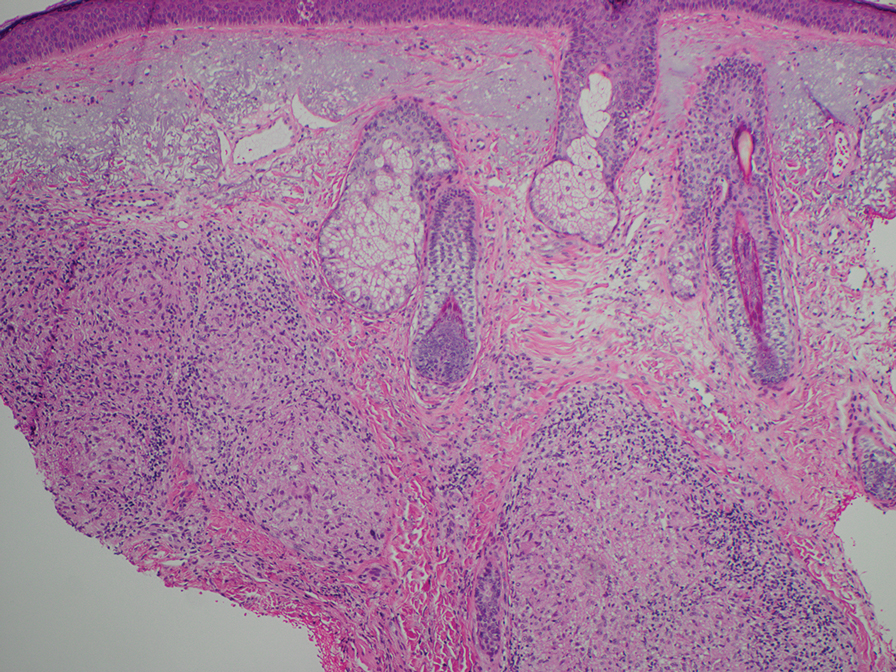

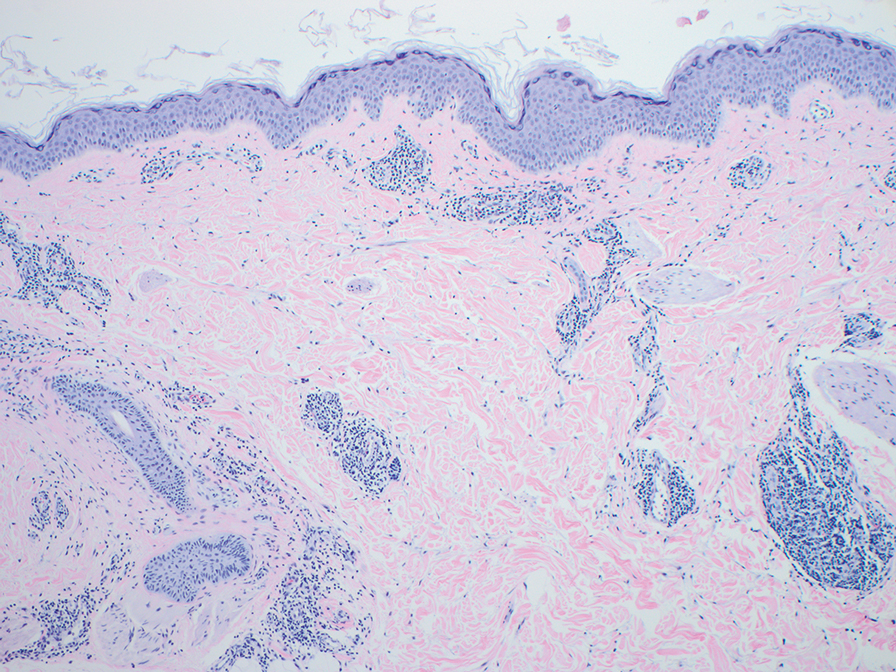

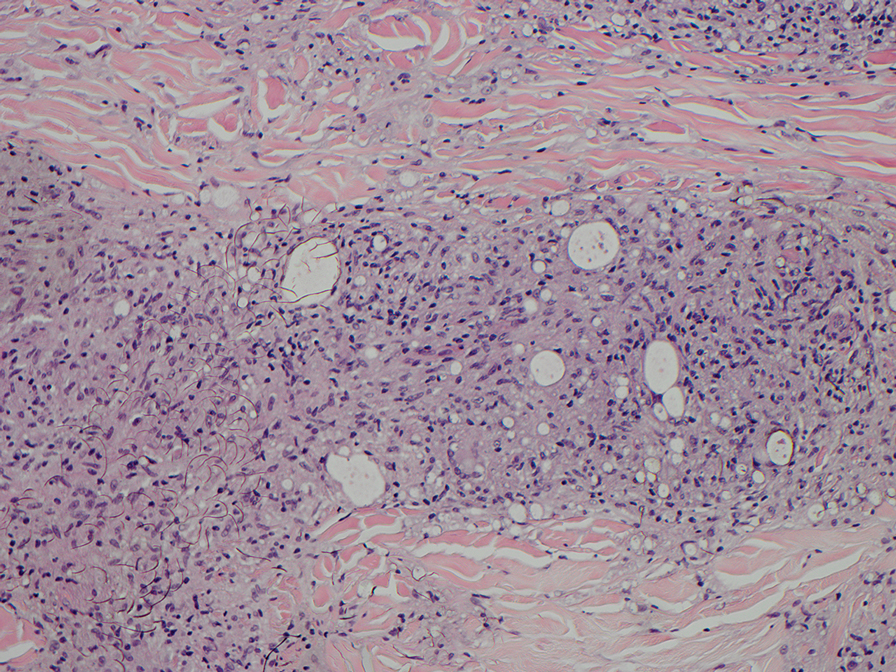

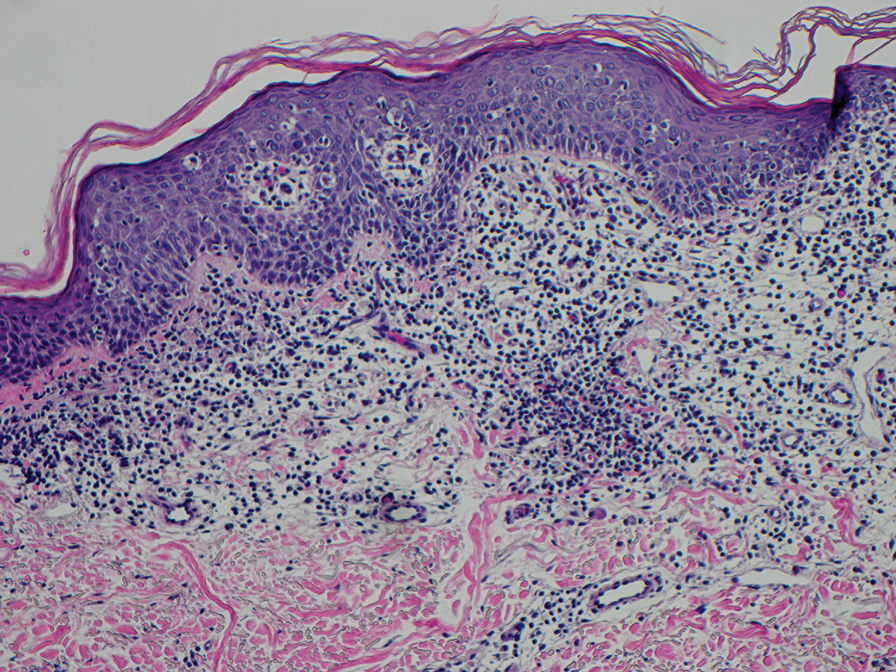

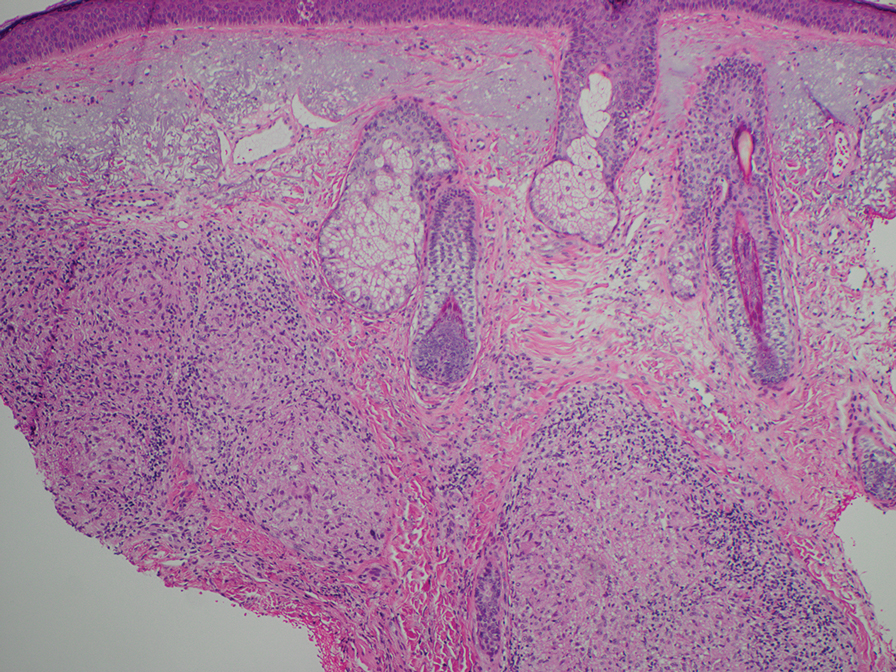

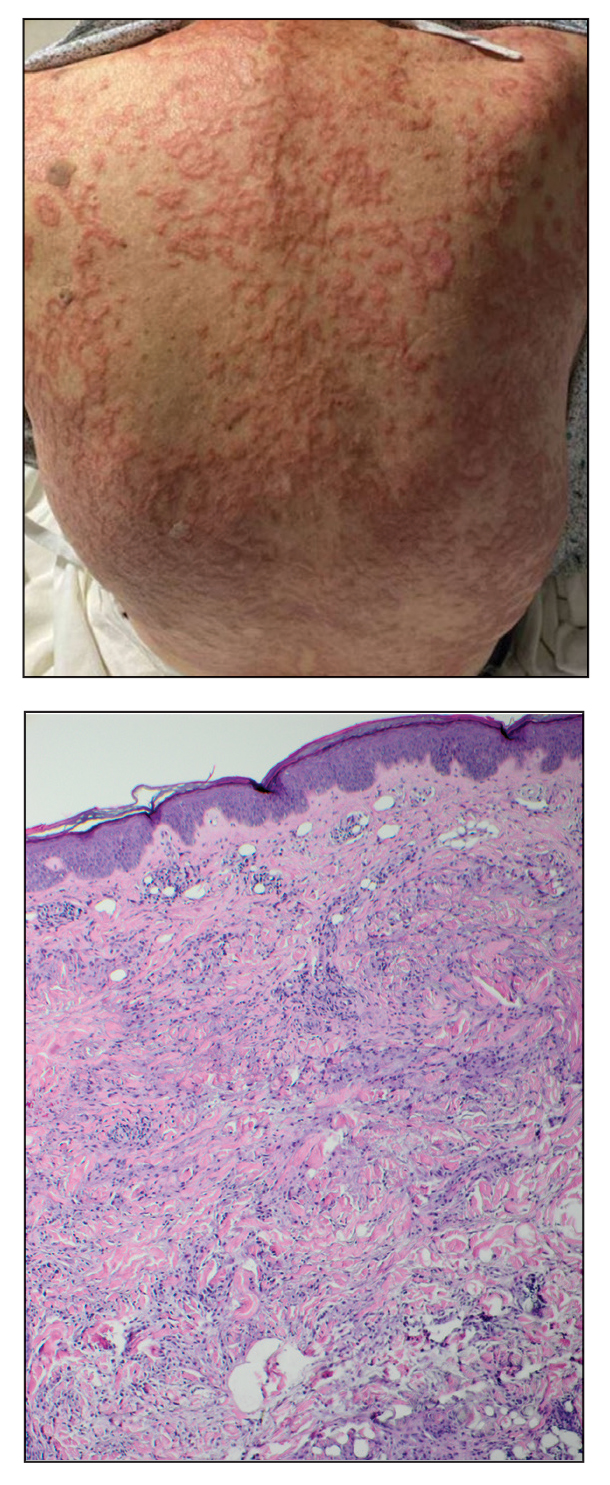

Histopathology of the noduloplaque revealed an unremarkable epidermis with multilocular cystic spaces centered in the dermis. The cysts had a double-lined epithelium with inner columnar to cuboidal cells and outer myoepithelial cells (bottom quiz image). Columnar cells showing decapitation secretion could be appreciated at places indicating apocrine secretion (Figure). A final diagnosis of apocrine hidrocystoma was made.

Hidrocystomas are rare, benign, cystic lesions derived either from apocrine or eccrine glands.1 Apocrine hidrocystoma usually manifests as asymptomatic, solitary, dome-shaped papules or nodules with a predilection for the head and neck region. Hidrocystomas can vary from flesh colored to blue, brown, or black. Pigmentation in hidrocystoma is seen in 5% to 80% of cases and is attributed to the Tyndall effect.1 The tumor usually is less than 20 mm in diameter; larger lesions are termed giant apocrine hidrocystoma.2 Apocrine hidrocystoma manifesting with multiple lesions and a size greater than 10 mm, as seen in our case, is uncommon.

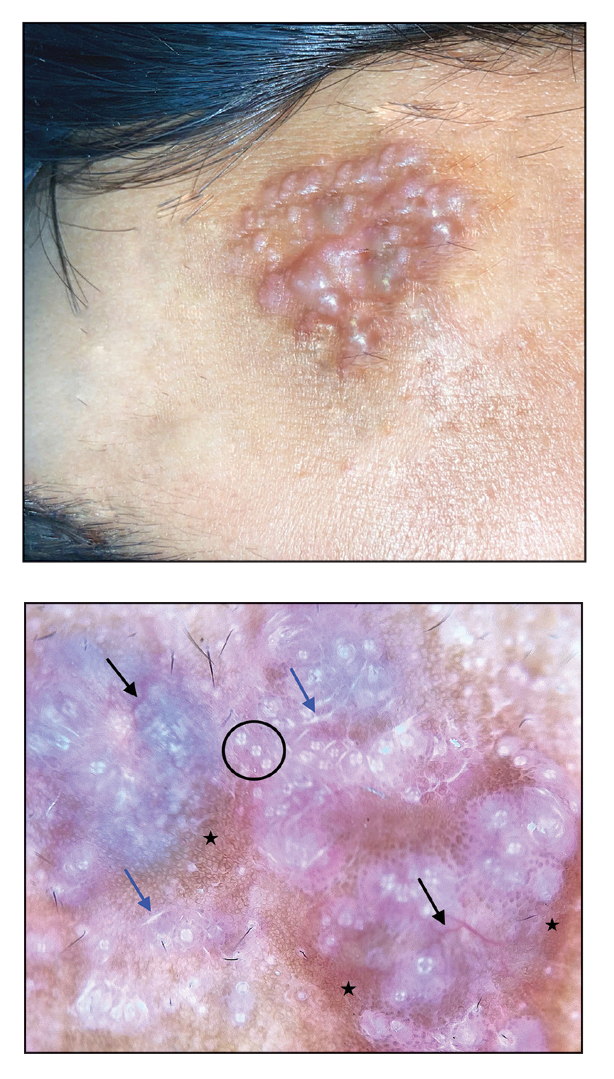

Zaballos et al3 described dermoscopy of apocrine hidrocystoma in 22 patients. Hallmark dermoscopic findings were the presence of a homogeneous flesh-colored, yellowish, blue to pinkish-blue area involving the entire lesion with arborizing vessels and whitish structures.3 Similar dermoscopic findings were present in our patient. The homogeneous area histologically correlates to the multiloculated cysts located in the dermis. The exact reason for white structures is unknown; however, their visualization in apocrine hidrocystoma could be attributed to the alteration in collagen orientation secondary to the presence of large or multiple cysts in the dermis.

The presence of shiny white dots arranged in a square resembling a four-leaf clover (also known as white rosettes) was a unique dermoscopic finding in our patient. These rosettes can be appreciated only with polarized dermoscopy, and they have been described in actinic keratosis, seborrheic keratosis, squamous cell carcinoma, and basal cell carcinoma.4 The exact morphologic correlate of white rosettes is unknown but is postulated to be secondary to material inside adnexal openings in small rosettes and concentric perifollicular fibrosis in larger rosettes.4 In our patient, we believe the white rosettes can be attributed to the accumulated secretions in the dermal glands, which also were seen via histopathology. Dermoscopy also revealed increased peripheral, brown, networklike pigmentation, which was unique and could be secondary to the patient’s darker skin phenotype.

Differential diagnoses of apocrine hidrocystoma include both melanocytic and nonmelanocytic conditions such as epidermal cyst, nodular melanoma, nodular hidradenoma, syringoma, blue nevus, pilomatricoma, eccrine poroma, nodular Kaposi sarcoma, and venous lake.1 Histopathology showing large unilocular or multilocular dermal cysts with double lining comprising outer myoepithelial cells and inner columnar or cuboidal cells with decapitation secretion is paramount in confirming the diagnosis of apocrine hidrocystoma.

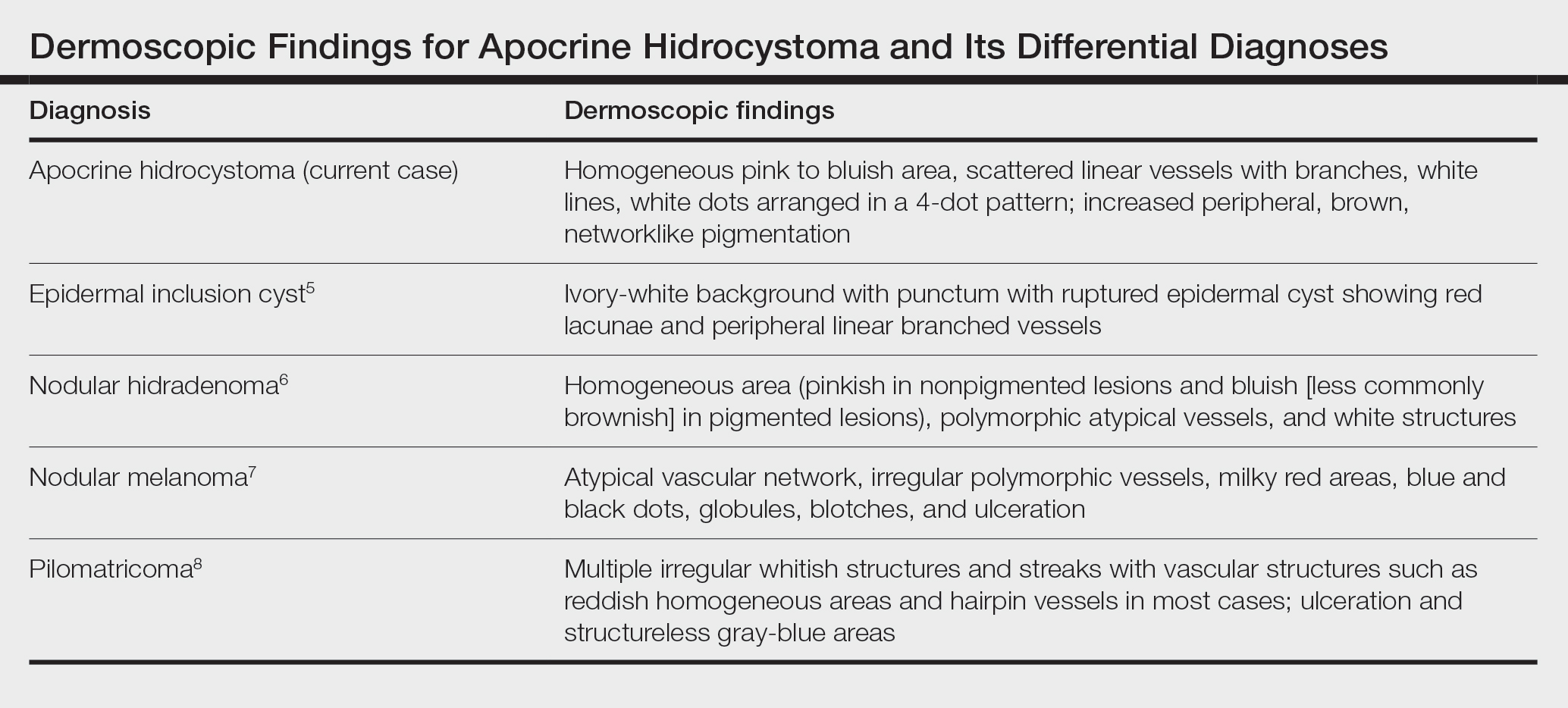

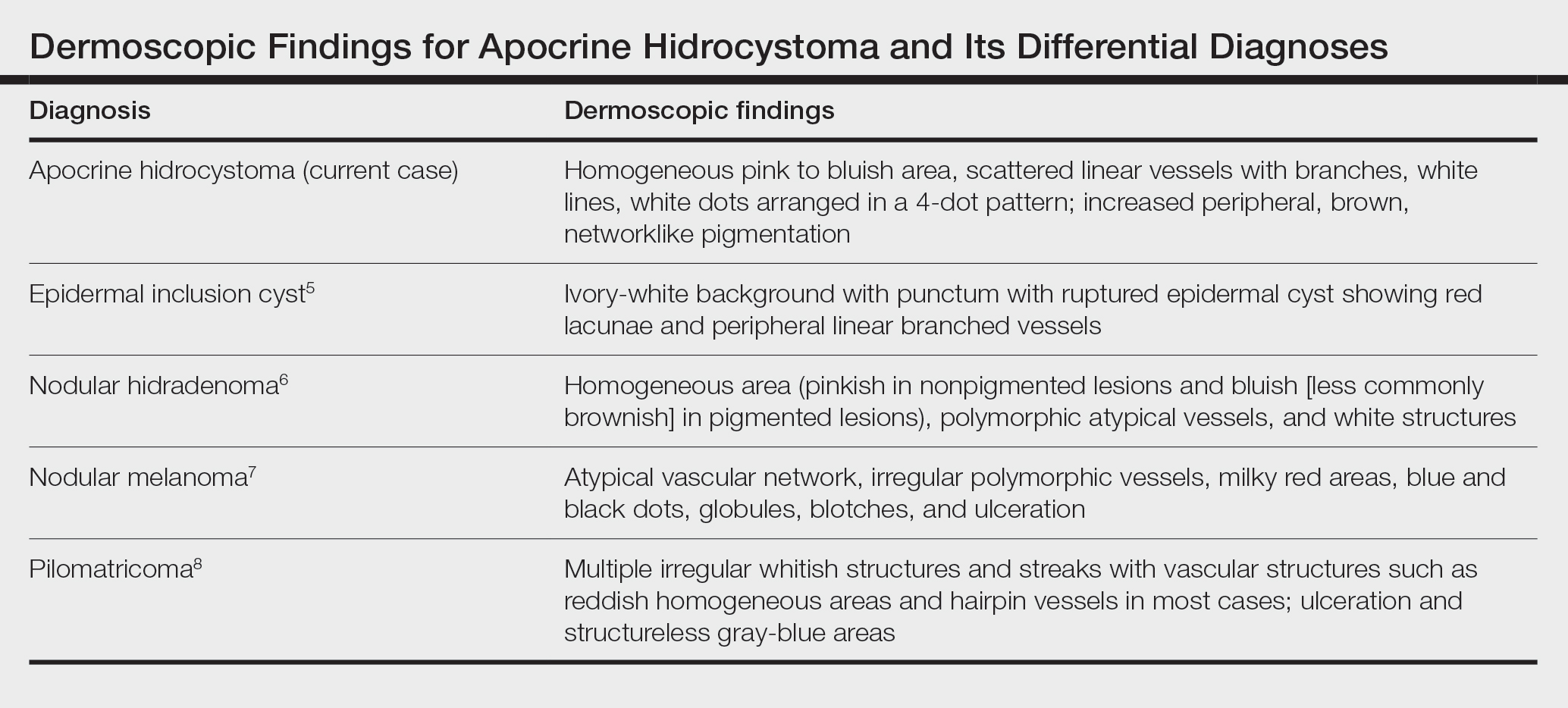

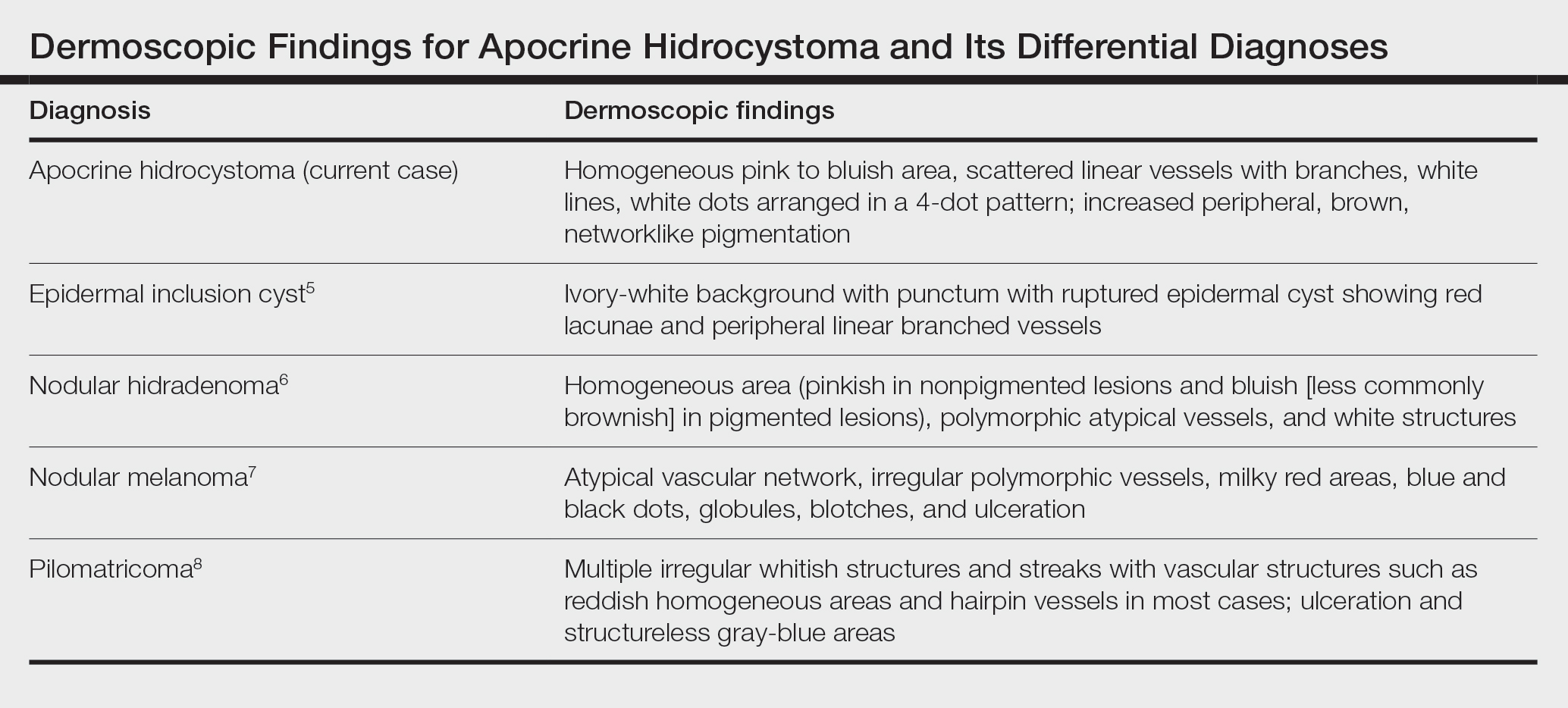

Dermoscopy can act as a valuable noninvasive modality in differentiating apocrine hidrocystoma from its melanocytic and nonmelanocytic differential diagnoses (Table).5-8 In our patient, the presence of a homogeneous pink to bluish area involving the entire lesion, linear branched vessels, and whitish structures on dermoscopy pointed to the diagnosis of apocrine hidrocystoma, which was further confirmed by characteristic histopathologic findings.

The treatment of apocrine hidrocystoma includes surgical excision for solitary lesions, with electrodesiccation and curettage, chemical cautery, and CO2 laser ablation employed for multiple lesions.1 Our patient was scheduled for CO2 laser ablation, considering the multiple lesions and size of the apocrine hidrocystoma, but was subsequently lost to follow-up.

- Nguyen HP, Barker HS, Bloomquist L, et al. Giant pigmented apocrine hidrocystoma of the scalp [published online August 15, 2020]. Dermatol Online J. 2020;26:13030/qt7rt3s4pp.

- Anzai S, Goto M, Fujiwara S, et al. Apocrine hidrocystoma: a case report and analysis of 167 Japanese cases. Int J Dermatol. 2005;44:702-703. doi:10.1111/j.1365-4632.2005.02512.x

- Zaballos P, Bañuls J, Medina C, et al. Dermoscopy of apocrine hidrocystomas: a morphological study. J Eur Acad Dermatol Venereol. 2014;28:378-381. doi:10.1111/jdv.12044

- Haspeslagh M, Noë M, De Wispelaere I, et al. Rosettes and other white shiny structures in polarized dermoscopy: histological correlate and optical explanation. J Eur Acad Dermatol Venereol. 2016;30:311-313. doi:10.1111/jdv.13080

- Suh KS, Kang DY, Park JB, et al. Usefulness of dermoscopy in the differential diagnosis of ruptured and unruptured epidermal cysts. Ann Dermatol. 2017;29:33-38. doi:10.5021/ad.2017.29.1.33

- Serrano P, Lallas A, Del Pozo LJ, et al. Dermoscopy of nodular hidradenoma, a great masquerader: a morphological study of 28 cases. Dermatology. 2016;232:78-82. doi:10.1159/000441218

- Russo T, Piccolo V, Lallas A, et al. Dermoscopy of malignant skin tumours: what’s new? Dermatology. 2017;233:64-73. doi:10.1159/000472253

- Zaballos P, Llambrich A, Puig S, et al. Dermoscopic findings of pilomatricomas. Dermatology. 2008;217:225-230. doi:10.1159/000148248

A 21-year-old man presented with a raised lesion on the forehead that had started as a single papule 16 years prior and gradually increased in number and size. There were no associated symptoms and no history of seasonal variation in the size of the lesions. Physical examination revealed multiple erythematous to slightly bluish translucent papules that coalesced to form a 3×3-cm noduloplaque with cystic consistency on the right side of the forehead (top). Dermoscopic examination (middle) (polarized noncontact mode) revealed a homogeneous pink to bluish background, scattered linear vessels with branches (black arrows), multiple chrysalislike shiny white lines (blue arrows), and dots arranged in a 4-dot pattern (black circle) resembling a four-leaf clover. Increased peripheral, brown, networklike pigmentation (black stars) also was noted on dermoscopy. Histopathologic examination of the noduloplaque was performed (bottom).

Cell-Free DNA May Inform IBD Diagnosis

LAS VEGAS —

“We think for indeterminate colitis, our assay can be quite beneficial in helping a clinician resolve or provide some additional insight and information. We have a very robust ability to predict from just the plasma microbial cell free DNA alone [whether] individuals are in remission or mild or moderate or active disease,” Shiv Kale, PhD, said during a presentation of the results at the annual Crohn’s & Colitis Congress®, a partnership of the Crohn’s & Colitis Foundation and the American Gastroenterological Association. Dr. Kale is director of computational biomarker discovery at Karius Inc., a company whose Karius Test is also being developed as a rapid test for various infections and febrile neutropenia.

cfDNA has proved to be a useful biomarker for a range of conditions, including cancer screening, diagnosis and monitoring, prenatal testing, and organ monitoring following transplantation. The tests rely on the fact that both human and microbial cells release DNA after cell death, and it is readily detectable in plasma.

Dr. Kale described the company’s efforts to identify microbial species biomarkers and a classification scheme that he said leads to consistent performance.

The latest study included 196 patients with Crohn’s disease and 196 with ulcerative colitis, with each group including individuals in remission and with mild, moderate, and severe disease. All patients had an endoscopic assessment within 30 days of plasma measurements.

cfDNA distinguished between patients with Crohn’s disease, ulcerative colitis, and those who were asymptomatic. It distinguished between patients with IBD and those who were asymptomatic with a sensitivity of 99.5% and a specificity of 90%, which are equivalent to or better than other traditional measures to distinguish active IBD versus asymptomatic patients.

The researchers plan a follow-up study of 1,800 samples in partnership with the Crohn’s & Colitis Foundation to examine the ability of cfDNA to determine disease severity, as well as location of disease and Crohn’s disease subtypes.

An ‘Intriguing’ Method

During the Q&A session, one questioner pointed out that colorectal cancer and infections can produce microbial signatures that could be confounding the results. He asked: “Do you have a data set to compare [cfDNA findings] to serum from C. diff, norovirus, and colorectal cancer? And if you don’t, I strongly encourage you to do so.” Dr. Kale responded that the group is working on that.

Asked for comment, session moderator Dana Lukin, MD, AGAF, offered praise for the work.

“I think it’s an intriguing method that we haven’t seen used as a predictor in IBD. Using this as a biomarker of disease type is very intriguing for confirming a diagnosis. I think probably one of the most compelling points is the distinction between IBD and non-IBD. Our current serologic-based assays do not really do that very well. I think this is a big improvement on that,” said Dr. Lukin, associate professor of clinical medicine at Weill Cornell Medical College, New York.

He echoed the questioner’s comments, suggesting that a key question will be whether cfDNA can correlate with disease activity over time.

He also called for prospective studies to validate the approach.

If successful, the technology could have broad application. “Certainly in kids this might be useful or in folks that either aren’t as inclined to have invasive testing done, or for whatever logistic reasons it’s not easy,” said Dr. Lukin.

Dr. Kale is an employee of Karius. Dr. Lukin has no relevant financial disclosures.

FROM CROHN’S AND COLITIS CONGRESS

Targeting Fetus-derived Gdf15 May Curb Nausea and Vomiting of Pregnancy

, and targeting the hormone prophylactically may reduce this common gestational condition.

This protein acts on the brainstem to cause emesis, and, significantly, a mother’s prior exposure to it determines the degree of NVP severity she will experience, according to international researchers including Marlena Fejzo, PhD, a clinical assistant professor of population and public health at Keck School of Medicine, University of Southern California, Los Angeles.

“GDF15 is at the mechanistic heart of NVP and HG [hyperemesis gravidarum],” Dr. Fejzo and colleagues wrote in Nature, pointing to the need for preventive and therapeutic strategies.

“My previous research showed an association between variation in the GDF15 gene and nausea and vomiting of pregnancy and HG, and this study takes it one step further by elucidating the mechanism. It confirms that the nausea and vomiting (N/V) hormone GDF15 is a major cause of NVP and HG,” Dr. Fejzo said.

The etiology of NVP remains poorly understood although it affects up to 80% of pregnancies. In the US, its severe form, HG, is the leading cause of hospitalization in early pregnancy and the second-leading reason for pregnancy hospitalization overall.

The immunoassay-based study showed that the majority of GDF15 in maternal blood during pregnancy comes from the fetal part of the placenta, and confirms previous studies reporting higher levels in pregnancies with more severe NVP, said Dr. Fejzo, who is a board member of the Hyperemesis Education and Research Foundation.

“However, what was really fascinating and surprising is that prior to pregnancy the women who have more severe NVP symptoms actually have lower levels of the hormone.”

Although the gene variant linked to HG was previously associated with higher circulating levels in maternal blood, counterintuitively, this new research showed that women with abnormally high levels prior to pregnancy have either no or very little NVP, said Dr. Fejzo. “That suggests that in humans higher levels may lead to a desensitization to the high levels of the hormone in pregnancy. Then we also proved that desensitization can occur in a mouse model.”

According to Erin Higgins, MD, a clinical assistant professor of obgyn and reproductive biology at the Cleveland Clinic, Cleveland, Ohio, who was not involved in the study, “This is an exciting finding that may help us to better target treatment of N/V in pregnancy. Factors for NVP have been identified, but to my knowledge there has not been a clear etiology.”

Dr. Higgins cautioned, however, that the GDF15 gene seems important in normal placentation, “so it’s not as simple as blocking the gene or its receptor.” But since preconception exposure to GDF15 might decrease nausea and vomiting once a woman is pregnant, prophylactic treatment may be possible, and metformin has been suggested as a possibility, she said.

The study findings emerged from immunoassays on maternal blood samples collected at about 15 weeks (first trimester and early second trimester), from women with NVP (n = 168) or seen at a hospital for HG (n = 57). Results were compared with those from controls having similar characteristics but no significant symptoms.

Interestingly, GDF15 is also associated with cachexia, a condition similar to HG and characterized by loss of appetite and weight loss, Dr. Fejzo noted. “The hormone can be produced by malignant tumors at levels similar to those seen in pregnancy, and symptoms can be reduced by blocking GDF15 or its receptor, GFRAL. Clinical trials are already underway in cancer patients to test this.”

She is seeking funding to test the impact of increasing GDF15 levels prior to pregnancy in patients who previously experienced HG. “I am confident that desensitizing patients by increasing GDF15 prior to pregnancy and by lowering GDF15 levels during pregnancy will work. But we need to make sure we do safety studies and get the dosing and duration right, and that will take some time.”

Desensitization will need testing first in HG, where the risk for adverse maternal and fetal outcomes is high, so the benefit will outweigh any possible risk of testing medication in pregnancy, she continued. “It will take some time before we get to patients with normal NVP, but I do believe eventually the new findings will result in game-changing therapeutics for the condition.”

Dr. Higgins added, “Even if this isn’t the golden ticket, researchers and clinicians are working toward improvements in the treatment of NVP. We’ve already come a long way in recent years with the development of treatment algorithms and the advent of doxylamine/pyridoxine.”

This study was supported primarily by the Medical Research Council UK and National Institute for Health and Care Research UK, with additional support from various research funding organizations, including Novo Nordisk Foundation.

Dr. Fejzo is a paid consultant for Materna Biosciences and NGM Biopharmaceuticals, and a board member and science adviser for the Hyperemesis Education and Research Foundation.

Numerous study co-authors disclosed financial relationships with private-sector companies, including employment and patent ownership.

Dr. Higgins disclosed no competing interests relevant to her comments but is an instructor for Organon.

FROM NATURE

Nonblanching, Erythematous, Cerebriform Plaques on the Foot

The Diagnosis: Coral Dermatitis

At 3-week follow-up, the patient demonstrated remarkable improvement in the intensity and size of the erythematous cerebriform plaques following daily application of triamcinolone acetonide cream 0.1% (Figure). The lesion disappeared after several months and did not recur. The delayed presentation of symptoms with a history of incidental coral contact during snorkeling most likely represents the type IV hypersensitivity reaction seen in the diagnosis of coral dermatitis, an extraordinarily rare form of contact dermatitis.1 Not all coral trigger skin reactions. Species of coral that contain nematocysts in their tentacles (aptly named stinging capsules) are responsible for the sting preceding coral dermatitis, as the nematocysts eject a coiled filament in response to human tactile stimulation that injects toxins into the epidermis.2

Acute, delayed, or chronic cutaneous changes follow envenomation. Acute responses arise immediately to a few hours after initial contact and are considered an irritant contact dermatitis.3 Local tissue histamine release and cascades of cytotoxic reactions often result in the characteristic urticarial or vesiculobullous plaques in addition to necrosis, piloerection, and localized lymphadenopathy.2-4 Although relatively uncommon, there may be rapid onset of systemic symptoms such as fever, malaise, hives, nausea, or emesis. Cardiopulmonary events, hepatotoxicity, renal failure, or anaphylaxis are rare.2 Histopathology of biopsy specimens reveals epidermal spongiosis with microvesicles and papillary dermal edema.1,5 In comparison, delayed reactions occur within days to weeks and exhibit epidermal parakeratosis, spongiosis, basal layer vacuolization, focal necrosis, lymphocyte exocytosis, and papillary dermal edema with extravasated erythrocytes.1,6 Clinically, it may present as linear rows of erythematous papules with burning and pruritus.6 Chronic reactions manifest after months as difficult-to-treat, persistent lichenoid dermatitis occasionally accompanied by granulomatous changes.1,2,4 Primary prevention measures after initial contact include an acetic acid rinse and cold compression to wash away residual nematocysts in the affected area.4,7,8 If a rash develops, topical steroids are the mainstay of treatment.3,8

In tandem with toxic nematocysts, the rigid calcified bodies of coral provide an additional self-defense mechanism against human contact.2,4 The irregular haphazard nature of coral may catch novice divers off guard and lead to laceration of a mispositioned limb, thereby increasing the risk for secondary infections due to the introduction of calcium carbonate and toxic mucinous deposits at the wound site, warranting antibiotic treatment.2,4,7 Because tropical locales are home to other natural dangers that inflict disease and mimic early signs of coral dermatitis, reaching an accurate diagnosis can be difficult, particularly for lower limb lesions. In summary, the diagnosis of coral dermatitis can be rendered based on morphology of the lesion and clinical context (exposure to corals and delayed symptoms) as well as response to topical steroids.

The differential diagnosis includes accidental trauma. Variations in impact force and patient skin integrity lead to a number of possible cutaneous manifestations seen in accidental trauma,9 which include contusions resulting from burst capillaries underneath intact skin, abrasions due to the superficial epidermis scuffing away, and lacerations caused by enough force to rip and split the skin, leaving subcutaneous tissue between the intact tissue.9,10 Typically, the pattern of injury can provide hints as to what object or organism caused the wound.9 However, delayed response and worsening symptoms, as seen in coral dermatitis, would be unusual in accidental trauma unless it is complicated by secondary infection (infectious dermatitis), which does not respond to topical steroids and requires antibiotic treatment.

Another differential diagnosis includes cutaneous larva migrans, which is caused by hookworms that infest domesticated and stray animals. For example, hookworms propagate their eggs inside the intestines of their host before fecal-soil transmission in sandy locales.11 Unsuspecting beachgoers travel barefoot on this contaminated soil, offering ample opportunity for the parasite to burrow into the upper dermis.11,12 The clinical presentation includes signs and symptoms of creeping eruption such as pruritic, linear, serpiginous tracks. Topical treatment with thiabendazole requires application 3 times daily for 15 days, which increases the risk for nonadherence, yet this therapy proves advantageous if a patient does not tolerate oral agents due to systemic adverse effects.11,12 Oral agents (eg, ivermectin, albendazole) offer improved adherence with a single dose11,13; the cure rate was higher with a single dose of ivermectin 12 mg vs a single dose of albendazole 400 mg.13 The current suggested treatment is ivermectin 200 μg/kg by mouth daily for 1 or 2 days.14

The incidence of seabather’s eruption (also known as chinkui dermatitis) is highest during the summer season and fluctuates between epidemic and nonepidemic years.15,16 It occurs sporadically worldwide mostly in tropical climates due to trapping of larvae spawn of sea animals such as crustaceans in swimwear. Initially, it presents as a pruritic and burning sensation after exiting the water, manifesting as a macular, papular, or maculopapular rash on areas covered by the swimsuit.15,16 The sensation is worse in areas that are tightly banded on the swimsuit, including the waistband and elastic straps.15 Commonly, the affected individual will seek relief via a shower, which intensifies the burning, especially if the swimsuit has not been removed. The contaminated swimwear should be immediately discarded, as the trapped sea larvae’s nematocysts activate with the pressure and friction of movement.15 Seabather’s eruption typically resolves spontaneously within a week, but symptom management can be achieved with topical steroids (triamcinolone 0.1% or clobetasol 0.05%).15,16 Unlike coral dermatitis, in seabather’s eruption the symptoms are immediate and the location of the eruption coincides with areas covered by the swimsuit.

- Ahn HS, Yoon SY, Park HJ, et al. A patient with delayed contact dermatitis to coral and she displayed superficial granuloma. Ann Dermatol. 2009;21:95-97. doi:10.5021/ad.2009.21.1.95

- Haddad V Jr, Lupi O, Lonza JP, et al. Tropical dermatology: marine and aquatic dermatology. J Am Acad Dermatol. 2009;61:733-752. doi:10.1016/j.jaad.2009.01.046

- Salik J, Tang R. Images in clinical medicine. Coral dermatitis. N Engl J Med. 2015;373:E2. doi:10.1056/NEJMicm1412907

- Reese E, Depenbrock P. Water envenomations and stings. Curr Sports Med Rep. 2014;13:126-131. doi:10.1249/JSR.0000000000000042

- Addy JH. Red sea coral contact dermatitis. Int J Dermatol. 1991;30:271-273. doi:10.1111/j.1365-4362.1991.tb04636.x

- Miracco C, Lalinga AV, Sbano P, et al. Delayed skin reaction to Red Sea coral injury showing superficial granulomas and atypical CD30+ lymphocytes: report of a case. Br J Dermatol. 2001;145:849-851. doi:10.1046/j.1365-2133.2001.04454.x

- Ceponis PJ, Cable R, Weaver LK. Don’t kick the coral! Wilderness Environ Med. 2017;28:153-155. doi:10.1016/j.wem.2017.01.025

- Tlougan BE, Podjasek JO, Adams BB. Aquatic sports dermatoses. part 2-in the water: saltwater dermatoses. Int J Dermatol. 2010;49:994-1002. doi:10.1111/j.1365-4632.2010.04476.x

- Simon LV, Lopez RA, King KC. Blunt force trauma. StatPearls [Internet]. StatPearls Publishing; 2023. Accessed January 12, 2034. https://www.ncbi.nlm.nih.gov/books/NBK470338/

- Gentile S, Kneubuehl BP, Barrera V, et al. Fracture energy threshold in parry injuries due to sharp and blunt force. Int J Legal Med. 2019;133:1429-1435.

- Caumes E. Treatment of cutaneous larva migrans. Clin Infect Dis. 2000;30:811-814. doi:10.1086/313787

- Davies HD, Sakuls P, Keystone JS. Creeping eruption. A review of clinical presentation and management of 60 cases presenting to a tropical disease unit. Arch Dermatol. 1993;129:588-591. doi:10.1001/archderm.129.5.588

- Caumes E, Carriere J, Datry A, et al. A randomized trial of ivermectin versus albendazole for the treatment of cutaneous larva migrans. Am J Trop Med Hyg. 1993;49:641-644. doi:10.4269/ajtmh.1993.49.641

- Schuster A, Lesshafft H, Reichert F, et al. Hookworm-related cutaneous larva migrans in northern Brazil: resolution of clinical pathology after a single dose of ivermectin. Clin Infect Dis. 2013;57:1155-1157. doi:10.1093/cid/cit440

- Freudenthal AR, Joseph PR. Seabather’s eruption. N Engl J Med. 1993;329:542-544. doi:10.1056/NEJM199308193290805

- Odagawa S, Watari T, Yoshida M. Chinkui dermatitis: the sea bather’s eruption. QJM. 2022;115:100-101. doi:10.1093/qjmed/hcab277

The Diagnosis: Coral Dermatitis

At 3-week follow-up, the patient demonstrated remarkable improvement in the intensity and size of the erythematous cerebriform plaques following daily application of triamcinolone acetonide cream 0.1% (Figure). The lesion disappeared after several months and did not recur. The delayed presentation of symptoms with a history of incidental coral contact during snorkeling most likely represents the type IV hypersensitivity reaction seen in the diagnosis of coral dermatitis, an extraordinarily rare form of contact dermatitis.1 Not all coral trigger skin reactions. Species of coral that contain nematocysts in their tentacles (aptly named stinging capsules) are responsible for the sting preceding coral dermatitis, as the nematocysts eject a coiled filament in response to human tactile stimulation that injects toxins into the epidermis.2

Acute, delayed, or chronic cutaneous changes follow envenomation. Acute responses arise immediately to a few hours after initial contact and are considered an irritant contact dermatitis.3 Local tissue histamine release and cascades of cytotoxic reactions often result in the characteristic urticarial or vesiculobullous plaques in addition to necrosis, piloerection, and localized lymphadenopathy.2-4 Although relatively uncommon, there may be rapid onset of systemic symptoms such as fever, malaise, hives, nausea, or emesis. Cardiopulmonary events, hepatotoxicity, renal failure, or anaphylaxis are rare.2 Histopathology of biopsy specimens reveals epidermal spongiosis with microvesicles and papillary dermal edema.1,5 In comparison, delayed reactions occur within days to weeks and exhibit epidermal parakeratosis, spongiosis, basal layer vacuolization, focal necrosis, lymphocyte exocytosis, and papillary dermal edema with extravasated erythrocytes.1,6 Clinically, it may present as linear rows of erythematous papules with burning and pruritus.6 Chronic reactions manifest after months as difficult-to-treat, persistent lichenoid dermatitis occasionally accompanied by granulomatous changes.1,2,4 Primary prevention measures after initial contact include an acetic acid rinse and cold compression to wash away residual nematocysts in the affected area.4,7,8 If a rash develops, topical steroids are the mainstay of treatment.3,8

In tandem with toxic nematocysts, the rigid calcified bodies of coral provide an additional self-defense mechanism against human contact.2,4 The irregular haphazard nature of coral may catch novice divers off guard and lead to laceration of a mispositioned limb, thereby increasing the risk for secondary infections due to the introduction of calcium carbonate and toxic mucinous deposits at the wound site, warranting antibiotic treatment.2,4,7 Because tropical locales are home to other natural dangers that inflict disease and mimic early signs of coral dermatitis, reaching an accurate diagnosis can be difficult, particularly for lower limb lesions. In summary, the diagnosis of coral dermatitis can be rendered based on morphology of the lesion and clinical context (exposure to corals and delayed symptoms) as well as response to topical steroids.

The differential diagnosis includes accidental trauma. Variations in impact force and patient skin integrity lead to a number of possible cutaneous manifestations seen in accidental trauma,9 which includes contusions resulting from burst capillaries underneath intact skin, abrasions due to the superficial epidermis scuffing away, and lacerations caused by enough force to rip and split the skin, leaving subcutaneous tissue between the intact tissue.9,10 Typically, the pattern of injury can provide hints to match what object or organism caused the wound.9 However, delayed response and worsening symptoms, as seen in coral dermatitis, would be unusual in accidental trauma unless it is complicated by secondary infection (infectious dermatitis), which does not respond to topical steroids and requires antibiotic treatment.

Another differential diagnosis includes cutaneous larva migrans, which infests domesticated and stray animals. For example, hookworm larvae propagate their eggs inside the intestines of their host before fecal-soil transmission in sandy locales.11 Unexpecting beachgoers travel barefoot on this contaminated soil, offering ample opportunity for the parasite to burrow into the upper dermis.11,12 The clinical presentation includes signs and symptoms of creeping eruption such as pruritic, linear, serpiginous tracks. Topical treatment with thiabendazole requires application 3 times daily for 15 days, which increases the risk for nonadherence, yet this therapy proves advantageous if a patient does not tolerate oral agents due to systemic adverse effects.11,12 Oral agents (eg, ivermectin, albendazole) offer improved adherence with a single dose11,13; the cure rate was higher with a single dose of ivermectin 12 mg vs a single dose of albendazole 400 mg.13 The current suggested treatment is ivermectin 200 μg/kg by mouth daily for 1 or 2 days.14

The incidence of seabather’s eruption (also known as chinkui dermatitis) is highest during the summer season and fluctuates between epidemic and nonepidemic years.15,16 It occurs sporadically worldwide mostly in tropical climates due to trapping of larvae spawn of sea animals such as crustaceans in swimwear. Initially, it presents as a pruritic and burning sensation after exiting the water, manifesting as a macular, papular, or maculopapular rash on areas covered by the swimsuit.15,16 The sensation is worse in areas that are tightly banded on the swimsuit, including the waistband and elastic straps.15 Commonly, the affected individual will seek relief via a shower, which intensifies the burning, especially if the swimsuit has not been removed. The contaminated swimwear should be immediately discarded, as the trapped sea larvae’s nematocysts activate with the pressure and friction of movement.15 Seabather’s eruption typically resolves spontaneously within a week, but symptom management can be achieved with topical steroids (triamcinolone 0.1% or clobetasol 0.05%).15,16 Unlike coral dermatitis, in seabather’s eruption the symptoms are immediate and the location of the eruption coincides with areas covered by the swimsuit.

The Diagnosis: Coral Dermatitis

At 3-week follow-up, the patient demonstrated remarkable improvement in the intensity and size of the erythematous cerebriform plaques following daily application of triamcinolone acetonide cream 0.1% (Figure). The lesion disappeared after several months and did not recur. The delayed presentation of symptoms with a history of incidental coral contact during snorkeling most likely represents the type IV hypersensitivity reaction seen in the diagnosis of coral dermatitis, an extraordinarily rare form of contact dermatitis.1 Not all coral trigger skin reactions. Species of coral that contain nematocysts in their tentacles (aptly named stinging capsules) are responsible for the sting preceding coral dermatitis, as the nematocysts eject a coiled filament in response to human tactile stimulation that injects toxins into the epidermis.2

Acute, delayed, or chronic cutaneous changes follow envenomation. Acute responses arise immediately to a few hours after initial contact and are considered an irritant contact dermatitis.3 Local tissue histamine release and cascades of cytotoxic reactions often result in the characteristic urticarial or vesiculobullous plaques in addition to necrosis, piloerection, and localized lymphadenopathy.2-4 Although relatively uncommon, there may be rapid onset of systemic symptoms such as fever, malaise, hives, nausea, or emesis. Cardiopulmonary events, hepatotoxicity, renal failure, or anaphylaxis are rare.2 Histopathology of biopsy specimens reveals epidermal spongiosis with microvesicles and papillary dermal edema.1,5 In comparison, delayed reactions occur within days to weeks and exhibit epidermal parakeratosis, spongiosis, basal layer vacuolization, focal necrosis, lymphocyte exocytosis, and papillary dermal edema with extravasated erythrocytes.1,6 Clinically, it may present as linear rows of erythematous papules with burning and pruritus.6 Chronic reactions manifest after months as difficult-to-treat, persistent lichenoid dermatitis occasionally accompanied by granulomatous changes.1,2,4 Primary prevention measures after initial contact include an acetic acid rinse and cold compression to wash away residual nematocysts in the affected area.4,7,8 If a rash develops, topical steroids are the mainstay of treatment.3,8

In tandem with toxic nematocysts, the rigid calcified bodies of coral provide an additional self-defense mechanism against human contact.2,4 The irregular haphazard nature of coral may catch novice divers off guard and lead to laceration of a mispositioned limb, thereby increasing the risk for secondary infections due to the introduction of calcium carbonate and toxic mucinous deposits at the wound site, warranting antibiotic treatment.2,4,7 Because tropical locales are home to other natural dangers that inflict disease and mimic early signs of coral dermatitis, reaching an accurate diagnosis can be difficult, particularly for lower limb lesions. In summary, the diagnosis of coral dermatitis can be rendered based on morphology of the lesion and clinical context (exposure to corals and delayed symptoms) as well as response to topical steroids.

The differential diagnosis includes accidental trauma. Variations in impact force and patient skin integrity lead to a number of possible cutaneous manifestations seen in accidental trauma,9 which includes contusions resulting from burst capillaries underneath intact skin, abrasions due to the superficial epidermis scuffing away, and lacerations caused by enough force to rip and split the skin, leaving subcutaneous tissue between the intact tissue.9,10 Typically, the pattern of injury can provide hints to match what object or organism caused the wound.9 However, delayed response and worsening symptoms, as seen in coral dermatitis, would be unusual in accidental trauma unless it is complicated by secondary infection (infectious dermatitis), which does not respond to topical steroids and requires antibiotic treatment.

Another differential diagnosis includes cutaneous larva migrans, which infests domesticated and stray animals. For example, hookworm larvae propagate their eggs inside the intestines of their host before fecal-soil transmission in sandy locales.11 Unexpecting beachgoers travel barefoot on this contaminated soil, offering ample opportunity for the parasite to burrow into the upper dermis.11,12 The clinical presentation includes signs and symptoms of creeping eruption such as pruritic, linear, serpiginous tracks. Topical treatment with thiabendazole requires application 3 times daily for 15 days, which increases the risk for nonadherence, yet this therapy proves advantageous if a patient does not tolerate oral agents due to systemic adverse effects.11,12 Oral agents (eg, ivermectin, albendazole) offer improved adherence with a single dose11,13; the cure rate was higher with a single dose of ivermectin 12 mg vs a single dose of albendazole 400 mg.13 The current suggested treatment is ivermectin 200 μg/kg by mouth daily for 1 or 2 days.14

The incidence of seabather’s eruption (also known as chinkui dermatitis) is highest during the summer season and fluctuates between epidemic and nonepidemic years.15,16 It occurs sporadically worldwide, mostly in tropical climates, due to trapping of the larval spawn of sea animals such as crustaceans in swimwear. Initially, it presents as a pruritic and burning sensation after exiting the water, manifesting as a macular, papular, or maculopapular rash on areas covered by the swimsuit.15,16 The sensation is worse in areas where the swimsuit is tightly banded, including the waistband and elastic straps.15 Commonly, the affected individual will seek relief via a shower, which intensifies the burning, especially if the swimsuit has not been removed. The contaminated swimwear should be immediately discarded, as the trapped sea larvae’s nematocysts activate with the pressure and friction of movement.15 Seabather’s eruption typically resolves spontaneously within a week, but symptom management can be achieved with topical steroids (triamcinolone 0.1% or clobetasol 0.05%).15,16 Unlike coral dermatitis, in seabather’s eruption the symptoms are immediate and the location of the eruption coincides with areas covered by the swimsuit.

- Ahn HS, Yoon SY, Park HJ, et al. A patient with delayed contact dermatitis to coral and she displayed superficial granuloma. Ann Dermatol. 2009;21:95-97. doi:10.5021/ad.2009.21.1.95

- Haddad V Jr, Lupi O, Lonza JP, et al. Tropical dermatology: marine and aquatic dermatology. J Am Acad Dermatol. 2009;61:733-752. doi:10.1016/j.jaad.2009.01.046

- Salik J, Tang R. Images in clinical medicine. Coral dermatitis. N Engl J Med. 2015;373:E2. doi:10.1056/NEJMicm1412907

- Reese E, Depenbrock P. Water envenomations and stings. Curr Sports Med Rep. 2014;13:126-131. doi:10.1249/JSR.0000000000000042

- Addy JH. Red sea coral contact dermatitis. Int J Dermatol. 1991;30:271-273. doi:10.1111/j.1365-4362.1991.tb04636.x

- Miracco C, Lalinga AV, Sbano P, et al. Delayed skin reaction to Red Sea coral injury showing superficial granulomas and atypical CD30+ lymphocytes: report of a case. Br J Dermatol. 2001;145:849-851. doi:10.1046/j.1365-2133.2001.04454.x

- Ceponis PJ, Cable R, Weaver LK. Don’t kick the coral! Wilderness Environ Med. 2017;28:153-155. doi:10.1016/j.wem.2017.01.025

- Tlougan BE, Podjasek JO, Adams BB. Aquatic sports dermatoses. part 2-in the water: saltwater dermatoses. Int J Dermatol. 2010;49:994-1002. doi:10.1111/j.1365-4632.2010.04476.x

- Simon LV, Lopez RA, King KC. Blunt force trauma. StatPearls [Internet]. StatPearls Publishing; 2023. Accessed January 12, 2024. https://www.ncbi.nlm.nih.gov/books/NBK470338/

- Gentile S, Kneubuehl BP, Barrera V, et al. Fracture energy threshold in parry injuries due to sharp and blunt force. Int J Legal Med. 2019;133:1429-1435.

- Caumes E. Treatment of cutaneous larva migrans. Clin Infect Dis. 2000;30:811-814. doi:10.1086/313787

- Davies HD, Sakuls P, Keystone JS. Creeping eruption. A review of clinical presentation and management of 60 cases presenting to a tropical disease unit. Arch Dermatol. 1993;129:588-591. doi:10.1001/archderm.129.5.588

- Caumes E, Carriere J, Datry A, et al. A randomized trial of ivermectin versus albendazole for the treatment of cutaneous larva migrans. Am J Trop Med Hyg. 1993;49:641-644. doi:10.4269/ajtmh.1993.49.641

- Schuster A, Lesshafft H, Reichert F, et al. Hookworm-related cutaneous larva migrans in northern Brazil: resolution of clinical pathology after a single dose of ivermectin. Clin Infect Dis. 2013;57:1155-1157. doi:10.1093/cid/cit440

- Freudenthal AR, Joseph PR. Seabather’s eruption. N Engl J Med. 1993;329:542-544. doi:10.1056/NEJM199308193290805

- Odagawa S, Watari T, Yoshida M. Chinkui dermatitis: the sea bather’s eruption. QJM. 2022;115:100-101. doi:10.1093/qjmed/hcab277

A 48-year-old otherwise healthy man presented with a tender lesion on the dorsal aspect of the right foot with dysesthesia and progressive pruritus that he originally noticed 9 days prior after snorkeling in the Caribbean. He recalled kicking what he assumed was a rock while swimming. Initially there was negligible discomfort; however, on day 7 the symptoms started to worsen and the lesion started to swell. Application of a gauze pad soaked in hydrogen peroxide 3% failed to alleviate symptoms. Physical examination revealed a 4-cm region of well-demarcated, nonblanching, erythematous plaques in a lattice pattern accompanied by edematous and bullous changes. Triamcinolone acetonide cream 0.1% was prescribed.

More Side Effects With Local Therapies for Prostate Cancer

These were the findings of a retrospective cohort study in JAMA Network Open.

The standard treatment of advanced prostate cancer is androgen deprivation therapy (ADT). “The role of local therapy has been debated for several years. Studies have shown that radiation therapy or radical prostatectomy can improve patient survival under certain conditions,” said Hubert Kübler, MD, director of the Clinic and Polyclinic for Urology and Pediatric Urology at the University Hospital Würzburg in Germany. “At academic centers, a local therapy is pursued for oligometastatic patients if they are fit enough.”

The hope is to spare patients the side effects of ADT over an extended period and thus improve their quality of life. “But what impact does local therapy itself have on the men’s quality of life, especially considering that the survival advantage gained may be relatively small?” wrote study author Saira Khan, PhD, MPH, assistant professor of surgery at Washington University School of Medicine in St. Louis, Missouri, and her colleagues.

Examining Side Effects

This question has not been thoroughly examined yet. “To our knowledge, this is one of the first studies investigating the side effects of local therapy in men with advanced prostate cancer for up to 5 years after treatment,” wrote the authors.

The cohort study included 5500 US veterans who were diagnosed with advanced prostate cancer between January 1999 and December 2013. The tumors were stage T4 (tumor is fixed or has spread to adjacent structures), with regional lymph node metastases (N1) and, in some cases, detectable distant metastases (M1).

The average age was 68.7 years. Overall, 31% received local therapy (eg, radiation therapy, radical prostatectomy, or both), and 69% received systemic therapy (eg, hormone therapy, chemotherapy, or both).

Types of Local Therapy

Combining radiation therapy and radical prostatectomy “diminishes the meaningfulness of the study results,” according to Dr. Kübler. “The issue should have been analyzed in much finer detail. Studies clearly show, for example, that radiation therapy consistently performs slightly worse than prostatectomy in terms of gastrointestinal complaints.”

In their paper, the researchers reported that the prevalence of side effects was high, regardless of the therapy. Overall, 916 men (75.2%) with initial local therapy and 897 men (67.1%) with initial systemic therapy reported experiencing at least one side effect lasting more than 2 years and up to 5 years.

In the first year after the initial therapy, men who underwent local therapy, compared with those who underwent systemic therapy, experienced more of the following symptoms:

- Gastrointestinal issues (odds ratio [OR], 4.08)

- Pain (OR, 1.57)

- Sexual dysfunction (OR, 2.96)

- Urinary problems, predominantly incontinence (OR, 2.25)

Lasting Side Effects

Even up to year 5 after the initial therapy, men with local therapy reported more gastrointestinal and sexual issues, as well as more frequent incontinence, than those with systemic therapy. Only the frequency of pain equalized between the two groups in the second year.

“Our results are consistent with the known side effect profile [of local therapy] in patients with clinically localized prostate cancer receiving surgery or radiation therapy instead of active surveillance,” wrote the authors.

The comparison in advanced prostate cancer, however, is not with active surveillance but with ADT. “As the study confirmed, ADT is associated with various side effects,” said Dr. Kübler. Nevertheless, it was associated with fewer side effects than local therapy in this study. The concept behind local therapy (improving prognosis while avoiding local problems) is challenging to reconcile with these results.

Contradictory Data

The results also contradict findings from other studies. Dr. Kübler pointed to the recently presented PEACE-1 study, where “local complications and issues were reduced through local therapy in high-volume and high-risk patients.”

The study did not consider subsequent interventions, such as how many patients needed transurethral manipulation in the later course of the disease to address local problems. “There are older data showing that a radical prostatectomy can reduce the need for further resections,” Dr. Kübler added.

“I find it difficult to reconcile these data with other data and with my personal experience,” said Dr. Kübler. However, he agreed with the study authors’ conclusion, emphasizing the importance of informing patients about expected side effects of local therapy in the context of potentially marginal improvements in survival.

Different Situation in Germany

“As practitioners, we sometimes underestimate the side effects we subject our patients to. We need to talk to our patients about the prognosis improvement that comes with side effects,” said Dr. Kübler. He added that a similar study in Germany might yield different results. “Dr. Khan and her colleagues examined a very specific patient population: Namely, veterans. This patient clientele often faces many social difficulties, and the treatment structure in US veterans’ care differs significantly from ours.”

This article was translated from the Medscape German edition. A version of this article appeared on Medscape.com.
